The New Hyperspectral Satellite PRISMA: Imagery for Forest Types Discrimination

Abstract

1. Introduction

1.1. Overview of the PRISMA Mission and Instruments

1.2. Preprocessing Levels of PRISMA Hyperspectral Cubes

- Level 0: The L0 product contains raw data in binary files, including instrument and satellite ancillary data such as the cloud cover percentage.

- Level 1: The L1 product consists of top-of-atmosphere radiance imagery, organized as two radiometrically calibrated radiance cubes (hyperspectral and panchromatic) and two co-registered HYPER and PAN radiance cubes.

- Level 2: The L2 product is divided into:

  - L2B: Atmospheric correction and geolocation of the L1 product (bottom-of-atmosphere radiance);

  - L2C: Atmospheric correction and geolocation of the L1 product (bottom-of-atmosphere reflectance, including aerosol optical thickness and water vapor map);

  - L2D: Geocoding (orthorectification) of the L2C product.

2. Materials and Methods

2.1. Study Areas

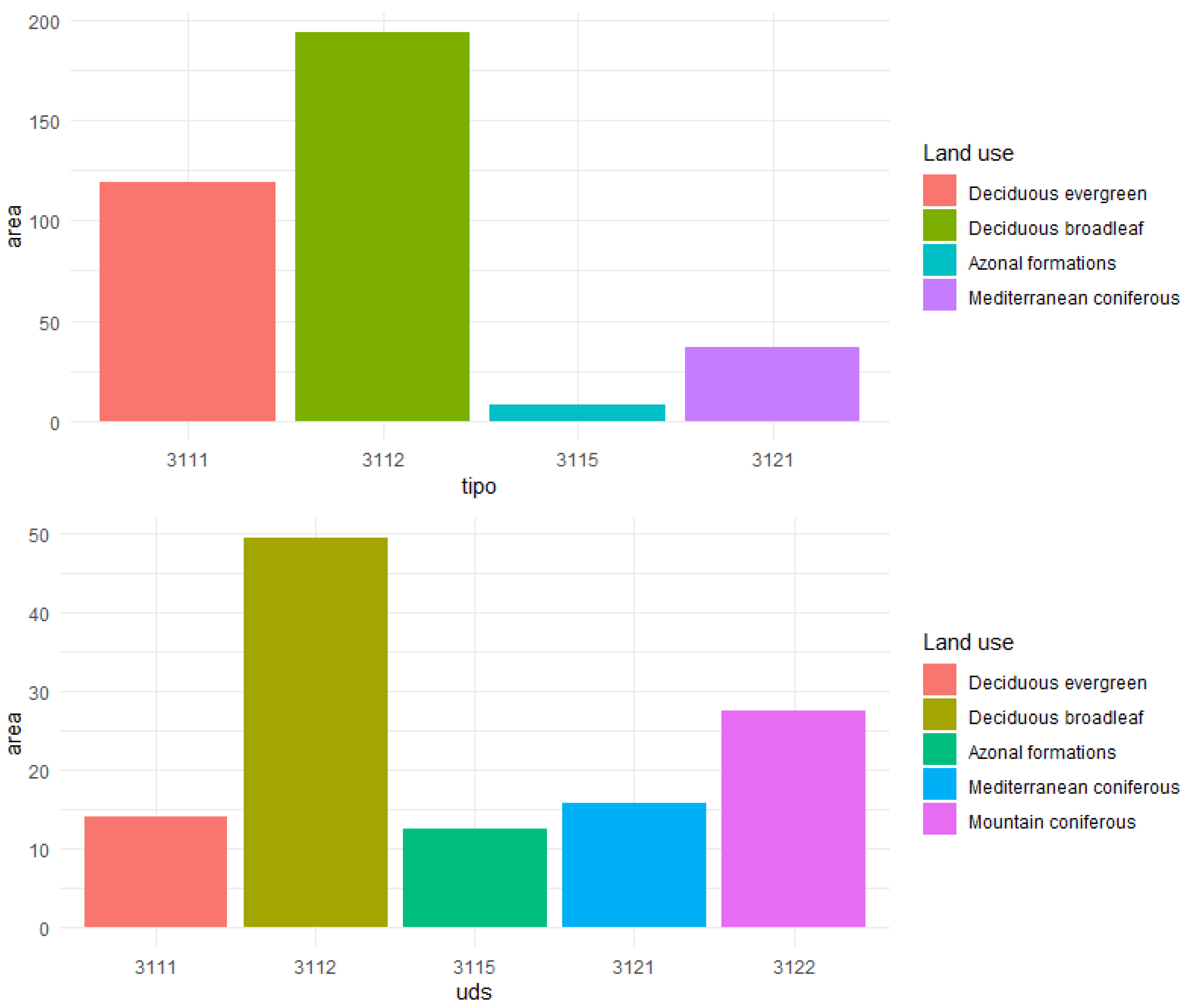

2.2. Reference Data

2.3. Remotely Sensed Data

2.4. Methods

- M-Statistic [45] (M): measures the difference between the distributional peaks of the reflectance values of two classes and is calculated as follows:

  $$M = \frac{|\mu_x - \mu_y|}{\sigma_x + \sigma_y}$$

  where μx is the mean value for class x and σx the standard deviation of class x. A high M value indicates good separation between the two classes: the difference between the class means is large relative to the within-class spread. The limitation of the M-statistic is that when two classes have equal means, M is always zero, whatever the difference in their spread, so it cannot reflect separability in that case.

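- Bhattacharyya distance [46] (B): measures the degree of dissimilarity between two probability distributions and is calculated as follows:

  $$B = \frac{1}{8}(\mu_x - \mu_y)^{T}\left(\frac{\Sigma_x + \Sigma_y}{2}\right)^{-1}(\mu_x - \mu_y) + \frac{1}{2}\ln\left(\frac{\left|\frac{\Sigma_x + \Sigma_y}{2}\right|}{\sqrt{|\Sigma_x|\,|\Sigma_y|}}\right)$$

  where μx is the mean value for class x, Σx is the covariance of class x, and |·| denotes the determinant. Compared with the M-statistic, the Bhattacharyya distance also accounts for class separability due to differences in covariance, expressed by the second term of the equation.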

- The Jeffries–Matusita distance [47] (JM distance): a separability measure directly related to the probability of a correct classification. It is calculated as a function of the Bhattacharyya distance:

  $$JM = 2\left(1 - e^{-B}\right)$$

  where B is the Bhattacharyya distance; JM therefore ranges between 0 and 2.


- Transformed divergence [48,49] (TD): a maximum likelihood approach that provides a covariance-weighted distance between the class means to determine whether spectral signatures are separable:

  $$D = \frac{1}{2}\operatorname{tr}\left[(C_x - C_y)\left(C_y^{-1} - C_x^{-1}\right)\right] + \frac{1}{2}\operatorname{tr}\left[\left(C_x^{-1} + C_y^{-1}\right)(\mu_x - \mu_y)(\mu_x - \mu_y)^{T}\right]$$

  $$TD = 2\left(1 - e^{-D/8}\right)$$

  where Cx is the covariance matrix of class x, μx is the mean value for class x, D is the divergence, tr is the matrix trace function, and T denotes matrix transposition. Transformed divergence ranges between 0 and 2; because the exponential saturates, increasing distances between the classes receive exponentially decreasing weight. As with the JM distance, TD values are widely interpreted as indicative of the probability of performing a correct classification [48].
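The four measures can be sketched in Python for the single-band case, where each class is summarized by the mean and standard deviation of its reflectance at one wavelength. This is an illustrative sketch, not the authors' implementation: the band-by-band computation is our reading of the results table (which reports one wavelength per class pair), the use of the sample standard deviation is an assumption, and all function names are hypothetical. With a single band, the covariance matrices reduce to variances, the determinants to the variances themselves, and the trace terms to ordinary products. JM is computed here as 2(1 − e^(−B)), which ranges from 0 to 2; some software reports its square root instead, which ranges from 0 to √2.

```python
import math
from statistics import mean, stdev


def m_statistic(mu_x, sd_x, mu_y, sd_y):
    """M = |mu_x - mu_y| / (sd_x + sd_y)."""
    return abs(mu_x - mu_y) / (sd_x + sd_y)


def bhattacharyya(mu_x, sd_x, mu_y, sd_y):
    """Univariate Bhattacharyya distance: mean term plus covariance (variance) term."""
    var_x, var_y = sd_x ** 2, sd_y ** 2
    # (1/8) * d^2 * ((var_x + var_y) / 2)^-1 simplifies to d^2 / (4 (var_x + var_y))
    mean_term = (mu_x - mu_y) ** 2 / (4.0 * (var_x + var_y))
    # (1/2) ln( ((var_x + var_y) / 2) / sqrt(var_x * var_y) )
    cov_term = 0.5 * math.log((var_x + var_y) / (2.0 * sd_x * sd_y))
    return mean_term + cov_term


def jeffries_matusita(b):
    """JM = 2 (1 - exp(-B)), bounded in [0, 2]."""
    return 2.0 * (1.0 - math.exp(-b))


def transformed_divergence(mu_x, sd_x, mu_y, sd_y):
    """TD = 2 (1 - exp(-D / 8)), where D is the univariate divergence."""
    var_x, var_y = sd_x ** 2, sd_y ** 2
    d = (0.5 * (var_x - var_y) * (1.0 / var_y - 1.0 / var_x)
         + 0.5 * (1.0 / var_x + 1.0 / var_y) * (mu_x - mu_y) ** 2)
    return 2.0 * (1.0 - math.exp(-d / 8.0))


def separability(x, y):
    """All four measures for two samples of reflectance at a single band."""
    mu_x, sd_x = mean(x), stdev(x)  # sample standard deviation (assumption)
    mu_y, sd_y = mean(y), stdev(y)
    b = bhattacharyya(mu_x, sd_x, mu_y, sd_y)
    return {
        "M": m_statistic(mu_x, sd_x, mu_y, sd_y),
        "B": b,
        "JM": jeffries_matusita(b),
        "TD": transformed_divergence(mu_x, sd_x, mu_y, sd_y),
    }
```

When the two classes have equal variances, the covariance terms vanish and D/8 equals B, so TD and JM coincide: for means 0 and 2 with unit standard deviations, `bhattacharyya(0, 1, 2, 1)` is 0.5 and both `jeffries_matusita(0.5)` and `transformed_divergence(0, 1, 2, 1)` equal 2(1 − e^(−0.5)) ≈ 0.787. Conversely, two classes with equal means but different spread give M = 0 while B, JM and TD stay positive, which is the limitation of the M-statistic noted above.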

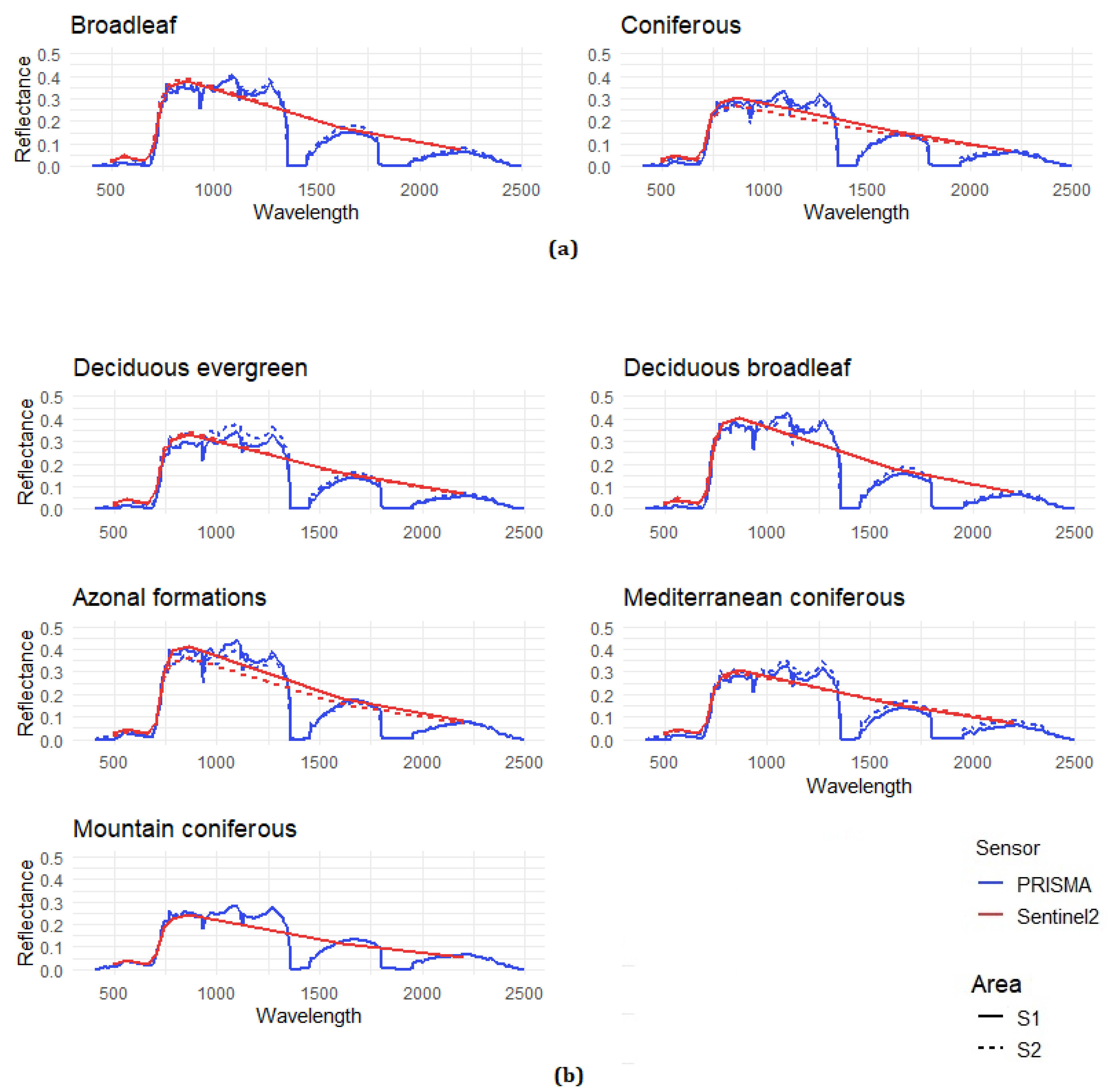

3. Results

4. Discussion

5. Conclusions

- Hyperspectral data were effective in discriminating forest types in both study areas and at both levels of the nomenclature system (average normalized separability higher than 0.50 for four out of six class pairs in Area 1 and nine out of 10 class pairs in Area 2). Sentinel-2 MSI was comparable with PRISMA only in Area 1, at the third level of the nomenclature system.

- The SWIR spectral region proved the most suitable for forest type discrimination. Other notable regions were the blue channel (Area 1) for the broadleaf–coniferous class pair, and the red edge and the NIR plateau (Area 2) for most of the class pairs considered. Sentinel-2 relied primarily on the red-edge region (b6, b7) to separate the forest classes.

- Compared with Sentinel-2, the PRISMA sensor improved the separation between the coniferous and broadleaf classes by 50% in Area 1 and 30% in Area 2. At the fourth level, average separability was 120% higher in Area 1 and 84% higher in Area 2.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kumar, B.; Dikshit, O.; Gupta, A.; Singh, M.K. Feature extraction for hyperspectral image classification: A review. Int. J. Remote Sens. 2020, 41, 6248–6287.

- Li, S.; Wu, H.; Wan, D.; Zhu, J. An effective feature selection method for hyperspectral image classification based on genetic algorithm and support vector machine. Knowl. Based Syst. 2011, 24, 40–48.

- Acquarelli, J.; Marchiori, E.; Buydens, L.M.C.; Tran, T.; Van Laarhoven, T. Spectral spatial classification of hyperspectral images: Three tricks and a new supervised learning setting. Remote Sens. 2018, 10, 1156.

- Defourny, P.; D’Andrimont, R.; Maugnard, A.; Defourny, P. Survey of Hyperspectral Earth Observation Applications from Space in the Sentinel-2 Context. Remote Sens. 2018, 10, 157.

- Liu, H.; Zhang, F.; Zhang, L.; Lin, Y.; Wang, S.; Xie, Y. UNVI-Based Time Series for Vegetation Discrimination Using Separability Analysis and Random Forest Classification. Remote Sens. 2020, 12, 529.

- Guarini, R.; Loizzo, R.; Longo, F.; Mari, S.; Scopa, T.; Varacalli, G. Overview of the PRISMA space and ground segment and its hyperspectral products. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017.

- Rees, G. The Remote Sensing Data Book; Cambridge University Press: Cambridge, UK, 2005.

- Travaglini, D.; Barbati, A.; Chirici, G.; Lombardi, F.; Marchetti, M.; Corona, P. ForestBIOTA data on deadwood monitoring in Europe. Plant Biosyst. 2007, 141, 222–230.

- Hao, X.; Wu, Y.; Wang, P. Angle Distance-Based Hierarchical Background Separation Method for Hyperspectral Imagery Target Detection. Remote Sens. 2020, 12, 697.

- Adams, J.B.; Smith, M.O.; Gillespie, A.R. Imaging spectroscopy: Interpretation based on spectral mixture analysis. In Remote Geochemical Analysis: Elemental and Mineralogical Composition; Pieters, C.M., Englert, P.A.J., Eds.; Press Syndicate of University of Cambridge: Cambridge, UK, 1993; pp. 145–166.

- Guo, B.; Damper, R.; Gunn, S.R.; Nelson, J. A fast separability-based feature-selection method for high-dimensional remotely sensed image classification. Pattern Recognit. 2008, 41, 1653–1662.

- Staenz, K.; Held, A. Summary of current and future terrestrial civilian hyperspectral spaceborne systems. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; pp. 123–126.

- Verrelst, J.; Romijn, E.; Kooistra, L. Mapping Vegetation Density in a Heterogeneous River Floodplain Ecosystem Using Pointable CHRIS/PROBA Data. Remote Sens. 2012, 4, 2866–2889.

- Cook, B.; Corp, L.; Nelson, R.; Middleton, E.; Morton, D.; McCorkel, J.; Masek, J.; Ranson, K.; Ly, V.; Montesano, P. NASA Goddard’s LiDAR, Hyperspectral and Thermal (G-LiHT) Airborne Imager. Remote Sens. 2013, 5, 4045–4066.

- Middleton, E.M.; Ungar, S.G.; Mandl, D.J.; Ong, L.; Frye, S.W.; Campbell, P.E.; Landis, D.R.; Young, J.P.; Pollack, N.H. The Earth Observing One (EO-1) Satellite Mission: Over a Decade in Space. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 427–438.

- Yin, J.; Wang, Y.; Hu, J. A New Dimensionality Reduction Algorithm for Hyperspectral Image Using Evolutionary Strategy. IEEE Trans. Ind. Inf. 2012, 8, 935–943.

- Adams, J.B.; Smith, M.O.; Johnson, P.E. Spectral mixture modeling: A new analysis of rock and soil types at the Viking Lander 1 site. J. Geophys. Res. Atmos. Solid Earth Planets 1986, 91, 8098–8112.

- Adam, E.; Mutanga, O.; Rugege, D. Multispectral and hyperspectral remote sensing for identification and mapping of wetland vegetation: A review. Wetl. Ecol. Manag. 2010, 18, 281–296.

- Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep Learning Classification of Land Cover and Crop Types Using Remote Sensing Data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782.

- Vali, A.; Comai, S.; Matteucci, M. Deep Learning for Land Use and Land Cover Classification based on Hyperspectral and Multispectral Earth Observation Data: A Review. Remote Sens. 2020, 12, 2495.

- Roberts, D.A.; Ustin, S.L.; Ogunjemiyo, S.; Greenberg, J.A.; Dobrowski, S.Z.; Chen, J.; Hinckley, T.M. Spectral and Structural Measures of Northwest Forest Vegetation at Leaf to Landscape Scales. Ecosystems 2004, 7, 545–562.

- Vaiphasa, C.; Ongsomwang, S.; Vaiphasa, T.; Skidmore, A.K. Tropical mangrove species discrimination using hyperspectral data: A laboratory study. Estuar. Coast. Shelf Sci. 2005, 65, 371–379.

- Dalponte, M.; Orka, H.O.; Gobakken, T.; Gianelle, D.; Naesset, E. Tree Species Classification in Boreal Forests with Hyperspectral Data. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2632–2645.

- Dalponte, M.; Bruzzone, L.; Gianelle, D. Tree species classification in the Southern Alps based on the fusion of very high geometrical resolution multispectral/hyperspectral images and LiDAR data. Remote Sens. Environ. 2012, 123, 258–270.

- Zeng, W.; Lin, H.; Yan, E.; Jiang, Q.; Lu, H.; Wu, S. Optimal selection of remote sensing feature variables for land cover classification. In Proceedings of the 2018 Fifth International Workshop on Earth Observation and Remote Sensing Applications (EORSA), Xi’an, China, 18–20 June 2018.

- Aria, S.E.H.; Menenti, M.; Gorte, B. Spectral discrimination based on the optimal informative parts of the spectrum. In Proceedings of the Image and Signal Processing for Remote Sensing XVIII, Edinburgh, UK, 8 November 2012; Volume 8537, p. 853709.

- Attarchi, S.; Gloaguen, R. Classifying Complex Mountainous Forests with L-Band SAR and Landsat Data Integration: A Comparison among Different Machine Learning Methods in the Hyrcanian Forest. Remote Sens. 2014, 6, 3624–3647.

- Huang, H.; Roy, D.; Boschetti, L.; Zhang, H.K.; Yan, L.; Kumar, S.S.; Gomez-Dans, J.; Li, J. Separability Analysis of Sentinel-2A Multi-Spectral Instrument (MSI) Data for Burned Area Discrimination. Remote Sens. 2016, 8, 873.

- Ba, R.; Song, W.; Li, X.; Xie, Z.; Lo, S. Integration of Multiple Spectral Indices and a Neural Network for Burned Area Mapping Based on MODIS Data. Remote Sens. 2019, 11, 326.

- Chang, C.-I.; Wang, S. Constrained band selection for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2006, 44, 1575–1585.

- Büttner, G.; Feranec, J.; Jaffrain, G.; Mari, L.; Maucha, G.; Soukup, T. The CORINE land cover 2000 project. EARSeL eProc. 2004, 3, 331–346.

- FAO. Global Forest Resources Assessment 2010. Terms and Definition. Working Paper 144/E. Available online: http://www.fao.org/docrep/014/am665e/am665e00.pdf (accessed on 12 March 2020).

- Forest Europe. Streamline Global Forest Reporting and Strengthen Collaboration among International Criteria and Indicator Processes. In Proceedings of the Joint Workshop, Victoria, BC, Canada, 18–20 October 2011; ISBN 978-1-100-20248-8.

- Vizzarri, M.; Chiavetta, U.; Chirici, G.; Garfì, V.; Bastrup-Birk, A.; Marchetti, M. Comparing multisource harmonized forest types mapping: A case study from central Italy. iFor. Biogeosci. 2015, 8, 59–66.

- Chiavetta, U.; Camarretta, N.; Garfì, V.; Ottaviano, M.; Chirici, G.; Vizzarri, M.; Marchetti, M. Harmonized forest categories in central Italy. J. Maps 2016, 12, 98–100.

- ASI. PRISMA Products Specification Document Issue 2.3. Available online: http://prisma.asi.it/missionselect/docs/PRISMA%20Product%20Specifications_Is2_3.pdf (accessed on 12 March 2020).

- Loizzo, R.; Guarini, R.; Longo, F.; Scopa, T.; Formaro, R.; Facchinetti, C.; Varacalli, G. PRISMA: The Italian Hyperspectral Mission. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 175–178.

- Fattorini, L.; Marcheselli, M.; Pisani, C. A three-phase sampling strategy for large-scale multiresource forest inventories. J. Agric. Biol. Environ. Stat. 2006, 11, 296–316.

- Busetto, L. Prismaread: An R Package for Importing PRISMA L1/L2 Hyperspectral Data and Convert Them to a More User Friendly Format—v0.1.0. 2020. Available online: https://github.com/lbusett/prismaread (accessed on 2 March 2020).

- Mohan, B.K.; Porwal, A. Hyperspectral image processing and analysis. Curr. Sci. 2015, 108, 833–841.

- Somers, B.; Asner, G.P. Multi-temporal hyperspectral mixture analysis and feature selection for invasive species mapping in rainforests. Remote Sens. Environ. 2013, 136, 14–27.

- Yeom, J.; Han, Y.; Kim, Y. Separability analysis and classification of rice fields using KOMPSAT-2 High Resolution Satellite Imagery. Res. J. Chem. Environ. 2013, 17, 136–144.

- Hu, Q.; Sulla-Menashe, D.; Xu, B.; Yin, H.; Tang, H.; Yang, P.; Wu, W. A phenology-based spectral and temporal feature selection method for crop mapping from satellite time series. Int. J. Appl. Earth Obs. Geoinf. 2019, 80, 218–229.

- Evans, J.; Murphy, M.A.; Ram, K. Spatialeco: Spatial Analysis and Modelling Utilities. 2020. Available online: https://CRAN.R-project.org/package=spatialEco (accessed on 15 March 2020).

- Kaufman, Y.; Remer, L. Detection of forests using mid-IR reflectance: An application for aerosol studies. IEEE Trans. Geosci. Remote Sens. 1994, 32, 672–683.

- Bhattacharyya, A. On a measure of divergence between two statistical populations defined by their probability distributions. Bull. Calcutta Math. Soc. 1943, 35, 99–109.

- Bruzzone, L.; Roli, F.; Serpico, S.B. An extension to multiclass cases of the Jeffreys-Matusita distance. IEEE Trans. Geosci. Remote Sens. 1995, 33, 1318–1321.

- Moik, J.G. Digital Processing of Remotely Sensed Images; Scientific and Technical Information Branch, National Aeronautics and Space Administration: Washington, DC, USA, 1980.

- Du, H.; Chang, C.I.; Ren, H.; D’Amico, F.M.; Jensen, J.O. New Hyperspectral Discrimination Measure for Spectral Characterization. Opt. Eng. 2004, 43, 1777–1786.

- Teillet, P.; Guindon, B.; Goodenough, D. On the Slope-Aspect Correction of Multispectral Scanner Data. Can. J. Remote Sens. 1982, 8, 84–106.

- Richter, R. Correction of satellite imagery over mountainous terrain. Appl. Opt. 1998, 37, 4004–4015.

- Van Aardt, J.A.N.; Wynne, R.H. Examining pine spectral separability using hyperspectral data from an airborne sensor: An extension of field-based results. Int. J. Remote Sens. 2007, 28, 431–436.

- Fassnacht, F.E.; Neumann, C.; Forster, M.; Buddenbaum, H.; Ghosh, A.; Clasen, A.; Joshi, P.K.; Koch, B. Comparison of Feature Reduction Algorithms for Classifying Tree Species With Hyperspectral Data on Three Central European Test Sites. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2547–2561.

- Ghosh, A.; Fassnacht, F.E.; Joshi, P.K.; Koch, B. A framework for mapping tree species combining hyperspectral and LiDAR data: Role of selected classifiers and sensor across three spatial scales. Int. J. Appl. Earth Obs. Geoinf. 2014, 26, 49–63.

- Liu, M.; Yu, T.; Gu, X.; Sun, Z.; Yang, J.; Zhang, Z.; Mi, X.; Cao, W.; Li, J. The Impact of Spatial Resolution on the Classification of Vegetation Types in Highly Fragmented Planting Areas Based on Unmanned Aerial Vehicle Hyperspectral Images. Remote Sens. 2020, 12, 146.

- Roth, K.L.; Roberts, D.A.; Dennison, P.E.; Peterson, S.; Alonzo, M. The impact of spatial resolution on the classification of plant species and functional types within imaging spectrometer data. Remote Sens. Environ. 2015, 171, 45–57.

- Herold, M.; Roberts, D.A. Multispectral Satellites—Imaging Spectrometry—LIDAR: Spatial—Spectral Tradeoffs in Urban Mapping. Int. J. Geoinform. 2006, 2, 1–13.

- Goodenough, D.; Dyk, A.; Niemann, K.; Pearlman, J.; Chen, H.; Han, T.; Murdoch, M.; West, C. Processing Hyperion and ALI for forest classification. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1321–1331.

| Sensor | Spatial Resolution (m) | Number of Bands | Swath (km) | Spectral Range (nm) | Spectral Resolution (nm) | Launch |
|---|---|---|---|---|---|---|
| Hyperion, EO-1 (USA) | 30 | 196 | 7.5 | 427–2395 | 10 | 2000 |
| CHRIS, PROBA (ESA) | 25 | 19 | 17.5 | 200–1050 | 1.25–11 | 2001 |
| HyspIRI VSWIR (USA) | 60 | 210 | 145 | 380–2500 | 10 | 2020 |
| EnMAP HSI (Germany) | 30 | 200 | 30 | 420–1030 | 5–10 | Not launched yet |
| TianGong-1 (China) | 10 (VNIR), 20 (SWIR) | 128 | 10 | 400–2500 | 10 (VNIR), 23 (SWIR) | 2011 |
| HISUI (Japan) | 30 | 185 | 30 | 400–2500 | 10 (VNIR), 12.5 (SWIR) | 2019 |
| SHALOM (Italy–Israel) | 10 | 275 | 30 | 400–2500 | 10 | 2021 |
| HypXIM (France) | 8 | 210 | 145–600 | 400–2500 | 10 | 2022 |
| PRISMA (Italy) | 30 | 240 | 30 | 400–2500 | 10 | 2019 |

| Parameter | Value |
|---|---|
| Orbit altitude reference | 615 km |
| Swath/Field of view | 30 km/2.77° |
| Ground Sample Distance | Hyperspectral: 30 m; PAN: 5 m |
| Spatial pixels | Hyperspectral: 1000; PAN: 6000 |
| Pixel size | Hyperspectral: 30 × 30 μm; PAN: 6.5 × 6.5 μm |
| Spectral range | VNIR: 400–1010 nm (66 bands); SWIR: 920–2500 nm (173 bands); PAN: 400–700 nm |
| Spectral sampling interval (SSI) | ≤12 nm |
| Spectral width | ≤12 nm |
| Spectral calibration accuracy | ±0.1 nm |
| Radiometric quantization | 12 bit |
| VNIR signal-to-noise ratio (SNR) | >200:1 |
| SWIR SNR | >100:1 |
| PAN SNR | >240:1 |
| Absolute radiometric accuracy | Better than 5% |
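As a quick consistency check on the PRISMA characteristics listed above, the nominal swath and ground sample distances can be recovered from the orbit altitude, the field of view and the number of across-track pixels. The sketch below assumes a nadir view over a flat Earth (no Earth curvature, no off-nadir pointing), so it is an approximation rather than the mission's geometric model; the constant and function names are illustrative.

```python
import math

# Values taken from the PRISMA characteristics table
ALTITUDE_KM = 615.0   # orbit altitude reference
FOV_DEG = 2.77        # full across-track field of view
SWATH_KM = 30.0       # nominal swath
ACROSS_TRACK_PIXELS = {"hyperspectral": 1000, "PAN": 6000}


def nadir_swath_km(altitude_km: float, fov_deg: float) -> float:
    """Swath width of a nadir-looking sensor, flat-Earth approximation."""
    half_angle_rad = math.radians(fov_deg / 2.0)
    return 2.0 * altitude_km * math.tan(half_angle_rad)


def ground_sample_distance_m(swath_km: float, pixels: int) -> float:
    """Ground distance covered by one across-track pixel, in metres."""
    return swath_km * 1000.0 / pixels


swath = nadir_swath_km(ALTITUDE_KM, FOV_DEG)
gsd = {name: ground_sample_distance_m(SWATH_KM, n)
       for name, n in ACROSS_TRACK_PIXELS.items()}
print(f"swath from FOV: {swath:.1f} km")  # about 29.7 km
print(gsd)                                # {'hyperspectral': 30.0, 'PAN': 5.0}
```

The swath implied by the field of view, about 29.7 km, agrees with the nominal 30 km to within 1%, and dividing the nominal swath by 1000 and 6000 across-track pixels reproduces the 30 m hyperspectral and 5 m PAN ground sample distances.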

| Third Level | Description | Fourth Level | Description |
|---|---|---|---|
| 3.1.1 | Broadleaf | 3.1.1.1 | Deciduous evergreen |
| | | 3.1.1.2 | Deciduous broadleaf |
| | | 3.1.1.5 | Azonal formation |
| 3.1.2 | Coniferous | 3.1.2.1 | Mediterranean coniferous |
| | | 3.1.2.2 | Mountain coniferous |

| Area | Class Pair | PRISMA Wavelength | PRISMA B | PRISMA JM | PRISMA M | PRISMA TD | Sentinel-2 Wavelength | Sentinel-2 B | Sentinel-2 JM | Sentinel-2 M | Sentinel-2 TD |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Area 1 | 3112_3111 | 48 | 0.81 | 1.11 | 1.27 | 1.11 | 782 | 0.44 | 0.71 | 0.93 | 0.73 |
| | 3115_3111 | 236 | 2.55 | 1.40 | 0.18 | 2.00 | 2202 | 0.47 | 0.75 | 0.43 | 1.47 |
| | 3115_3112 | 464 | 2.11 | 1.36 | 0.22 | 2.00 | 2202 | 0.63 | 0.93 | 0.39 | 1.88 |
| | 3121_3111 | 696 | 0.90 | 1.19 | 0.03 | 2.00 | 1613 | 0.15 | 0.27 | 0.05 | 0.37 |
| | 3121_3112 | 989 | 0.77 | 1.07 | 1.24 | 1.07 | 864 | 0.57 | 0.87 | 1.07 | 0.90 |
| | 3121_3115 | 1156 | 3.79 | 1.42 | 0.18 | 2.00 | 782 | 0.32 | 0.55 | 0.69 | 0.72 |
| Area 2 | 3112_3111 | 100 | 1.00 | 1.27 | 0.22 | 2.00 | 1613 | 0.20 | 0.36 | 0.63 | 0.36 |
| | 3115_3111 | 375 | 2.07 | 1.35 | 0.24 | 2.00 | 664 | 0.36 | 0.60 | 0.05 | 1.09 |
| | 3115_3112 | 561 | 1.71 | 1.224 | 0.10 | 2.00 | 664 | 0.51 | 0.80 | 0.03 | 1.62 |
| | 3121_3111 | 791 | 2.41 | 1.40 | 0.20 | 2.00 | 740 | 0.31 | 0.54 | 0.79 | 0.54 |
| | 3121_3112 | 1021 | 2.21 | 1.38 | 0.11 | 2.00 | 740 | 0.85 | 1.15 | 1.30 | 1.15 |
| | 3121_3115 | 1190 | 0.41 | 0.67 | 0.89 | 0.68 | 782 | 0.27 | 0.47 | 0.73 | 0.48 |
| | 3121_3122 | 1480 | 1.25 | 1.23 | 0.15 | 2.00 | 1613 | 0.30 | 0.52 | 0.77 | 0.53 |
| | 3122_3111 | 1985 | 2.10 | 1.35 | 0.24 | 2.00 | 740 | 0.61 | 0.91 | 1.10 | 0.92 |
| | 3122_3112 | 2171 | 1.75 | 1.25 | 0.09 | 2.00 | 740 | 1.35 | 1.28 | 1.64 | 1.48 |
| | 3122_3115 | 2380 | 0.88 | 1.17 | 1.33 | 1.18 | 782 | 0.58 | 0.88 | 1.07 | 0.93 |
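The number of class pairs in the separability table follows from the number of fourth-level classes in each area: n classes give n(n − 1)/2 unordered pairs, i.e. six pairs for the four classes of Area 1 and ten for the five classes of Area 2. The sketch below enumerates the pairs and collapses each fourth-level code to its third-level parent (e.g. 3112 to 311, mirroring 3.1.1.2 and 3.1.1 in the nomenclature). The class lists are read off the pair labels in the table; the helper names are hypothetical.

```python
from itertools import combinations

# Fourth-level class codes appearing in the class-pair labels of the table
AREA_CLASSES = {
    "Area 1": ["3111", "3112", "3115", "3121"],
    "Area 2": ["3111", "3112", "3115", "3121", "3122"],
}


def class_pairs(codes):
    """Return every unordered pair of class codes as (first, second) tuples."""
    return list(combinations(sorted(codes), 2))


def third_level(code):
    """Collapse a fourth-level code such as '3112' to its third-level parent '311'."""
    return code[:3]


for area, codes in AREA_CLASSES.items():
    n = len(codes)
    pairs = class_pairs(codes)
    assert len(pairs) == n * (n - 1) // 2  # n(n - 1)/2 unordered pairs
    parents = sorted({third_level(c) for c in codes})
    print(area, len(pairs), "fourth-level pairs; third-level classes:", parents)
```

At the third level both areas reduce to the broadleaf (311) and coniferous (312) classes, so a single class pair remains per area.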

| Class 1 \ Class 2 | Area 1: 311 | Area 1: 312 | Area 2: 311 | Area 2: 312 |
|---|---|---|---|---|
| 311 | / | 864 | / | 782 |
| 312 | 450 | / | 1841 | / |

| Class 1 \ Class 2 | Area 1: 3111 | Area 1: 3112 | Area 1: 3121 | Area 1: 3122 | Area 2: 3112 | Area 2: 3115 | Area 2: 3121 | Area 2: 3122 |
|---|---|---|---|---|---|---|---|---|
| 3111 | / | 782 | 664 | / | 1613 | 703 | 740 | 740 |
| 3112 | 814 | / | 864 | / | / | 740 | 740 | 740 |
| 3115 | 443 | 428 | 782 | / | 1373 | / | 782 | 782 |
| 3121 | 443 | 1029 | / | / | 1373 | 731 | / | 1613 |
| 3122 | / | / | / | / | 1373 | 1142 | 1361 | / |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Vangi, E.; D’Amico, G.; Francini, S.; Giannetti, F.; Lasserre, B.; Marchetti, M.; Chirici, G. The New Hyperspectral Satellite PRISMA: Imagery for Forest Types Discrimination. Sensors 2021, 21, 1182. https://doi.org/10.3390/s21041182
