Saliency-Guided Nonsubsampled Shearlet Transform for Multisource Remote Sensing Image Fusion

Abstract

1. Introduction

2. Related Works

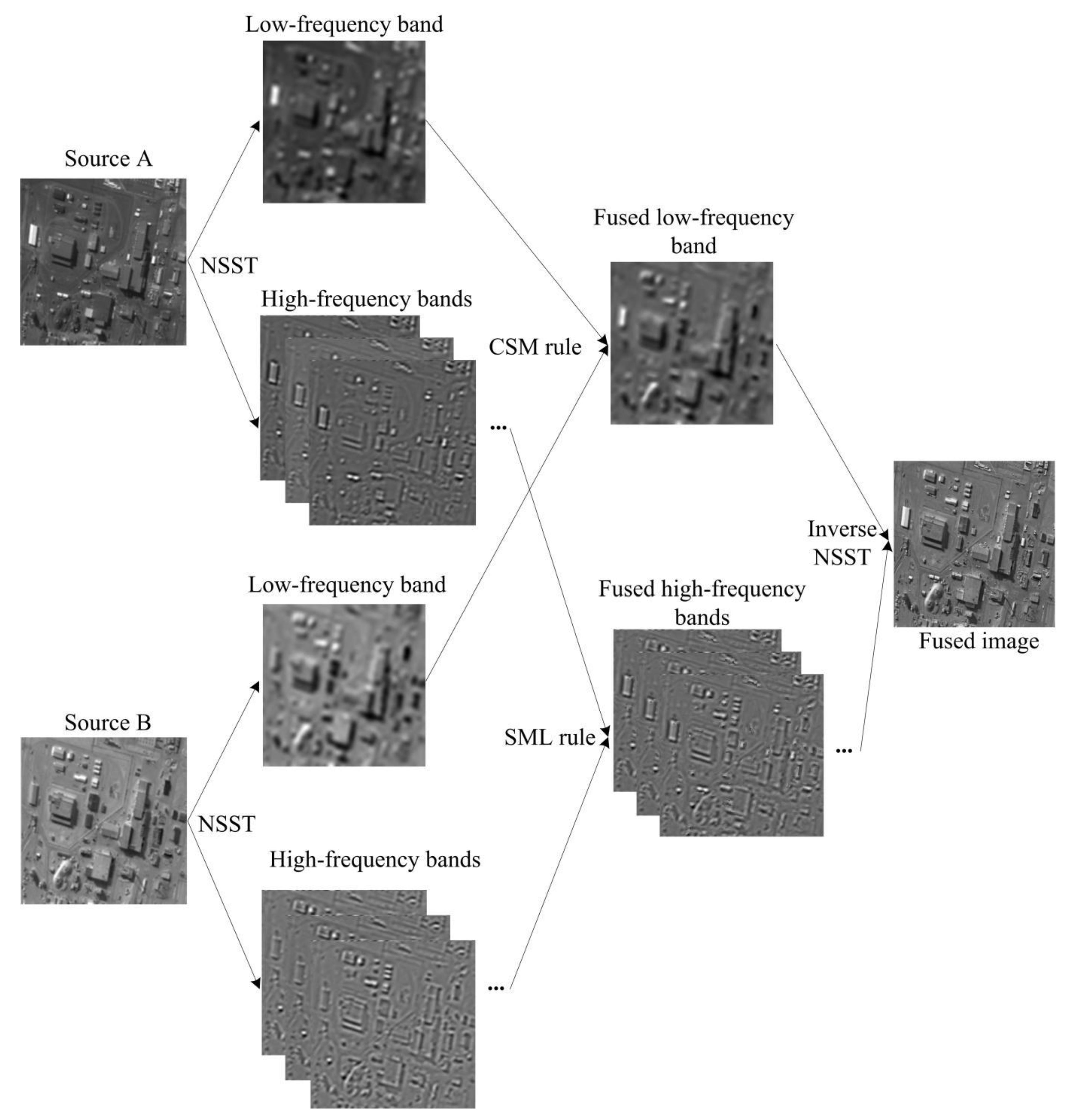

Nonsubsampled Shearlet Transform

3. Proposed Fusion Method

3.1. Fusion of Low-Frequency Components

3.2. Fusion of High-Frequency Components

| Algorithm 1. Remote sensing image fusion via NSST |
|---|
| Input: the source remote sensing images A and B |
| Output: the fused image F |
| Parameters: N, the number of NSST decomposition levels; the number of directions at each decomposition level |
| Step 1: NSST decomposition. Each input image, A and B, is decomposed into a low-frequency sub-band and a set of high-frequency sub-bands. |
| Step 2: low-frequency band fusion rule. (1) The saliency maps of the low-frequency bands and the corresponding weight matrices are calculated using Equations (1)–(5). (2) The fused low-frequency band LF is obtained using Equation (6). |
| Step 3: high-frequency band fusion rule. (1) The sum-modified-Laplacian (SML) of the high-frequency bands is computed using Equations (7)–(8). (2) The fused high-frequency band HF is computed using Equation (9). |
| Step 4: inverse NSST and image reconstruction. The fused image F is reconstructed by applying the inverse NSST to the fused low- and high-frequency bands LF and HF. |
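
The four steps of Algorithm 1 can be illustrated with a small, dependency-free Python sketch. This is not the authors' implementation and does not reproduce Equations (1)–(9). NSST is not available in the Python standard library, so a hypothetical two-scale box-filter split (`decompose`) stands in for Steps 1 and 4. A hypothetical mean-deviation saliency (`saliency`) stands in for Equations (1)–(5). The high-frequency rule assumes the common textbook sum-modified-Laplacian with choose-max selection as a plausible reading of Equations (7)–(9).

```python
# Sketch of the four steps of Algorithm 1 on grayscale images stored as
# lists of lists. ASSUMPTIONS (not the paper's equations): a two-scale
# box-filter split replaces NSST, and a mean-deviation saliency replaces
# Eqs. (1)-(5). The SML choose-max rule is a common textbook form.


def box_mean(img, r=1):
    """Mean over a (2r+1)x(2r+1) window, with borders replicated."""
    h, w = len(img), len(img[0])
    k = (2 * r + 1) ** 2
    return [[sum(img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                 for dy in range(-r, r + 1) for dx in range(-r, r + 1)) / k
             for x in range(w)] for y in range(h)]


def decompose(img):
    """Step 1 stand-in: undecimated split, low = local mean, high = residual."""
    low = box_mean(img)
    high = [[p - q for p, q in zip(ri, rl)] for ri, rl in zip(img, low)]
    return low, high


def saliency(band):
    """Hypothetical saliency: smoothed absolute deviation from the band mean."""
    mean = sum(map(sum, band)) / (len(band) * len(band[0]))
    return box_mean([[abs(v - mean) for v in row] for row in band])


def fuse_low(la, lb, eps=1e-12):
    """Step 2 stand-in: saliency-weighted average of two low-frequency bands."""
    sa, sb = saliency(la), saliency(lb)
    out = []
    for ra, rb, rsa, rsb in zip(la, lb, sa, sb):
        row = []
        for a, b, s1, s2 in zip(ra, rb, rsa, rsb):
            wa = (s1 + eps) / (s1 + s2 + 2 * eps)  # equal weights if both are 0
            row.append(wa * a + (1.0 - wa) * b)
        out.append(row)
    return out


def sml(band, step=1, r=1):
    """Sum-modified-Laplacian: modified Laplacian summed over a window."""
    h, w = len(band), len(band[0])

    def px(y, x):  # replicated border
        return band[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    ml = [[abs(2 * px(y, x) - px(y, x - step) - px(y, x + step))
           + abs(2 * px(y, x) - px(y - step, x) - px(y + step, x))
           for x in range(w)] for y in range(h)]
    k = (2 * r + 1) ** 2
    return [[v * k for v in row] for row in box_mean(ml, r)]  # mean -> sum


def fuse_high(ha, hb):
    """Step 3 stand-in: keep the coefficient with the larger SML."""
    sa, sb = sml(ha), sml(hb)
    return [[a if s1 >= s2 else b for a, b, s1, s2 in zip(ra, rb, r1, r2)]
            for ra, rb, r1, r2 in zip(ha, hb, sa, sb)]


def fuse(img_a, img_b):
    """Steps 1-4: decompose, fuse each band, then invert (low + high)."""
    la, ha = decompose(img_a)
    lb, hb = decompose(img_b)
    lf, hf = fuse_low(la, lb), fuse_high(ha, hb)
    return [[p + q for p, q in zip(r1, r2)] for r1, r2 in zip(lf, hf)]


if __name__ == "__main__":
    a = [[10, 10, 80, 80] for _ in range(4)]  # vertical edge
    b = [[40] * 4 for _ in range(4)]  # flat image: no high-frequency detail
    for row in fuse(a, b):
        print([round(v, 2) for v in row])
```

Because the stand-in split is exactly invertible (low + high = input), fusing an image with itself returns that image. When one source is flat, the choose-max rule passes the other source's detail through unchanged. A real NSST implementation would replace `decompose` and the final sum with multi-level, multi-direction forward and inverse transforms.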

4. Experimental Results and Discussion

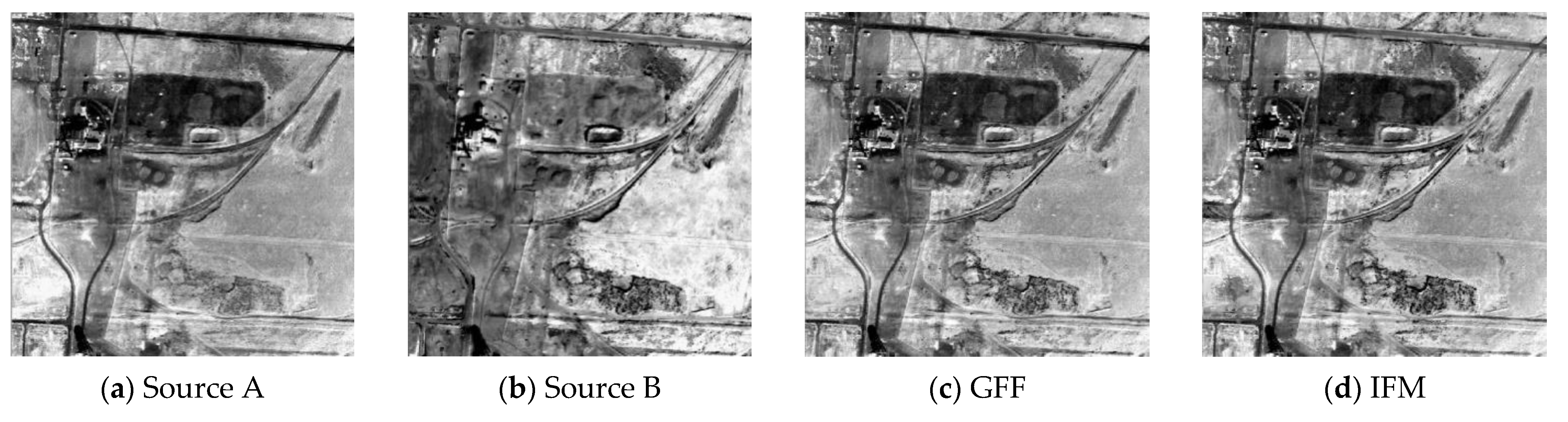

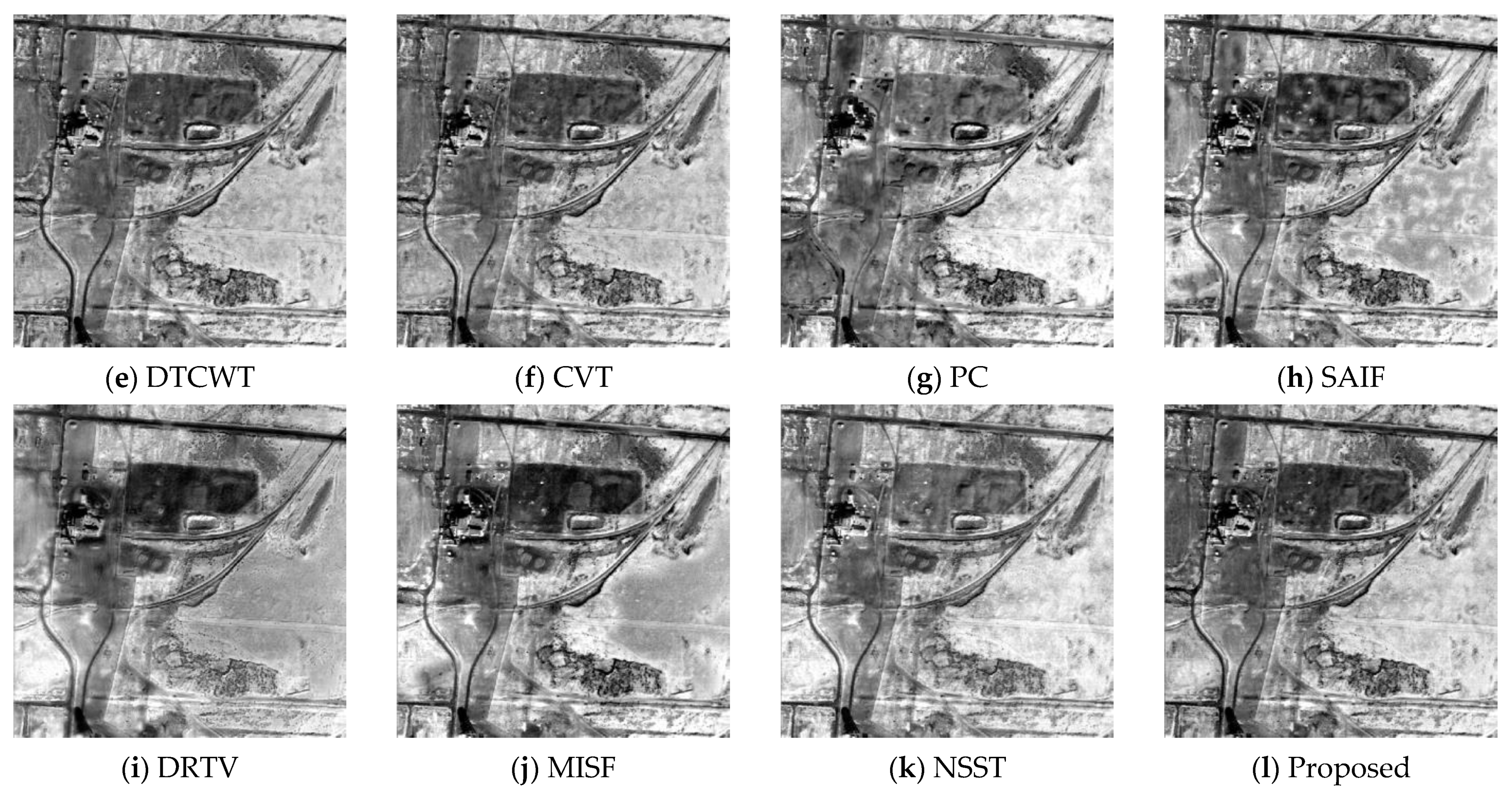

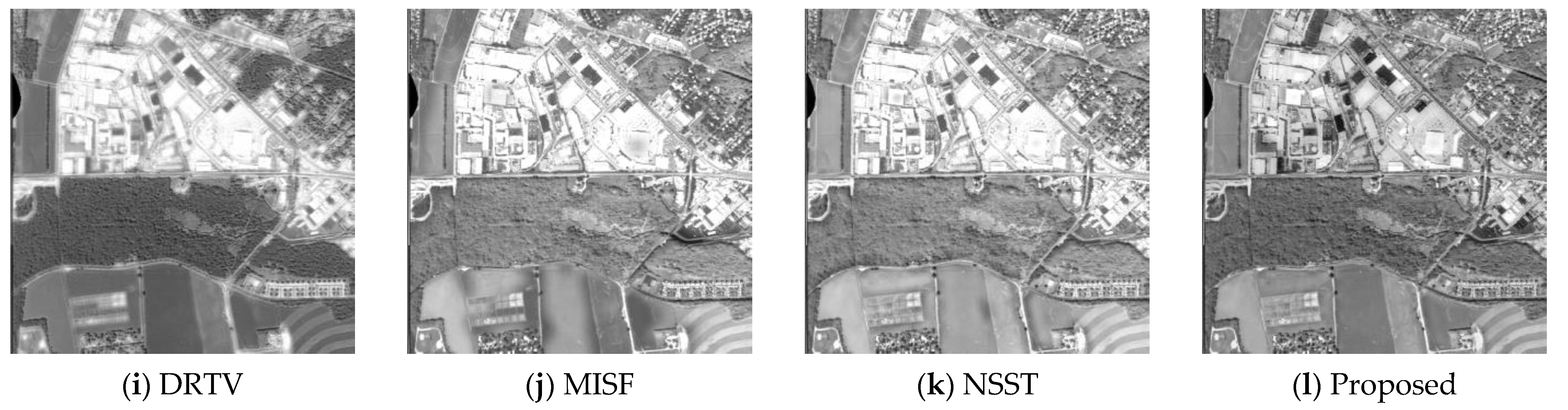

4.1. Qualitative Analysis

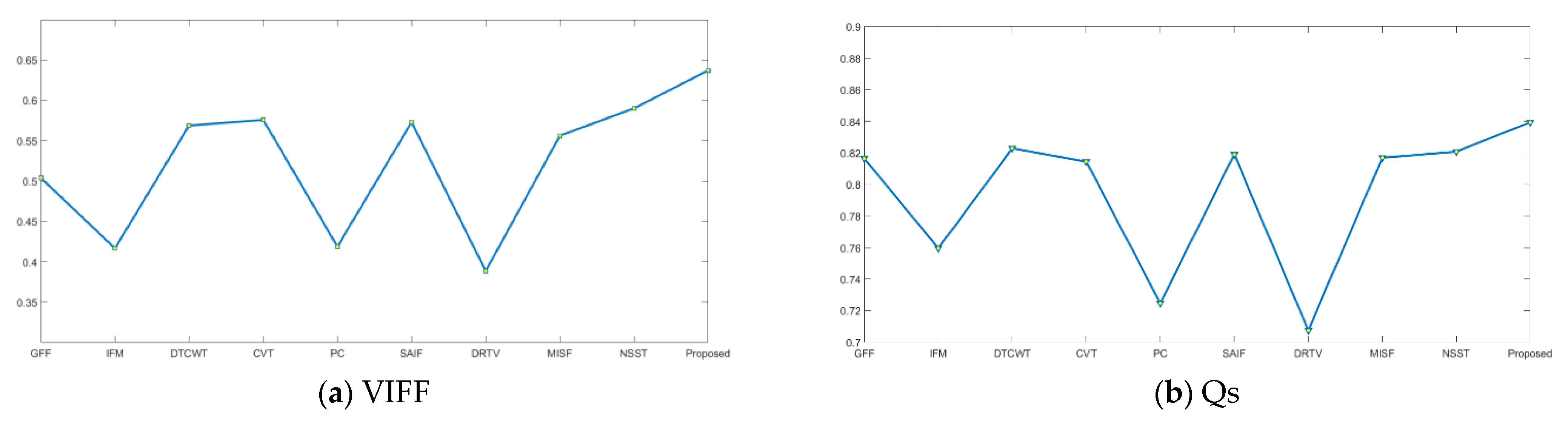

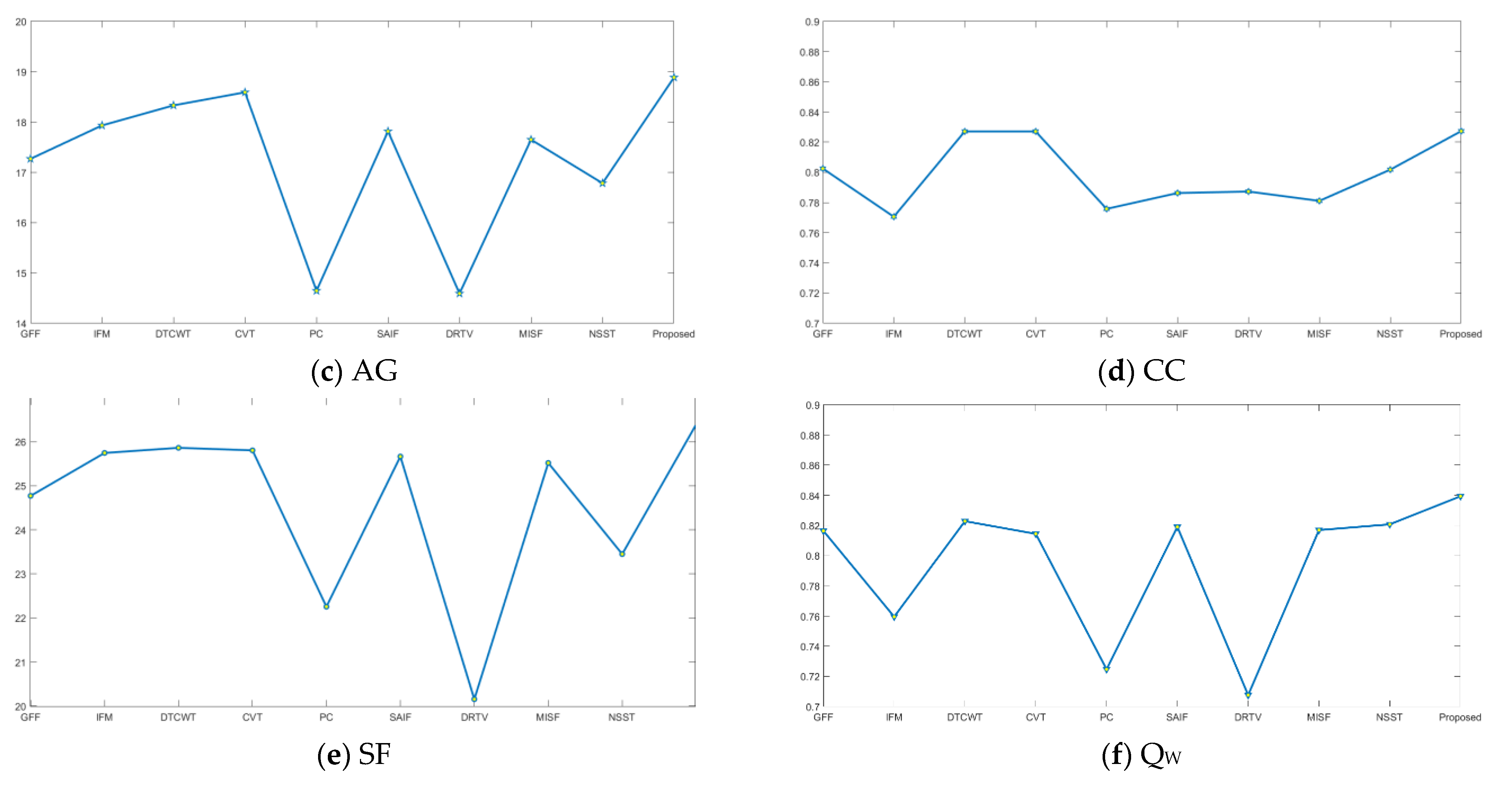

4.2. Quantitative Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Method | VIFF | QS | AG | CC | SF | QW |
|---|---|---|---|---|---|---|
| GFF | 0.4057 | 0.8064 | 8.7903 | 0.7493 | 14.4590 | 0.8079 |
| IFM | 0.2871 | 0.7174 | 9.5061 | 0.6834 | 15.6730 | 0.7091 |
| DTCWT | 0.5380 | 0.8140 | 10.0384 | 0.7816 | 15.7787 | 0.8214 |
| CVT | 0.5534 | 0.7984 | 10.2397 | 0.7771 | 15.5899 | 0.8165 |
| PC | 0.4246 | 0.7477 | 9.1494 | 0.6668 | 14.6779 | 0.6555 |
| SAIF | 0.5662 | 0.8038 | 9.3884 | 0.6798 | 15.2025 | 0.8261 |
| DRTV | 0.2895 | 0.7316 | 7.8006 | 0.7176 | 11.6689 | 0.6561 |
| MISF | 0.5226 | 0.8051 | 9.1365 | 0.6575 | 14.9136 | 0.8142 |
| NSST | 0.6158 | 0.8218 | 10.0766 | 0.7272 | 15.4583 | 0.8304 |
| Proposed | 0.6130 | 0.8438 | 10.4592 | 0.7893 | 16.2149 | 0.8434 |
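
Three of the six columns (AG, SF, and CC) have widely used closed-form definitions in the fusion literature. The sketch below assumes those common forms; the paper's exact normalizations may differ. CC is taken here, as an assumption, to be the mean of the correlations between the fused image F and each source image. VIFF, QS, and QW rely on more elaborate perceptual or structural models and are not sketched.

```python
import math


def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2); RF/CF are RMS row/column first differences."""
    m, n = len(img), len(img[0])
    rf2 = sum((img[i][j] - img[i][j - 1]) ** 2
              for i in range(m) for j in range(1, n)) / (m * n)
    cf2 = sum((img[i][j] - img[i - 1][j]) ** 2
              for i in range(1, m) for j in range(n)) / (m * n)
    return math.sqrt(rf2 + cf2)


def average_gradient(img):
    """AG: mean of sqrt((dx^2 + dy^2) / 2) over forward differences."""
    m, n = len(img), len(img[0])
    total = sum(math.sqrt(((img[i][j + 1] - img[i][j]) ** 2
                           + (img[i + 1][j] - img[i][j]) ** 2) / 2)
                for i in range(m - 1) for j in range(n - 1))
    return total / ((m - 1) * (n - 1))


def correlation(a, b):
    """Pearson correlation coefficient between two equally sized images."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def fusion_cc(a, b, f):
    """Assumed CC for fusion: average of corr(A, F) and corr(B, F)."""
    return (correlation(a, f) + correlation(b, f)) / 2


if __name__ == "__main__":
    f = [[0, 1], [0, 1]]
    print(spatial_frequency(f), average_gradient(f))
```

Larger AG and SF values indicate more fine detail and sharper transitions in the fused image. A larger CC indicates that the fused image stays closer to the sources' intensity structure.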

| Method | VIFF | QS | AG | CC | SF | QW |
|---|---|---|---|---|---|---|
| GFF | 0.3982 | 0.7197 | 26.7401 | 0.8926 | 35.1380 | 0.7640 |
| IFM | 0.3679 | 0.6925 | 27.4735 | 0.8840 | 36.6562 | 0.7345 |
| DTCWT | 0.5255 | 0.7384 | 28.8500 | 0.8899 | 37.5651 | 0.7866 |
| CVT | 0.5396 | 0.7310 | 29.2726 | 0.8896 | 37.6290 | 0.7828 |
| PC | 0.3712 | 0.6379 | 24.6670 | 0.8748 | 34.9834 | 0.6894 |
| SAIF | 0.4689 | 0.7239 | 27.9649 | 0.8875 | 37.6971 | 0.7872 |
| DRTV | 0.3633 | 0.6082 | 22.4563 | 0.8694 | 31.2856 | 0.6744 |
| MISF | 0.4630 | 0.7252 | 27.2744 | 0.8859 | 36.6062 | 0.7721 |
| NSST | 0.5119 | 0.7521 | 28.8961 | 0.8820 | 37.0427 | 0.7872 |
| Proposed | 0.5940 | 0.7625 | 30.1132 | 0.8921 | 38.9878 | 0.8034 |

| Method | VIFF | QS | AG | CC | SF | QW |
|---|---|---|---|---|---|---|
| GFF | 0.4048 | 0.7965 | 22.7779 | 0.6300 | 33.9869 | 0.7602 |
| IFM | 0.2564 | 0.6778 | 23.4184 | 0.6315 | 34.6252 | 0.5919 |
| DTCWT | 0.4120 | 0.7772 | 24.5238 | 0.6583 | 35.9560 | 0.7537 |
| CVT | 0.4258 | 0.7614 | 24.8528 | 0.6610 | 35.6106 | 0.7490 |
| PC | 0.3381 | 0.7186 | 22.9823 | 0.6226 | 35.0967 | 0.6680 |
| SAIF | 0.3493 | 0.7689 | 24.1520 | 0.6217 | 36.0128 | 0.7543 |
| DRTV | 0.2970 | 0.6430 | 18.5259 | 0.5972 | 25.2082 | 0.5422 |
| MISF | 0.3838 | 0.7722 | 23.6538 | 0.6112 | 36.1746 | 0.7535 |
| NSST | 0.4299 | 0.7911 | 24.2249 | 0.6324 | 35.5451 | 0.7750 |
| Proposed | 0.5430 | 0.7965 | 25.3122 | 0.6512 | 36.5362 | 0.7706 |
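
The table above can be read column by column with a short convenience script. It is not part of the paper. It lists the method or methods with the highest score for each metric, assuming that larger values are better for all six metrics, and it keeps ties. The scores are transcribed from the table.

```python
METRICS = ["VIFF", "QS", "AG", "CC", "SF", "QW"]

# Scores transcribed from the table above (one row per method).
SCORES = {
    "GFF": [0.4048, 0.7965, 22.7779, 0.6300, 33.9869, 0.7602],
    "IFM": [0.2564, 0.6778, 23.4184, 0.6315, 34.6252, 0.5919],
    "DTCWT": [0.4120, 0.7772, 24.5238, 0.6583, 35.9560, 0.7537],
    "CVT": [0.4258, 0.7614, 24.8528, 0.6610, 35.6106, 0.7490],
    "PC": [0.3381, 0.7186, 22.9823, 0.6226, 35.0967, 0.6680],
    "SAIF": [0.3493, 0.7689, 24.1520, 0.6217, 36.0128, 0.7543],
    "DRTV": [0.2970, 0.6430, 18.5259, 0.5972, 25.2082, 0.5422],
    "MISF": [0.3838, 0.7722, 23.6538, 0.6112, 36.1746, 0.7535],
    "NSST": [0.4299, 0.7911, 24.2249, 0.6324, 35.5451, 0.7750],
    "Proposed": [0.5430, 0.7965, 25.3122, 0.6512, 36.5362, 0.7706],
}


def best_per_metric(scores, metrics):
    """Map each metric to the method(s) with the highest value (ties kept)."""
    best = {}
    for k, metric in enumerate(metrics):
        top = max(row[k] for row in scores.values())
        best[metric] = [name for name, row in scores.items() if row[k] == top]
    return best


if __name__ == "__main__":
    for metric, winners in best_per_metric(SCORES, METRICS).items():
        print(f"{metric}: {', '.join(winners)}")
```

For this table, the script reports the proposed method as best on VIFF, AG, and SF and tied with GFF on QS. CVT scores highest on CC and NSST scores highest on QW. These results are useful context when judging how consistently the proposed method leads across metrics.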

| Method | VIFF | QS | AG | CC | SF | QW |
|---|---|---|---|---|---|---|
| GFF | 0.7339 | 0.9520 | 13.4416 | 0.9325 | 17.0349 | 0.9294 |
| IFM | 0.6886 | 0.9465 | 13.5312 | 0.9302 | 17.1410 | 0.9100 |
| DTCWT | 0.7997 | 0.9497 | 13.7663 | 0.9413 | 17.6068 | 0.9306 |
| CVT | 0.8047 | 0.9485 | 13.8226 | 0.9409 | 17.5972 | 0.9304 |
| PC | 0.6968 | 0.8124 | 9.4584 | 0.8726 | 14.6077 | 0.8451 |
| SAIF | 0.7475 | 0.9510 | 13.2681 | 0.9320 | 17.0035 | 0.9297 |
| DRTV | 0.5262 | 0.6900 | 5.4341 | 0.9179 | 10.8994 | 0.7934 |
| MISF | 0.7429 | 0.9498 | 13.3593 | 0.9301 | 17.1603 | 0.9235 |
| NSST | 0.7133 | 0.9406 | 13.0894 | 0.9250 | 15.9954 | 0.9068 |
| Proposed | 0.8260 | 0.9529 | 13.9189 | 0.9414 | 17.7991 | 0.9366 |

| Method | VIFF | QS | AG | CC | SF | QW |
|---|---|---|---|---|---|---|
| GFF | 0.5040 | 0.8165 | 17.2688 | 0.8025 | 24.7722 | 0.8166 |
| IFM | 0.4167 | 0.7596 | 17.9319 | 0.7706 | 25.7461 | 0.7344 |
| DTCWT | 0.5689 | 0.8229 | 18.3304 | 0.8271 | 25.8626 | 0.8266 |
| CVT | 0.5759 | 0.8145 | 18.5907 | 0.8271 | 25.8054 | 0.8230 |
| PC | 0.4188 | 0.7248 | 14.6469 | 0.7758 | 22.2573 | 0.6786 |
| SAIF | 0.5730 | 0.8191 | 17.8152 | 0.7863 | 25.6650 | 0.8366 |
| DRTV | 0.3885 | 0.7077 | 14.5927 | 0.7873 | 20.1573 | 0.6742 |
| MISF | 0.5563 | 0.8170 | 17.6502 | 0.7811 | 25.5196 | 0.8265 |
| NSST | 0.5902 | 0.8208 | 16.7840 | 0.8018 | 23.4510 | 0.8168 |
| Proposed | 0.6372 | 0.8394 | 18.8870 | 0.8273 | 26.3930 | 0.8401 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, L.; Ma, H. Saliency-Guided Nonsubsampled Shearlet Transform for Multisource Remote Sensing Image Fusion. Sensors 2021, 21, 1756. https://doi.org/10.3390/s21051756
