1. Introduction

With the rapid spread of artificial intelligence (AI) and smart sensor technology to countries around the world, more and more scholars and experts in the field have proposed a variety of deep learning models and algorithms to solve human life problems and improve their effectiveness. One of the most high-profile examples in the retail industry is the development of unmanned stores. The first Amazon Go was successfully launched in Boston in 2016. Amazon Go is an unmanned supermarket with a fast checkout experience of “Just Walk Out Shopping.” The shopping model in which consumers can check out without the line has become the hottest topic. Discuss the reasons why unmanned stores can drive the market. On the one hand, e-commerce operators try to expand the market and connect the physical retail field. On the other hand, traditional retailers hope to reduce their demand for manpower. Amazon Go combines computer visual recognition technology in the store with a variety of environmental sensors to realize a fully automated unmanned self-checkout system.

The most typical Taiwan meal is the Bento buffet, and the cafeterias can be found everywhere. It originated from the Japanese Bento and is a staple in Taiwan cuisine. When a customer walks into a Bento buffet cafeteria, he or she must take an empty food plate first and then pick food from a buffet-style counter that is filled with plates of meat and vegetables.

Figure 1 shows the inside of a Bento buffet cafeteria. However, the customer has to pay for everything that he or she put on the plate, and the price of dishes is usually calculated by a cashier of the restaurant. The customer always being told to pay a price for the dishes taken instead of a proper listing on the price of each dish. This process sometimes makes the customers remain uncertain or feel unfair about the calculated price by the cashier. In addition, Taiwanese people are accustomed to dining in cafeterias at noon or in the evening, but dining at peak times usually takes too much time in line for the cashier to calculate the prices of food. Therefore, an automatic food price system is required to reduce the waiting time of customers. In this paper, we proposed a possible solution to overcome the above problems using food image recognition and food volume estimation. The study aims to train a convolutional neural network (CNN) model to recognize dishes from the food images and estimate the food volumes using depth images of dishes. We built a prototype of the Bento buffet pricing system using an angle steel shelf, a Kinect camera, a light source, and a desktop computer.

Figure 2 shows the prototype of the system structure.

2. Literature Review

Different kinds of food are cooked according to the cultures and environments. Food plays an important role in daily life. Recently, many research studies on food image recognition have been proposed. Chinese food image classification is a great challenge since food images from the same category are captured with different patterns, shapes, and perspectives, according to the people who take the images. Food images can be roughly divided into two categories. The first category is a single dish, such as beef noodles, braised pork rice, and stinky tofu. Each food has its own individual container to hold. Therefore, the entire image can be used as input for food image recognition. Usually, there is no need for preprocessing of detailed image cutting. The other type is mixed dishes, such as a buffet. This type of food is usually placed on the food plate. Therefore, when performing food identification, the image segmentation of each dish on the plate must be performed first. The identification of mixed food is generally considered a difficult problem. The main challenge is that if different dishes on a plate are not neatly arranged, they may overlap each other, and there may be no clear boundaries between them. For food image identification, the entire plate image must be segmented first, and various dishes are cut out from the image. The overall food image identification is more difficult and cumbersome than ordinary object identification. An example of these two types of food images is shown in

Figure 3.

Many research papers in the literature address food classification as a unique pattern recognition problem. Generally, food image recognition can be divided into two ways—the handcraft-feature approach and deep-learning approaches. The term “handscraft feature” comes from the researcher’s ability to identify relevant features of a particular object in an image. When classifying food, the shape, color, and texture of the food will be different. The selection of relevant features must be related to these three aspects [

1]. Thus far, challenges remain when it comes to identifying prepared food. Different food preparation methods may lead to different characteristics [

2]. For example, the ingredients of a prepared salad and the shape and appearance of the whole fruit or vegetable have different shapes and textures. In order to determine the best feature extraction process, informative visual data must be extracted from food images. Commonly used features mainly use the color, shape, or texture calculation of the image, and represent the image through the calculated statistical distribution or transformed into a feature vector. In the feature-based food image recognition, we divide the relevant process into three steps—the establishment of a food image database, the calculation of food image features, and the image classification method. Commonly used image features include scale-invariant feature transform (SIFT) [

3], bag of features (BoF) [

4], local binary pattern (LBP) [

5], etc., and commonly used image classification methods include k-dimensional tree (KD tree) [

6], k-nearest neighbors algorithm (KNN) [

7], support vector machine (SVM) [

8], or back propagation neural network (BPNN) [

9], etc.

Deep learning is part of machine learning, a new method of learning and training more effective neural networks. The built-in mechanism of the deep-learning algorithm passes a series of connected layers, and the final one is responsible for classification, i.e., the fully connected layer, which automatically uses feature extraction. Compared with other traditional methods, it has better performance and enhanced processing power. These methods usually must be trained using large datasets but have excellent classification capabilities. Convolutional Neural Network (CNN) is one of the most outstanding techniques in deep learning. Since CNN has an excellent ability to learn visual data and obtain high precision for challenging tasks with large-scale image data, it is widely used in computer vision applications [

10]. Compared with other traditional image feature extraction methods, CNN performs much better. Bossard et al. [

11] implemented the CNN model based on the previously proposed network architecture [

12,

13]. Using images from the Food-101 dataset, an average accuracy rate of 56.4% was obtained in 450,000 iterations. Yanai and Kawano [

14] implemented a deep convolutional neural network (DCNN) on three different food datasets Food-101, UEC-FOOD-100, and UECFOOD-256.

Pandey et al. [

15] believe that different convolutional neural networks can extract different image features, and therefore, they proposed to use three CNN convolutional neural networks (AlexNet, GoogLeNet, ResNet) to train the features and weights, respectively, and finally combine the trained features to perform classification. Their experimental results show that when the FOOD-101 database was used, a single CNN performs better with ResNet, with an accuracy rate of 67.6%, while using three CNNs at the same time can achieve maximum accuracy of 72.1%. According to the survey by Subhi et al. [

16], the effectiveness of pretraining and fine-tuning DCNN with 100 pieces of each food category obtained from each dataset as training images.

In this paper, our main contribution is the use of state-of-the-art deep convolutional neural networks to solve the need for food automatic recognition in Taiwan’s Bento buffet cafeterias. A prototype including an angle steel shelf, Kinect camera, and LED light was created. In this approach, we capture a large amount of food from one cooperated cafeteria and use depth images to estimate the food volumes. Finally, we have successfully complemented a Bento buffet pricing system. The paper is organized as follows: In

Section 2, the literature review is presented. In

Section 3, the proposed method is described. Experimental results are reported in

Section 4. The last section is the conclusion and future research.

3. Proposed Methods

3.1. System Flowchart

Figure 4 shows the flowchart of the proposed food price estimation framework. It consists of two stages—the training stage and the test stage. In the training stage, the following major steps are executed:

Put food on an empty plate and place it on the shelf;

Take a photo and obtain an RGB image;

Detect the regions of interest (ROIs) and cut the individual food images and then build the food image dataset;

Apply the food image dataset to train the parameters of CNN models.

In the test stage, the following steps are executed:

Put food on an empty plate and place it on the shelf;

Take a photo to obtain an RGB image and a depth image;

Detect ROIs and cut the individual food images for testing;

Perform food recognition on test images using the trained CNN models;

Estimate the food volumes using the depth image;

Sum up the price for each food on the food plate and display it on the screen.

The detailed operations for major steps are described in the following sections.

3.2. Image Preprocessing and ROI Detection

In this study, we set up the food plate as the region of interest (ROI). Plate detection is a critical operation to automatically capture the food images from the plate. A straightforward method to detect ROI in images is using Hough line transformation. If we can obtain the four straight lines surrounding the food plate, then the four corners of the plate and its bounding box are determined. Hough’s working method is basically to scan the entire image and use a transformation to convert all white pixels from Cartesian coordinates to polar coordinates; black pixels are ignored. Therefore, it is necessary to detect the edges well before performing Hough transform; otherwise, its efficiency will be further reduced. The plate detection process is shown in

Figure 5.

In addition, noisy images will not allow Hough transform to achieve good results since noises will cause erroneous white pixels in edge detection.

Before the plate detection process, Gaussian smoothing [

16] with an

kernel was performed to remove noises in the captured images. The general mathematical expression of this filter used to eliminate the noises on the image is presented in Equation (1).

where

r is the radius

,

is the standard deviation of the distribution, and

x and

y are the location indices. In our experiments, we set

.

Then, Canny edge detection [

17] was applied to obtain a binary edge image shown in

Figure 6b. The edge image was used as input for Hough transform [

18] to detect line segments. The Hough transform is perhaps most often used for detecting line segments in edge maps. A line segment in 2D can be described with two real-valued parameters using the classic slope-intercept form

where

k is the slope and

d the intercept, that is, the height at which the line would intercept the

y axis. A line segment that passes through two given edge points. Here, we have set a threshold of line length to leverage possible straight lines which would surround the plate.

The procedure described so far can efficiently detect line segments with different orientations.

Figure 6 shows the process of food plate region detection. However, threshold selection may generate duplicated line segments (see

Figure 6c). One possible solution to obtain the four boundary-line segments for the food plate would be to set to find the close line segments from an interior point toward the four different sides. The steps to leverage the closest four line segments surrounding the food plate and find the four corner points of the food plate are shown in Algorithm 1.

| Algorithm 1Food plate detection |

Input: an RGB image captured by Kinect

Output: four corner points of the food plate

1. Calculate the center point p of the captured image. The point p would locate inside the food plate.

2. Draw a vertical ray along the y-axis with starting point p and upwards until it meets a red line. The red line L1 is selected as the upper edge of the plate. Calculate the red line’s slope and the angle θ between the line and x-axis.

3. Draw a parallel rightwards ray of L1 with starting point p until it meets a red line and denoted as L2.

4. Draw a parallel leftwards ray of L1 with starting point p until it meets a red line and denoted as L3.

5. Draw a vertical downwards ray of L1 with starting point p until it meets a red line and denoted as L4.

6. Determine the four corners from the extracted four lines L1 to L4 and redraw a rectangle (bounding box) and then output the four corner points. |

To obtain a plate normalized form, the mass center of the extracted food plate is calculated with the following equations. First, the food plate image moment is defined as

represents the image grayscale value in image coordinate (

x,

y).

The plate image center

is defined as

Finally, we can obtain a normalized image by performing a θ rotation with plate image center

. The details about Equations (3) and (4) could be found in reference [

19].

The process of traditional image recognition includes three consecutive steps, i.e., image segmentation, feature extraction, and image classification. A food image may contain multiple dishes (such as food in a buffet). Image cutting is to divide the image into different areas, and each area represents a type of dish (food item). If food image cutting is implemented correctly, it will help the accuracy of subsequent image feature extraction and image recognition and have an absolute impact on the calculation of food volume and nutrients. When the buffet dishes are arranged neatly (some dishes are separated by grids), a better cutting result can be obtained, that is, several dishes are cut into several nonoverlapping areas.

In our experiments, the food plate is segmented into four regions with a predefined mask that is separated by grids.

Figure 7b shows the mask containing four grids with four different colors. The food items on different regions in the plate can be easily segmented with this mask.

Figure 7a,c shows the plate normalized form and the segmented dishes, respectively.

3.3. Food Image Recognition with Convolutional Neural Networks

Deep convolutional neural networks (DCNNs) have achieved state of the art in a variety of food image recognition tasks. However, there still exists no effective Chinese food recognition system matured enough to be used in the real world. The main reasons are the changeable factors including the lighting variation, scale variation, viewpoint variation, and background variation. In this study, we tried to control these variables in the proposed Bento buffet pricing system. Food recognition is a core process in the pricing system. The image dataset for food recognition is relatively small in our application since the number of dishes for a Bento buffet store is limited. In this study, many generally used CNNs were selected for food image recognition in the proposed application. Theses CNN models include AlexNet [

12], VGG [

20], ResNet [

21], Inception [

22], and DenseNet [

23] and are explained below. The basic concepts about the deep-learning models could be found in the highly cited references [

24,

25].

3.3.1. AlexNet

AlexNet [

12] participated in the ImageNet Large Scale Visual Recognition Challenge (LSVRC) in 2012. The network’s top five error rate was 15.3%, which was 10.8% lower than the second place error rate. AlexNet contains eight layers; the first five layers are convolutional layers, some of which are maximum pooling layers, and the last three layers are fully connected layers. Nonsaturated ReLU activation function is used in this network, and its training performance has been improved, compared with tanh- and S-curves.

3.3.2. VGG

VGGNet [

20] can be regarded as a deepened version of AlexNet, which is composed of two parts—convolutional layer and fully connected layer. An improvement of VGG compared to AlexNet is to use consecutive 3 × 3 convolution kernels instead of larger ones (11 × 11, 7 × 7, 5 × 5). For a given receptive field (the local size of the input image related to the output), using a stacked small convolution kernel is better than using a large convolution kernel because multiple nonlinear layers can increase the depth of the network to ensure a more complex learning mode, and the cost is relatively small (fewer parameters). VGG16 contains a 16-layer architecture and VGG19 contains a 19-layer architecture. The input size of the network is 224 × 224.

3.3.3. ResNet

From experience, the depth of the network is crucial to the performance of the model. When the number of network layers is increased, the network can extract more complex feature patterns, and therefore, theoretically better results can be achieved when the model is deeper. However, the experimental results found that the deep network has a degradation problem: when the network depth increases, the accuracy of the network becomes saturated or even decreases. The design of ResNet [

21] has an architectural trick, which makes the depth of the network play a role. This trick is residual learning. ResNet101 is 101 layers deep. The input size of the network is 224 × 224. ResNet won the ILSVRC competition in 2015 with just a 3.6% error rate.

3.3.4. Inception

Inception network [

22] is an important milestone in the history of CNN classifier development. Before the advent of Inception, most popular CNNs simply stacked more and more convolutional layers to make the network deeper and deeper, hoping to achieve better performance. The Inception network is complex (requires a lot of engineering work). It uses a lot of tricks to improve performance, including speed and accuracy. Its continuous evolution has brought about the emergence of multiple Inception network versions. Inception v4 does not use the idea of residual learning and basically continues the structure of Inception v2/v3.

3.3.5. DenseNet

ResNet proves that deepening the number of network layers and improving the accuracy of the model can be taken into account through its unique design. DenseNet [

23] uses a denser connection method. It is a dense convolutional neural network that uses a forward propagation method to connect each layer with the rest densely. The purpose of this network is to ensure that the information flow between the layers is maximized, and all layers (feature map size matching) are directly connected together. In traditional convolutional neural networks, the L layer will have a total of L connections, which is a one-to-one mode; in DenseNet, one layer will be connected to all other layers. Therefore, for the same L layer, there will be L(L + 1)/2 connections. This densely connected mode requires fewer parameters than traditional convolutional networks; hence, a densely connected mode can bring feature reuse, and there is no need to relearn redundant feature maps. Moreover, the operation of dimensional splicing brings rich feature information, and a lot of feature maps can be obtained with less convolution.

The setting of baseline CNN models is summarized and shown in

Table 1. The input images are resized to 224 × 224 for AlexNet, VGG16, VGG19, ResNet50, and DenseNet121 architectures. On the other hand, the input images are resized to 299 × 299 for the Inception V4 architecture.

In order to be able to evaluate the stability of the above models more clearly, this research adopts the K-fold cross-validation method during training.

Figure 8 shows the K-fold cross-validation method. The total dataset is divided into five equal parts, one for validation and the other four as a training set. A total of five rounds of training to ensure that each piece of data is tested as a validation set so that five validation accuracy rates can be obtained, and finally, they can be averaged as the overall performance of the model and then compared with other models.

3.4. Mapping from Color Coordinates to Depth Image with Kinect

Kinect has three lenses. The middle lens is an RGB color camera to collect color images. The left and right lenses are 3D structured light depth sensors composed of an infrared transmitter and an infrared complementary metal-oxide-semiconductor (CMOS) camera to collect depth data. There are two different coordinate systems—the depth camera coordinate frame of reference and the color camera coordinate system. In this study, the food volume is estimated by using the food area and the sum of corresponding depth values in the depth coordinate space. Therefore, a transformation from color space to depth space is required.

Figure 9 shows an example of mapping from RGB coordinate to depth coordinate and estimation of food volumes with depth values. When we want to map any sequence of 2D coordinate points

to another set of coordinate points

, there must be at least eight conversion parameters between them. The projection transformation can be expressed as a linear mapping between the corresponding coordinates. Compared with the affine transformation, it has two more parameters

and can be written as

In Cartesian coordinates, the result of the mapping function is obviously nonlinear, and the conversion equation is as follows:

Projection transformation [

26] is different from affine transformation [

27]. A pair of parallel lines may not be parallel after being mapped, and the distance ratio between two points on the line will also change. The four corresponding two-dimensional coordinate points,

,

, and the points in the color image

correspond to the points

in the depth image, and the eight unknown conversion parameters can be solved by simply using linear equation solutions. After we bring the four points coordinates of the quadrilateral into Formula (3), we can obtain four sets of corresponding linear equations and eight conversion parameters

to be solved.

These eight parameters

can be calculated using the four-point mapping method.

Once the food in the image is identified, if the volume of the food can be further estimated, the corresponding price can be calculated based on the volume. The nutrition assessment app proposed by Pouladzadeh et al. [

28] uses fingers as a reference to calculate the actual area and height of the food in the top view image and the side image and then estimate the volume of the food. However, the above method is limited due to the need to use reference objects; in this paper, we used a depth camera to obtain the depth information of the food and further estimated the volume of the food.

Initially, the average depth value of a region A in an empty plate is estimated and denoted as

. Image-based food volume evaluation must identify the area occupied by all food items, perform image cutting of the food objects in the image, and then calculate the volume of each segmentation item. In this way, the volume of a certain food on

A is calculated, as long as the depth values of all the pixels in the cutting area of the food on region

A are summed up. The volume of the food can be estimated and is denoted as

where

represents the depth value with food on the plate at the image coordinates

and

is a calibration parameter. In order to measure the correlation between the volume estimation using Equation (9) and the actual food volume, we selected different food items for testing. The experiments used Archimedes’ law to calculate the actual volume of a food object by measuring the amount of water rising when the food was put into the water of a measuring cup. Finally,

value was estimated as 1.2 from a linear regression result.

In practical applications, we set up a food volume threshold for each food per unit. If the food volume on A is larger than the threshold, double times of money is asked to pay.

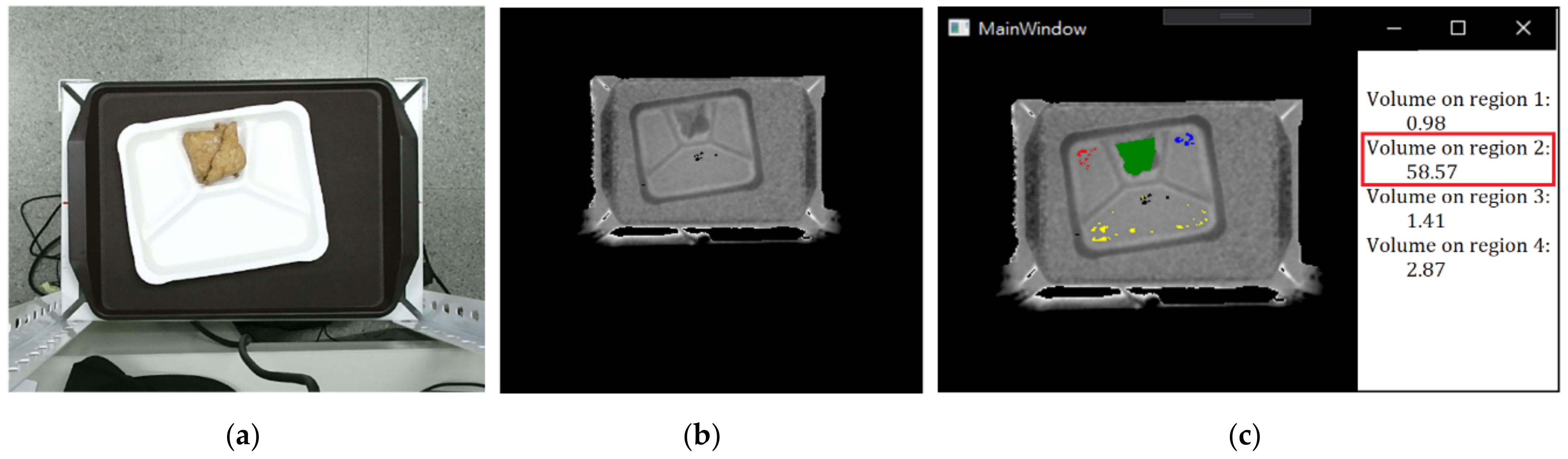

Figure 10 shows an example of volume estimation using a depth image for oily bean tofu. The estimated volume of tofu in region 2 is 58.57, and the volumes of other regions are relatively small, meaning no food in these regions.