An Infrared Array Sensor-Based Approach for Activity Detection, Combining Low-Cost Technology with Advanced Deep Learning Techniques

Abstract

1. Introduction

1.1. Background

1.2. Related Work

1.3. Motivation

- We propose a lightweight DL model for activity classification that is robust to environmental changes. Because it is lightweight, the model can run on devices with very limited computational capability, which makes it a suitable basis for a low-cost activity detection solution.

- We apply SR techniques to LR data (i.e., 12 × 16 and 6 × 8) to reconstruct HR images (i.e., 24 × 32).

- We use a denoising technique that requires no training to remove noise from the IR image, which significantly improves classification performance.

- We use an advanced data augmentation technique known as CGAN to generate synthetic data. The generated data are used as part of the training set to improve the training of the networks and generate more accurate models that are robust to environmental changes.

- We demonstrate that LR data can achieve classification performance nearly identical to that obtained with HR data (24 × 32).

2. Experiment Specifications

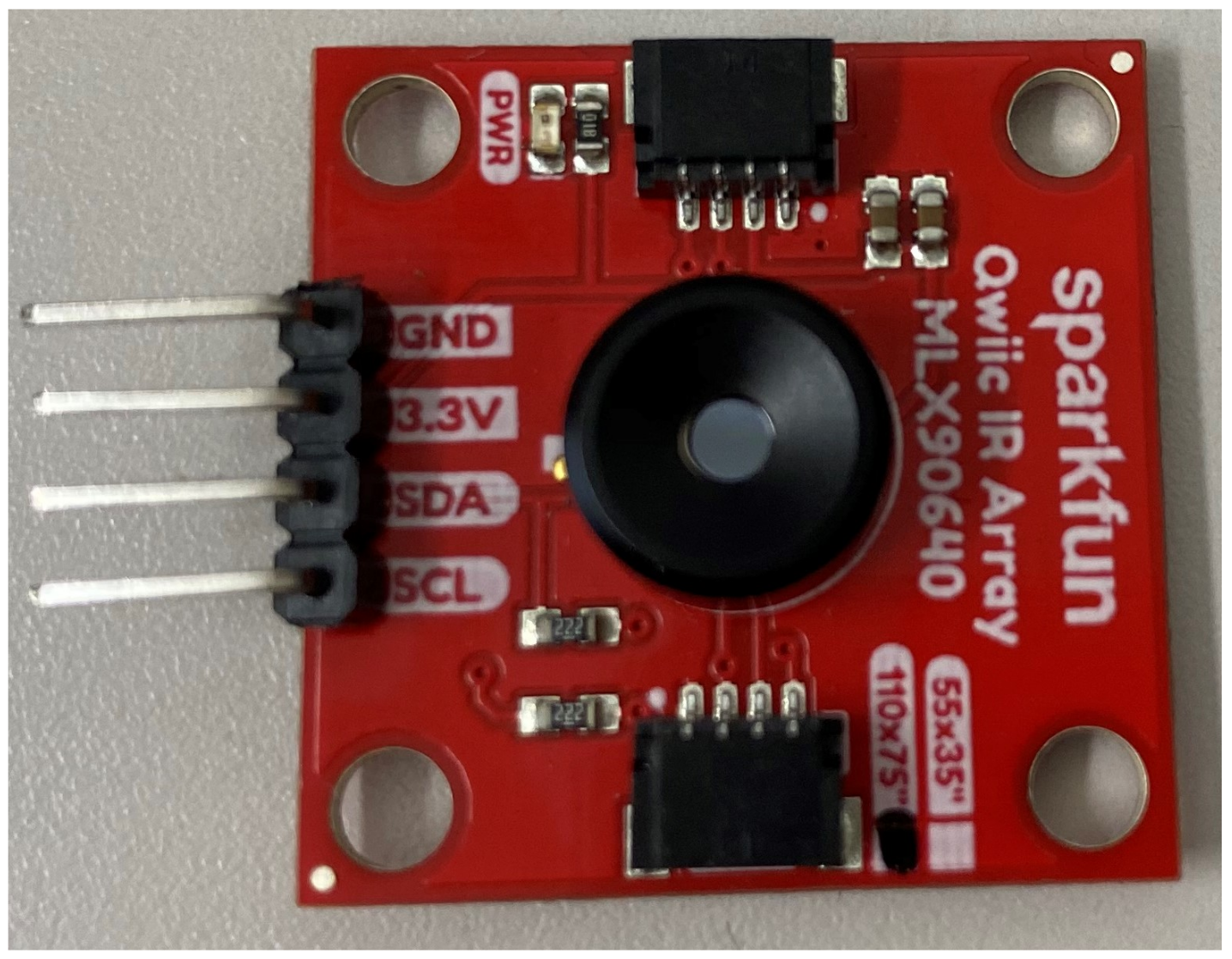

2.1. Device Specifications

2.2. Environment

- The first room is a small, closed space with only one window that lets in little light. The temperature in the room was set to 24 °C.

- The second room is larger, brighter, and equipped with an air conditioner whose temperature is set to 22 °C.

- In comparison to the other rooms, the third room is a little dark, and its air conditioner temperature is set to 24 °C.

2.3. Framework

3. Detailed System Architecture and Description

3.1. Data Collection

3.2. Super-Resolution

- Feature extraction

- Shrinking

- Non-linear mapping

- Expanding

- Deconvolution
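The five stages listed above can be illustrated with a small bookkeeping sketch. It assumes the default FSRCNN(d = 56, s = 12, m = 4) configuration and kernel sizes from the FSRCNN paper by Dong et al., which are not confirmed as the settings used in this work, and the helper names are hypothetical. The sketch only counts convolution weights and tracks the output size; it does not run a network.

```python
# Assumed FSRCNN(d, s, m) defaults from Dong et al.; not confirmed for this work.
D, S, M = 56, 12, 4


def fsrcnn_layers(d=D, s=S, m=M, channels=1):
    """Return (stage, kernel size, input channels, output channels) per layer."""
    layers = [
        ("feature extraction", 5, channels, d),
        ("shrinking", 1, d, s),
    ]
    # The non-linear mapping stage is a stack of m identical 3x3 layers.
    layers += [("non-linear mapping", 3, s, s)] * m
    layers += [
        ("expanding", 1, s, d),
        ("deconvolution", 9, d, channels),
    ]
    return layers


def weight_count(layers):
    """Count convolution weights only (biases are excluded)."""
    return sum(k * k * c_in * c_out for _, k, c_in, c_out in layers)


def output_size(height, width, scale):
    """Only the final deconvolution enlarges the frame.

    Assumption: the earlier stages use 'same' padding and keep the LR grid,
    and the deconvolution stride equals the upscaling factor.
    """
    return height * scale, width * scale


if __name__ == "__main__":
    print(weight_count(fsrcnn_layers()))
    print(output_size(12, 16, 2))  # 12 x 16 input, upscaling factor 2
    print(output_size(6, 8, 4))    # 6 x 8 input, upscaling factor 4
```

Under these assumptions, both LR inputs map onto the 24 × 32 grid: a factor of 2 for 12 × 16 frames and a factor of 4 for 6 × 8 frames.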

3.2.1. Feature Extraction

3.2.2. Shrinking

3.2.3. Non-Linear Mapping

3.2.4. Expanding

3.2.5. Deconvolution

3.2.6. Activation Functions and Hyperparameters

3.3. Denoising

3.4. Conditional Generative Adversarial Network (CGAN)
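As a minimal sketch of the conditioning idea behind a CGAN, the generator input can be formed by concatenating a random noise vector with a one-hot encoding of the target class, as in the original formulation by Mirza and Osindero. The class names are taken from the activity list in this paper; the noise dimension of 100, the function names, and the plain-list representation are assumptions made for illustration and do not describe the authors' network.

```python
import random

# Activity classes as listed in the dataset table of this paper.
ACTIVITIES = ["walking", "standing", "sitting", "lying", "action change", "falling"]


def one_hot(label, classes=ACTIVITIES):
    """Encode a class label as a one-hot list."""
    if label not in classes:
        raise ValueError(f"unknown activity: {label}")
    return [1.0 if c == label else 0.0 for c in classes]


def generator_input(label, noise_dim=100, rng=None):
    """Concatenate Gaussian noise with the one-hot condition vector.

    noise_dim=100 is an assumed value, not one reported in the paper.
    """
    rng = rng or random.Random()
    noise = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return noise + one_hot(label)


if __name__ == "__main__":
    vector = generator_input("falling", rng=random.Random(0))
    print(len(vector))   # noise_dim + number of classes
    print(vector[-6:])   # the condition part of the input
```

Because the label is part of the input, synthetic frames can be requested per class, which is useful for under-represented activities such as falling.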

3.5. CNN and LSTM Classification

- In the first stage, the sensor’s raw data are given as input to the CNN, which classifies the individual frames and produces the first output.

- In the second stage, we perform sequence classification using the LSTM. The output of the CNN is given as input to the LSTM with a window size of five frames, and the LSTM produces the sequence classification output.
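The hand-off between the two stages can be sketched as a windowing step over the per-frame CNN outputs. The window size of five frames comes from the text; the stride of one frame (overlapping windows) and the function name are assumptions, since the text does not state how consecutive windows are formed.

```python
WINDOW = 5  # frames per sequence, as stated in the text


def sliding_windows(frame_outputs, window=WINDOW):
    """Group consecutive per-frame CNN outputs into overlapping windows.

    Assumption: windows advance by one frame at a time. Inputs shorter
    than the window yield no sequence at all.
    """
    return [
        frame_outputs[i:i + window]
        for i in range(len(frame_outputs) - window + 1)
    ]


if __name__ == "__main__":
    # Stand-in for per-frame CNN outputs: here simply the frame indices.
    frames = list(range(8))
    for sequence in sliding_windows(frames):
        print(sequence)
```

Each window would then be passed to the LSTM as one sequence; with a stride of one, a new sequence-level decision becomes available for every incoming frame once five frames have been collected.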

3.6. Further Model Optimization Using Quantization

4. Experimental Results

4.1. Computer Vision Techniques Results

4.2. Classification Results

- Super-Resolution → Denoising,

- Denoising → SR,

- Denoising and CGAN.

| Method | Image 6 × 8 | Image 12 × 16 | Image 24 × 32 |
|---|---|---|---|
| Raw data | 76.57% | 88.22% | 93.12% |
| SR | 77.72% | 89.24% | – |
| Denoising | 76.88% | 88.30% | 93.71% |
| CGAN + Raw data | – | – | 95.24% |
| Denoising → SR | 78.25% | 89.31% | – |
| SR → Denoising | 80.47% | 91.72% | – |
| Denoising + CGAN | – | – | 96.54% |
| Denoising → SR + CGAN | 81.12% | 92.66% | – |
| SR → Denoising + CGAN | 83.58% | 94.44% | – |

- The raw sensor data with various resolutions are referred to as , , and .

- The SR technique applied to LR data is referred to as , and .

- The denoising technique applied to the HR and LR data is referred to as , , and .

- The combination of raw data with CGAN techniques is referred to as .

- The denoising and CGAN techniques applied to HR data are referred to as .

- The combination of denoising and SR techniques applied to LR data are referred to as , , , and .

- The combination of SR, denoising, and CGAN techniques applied to LR data are referred to as , , , and .

4.3. Neural Network Quantization

5. Discussion

- The SR technique considerably enhances the quality of the image; however, it is still challenging to detect activity at the edge of the defined coverage area. One way to overcome this issue is to combine SR with more advanced feature extraction methods or to use a deeper neural network.

- IR sensors generate frames by collecting IR heat rays and mapping them to a matrix. These rays are noisy by nature, and the noise is exacerbated by the large uncertainty of the sensor’s receivers, which makes it hard for IR sensors to generate high-quality data. We therefore used a deep learning denoising technique, Deep Image Prior (DIP), to reduce the noise in the images. However, the results obtained are still not fully satisfactory. Although this method requires no prior training, which reduces the training cost, there is still considerable room for improvement in this direction.

- CGAN, a widely recognized advanced data augmentation technique, was used in this research to generate synthetic data. CGAN is a supervised learning technique that generates data conditioned on the available classes. However, despite the use of CGAN, we found that extracting features from the IR data is still very difficult, likely due to the image distortion in the raw data. We infer that less distortion in the raw data would allow the generation of more realistic and useful synthetic data, which could in turn yield much better classification performance through advanced feature extraction methods.

- One specific shortcoming of this research is that the only significant heat source present in the room during the experiments is the participant. The current model is therefore trained for the case where there is a single person and no other heat-emitting devices, such as a stove or a computer, are present. Thus, even though the developed model performs well for the current experimental setup, it is not meant to simultaneously detect the activities of multiple persons and obstacles. A potential way to address this constraint is to generate three-dimensional data, which could help to estimate the precise height/depth of any target individual and to differentiate between the activities of multiple people and objects.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| IR | Infrared |

| DL | Deep Learning |

| SR | Super-Resolution |

| LR | Low Resolution |

| HR | High Resolution |

| DIP | Deep Image Prior |

| CNN | Convolutional Neural Network |

| SVM | Support Vector Machine |

| LSTM | Long Short-Term Memory |

| CGAN | Conditional Generative Adversarial Network |

| FSRCNN | Fast Super-Resolution Convolutional Neural Network |

| PSNR | Peak Signal-to-Noise Ratio |

| MSE | Mean Squared Error |

| TP | True Positive |

| TN | True Negative |

| FP | False Positive |

| FN | False Negative |

References

- Statistics Japan. Statistical Handbook of Japan 2021; Statistics Bureau, Ministry of Internal Affairs and Communications: Tokyo, Japan, 2021.

- Mitsutake, S.; Ishizaki, T.; Teramoto, C.; Shimizu, S.; Ito, H. Patterns of Co-Occurrence of Chronic Disease Among Older Adults in Tokyo. Prev. Chronic Dis. 2019, 16, E11.

- Mashiyama, S.; Hong, J.; Ohtsuki, T. Activity recognition using low resolution infrared array sensor. In Proceedings of the 2015 IEEE International Conference on Communications (ICC), London, UK, 8–12 June 2015; pp. 495–500.

- Mashiyama, S.; Hong, J.; Ohtsuki, T. A fall detection system using low resolution infrared array sensor. In Proceedings of the IEEE International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), Helsinki, Finland, 2–5 September 2014; pp. 2109–2113.

- Hino, Y.; Hong, J.; Ohtsuki, T. Activity recognition using array antenna. In Proceedings of the 2015 IEEE International Conference on Communications (ICC), London, UK, 8–12 June 2015; pp. 507–511.

- Bouazizi, M.; Ye, C.; Ohtsuki, T. 2D LIDAR-Based Approach for Activity Identification and Fall Detection. IEEE Internet Things J. 2021.

- Nakamura, T.; Bouazizi, M.; Yamamoto, K.; Ohtsuki, T. Wi-fi-CSI-based fall detection by spectrogram analysis with CNN. In Proceedings of the IEEE Global Communications Conference, Taipei, Taiwan, 7–11 December 2020; pp. 1–6.

- Lee, S.; Ha, K.N.; Lee, K.C. A pyroelectric infrared sensor-based indoor location-aware system for the smart home. IEEE Trans. Consum. Electron. 2006, 52, 1311–1317.

- Liang, Q.; Yu, L.; Zhai, X.; Wan, Z.; Nie, H. Activity recognition based on thermopile imaging array sensor. In Proceedings of the 2018 IEEE International Conference on Electro/Information Technology (EIT), Rochester, MI, USA, 3–5 May 2018; pp. 0770–0773.

- Muthukumar, K.A.; Bouazizi, M.; Ohtsuki, T. Activity Detection Using Wide Angle Low-Resolution Infrared Array Sensors. In Proceedings of the Institute of Electronics, Information and Communication Engineers (IEICE) Conference Archives, Virtual, 15–18 September 2020.

- Al-Jazzar, S.O.; Aldalahmeh, S.A.; McLernon, D.; Zaidi, S.A.R. Intruder localization and tracking using two pyroelectric infrared sensors. IEEE Sens. J. 2020, 20, 6075–6082.

- Bouazizi, M.; Ohtsuki, T. An infrared array sensor-based method for localizing and counting people for health care and monitoring. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 4151–4155.

- Bouazizi, M.; Ye, C.; Ohtsuki, T. Low-Resolution Infrared Array Sensor for Counting and Localizing People Indoors: When Low End Technology Meets Cutting Edge Deep Learning Techniques. Information 2022, 13, 132.

- Yang, T.; Guo, P.; Liu, W.; Liu, X.; Hao, T. Enhancing PIR-Based Multi-Person Localization Through Combining Deep Learning With Domain Knowledge. IEEE Sens. J. 2021, 21, 4874–4886.

- Sam, D.B.; Peri, S.V.; Sundararaman, M.N.; Kamath, A.; Babu, R.V. Locate, Size, and Count: Accurately Resolving People in Dense Crowds via Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 2739–2751.

- Wu, C.; Zhang, F.; Wang, B.; Ray Liu, K.J. mmTrack: Passive Multi-Person Localization Using Commodity Millimeter Wave Radio. In Proceedings of the IEEE INFOCOM 2020 - IEEE Conference on Computer Communications, Virtual, 6–9 July 2020; pp. 2400–2409.

- Kobayashi, K.; Ohtsuki, T.; Toyoda, K. Human activity recognition by infrared sensor arrays considering positional relation between user and sensors. IEICE Tech. Rep. 2017, 116, 509.

- Quero, J.M.; Burns, M.; Razzaq, M.A.; Nugent, C.; Espinilla, M. Detection of Falls from Non-Invasive Thermal Vision Sensors Using Convolutional Neural Networks. Proceedings 2018, 2, 1236.

- Burns, M.; Cruciani, F.; Morrow, P.; Nugent, C.; McClean, S. Using Convolutional Neural Networks with Multiple Thermal Sensors for Unobtrusive Pose Recognition. Sensors 2020, 20, 6932.

- Li, T.; Yang, B.; Zhang, T. Human Action Recognition Based on State Detection in Low-resolution Infrared Video. In Proceedings of the 2021 IEEE 16th Conference on Industrial Electronics and Applications (ICIEA), Chengdu, China, 1–4 August 2021; pp. 1667–1672.

- Ángel López-Medina, M.; Espinilla, M.; Nugent, C.; Quero, J.M. Evaluation of convolutional neural networks for the classification of falls from heterogeneous thermal vision sensors. Int. J. Distrib. Sens. Netw. 2020, 16, 1550147720920485.

- Tateno, S.; Meng, F.; Qian, R.; Hachiya, Y. Privacy-Preserved Fall Detection Method with Three-Dimensional Convolutional Neural Network Using Low-Resolution Infrared Array Sensor. Sensors 2020, 20, 5957.

- Muthukumar, K.A.; Bouazizi, M.; Ohtsuki, T. A novel hybrid deep learning model for activity detection using wide-angle low-resolution infrared array sensor. IEEE Access 2021, 9, 82563–82576.

- Dong, C.; Loy, C.C.; Tang, X. Accelerating the super-resolution convolutional neural network. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 391–407.

- Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Deep image prior. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 9446–9454.

- Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.

- Ilesanmi, A.E.; Ilesanmi, T.O. Methods for image denoising using convolutional neural network: A review. Complex Intell. Syst. 2021, 7, 2179–2198.

- Fan, L.; Zhang, F.; Fan, H.; Zhang, C. Brief review of image denoising techniques. Vis. Comput. Ind. Biomed. Art 2019, 2, 7.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Huang, Q.; Hsieh, C.; Hsieh, J.; Liu, C. Memory-Efficient AI Algorithm for Infant Sleeping Death Syndrome Detection in Smart Buildings. AI 2021, 2, 705–719.

- Huang, Q. Weight-Quantized SqueezeNet for Resource-Constrained Robot Vacuums for Indoor Obstacle Classification. AI 2022, 3, 180–193.

- Jacob, B.; Kligys, S.; Chen, B.; Zhu, M.; Tang, M.; Howard, A.; Adam, H.; Kalenichenko, D. Quantization and training of neural networks for efficient integer-arithmetic-only inference. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2704–2713.

| Study | IR Sensor (Resolution) | No. of Sensors | Position of Sensor | Methods | Accuracy | Limitations |
|---|---|---|---|---|---|---|
| Mashiyama et al. [3] | | 1 | Ceiling | SVM | 94% | A few activities in a specific area. No pre-processing is performed. Data are highly noisy due to their low resolution. |
| Mashiyama et al. [4] | | 1 | Ceiling | k-NN | 94% | Due to the noise in the data, feature extraction is less effective. |
| Kobayashi et al. [17] | | 2 | Ceiling, Wall | SVM | 90% | No reprocessing is performed. Data are noisy. Activities are performed in very specific positions. |
| Javier et al. [18] | | 1 | Ceiling | CNN | 92% & 85% | Noisy and blurry images. Difficult to detect activities in high-temperature areas. |
| Matthew et al. [19] | | 5 | Ceiling and all corners | CNN based on AlexNet | F1-score 92% | Requires multiple sensors. Expensive to deploy in the real world. |
| Tianfu et al. [20] | | 2 | Ceiling, Wall | CNN | 96.73% | Fails to detect the position of the human near the edges of coverage, due to the blurriness and noise in the images. |
| Miguel et al. [21] | | 2 | Ceiling, Wall | CNN | 72% | No sequence data classification was performed. Noise removal and enhancement of the images were not performed. |
| Tateno et al. [22] | | 1 | Ceiling | 3D-CNN 3D-LSTM | 93% | A Gaussian filter is used to remove the noise, which causes a loss of information. |
| Muthukumar et al. [23] | | 2 | Ceiling, Wall | CNN and LSTM | 97% | Two sensors were used to detect the activity. Raw images with a lot of noise are used for classification. |

| IR Sensor Model | Qwiic IR Array MLX90640 |

|---|---|

| Camera | 1 |

| Voltage | 3.3 V |

| Temperature range of targets | C∼85 C |

| Number of pixels | 24 × 32, 12 × 16, 6 × 8 |

| Viewing angle | |

| Frame rate | 8 frames/second |

| Embedded SBC | Raspberry Pi 3 Model B+ |

|---|---|

| SoC | Broadcom BCM2837B0, Cortex-A53 (ARMv8) 64-bit SoC |

| CPU | 1.4 GHz 64-bit quad-core ARM Cortex-A53 CPU |

| RAM | 1 GB LPDDR2 |

| OS | Ubuntu Mate |

| Power input | 5 V/2.5 A (12.5 W) |

| Connectivity to the sensor | Inter-Integrated Circuit (I2C) serial bus |

| I2C transmission rate | 3.4 Mbps |

| S.No. | Activity | Training Data Frames | Testing Data Frames |

|---|---|---|---|

| 1 | Walking | 5456 | 2351 |

| 2 | Standing | 1959 | 882 |

| 3 | Sitting | 3102 | 1566 |

| 4 | Lying | 2486 | 647 |

| 5 | Action change | 1961 | 939 |

| 6 | Falling | 613 | 264 |

| Model | Parameters |

|---|---|

| ResNET [30] | 21 Million |

| VGG16 [31] | 138 Million |

| CNN | 189 Thousand |

| CNN + LSTM | 568 Thousand |

| Method | Input-Output | PSNR (dB) |
|---|---|---|
| Super-Resolution | Image 12 × 16 → Image 24 × 32 | 32.62 |
| Super-Resolution | Image 6 × 8 → Image 24 × 32 | 20.47 |
| Denoising | Image 24 × 32 | 34.12 |
| Denoising | Image 12 × 16 | 30.52 |
| Denoising | Image 6 × 8 | 23.74 |
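For reference, the PSNR values in the table above follow the standard definition PSNR = 10 · log10(MAX² / MSE), where MSE is the mean squared error between the reference and the reconstructed frame. The sketch below is a plain-Python illustration of that formula. The peak value of 255 assumes frames scaled to 8 bits, which is an assumption here, since the scaling applied to the thermal frames is not stated; the function names are likewise hypothetical.

```python
import math


def mse(reference, estimate):
    """Mean squared error between two equally sized 2-D frames (lists of rows)."""
    diffs = [
        (r - e) ** 2
        for ref_row, est_row in zip(reference, estimate)
        for r, e in zip(ref_row, est_row)
    ]
    return sum(diffs) / len(diffs)


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB; peak=255 assumes 8-bit scaling."""
    error = mse(reference, estimate)
    if error == 0:
        return math.inf  # identical frames
    return 10.0 * math.log10(peak ** 2 / error)


if __name__ == "__main__":
    reference = [[100, 120], [130, 140]]
    estimate = [[101, 121], [131, 141]]  # every pixel off by one
    print(round(psnr(reference, estimate), 2))
```

A higher PSNR means the reconstructed frame is closer to the reference, which is consistent with the 12 × 16 input being easier to upscale than the 6 × 8 input.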

| Method | Image 6 × 8 | Image 12 × 16 | Image 24 × 32 |
|---|---|---|---|
| Raw data | 78.32% | 90.11% | 95.73% |
| SR | 79.07% | 90.89% | – |
| Denoising | 78.55% | 90.33% | 96.14% |
| CGAN + Raw data | – | – | 96.42% |
| Denoising → SR | 80.18% | 92.38% | – |
| SR → Denoising | 82.76% | 92.91% | – |
| Denoising + CGAN | – | – | 98.12% |
| Denoising → SR + CGAN | 80.41% | 93.43% | – |
| SR → Denoising + CGAN | 84.43% | 94.52% | – |

| Approach | Image 6 × 8 | Image 12 × 16 | Image 24 × 32 |
|---|---|---|---|
| SVM [17] | 61.45% | 68.52% | 88.16% |
| CNN [21] | 67.11% | 82.97% | 90.14% |
| 3D-CNN [22] | 72.42% | 90.89% | 93.28% |
| CNN + LSTM (SR → DE + CGAN) | 84.43% | 94.52% | – |
| CNN + LSTM (DE + CGAN) | – | – | 98.12% |

| Method | Walking | Standing | Sitting | Lying | Action Change | Falling |
|---|---|---|---|---|---|---|
| | 80% | 68% | 77% | 68% | 57% | 55% |
| | 86% | 73% | 86% | 76% | 60% | 63% |
| | 85% | 86% | 82% | 68% | 62% | 64% |
| | 86% | 78% | 86% | 70% | 70% | 62% |
| | 84% | 82% | 84% | 72% | 73% | 71% |
| | 82% | 80% | 79% | 75% | 78% | 70% |
| | 80% | 84% | 83% | 81% | 80% | 68% |

| Method | Walking | Standing | Sitting | Lying | Action Change | Falling |
|---|---|---|---|---|---|---|
| | 80% | 88% | 86% | 75% | 82% | 81% |
| | 84% | 86% | 81% | 85% | 84% | 83% |
| | 83% | 85% | 86% | 85% | 84% | 79% |
| | 84% | 85% | 89% | 90% | 82% | 84% |
| | 90% | 92% | 88% | 91% | 90% | 94% |
| | 89% | 94% | 90% | 85% | 84% | 88% |
| | 91% | 92% | 89% | 92% | 93% | 91% |

| Method | Walking | Standing | Sitting | Lying | Action Change | Falling |
|---|---|---|---|---|---|---|
| | 88% | 90% | 87% | 93% | 90% | 91% |
| | 92% | 84% | 92% | 90% | 91% | 89% |
| | 90% | 95% | 90% | 94% | 92% | 92% |
| | 96% | 92% | 95% | 94% | 87% | 93% |

| Method | Walking | Standing | Sitting | Lying | Action Change | Falling |
|---|---|---|---|---|---|---|
| | 75% | 78% | 74% | 77% | 70% | 74% |
| | 77% | 74% | 73% | 78% | 73% | 76% |
| | 76% | 72% | 78% | 70% | 75% | 72% |
| | 81% | 78% | 78% | 75% | 77% | 73% |
| | 78% | 81% | 73% | 82% | 82% | 75% |
| | 80% | 74% | 70% | 76% | 78% | 75% |
| | 84% | 82% | 84% | 78% | 81% | 80% |

| Method | Walking | Standing | Sitting | Lying | Action Change | Falling |
|---|---|---|---|---|---|---|
| | 88% | 90% | 76% | 77% | 80% | 82% |
| | 85% | 88% | 82% | 90% | 86% | 79% |
| | 82% | 86% | 78% | 89% | 90% | 83% |
| | 92% | 84% | 77% | 89% | 91% | 86% |
| | 93% | 90% | 88% | 84% | 91% | 88% |
| | 87% | 90% | 93% | 94% | 82% | 90% |
| | 90% | 92% | 90% | 86% | 93% | 92% |

| Method | Walking | Standing | Sitting | Lying | Action Change | Falling |
|---|---|---|---|---|---|---|
| | 92% | 91% | 93% | 90% | 94% | 89% |
| | 93% | 95% | 96% | 91% | 94% | 92% |
| | 95% | 94% | 95% | 93% | 92% | 90% |
| | 96% | 94% | 93% | 96% | 97% | 96% |

| Resolution | With Quantization | Accuracy | 100 Epochs Training Time (s) | Inference Time (ms) | Model Size (MB) |
|---|---|---|---|---|---|
| 6 × 8 | Yes | 76.23% | 17 | 0.003 | 0.3 |
| 6 × 8 | No | 78.32% | 54 | 0.048 | 1.4 |
| 12 × 16 | Yes | 90.05% | 36 | 0.007 | 0.8 |
| 12 × 16 | No | 90.11% | 88 | 0.078 | 2.4 |
| 24 × 32 | Yes | 94.20% | 44 | 0.009 | 1.1 |
| 24 × 32 | No | 95.73% | 132 | 0.093 | 3.2 |
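The trade-off reported in the table above can be summarized as an accuracy drop, an inference speed-up, and a model size ratio per resolution. The short sketch below derives these three figures directly from the tabulated values; the dictionary layout and the function name are illustrative choices and are not part of the original work.

```python
# Values copied from the table above:
# (accuracy in %, inference time in ms, model size in MB).
RESULTS = {
    "6 x 8": {"quantized": (76.23, 0.003, 0.3), "full": (78.32, 0.048, 1.4)},
    "12 x 16": {"quantized": (90.05, 0.007, 0.8), "full": (90.11, 0.078, 2.4)},
    "24 x 32": {"quantized": (94.20, 0.009, 1.1), "full": (95.73, 0.093, 3.2)},
}


def trade_off(resolution):
    """Compare the quantized model with the full-precision one."""
    q_acc, q_ms, q_mb = RESULTS[resolution]["quantized"]
    f_acc, f_ms, f_mb = RESULTS[resolution]["full"]
    return {
        "accuracy_drop": round(f_acc - q_acc, 2),  # percentage points lost
        "speedup": round(f_ms / q_ms, 1),          # times faster at inference
        "size_ratio": round(f_mb / q_mb, 1),       # times smaller on disk
    }


if __name__ == "__main__":
    for resolution in RESULTS:
        print(resolution, trade_off(resolution))
```

Read this way, quantization costs at most about two percentage points of accuracy in this table while reducing inference time by roughly an order of magnitude.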

| Resolution | With Quantization | Accuracy | 100 Epochs Training Time (s) | Inference Time (ms) | Model Size (MB) |
|---|---|---|---|---|---|
| 6 × 8 (SR → DE + CGAN) | Yes | 82.27% | 145 | 0.38 | 4.18 |
| 6 × 8 (SR → DE + CGAN) | No | 84.43% | 321 | 2.57 | 10.20 |
| 12 × 16 (SR → DE + CGAN) | Yes | 93.18% | 164 | 0.60 | 5.43 |
| 12 × 16 (SR → DE + CGAN) | No | 94.52% | 352 | 3.21 | 14.68 |
| 24 × 32 (DE + CGAN) | Yes | 97.53% | 136 | 0.43 | 4.37 |
| 24 × 32 (DE + CGAN) | No | 98.12% | 291 | 2.82 | 11.20 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Muthukumar, K.A.; Bouazizi, M.; Ohtsuki, T. An Infrared Array Sensor-Based Approach for Activity Detection, Combining Low-Cost Technology with Advanced Deep Learning Techniques. Sensors 2022, 22, 3898. https://doi.org/10.3390/s22103898
