An Efficient LiDAR Point Cloud Map Coding Scheme Based on Segmentation and Frame-Inserting Network

Abstract

1. Introduction

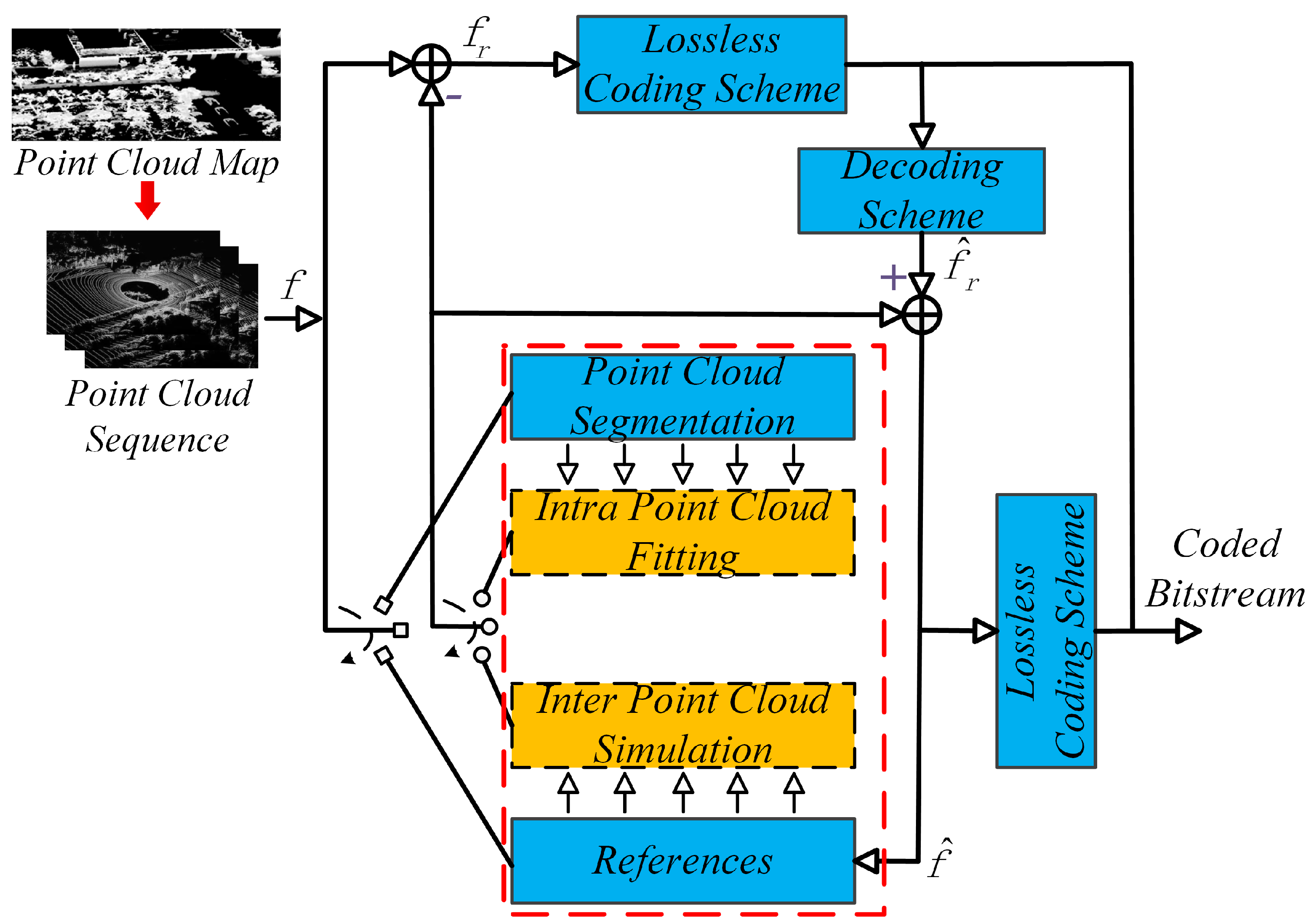

- By recording the timestamp and quaternion matrix of each scan during mapping, large-scale point cloud map compression can be formulated as a point cloud sequence compression problem.

- For intra-coding, we develop an intra-prediction method based on segmentation and plane fitting, which exploits the spatial structure of the point cloud to remove spatial redundancy.

- For inter-coding, we develop an interpolation-based inter-prediction network, which uses the encoded point clouds at the previous and next time instants to synthesize the point cloud at the intermediate time instant, thereby removing temporal redundancy.

- Experimental results on the KITTI dataset demonstrate that the proposed method achieves competitive compression performance for dense LiDAR point cloud maps compared with other state-of-the-art methods.
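The intra-prediction idea in the second contribution (fit a plane to a segment, then code only what the plane fails to predict) can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a segment has already been produced by some segmentation step, that each point's ray direction from the sensor is known to the decoder (as it would be in a range-image layout), and that residuals are uniformly quantized. The function names `fit_plane`, `predict_ranges`, `encode_segment`, and `decode_segment`, the synthetic ground patch, and the 2 mm step are all hypothetical choices made for illustration.

```python
import numpy as np

def fit_plane(points):
    """Least-squares plane through an (N, 3) array.

    Returns a unit normal n and offset c such that n @ x = c on the plane.
    """
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is the normal.
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    normal = vt[-1]
    return normal, float(normal @ centroid)

def predict_ranges(directions, normal, offset):
    """Range at which each unit ray from the sensor origin meets the plane."""
    return offset / (directions @ normal)

def encode_segment(points, step):
    """Replace measured ranges by quantized residuals against the fitted plane."""
    ranges = np.linalg.norm(points, axis=1)
    directions = points / ranges[:, None]
    normal, offset = fit_plane(points)
    predicted = predict_ranges(directions, normal, offset)
    symbols = np.round((ranges - predicted) / step).astype(np.int64)
    return directions, normal, offset, symbols

def decode_segment(directions, normal, offset, symbols, step):
    """Rebuild points from the plane parameters and the quantized residuals."""
    predicted = predict_ranges(directions, normal, offset)
    return (predicted + symbols * step)[:, None] * directions

# Synthetic, assumed data: a noisy ground patch 1.7 m below the sensor.
rng = np.random.default_rng(0)
xy = rng.uniform([2.0, -5.0], [20.0, 5.0], size=(500, 2))
z = -1.7 + rng.normal(0.0, 0.01, size=500)
ground = np.column_stack([xy, z])

step = 0.002  # illustrative 2 mm quantization step
dirs, n, c, sym = encode_segment(ground, step)
recon = decode_segment(dirs, n, c, sym, step)
max_err = np.linalg.norm(recon - ground, axis=1).max()
```

In this sketch the reconstruction error per point is bounded by half the quantization step, and the integer residuals `sym` are much smaller in magnitude than the raw ranges divided by the step, which is what makes them cheaper to entropy-code. Rays that are nearly parallel to the fitted plane make the predicted range unstable, so a practical codec would need a fallback for such points.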

2. Point Cloud Coding: A Brief Review

2.1. Volumetric/Tree-Based Point Clouds Coding

2.2. Image/Video-Based Point Clouds Coding

2.3. Summary

3. Overall Codec Architecture

4. Intra-Frame Point Cloud Coding Based on Semantic Segmentation

4.1. Overview of Intra-Coding Network

4.2. LiDAR Point Cloud Segmentation

4.3. Segmentation-Based Intra-Prediction Technique

4.4. Residual Data Coding

5. Inter-Frame Point Cloud Coding Based on Inserting Network

5.1. Overall Inter-Prediction Network

5.2. Point Cloud Interpolation Module

5.3. Inter Loss Function Design

5.4. Visualization Results

6. Experimental Results

6.1. Evaluation Metric

6.2. Coding Performance for a Single Frame

6.3. Comparison with Octree and Draco

6.4. Rate-Distortion Curves

6.5. Computational Complexity

7. Discussion

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, C.; Ji, M.; Wang, J.; Wen, W.; Li, T.; Sun, Y. An improved DBSCAN method for LiDAR data segmentation with automatic Eps estimation. Sensors 2019, 19, 172.

- McGlade, J.; Wallace, L.; Reinke, K.; Jones, S. The potential of low-cost 3D imaging technologies for forestry applications: Setting a research agenda for low-cost remote sensing inventory tasks. Forests 2022, 13, 204.

- Guimarães, N.; Pádua, L.; Marques, P.; Silva, N.; Peres, E.; Sousa, J.J. Forestry remote sensing from unmanned aerial vehicles: A review focusing on the data, processing and potentialities. Remote Sens. 2020, 12, 1046.

- Sadeghifar, T.; Lama, G.; Sihag, P.; Bayram, A.; Kisi, O. Wave height predictions in complex sea flows through soft-computing models: Case study of Persian Gulf. Ocean Eng. 2022, 245, 110467.

- Gale, M.G.; Cary, G.J.; Van Dijk, A.I.; Yebra, M. Forest fire fuel through the lens of remote sensing: Review of approaches, challenges and future directions in the remote sensing of biotic determinants of fire behaviour. Remote Sens. Environ. 2021, 255, 112282.

- Jalonen, J.; Järvelä, J.; Virtanen, J.P.; Vaaja, M.; Kurkela, M.; Hyyppä, H. Determining characteristic vegetation areas by terrestrial laser scanning for floodplain flow modeling. Water 2015, 7, 420–437.

- Lama, G.F.C.; Sadeghifar, T.; Azad, M.T.; Sihag, P.; Kisi, O. On the indirect estimation of wind wave heights over the southern coasts of Caspian Sea: A comparative analysis. Water 2022, 14, 843.

- Godone, D.; Allasia, P.; Borrelli, L.; Gullà, G. UAV and structure from motion approach to monitor the Maierato landslide evolution. Remote Sens. 2020, 12, 1039.

- He, J.; Barton, I. Hyperspectral remote sensing for detecting geotechnical problems at Ray mine. Eng. Geol. 2021, 292, 106261.

- Rao, Y.; Zhang, M.; Cheng, Z.; Xue, J.; Pu, J.; Wang, Z. Semantic Point Cloud Segmentation Using Fast Deep Neural Network and DCRF. Sensors 2021, 21, 2731.

- Xue, G.; Wei, J.; Li, R.; Cheng, J. LeGO-LOAM-SC: An Improved Simultaneous Localization and Mapping Method Fusing LeGO-LOAM and Scan Context for Underground Coalmine. Sensors 2022, 22, 520.

- Xiong, L.; Fu, Z.; Zeng, D.; Leng, B. An optimized trajectory planner and motion controller framework for autonomous driving in unstructured environments. Sensors 2021, 21, 4409.

- Vanhellemont, Q.; Brewin, R.J.; Bresnahan, P.J.; Cyronak, T. Validation of Landsat 8 high resolution Sea Surface Temperature using surfers. Estuar. Coast. Shelf Sci. 2021, 265, 107650.

- Walton, C.C. A review of differential absorption algorithms utilized at NOAA for measuring sea surface temperature with satellite radiometers. Remote Sens. Environ. 2016, 187, 434–446.

- Lama, G.F.C.; Rillo Migliorini Giovannini, M.; Errico, A.; Mirzaei, S.; Padulano, R.; Chirico, G.B.; Preti, F. Hydraulic efficiency of green-blue flood control scenarios for vegetated rivers: 1D and 2D unsteady simulations. Water 2021, 13, 2620.

- Pomerleau, F.; Liu, M.; Colas, F.; Siegwart, R. Challenging data sets for point cloud registration algorithms. Int. J. Robot. Res. 2012, 31, 1705–1711.

- Zhang, J.; Singh, S. Low-drift and real-time lidar odometry and mapping. Auton. Robot. 2017, 41, 401–416.

- De Oliveira Rente, P.; Brites, C.; Ascenso, J.; Pereira, F. Graph-based static 3D point clouds geometry coding. IEEE Trans. Multimed. 2018, 21, 284–299.

- Krivokuća, M.; Chou, P.A.; Koroteev, M. A volumetric approach to point cloud compression—Part II: Geometry compression. IEEE Trans. Image Process. 2019, 29, 2217–2229.

- Guede, C.; Andrivon, P.; Marvie, J.E.; Ricard, J.; Redmann, B.; Chevet, J.C. V-PCC: Performance evaluation of the first MPEG Point Cloud Codec. In Proceedings of the SMPTE 2020 Annual Technical Conference and Exhibition, Virtual, 10–12 November 2020; pp. 1–27.

- Elseberg, J.; Borrmann, D.; Nüchter, A. One billion points in the cloud–an octree for efficient processing of 3D laser scans. ISPRS J. Photogramm. Remote Sens. 2013, 76, 76–88.

- Tu, C.; Takeuchi, E.; Carballo, A.; Miyajima, C.; Takeda, K. Motion analysis and performance improved method for 3D LiDAR sensor data compression. IEEE Trans. Intell. Transp. Syst. 2019, 22, 243–256.

- Wang, X.; Şekercioğlu, Y.A.; Drummond, T.; Natalizio, E.; Fantoni, I.; Frémont, V. Fast depth video compression for mobile RGB-D sensors. IEEE Trans. Circuits Syst. Video Technol. 2015, 26, 673–686.

- Tu, C.; Takeuchi, E.; Miyajima, C.; Takeda, K. Compressing continuous point cloud data using image compression methods. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 1712–1719.

- Feng, Y.; Liu, S.; Zhu, Y. Real-time spatio-temporal lidar point cloud compression. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 10766–10773.

- Tu, C.; Takeuchi, E.; Carballo, A.; Takeda, K. Real-time streaming point cloud compression for 3D LiDAR sensor using U-Net. IEEE Access 2019, 7, 113616–113625.

- Tu, C.; Takeuchi, E.; Carballo, A.; Takeda, K. Point cloud compression for 3D LiDAR sensor using recurrent neural network with residual blocks. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3274–3280.

- Google. Draco: 3D Data Compression. 2018. Available online: https://github.com/google/draco (accessed on 6 May 2022).

- Houshiar, H.; Nüchter, A. 3D point cloud compression using conventional image compression for efficient data transmission. In Proceedings of the 2015 XXV International Conference on Information, Communication and Automation Technologies (ICAT), Washington, DC, USA, 29–31 October 2015; pp. 1–8.

- Liu, Z.; Yeh, R.A.; Tang, X.; Liu, Y.; Agarwala, A. Video frame synthesis using deep voxel flow. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4463–4471.

- Milioto, A.; Vizzo, I.; Behley, J.; Stachniss, C. RangeNet++: Fast and accurate LiDAR semantic segmentation. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 4213–4220.

- Sun, X.; Wang, S.; Liu, M. A novel coding architecture for multi-line LiDAR point clouds based on clustering and convolutional LSTM network. IEEE Trans. Intell. Transp. Syst. 2020, 23, 2190–2201.

- Langer, F.; Milioto, A.; Haag, A.; Behley, J.; Stachniss, C. Domain Transfer for Semantic Segmentation of LiDAR Data using Deep Neural Networks. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020.

- Sun, X.; Wang, S.; Wang, M.; Cheng, S.S.; Liu, M. An advanced LiDAR point cloud sequence coding scheme for autonomous driving. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 2793–2801.

- Rusu, R.B.; Cousins, S. 3D is here: Point cloud library (PCL). In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 1–4.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Yang, B.; Li, J. A hierarchical approach for refining point cloud quality of a low cost UAV LiDAR system in the urban environment. ISPRS J. Photogramm. Remote Sens. 2022, 183, 403–421.

- He, P.; Emami, P.; Ranka, S.; Rangarajan, A. Learning Scene Dynamics from Point Cloud Sequences. Int. J. Comput. Vis. 2022, 130, 669–695.

- Zhou, B.; He, Y.; Huang, W.; Yu, X.; Fang, F.; Li, X. Place recognition and navigation of outdoor mobile robots based on random Forest learning with a 3D LiDAR. J. Intell. Robot. Syst. 2022, 104, 267–279.

- Shi, Y.; Yang, C. Point cloud inpainting with normal-based feature matching. Multimed. Syst. 2022, 28, 521–527.

- Tian, J.; Zhang, T. Secure and effective assured deletion scheme with orderly overwriting for cloud data. J. Supercomput. 2022, 78, 9326–9354.

- Luan, S.; Chen, C.; Zhang, B.; Han, J.; Liu, J. Gabor Convolutional Networks. IEEE Trans. Image Process. 2018, 27, 4357–4366.

- Zhang, B.; Perina, A.; Li, Z.; Murino, V.; Liu, J.; Ji, R. Bounding multiple gaussians uncertainty with application to object tracking. Int. J. Comput. Vis. 2016, 118, 364–379.

- Zhang, B.; Yang, Y.; Chen, C.; Yang, L.; Han, J.; Shao, L. Action recognition using 3D histograms of texture and a multi-class boosting classifier. IEEE Trans. Image Process. 2017, 26, 4648–4660.

| Scene | Inter-Inserting Method (QA 2 mm) | Inter-Inserting Method (QA 5 mm) | Inter-Inserting Method (QA 1 cm) | Octree [35] (DR 1 mm³) | Octree [35] (DR 5 mm³) | Octree [35] (DR 1 cm³) | Draco [28] (QB 17 bits) | Draco [28] (QB 15 bits) | Draco [28] (QB 14 bits) |
|---|---|---|---|---|---|---|---|---|---|
| Campus | 3.09 | 2.49 | 2.01 | 21.27 | 8.05 | 5.75 | 11.87 | 7.75 | 5.47 |
| City | 3.90 | 3.38 | 2.83 | 23.98 | 10.76 | 8.40 | 12.52 | 8.38 | 6.49 |
| Road | 3.16 | 0.26 | 2.12 | 23.56 | 10.35 | 7.99 | 12.31 | 8.35 | 6.59 |
| Residential | 4.29 | 3.68 | 3.16 | 22.94 | 9.72 | 7.37 | 12.66 | 8.59 | 6.33 |
| Average | 3.61 | 3.03 | 2.53 | 20.23 | 9.72 | 7.29 | 12.34 | 8.27 | 6.22 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Q.; Jiang, L.; Sun, X.; Zhao, J.; Deng, Z.; Yang, S. An Efficient LiDAR Point Cloud Map Coding Scheme Based on Segmentation and Frame-Inserting Network. Sensors 2022, 22, 5108. https://doi.org/10.3390/s22145108
