Foggy Lane Dataset Synthesized from Monocular Images for Lane Detection Algorithms

Abstract

:1. Introduction

- A new dataset augmentation and synthesis method was proposed for lane detection in foggy conditions, which highly improved the accuracy of the lane detection model under foggy weather without introducing extra computational cost or complex framework for the algorithm.

- We established a new dataset, FoggyCULane, which contains 107,451 frames of labelled foggy lanes. This would help the community and researchers to develop and validate their own data-driven lane detection or dehaze algorithms.

2. Background

3. Methods

3.1. The Standard Optical Model of Foggy Images

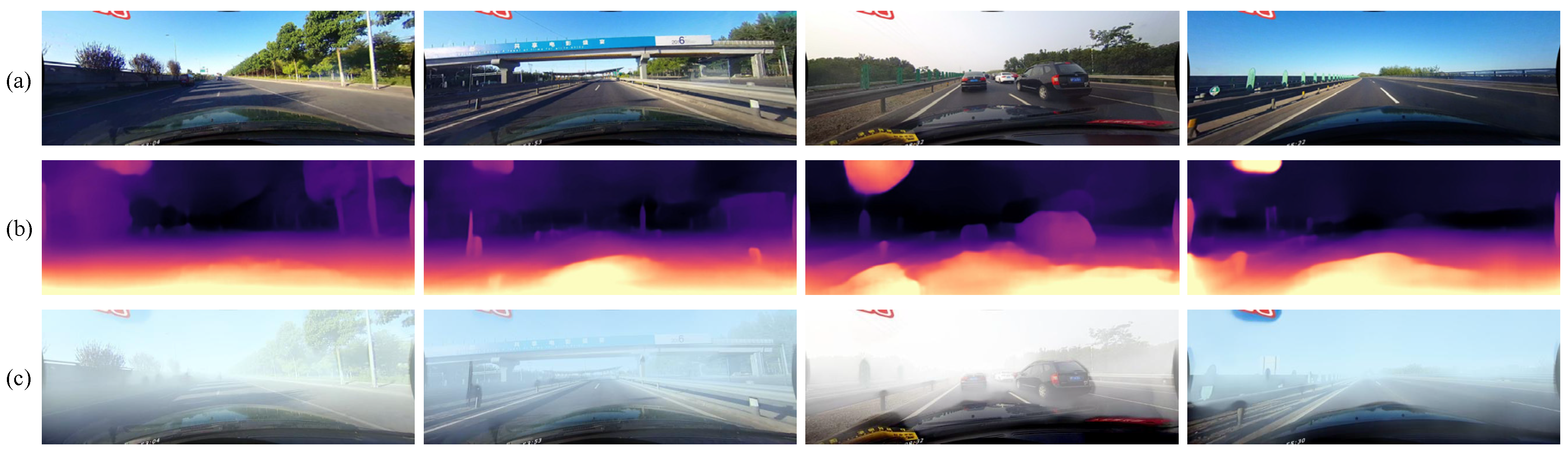

3.2. Monocular Depth Estimation

3.3. Foggy Image Generation

3.4. Establishment of FoggyCULane Dataset

4. Experiments

4.1. Dataset

4.2. Evaluation Metrics

4.3. Implementation Details

4.4. Effect of FoggyCULane Dataset on SCNN

- The dataset with mixed foggy densities has better performance than the dataset with a single foggy density. On the one hand, there are more haze images in the dataset with mixed fog densities, and the neural network can be more exposed to the foggy scene when training. Therefore, it is more sensitive to lane markings under foggy weather. On the other hand, the dataset with mixed fog densities contains fog images of three densities, making the network learn and extract features for lane detection in foggy condition comprehensively during model training.

- The model trained in the dataset with the corresponding fog density value achieves the best lane detection performance in each foggy scene. This indicates that the features extracted by the network vary with fog densities. Therefore, the dataset with mixed fog densities should be applied to obtain a better performance in practice.

4.5. Effect of FoggyCULane Dataset on Other State-of-Art Methods

4.6. Ablation Study

4.6.1. Lane Detection Results in Real Foggy Scene

- 1.

- The fog density in the image varies among images, and the fog density is also not uniform in the same image.

- 2.

- The angle and orientation of the images vary greatly from one another, including images taken from the perspective of roadside pedestrians, road surveillance cameras, and in-vehicle recorders.

- 3.

- Vehicles and pedestrians in the images occlude the lane marks to varying degrees, and the number of lane marks in each image is not the same.

4.6.2. Application of Proposed Framework on VIL-100 Dataset

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Urmson, C.; Anhalt, J.; Bagnell, D.; Baker, C.; Bittner, R.; Clark, M.N.; Dolan, J.; Duggins, D.; Galatali, T.; Geyer, C.; et al. Autonomous driving in urban environments: Boss and the Urban Challenge. J. Field Robot. 2008, 25, 425–466. [Google Scholar] [CrossRef] [Green Version]

- Khan, M.A.; Sayed, H.E.; Malik, S.; Zia, T.; Khan, J.; Alkaabi, N.; Ignatious, H. Level-5 Autonomous Driving—Are We There Yet? A Review of Research Literature. ACM Comput. Surv. 2022, 55, 1–38. [Google Scholar] [CrossRef]

- Huval, B.; Wang, T.; Tandon, S.; Kiske, J.; Song, W.; Pazhayampallil, J.; Andriluka, M.; Rajpurkar, P.; Migimatsu, T.; Cheng-Yue, R. An empirical evaluation of deep learning on highway driving. arXiv 2015, arXiv:1504.01716. [Google Scholar]

- Kim, J.; Lee, M. Robust lane detection based on convolutional neural network and random sample consensus. In Neural Information Processing. ICONIP 2014; Springer: Cham, Switzerland, 2014; pp. 454–461. [Google Scholar]

- Li, J.; Mei, X.; Prokhorov, D.; Tao, D. Deep neural network for structural prediction and lane detection in traffic scene. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 690–703. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Pan, X.; Shi, J.; Luo, P.; Wang, X.; Tang, X. Spatial as deep: Spatial CNN for traffic scene understanding. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018. [Google Scholar]

- Hou, Y.; Ma, Z.; Liu, C.; Loy, C.C. Learning lightweight lane detection cnns by self attention distillation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 1013–1021. [Google Scholar]

- Romera, E.; Alvarez, J.M.; Bergasa, L.M.; Arroyo, R. Erfnet: Efficient residual factorized convnet for real-time semantic segmentation. IEEE Trans. Intell. Transp. Syst. 2017, 19, 263–272. [Google Scholar] [CrossRef]

- Tabelini, L.; Berriel, R.; Paixão, T.M.; Badue, C.; De Souza, A.F.; Olivera-Santos, T. Keep your eyes on the lane: Attention-guided lane detection. arXiv 2020, arXiv:2010.12035. [Google Scholar]

- Wang, Z.; Ren, W.; Qiu, Q. Lanenet: Real-time lane detection networks for autonomous driving. arXiv 2018, arXiv:1807.01726. [Google Scholar]

- Hu, X.; Fu, C.-W.; Zhu, L.; Heng, P.-A. Depth-attentional features for single-image rain removal. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8022–8031. [Google Scholar]

- Liu, T.; Chen, Z.; Yang, Y.; Wu, Z.; Li, H. Lane detection in low-light conditions using an efficient data enhancement: Light conditions style transfer. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 1394–1399. [Google Scholar]

- Tarel, J.-P.; Hautiere, N.; Cord, A.; Gruyer, D.; Halmaoui, H. Improved visibility of road scene images under heterogeneous fog. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 478–485. [Google Scholar]

- Tarel, J.-P.; Hautiere, N.; Caraffa, L.; Cord, A.; Halmaoui, H.; Gruyer, D. Vision enhancement in homogeneous and heterogeneous fog. IEEE Intell. Transp. Syst. Mag. 2012, 4, 6–20. [Google Scholar] [CrossRef] [Green Version]

- Sakaridis, C.; Dai, D.; Van Gool, L. Semantic foggy scene understanding with synthetic data. Int. J. Comput. Vis. 2018, 126, 973–992. [Google Scholar] [CrossRef] [Green Version]

- Mai, N.A.M.; Duthon, P.; Khoudour, L.; Crouzil, A.; Velastin, S.A. 3D Object Detection with SLS-Fusion Network in Foggy Weather Conditions. Sensors 2021, 21, 6711. [Google Scholar] [CrossRef]

- He, Y.; Liu, Z. A feature fusion method to improve the driving obstacle detection under foggy weather. IEEE Trans. Transp. Electrif. 2021, 7, 2505–2515. [Google Scholar] [CrossRef]

- Yan, M.; Chen, J.; Xu, J.; Xiang, L. Visibility detection of single image in foggy days based on Fourier transform and convolutional neural network. In Proceedings of the 2nd International Conference on Applied Mathematics, Modelling, and Intelligent Computing (CAMMIC 2022), Kunming, China, 25–27 March 2022; pp. 1440–1445. [Google Scholar]

- Schechner, Y.Y.; Narasimhan, S.G.; Nayar, S.K. Instant dehazing of images using polarization. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2001, Kauai, HI, USA, 8–14 December 2001; p. I. [Google Scholar]

- Fattal, R. Single image dehazing. ACM Trans. Graph. 2008, 27, 1–9. [Google Scholar] [CrossRef]

- Fattal, R. Dehazing using color-lines. ACM Trans. Graph. 2014, 34, 1–14. [Google Scholar] [CrossRef]

- Berman, D.; Avidan, S. Non-local image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1674–1682. [Google Scholar]

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353. [Google Scholar]

- Ancuti, C.O.; Ancuti, C. Single image dehazing by multi-scale fusion. IEEE Trans. Image Process. 2013, 22, 3271–3282. [Google Scholar] [CrossRef] [PubMed]

- Zhu, Q.; Mai, J.; Shao, L. A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 2015, 24, 3522–3533. [Google Scholar] [CrossRef] [Green Version]

- Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.-H. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 154–169. [Google Scholar]

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. Aod-net: All-in-one dehazing network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4770–4778. [Google Scholar]

- Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. Dehazenet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198. [Google Scholar] [CrossRef] [Green Version]

- Li, R.; Zhang, X.; You, S.; Li, Y. Learning to Dehaze from Realistic Scene with A Fast Physics-based Dehazing Network. arXiv 2020, arXiv:2004.08554. [Google Scholar]

- Anvari, Z.; Athitsos, V. Dehaze-GLCGAN: Unpaired single image de-hazing via adversarial training. arXiv 2020, arXiv:2008.06632. [Google Scholar]

- Wang, Y.; Yan, X.; Wang, F.L.; Xie, H.; Yang, W.; Wei, M.; Qin, J. UCL-Dehaze: Towards Real-world Image Dehazing via Unsupervised Contrastive Learning. arXiv 2020, arXiv:2205.01871. [Google Scholar]

- Kumar, B.; Gupta, H.; Sinha, A.; Vyas, O. Lane Detection for Autonomous Vehicle in Hazy Environment with Optimized Deep Learning Techniques. In Proceedings of the International Conference on Advanced Network Technologies and Intelligent Computing, Varanasi, India, 17–18 December 2021; pp. 596–608. [Google Scholar]

- Bijelic, M.; Gruber, T.; Mannan, F.; Kraus, F.; Ritter, W.; Dietmayer, K.; Heide, F. Seeing through fog without seeing fog: Deep multimodal sensor fusion in unseen adverse weather. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11682–11692. [Google Scholar]

- Aly, M. Real time detection of lane markers in urban streets. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 7–12. [Google Scholar]

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? the kitti vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361. [Google Scholar]

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223. [Google Scholar]

- TuSimple. TuSimple Benchmark. Available online: https://github.com/TuSimple/tusimple-benchmark (accessed on 11 July 2017).

- Huang, X.; Cheng, X.; Geng, Q.; Cao, B.; Zhou, D.; Wang, P.; Lin, Y.; Yang, R. The apolloscape dataset for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–23 June 2018; pp. 954–960. [Google Scholar]

- Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. Bdd100k: A diverse driving dataset for heterogeneous multitask learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2636–2645. [Google Scholar]

- Behrendt, K.; Soussan, R. Unsupervised labeled lane markers using maps. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019; pp. 832–839. [Google Scholar]

- Geyer, J.; Kassahun, Y.; Mahmudi, M.; Ricou, X.; Durgesh, R.; Chung, A.S.; Hauswald, L.; Pham, V.H.; Mühlegg, M.; Dorn, S. A2d2: Audi autonomous driving dataset. arXiv 2020, arXiv:2004.06320. [Google Scholar]

- Zhang, Y.; Zhu, L.; Feng, W.; Fu, H.; Wang, M.; Li, Q.; Li, C.; Wang, S. VIL-100: A New Dataset and A Baseline Model for Video Instance Lane Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 15681–15690. [Google Scholar]

- Harald, K. Theorie der Horizontalen Sichtweite: Kontrast und Sichtweite; Keim Nemnich: Munich, Germany, 1924; Volume 12. [Google Scholar]

- McCartney, E.J. Optics of the Atmosphere: Scattering by Molecules and Particles; John Wiley and Sons: New York, NY, USA, 1976. [Google Scholar]

- Nayar, S.K.; Narasimhan, S.G. Vision in bad weather. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 820–827. [Google Scholar]

- Facil, J.M.; Ummenhofer, B.; Zhou, H.; Montesano, L.; Brox, T.; Civera, J. CAM-Convs: Camera-aware multi-scale convolutions for single-view depth. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11826–11835. [Google Scholar]

- Garg, R.; Bg, V.K.; Carneiro, G.; Reid, I. Unsupervised cnn for single view depth estimation: Geometry to the rescue. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 740–756. [Google Scholar]

- Wang, R.; Pizer, S.M.; Frahm, J.-M. Recurrent neural network for (un-) supervised learning of monocular video visual odometry and depth. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5555–5564. [Google Scholar]

- Chakravarty, P.; Narayanan, P.; Roussel, T. GEN-SLAM: Generative modeling for monocular simultaneous localization and mapping. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 147–153. [Google Scholar]

- Aleotti, F.; Tosi, F.; Poggi, M.; Mattoccia, S. Generative adversarial networks for unsupervised monocular depth prediction. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018; pp. 337–354. [Google Scholar]

- Eigen, D.; Puhrsch, C.; Fergus, R. Depth map prediction from a single image using a multi-scale deep network. arXiv 2014, arXiv:1406.228327. [Google Scholar]

- Godard, C.; Mac Aodha, O.; Firman, M.; Brostow, G.J. Digging into self-supervised monocular depth estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 3828–3838. [Google Scholar]

| Train Set Images | Validation Set Images | Test Set Images | Total Images | ||

|---|---|---|---|---|---|

| CULane | 1 | 88,880 | 9675 | 34,680 | 133,235 |

| Foggy CULane | 1 | 88,880 | 9675 | 34,680 | 133,235 |

| 2 | 16,532 | 9675 | 9610 | 35,817 | |

| 3 | 16,532 | 9675 | 9610 | 35,817 | |

| 4 | 16,532 | 9675 | 9610 | 35,817 |

| Scenarios | CULane Test Set | FoggyCULane Test Set |

|---|---|---|

| Normal | 9610 | 9610 |

| Crowded | 8115 | 8115 |

| Night | 7040 | 7040 |

| No line | 4058 | 4058 |

| Shadow | 936 | 936 |

| Arrow | 900 | 900 |

| Dazzle light | 486 | 486 |

| Curve | 415 | 415 |

| Crossroad | 3120 | 3120 |

| Foggy_beta2 | 0 | 9610 |

| Foggy_beta3 | 0 | 9610 |

| Foggy_beta4 | 0 | 9610 |

| Category | SCNN Trained on Origin CULane | SCNN Trained on FoggyCULane | SCNN Trained on FoggyCULane | SCNN Trained on FoggyCULane | SCNN Trained on FoggyCULane |

|---|---|---|---|---|---|

| Normal | 89.21 | 89.82 | 89.81 | 89.20 | 90.09 |

| Crowded | 68.11 | 68.30 | 68.63 | 67.93 | 69.53 |

| Night | 64.85 | 65.49 | 64.00 | 64.24 | 65.08 |

| No line | 43.25 | 43.21 | 43.22 | 43.02 | 42.80 |

| Shadow | 59.27 | 58.31 | 61.40 | 56.57 | 63.77 |

| Arrow | 83.29 | 84.51 | 84.30 | 83.82 | 83.66 |

| Dazzle light | 59.93 | 65.64 | 64.07 | 58.74 | 61.16 |

| Curve | 62.65 | 65.61 | 66.51 | 62.51 | 62.09 |

| Crossroad (FP) | 2704 | 2679 | 3155 | 2386 | 3004 |

| Foggy_beta2 | 74.65 | 86.63 | 85.58 | 83.74 | 86.65 |

| Foggy_beta3 | 51.41 | 79.53 | 80.07 | 77.56 | 81.53 |

| Foggy_beta4 | 11.09 | 60.33 | 65.27 | 65.91 | 70.41 |

| Category | ENet-SAD | ERFNet | LaneATT | LaneNet | ||||

|---|---|---|---|---|---|---|---|---|

| CULane | Foggy CULane | CULane | Foggy CULane | CULane | Foggy CULane | CULane | Foggy CULane | |

| Normal | 88.52 | 89.64 | 87.46 | 88.57 | 85.06 | 87.03 | 88.13 | 88.95 |

| Foggy_beta2 | 71.86 | 85.21 | 69.56 | 84.34 | 69.20 | 84.99 | 65.24 | 83.51 |

| Foggy_beta3 | 50.62 | 80.35 | 49.73 | 79.42 | 47.14 | 78.21 | 41.47 | 77.32 |

| Foggy_beta4 | 12.13 | 71.32 | 10.23 | 65.28 | 10.93 | 66.45 | 9.82 | 59.48 |

| SCNN Trained on CULane | SCNN Trained on FoggyCULane | |

|---|---|---|

| Real foggy scene 1 | 59.13 | 66.82 |

| Real foggy scene 2 | 57.01 | 59.44 |

| Real foggy scene 3 | 40.15 | 46.08 |

| SCNN Trained on CULane | SCNN Trained on FoggyCULane | |

|---|---|---|

| FP | 33 | 118 |

| Train Set Images | Test Set Images | Total Images | ||

|---|---|---|---|---|

| VIL-100 | 8000 | 2000 | 10,000 | |

| Foggy VIL-100 | 8000 | 2000 | 10,000 | |

| 1400 | 400 | 1800 | ||

| 1400 | 400 | 1800 | ||

| 1400 | 400 | 1800 |

| Scenarios | VIL-100 Test Set | FoggyVIL-100 Test Set |

|---|---|---|

| Normal | 400 | 400 |

| Crowded | 700 | 700 |

| Curved road | 700 | 700 |

| Damaged road | 100 | 100 |

| Shadows | 200 | 200 |

| Road markings | 400 | 400 |

| Dazzle light | 200 | 200 |

| Night | 100 | 100 |

| Crossroad | 100 | 100 |

| Foggy_beta2 | 0 | 400 |

| Foggy_beta3 | 0 | 400 |

| Foggy_beta4 | 0 | 400 |

| Category | SCNN Trained on Origin VIL-100 | SCNN Trained on FoggyVIL-100 Beta = 2, 3, 4 | SCNN Trained on Origin CULane | SCNN Trained on FoggyCULane Beta = 2, 3, 4 |

|---|---|---|---|---|

| Normal | 84.31 | 87.41 | 78.94 | 82.03 |

| Crowded | 72.63 | 74.47 | 59.89 | 61.22 |

| Curved road | 65.09 | 65.13 | 61.39 | 62.03 |

| Damaged road | 40.63 | 41.86 | 41.98 | 42.21 |

| Shadows | 43.05 | 52.35 | 49.56 | 54.32 |

| Road markings | 76.21 | 77.48 | 71.92 | 72.01 |

| Dazzle light | 55.05 | 56.31 | 56.25 | 57.64 |

| Night | 56.45 | 56.70 | 58.71 | 58.90 |

| Crossroad | 60.68 | 60.41 | 60.29 | 63.76 |

| Foggy_beta2 | 72.84 | 81.57 | 62.81 | 73.25 |

| Foggy_beta3 | 54.00 | 79.22 | 50.03 | 69.89 |

| Foggy_beta4 | 13.57 | 66.19 | 10.42 | 55.14 |

| Category | SCNN Trained on Origin VIL-100 | SCNN Trained on FoggyVIL-100 Beta = 2, 3, 4 |

|---|---|---|

| Foggy_beta2 | 52.35 | 57.63 |

| Foggy_beta3 | 48.58 | 51.26 |

| Foggy_beta4 | 11.10 | 36.51 |

| SCNN Trained on VIL-100 | SCNN Trained on FoggyVIL-100 | |

|---|---|---|

| Real foggy scene 1 | 60.02 | 67.45 |

| Real foggy scene 2 | 53.81 | 55.68 |

| Real foggy scene 3 | 38.74 | 44.59 |

| SCNN Trained on VIL-100 | SCNN Trained on FoggyVIL-100 | |

|---|---|---|

| FP | 24 | 77 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nie, X.; Xu, Z.; Zhang, W.; Dong, X.; Liu, N.; Chen, Y. Foggy Lane Dataset Synthesized from Monocular Images for Lane Detection Algorithms. Sensors 2022, 22, 5210. https://doi.org/10.3390/s22145210

Nie X, Xu Z, Zhang W, Dong X, Liu N, Chen Y. Foggy Lane Dataset Synthesized from Monocular Images for Lane Detection Algorithms. Sensors. 2022; 22(14):5210. https://doi.org/10.3390/s22145210

Chicago/Turabian StyleNie, Xiangyu, Zhejun Xu, Wei Zhang, Xue Dong, Ning Liu, and Yuanfeng Chen. 2022. "Foggy Lane Dataset Synthesized from Monocular Images for Lane Detection Algorithms" Sensors 22, no. 14: 5210. https://doi.org/10.3390/s22145210