Collision Detection and Avoidance for Underwater Vehicles Using Omnidirectional Vision †

Abstract

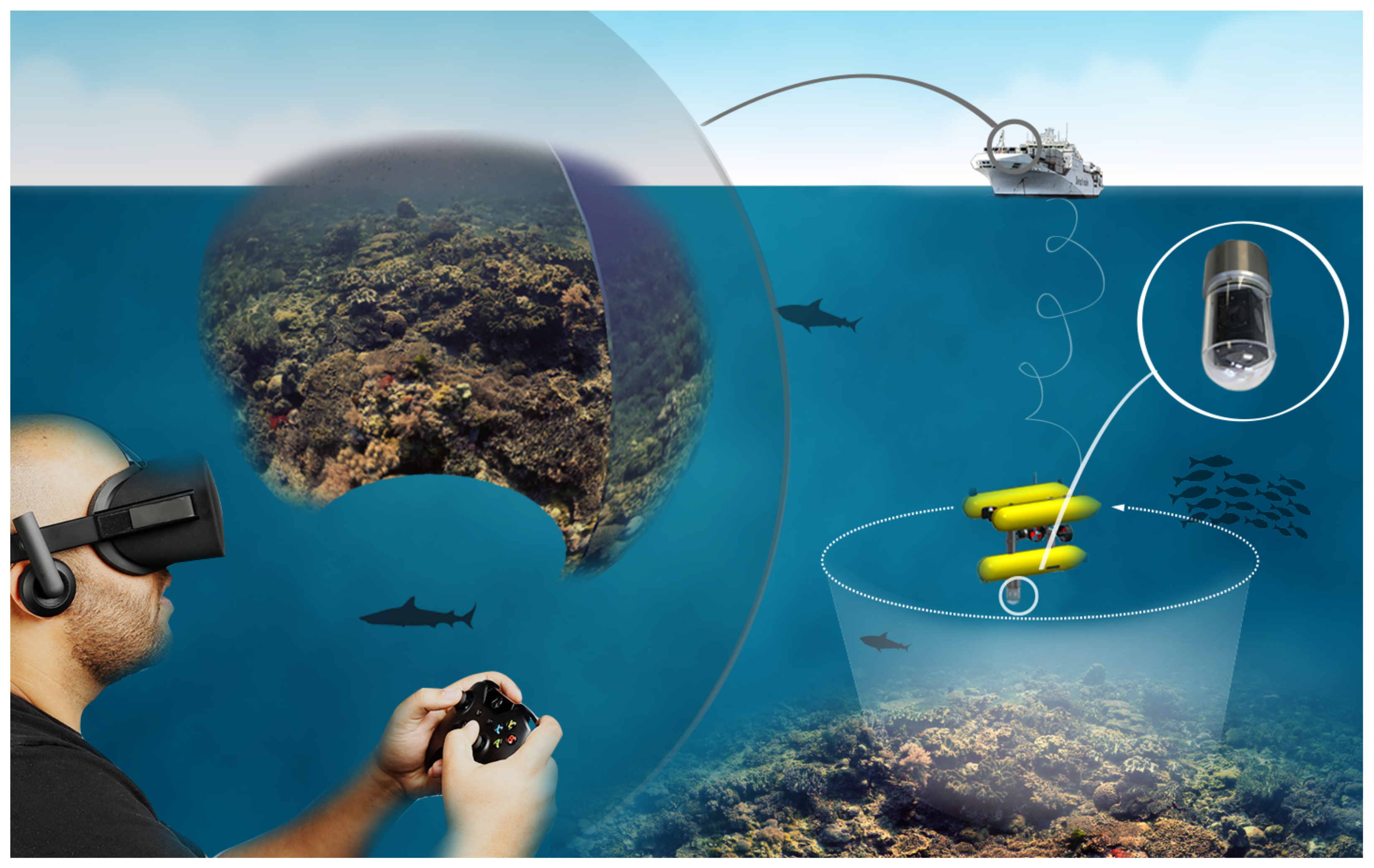

1. Introduction

2. Related Work

2.1. Visual SLAM

2.2. Omnidirectional and Multi-Camera Systems

2.3. Collision Avoidance

2.4. Collision Avoidance in Underwater Robotics

3. Contributions

- We developed a new camera-based omnidirectional collision avoidance system that exploits multi-camera setups to perform a real-time 360° 3D reconstruction of the region surrounding an ROV/AUV, allowing the robot to assess the risk posed by nearby objects and act accordingly. Such a system can carry out survey missions more autonomously while ensuring the safety of the robot.

- A framework based on an open-source omnidirectional vSLAM package that reconstructs a map of the surveyed environment and assesses in real time how dangerous the surrounding objects are. To our knowledge, this is the first time an omnidirectional system has been applied to underwater environments.

- A set of warning signals that can assist operators during exploratory missions. These outputs intuitively indicate where obstacles are, allowing the operator to perform evasive maneuvers. They also allow less-trained pilots to operate the vehicles safely, thus reducing operational costs.

4. Approach

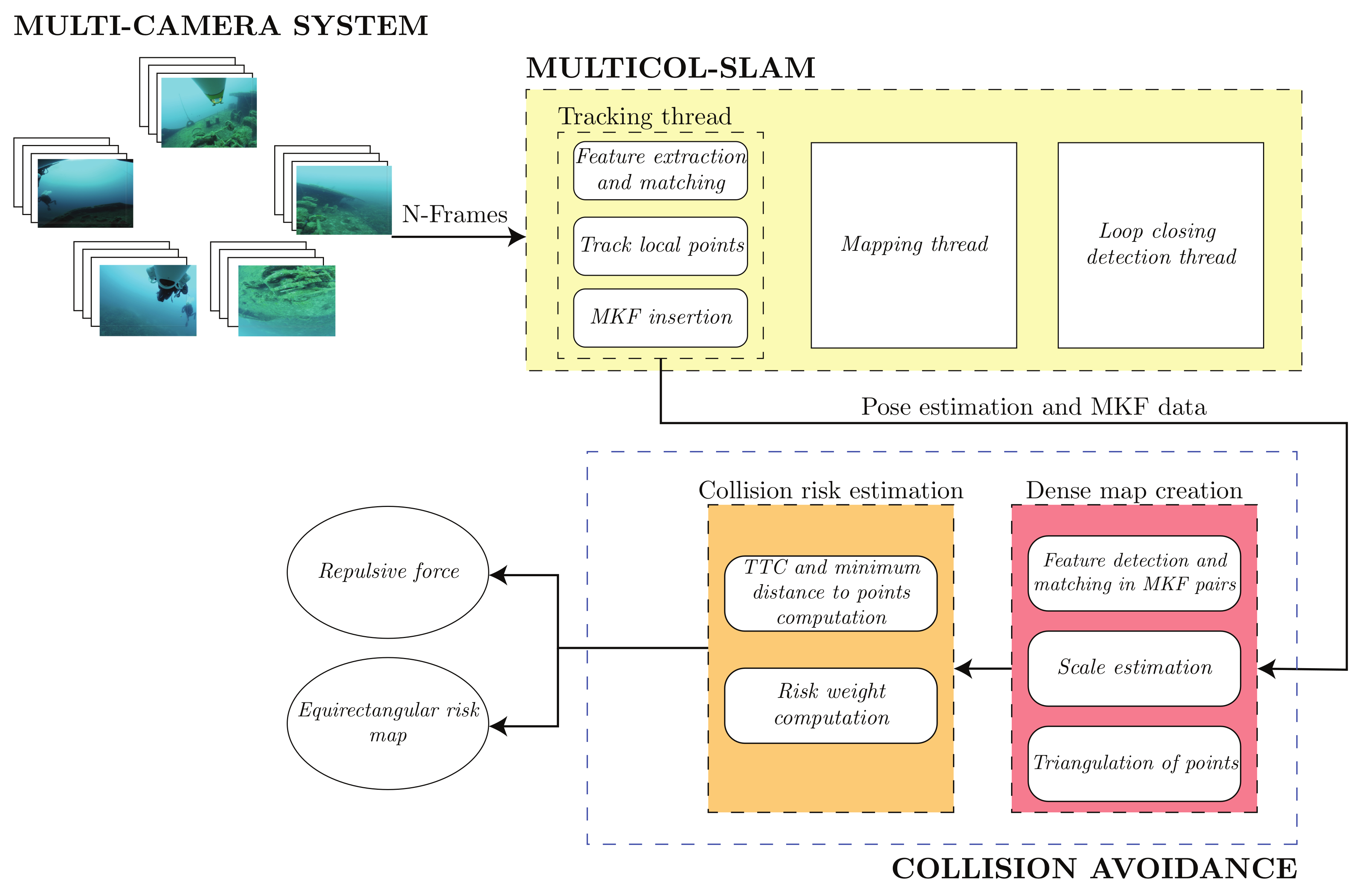

4.1. Framework

- An estimate of the repulsion force which would cause the robot to move away from a potential collision;

- An omnidirectional risk map.

4.1.1. Multi-Camera Tracking System

4.1.2. Tracking and Mapping

- More than 0.5 s (half of the acquisition period) has passed since the last MKF insertion.

- A minimum number of poses must have been successfully tracked since the last re-localization; this number is set to the current frame rate, as in [18].

- At least 50 points are tracked in the current pose.

- The visual change is large enough (i.e., fewer than 90% of the current map points are assigned to the reference MKF).
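The insertion criteria above can be sketched as a single predicate. This is a minimal sketch, not MultiCol-SLAM's code: it assumes that all four conditions must hold at the same time and interprets "current map points" as the map points tracked in the current pose. The names `TrackingState` and `should_insert_mkf` are hypothetical; the thresholds (0.5 s, frame rate, 50 points, 90%) are the ones stated in the text.

```python
from dataclasses import dataclass


@dataclass
class TrackingState:
    """Hypothetical snapshot of the tracker when a new pose is processed."""
    secs_since_last_mkf: float  # time elapsed since the last MKF insertion
    poses_since_reloc: int      # poses tracked successfully since the last re-localization
    tracked_points: int         # map points tracked in the current pose
    points_in_ref_mkf: int      # of those, points also assigned to the reference MKF


def should_insert_mkf(state: TrackingState, frame_rate: float,
                      min_interval: float = 0.5, min_points: int = 50,
                      max_overlap: float = 0.9) -> bool:
    """Return True if a new multi-keyframe (MKF) should be inserted.

    Assumption: all four criteria must be satisfied simultaneously.
    """
    if state.secs_since_last_mkf <= min_interval:   # more than 0.5 s required
        return False
    if state.poses_since_reloc < frame_rate:        # threshold = current frame rate
        return False
    if state.tracked_points < min_points:           # at least 50 tracked points
        return False
    # Visual change: fewer than 90% of the tracked points belong to the reference MKF.
    overlap = state.points_in_ref_mkf / state.tracked_points
    return overlap < max_overlap
```

For example, `should_insert_mkf(TrackingState(1.0, 30, 200, 150), frame_rate=20)` returns `True`, since only 75% of the tracked points are shared with the reference MKF.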

4.2. Collision Avoidance

4.2.1. Dense Map Reconstruction

- Image preprocessing: Underwater scenes often lack texture, so filtering techniques are applied to help extract more features from the scene.

- Feature detection and matching: Feature points can be extracted with any feature detector; they are then matched by exploiting the epipolar constraints derived from the relative transformation matrix between the two frames.

- Scale estimation: A scale correction is applied to the initial 3D reconstruction before the 3D information is used for risk assessment. This step is necessary because MultiCol-SLAM can occasionally produce pose estimates with an inaccurate scale, owing to the inherent scale ambiguity of SfM-based techniques. The scale is corrected using measurements from the AUV odometry: comparing the actual displacement of the robot with the displacement estimated by the SLAM system over the same time interval (ending at time t) yields a scale correction factor, which is then applied to the translation components of the transformation matrices. This ensures that the 3D information is expressed in metric units.

- Triangulation: The 3D point cloud is obtained by computing the projection matrices of both frames and triangulating the matched points, rejecting outliers based on their re-projection error.
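A minimal sketch of the scale correction, assuming the correction factor is the ratio between the norms of the odometry displacement and the SLAM displacement over the same interval, and that it is applied only to the translation part of each 4×4 pose matrix (multiplying the whole matrix would also scale the rotation block and break its orthonormality). The function names are hypothetical.

```python
import numpy as np


def scale_correction_factor(odom_start, odom_end, slam_start, slam_end, eps=1e-9):
    """Ratio between the metric (odometry) and SLAM displacement over one interval."""
    d_odom = np.linalg.norm(np.asarray(odom_end, float) - np.asarray(odom_start, float))
    d_slam = np.linalg.norm(np.asarray(slam_end, float) - np.asarray(slam_start, float))
    if d_slam < eps:
        raise ValueError("SLAM displacement too small to estimate the scale")
    return d_odom / d_slam


def rescale_pose(T, s):
    """Scale only the translation of a 4x4 rigid transform; the rotation is untouched."""
    T_metric = np.array(T, dtype=float)  # copy, so the input pose is not modified
    T_metric[:3, 3] *= s
    return T_metric
```

If the odometry reports a 2 m displacement while the SLAM system reports 0.5 units over the same interval, the factor is 4, and a pose whose translation is (0.5, 0, 0) becomes (2, 0, 0).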

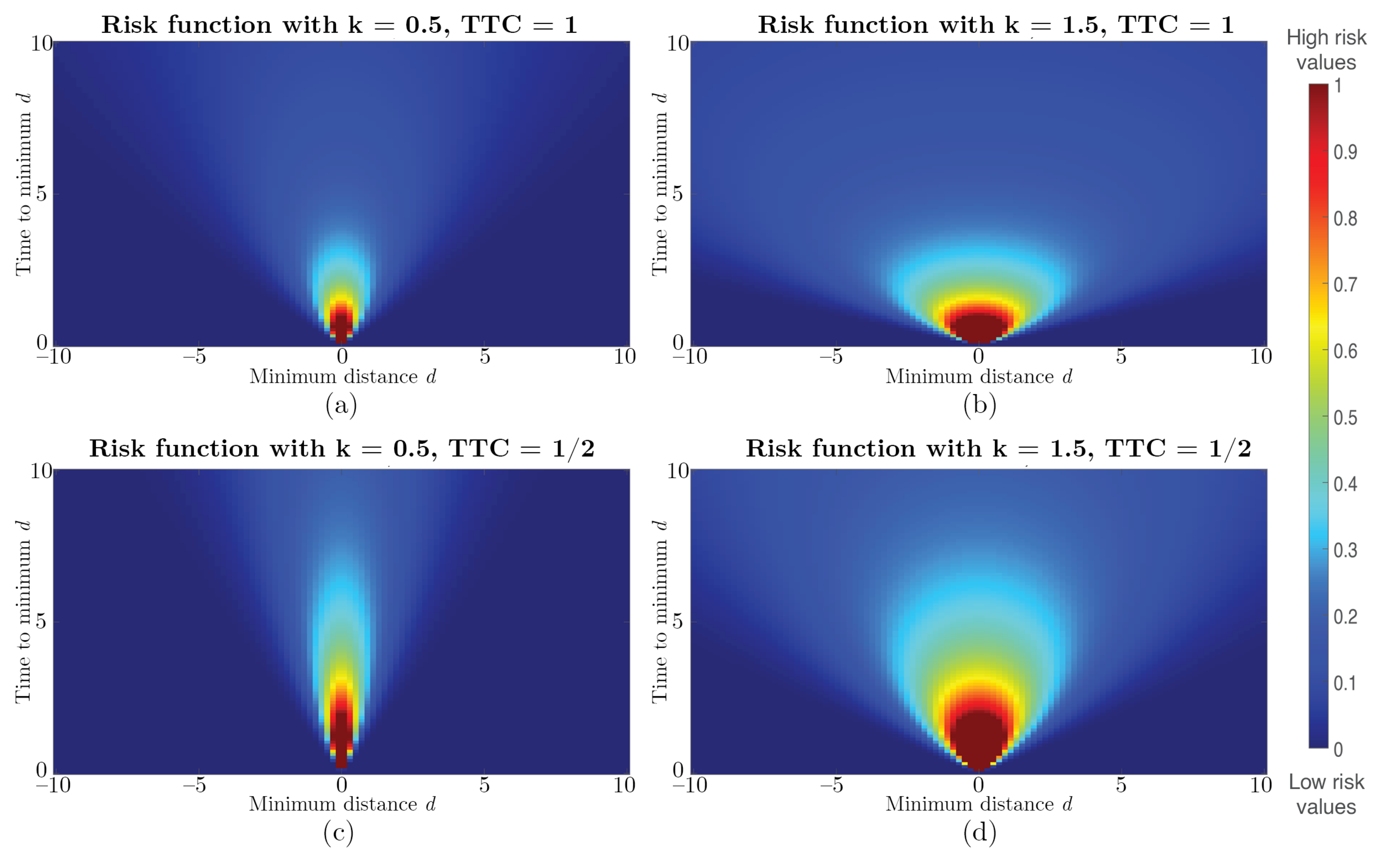
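The matching and triangulation steps can be illustrated with a two-view sketch. It assumes ideal pinhole cameras sharing an intrinsic matrix K and a relative pose X2 = R X1 + t, whereas MultiCol-SLAM works with multi-camera rigs and omnidirectional camera models. The linear (DLT) triangulation, the pixel thresholds, and all function names are illustrative choices, not the authors' implementation.

```python
import numpy as np


def skew(t):
    """Cross-product matrix [t]_x."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])


def fundamental_from_pose(K1, K2, R, t):
    """F such that x2^T F x1 = 0 for pixel matches, given X2 = R X1 + t."""
    E = skew(t) @ R                                   # essential matrix
    return np.linalg.inv(K2).T @ E @ np.linalg.inv(K1)


def epipolar_distance(F, x1, x2):
    """Pixel distance from x2 to the epipolar line of x1 in image 2."""
    l2 = F @ np.append(x1, 1.0)
    return abs(np.append(x2, 1.0) @ l2) / np.hypot(l2[0], l2[1])


def triangulate_dlt(P1, P2, x1, x2):
    """Linear triangulation: null vector of the stacked DLT constraints."""
    A = np.vstack([x1[0] * P1[2] - P1[0],
                   x1[1] * P1[2] - P1[1],
                   x2[0] * P2[2] - P2[0],
                   x2[1] * P2[2] - P2[1]])
    X = np.linalg.svd(A)[2][-1]                       # row of Vh for the smallest singular value
    return X[:3] / X[3]


def reprojection_error(P, X, x):
    p = P @ np.append(X, 1.0)
    return np.linalg.norm(p[:2] / p[2] - np.asarray(x, float))


def match_and_triangulate(K, R, t, pts1, pts2, max_epi=2.0, max_reproj=2.0):
    """Keep matches consistent with the epipolar geometry, triangulate, reject outliers."""
    F = fundamental_from_pose(K, K, R, t)
    P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
    P2 = K @ np.hstack([R, np.reshape(t, (3, 1))])
    cloud = []
    for x1, x2 in zip(pts1, pts2):
        x1, x2 = np.asarray(x1, float), np.asarray(x2, float)
        if epipolar_distance(F, x1, x2) > max_epi:
            continue                                  # violates the epipolar constraint
        X = triangulate_dlt(P1, P2, x1, x2)
        err = max(reprojection_error(P1, X, x1), reprojection_error(P2, X, x2))
        if err <= max_reproj:                         # re-projection outlier rejection
            cloud.append(X)
    return np.array(cloud).reshape(-1, 3)
```

In practice, the epipolar check would constrain the match search itself; here it is applied as a post-filter to keep the sketch short.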

4.3. Risk Estimation

4.4. Avoidance Scheme

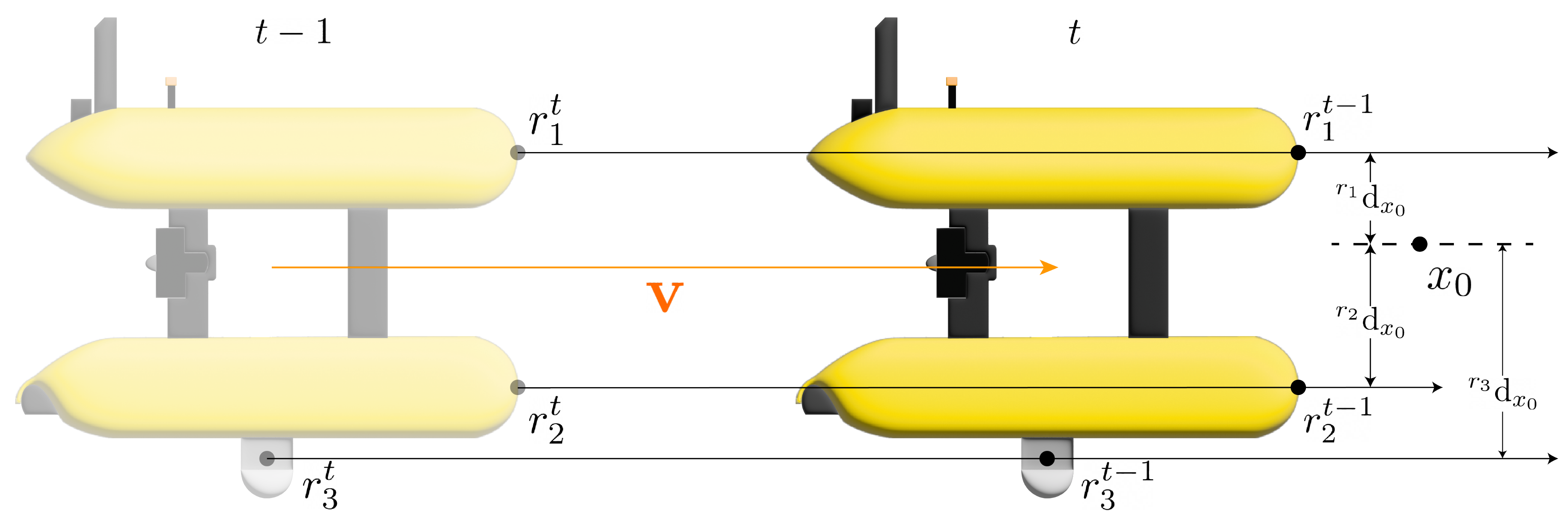

4.4.1. Resultant Repulsive Force

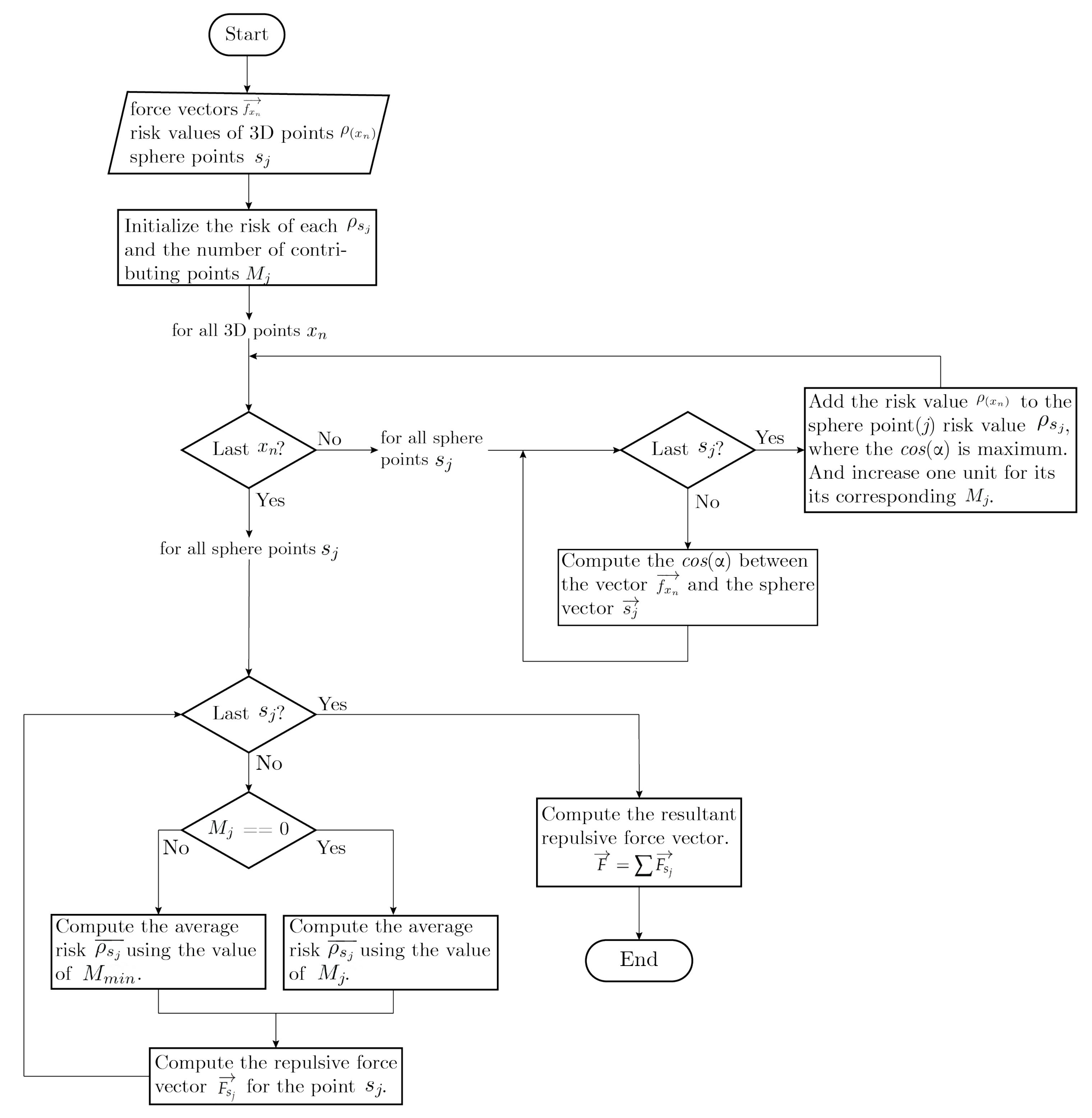

Algorithm 1. Resultant repulsive force computation. Output: resultant repulsive force.

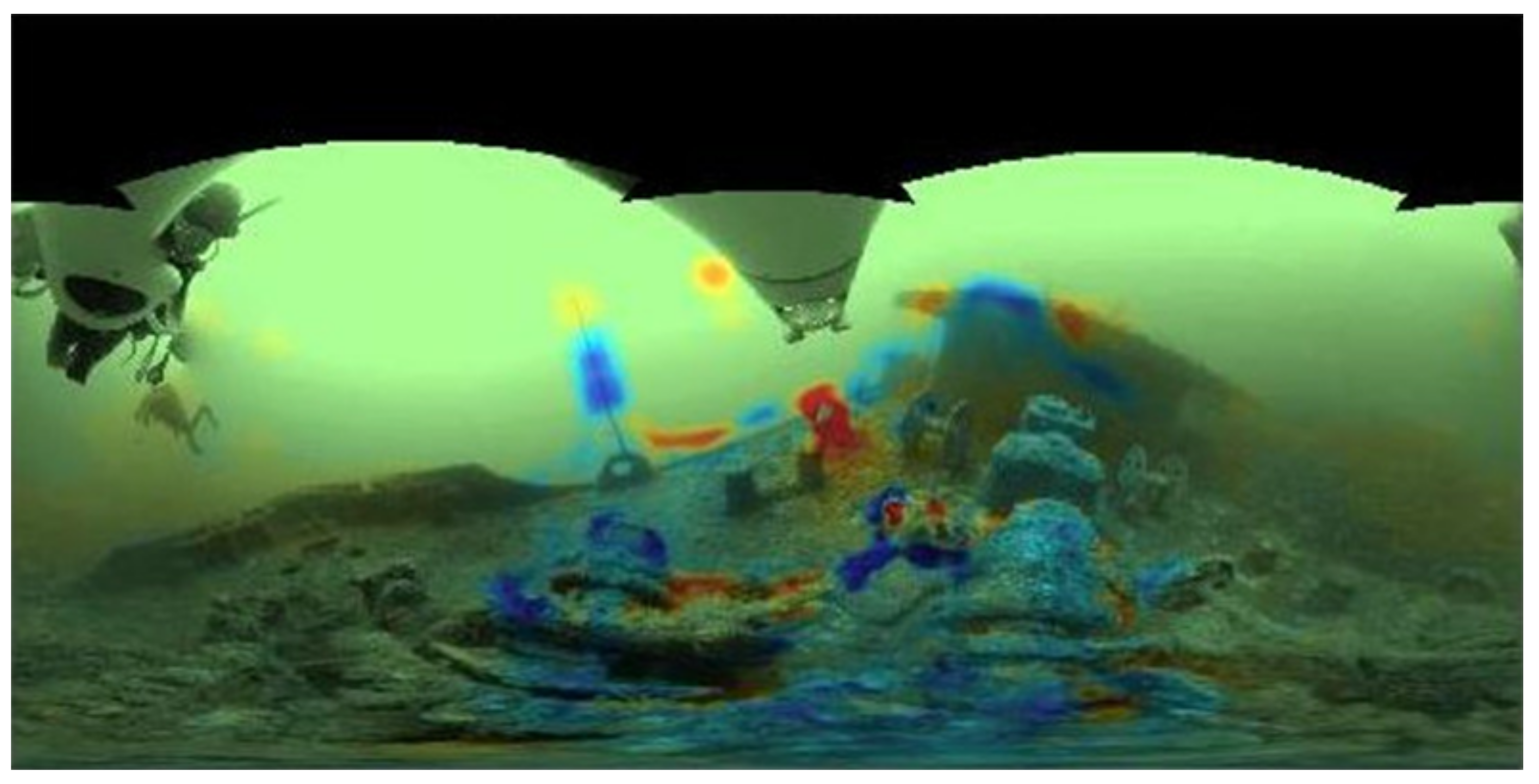
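The exact formulation used in Algorithm 1 is not reproduced here. As a hedged illustration of the general idea only, the sketch below sums classic artificial-potential-field repulsive forces (Khatib's formulation [35]) over the 3D points lying within an influence distance d0 of the robot. This is a swapped-in technique; the optional per-point weights (for instance, risk values), the parameter values, and the function name are assumptions.

```python
import numpy as np


def resultant_repulsive_force(points, robot_pos, d0=3.0, eta=1.0, weights=None):
    """Sum of potential-field repulsive forces acting on the robot.

    points:    (N, 3) obstacle points, e.g. from the dense map (m)
    robot_pos: (3,) robot position in the same frame (m)
    d0:        influence distance; points farther than d0 exert no force
    eta:       repulsion gain
    weights:   optional (N,) per-point weights (hypothetical, e.g. risk values)
    """
    P = np.asarray(points, float).reshape(-1, 3)
    diff = np.asarray(robot_pos, float) - P           # obstacle -> robot vectors
    d = np.linalg.norm(diff, axis=1)
    near = (d > 1e-9) & (d < d0)
    w = np.ones(len(P)) if weights is None else np.asarray(weights, float)
    dn = d[near]
    # Khatib-style magnitude eta * (1/d - 1/d0) / d^2, directed away from each obstacle
    mag = eta * (1.0 / dn - 1.0 / d0) / dn**2
    unit = diff[near] / dn[:, None]
    return (w[near][:, None] * mag[:, None] * unit).sum(axis=0)
```

A single point 1 m ahead along +x, with d0 = 3 m and eta = 1, yields a force of (-2/3, 0, 0), pushing the robot away along -x; two symmetric points cancel out.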

4.4.2. Equirectangular Risk Map Visualization

- Projecting 3D points onto the image: Each 3D point Q is first converted to spherical coordinates, i.e., its radial distance, polar angle, and azimuthal angle. The final image coordinates of Q are then computed through Equation (20), where W and H are the desired width and height of the equirectangular image.

- Smoothing: Directly projecting the points of the dense 3D map yields an unclear representation with spurious risk values in some areas, so the missing values must be interpolated. Smoothing is carried out on a unit sphere to avoid the problems of smoothing directly on the equirectangular image, which is heavily distorted near the poles. Points with known risk values are projected onto this sphere, and each pixel of the equirectangular image is then projected onto the same sphere. The risk of each pixel is computed as the average risk of the N nearest points with known risk, weighted by their distance to the projected pixel. To limit the spread of risk into areas without known information, the interpolation is restricted to pixels within a certain distance of the nearest point with known risk.
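The projection and smoothing steps can be sketched with NumPy. The axis conventions (Z as the polar axis, azimuth computed with atan2(Y, X) and shifted so that an azimuth of -π falls on the left image edge) are assumptions and may differ from those of Equation (20). Inverse-distance weighting, the chord distance on the unit sphere, the parameter values, and the function names are likewise illustrative, and the brute-force nearest-neighbour search is only suitable for small images.

```python
import numpy as np


def project_equirect(points, W, H):
    """Map 3D points (N, 3) to spherical and equirectangular pixel coordinates."""
    P = np.atleast_2d(np.asarray(points, float))
    r = np.linalg.norm(P, axis=1)                            # radial distance
    theta = np.arccos(np.clip(P[:, 2] / r, -1.0, 1.0))       # polar angle in [0, pi]
    phi = np.arctan2(P[:, 1], P[:, 0])                       # azimuth in (-pi, pi]
    u = (phi + np.pi) / (2.0 * np.pi) * W                    # column
    v = theta / np.pi * H                                    # row
    return r, u, v


def pixel_directions(W, H):
    """Unit vectors for every pixel centre (inverse of the mapping above)."""
    uu, vv = np.meshgrid(np.arange(W) + 0.5, np.arange(H) + 0.5)  # shapes (H, W)
    phi = uu / W * 2.0 * np.pi - np.pi
    theta = vv / H * np.pi
    return np.stack([np.sin(theta) * np.cos(phi),
                     np.sin(theta) * np.sin(phi),
                     np.cos(theta)], axis=-1)


def smooth_risk_map(points, risks, W, H, n_nearest=4, max_dist=0.2, eps=1e-6):
    """Inverse-distance-weighted risk interpolation on the unit sphere.

    Pixels whose nearest known point is farther than max_dist (chord length on
    the unit sphere) are left as NaN, so risk does not spread into unknown areas.
    """
    P = np.asarray(points, float)
    known = P / np.linalg.norm(P, axis=1, keepdims=True)     # project onto unit sphere
    risks = np.asarray(risks, float)
    dirs = pixel_directions(W, H).reshape(-1, 3)
    dist = np.linalg.norm(dirs[:, None, :] - known[None, :, :], axis=2)
    k = min(n_nearest, len(known))
    idx = np.argsort(dist, axis=1)[:, :k]                    # k nearest known points
    d = np.take_along_axis(dist, idx, axis=1)
    w = 1.0 / (d + eps)
    risk_map = (w * risks[idx]).sum(axis=1) / w.sum(axis=1)
    risk_map[d[:, 0] > max_dist] = np.nan
    return risk_map.reshape(H, W)
```

With a single known point on the +X axis and a 36 × 18 image, `project_equirect` places it at pixel coordinates (18, 9), the image centre, and `smooth_risk_map` assigns its risk only to the pixels around that location.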

5. Results

5.1. Offline Test

5.1.1. Dataset

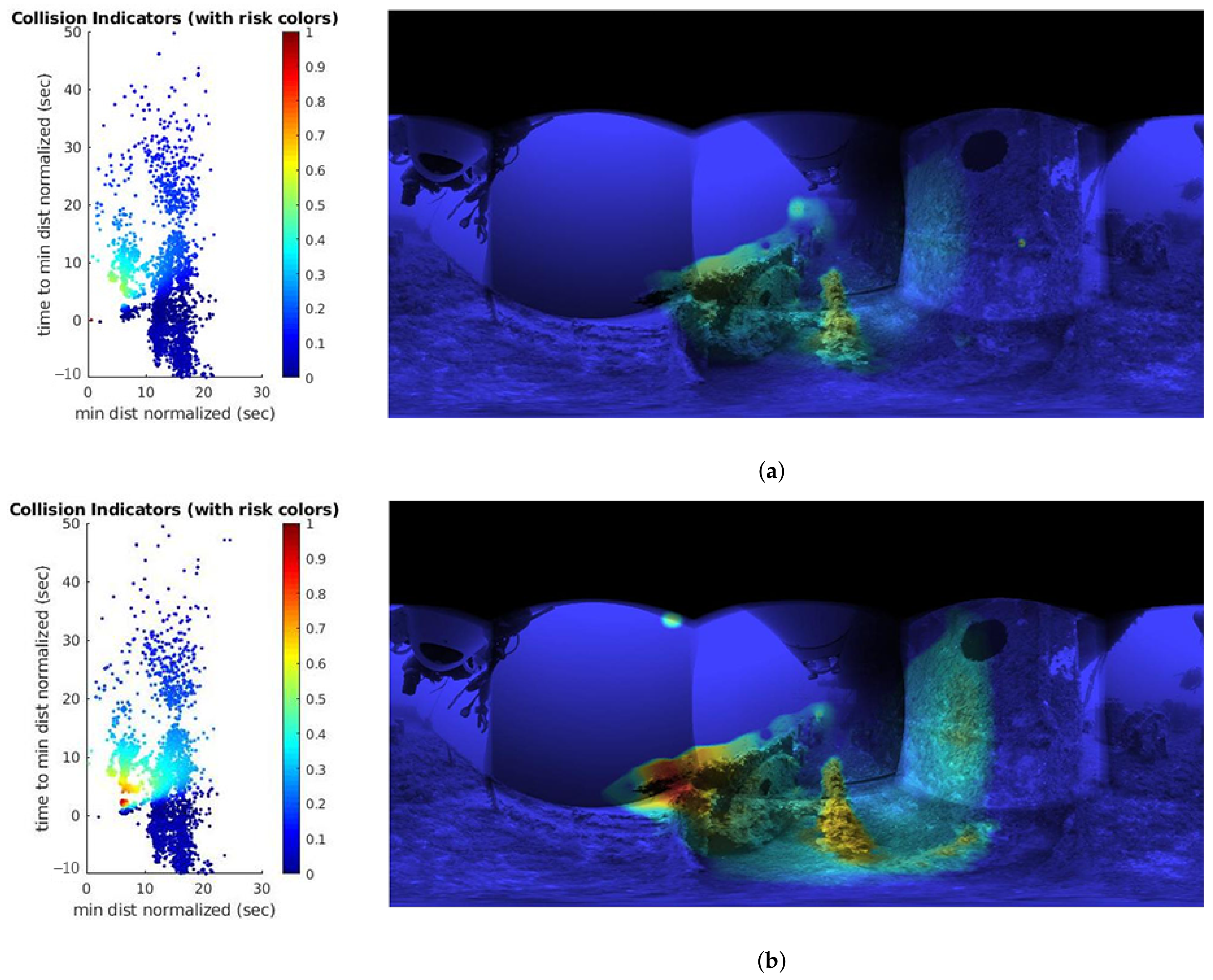

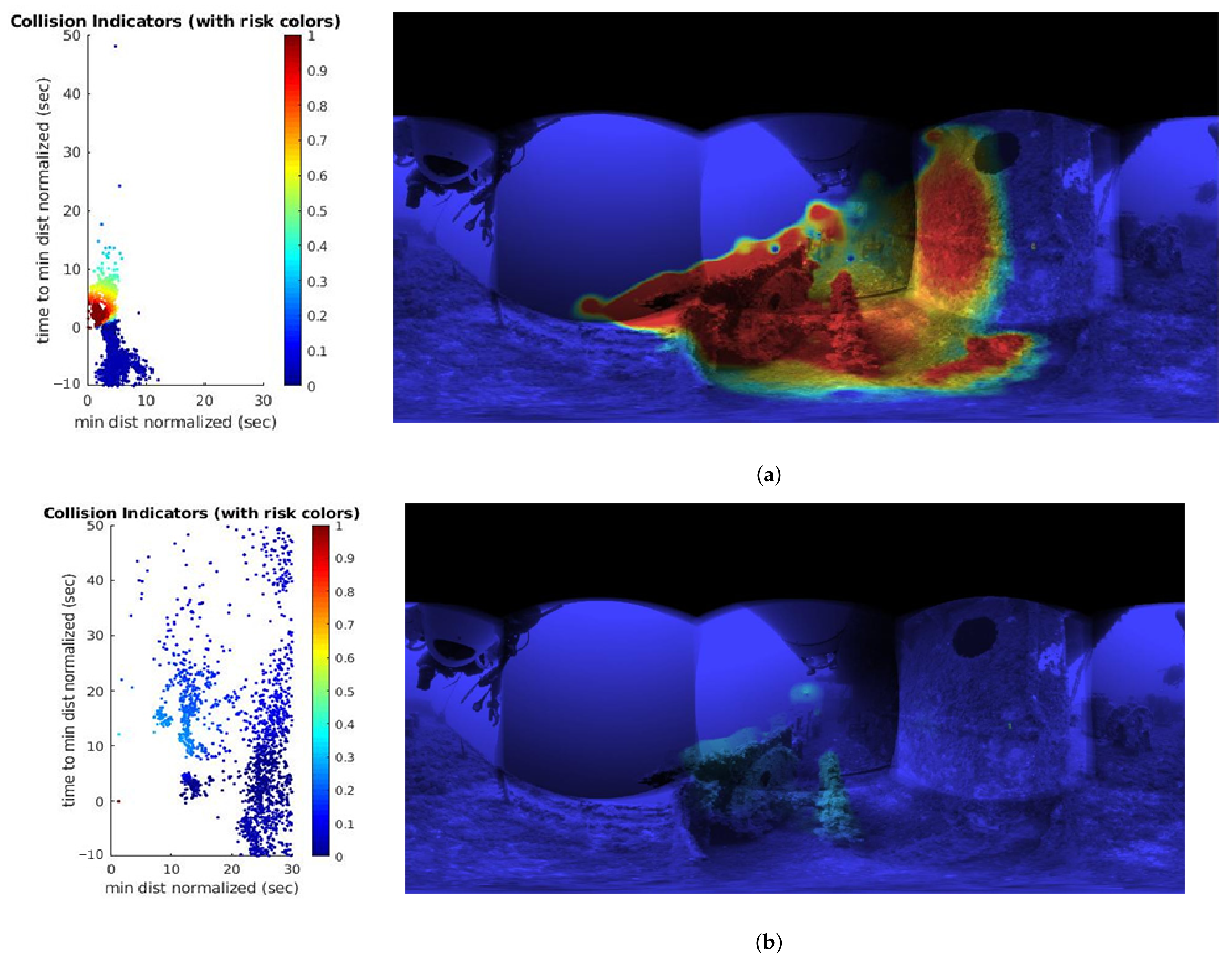

5.1.2. Effects of Parameter k on Risk Computation

5.1.3. Effects of Vehicle Speed

5.1.4. Resultant Repulsive Force Behavior

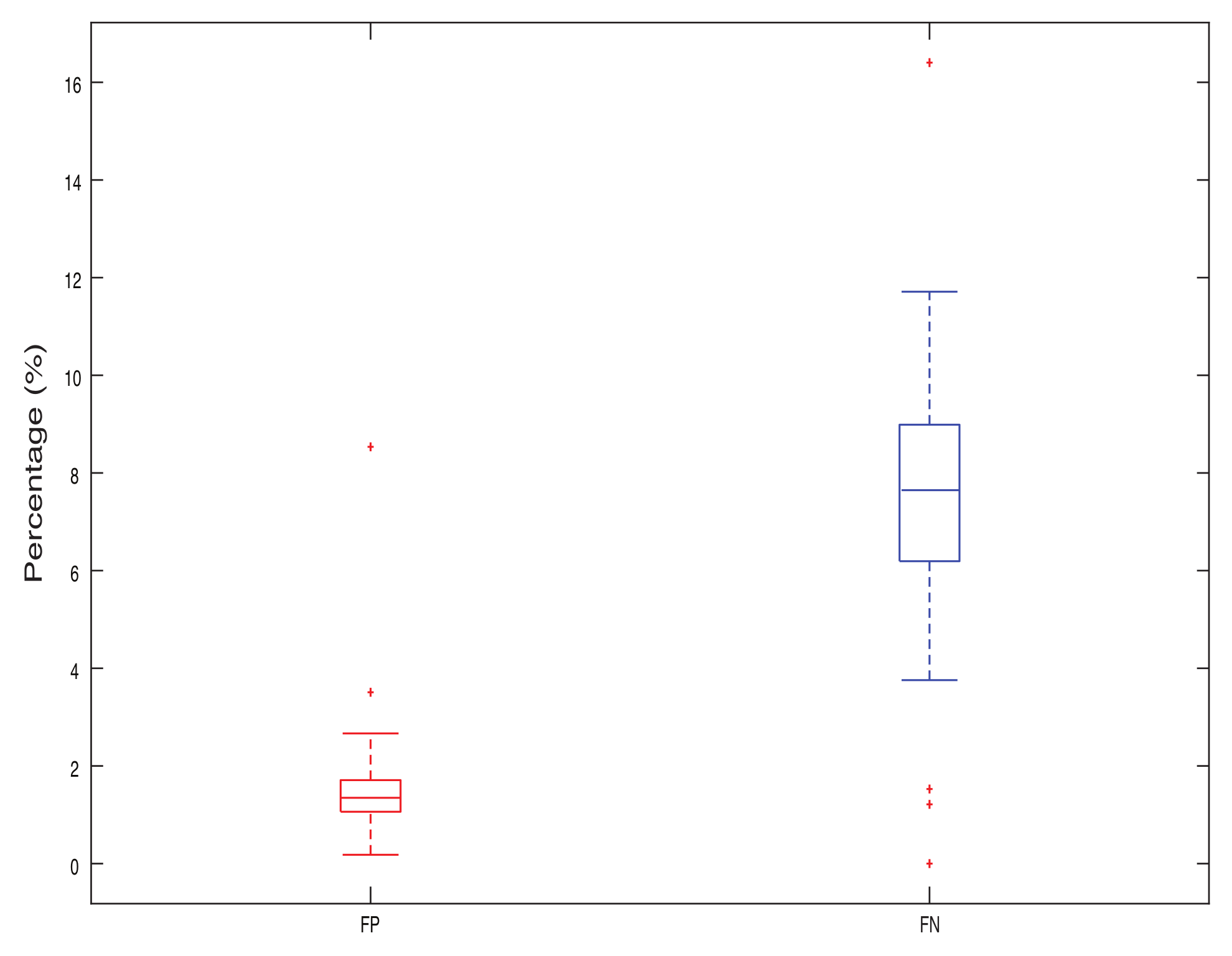

5.1.5. Ground Truth Comparison

5.2. Real-Time Simulation Testing

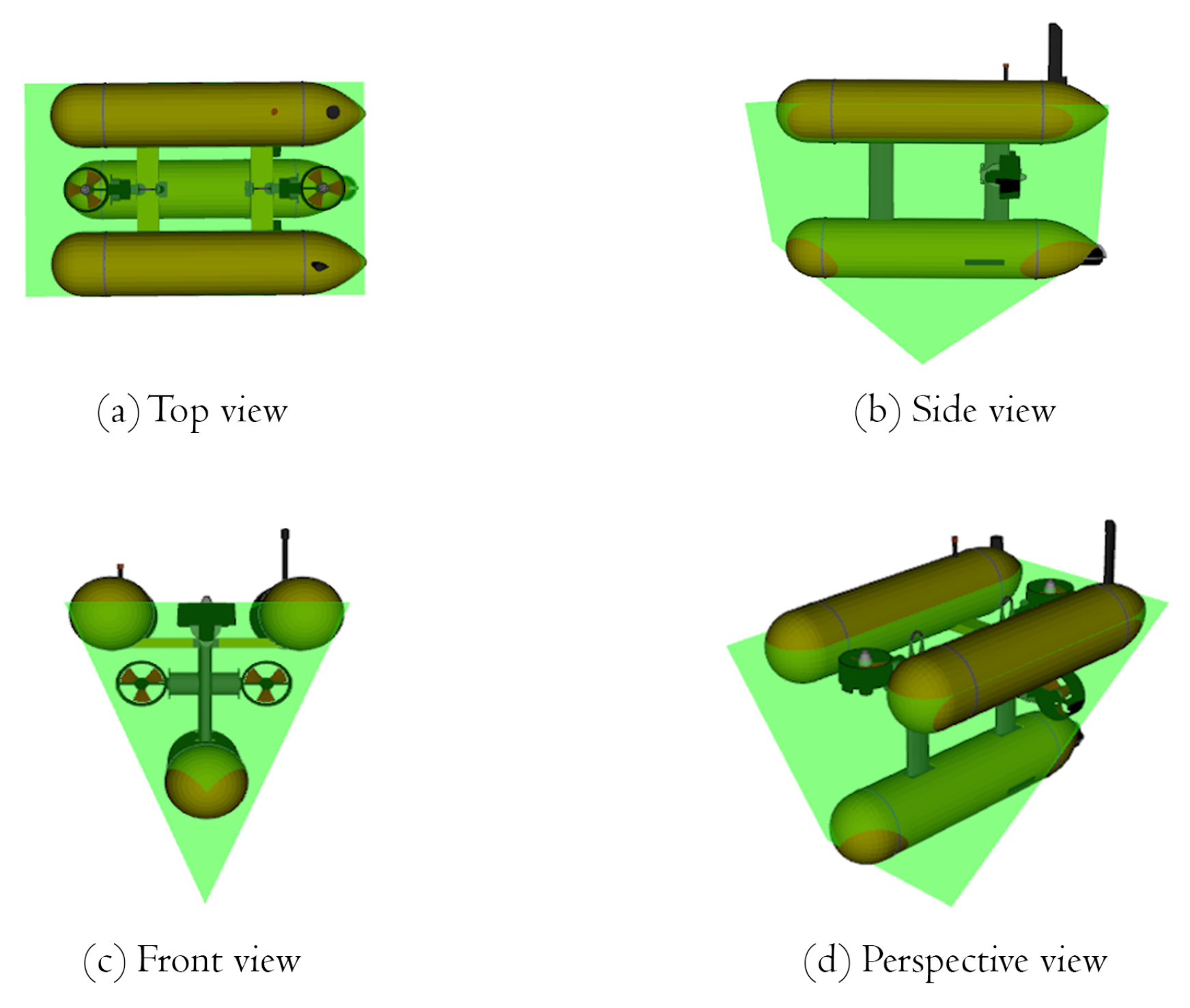

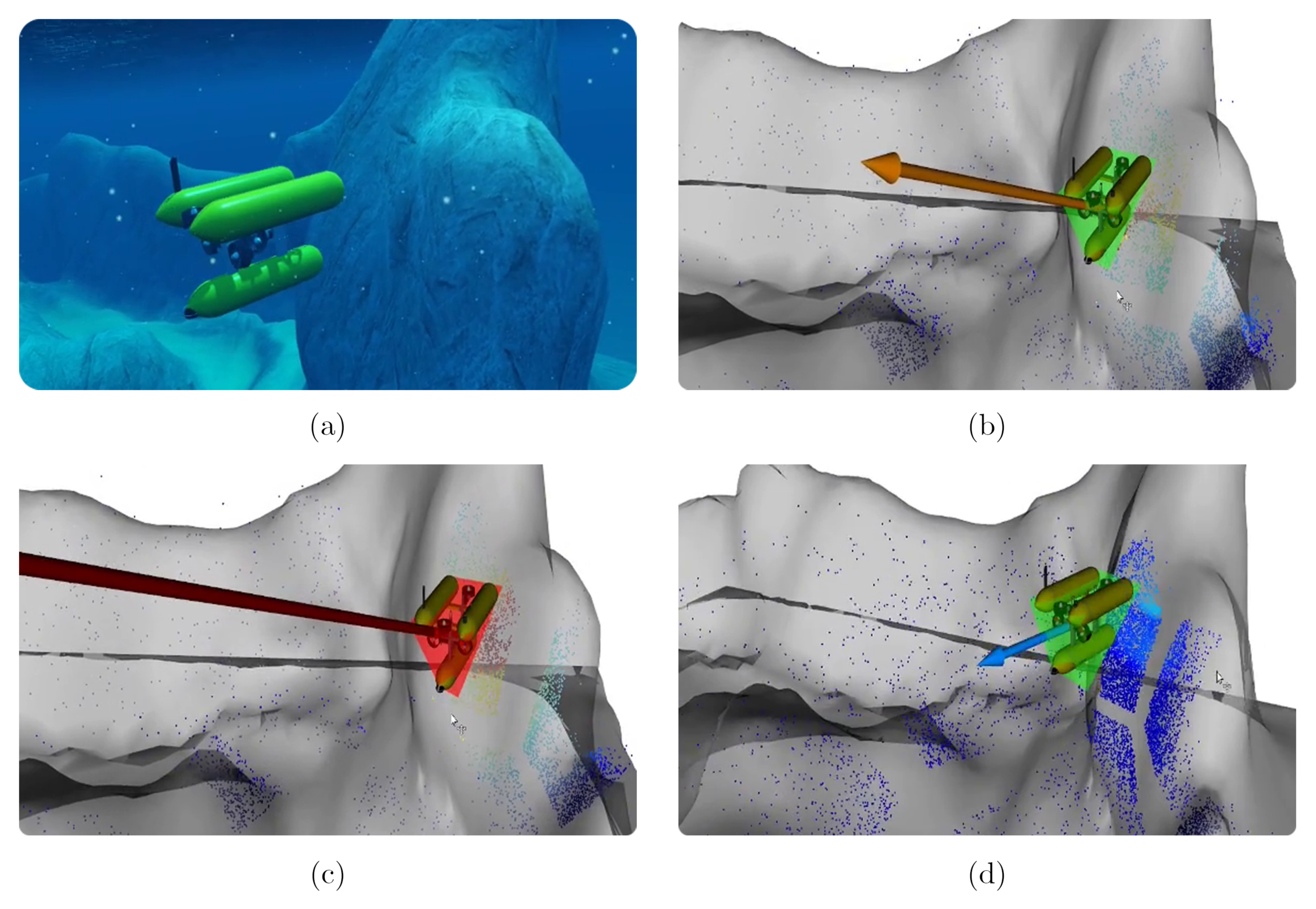

5.2.1. Realistic Simulated Experiment in Stonefish

5.2.2. System Performance in Simulation

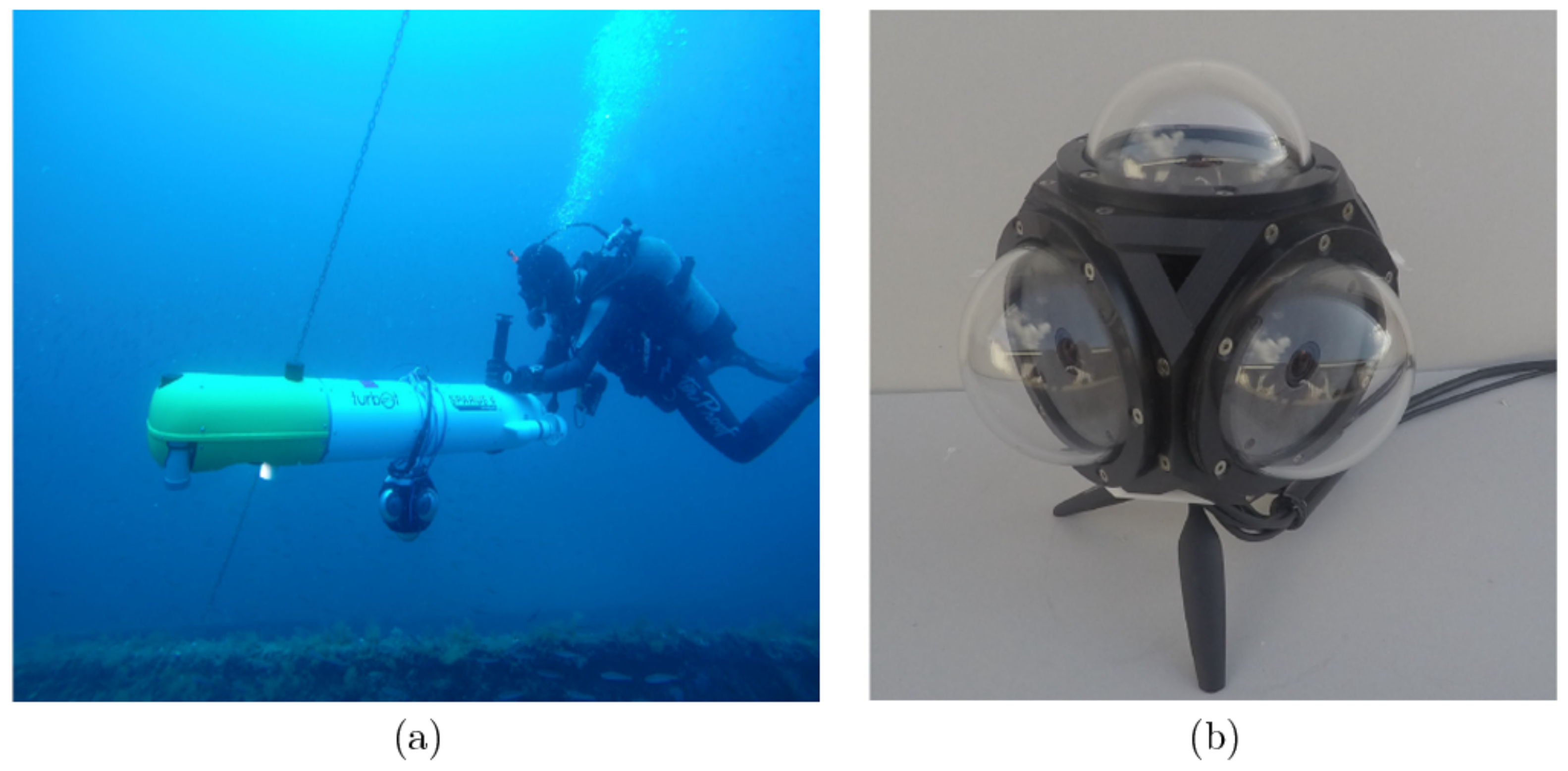

5.3. Real-Time AUV Deployment

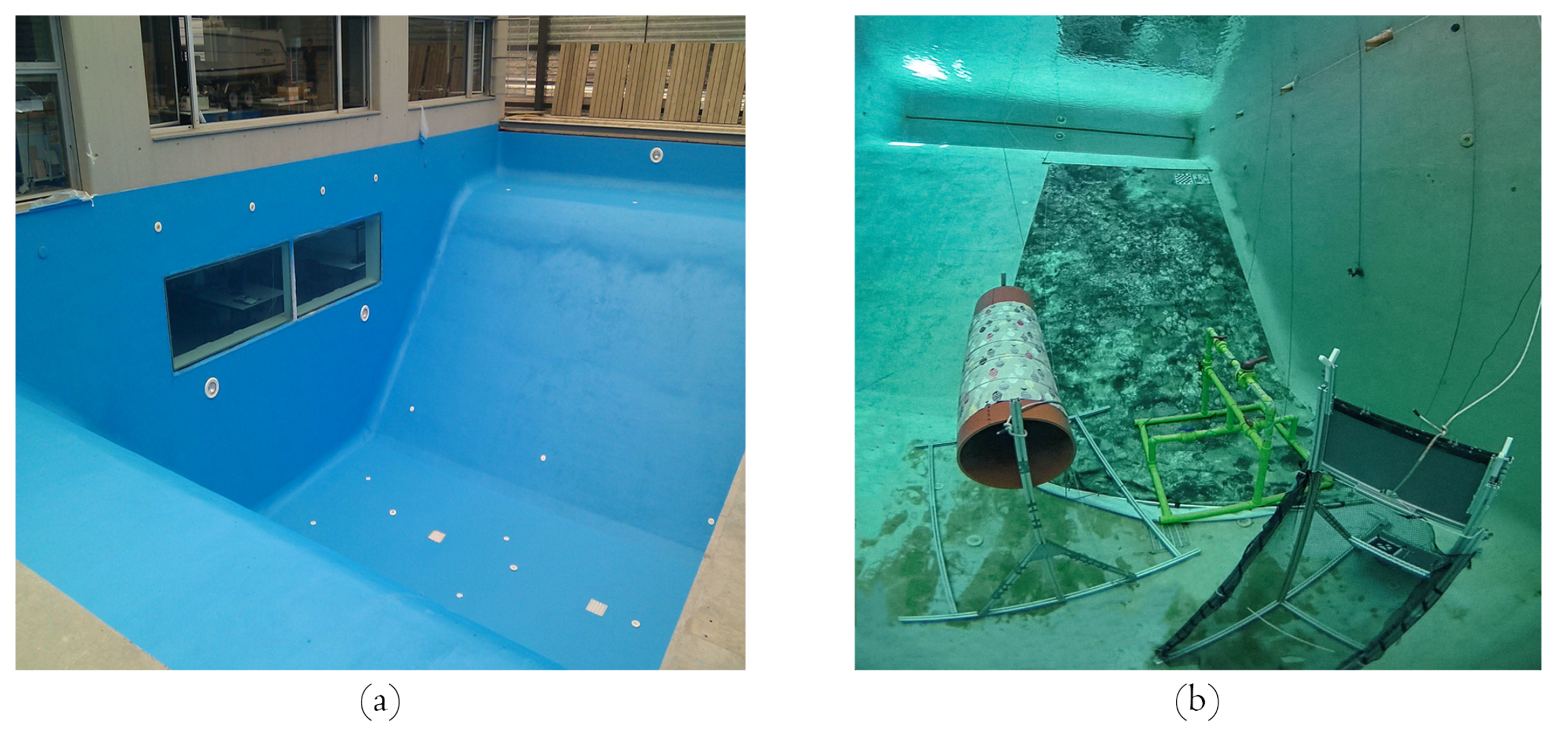

Experimental Setup

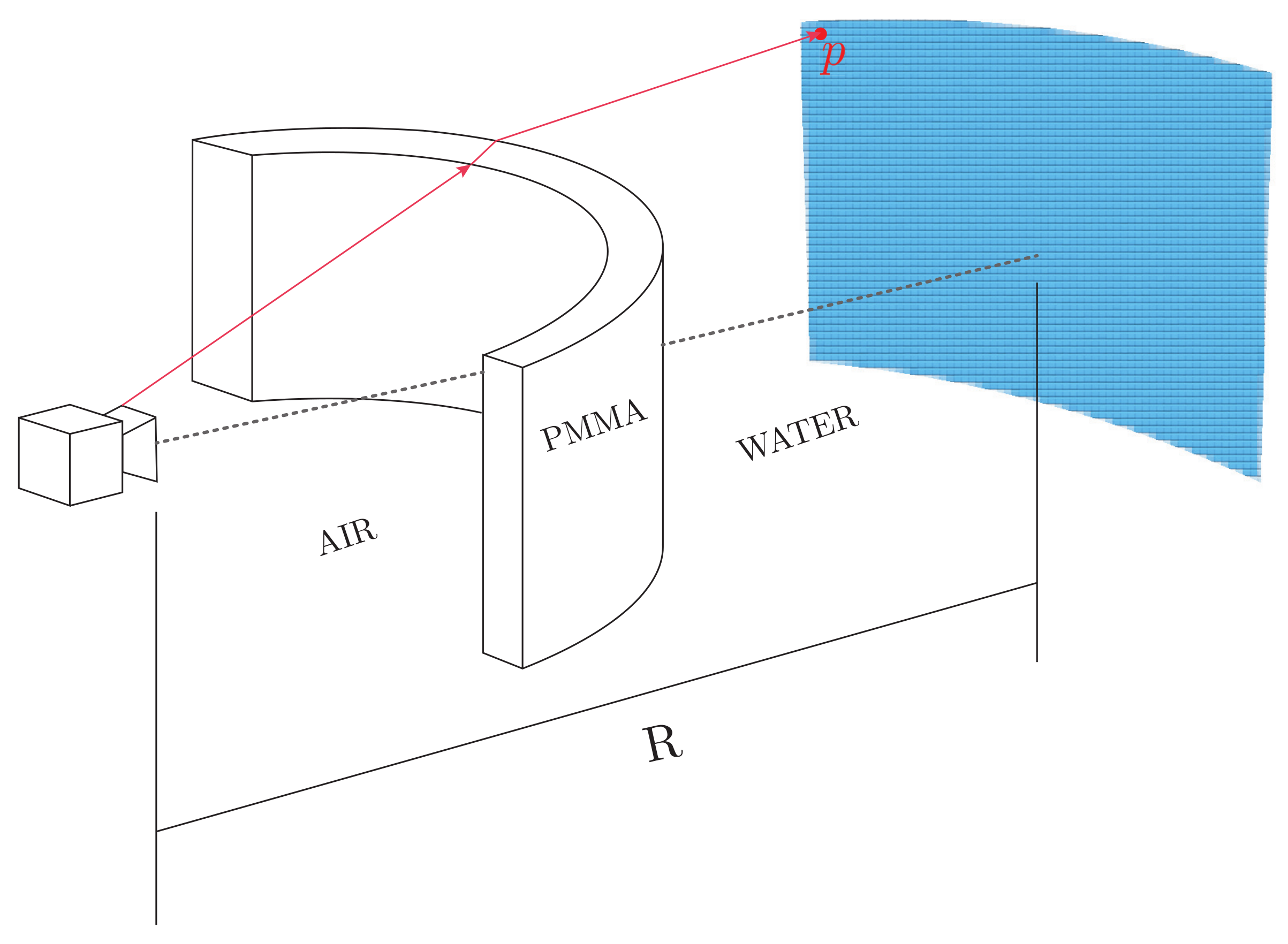

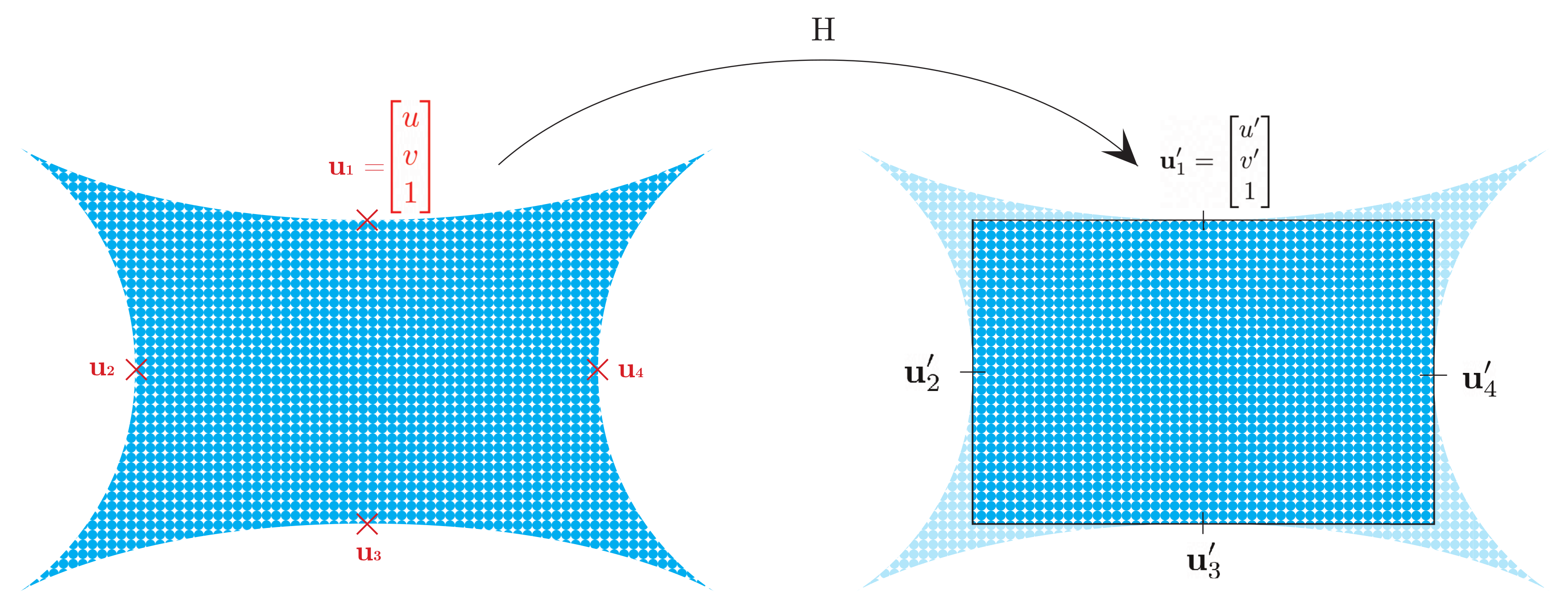

5.4. Camera Housing Image Distortion Correction

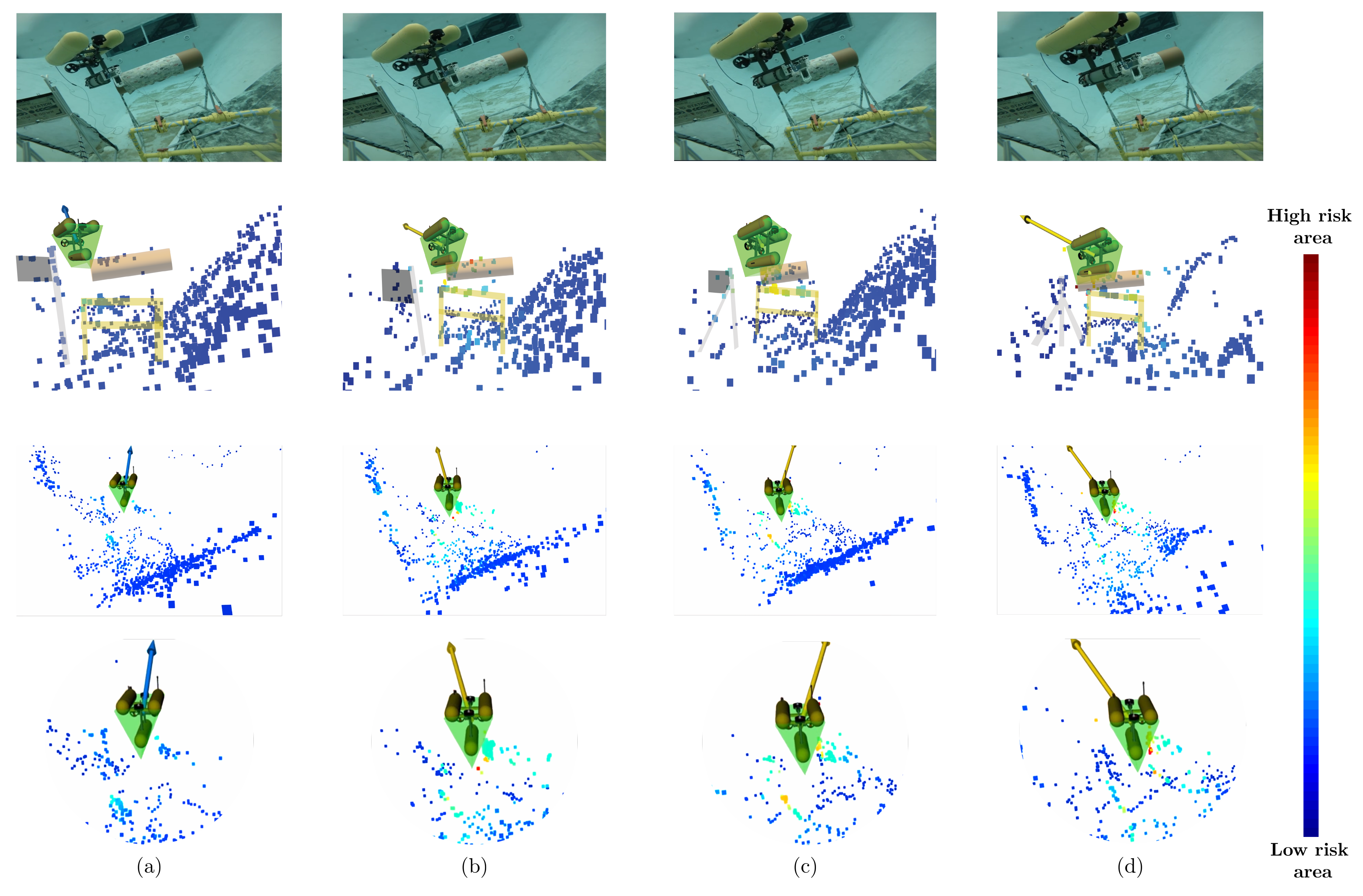

5.5. Evaluated Setups

5.5.1. Velocity Changes

5.5.2. Navigation through Narrow Passages

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Taketomi, T.; Uchiyama, H.; Ikeda, S. Visual SLAM algorithms: A survey from 2010 to 2016. IPSJ Trans. Comput. Vis. Appl. 2017, 9, 16.

- Fuentes-Pacheco, J.; Ruiz-Ascencio, J.; Rendón-Mancha, J.M. Visual simultaneous localization and mapping: A survey. Artif. Intell. Rev. 2015, 43, 55–81.

- Huletski, A.; Kartashov, D.; Krinkin, K. Evaluation of the modern visual SLAM methods. In Proceedings of the 2015 Artificial Intelligence and Natural Language and Information Extraction, Social Media and Web Search FRUCT Conference (AINL-ISMW FRUCT), St. Petersburg, Russia, 9–14 November 2015; IEEE: St. Petersburg, Russia, 2015; pp. 19–25.

- Yousif, K.; Bab-Hadiashar, A.; Hoseinnezhad, R. An Overview to Visual Odometry and Visual SLAM: Applications to Mobile Robotics. Intell. Ind. Syst. 2015, 1, 289–311.

- Saputra, M.R.U.; Markham, A.; Trigoni, N. Visual SLAM and Structure from Motion in Dynamic Environments: A Survey. ACM Comput. Surv. 2018, 51, 1–36.

- Davison, A.J. Real-time simultaneous localisation and mapping with a single camera. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; IEEE: Nice, France, 2003; Volume 2, pp. 1403–1410.

- Klein, G.; Murray, D. Parallel Tracking and Mapping for Small AR Workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; IEEE: Nara, Japan, 2007; pp. 225–234.

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Lim, H.; Lim, J.; Kim, H.J. Real-time 6-DOF monocular visual SLAM in a large-scale environment. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–5 June 2014; IEEE: Hong Kong, China, 2014; pp. 1532–1539.

- Pirker, K.; Ruther, M.; Bischof, H. CD SLAM - Continuous localization and mapping in a dynamic world. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; IEEE: San Francisco, CA, USA, 2011; pp. 3990–3997.

- Mur-Artal, R.; Tardos, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual-Inertial, and Multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

- Engel, J.; Stuckler, J.; Cremers, D. Large-scale direct SLAM with stereo cameras. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; IEEE: Hamburg, Germany, 2015; pp. 1935–1942.

- Caruso, D.; Engel, J.; Cremers, D. Large-scale direct SLAM for omnidirectional cameras. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; IEEE: Hamburg, Germany, 2015; pp. 141–148.

- Gamallo, C.; Mucientes, M.; Regueiro, C. Omnidirectional visual SLAM under severe occlusions. Robot. Auton. Syst. 2015, 65, 76–87.

- Liu, S.; Guo, P.; Feng, L.; Yang, A. Accurate and Robust Monocular SLAM with Omnidirectional Cameras. Sensors 2019, 19, 4494.

- Forster, C.; Zhang, Z.; Gassner, M.; Werlberger, M.; Scaramuzza, D. SVO: Semidirect Visual Odometry for Monocular and Multicamera Systems. IEEE Trans. Robot. 2017, 33, 249–265.

- Urban, S.; Hinz, S. MultiCol-SLAM - A Modular Real-Time Multi-Camera SLAM System. arXiv 2016, arXiv:1610.07336.

- Kaess, M.; Dellaert, F. Probabilistic structure matching for visual SLAM with a multi-camera rig. Comput. Vis. Image Underst. 2010, 114, 286–296.

- Zou, D.; Tan, P. CoSLAM: Collaborative Visual SLAM in Dynamic Environments. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 354–366.

- Harmat, A.; Sharf, I.; Trentini, M. Parallel Tracking and Mapping with Multiple Cameras on an Unmanned Aerial Vehicle. In Intelligent Robotics and Applications; Hutchison, D., Kanade, T., Kittler, J., Kleinberg, J.M., Mattern, F., Mitchell, J.C., Naor, M., Nierstrasz, O., Pandu Rangan, C., Steffen, B., Eds.; Series Title: Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7506, pp. 421–432.

- Harmat, A.; Trentini, M.; Sharf, I. Multi-Camera Tracking and Mapping for Unmanned Aerial Vehicles in Unstructured Environments. J. Intell. Robot. Syst. 2015, 78, 291–317.

- Github/Urbste/MultiCol-SLAM. Available online: https://github.com/urbste/MultiCol-SLAM (accessed on 2 July 2021).

- Jiménez, P.; Thomas, F.; Torras, C. 3D collision detection: A survey. Comput. Graph. 2001, 25, 269–285.

- Kockara, S.; Halic, T.; Iqbal, K.; Bayrak, C.; Rowe, R. Collision detection: A survey. In Proceedings of the 2007 IEEE International Conference on Systems, Man and Cybernetics, Montreal, QC, Canada, 7–10 October 2007; IEEE: Montreal, QC, Canada, 2007; pp. 4046–4051.

- Haddadin, S.; De Luca, A.; Albu-Schaffer, A. Robot Collisions: A Survey on Detection, Isolation, and Identification. IEEE Trans. Robot. 2017, 33, 1292–1312.

- Heo, Y.J.; Kim, D.; Lee, W.; Kim, H.; Park, J.; Chung, W.K. Collision Detection for Industrial Collaborative Robots: A Deep Learning Approach. IEEE Robot. Autom. Lett. 2019, 4, 740–746.

- Nie, Q.; Zhao, Y.; Xu, L.; Li, B. A Survey of Continuous Collision Detection. In Proceedings of the 2020 2nd International Conference on Information Technology and Computer Application (ITCA), Guangzhou, China, 18–20 December 2020; IEEE: Guangzhou, China, 2020; pp. 252–257.

- Ebert, D.; Henrich, D. Safe human-robot-cooperation: Image-based collision detection for industrial robots. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Lausanne, Switzerland, 2002; IEEE: Lausanne, Switzerland, 2002; Volume 2.

- Takahashi, O.; Schilling, R. Motion planning in a plane using generalized Voronoi diagrams. IEEE Trans. Robot. Autom. 1989, 5, 143–150.

- Bhattacharya, P.; Gavrilova, M. Roadmap-Based Path Planning - Using the Voronoi Diagram for a Clearance-Based Shortest Path. IEEE Robot. Autom. Mag. 2008, 15, 58–66.

- Masehian, E.; Amin-Naseri, M.R. A voronoi diagram-visibility graph-potential field compound algorithm for robot path planning. J. Robot. Syst. 2004, 21, 275–300.

- Pandey, A. Mobile Robot Navigation and Obstacle Avoidance Techniques: A Review. Int. Robot. Autom. J. 2017, 2, 00022.

- Khan, M.T.R.; Muhammad Saad, M.; Ru, Y.; Seo, J.; Kim, D. Aspects of unmanned aerial vehicles path planning: Overview and applications. Int. J. Commun. Syst. 2021, 34, e4827.

- Khatib, O. Real-time obstacle avoidance for manipulators and mobile robots. In Proceedings of the 1985 IEEE International Conference on Robotics and Automation, St. Louis, MO, USA, 25–28 March 1985; Institute of Electrical and Electronics Engineers: St. Louis, MO, USA, 1985; Volume 2, pp. 500–505.

- Borenstein, J.; Koren, Y. The vector field histogram-fast obstacle avoidance for mobile robots. IEEE Trans. Robot. Autom. 1991, 7, 278–288.

- Fox, D.; Burgard, W.; Thrun, S. The dynamic window approach to collision avoidance. IEEE Robot. Autom. Mag. 1997, 4, 23–33.

- Borenstein, J.; Koren, Y. Real-time obstacle avoidance for fast mobile robots. IEEE Trans. Syst. Man Cybern. 1989, 19, 1179–1187.

- Cherubini, A.; Spindler, F.; Chaumette, F. Autonomous Visual Navigation and Laser-Based Moving Obstacle Avoidance. IEEE Trans. Intell. Transp. Syst. 2014, 15, 2101–2110.

- Yu, Y.; Wu, Z.; Cao, Z.; Pang, L.; Ren, L.; Zhou, C. A laser-based multi-robot collision avoidance approach in unknown environments. Int. J. Adv. Robot. Syst. 2018, 15, 172988141875910.

- Flacco, F.; Kroger, T.; De Luca, A.; Khatib, O. A depth space approach to human-robot collision avoidance. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; IEEE: Saint Paul, MN, USA, 2012; pp. 338–345.

- Rehmatullah, F.; Kelly, J. Vision-Based Collision Avoidance for Personal Aerial Vehicles Using Dynamic Potential Fields. In Proceedings of the 2015 12th Conference on Computer and Robot Vision, Halifax, NS, Canada, 3–5 June 2015; IEEE: Halifax, NS, Canada, 2015; pp. 297–304.

- Perez, E.; Winger, A.; Tran, A.; Garcia-Paredes, C.; Run, N.; Keti, N.; Bhandari, S.; Raheja, A. Autonomous Collision Avoidance System for a Multicopter using Stereoscopic Vision. In Proceedings of the 2018 International Conference on Unmanned Aircraft Systems (ICUAS), Dallas, TX, USA, 12–15 June 2018; IEEE: Dallas, TX, USA, 2018; pp. 579–588.

- Lefèvre, S.; Vasquez, D.; Laugier, C. A survey on motion prediction and risk assessment for intelligent vehicles. ROBOMECH J. 2014, 1, 1.

- Pham, H.; Smolka, S.A.; Stoller, S.D.; Phan, D.; Yang, J. A survey on unmanned aerial vehicle collision avoidance systems. arXiv 2015, arXiv:1508.07723.

- Ammoun, S.; Nashashibi, F. Real time trajectory prediction for collision risk estimation between vehicles. In Proceedings of the 2009 IEEE 5th International Conference on Intelligent Computer Communication and Processing, Cluj-Napoca, Romania, 27–29 August 2009; IEEE: Cluj-Napoca, Romania, 2009; pp. 417–422.

- Pundlik, S.; Peli, E.; Luo, G. Time to Collision and Collision Risk Estimation from Local Scale and Motion. In Advances in Visual Computing; Bebis, G., Boyle, R., Parvin, B., Koracin, D., Wang, S., Kyungnam, K., Benes, B., Moreland, K., Borst, C., DiVerdi, S., Eds.; Series Title: Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6938, pp. 728–737.

- Phillips, D.J.; Aragon, J.C.; Roychowdhury, A.; Madigan, R.; Chintakindi, S.; Kochenderfer, M.J. Real-time Prediction of Automotive Collision Risk from Monocular Video. arXiv 2019, arXiv:1902.01293.

- Berthelot, A.; Tamke, A.; Dang, T.; Breuel, G. A novel approach for the probabilistic computation of Time-To-Collision. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium, Madrid, Spain, 3–7 June 2012; IEEE: Alcalá de Henares, Madrid, Spain, 2012; pp. 1173–1178.

- Rummelhard, L.; Nègre, A.; Perrollaz, M.; Laugier, C. Probabilistic Grid-Based Collision Risk Prediction for Driving Application. In Experimental Robotics; Hsieh, M.A., Khatib, O., Kumar, V., Eds.; Series Title: Springer Tracts in Advanced Robotics; Springer: Cham, Switzerland, 2016; Volume 109, pp. 821–834.

- Li, G.; Yang, Y.; Zhang, T.; Qu, X.; Cao, D.; Cheng, B.; Li, K. Risk assessment based collision avoidance decision-making for autonomous vehicles in multi-scenarios. Transp. Res. Part C Emerg. Technol. 2021, 122, 102820.

- Strickland, M.; Fainekos, G.; Amor, H.B. Deep Predictive Models for Collision Risk Assessment in Autonomous Driving. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; IEEE: Brisbane, Australia, 2018; pp. 4685–4692.

- Bansal, A.; Singh, J.; Verucchi, M.; Caccamo, M.; Sha, L. Risk Ranked Recall: Collision Safety Metric for Object Detection Systems in Autonomous Vehicles. In Proceedings of the 2021 10th Mediterranean Conference on Embedded Computing (MECO), Budva, Montenegro, 7–10 June 2021; pp. 1–4.

- Hernández, J.D.; Vallicrosa, G.; Vidal, E.; Pairet, E.; Carreras, M.; Ridao, P. On-line 3D Path Planning for Close-proximity Surveying with AUVs. IFAC-PapersOnLine 2015, 48, 50–55.

- Hernandez, J.D.; Vidal, E.; Vallicrosa, G.; Galceran, E.; Carreras, M. Online path planning for autonomous underwater vehicles in unknown environments. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; IEEE: Seattle, WA, USA, 2015; pp. 1152–1157.

- Hernández, J.; Istenič, K.; Gracias, N.; Palomeras, N.; Campos, R.; Vidal, E.; García, R.; Carreras, M. Autonomous Underwater Navigation and Optical Mapping in Unknown Natural Environments. Sensors 2016, 16, 1174.

- Grefstad, O.; Schjolberg, I. Navigation and collision avoidance of underwater vehicles using sonar data. In Proceedings of the 2018 IEEE/OES Autonomous Underwater Vehicle Workshop (AUV), Porto, Portugal, 6–9 November 2018; IEEE: Porto, Portugal, 2018; pp. 1–6.

- Palomeras, N.; Hurtos, N.; Vidal, E.; Carreras, M. Autonomous Exploration of Complex Underwater Environments Using a Probabilistic Next-Best-View Planner. IEEE Robot. Autom. Lett. 2019, 4, 1619–1625.

- Vidal, E.; Moll, M.; Palomeras, N.; Hernandez, J.D.; Carreras, M.; Kavraki, L.E. Online Multilayered Motion Planning with Dynamic Constraints for Autonomous Underwater Vehicles. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Montreal, QC, Canada, 2019; pp. 8936–8942.

- Petillot, Y.; Ruiz, I.; Lane, D. Underwater vehicle obstacle avoidance and path planning using a multi-beam forward looking sonar. IEEE J. Ocean. Eng. 2001, 26, 240–251.

- Tan, C.S.; Sutton, R.; Chudley, J. An integrated collision avoidance system for autonomous underwater vehicles. Int. J. Control 2007, 80, 1027–1049.

- Zhang, W.; Wei, S.; Teng, Y.; Zhang, J.; Wang, X.; Yan, Z. Dynamic Obstacle Avoidance for Unmanned Underwater Vehicles Based on an Improved Velocity Obstacle Method. Sensors 2017, 17, 2742.

- Yan, Z.; Li, J.; Zhang, G.; Wu, Y. A Real-Time Reaction Obstacle Avoidance Algorithm for Autonomous Underwater Vehicles in Unknown Environments. Sensors 2018, 18, 438.

- Wiig, M.S.; Pettersen, K.Y.; Krogstad, T.R. A 3D reactive collision avoidance algorithm for underactuated underwater vehicles. J. Field Robot. 2020, 37, 1094–1122.

- Bosch, J.; Gracias, N.; Ridao, P.; Ribas, D. Omnidirectional Underwater Camera Design and Calibration. Sensors 2015, 15, 6033–6065.

- Bosch, J.; Gracias, N.; Ridao, P.; Istenič, K.; Ribas, D. Close-Range Tracking of Underwater Vehicles Using Light Beacons. Sensors 2016, 16, 429.

- Bosch, J.; Istenic, K.; Gracias, N.; Garcia, R.; Ridao, P. Omnidirectional Multicamera Video Stitching Using Depth Maps. IEEE J. Ocean. Eng. 2020, 45, 1337–1352.

- Rodriguez-Teiles, F.G.; Perez-Alcocer, R.; Maldonado-Ramirez, A.; Torres-Mendez, L.A.; Dey, B.B.; Martinez-Garcia, E.A. Vision-based reactive autonomous navigation with obstacle avoidance: Towards a non-invasive and cautious exploration of marine habitat. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–5 June 2014; IEEE: Hong Kong, China, 2014; pp. 3813–3818.

- Wirth, S.; Negre Carrasco, P.L.; Codina, G.O. Visual odometry for autonomous underwater vehicles. In Proceedings of the 2013 MTS/IEEE OCEANS - Bergen, Bergen, Norway, 10–14 June 2013; IEEE: Bergen, Norway, 2013; pp. 1–6.

- Gaya, J.O.; Goncalves, L.T.; Duarte, A.C.; Zanchetta, B.; Drews, P.; Botelho, S.S. Vision-Based Obstacle Avoidance Using Deep Learning. In Proceedings of the 2016 XIII Latin American Robotics Symposium and IV Brazilian Robotics Symposium (LARS/SBR), Recife, Brazil, 8–12 October 2016; IEEE: Recife, Brazil, 2016; pp. 7–12.

- Manderson, T.; Higuera, J.C.G.; Cheng, R.; Dudek, G. Vision-Based Autonomous Underwater Swimming in Dense Coral for Combined Collision Avoidance and Target Selection. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Madrid, Spain, 2018; pp. 1885–1891.

- Ochoa, E.; Gracias, N.; Istenič, K.; Garcia, R.; Bosch, J.; Cieślak, P. Allowing untrained scientists to safely pilot ROVs: Early collision detection and avoidance using omnidirectional vision. In Proceedings of the Global Oceans 2020: Singapore - U.S. Gulf Coast, Biloxi, MS, USA, 5–30 October 2020; pp. 1–7.

- Weisstein, E.W. Point-Line Distance—3-Dimensional. Available online: https://mathworld.wolfram.com/Point-LineDistance3-Dimensional.html (accessed on 30 June 2022).

- Bosch, J.; Gracias, N.; Ridao, P.; Ribas, D.; Istenič, K.; Garcia, R.; Rossi, I.R. Immersive Touring for Marine Archaeology. Application of a New Compact Omnidirectional Camera to Mapping the Gnalić shipwreck with an AUV. In ROBOT 2017: Third Iberian Robotics Conference; Ollero, A., Sanfeliu, A., Montano, L., Lau, N., Cardeira, C., Eds.; Series Title: Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2018; Volume 693, pp. 183–195.

- Cieslak, P. Stonefish: An Advanced Open-Source Simulation Tool Designed for Marine Robotics, With a ROS Interface. In Proceedings of the OCEANS 2019 - Marseille, Marseille, France, 17–20 June 2019; IEEE: Marseille, France, 2019; pp. 1–6.

- Ghatak, A. Optics, 2nd ed.; McGraw-Hill Higher Education: Boston, MA, USA, 2012.

- Ray Tracing—Intersection. Available online: https://www.rose-hulman.edu/class/csse/csse451/examples/notes/present7.pdf (accessed on 30 June 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ochoa, E.; Gracias, N.; Istenič, K.; Bosch, J.; Cieślak, P.; García, R. Collision Detection and Avoidance for Underwater Vehicles Using Omnidirectional Vision. Sensors 2022, 22, 5354. https://doi.org/10.3390/s22145354
