A Deep Learning Approach to Classify Sitting and Sleep History from Raw Accelerometry Data during Simulated Driving

Abstract

1. Introduction

2. Materials and Methods

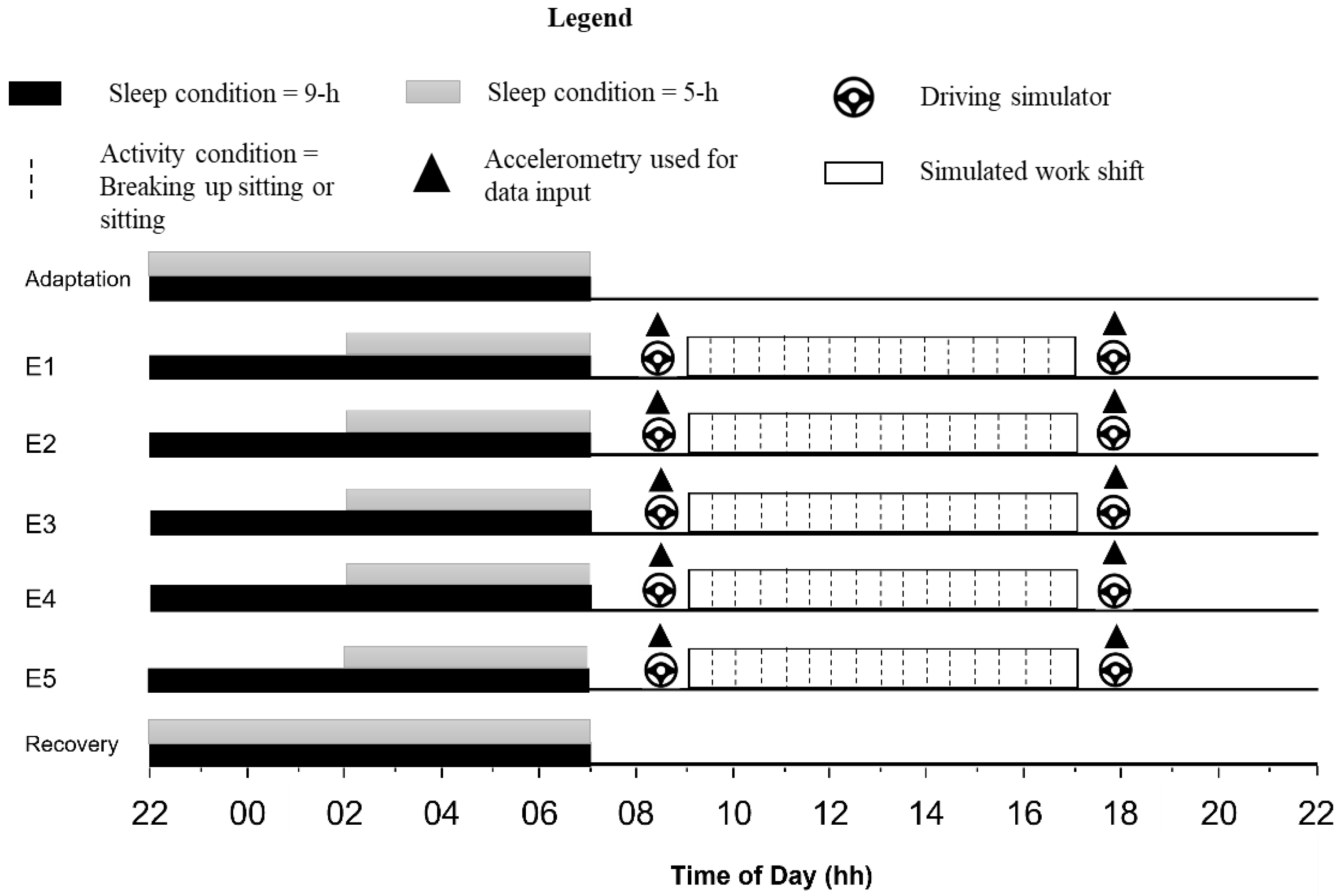

2.1. Study Design

2.2. Participants

2.3. Experimental Procedure

2.4. Measures

2.4.1. Accelerometry

2.4.2. Driving Simulator

2.4.3. Sleep Monitoring

2.5. Statistical Analysis

2.6. Accelerometry Data Preparation

2.7. Neural Network Architecture

2.7.1. DixonNet

2.7.2. ResNet-18

2.7.3. Model Settings and Training

2.8. Class Activation Mapping (CAM)
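
As a point of orientation, the general class activation mapping idea can be sketched for a one-dimensional signal such as accelerometry. The sketch assumes a network that ends in global average pooling followed by a single linear layer, which is the standard CAM setting; it is not taken from the authors' implementation, and the function names, feature maps, and weights below are hypothetical.

```python
def class_activation_map(feature_maps, class_weights):
    """Compute a 1D class activation map.

    feature_maps:  list of K channels from the last convolutional layer,
                   each a list of T activations (hypothetical values here).
    class_weights: the K linear-layer weights for the class of interest.
    Returns a list of T values with cam[t] = sum_k w_k * f_k[t].
    """
    if len(feature_maps) != len(class_weights):
        raise ValueError("need one weight per feature-map channel")
    length = len(feature_maps[0])
    return [
        sum(w * channel[t] for w, channel in zip(class_weights, feature_maps))
        for t in range(length)
    ]


def normalise(cam):
    """Rescale a map to [0, 1] so that it can be overlaid on the input signal."""
    lo, hi = min(cam), max(cam)
    if hi == lo:
        return [0.0] * len(cam)
    return [(v - lo) / (hi - lo) for v in cam]


# Hypothetical example: two channels, three time steps.
feature_maps = [[0.0, 1.0, 2.0], [1.0, 0.0, 1.0]]
class_weights = [0.5, -1.0]
cam = class_activation_map(feature_maps, class_weights)
heat = normalise(cam)
```

Under this assumption, the mean of the map over time equals the class score before the bias term, which is why the time steps with the largest values can be read as those contributing most to the predicted class.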

3. Results

3.1. Ground Truth Verification

3.2. Model Performance

3.3. Class Activation Mapping (CAM)

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sitting history | Sleep history: 9-h | Sleep history: 5-h |
|---|---|---|
| Sitting | 22 | 22 |
| Breaking up sitting | 20 | 20 |

| Model | Class | Accuracy (%) | F-Score |
|---|---|---|---|
| DixonNet | Sitting history | 77.24 (±2.61) | 0.76 (±0.03) |
| DixonNet | Sleep history | 75.71 (±2.69) | 0.76 (±0.02) |
| ResNet-18 | Sitting history | 88.63 (±1.36) | 0.88 (±0.01) |
| ResNet-18 | Sleep history | 88.63 (±1.15) | 0.88 (±0.01) |
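
For readers less familiar with the two metrics in the table, the following minimal sketch shows how accuracy (in percent) and an F-score can be computed for a two-class problem, and how a "mean (±spread)" summary could be formed over repeated runs. Two assumptions are made here: that the ± values are a standard deviation over repeated runs or folds, and that the F-score is the usual F1; neither is stated in this section. The labels and run values are hypothetical.

```python
from statistics import mean, stdev


def accuracy_and_f1(y_true, y_pred, positive=1):
    """Return (accuracy in percent, F1 score) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    accuracy = 100.0 * correct / len(pairs)
    denom = 2 * tp + fp + fn
    # F1 = 2TP / (2TP + FP + FN); defined as 0 when there are no positives at all.
    f1 = 2 * tp / denom if denom else 0.0
    return accuracy, f1


def summarise(values):
    """Mean and sample standard deviation across repeated runs (assumed meaning of ±)."""
    return mean(values), stdev(values)


# Hypothetical labels, e.g. 1 = "sitting" and 0 = "breaking up sitting".
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
acc, f1 = accuracy_and_f1(y_true, y_pred)

# Hypothetical accuracies from three runs.
run_mean, run_sd = summarise([75.0, 80.0, 85.0])
```

With balanced classes, as the participant counts suggest, accuracy and F1 tend to sit close together, which is consistent with the near-identical values reported in each row.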

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tuckwell, G.A.; Keal, J.A.; Gupta, C.C.; Ferguson, S.A.; Kowlessar, J.D.; Vincent, G.E. A Deep Learning Approach to Classify Sitting and Sleep History from Raw Accelerometry Data during Simulated Driving. Sensors 2022, 22, 6598. https://doi.org/10.3390/s22176598

Tuckwell GA, Keal JA, Gupta CC, Ferguson SA, Kowlessar JD, Vincent GE. A Deep Learning Approach to Classify Sitting and Sleep History from Raw Accelerometry Data during Simulated Driving. Sensors. 2022; 22(17):6598. https://doi.org/10.3390/s22176598

Chicago/Turabian Style: Tuckwell, Georgia A., James A. Keal, Charlotte C. Gupta, Sally A. Ferguson, Jarrad D. Kowlessar, and Grace E. Vincent. 2022. "A Deep Learning Approach to Classify Sitting and Sleep History from Raw Accelerometry Data during Simulated Driving" Sensors 22, no. 17: 6598. https://doi.org/10.3390/s22176598