Design of a Multimodal Oculometric Sensor Contact Lens

Abstract

1. Introduction

2. Implementation in an SCL

2.1. Eye Gaze SCL

2.2. Pupillometer SCL

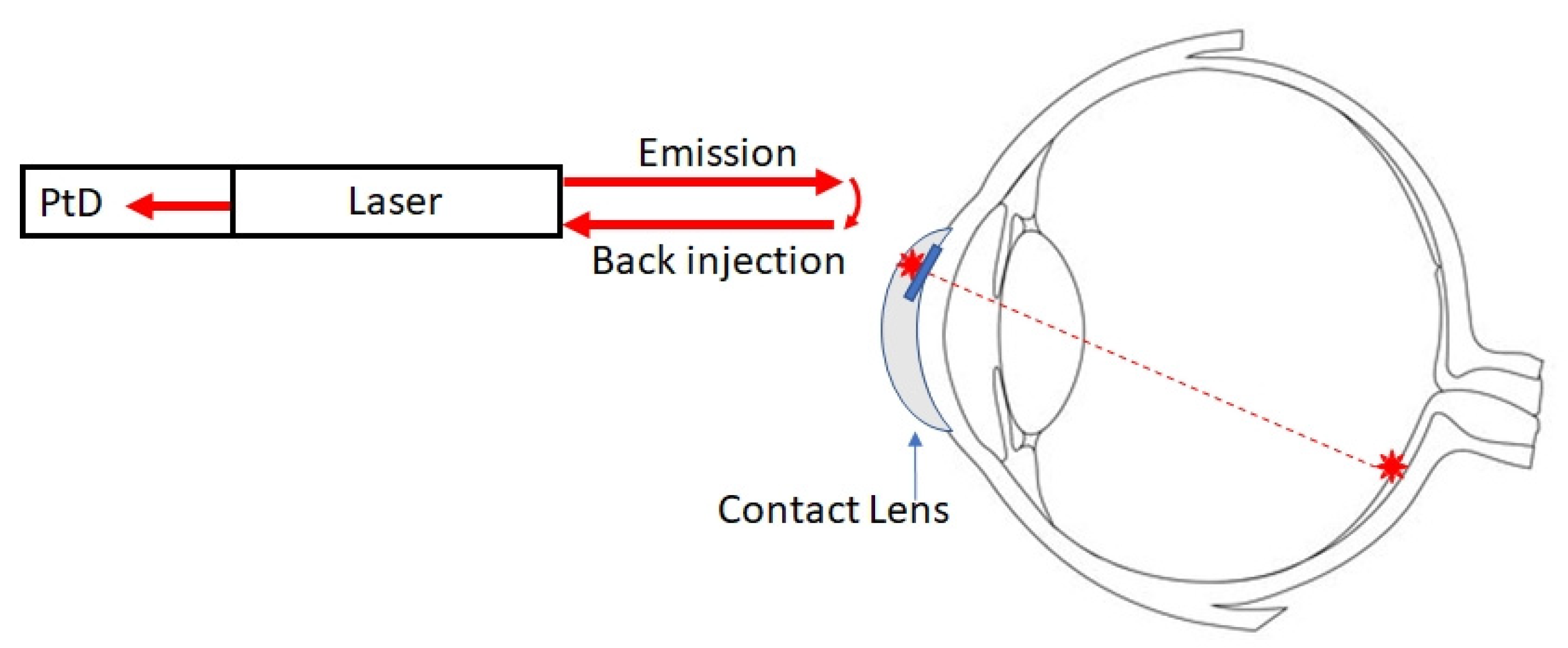

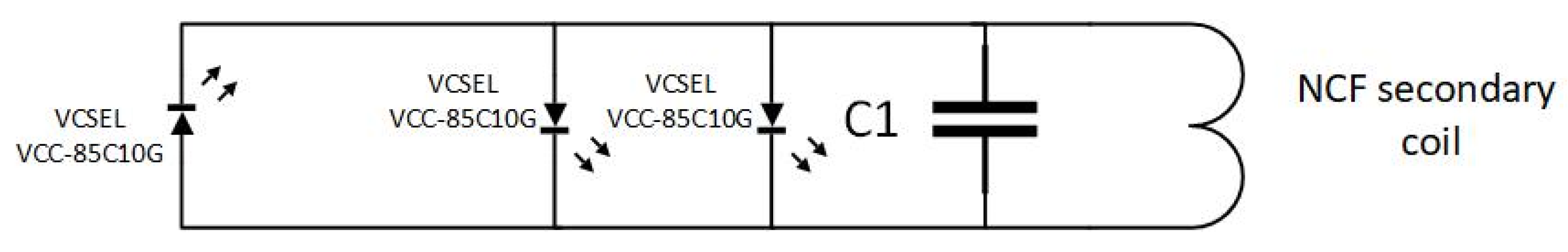

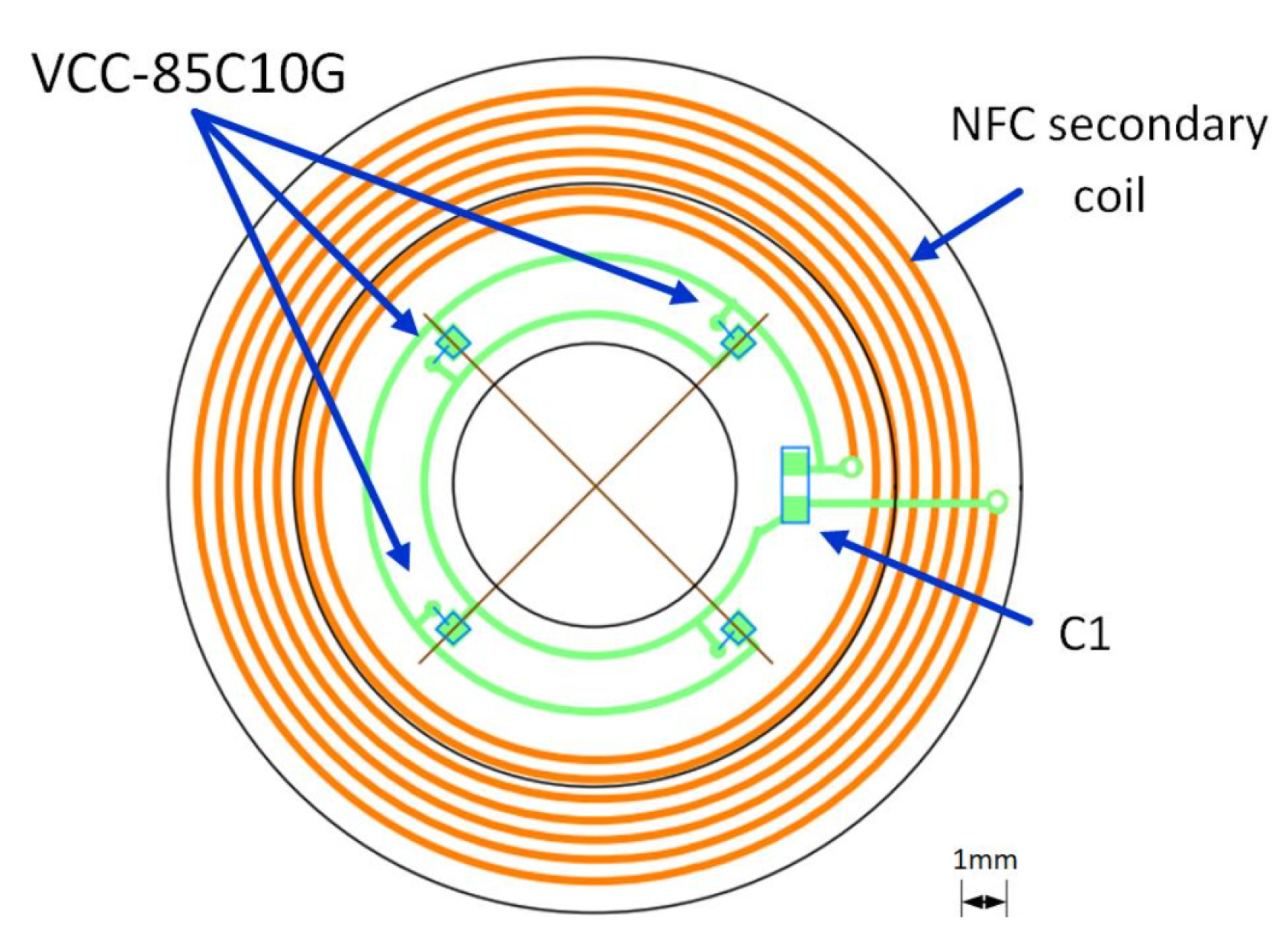

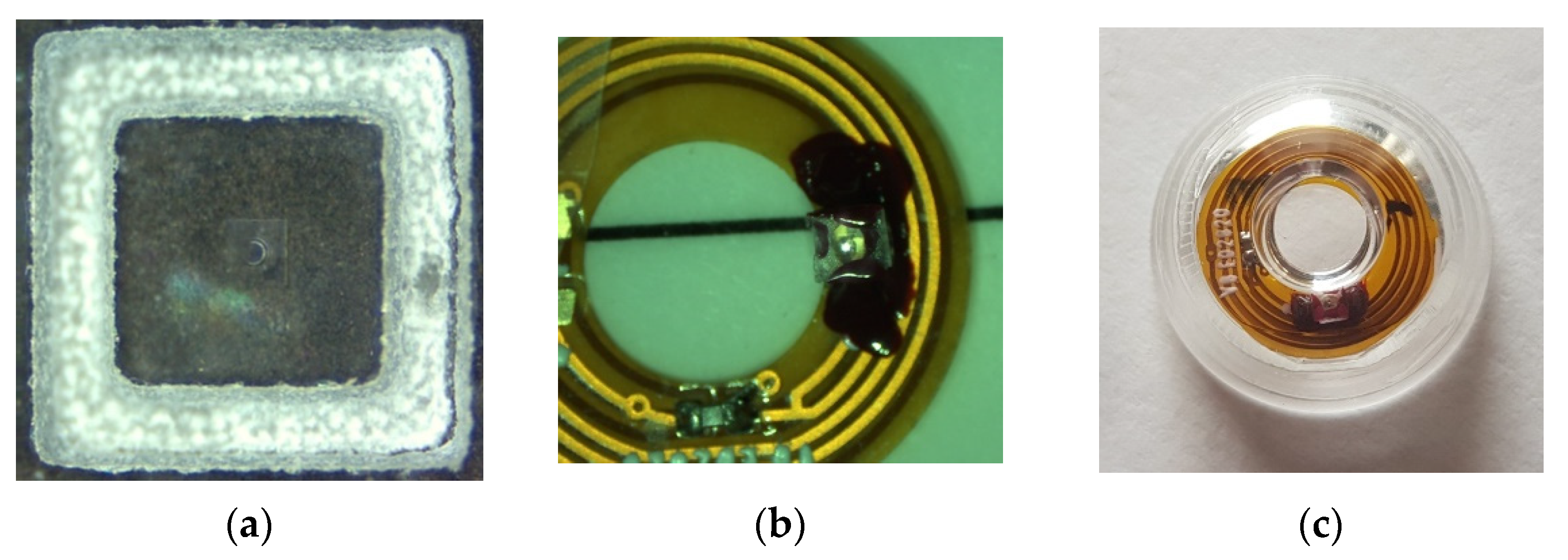

- Both the emission source and the detector (i.e., a photodiode) are in the SCL. In this case, the photodiode measures a variation in light intensity. The light source illuminates an area covering the pupil. Light falling on the iris is reflected back, while light entering the pupil is absorbed in the interior of the eye. A look-up table then converts the measured intensity into a pupil diameter. This value is computed within the contact lens and transmitted to the eyewear via a conventional NFC link, as in [15]. The principle is illustrated in Figure 2.
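The look-up step of this first solution can be illustrated with a minimal Python sketch. It makes the following assumptions, none of which come from the paper:

- The photodiode output is normalized to [0, 1].
- A calibration table pairs readings with pupil diameters. The table values and the names `CALIBRATION` and `pupil_diameter_mm` are hypothetical.
- A larger pupil leaves less iris to reflect light, so the reading decreases as the pupil dilates.

Readings that fall between table entries are linearly interpolated.

```python
from bisect import bisect_left

# Hypothetical calibration table: (normalized photodiode reading, pupil diameter in mm),
# sorted by reading. Illustrative values only; a real table would be calibrated.
CALIBRATION = [
    (0.20, 8.0),
    (0.35, 6.5),
    (0.50, 5.0),
    (0.65, 3.5),
    (0.80, 2.0),
]


def pupil_diameter_mm(reading: float, table=CALIBRATION) -> float:
    """Map a normalized photodiode reading to a pupil diameter by
    linear interpolation in a look-up table sorted by reading."""
    readings = [r for r, _ in table]
    # Clamp readings outside the calibrated range to the end points.
    if reading <= readings[0]:
        return table[0][1]
    if reading >= readings[-1]:
        return table[-1][1]
    # Find the two table entries that bracket the reading.
    i = bisect_left(readings, reading)
    (r0, d0), (r1, d1) = table[i - 1], table[i]
    t = (reading - r0) / (r1 - r0)
    return d0 + t * (d1 - d0)


print(pupil_diameter_mm(0.425))  # halfway between the 6.5 mm and 5.0 mm entries
```

An on-lens version would more likely use fixed-point arithmetic. Interpolating between entries keeps the table short and avoids the step-like output of a nearest-entry lookup.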

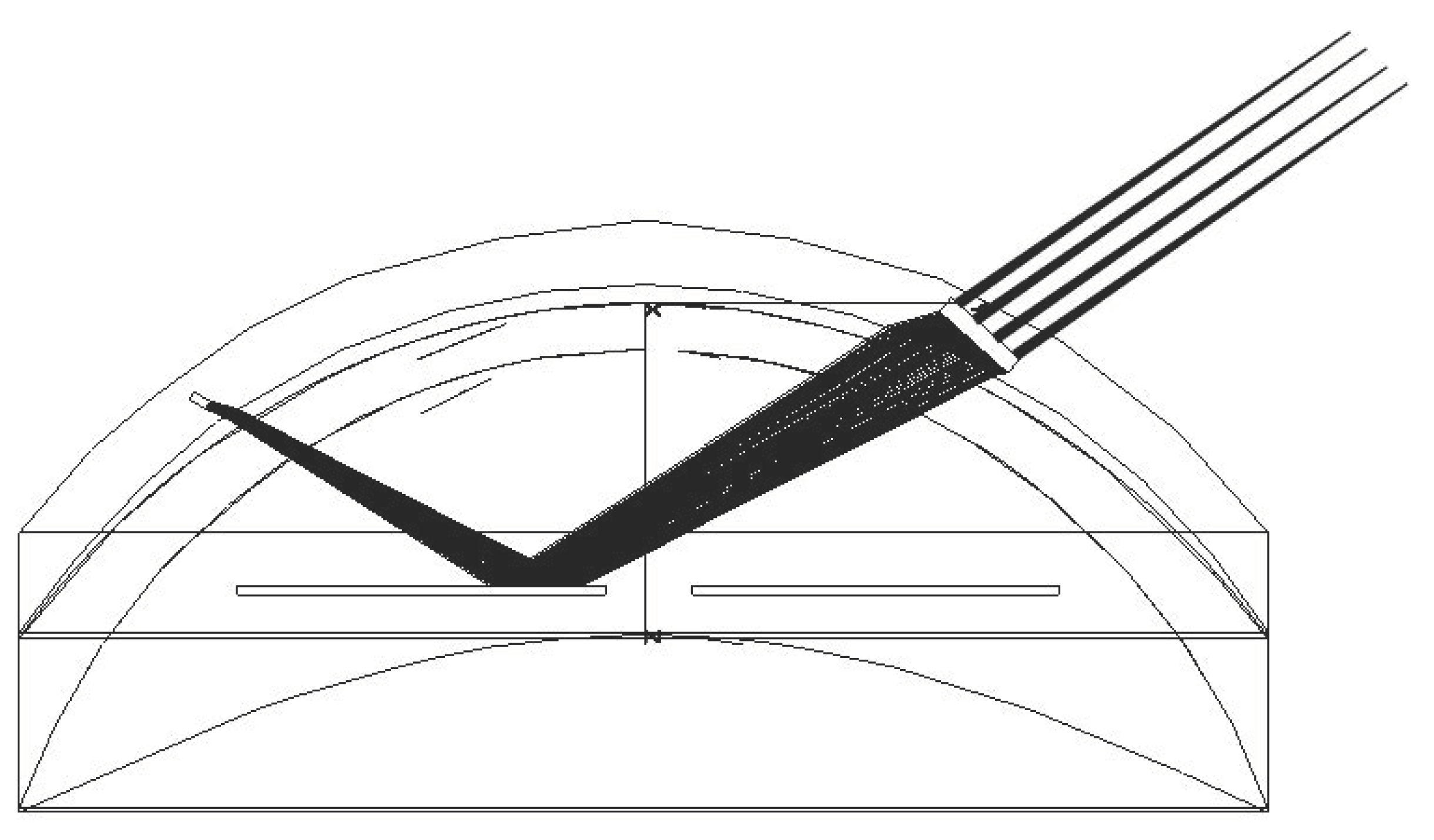

- The second solution combines a light source and a diffractive optical element (DOE) [20,21] that covers a given area of the iris. The DOE is therefore only partially illuminated, in proportion to the light reflected from the portion of the iris underlying it (as detailed later on). For this purpose, the DOE [22,23] consists of several facets. Each facet creates a light spot at a given distance from the lens, where detectors are placed to determine the diameter of the iris opening (i.e., the pupil). This implementation is illustrated in Figure 3a (principle of operation) and Figure 3b (ray-tracing results).
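The facet-based readout can be sketched in the same spirit. The facet layout is not specified here, so this Python sketch makes hypothetical assumptions:

- The facets sit at known, increasing radial distances from the lens center.
- Each facet sends its spot to one dedicated detector.
- Each spot reads as either lit or dark. A lit spot means the facet lies above the iris, where light is reflected. A dark spot means the facet lies above the pupil, where light is absorbed.

With a centered pupil, the number of dark spots indicates how many facets lie within the pupil. The pupil edge is placed midway between the last dark facet and the first lit one. The function name and threshold value are illustrative.

```python
from typing import Sequence


def pupil_diameter_from_spots(
    facet_radii_mm: Sequence[float],
    spot_levels: Sequence[float],
    threshold: float = 0.5,
) -> float:
    """Estimate the pupil diameter (mm) from the spots of a multi-facet DOE.

    facet_radii_mm: radial position of each facet over the eye, in
        increasing order (hypothetical layout).
    spot_levels: normalized intensity read by the detector paired with each
        facet, in the same order.
    Assumes a centered pupil, so the dark spots belong to the innermost facets.
    """
    if not facet_radii_mm or len(facet_radii_mm) != len(spot_levels):
        raise ValueError("expected one spot level per facet")
    # A dark spot means the facet lies above the pupil, where light is absorbed.
    n_dark = sum(1 for level in spot_levels if level < threshold)
    if n_dark == 0:
        # Every facet sees the iris: only an upper bound is available.
        return 2 * facet_radii_mm[0]
    if n_dark == len(facet_radii_mm):
        # Every facet lies above the pupil: only a lower bound is available.
        return 2 * facet_radii_mm[-1]
    # Pupil edge midway between the last dark facet and the first lit one.
    edge_radius = (facet_radii_mm[n_dark - 1] + facet_radii_mm[n_dark]) / 2
    return 2 * edge_radius


radii = [1.0, 1.5, 2.0, 2.5, 3.0, 3.5]
levels = [0.05, 0.1, 0.1, 0.8, 0.9, 0.85]
print(pupil_diameter_from_spots(radii, levels))  # 4.5
```

With this binary decision, resolution is limited by the radial spacing of the facets. Finer steps would need more facets or interpolation between neighboring spot intensities.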

2.3. Autorefractometer SCL

3. Combination of All Sensors in the Same SCL

3.1. Proposed Multimodal Oculometric Sensors

3.1.1. Autonomous Sensor (On-Lens Computing)

3.1.2. Remote Computing (Off-Lens Computing)

3.2. Importance of the Addition of DOE

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Gambiraža, M.; Kesedžić, I.; Šarlija, M.; Popović, S.; Ćosić, K. Classification of Cognitive Load based on Oculometric Features. In Proceedings of the 44th International Convention on Information, Communication and Electronic Technology (MIPRO), Opatija, Croatia, 27 September–1 October 2021.

- Massin, L.; Seguin, F.; Nourrit, V.; Daniel, E.; de Bougrenet de la Tocnaye, J.-L.; Lahuec, C. Multipurpose Bio-Monitored Integrated Circuit in a Contact Lens Eye-Tracker. Sensors 2022, 22, 595.

- Morimoto, C.H.; Mimica, M.R.M. Eye gaze tracking techniques for interactive applications. Comput. Vis. Image Underst. 2005, 98, 4–24.

- Xia, Y.; Khamis, M.; Anibal Fernandez, F.; Heidari, H.; Butt, H.; Ahmed, Z.; Wilkinson, T.; Ghannam, R. State-of-the-Art in Smart Contact Lenses for Human Machine Interaction, Electronics. arXiv 2021, arXiv:2112.10905.

- Truththeory. Available online: https://truththeory.com/sonys-new-blink-powered-contact-lens-records-everything-see/ (accessed on 1 May 2022).

- Interesting Engineering. Available online: https://interestingengineering.com/new-scientific-invention-contact-lens-that-zoom-in-the-blink-of-an-eye (accessed on 1 May 2022).

- Lech, M.; Czyzewski, A.; Kucewicz, M.T. CyberEye: New Eye-Tracking Interfaces for Assessment and Modulation of Cognitive Functions beyond the Brain. Sensors 2021, 21, 7605.

- Jacob, R.J.K. What you look at is what you get: Eye movement-based interaction techniques. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’90, Seattle, WA, USA, 1–5 April 1990; pp. 11–18.

- Majaranta, P.; Räihä, K.-J. Twenty years of eye typing: Systems and design issues. In Proceedings of the 2002 Symposium on Eye Tracking Research & Applications, ETRA ’02, New Orleans, LA, USA, 25–27 March 2002; pp. 15–22.

- Drouot, M.; Le Bigot, N.; Bricard, E.; de Bougrenet de la Tocnaye, J.-L.; Nourrit, V. Augmented reality on industrial assembly line: Impact on effectiveness and mental workload. Appl. Ergon. 2022, 103, 103793.

- Kawala-Sterniuk, A.; Browarska, N.; Al-Bakri, A.; Pelc, M.; Zygarlicki, J.; Sidikova, M.; Martinek, R.; Gorzelanczyk, E.J. Summary of over Fifty Years with Brain-Computer Interfaces: A Review. Brain Sci. 2021, 11, 43.

- Katona, J. Analyse the Readability of LINQ Code using an Eye-Tracking-based Evaluation. Acta Polytech. Hung. 2021, 18, 193–215.

- Glover, S.; Wall, M.B.; Smith, A.T. Distinct cortical networks support the planning and online control of reaching-to-grasp in humans. Eur. J. Neurosci. 2012, 35, 909–915.

- Katona, J. A Review of Human–Computer Interaction and Virtual Reality Research Fields in Cognitive InfoCommunications. Appl. Sci. 2021, 11, 2646.

- Khaldi, A.; Daniel, E.; Massin, L.; Kärnfelt, C.; Ferranti, F.; Lahuec, C.; Seguin, F.; Nourrit, V.; de Bougrenet de la Tocnaye, J.-L. The cyclops contact lens: A laser emitting contact lens for eye tracking. Sci. Rep. 2020, 10, 14804.

- Massin, L.; Nourrit, V.; Lahuec, C.; Seguin, F.; Adam, L.; Daniel, E.; de Bougrenet de la Tocnaye, J.-L. Development of a new scleral contact lens with encapsulated photodetectors for eye tracking. Opt. Express 2020, 28, 28635–28647.

- Robert, F.M.; Adam, L.; Nourrit, V.; de Bougrenet de la Tocnaye, J.-L. Contact lens double laser pointer for combined gaze tracking and target designation. PLoS ONE 2022, 17, e0267393.

- Regal, S.; Troughton, J.; Djenizian, T.; Ramuz, M. Biomimetic models of the human eye, and their applications. Nanotechnology 2021, 32, 302001.

- Nourrit, V.; Pigeon, Y.E.; Heggarty, K.; de Bougrenet de la Tocnaye, J.-L. Perifoveal Retinal Augmented Reality (PRAR) on contact lenses. Opt. Eng. 2021, 60, 115103.

- Robert, F.M.; Coppin, G.; Nourrit, V.; de Bougrenet de la Tocnaye, J.-L. Smart contact lenses for visual human machine interactions. In Proceedings of the 10th Visual and Physiological Optics Meeting (VPO 2022), Cambridge, UK, 29–31 August 2022.

- Khurana, A.K. Asthenopia, anomalies of accommodation and convergence. In Theory and Practice of Optics and Refraction, 2nd ed.; Elsevier: Amsterdam, The Netherlands, 2008; pp. 98–99. ISBN 978-81-312-1132-8.

- Nasreldin, M.; Delattre, R.; Calmes, C.; Sugiawati, V.; Maria, S.; de Bougrenet de la Tocnaye, J.-L.; Djenizian, T. High Performance Stretchable Li-ion Microbattery. Energy Storage Mater. 2020, 33, 108–115.

- Moench, H.; Bader, S.; Gudde, R.; Hellmig, J.; Moser, D.; Ott, A.; Spruit, H.; Weichmann, U.; Weidenfeld, S. ViP-VCSEL with integrated photodiode and new applications. In Vertical-Cavity Surface-Emitting Lasers XXVI; SPIE: Bellingham, WA, USA, 2022; Volume 1202006.

- Kress, B.C.; Meyrueis, P. Applied Digital Optics: From Micro-Optics to Nanophotonics; Wiley: Hoboken, NJ, USA, 2009; Chapter 6; ISBN 978-0-470-0226.

- Wyrowski, F. Iterative quantization of digital amplitude holograms. Appl. Opt. 1989, 28, 3864–3870.

- Kessels, M.M.; Bouz, M.E.; Pagan, R.; Heggarty, K. Versatile stepper based maskless microlithography using a liquid crystal display for direct write of binary and multilevel microstructures. J. Micro/Nanolithogr. MEMS MOEMS 2007, 6, 033002.

- Perry, T.S. Mojo Vision Puts Its AR Contact Lens Into Its CEO’s Eyes (Literally). The Augmented Reality Contact Lens Reaches a Major Milestone, IEEE Spectrum. 2022. Available online: https://spectrum.ieee.org/looking-through-mojo-visions-newest-ar-contact-lens (accessed on 1 May 2022).

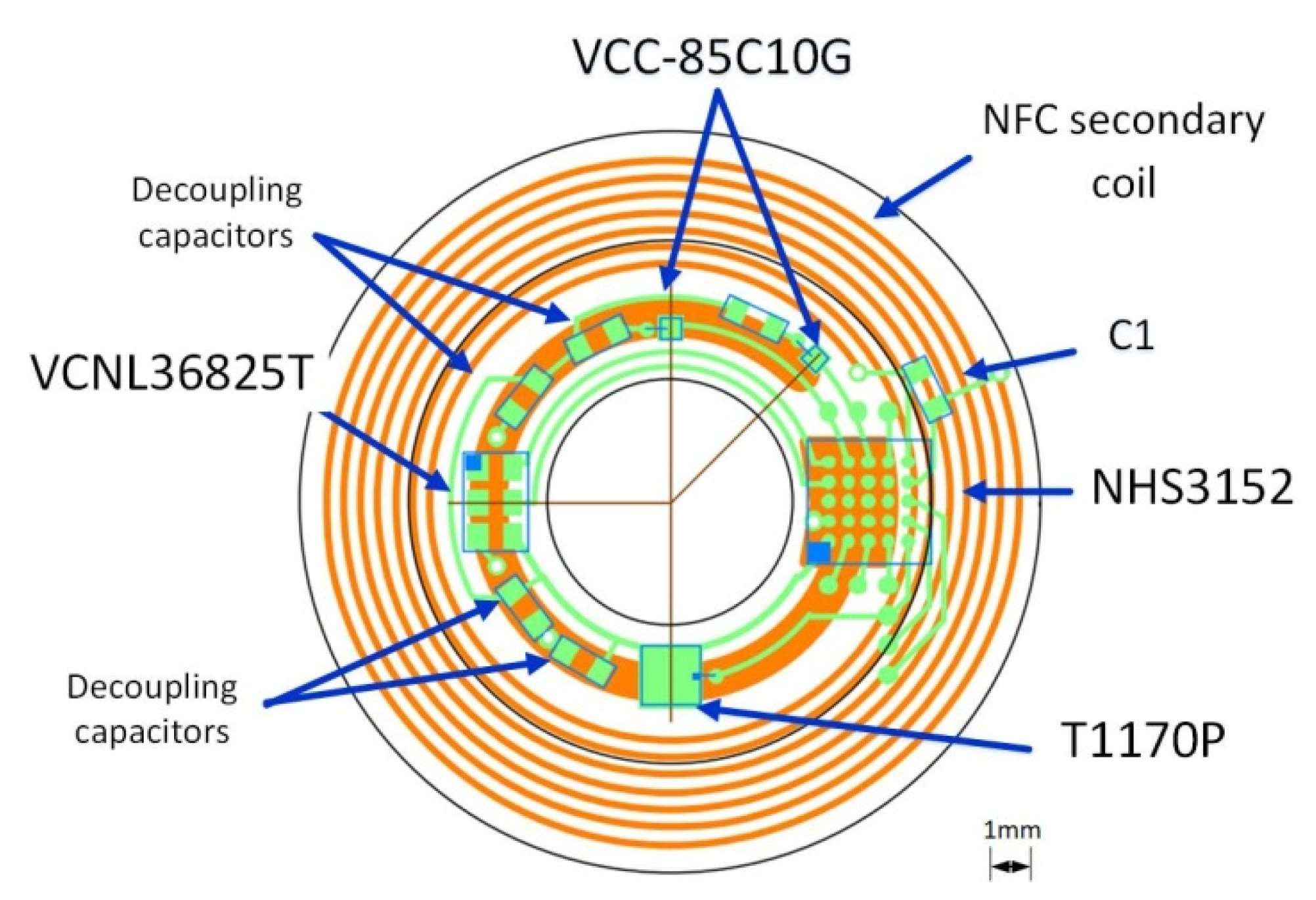

| Function | Part | Manufacturer | W (mm) | L (mm) | H (mm) |
|---|---|---|---|---|---|
| Integrated circuit | NHS3152 (WLCSP25) | NXP Semiconductor, Eindhoven, The Netherlands | 2.51 | 2.51 | 0.5 |
| Photodiode | T1170P | Vishay, Malvern, PA, USA | 1.17 | 1.17 | 0.28 |
| 850 nm VCSEL | VCC-85C10G | Lasermate Group, Walnut, CA, USA | 0.25 | 0.25 | 0.15 |
| Proximity sensor | VCNL36825T | Vishay Semiconductor, Malvern, PA, USA | 2 | 1.25 | 0.5 |
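The table lends itself to a quick integration check. The Python sketch below assumes one instance of each part and ignores spacing, wiring, the antenna and any encapsulant. Under those assumptions, it tallies the combined footprint (about 10.2 mm²) and the height of the tallest part (0.5 mm). The dictionary and function names are illustrative.

```python
# Dimensions (W, L, H) in mm, one instance of each part listed in the table.
COMPONENTS = {
    "NHS3152 (integrated circuit)": (2.51, 2.51, 0.5),
    "T1170P (photodiode)": (1.17, 1.17, 0.28),
    "VCC-85C10G (850 nm VCSEL)": (0.25, 0.25, 0.15),
    "VCNL36825T (proximity sensor)": (2.0, 1.25, 0.5),
}


def total_footprint_mm2(parts: dict) -> float:
    """Sum of W x L over all parts, ignoring spacing and interconnects."""
    return sum(w * l for w, l, _ in parts.values())


def max_height_mm(parts: dict) -> float:
    """Height of the tallest part: a lower bound on the thickness the
    encapsulation must accommodate."""
    return max(h for _, _, h in parts.values())


print(f"footprint {total_footprint_mm2(COMPONENTS):.2f} mm^2, "
      f"tallest part {max_height_mm(COMPONENTS)} mm")
```

The integrated circuit and the proximity sensor account for most of the area. The photodiode and VCSEL are comparatively small.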

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


de Bougrenet de la Tocnaye, J.-L.; Nourrit, V.; Lahuec, C. Design of a Multimodal Oculometric Sensor Contact Lens. Sensors 2022, 22, 6731. https://doi.org/10.3390/s22186731
