A Comparative Analysis between Efficient Attention Mechanisms for Traffic Forecasting without Structural Priors

Abstract

:1. Introduction

- We perform the first fair comparison between efficient attention modules in the context of a spatio-temporal forecasting model, by analyzing the performance-complexity trade-off of these modules. To our best knowledge, this is the first analysis of this type in the a spatio-temporal modeling context.

- We examine the results for two distinct datasets of different sizes to verify the relationship between the theoretical complexity of the attention modules and the effective training and inference times.

- We open-source all the data and code used in the experiments to facilitate further research in this direction.

2. Materials and Methods

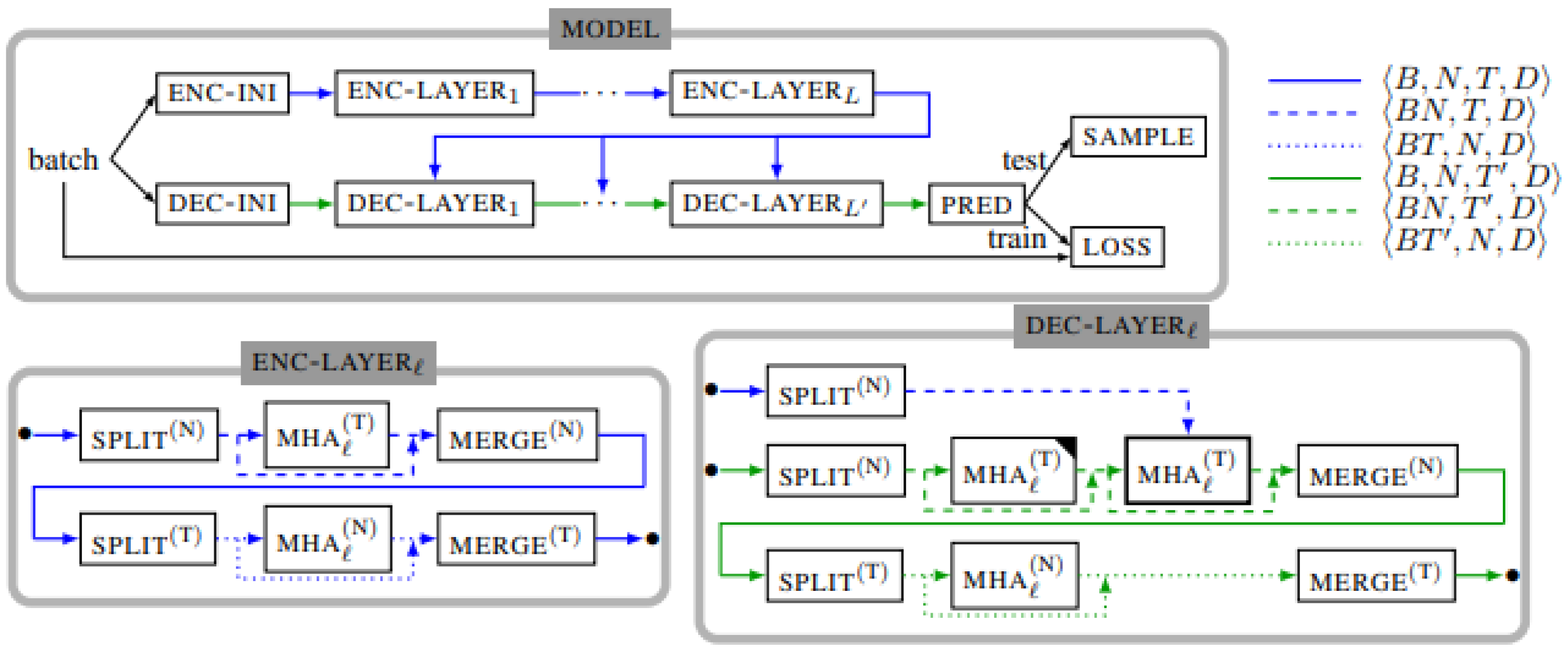

2.1. Baseline Model

2.2. Efficient Attention Mechanisms

2.2.1. Group Attention

2.2.2. Reformer Attention

2.2.3. Fast Linear Attention

2.2.4. Efficient Attention

2.2.5. Linformer Attention

2.2.6. FAVOR+ Attention

2.3. Evaluation Data

2.4. Experiment Methodology

2.4.1. Error Metrics

- Mean Absolute Error (MAE)—

- Root Mean Squared Error (RMSE)—

- Mean Absolute Percentage Error (MAPE)—

2.4.2. Resource Metrics

- Training time (sec./epoch)—The amount of time, in seconds, required for training the model for a single epoch. This includes any epoch-level pre-processing, plus the times needed for the forward and backward passes for all the batches.

- Inference time (ms./epoch)—The amount of time, in milliseconds, required for inference on a single sample. This is effectively the time required to produce the output sequence for a single sample by repeatedly forward passing the data in an auto-regressive manner.

- Maximum GPU utilisation during training (GB)—We measure the maximum GPU utilization from a practical point of view. Knowing or approximating the maximum GPU usage, one could select an appropriate machine with a large enough GPU.

2.5. Experimental Setup

3. Results

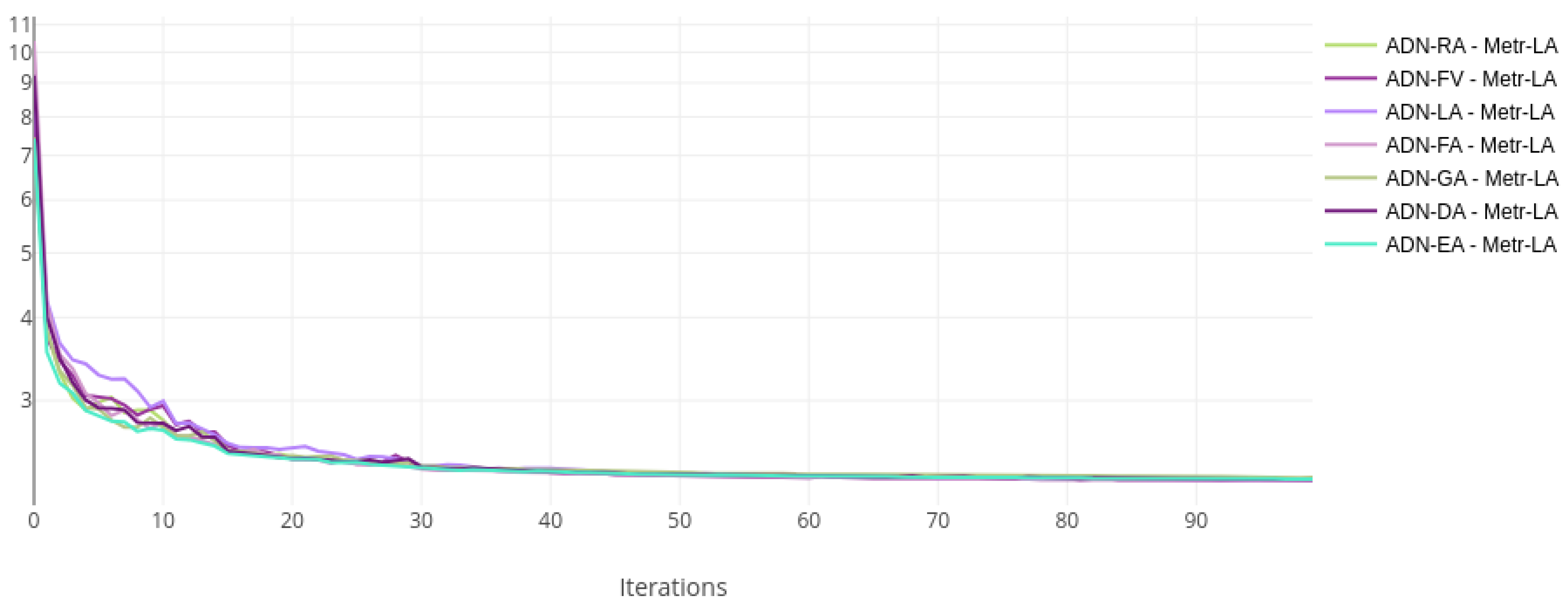

3.1. Learning Curves

3.2. Times and Resource Utilization

3.3. Prediction Performance

4. Discussion

4.1. Theoretical Complexity vs. Training Time

4.2. Performance of Alternative Attention Mechanisms

4.3. Social Considerations

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. arXiv 2016, arXiv:1609.02907. [Google Scholar]

- Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering. arXiv 2016, arXiv:1606.09375. [Google Scholar]

- Cai, L.; Janowicz, K.; Mai, G.; Yan, B.; Zhu, R. Traffic transformer: Capturing the continuity and periodicity of time series for traffic forecasting. Trans. GIS 2020, 24, 736–755. [Google Scholar] [CrossRef]

- Zhang, Q.; Chang, J.; Meng, G.; Xiang, S.; Pan, C. Spatio-Temporal Graph Structure Learning for Traffic Forecasting. Proc. AAAI Conf. Artif. Intell. 2020, 34, 1177–1185. [Google Scholar] [CrossRef]

- Li, M.; Zhu, Z. Spatial-Temporal Fusion Graph Neural Networks for Traffic Flow Forecasting. arXiv 2020, arXiv:2012.09641. [Google Scholar] [CrossRef]

- Jiang, W.; Luo, J. Graph Neural Network for Traffic Forecasting: A Survey. arXiv 2021, arXiv:2101.11174. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.u.; Polosukhin, I. Attention is All you Need. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2017; Volume 30. [Google Scholar]

- Zheng, C.; Fan, X.; Wang, C.; Qi, J. GMAN: A Graph Multi-Attention Network for Traffic Prediction. arXiv 2019, arXiv:1911.08415. [Google Scholar] [CrossRef]

- Tian, C.; Chan, V.W.K. Spatial-temporal attention wavenet: A deep learning framework for traffic prediction considering spatial-temporal dependencies. IET Intell. Transp. Syst. 2021, 15, 549–561. [Google Scholar] [CrossRef]

- Drakulic, D.; Andreoli, J. Structured Time Series Prediction without Structural Prior. arXiv 2022, arXiv:2202.03539. [Google Scholar]

- Hong, J.; Lee, C.; Bang, S.; Jung, H. Fair Comparison between Efficient Attentions. arXiv 2022, arXiv:2206.00244. [Google Scholar]

- Li, Y.; Yu, R.; Shahabi, C.; Liu, Y. Diffusion Convolutional Recurrent Neural Network: Data-Driven Traffic Forecasting. In Proceedings of the International Conference on Learning Representations (ICLR ’18), Vancouver, BC, Canada, 30 April–3 May 2018. [Google Scholar]

- Gao, S.; Lin, Y.; Feng, N.; Song, C.; Wan, H. Attention Based Spatial-Temporal Graph Convolutional Networks for Traffic Flow Forecasting. Proc. AAAI Conf. Artif. Intell. 2019, 33, 922–929. [Google Scholar] [CrossRef] [Green Version]

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958. [Google Scholar]

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer Normalization. arXiv 2016, arXiv:1607.06450. [Google Scholar]

- Williams, R.J.; Zipser, D. A Learning Algorithm for Continually Running Fully Recurrent Neural Networks. Neural Comput. 1989, 1, 270–280. [Google Scholar] [CrossRef]

- Kitaev, N.; Kaiser, Ł.; Levskaya, A. Reformer: The Efficient Transformer. arXiv 2020, arXiv:2001.04451. [Google Scholar]

- Katharopoulos, A.; Vyas, A.; Pappas, N.; Fleuret, F. Transformers are RNNs: Fast Autoregressive Transformers with Linear Attention. In Proceedings of the International Conference on Machine Learning (ICML), Vienna, Austria, 12–18 July 2020. [Google Scholar]

- Shen, Z.; Zhang, M.; Zhao, H.; Yi, S.; Li, H. Efficient Attention: Attention with Linear Complexities. In Proceedings of the WACV, Online, 5–9 January 2021. [Google Scholar]

- Wang, S.; Li, B.Z.; Khabsa, M.; Fang, H.; Ma, H. Linformer: Self-Attention with Linear Complexity. arXiv 2020, arXiv:2006.04768. [Google Scholar]

- Choromanski, K.; Likhosherstov, V.; Dohan, D.; Song, X.; Gane, A.; Sarlós, T.; Hawkins, P.; Davis, J.; Mohiuddin, A.; Kaiser, L.; et al. Rethinking Attention with Performers. arXiv 2020, arXiv:2009.14794. [Google Scholar]

| Attention Type | Complexity | Strategy |

|---|---|---|

| Dot-product Attention (DA) [7] | All-to-all | |

| Group Attention (GA) [8,10] | Inter-group all-to-all | |

| Reformer Attention (RA) [17] | Locality-sensitive hashing | |

| Fast Linear Attention (FA) [18] | Kernelization, associativity | |

| Efficient Attention (EA) [19] | Associativity | |

| Linformer Attention (LA) [20] | Low-Rank approximation | |

| Performer Attention (FV) [21] | Algebraic approximation |

| Model | No. Parameters | Training Time (s/epoch) | Inference Time (ms/sample) | Peak GPU Usage (GB) |

|---|---|---|---|---|

| ADN-DA | 331 K | 30 | 7.8 | 9.9 |

| ADN-GA | 331 K | 46 | 1.9 | 4.7 |

| ADN-RA | 324 K | 50 | 8.1 | 11 |

| ADN-FA | 331 K | 23 | 2.4 | 4.5 |

| ADN-EA | 331 K | 27 | 1.7 | 5.5 |

| ADN-LA | 341 K | 23 | 2.1 | 4.5 |

| ADN-FV | 330 K | 25 | 2.2 | 4.7 |

| Model | No. Parameters | Training Time (s/epoch) | Inference Time (ms/sample) | Peak GPU Usage (GB) |

|---|---|---|---|---|

| ADN-DA | 334 K | 69 | 8.4 | 14 |

| ADN-GA | 335 K | 72 | 5.4 | 7.1 |

| ADN-RA | 328 K | 103 | 8.3 | 17 |

| ADN-FA | 335 K | 52 | 4.1 | 7.2 |

| ADN-EA | 334 K | 50 | 4.0 | 8.5 |

| ADN-LA | 356 K | 48 | 4.7 | 7.9 |

| ADN-FV | 334 K | 43 | 5.0 | 8.1 |

| 15-min | 30-min | 60-min | |||||||

|---|---|---|---|---|---|---|---|---|---|

| Model | MAE | RMSE | MAPE | MAE | RMSE | MAPE | MAE | RMSE | MAPE |

| ADN-DA * | 3.01 | 6.04 | 8.18% | 3.57 | 7.40 | 10.28% | 4.30 | 8.88 | 12.82% |

| ADN-GA * | 3.06 | 6.14 | 8.37% | 3.62 | 7.54 | 10.50% | 4.37 | 9.04 | 12.98% |

| ADN-RA | 3.04 | 6.10 | 8.25% | 3.60 | 7.45 | 10.40% | 4.32 | 8.91 | 12.99% |

| ADN-FA | 3.02 | 6.01 | 8.20% | 3.56 | 7.30 | 10.22% | 4.31 | 8.70 | 12.61% |

| ADN-EA | 3.06 | 6.11 | 8.33% | 3.61 | 7.47 | 10.41% | 4.36 | 8.93 | 13.08% |

| ADN-LA | 3.05 | 6.14 | 8.20% | 3.64 | 7.53 | 10.35% | 4.42 | 9.16 | 13.03% |

| ADN-FV | 3.02 | 6.05 | 8.20% | 3.58 | 7.42 | 10.32% | 4.38 | 8.94 | 13.75% |

| 15-min | 30-min | 60-min | |||||||

|---|---|---|---|---|---|---|---|---|---|

| Model | MAE | RMSE | MAPE | MAE | RMSE | MAPE | MAE | RMSE | MAPE |

| ADN-DA * | 1.48 | 3.04 | 3.04% | 1.86 | 4.14 | 4.16% | 2.34 | 5.28 | 5.74% |

| ADN-GA * | 1.51 | 3.07 | 3.10% | 1.89 | 4.18 | 4.22% | 2.38 | 5.31 | 5.79% |

| ADN-RA | 1.48 | 3.05 | 3.02% | 1.87 | 4.15 | 4.10% | 2.35 | 5.28 | 5.59% |

| ADN-FA | 1.48 | 3.04 | 3.05% | 1.87 | 4.12 | 4.16% | 2.34 | 5.22 | 5.72% |

| ADN-EA | 1.50 | 3.05 | 3.06% | 1.88 | 4.14 | 4.15% | 2.33 | 5.21 | 5.72% |

| ADN-LA | 1.49 | 3.07 | 3.06% | 1.90 | 4.20 | 4.20% | 2.41 | 5.42 | 5.84% |

| ADN-FV | 1.48 | 3.06 | 3.05% | 1.90 | 4.18 | 4.17% | 2.42 | 5.36 | 5.71% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rad, A.-C.; Lemnaru, C.; Munteanu, A. A Comparative Analysis between Efficient Attention Mechanisms for Traffic Forecasting without Structural Priors. Sensors 2022, 22, 7457. https://doi.org/10.3390/s22197457

Rad A-C, Lemnaru C, Munteanu A. A Comparative Analysis between Efficient Attention Mechanisms for Traffic Forecasting without Structural Priors. Sensors. 2022; 22(19):7457. https://doi.org/10.3390/s22197457

Chicago/Turabian StyleRad, Andrei-Cristian, Camelia Lemnaru, and Adrian Munteanu. 2022. "A Comparative Analysis between Efficient Attention Mechanisms for Traffic Forecasting without Structural Priors" Sensors 22, no. 19: 7457. https://doi.org/10.3390/s22197457