Digital Single-Image Smartphone Assessment of Total Body Fat and Abdominal Fat Using Machine Learning

Abstract

1. Introduction

2. Materials and Methods

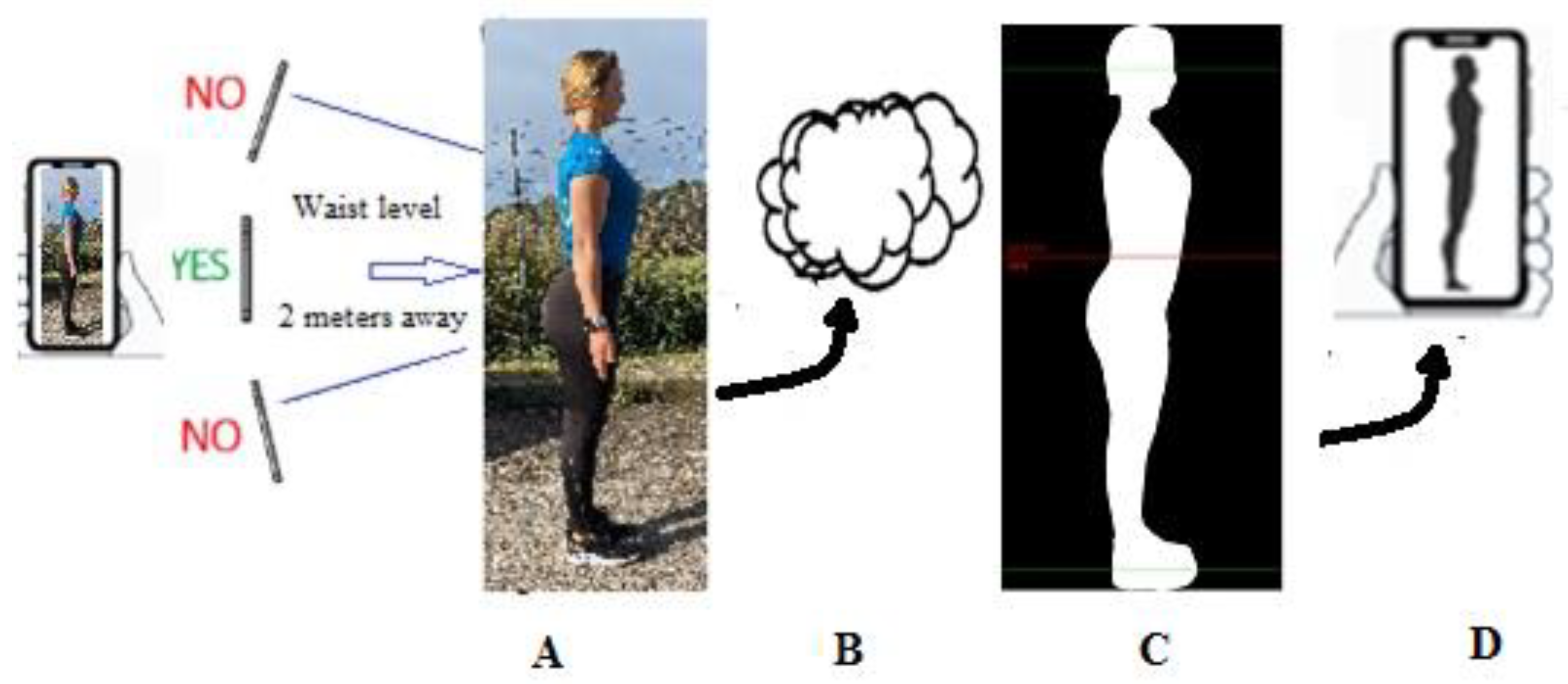

2.1. Digital Photography

2.2. Procedure

2.3. Validation of a Prediction Model for Total Body and Abdominal FM

2.4. Statistical Methods

3. Results

3.1. Estimation of Whole-Body FM: Model Validation

3.2. Estimation of Abdominal FM

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Variable | Females | Males |
|---|---|---|
| n | 84 | 74 |
| Weight, kg | 66.8 ± 13.1 (42.0 to 103.6) | 80.1 ± 11.5 (63.0 to 109.0) |
| Height, cm | 163.6 ± 6.7 (151.0 to 183.0) | 178.8 ± 7.0 (163 to 199) |
| BMI a, kg/m² | 25.1 ± 5.1 (16.2 to 37.2) | 25.1 ± 3.6 (19.1 to 37.1) |
| Fat mass b, kg | 22.8 ± 10.9 (8.6 to 59.2) | 17.0 ± 7.5 (6.2 to 33) |
| Body fat b, % | 32.6 ± 10.1 (16.0 to 52.0) | 20.8 ± 7.4 (9.5 to 37.2) |
| Fat-free mass b, kg | 44.5 ± 5.6 (31.0 to 58.7) | 63.2 ± 8.5 (47.0 to 87.6) |
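As a rough plausibility check on the table above, the following sketch recomputes BMI from the mean weight and mean height of each group and sums mean fat mass and mean fat-free mass for comparison with mean body weight. The function and variable names are illustrative and do not come from the study. Because a ratio of means is not the mean of individual ratios, only approximate agreement with the reported group values should be expected.

```python
# Plausibility check on reported group means (illustrative sketch only;
# the helper names below are hypothetical, not part of the study).

def bmi(weight_kg: float, height_cm: float) -> float:
    """Body mass index in kg/m^2 from weight in kg and height in cm."""
    height_m = height_cm / 100.0
    return weight_kg / (height_m ** 2)


def two_compartment_sum(fat_mass_kg: float, fat_free_mass_kg: float) -> float:
    """Fat mass plus fat-free mass, to compare against body weight."""
    return fat_mass_kg + fat_free_mass_kg


# Group means copied from the table above.
groups = {
    "Females": {"weight": 66.8, "height": 163.6, "fm": 22.8, "ffm": 44.5},
    "Males": {"weight": 80.1, "height": 178.8, "fm": 17.0, "ffm": 63.2},
}

for name, g in groups.items():
    bmi_from_means = bmi(g["weight"], g["height"])
    mass_sum = two_compartment_sum(g["fm"], g["ffm"])
    print(
        f"{name}: BMI from means = {bmi_from_means:.1f} kg/m^2, "
        f"FM + FFM = {mass_sum:.1f} kg vs weight {g['weight']:.1f} kg"
    )
```

Small differences between these recomputed figures and the tabulated means do not by themselves indicate an error, since the table reports averages of per-participant values.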

| Variable | Females | Males |
|---|---|---|
| n | 32 | 31 |
| Weight, kg | 67.0 ± 15.7 (42 to 103.0) | 78.2 ± 12.2 (63 to 108.0) |
| Height, cm | 162.5 ± 5.8 (152 to 174) | 177.2 ± 7.4 (165 to 193) |
| BMI a, kg/m² | 25.5 ± 6.1 (16.2 to 37.2) | 25.0 ± 4.1 (19.6 to 37.1) |
| Fat mass b, kg | 23.6 ± 12.8 (7.4 to 52.0) | 17.0 ± 8.5 (6.2 to 35.0) |
| Abdominal fat mass b,c, kg | 2.45 ± 2.0 (0.24 to 6.9) | 1.94 ± 1.41 (0.35 to 5.0) |
| Fat-free mass b, kg | 44.5 ± 5.6 (31.0 to 58.7) | 63.2 ± 8.5 (47 to 87.6) |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Farina, G.L.; Orlandi, C.; Lukaski, H.; Nescolarde, L. Digital Single-Image Smartphone Assessment of Total Body Fat and Abdominal Fat Using Machine Learning. Sensors 2022, 22, 8365. https://doi.org/10.3390/s22218365
