Implementing Performance Accommodation Mechanisms in Online BCI for Stroke Rehabilitation: A Study on Perceived Control and Frustration

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Brain–Computer Interface

2.3. Game

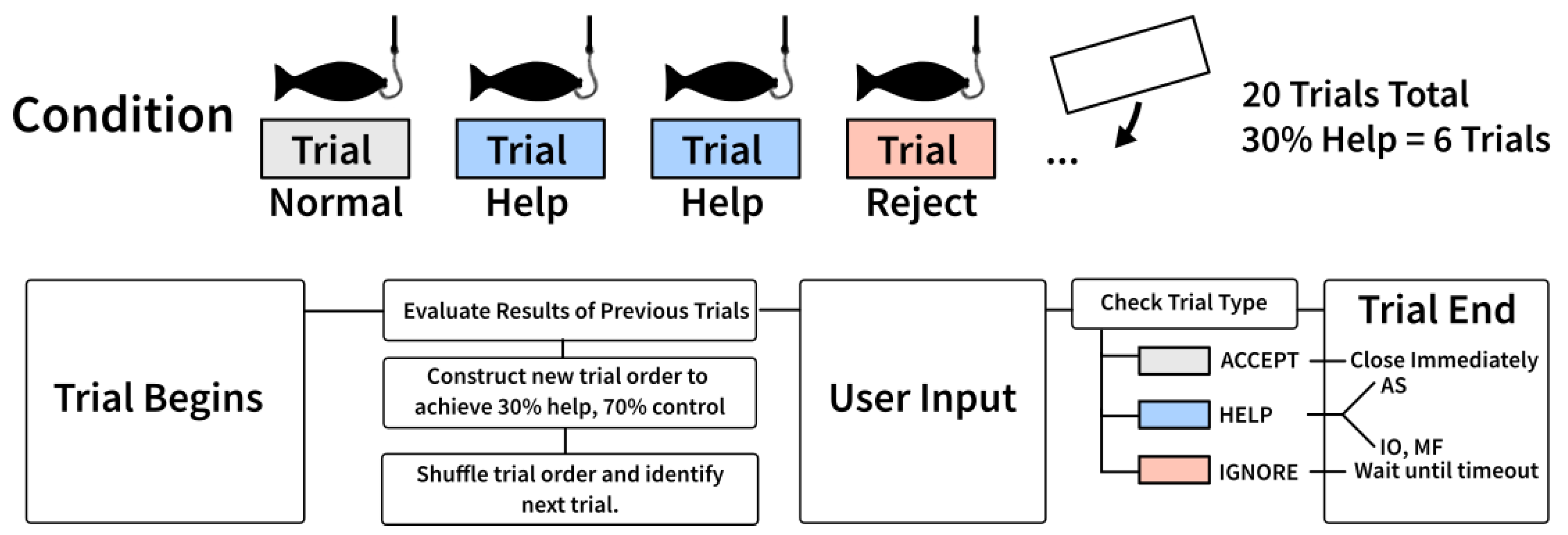

2.3.1. Performance Accommodation Mechanisms

2.3.2. Urn Model

2.4. Experimental Setup

- Control condition: The facilitator explained the core game. This was always the first condition the participants played.

- Augmented Success: “In this condition, the fisherman will occasionally become stronger.”

- Mitigated Failure: “In this condition, occasionally a clip on the fishing rod will prevent the fish from escaping.”

- Input Override: “In this condition, a girl will occasionally come to help you.”

2.5. Data Analysis

2.5.1. Variables

| Augmented Success (AS) | | Input Override (IO) | | Mitigated Failure (MF) | | Normal Condition | |
|---|---|---|---|---|---|---|---|
| Negative (No Change) | 46% | Negative (No Change) | 33% | Negative (No Change) | 30% | Negative (No Change) | 42% |
| Positive (No Change) | 28% | Negative to Positive (IO) | 15% | Negative to Neutral (MF) | 17% | Positive (No Change) | 57% |
| Positive to Extra Positive (AS) | 14% | Positive (No Change) | 37% | Positive (No Change) | 40% | | |
| Positive to Negative | 12% | Positive to Positive (IO) | 15% | Positive to Neutral (MF) | 13% | | |

2.5.2. Analysis Method

3. Results

3.1. Perceived Control

3.2. Frustration

3.3. Qualitative Results

4. Discussion

4.1. Methodological Considerations

4.2. Implications

4.3. Future Perspectives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Variables | Min | Max | Mean | SD | Description |
|---|---|---|---|---|---|
| Response | | | | | |
| Perceived Control | 0 | 1 | 0.46 | 0.27 | Normalized 7-point Likert scale rating by participants after playing a condition. |
| Frustration | 0 | 1 | 0.50 | 0.29 | Normalized 7-point Likert scale rating by participants after playing a condition. |
| Explanatory | | | | | |
| MI Conv. Rate | 0 | 1 | 0.54 | 0.28 | Normalized count of trials that were caused by successful motor imagery activations in a condition. |
| Pos. Feedback | 0 | 1 | 0.52 | 0.24 | Normalized count of how many trials delivered a positive outcome (reeling fish, catching fish, receiving help) in a condition, regardless of cause. |
| Fish Caught | 0 | 8 | 3.59 | 2.39 | Count of how many fish were reeled all the way up and caught in a given condition. |
| Fish Lost | 0 | 6 | 1.69 | 1.69 | Count of how many fish participants lost when playing a given condition. |
| Fish Reel | 0 | 20 | 6.75 | 3.54 | Count of how many times participants managed to reel a fish closer to them in a condition. |
| Fish Unreel | 0 | 14 | 6.54 | 3.31 | Count of how many times the fishing rod unreeled (the fish trying to escape) in a condition. |
| PAM rate | 0 | 0.3 | 0.18 | 0.13 | Normalized count of trials in which participants received help in a condition. |
| Condition | - | - | - | - | Participants played four conditions: Normal (no PAM), augmented success, input override, and mitigated failure. |
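
The per-condition ratings listed for individual participants (0.17, 0.33, 0.50, 0.67, 0.83, 1.00) are consistent with a linear rescaling of a 7-point scale onto the interval from 0 to 1. The sketch below shows one way such a normalization could be computed. The function name `normalize_likert` and the assumption that raw ratings are coded 1 to 7 are illustrative and are not taken from the article.

```python
def normalize_likert(rating: int, points: int = 7) -> float:
    """Linearly map a Likert rating coded 1..points onto [0, 1].

    Assumption: the raw scale is coded 1 (lowest) to `points` (highest).
    """
    if not 1 <= rating <= points:
        raise ValueError(f"rating must be between 1 and {points}")
    return (rating - 1) / (points - 1)


# Each step on a 7-point scale corresponds to 1/6 on the normalized scale.
for raw in range(1, 8):
    print(raw, round(normalize_likert(raw), 2))
```

Under this assumption, a raw rating of 5 maps to about 0.67 and a raw rating of 2 to about 0.17, matching the granularity of the reported values.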

| Variable | 1 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Gender | F | M | M | M | M | F | M | M | F | F | F | F | M | M | M | M | M | F |

| Age | 27 | 29 | 60 | 27 | 22 | 23 | 24 | 24 | 23 | 22 | 33 | 24 | 22 | 24 | 21 | 28 | 26 | 25 |

| Perceived Performance | 0.85 | 0.95 | 0.35 | NA | 0.7 | 0.75 | 0.2 | 0.75 | 0.8 | 0.075 | 0.6 | 0.5 | 0.15 | 0.35 | 0.6 | 0.35 | 0.45 | 0.5 |

| BCI Experience | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | Yes | No | No | Yes | No | No | No | Yes | Yes |

| Perc. Control | 0.67 | 0.75 | 0.21 | 0.37 | 0.63 | 0.58 | 0.29 | 0.54 | 0.46 | 0.04 | 0.29 | 0.71 | 0.11 | 0.54 | 0.75 | 0.42 | 0.38 | 0.54 |

| Frustration | 0.33 | 0.13 | 1.00 | 0.38 | 0.54 | 0.42 | 0.50 | 0.58 | 0.42 | 1.00 | 0.54 | 0.25 | 0.67 | 0.50 | 0.29 | 0.83 | 0.54 | 0.21 |

| MI Conv. Rate | 92% | 85% | 21% | 61% | 32% | 80% | 32% | 75% | 36% | 11% | 71% | 80% | 27% | 52% | 34% | 45% | 78% | 55% |

| Pos. Feedback | 78% | 74% | 28% | 57% | 35% | 70% | 35% | 68% | 40% | 18% | 66% | 70% | 32% | 50% | 40% | 49% | 65% | 52% |

| Aug. Success | | | | | | | | | | | | | | | | | | |

| Perc. Control | 0.67 | 0.83 | 0.33 | 0.33 | 0.50 | 0.33 | 0.33 | 0.17 | 0.33 | 0.00 | 0.17 | 0.50 | 0.33 | 0.67 | 1.00 | 0.67 | 0.17 | 0.67 |

| Frustration | 0.33 | 0.17 | 1.00 | 0.17 | 0.67 | 0.50 | 0.67 | 0.83 | 0.33 | 1.00 | 0.50 | 0.17 | 0.67 | 0.33 | 0.17 | 0.67 | 0.67 | 0.17 |

| MI Conv. Rate | 95% | 80% | 15% | 60% | 15% | 90% | 50% | 30% | 35% | 15% | 85% | 75% | 35% | 65% | 45% | 45% | 85% | 55% |

| Pos. Feedback | 65% | 60% | 15% | 50% | 15% | 55% | 35% | 20% | 25% | 15% | 70% | 50% | 30% | 50% | 45% | 45% | 60% | 45% |

| Fish Caught | 0 | 6 | 1 | 4 | 1 | 5 | 1 | 0 | 2 | 1 | 8 | 4 | 2 | 6 | 5 | 6 | 5 | 5 |

| Fish Lost | 0 | 0 | 5 | 1 | 5 | 2 | 4 | 5 | 4 | 5 | 0 | 2 | 3 | 2 | 3 | 2 | 2 | 2 |

| Input Override | | | | | | | | | | | | | | | | | | |

| Perc. Control | 0.50 | 0.50 | 0.17 | 0.50 | 0.67 | 0.67 | 0.33 | 0.50 | 0.50 | 0.00 | 0.33 | 0.67 | 0.00 | 0.67 | 0.83 | 0.33 | 0.33 | 0.33 |

| Frustration | 0.50 | 0.17 | 1.00 | 0.50 | 0.67 | 0.50 | 0.33 | 0.50 | 0.17 | 1.00 | 0.67 | 0.83 | 0.50 | 0.50 | 0.33 | 0.83 | 0.50 | 0.50 |

| MI Conv. Rate | 100% | 95% | 5% | 55% | 35% | 95% | 30% | 95% | 15% | 5% | 50% | 90% | 30% | 65% | 40% | 30% | 65% | 45% |

| Pos. Feedback | 100% | 95% | 35% | 70% | 50% | 100% | 55% | 95% | 40% | 35% | 60% | 95% | 50% | 75% | 65% | 60% | 70% | 60% |

| Fish Caught | 0 | 8 | 2 | 6 | 3 | 7 | 5 | 8 | 2 | 2 | 4 | 7 | 3 | 7 | 4 | 4 | 5 | 4 |

| Fish Lost | 0 | 0 | 3 | 0 | 2 | 0 | 2 | 0 | 3 | 4 | 1 | 0 | 2 | 0 | 1 | 1 | 0 | 2 |

| Mit. Failure | | | | | | | | | | | | | | | | | | |

| Perc. Control | 0.50 | 0.67 | 0.00 | 0.33 | 0.67 | 0.33 | 0.33 | 0.67 | 0.33 | 0.17 | 0.00 | 0.67 | | 0.50 | 0.67 | 0.17 | 0.33 | 0.67 |

| Frustration | 0.33 | 0.17 | 1.00 | 0.33 | 0.33 | 0.50 | 0.33 | 0.67 | 0.50 | 1.00 | 0.83 | 0.00 | | 0.50 | 0.33 | 1.00 | 0.50 | 0.00 |

| MI Conv. Rate | 90% | 80% | 10% | 70% | 30% | 50% | 25% | 75% | 30% | 25% | 80% | 75% | | 55% | 15% | 40% | 85% | 60% |

| Pos. Feedback | 60% | 55% | 5% | 50% | 25% | 40% | 25% | 55% | 30% | 20% | 65% | 55% | | 50% | 15% | 25% | 55% | 45% |

| Fish Caught | 0 | 4 | 0 | 4 | 1 | 2 | 1 | 5 | 1 | 1 | 5 | 4 | | 3 | 1 | 1 | 4 | 3 |

| Fish Lost | 0 | 0 | 4 | 1 | 2 | 1 | 3 | 0 | 2 | 3 | 0 | 0 | | 1 | 3 | 2 | 0 | 1 |

| Ref. Condition | | | | | | | | | | | | | | | | | | |

| Perc. Control | 1.00 | 1.00 | 0.33 | 0.33 | 0.67 | 1.00 | 0.17 | 0.83 | 0.67 | 0.00 | 0.67 | 1.00 | 0.00 | 0.33 | 0.50 | 0.50 | 0.67 | 0.50 |

| Frustration | 0.17 | 0.00 | 1.00 | 0.50 | 0.50 | 0.17 | 0.67 | 0.33 | 0.67 | 1.00 | 0.17 | 0.00 | 0.83 | 0.67 | 0.33 | 0.83 | 0.50 | 0.17 |

| MI Conv. Rate | 85% | 85% | 55% | 60% | 50% | 85% | 25% | 100% | 65% | 0% | 70% | 80% | 15% | 25% | 35% | 65% | 75% | 60% |

| Pos. Feedback | 85% | 85% | 55% | 60% | 50% | 85% | 25% | 100% | 65% | 0% | 70% | 80% | 15% | 25% | 35% | 65% | 75% | 60% |

| Fish Caught | 0 | 6 | 3 | 5 | 4 | 8 | 1 | 8 | 4 | 0 | 6 | 6 | 1 | 2 | 2 | 5 | 7 | 4 |

| Fish Lost | 0 | 0 | 2 | 1 | 2 | 0 | 4 | 0 | 0 | 6 | 0 | 0 | 5 | 4 | 3 | 0 | 0 | 2 |

| Predicted | Fixed Effect | AIC | ML | LR | p |
|---|---|---|---|---|---|
| Perceived Control | Fish Lost + PAM Rate | 215.82 | −98.91 | 15.61 | <0.001 |
| | Fish Lost + Condition | 219.11 | −98.55 | 16.32 | 0.001 |
| | Fish Lost + Fish Caught | 226.70 | −104.35 | 4.72 | 0.030 |
| | Fish Lost | 229.43 | −106.71 | 24.05 | <0.001 |
| | Fish Caught | 232.12 | −108.06 | 21.36 | <0.001 |
| | Pos. Feedback | 233.27 | −108.63 | 20.21 | <0.001 |
| | MI Conv. Rate | 237.67 | −110.83 | 15.81 | <0.001 |
| | Fish Reel | 242.10 | −113.05 | 11.38 | 0.001 |
| | Fish Unreel | 245.62 | −114.81 | 7.86 | 0.005 |
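
The AIC, ML, and LR columns can be related to one another with a short calculation. The sketch below assumes that ML is the maximized log-likelihood, that AIC follows the usual definition 2k − 2·logLik, and that LR is twice the log-likelihood difference between nested models. The parameter count k = 9 and the function names are assumptions chosen for illustration (k = 9 happens to reproduce the reported AIC); they are not stated in the table.

```python
import math


def aic(log_lik: float, n_params: int) -> float:
    """Akaike information criterion: 2k - 2 * log-likelihood."""
    return 2 * n_params - 2 * log_lik


def lr_statistic(log_lik_full: float, log_lik_reduced: float) -> float:
    """Likelihood-ratio statistic for two nested models."""
    return 2 * (log_lik_full - log_lik_reduced)


def chi2_sf_df1(x: float) -> float:
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(x / 2))


# Values from the table: "Fish Lost" versus "Fish Lost + PAM Rate".
ll_fish_lost = -106.71
ll_fish_lost_pam = -98.91

lr = lr_statistic(ll_fish_lost_pam, ll_fish_lost)
print(round(lr, 2))                       # close to the reported 15.61 (inputs are rounded)
print(chi2_sf_df1(lr) < 0.001)            # agrees with the reported p < 0.001
print(round(aic(ll_fish_lost_pam, 9), 2)) # k = 9 is an assumed parameter count
```

The one-degree-of-freedom test applies only when a single parameter is added, as with PAM Rate; adding Condition introduces three parameters and would need a chi-square distribution with three degrees of freedom.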

| Predicted | Fixed Effect | Estimate | Std. Error | z Value | p |
|---|---|---|---|---|---|
| Perceived Control | PAM Rate | −7.86 | 2.14 | −3.68 | <0.001 |
| | Fish Lost | −1.39 | 0.27 | −5.11 | <0.001 |

| Predicted | Fixed Effect | AIC | ML | LR | p |
|---|---|---|---|---|---|
| Frustration | Fish Lost | 239.63 | −111.82 | 8.81 | 0.003 |
| | Fish Caught | 240.46 | −112.23 | 7.99 | 0.005 |
| | MI Conv. Rate | 242.49 | −113.25 | 5.95 | 0.015 |
| | Pos. Feedback | 244.20 | −114.10 | 4.24 | 0.039 |

| Predicted | Fixed Effect | Estimate | Std. Error | z Value | p |
|---|---|---|---|---|---|
| Frustration | Fish Lost | 0.62 | 0.21 | 2.96 | 0.003 |
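
One way to read the Fish Lost estimate is as an odds ratio. The sketch below assumes the estimate is on the log-odds scale of a cumulative link (proportional odds) model, which the ordinal response and the z statistic suggest but which the table itself does not state. The function names and the 1.96 multiplier for an approximate 95% Wald interval are illustrative choices.

```python
import math


def odds_ratio(estimate: float, delta: float = 1.0) -> float:
    """Multiplicative change in odds for a `delta`-unit change in the predictor."""
    return math.exp(estimate * delta)


def wald_interval(estimate: float, std_error: float, z: float = 1.96):
    """Approximate 95% Wald interval, returned on the odds-ratio scale."""
    return (math.exp(estimate - z * std_error),
            math.exp(estimate + z * std_error))


# Frustration ~ Fish Lost, values from the table above.
estimate, std_error = 0.62, 0.21

print(round(estimate / std_error, 2))  # Wald z, close to the reported 2.96
print(round(odds_ratio(estimate), 2))  # odds multiplier per additional fish lost
print(wald_interval(estimate, std_error))
```

Under these assumptions, each additional fish lost multiplies the odds of a higher frustration rating by roughly 1.9, and the approximate interval stays above 1.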

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jochumsen, M.; Hougaard, B.I.; Kristensen, M.S.; Knoche, H. Implementing Performance Accommodation Mechanisms in Online BCI for Stroke Rehabilitation: A Study on Perceived Control and Frustration. Sensors 2022, 22, 9051. https://doi.org/10.3390/s22239051
