1. Introduction

Robotics is evolving rapidly and has dramatically improved the efficiency of industrial production and the convenience of people’s lives. Motion planning is indispensable in robotics, which requires finding a feasible path from the initial state to the target state subject to obstacle avoidance constraints. Nowadays, motion planning has been widely applied to various robots, including but not limited to industrial robots [

1,

2], free-floating space robots [

3], rescue robots [

4], medical robots [

5], and autonomous vehicles [

6].

According to the research order and fundamental principles, various motion planning algorithms can be mainly divided into four categories: bionic algorithms, artificial potential field methods, grid-based searches, and sampling-based algorithms [

7]. The ant colony algorithm [

8], one of the representative bionic algorithms, draws a lesson from the behavior of ants exploring paths to find food, showing strong robustness. However, it has the problems of slow convergence and a poor quality of the solution when dealing with large-scale problems [

9]. The artificial potential field (APF) [

10] method assumes a gravitational force of the goal state and repulsive forces of the obstacles. By calculating their resultant force, the following motion state of the moving object can be determined. Though APF has a simple structure and a small amount of computation, it is faced with the disadvantages of a local minimum value, large path oscillations, and complex path searches between similar obstacles [

11]. The grid-based searches represented by A* [

12,

13] map motion planning problems into graphs and solve them in discrete state spaces. Although A* can return to the path with a minimal cost, the computation time and storage space of the data grow exponentially as the dimension of the state space increases.

Compared with grid-based searches, sampling-based algorithms avoid discretizing the state space in motion planning and efficiently perform in high-dimensional state spaces, such as Rapidly exploring Random Tree (RRT) [

14] and Probabilistic RoadMap (PRM) [

15]. Since RRT-based algorithms have the characteristics of a high search efficiency and low resource consumption, they have been frequently used to solve manipulators, autonomous surface vehicles, and other robot motion planning problems [

16,

17,

18]. Furthermore, many variants of RRT have emerged. RRT-connect [

19] simultaneously expands two trees from the initial and target states and then connects the two at the appropriate position, speeding up the pathfinding process. Kang et al. proposed a triangular inequality-based rewiring method for the RRT-connect algorithm [

20]. The improved algorithm shows a shorter path length than RRT-connect. The above algorithms have probabilistic completeness, i.e., as the number of iterations approaches infinity, the probability of finding a feasible solution tends towards one. However, none of these methods can ensure optimality. RRT* [

21] solves the problem by adopting the ChooseParent and Rewire procedures, which provide asymptotic optimality. An algorithm with asymptotic optimality means that as the number of iterations approaches infinity, the algorithm guarantees that the probability of finding an optimal solution approaches one [

22].

Although RRT* can improve the initial solution to the optimum, it has a slow convergence rate due to the large amount of computation caused by the continuous increase in the number of vertices. Therefore, enhancing the convergence rate of RRT* is of great significance and has attracted extensive attention. Jonathan et al. proposed Informed-RRT* [

23] based on direct sampling. This method defines the acceptable sampling space as a hyper-ellipsoid and samples directly from it. By narrowing the sampling region, Informed-RRT* may converge to the optimal path rapidly. However, when the relevant hyper-ellipsoid exceeds the region of the motion planning problem, the algorithm will not be applicable. Quick-RRT* [

24] uses triangular inequality to improve the ChooseParent and Rewire procedures and converges faster than RRT*. The downside is that it wastes many computing resources on useless vertices, which do not help in finding the optimal path. MOD-RRT* [

25] introduces a re-planning procedure to improve the performance of the algorithm, which is used to modify unfeasible paths to generate high-quality paths. GMR-RRT* [

26] uses Gaussian mixture regression (GRM). This algorithm learns the human driving path and finds key features through GRM to form a probability distribution to guide sampling. Jun et al. proposed Feedback-RRT* (F-RRT*) [

27], with a data-driven risk network and feedback module. F-RRT* can use the information extracted from situation data to constrain the growth of the random tree and make biased adjustments to improve planning efficiency.

This paper proposes Metropolis-RRT* (M-RRT*) for the motion planning of mobile robots. Based on the principle of the Metropolis acceptance criterion, on one hand, in the initial path estimation phase of the algorithm, an asymptotic vertex acceptance criterion is developed, which preferentially accepts vertices close to the target state. On the other hand, in the optimal path search phase, a nonlinear dynamic vertex acceptance criterion is also developed, which preferentially accepts vertices that may lower the current optimal path cost. Therefore, M-RRT* substantially reduces the number of vertices required for planning, thereby obtaining the initial path more efficiently and converging to the optimal path faster.

The M-RRT* algorithm proposed in this study is applied to solve the motion planning problem of mobile robots, with the following main contributions:

Propose an asymptotic vertex acceptance criterion in the initial path estimation phase of the algorithm to effectively reduce the time of finding the initial path and make the algorithm start searching for the optimal path earlier.

Propose a nonlinear dynamic vertex acceptance criterion in the optimal path search phase of the algorithm. This criterion can reduce the number of vertices in the algorithm that are not capable of improving the current path so as to rapidly converge to the optimal path.

The experimental results in three common types of environments show that the proposed algorithm has an outstanding performance. It takes less time to find the initial path and has a fast convergence rate in the cluttered environment and the regular environment. Due to the complexity of the maze, M-RRT* does not improve much in the initial path estimation phase, but more importantly, its convergence speed still increases significantly.

The rest of this paper is structured as follows.

Section 2 introduces the definition of the motion planning problem and related algorithms.

Section 3 explains the proposed algorithm in detail.

Section 4 analyzes the simulation results compared with RRT*, Informed-RRT*, and Q-RRT*.

Section 5 summarizes this paper and looks forward to future research plans.

2. Problem Definition and Related Work

This section defines the motion planning problem and details the basic algorithm of M-RRT*. To understand the comparative analysis in

Section 4, this section also provides an explanation of Informed-RRT* and Q-RRT*.

2.1. Problem Definition

When a robot performs a given task, the robot itself or a certain part moves from the initial state to the target state. Motion planning can provide the robot with a collision-free path from the initial state to the target state, which is called a feasible path. As mentioned in the previous section, motion planning has been applied to various robots, and the planning results directly affect the efficiency of the robot in completing a given task. Planners require motion planning not only to be able to search for an optimal path but also to make the planning process as fast as possible. The mathematical definition of motion planning is described in detail below.

Let ⊆ ℝd be a d-dimensional configuration space, obs ⊂ be the obstacle region, and free = cl(\obs) be the obstacle-free region, where d ∈ ℕ, d ≥ 2, and cl(·) refers to the closure of the set. xinit ∈ free is the initial state, and goal ⊂ free is the goal region, where the motion planning will be completed if the object reaches this region. The continuous function σ: [0, 1] ↦ is called a path. A path σ: [0, 1] ↦ free is collision-free if ∀τ ∈ [0, 1], σ(τ) ∈ free.

Definition 1. (Feasible motion planning) Given a motion planning problem {xinit, goal, obs}, find a collision-free path σ, where σ(0) = xinit, σ(1) ∈ goal. This path is called a feasible path.

Definition 2. (Optimal motion planning) Given a motion planning problem {xinit, goal, obs}, find a feasible path σ that minimizes the cost c(σ*), i.e., c(σ*) = min{c(σ*): σ ∈ Σ}, where c(σ) is the cost of a feasible path σ in measured by the Euclidean distance, and Σ is the set of all feasible paths.

Definition 3. (Fast motion planning) Given a motion planning problem {xinit, goal, obs}, find the optimal feasible path σ* in the shortest possible time.

2.2. RRT*

RRT* is a sampling-based motion planning algorithm. Since RRT* is an extended algorithm of RRT, a brief description of its basic algorithm is required first. RRT gradually explores the collision-free path from the initial state xinit which is the only vertex with no edges. In each iteration, xrand is randomly sampled in the collision-free space free. Then, we find the vertex xnearest closest to the sample xrand from the tree based on the Euclidean metric. After finding xnearest, extend a distance from xnearest towards xrand to obtain a new vertex xnew, and this distance η is called the step size. Determine whether the path from xnearest to xnew collides with obstacles based on the environmental information. If a collision occurs, the path is discarded, and the next iteration proceeds. If no collision occurs, it is a feasible path, and this path and the vertex xnew are added to the tree. Repeat the above steps until a feasible path is found and output. As shown in Algorithm 1, the major difference from RRT is that RRT* contains the ChooseParent and Rewire procedures, which makes it asymptotically optimal.

| Algorithm 1 RRT* |

| 1: | G ← (V, E); V ← xinit; |

| 2: | for i = 1 to n do |

| 3: | xrand ← Sample(i); |

| 4: | xnearest ← Nearest(V, xrand); |

| 5: | (xnew, σ) ← Steer(xnearest, xrand); |

| 6: | if CollisionFree(σ) then |

| 7: | Xnear ← Near(V, xnew); |

| 8: | (xparent, σparent) ← ChooseParent(Xnear, xnearest, xnew, σ); |

| 9: | V ← AddVertex(xnew); |

| 10: | E ← AddEdge(xparent, xnew); |

| 11: | G ← Rewire(G, xnew, Xnear); |

| 12: | end if |

| 13: | end for |

| 14: | return G; |

In the ChooseParent procedure, RRT* searches for a vertex in a hypersphere of a specific radius centered at

xnew such that the path through this vertex to

xnew has the lowest cost. It is then used as the parent vertex of

xnew, as shown in

Figure 1a. In the Rewire procedure, the vertices in the hypersphere are represented by

xnear in turn. Compare the cost of the current path to

xnear with the cost of the path through

xnew to

xnear until every vertex in the hypersphere has been compared. During each comparison, if the path through

xnew is less costly, change its wiring, as shown in

Figure 1b. Algorithm 2 shows the ChooseParent procedure, and Algorithm 3 shows the Rewire procedure.

| Algorithm 2 ChooseParent(Xnear, xnearest, xnew, σnearest) |

| 1: | xmin ← xnearest; |

| 2: | σmin ← σnearest; |

| 3: | cmin ← Cost(xmin) + Cost(σmin); |

| 4: | for each xnear ∈ Xnear do |

| 5: | σ ← Connect(xnear, xnew); |

| 6: | c ← Cost(xnear) + Cost(σ); |

| 7: | if c < cmin then |

| 8: | if CollisionFree(σ) then |

| 9: | xmin ← xnear; |

| 10: | σmin ← σ; |

| 11: | cmin ← c; |

| 12: | end if |

| 13: | end if |

| 14: | end for |

| 15: | return (xmin, σmin); |

| Algorithm 3 Rewire(G, xnew, Xnear) |

| 1: | for each xnear ∈ Xnear do |

| 2: | σ ← Connect(xnew, xnear); |

| 3: | if Cost(xnew) + Cost(σ) < Cost(xnear) then |

| 4: | if CollisionFree(σ) then |

| 5: | G ← Reconnect(G, xnew, Xnear, σ); |

| 6: | end if |

| 7: | end if |

| 8: | end for |

| 9: | returnG; |

The following briefly describes the basic procedures used in the RRT* algorithm.

Sample: Returns a random sample from .

Nearest: Given a graph G = (V, E) and a state x, it returns the vertex closest to x according to the given Euclidean distance function.

Steer: Given two states xs, xt ∈ , it extends xs to xt by a given distance to obtain the state xd ∈ . Then, it returns xd and the path from xs to xt.

CollisionFree: Checks whether the given path σ is a feasible path.

Near: Given a graph G = (V, E) and a state x, it sets a hypersphere of a given radius centered at x and returns the set Xnear of vertices in V that are contained in this hypersphere.

AddVertex: Given a state x, it adds x to the graph.

AddEdge: Given two states xs, xt ∈ , it adds the path from xs to xt to the graph.

Connect: Given two states xs, xt ∈ , it returns the path from xs to xt.

Reconnect: Given a graph G = (V, E), two states xs, xt ∈ , and a path σ from xs to xt, it replaces the parent vertex of xt with xs and adds σ to graph G.

RRT searches for a feasible path by imitating a randomly grown tree, but this algorithm cannot find the optimal path. Unlike RRT, RRT* has asymptotic optimality by introducing the ChooseParent and Rewire procedures. However, due to the frequent running of these two procedures, the convergence speed of RRT* is slow.

2.3. Informed-RRT*

Informed-RRT* has the same procedure as RRT* until the initial path is found. In the optimal path search phase, Informed-RRT* constructs a hyper-ellipsoid with focal points

xinit and

xgoal. It then samples directly inside the hyper-ellipsoid to find the optimal path. The sampling region of the set of vertices satisfies

where

x is the random sample,

σ* is the best path with the minimum cost at the current time,

is the cost of

σ*, and

is the Euclidean distance between

x and

y.

Figure 2 shows the hyper-ellipsoid set by Informed-RRT*. When the current best solution is updated, its cost

is also updated. Therefore, the sampling region, i.e., the hyper-ellipsoid, gradually becomes smaller, and the probability of the sample being on the optimal path is greater.

The direct sampling method significantly increases the convergence rate of In-formed-RRT*. However, Informed-RRT* does not improve the efficiency of finding the initial solution. Moreover, although Informed-RRT* converges faster than RRT*, its efficiency decreases when the region of the hyper-ellipsoid exceeds the configuration space.

2.4. Q-RRT*

Quick-RRT* (Q-RRT*) makes adjustments to the ChooseParent and Rewire procedures. In the ChooseParent procedure, RRT* searches for the potential parent vertex of

xnew from

Xnear, while the search scope of Quick-RRT* also includes the ancestors of

Xnear. Quick-RRT* defines a depth in advance, which is used to set the number of vertices backtracked when searching for ancestors. In

Figure 3a, through the ChooseParent procedure,

xnew selects

xparent as the parent vertex, and

xparent is the ancestor vertex of

xnearest, with a depth of 2. Similar to the ChooseParent procedure, the Rewire procedure also considers the ancestor of the vertex

xnew, as shown in

Figure 3b.

Q-RRT* essentially improves the structure of the random tree. Compared with RRT*, the ChooseParent and Rewire procedures of Q-RRT* make the path more optimized. Although this algorithm can improve the structure, it needs to search more vertices. If most of the vertices do not meet the connection conditions, it means that the algorithm wastes a lot of time.

3. Methods

This section describes the proposed M-RRT* algorithm in detail. Most algorithms based on RRT* are divided into two phases: finding the initial path and finding the optimal path. The initial path is the first feasible path found by the algorithm. Since the initial path of the sampling-based algorithm is not optimal, it is necessary to continue sampling to find the optimal path.

Similarly, M-RRT* includes the initial path estimation and optimal path search phases. Inspired by the Metropolis acceptance criterion, we design different vertex acceptance criteria in the above two phases to calculate the retention probability of the new vertex generated by each iteration. M-RRT* uses the asymptotic vertex acceptance criterion in the initial path estimation phase. These enable the algorithm to obtain the initial solution faster. In the optimal path search phase, M-RRT* uses the nonlinear dynamic vertex acceptance criterion to improve the efficiency of finding the optimal solution. The following briefly introduces the Metropolis acceptance criterion.

3.1. Metropolis Acceptance Criterion

The core of the Metropolis acceptance criterion is the limited acceptance of inferior solutions. It is generally used in the simulated annealing algorithm to calculate the acceptance probability of a solution. The probability of a new solution being accepted is given by

where exp(·) refers to an exponential function,

T is temperature, defined as the control parameter,

E is internal energy, defined as the objective function, and

. The energy of the current state

n of the system is

E(

n), and the energy of the next state is

E(

n + 1). The algorithm tries to gradually decrease the value of the objective function as the temperature

T decreases until

E tends to the global minimum, just like the solid annealing process. A new solution will change the internal energy at the corresponding temperature, and the size of the change is

. It can be seen from (2) that if

the new solution is accepted. If

the new solution is accepted by probability

The following describes the effect of the variation of

T on the candidate solutions.

If T is a fixed value, the probability of accepting the candidate solution that reduces the value of E is greater than the probability of accepting the solution that increases the value.

If T gradually decreases, the probability of accepting the candidate solution that increases the value of E also decreases.

If T tends to zero, the algorithm only accepts the candidate solution that reduces the value of E.

Generally, a large T will perform a global search, but the computational cost will increase. While a small T will search locally and make a fast convergence rate, it tends to trap the algorithm in a local optimum.

3.2. Asymptotic Vertex Acceptance Criterion

The ChooseParent and Rewire procedures make RRT* asymptotically optimal, but frequent collision detection and searching for neighboring vertices increase the algorithm’s complexity. Therefore, this paper introduces the asymptotic vertex acceptance criterion into the initial path estimation phase. After M-RRT* samples a new vertex, the impact of this vertex on finding the initial solution is quantified as a prediction value through a prediction function, and the probability of retaining this vertex is calculated according to the predicted value. If the new vertex is not retained, M-RRT* does not perform the subsequent calculation steps of this iteration and directly starts the next iteration. This method enables the random tree to grow to the target region faster; thus, an initial feasible solution is found more quickly.

To facilitate the description, we denote the target state by

xgoal, the new vertex generated by the nth iteration by

xn, and the vertex closest to the

xgoal, i.e., the vertex with the smallest Euclidean distance to

xgoal, by

xpeak. We can describe the process of finding an initial path as making

xpeak asymptotically approach

xgoal until it reaches the location of

xgoal. The admissible heuristic estimate

is cost-to-go, i.e., the cost to go from any state to the goal. The prediction function for

xn is as follows,

where

is the Euclidean distance from

xn to

xgoal and

is the Euclidean distance from

xpeak to

xgoal. Similar to the Metropolis acceptance criterion, we can judge whether

xn is closer to

xgoal by

C(

n). As shown in

Figure 4, one of the following two cases occurs during the

nth iteration.

If

,

xn lies in a spherical range with

xgoal as the center and

(

xpeak) as the radius; this means that

xn is closer to

xgoal than

xpeak. Therefore,

xn is likely to play a positive role in finding the initial feasible path, at which point

xn is retained with a probability of one and is defined as

xpeak, as shown in

Figure 4a.

If

,

xn is outside the spherical range, and it is further away from

xgoal than

xpeak. In this case, the probability of retaining

xn must be calculated based on the predicted value, as shown in

Figure 4b.

It is worth noting that at the beginning of M-RRT*, the only vertex in the state space,

xinit, which is the start state, is at the same position as

xpeak. To quickly estimate the initial feasible solution, the heuristic value of each extended vertex preferably shows a downward trend. However, when

,

xn must be accepted with a certain probability to prevent the algorithm from falling into a local optimum. Therefore, this paper proposes the asymptotic vertex acceptance criterion combined with the prediction function. The probability of accepting

xn is

where

is the Euclidean distance from

xinit to

xgoal. Compared with

xpeak, it is clear that the probability of acceptance is smaller if

xn is further away from the goal, and vice versa. Since

(

xinit) is the cost of the theoretical optimal path, it has a reference value for the calculation of the acceptance probability of vertices. In this paper, we define

(

xinit) as the control parameter.

In this phase, the probability of accepting each expanded vertex is greater than zero; thus, M-RRT* is capable of jumping out of the local optimum, but in some complex environments, it may take longer. We design a method to jump out of the local optimum quickly. If the value of (xpeak) does not change after using Equation (4) to calculate the probability 20 times, the algorithm is considered to fall into the local optimum. The acceptance probability of the subsequent extended new vertex is one until the value of (xpeak) changes. Then, the random tree is closer to the goal, indicating that the algorithm jumps out of the local optimum and continues to use Equation (4).

The asymptotic vertex acceptance criterion makes M-RRT* obtain the initial feasible path faster. At the same time, the algorithm performs the optimal path search phase earlier and improves the overall efficiency.

3.3. Nonlinear Dynamic Vertex Acceptance Criterion

Given an optimal path σ from xinit through x ∈ ; to xgoal, g(x) is equal to the cost of the optimal path from xinit to x, h(x) is equal to the cost of the optimal path from x to xgoal, and the cost of σ is . and are admissibility heuristics for and , respectively. In the problem of solving the optimal path length in ℝd, the Euclidean distance applies to both heuristics.

An algorithm based on RRT* will continue to iterate to search for the optimal path after completing the process of finding the initial feasible path. When xn is generated at the nth iteration, there exists a feasible path σ* with the minimum cost at the current time. To make σ* approach the optimal path asymptotically, M-RRT* not only estimates the path cost from the new vertex xn to xgoal but also considers the path cost from xinit to xn. Next, we will introduce the nonlinear dynamic vertex acceptance criterion in detail, which is applied to the optimal path search phase of M-RRT*.

In

Figure 5,

is the Euclidean distance from

xinit to

xn,

is the Euclidean distance from

xn to

xgoal,

σ* is the best path with the minimum cost at the current time, and

is the cost of

σ*. Obviously,

is the cost of the theoretically optimal path through

xn.

If , it indicates that the cost of any path through xn cannot be less than . Then, the probability of accepting xn is , and M-RRT* starts the next iteration directly. Therefore, it removes vertices that are entirely impossible to improve the path length, avoiding wasting time on these useless vertices.

If

, we must refer to the actual cost

g(

xn) from

xinit to

xn and establish the prediction function

where

g(

xn) is the actual cost of the path from

xinit to

xn, and

is the estimated cost from

xn to

xgoal, which is the Euclidean distance. Similar to the Metropolis acceptance criterion, we predict whether the new vertex

xn can improve the current optimal path

σ* by

C(

n). As shown in

Figure 6,

xn is generated by extending the distance

η from the nearest vertex

xnearest on the random tree; thus, the actual cost

As mentioned in the previous section,

is the estimated cost of

xn to

xgoal. One of the following two cases occurs during the nth iteration.

If , the predicted value is lower than the current minimum cost . Therefore, xn is likely to play a positive role in finding the optimal path, and the probability of retaining xn is one.

If , the probability of retaining xn must be calculated based on the predicted value.

In the case of

, the probability of retaining

xn cannot be zero, because after the ChooseParent and Rewire procedures, the actual cost from

xinit to

xn may be reduced. In this case,

xn has the possibility of optimizing the path, but, of course, the larger the value of the prediction function, the smaller the possibility. Combined with the prediction function, the probability of accepting a vertex is

where

is the current minimum cost,

n is the current number of iterations, and

N is the number of iterations when

σ* is found. Therefore,

N changes dynamically with the update of

σ*, and the next iteration number

n after the update is equal to

N + 1. The nonlinear dynamic cost

increases from

, and then the algorithm gradually reduces the probability of accepting vertices that make

. The nonlinear dynamic vertex acceptance criterion makes the algorithm sample in an extensive range in the early stage to prevent falling into the local optimum. As the number of iterations increases, the retained samples are more targeted, enabling the algorithm to quickly converge and find the optimal path. Moreover, there is always a probability of global sampling, allowing M-RRT* to explore a wider area while converging quickly, ensuring its asymptotic optimality.

3.4. M-RRT*

Compared with RRT*, M-RRT* adopts the asymptotic vertex acceptance criterion and nonlinear dynamic vertex acceptance criterion in finding the initial path and optimal path, respectively. It not only preserves the probability of global sampling but also selectively accepts vertices conducive to finding solutions and removes many useless vertices. M-RRT* prunes the random tree, significantly improving computational efficiency and reducing memory usage. Algorithm 4 shows the pseudocode of M-RRT*.

| Algorithm 4 M-RRT* |

| 1: | G ← (V, E); V ← xinit; xpeak ← xinit; N ← 0; |

| 2: | (xpeak) ← Dis(xpeak, xgoal); |

| 3: | (xinit) ← Dis(xinit, xgoal); |

| 4: | for i = 1 to n do |

| 5: | xrand ← Sample(i); |

| 6: | xnearest ← Nearest(V, xrand); |

| 7: | (xn, σ) ← Steer(xnearest, xrand); |

| 8: | if CollisionFree(σ) then |

| 9: | if N = 0 then |

| 10: | AVAC(xn, (xpeak), (xinit)); |

| 11: | else |

| 12: | NDVAC(xn, xnearest, n, N, σ*); |

| 13: | Xnear ← Near(V, xn); |

| 14: | (xparent, σparent) ← ChooseParent(Xnear, xnearest, xn, σ); |

| 15: | V ← AddVertex(xn); |

| 16: | E ← AddEdge(xparent, xn); |

| 17: | G ← Rewire(G, xn, Xnear); |

| 18: | (σ*, N) ← NearGoal(G, xn, n); |

| 19: | end if |

| 20: | end for |

| 21: | return G; |

The following briefly describes the basic procedures used in the M-RRT* algorithm.

Dis: Given two states xs, xt ∈ , it returns the Euclidean distance between xs and xt.

AVAC: Asymptotic vertex acceptance criterion.

NDVAC: Nonlinear dynamic vertex acceptance criterion.

NearGoal: Given a graph G = (V, E), a state x, and the current number of iterations, if x is within a given range around xgoal, this procedure returns the feasible path σ* with the least cost and the current number of iterations N.

Figure 7 shows the flow chart of M-RRT*, and

lout is the set path length to which to converge. The ChooseParent and Rewire procedures have been described in related work, so they are not shown in detail in the figure. The NearGoal procedure has also been introduced in this section.

4. Simulations

Since RRT* is the basic algorithm of the algorithm proposed in this paper, Informed-RRT* is widely recognized as an efficient RRT-based algorithm, and Q-RRT* is a newly proposed correlation algorithm, this paper uses them for comparative analysis. Q-RRT* has the best efficiency when the depth is 2, so 2 is chosen as the depth in this paper.

4.1. Environment Configuration and Indicator Description

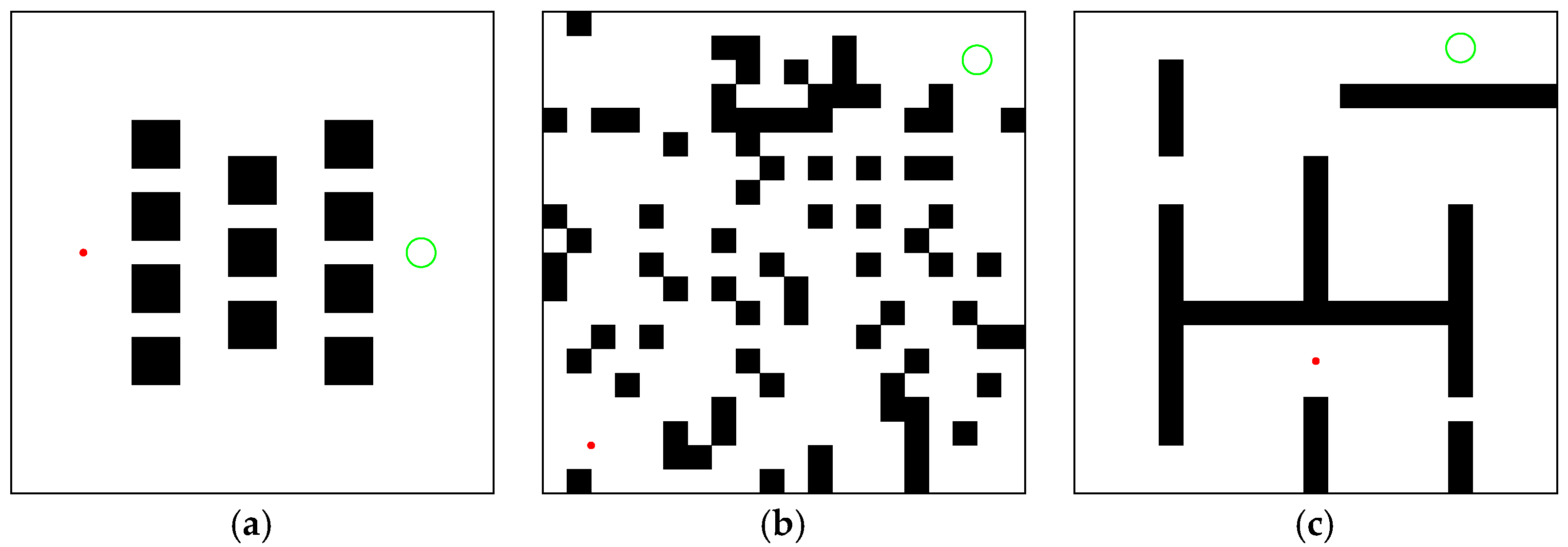

M-RRT* is compared with the above three algorithms in three environments of the same size of 100 × 100. The simulation environments are shown in

Figure 8. Each algorithm was run 100 times because of the randomness of the sampling-based algorithms. The system and resource characteristics used in the simulation implementation are shown in

Table 1.

In this section, all algorithms were simulated with the same parameters. Two evaluation indicators are used to compare the performances of the algorithms. Firstly, some problems require finding a feasible solution for motion planning in a short time; hence, this paper compares the time of the four algorithms to find the initial path. We define this time as the index ‘tinit’. Secondly, four algorithms have asymptotic optimality; thus, they can all find the optimal path as the number of iterations approaches infinity. Due to the randomness of the sampling-based algorithm, this paper first determines the optimal path length in each environment and then compares the time it takes for the algorithms to converge to an approximate optimal path. We define this time as the index ‘T5%’. The length of the approximate optimal solution is ‘1.05∗loptimal’, where ‘loptimal’ is the length of the optimal solution.

Again, because of randomness, the statistical results of 100 runs of each algorithm are described by boxplots, and the specific values are shown in tables. In a boxplot, a line in the middle of the box represents the median of the data. The bottom and top of the box are the upper and lower quartiles of the data, respectively. Therefore, the height of the box reflects the fluctuation of the data to some extent. The median can show the performance of the randomness algorithms. In addition, the paper also describes the mean, maximum, and minimum to prove the efficiency and stability of the algorithms. The difference between the upper and lower quartiles is called the interquartile range (IQR). Values that are 1.5 times IQR larger than the upper quartile, or 1.5 times IQR less than the lower quartile, are classified as outliers and shown as rhombus in the boxplot.

4.2. Regular Environment

The regular environment is shown in

Figure 8a. In

Figure 9, the paths generated by RRT* (

tinit = 0.1093,

T5% = 1.093), Informed-RRT* (

tinit = 0.0781,

T5% = 0.453), Q-RRT* (

tinit = 0.6876,

T5% = 0.7059), and M-RRT* (

tinit = 0.0625,

T5% = 0.1406) are shown. In the regular environment,

loptimal = 70.9268. The grey lines in each figure are the paths explored by the algorithm through the extended vertices, and the red line is the approximate optimal path. RRT* randomly sampled the entire state space; hence, its vertices grew in any direction. Unlike RRT*, once the initial path was found, Informed-RRT* sampled only inside the hyper-ellipsoid, with the start and target states as the foci. Therefore, most of the vertices of Informed-RRT* appear to be inside an ellipse. Q-RRT* optimizes the structure of the random tree so that the path between its vertices and the initial vertex is as straight as possible. Since M-RRT* preferentially accepted vertices that are conducive to searching for solutions and had a limited acceptance of vertices that provide inferior solutions, it generated fewer vertices than the other two algorithms.

The 100 simulation results of

tinit and

T5% are described by boxplots, as shown in

Figure 10 and

Figure 11, respectively.

Table 2 counts the specific numerical values of the simulation results. For the convenience of the comparison, the data in the table are generally displayed to the fourth decimal place. If the size of the same indicator for the algorithms is very close, it shows the decimal place where their numbers are different. The two minimum values of the three algorithms are the same because the length of the initial path is less than 1.05∗

loptimal. Due to the regular distribution of obstacles in this environment and the small distance between the initial and goal states, the above situation is common for sampling-based algorithms. Although RRT* and Informed-RRT* have identical procedures for finding initial solutions, they are less stable, as shown in

Figure 10. Since Q-RRT* consumes more computing resources on the optimized path, it takes a long time to find the initial path. In addition, M-RRT* takes less time to find the initial solution. It is clear from

Figure 11 that M-RRT* converges faster than the other three algorithms. Based on the above analysis, M-RRT* outperforms RRT*, Informed-RRT*, and Q-RRT* in the regular environment.

4.3. Cluttered Environment

The cluttered environment is shown in

Figure 8b. In

Figure 12, the paths generated by RRT* (

tinit = 0.337,

T5% = 3.1296), Informed-RRT* (

tinit = 0.346,

T5% = 1.6216), Q-RRT* (

tinit = 1.0265,

T5% = 4.6131), and M-RRT* (

tinit = 0.2852,

T5% = 0.8666) are shown. In the cluttered environment,

loptimal = 114.8326. RRT* required many vertices to explore the state space fully, while M-RRT* generated the fewest vertices to converge to the approximate optimal solution.

The 100 simulation results of

tinit and

T5% are described by boxplots, respectively.

Table 3 counts the specific numerical values of the simulation results. As shown in

Figure 13, in the cluttered environment, the median of M-RRT* is still the smallest, but the median of RRT* is larger than that of Informed-RRT*. Therefore, M-RRT* can obtain the initial solution earlier, while the other two algorithms are not stable. Q-RRT* still takes the longest time to find the initial path. As can be seen from

Figure 14, although the efficiency of Informed-RRT* searching for the optimal solution has been improved compared to RRT*, M-RRT* is the most effective algorithm. Q-RRT* can continuously search ancestor vertices to optimize paths, but these paths often collide in the cluttered environments. Thus, Q-RRT* wastes a lot of time trying to change the structure of the tree instead, and it performs the worst in this environment. According to the running results of two performance indicators, M-RRT* has an outstanding performance in the cluttered environment.

4.4. Maze

The maze is shown in

Figure 8c. In

Figure 15, the paths generated by RRT* (

tinit = 0.2163,

T5% = 4.1688), Informed-RRT* (

tinit = 0.375,

T5% = 14.4523), Q-RRT* (

tinit = 0.8908,

T5% = 1.5375), and M-RRT* (

tinit = 0.0647,

T5% = 1.3433) are shown. In the maze,

loptimal = 138.6089. It can be seen that Informed-RRT* always performed global sampling like RRT*. Thus, Informed-RRT* has no advantage in this environment. Q-RRT* optimized paths more efficiently in the maze. On the contrary, due to the two vertex acceptance criteria proposed in this paper, M-RRT* rejected a lot of inferior vertices and obtained the solution with fewer vertices.

Figure 16 and

Figure 17 show 100 simulation results of

tinit and

T5%.

Table 4 counts the specific numerical values of the simulation results. Since maze-type environments tend to trap the algorithm in a local optimum, M-RRT* requires more extensive sampling when estimating the initial solution. It can be seen from

Figure 16 that Q-RRT* takes the longest time, and although the medians of the other three algorithms are relatively close, the time needed for M-RRT* to obtain the initial solution is slightly less. As shown in

Figure 17, M-RRT* obviously has the fastest convergence rate, while Informed-RRT* has the worst performance. The region of the hyper-ellipsoid set by Informed-RRT* in searching for the optimal solution in this environment is larger than the state space, which causes it to converge even slower than RRT*. The convergence speed of Q-RRT* is also very fast, so this paper indirectly proves that when Q-RRT* has enough space to search for ancestor vertices without collision, its efficiency will be greatly improved. Although RRT* performs worse, the minimum values of its two indicators are the lowest. This is because, in one of the experiments on RRT*, the random vertices of the algorithm found the path very quickly, which is accidental. By combining

Figure 16 and

Figure 17 and

Table 4, it can be seen that M-RRT* performs significantly better in the maze.

5. Conclusions

There has been an increase in research on sampling-based motion planning algorithms in recent years. RRT* is a commonly used optimal algorithm, but this method has the problems of a low search efficiency and a slow convergence speed. M-RRT* is proposed to solve the motion planning problem of mobile robots. First, this research proposes an asymptotic vertex acceptance criterion in the initial path estimation phase of M-RRT*, which can effectively reduce the time of finding the initial path and make the algorithm start searching for the optimal path earlier. Secondly, this research proposes a nonlinear dynamic vertices acceptance criterion in the optimal path search phase of M-RRT*. This criterion preferentially accepts vertices that may improve the current path so as to rapidly converge to the optimal path.

Although M-RRT* is a promising algorithm, it can only rely on global sampling to jump out of the local optimum when finding initial solutions in some special environments. Moreover, this paper mainly studies static motion planning, but the actual environment is dynamic, so we should take into account the real-time nature of motion planning in a further work. Since the motion planning of the manipulators has complex constraints, a small number of path points can reduce its calculation. We apply the algorithm proposed in this paper to the motion planning of the manipulator as the next research content.