Enhancement and Restoration of Scratched Murals Based on Hyperspectral Imaging—A Case Study of Murals in the Baoguang Hall of Qutan Temple, Qinghai, China

Abstract

1. Introduction

- (1) We propose a method that combines line information enhancement with a pretrained triplet domain translation network to restore scratch lesions in mural images. Principal component analysis (PCA) is applied to the hyperspectral data, and the first principal component is enhanced with high-pass filtering. Principal component fusion then replaces the original first principal component with the enhanced one, and the inverse principal component transform restores the original data dimension, yielding an enhanced hyperspectral mural image. The pretrained triplet domain translation network is then used to repair the scratch lesions. Finally, a Butterworth high-pass filter is applied to the network output to produce sharper restoration results with higher visual quality. This study thus helps fill a gap in the existing literature on the virtual restoration of mural scratches.

- (2) A 2D gamma function correction algorithm for unevenly illuminated images is applied to the murals to raise local low luminance, enhance the information in dark areas, and provide more accurate restoration results.

- (3) The proposed method can serve as an auxiliary reference and support for the physical restoration of murals: it gives conservators a view of the scratch-free appearance of a mural before the restoration process begins. More broadly, this work offers new ideas for the digital conservation of wall paintings at World Heritage sites.
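The enhancement chain in contribution (1) (PCA, high-pass filtering of the first principal component, principal component fusion, and the inverse transform) can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the authors' implementation: the high-pass kernel and the weighting of the filtered detail are not specified here, so a 3x3 Laplacian sharpening kernel and a hypothetical `gain` parameter are assumed, and `pca_fusion_enhance` is an illustrative name.

```python
import numpy as np


def pca_fusion_enhance(cube: np.ndarray, gain: float = 1.0) -> np.ndarray:
    """Sharpen line information in a hyperspectral cube by replacing PC1.

    cube: array of shape (rows, cols, bands).
    gain: hypothetical weight of the high-pass detail added to PC1 (assumption).
    """
    rows, cols, bands = cube.shape
    pixels = cube.reshape(-1, bands).astype(np.float64)

    # Forward PCA: center the spectra and diagonalize the band covariance.
    mean = pixels.mean(axis=0)
    centered = pixels - mean
    cov = np.cov(centered, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]          # sort components by variance
    eigvecs = eigvecs[:, order]
    scores = centered @ eigvecs                 # (pixels, components)

    # High-pass filter PC1 with a 3x3 Laplacian (assumed kernel choice).
    pc1 = scores[:, 0].reshape(rows, cols)
    padded = np.pad(pc1, 1, mode="edge")
    laplacian = (4 * padded[1:-1, 1:-1]
                 - padded[:-2, 1:-1] - padded[2:, 1:-1]
                 - padded[1:-1, :-2] - padded[1:-1, 2:])
    enhanced_pc1 = pc1 + gain * laplacian       # f - lap(f): edge sharpening

    # Principal component fusion: swap the enhanced PC1 into the scores.
    scores[:, 0] = enhanced_pc1.ravel()

    # Inverse PCA: eigvecs is orthonormal, so its transpose inverts it.
    restored = scores @ eigvecs.T + mean
    return restored.reshape(rows, cols, bands)
```

With `gain=0.0` the function reduces to a forward and inverse PCA and returns the input unchanged, which is a convenient sanity check. Because every component is retained, only the replaced first component alters the data, and the sharpening is unaffected by the sign ambiguity of the eigenvectors.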

2. Materials and Methods

2.1. Materials

2.1.1. Murals

2.1.2. Data Acquisition

2.2. Methods

- (1) Data denoising using radiometric correction;

- (2) Enhancement of mural line information based on principal component transformation, high-pass filtering, and principal component fusion;

- (3) Enhancement of local dark information in the mural using multiscale Gaussian functions and a 2D gamma function;

- (4) Extraction and repair of scratches in the murals using a pretrained triplet domain translation network and a Butterworth high-pass filter.

2.2.1. Data Preprocessing
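Step (1) of the workflow names radiometric correction as the preprocessing step but does not give its form. A common choice for hyperspectral scanners, assumed here rather than confirmed by this paper, is flat-field calibration against white and dark reference frames, R = (raw - dark) / (white - dark). The function name `radiometric_correct` and the reference-frame shapes are hypothetical.

```python
import numpy as np


def radiometric_correct(raw: np.ndarray, white: np.ndarray, dark: np.ndarray,
                        eps: float = 1e-6) -> np.ndarray:
    """Convert raw digital numbers to relative reflectance (assumed method).

    raw:   (rows, cols, bands) raw cube of the mural.
    white: white-reference frame, shape (cols, bands) or (rows, cols, bands).
    dark:  dark-current frame with the same shape as white.
    """
    raw = raw.astype(np.float64)
    white = white.astype(np.float64)
    dark = dark.astype(np.float64)
    # Subtract the sensor dark current, then normalize by the dynamic range
    # between the dark and white references; eps guards against division by 0.
    # A (cols, bands) reference broadcasts over every scan line of the cube.
    return (raw - dark) / np.maximum(white - dark, eps)
```

Reference frames of shape (cols, bands) suit push-broom sensors, where each detector column has its own response; a full (rows, cols, bands) frame also broadcasts correctly.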

2.2.2. Line Information Enhancement

2.2.3. Enhancement of Local Darkness
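Step (3) of the workflow and contribution (2) rely on a multiscale Gaussian illumination estimate followed by a 2D gamma correction. The sketch below assumes the widely used formulation associated with Liu et al. (2016): the illumination I(x, y) is an equally weighted sum of Gaussian-blurred copies of the HSV value channel F, the exponent is γ = α^((m - I)/m) with m the mean of I, and the corrected value is 255 (F/255)^γ. The scales (15, 80, 250), α = 0.5, and the function names are assumptions, not values confirmed by this paper.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur with edge padding (NumPy only)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-x ** 2 / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def gamma_2d_correct(rgb, sigmas=(15, 80, 250), alpha=0.5):
    """Brighten dark regions of an 8-bit RGB image with an adaptive 2D gamma."""
    rgb = rgb.astype(np.float64)
    v = rgb.max(axis=2)                          # HSV value channel
    # Multiscale Gaussian estimate of illumination, equal weight per scale.
    illum = sum(gaussian_blur(v, s) for s in sigmas) / len(sigmas)
    m = max(illum.mean(), 1e-6)
    # gamma < 1 where illumination is below the mean (brighten), > 1 above it.
    gamma = alpha ** ((m - illum) / m)
    v_out = 255.0 * (v / 255.0) ** gamma
    # Scale R, G, B by the same factor: only V changes, hue/saturation do not.
    scale = np.divide(v_out, v, out=np.zeros_like(v), where=v > 0)
    return np.clip(np.rint(rgb * scale[..., None]), 0, 255).astype(np.uint8)
```

Scaling all three channels by one factor changes only the HSV value, so hue and saturation are preserved without an explicit color-space round trip. On a uniformly lit image the estimated illumination equals its mean, γ becomes 1, and the image passes through unchanged.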

2.2.4. The Pretrained Model and Butterworth High-Pass Filter for Recovery
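The final step applies a Butterworth high-pass filter to the output of the pretrained network. The transfer function below is the standard H(u, v) = 1 / (1 + (D0 / D(u, v))^(2n)), where D is the distance from the center of the shifted spectrum. How the filtered detail is combined with the restored image is not stated in this section, so a high-frequency-emphasis rule g = f + k * highpass(f) is assumed; `d0`, `order`, `k`, and the function names are hypothetical. For color images, apply `sharpen` to each channel separately.

```python
import numpy as np


def butterworth_highpass(shape, d0=30.0, order=2):
    """Centered Butterworth high-pass transfer function H(u, v)."""
    rows, cols = shape
    u = np.arange(rows) - rows // 2              # fftshift puts DC at n // 2
    v = np.arange(cols) - cols // 2
    dist = np.sqrt(u[:, None] ** 2 + v[None, :] ** 2)
    # H = 1 / (1 + (D0 / D)^(2n)); at D = 0 this tends to 0 (DC removed).
    with np.errstate(divide="ignore", over="ignore"):
        h = 1.0 / (1.0 + (d0 / dist) ** (2 * order))
    h[dist == 0] = 0.0
    return h


def sharpen(channel, d0=30.0, order=2, k=1.0):
    """High-frequency emphasis: add k times the Butterworth high-pass detail."""
    spectrum = np.fft.fftshift(np.fft.fft2(channel.astype(np.float64)))
    filtered = spectrum * butterworth_highpass(channel.shape, d0, order)
    detail = np.real(np.fft.ifft2(np.fft.ifftshift(filtered)))
    return np.clip(channel + k * detail, 0, 255)
```

Compared with an ideal high-pass filter, the Butterworth response rolls off smoothly around `d0`, which limits ringing artifacts near strong edges; larger `order` values make the transition sharper.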

3. Results

3.1. Enhancement of Mural Line Information

3.2. Enhancement of Local Darkness Information for Murals

3.3. Restoration of Mural Scratches

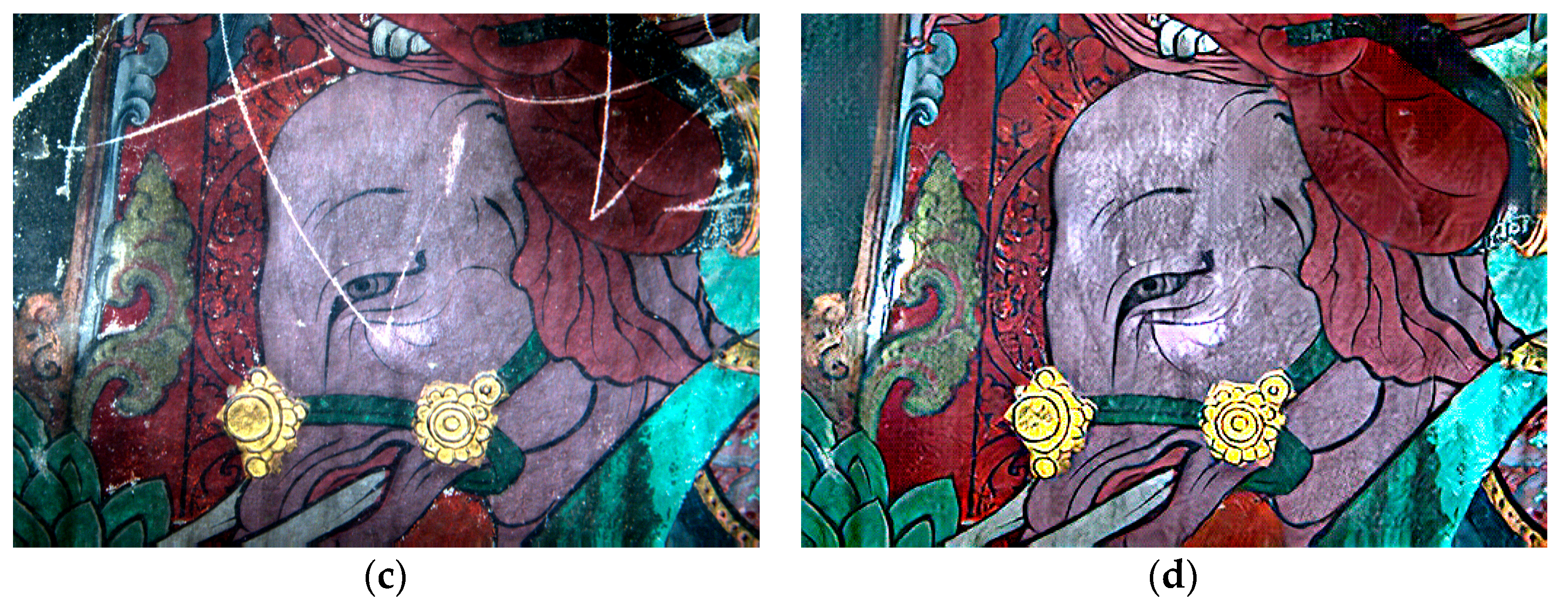

3.4. Visual Comparison

4. Discussion

4.1. Combination of Different Steps

4.2. Comparison of Scratched Mural Repair Methods

4.3. Applicability of the Proposed Method

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Bertrand, L.; Janvier, P.; Gratias, D.; Brechignac, C. Restore world’s cultural heritage with the latest science. Nature 2019, 570, 164–165.

- Pietroni, E.; Ferdani, D. Virtual Restoration and Virtual Reconstruction in Cultural Heritage: Terminology, Methodologies, Visual Representation Techniques and Cognitive Models. Information 2021, 12, 167.

- Pei, S.; Zeng, Y.-C.; Chang, C.-H. Virtual Restoration of Ancient Chinese Paintings Using Color Contrast Enhancement and Lacuna Texture Synthesis. IEEE Trans. Image Process. 2004, 13, 416–429.

- Baatz, W.; Fornasier, M.; Markowich, P.A.; Schönlieb, C.B. Inpainting of ancient Austrian frescoes. In Bridges Leeuwarden: Mathematics, Music, Art, Architecture, Culture; The Bridges Organization: London, UK, 2008; pp. 163–170.

- Cornelis, B.; Ružić, T.; Gezels, E.; Dooms, A.; Pizurica, A.; Platisa, L.; Martens, M.; De Mey, M.; Daubechies, I. Crack detection and inpainting for virtual restoration of paintings: The case of the Ghent Altarpiece. Signal Process. 2013, 93, 605–619.

- Hou, M.; Zhou, P.; Lv, S.; Hu, Y.; Zhao, X.; Wu, W.; He, H.; Li, S.; Tan, L. Virtual restoration of stains on ancient paintings with maximum noise fraction transformation based on the hyperspectral imaging. J. Cult. Herit. 2018, 34, 136–144.

- Purkait, P.; Ghorai, M.; Samanta, S.; Chanda, B. A Patch-Based Constrained Inpainting for Damaged Mural Images. In Digital Hampi: Preserving Indian Cultural Heritage; Springer: Singapore, 2017; pp. 205–223.

- Mol, V.R.; Maheswari, P.U. The digital reconstruction of degraded ancient temple murals using dynamic mask generation and an extended exemplar-based region-filling algorithm. Herit. Sci. 2021, 9, 137.

- Wang, H.; Li, Q.; Jia, S. A global and local feature weighted method for ancient murals inpainting. Int. J. Mach. Learn. Cybern. 2019, 11, 1197–1216.

- Cao, N.; Lyu, S.; Hou, M.; Wang, W.; Gao, Z.; Shaker, A.; Dong, Y. Restoration method of sootiness mural images based on dark channel prior and Retinex by bilateral filter. Herit. Sci. 2021, 9, 30.

- Pathak, D.; Krahenbuhl, P.; Donahue, J.; Darrell, T.; Efros, A.A. Context Encoders: Feature Learning by Inpainting. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2536–2544.

- Nogales, A.; Delgado-Martos, E.; Melchor, Á.; García-Tejedor, J. ARQGAN: An evaluation of generative adversarial network approaches for automatic virtual inpainting restoration of Greek temples. Expert Syst. Appl. 2021, 180, 115092.

- Gupta, V.; Sambyal, N.; Sharma, A.; Kumar, P. Restoration of artwork using deep neural networks. Evol. Syst. 2021, 12, 439–446.

- Huang, R.; Feng, W.; Fan, M.; Guo, Q.; Sun, J. Learning multi-path CNN for mural deterioration detection. J. Ambient Intell. Humaniz. Comput. 2020, 11, 3101–3108.

- Wang, N.; Wang, W.; Hu, W.; Fenster, A.; Li, S. Thanka Mural Inpainting Based on Multi-Scale Adaptive Partial Convolution and Stroke-Like Mask. IEEE Trans. Image Process. 2021, 30, 3720–3733.

- Li, J.; Wang, H.; Deng, Z.; Pan, M.; Chen, H. Restoration of non-structural damaged murals in Shenzhen Bao’an based on a generator–discriminator network. Herit. Sci. 2021, 9, 6.

- Wan, Z.; Zhang, B.; Chen, D.; Zhang, P.; Chen, D.; Liao, J.; Wen, F. Bringing Old Photos Back to Life. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 2744–2754.

- GB/T 30237-2013; Ancient Wall Painting Deterioration and Legends. Chinese National Standard for the Protection of Cultural Relics. Cultural Relics Press: Beijing, China, 2008.

- Stevens, J.R.; Resmini, R.G.; Messinger, D.W. Spectral-Density-Based Graph Construction Techniques for Hyperspectral Image Analysis. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5966–5983.

- Lazcano, R.; Madroñal, D.; Salvador, R.; Desnos, K.; Pelcat, M.; Guerra, R.; Fabelo, H.; Ortega, S.; Lopez, S.; Callico, G.; et al. Porting a PCA-based hyperspectral image dimensionality reduction algorithm for brain cancer detection on a manycore architecture. J. Syst. Arch. 2017, 77, 101–111.

- Azimbeik, M.; Badr, N.S.; Zadeh, S.G.; Moradi, G. Graphene-based high pass filter in terahertz band. Optik 2019, 198, 163246.

- Das, S.; Krebs, W. Sensor fusion of multispectral imagery. Electron. Lett. 2000, 36, 1115–1116.

- Liu, Z.; Wang, D.; Liu, Y.; Liu, X. Adaptive correction algorithm for illumination inhomogeneous images based on 2D gamma function. J. Beijing Univ. Technol. 2016, 36, 191–196.

- Land, E.H. An alternative technique for the computation of the designator in the retinex theory of color vision. Proc. Natl. Acad. Sci. USA 1986, 83, 3078–3080.

- Fuwen, L.; Weiqi, J.; Wei, C.; Yang, C.; Xia, W.; Lingxue, W. Global color image enhancement algorithm based on Retinex model. J. Beijing Univ. Technol. 2010, 8, 947–951.

- Banic, N.; Loncaric, S. Light Random Sprays Retinex: Exploiting the Noisy Illumination Estimation. IEEE Signal Process. Lett. 2013, 20, 1240–1243.

- Lee, S.; Kwon, H.; Han, H.; Lee, G.; Kang, B. A Space-Variant Luminance Map based Color Image Enhancement. IEEE Trans. Consum. Electron. 2010, 56, 2636–2643.

- Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 694–711.

- Everingham, M.; Eslami, S.M.A.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. Comput. Sci. 2014.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- Shen, J.; Chan, T.F. Mathematical Models for Local Nontexture Inpaintings. SIAM J. Appl. Math. 2001, 62, 1019–1043.

- Chan, T.F.; Shen, J. Nontexture Inpainting by Curvature-Driven Diffusions. J. Vis. Commun. Image Represent. 2001, 12, 436–449.

- Criminisi, A.; Perez, P.; Toyama, K. Object removal by exemplar-based inpainting. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003.

| Environment | Parameters |
|---|---|
| Operating system | Windows 10 (Microsoft, Redmond, WA, USA) |
| GPU | NVIDIA RTX 2080 (NVIDIA, Santa Clara, CA, USA) |
| CPU | Intel Core i7-9700K @ 3.60 GHz (8 CPUs) (Intel, Santa Clara, CA, USA) |
| RAM | 16 GB |

| Study Area | Evaluation Indicator | Without Line Information Enhancement or Butterworth High-Pass Filtering | Without Local Dark Enhancement or Butterworth High-Pass Filtering | Without Butterworth High-Pass Filtering | Complete Method |
|---|---|---|---|---|---|
| Area 1 | Average gradient | 10.1506 | 10.8247 | 14.1354 | 28.0683 |
| | Edge strength | 96.8508 | 99.6460 | 131.4485 | 168.5789 |
| | Spatial frequency | 23.3682 | 37.0902 | 53.5409 | 56.3064 |
| Area 2 | Average gradient | 7.9620 | 10.5215 | 17.4626 | 30.0952 |
| | Edge strength | 75.4469 | 94.9784 | 157.6848 | 179.4221 |
| | Spatial frequency | 16.6812 | 43.2561 | 44.5785 | 48.2505 |
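The ablation table compares three no-reference sharpness indicators. Their formulas are not given in this section, so the sketch below uses commonly cited definitions (forward-difference average gradient, mean Sobel gradient magnitude as edge strength, and row/column spatial frequency); these are assumptions and may differ in normalization from the authors' exact versions. All three operate on a single grayscale channel.

```python
import numpy as np


def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) over forward differences."""
    f = img.astype(np.float64)
    dx = f[:-1, 1:] - f[:-1, :-1]    # horizontal forward difference
    dy = f[1:, :-1] - f[:-1, :-1]    # vertical forward difference
    return np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0))


def edge_strength(img):
    """Mean Sobel gradient magnitude (one common definition, assumed)."""
    f = np.pad(img.astype(np.float64), 1, mode="edge")
    # 3x3 Sobel responses built from shifted views of the padded image.
    gx = (f[:-2, 2:] + 2 * f[1:-1, 2:] + f[2:, 2:]) \
        - (f[:-2, :-2] + 2 * f[1:-1, :-2] + f[2:, :-2])
    gy = (f[2:, :-2] + 2 * f[2:, 1:-1] + f[2:, 2:]) \
        - (f[:-2, :-2] + 2 * f[:-2, 1:-1] + f[:-2, 2:])
    return np.mean(np.hypot(gx, gy))


def spatial_frequency(img):
    """sqrt(RF^2 + CF^2), each normalized by its number of differences."""
    f = img.astype(np.float64)
    rf = np.sqrt(np.mean((f[:, 1:] - f[:, :-1]) ** 2))   # row frequency
    cf = np.sqrt(np.mean((f[1:, :] - f[:-1, :]) ** 2))   # column frequency
    return np.sqrt(rf ** 2 + cf ** 2)
```

Higher values of all three indicators mean stronger edges and more fine detail, which is the sense in which the complete method scores highest in both study areas.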

| Method | Average Score of Area 1 | Average Score of Area 2 |
|---|---|---|
| TV | 7.01 | 6.89 |
| CDD | 7.54 | 7.27 |
| Criminisi | 5.23 | 5.03 |
| Proposed Method | 9.12 | 9.07 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, P.; Hou, M.; Lyu, S.; Wang, W.; Li, S.; Mao, J.; Li, S. Enhancement and Restoration of Scratched Murals Based on Hyperspectral Imaging—A Case Study of Murals in the Baoguang Hall of Qutan Temple, Qinghai, China. Sensors 2022, 22, 9780. https://doi.org/10.3390/s22249780

Sun P, Hou M, Lyu S, Wang W, Li S, Mao J, Li S. Enhancement and Restoration of Scratched Murals Based on Hyperspectral Imaging—A Case Study of Murals in the Baoguang Hall of Qutan Temple, Qinghai, China. Sensors. 2022; 22(24):9780. https://doi.org/10.3390/s22249780

Chicago/Turabian Style: Sun, Pengyu, Miaole Hou, Shuqiang Lyu, Wanfu Wang, Shuyang Li, Jincheng Mao, and Songnian Li. 2022. "Enhancement and Restoration of Scratched Murals Based on Hyperspectral Imaging—A Case Study of Murals in the Baoguang Hall of Qutan Temple, Qinghai, China" Sensors 22, no. 24: 9780. https://doi.org/10.3390/s22249780

APA Style: Sun, P., Hou, M., Lyu, S., Wang, W., Li, S., Mao, J., & Li, S. (2022). Enhancement and Restoration of Scratched Murals Based on Hyperspectral Imaging—A Case Study of Murals in the Baoguang Hall of Qutan Temple, Qinghai, China. Sensors, 22(24), 9780. https://doi.org/10.3390/s22249780