Investigating User Proficiency of Motor Imagery for EEG-Based BCI System to Control Simulated Wheelchair

Abstract

1. Introduction

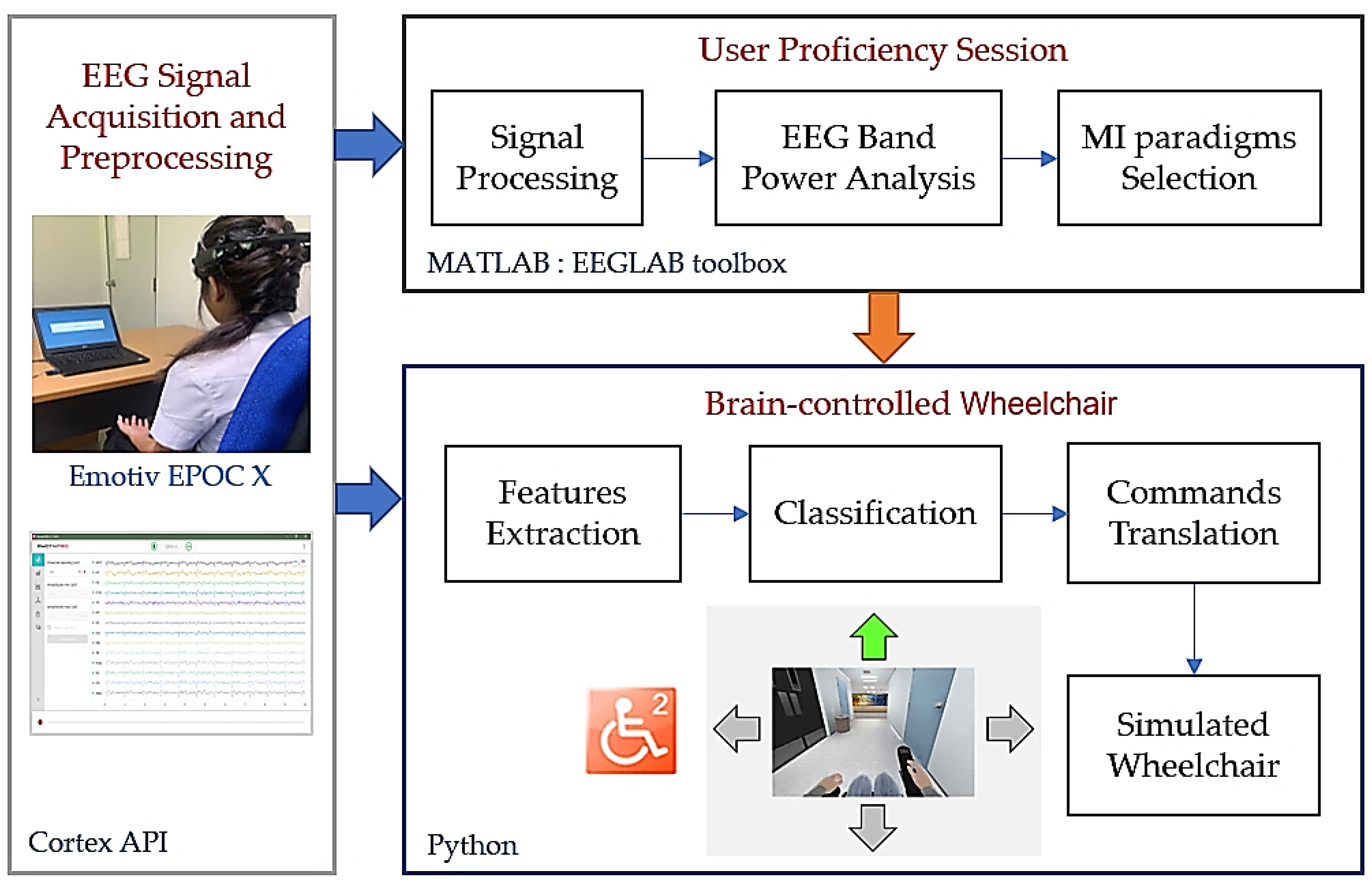

2. Materials and Methods

2.1. Proposed Paradigms and Commands

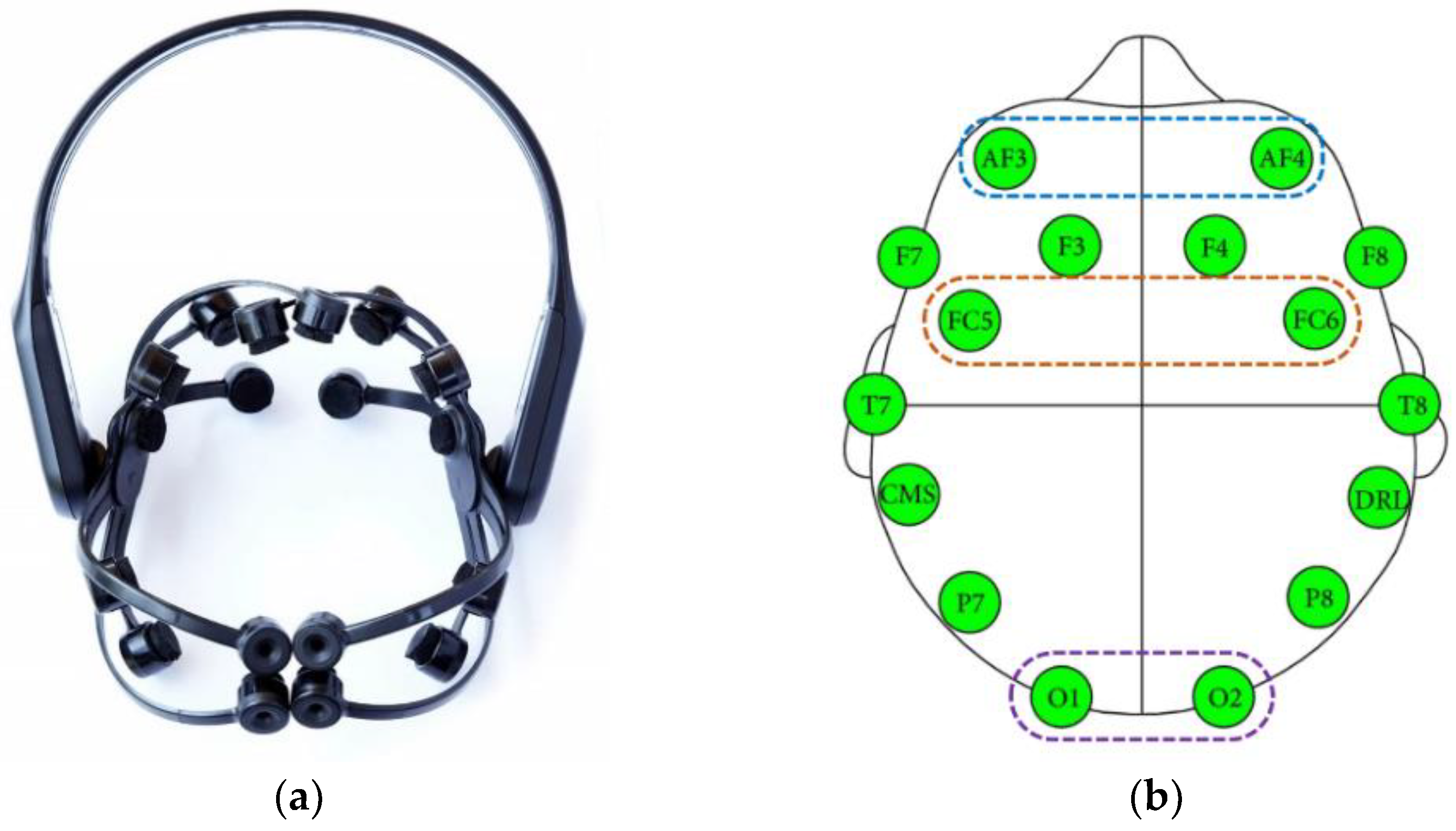

2.2. EEG Acquisition and Preprocessing

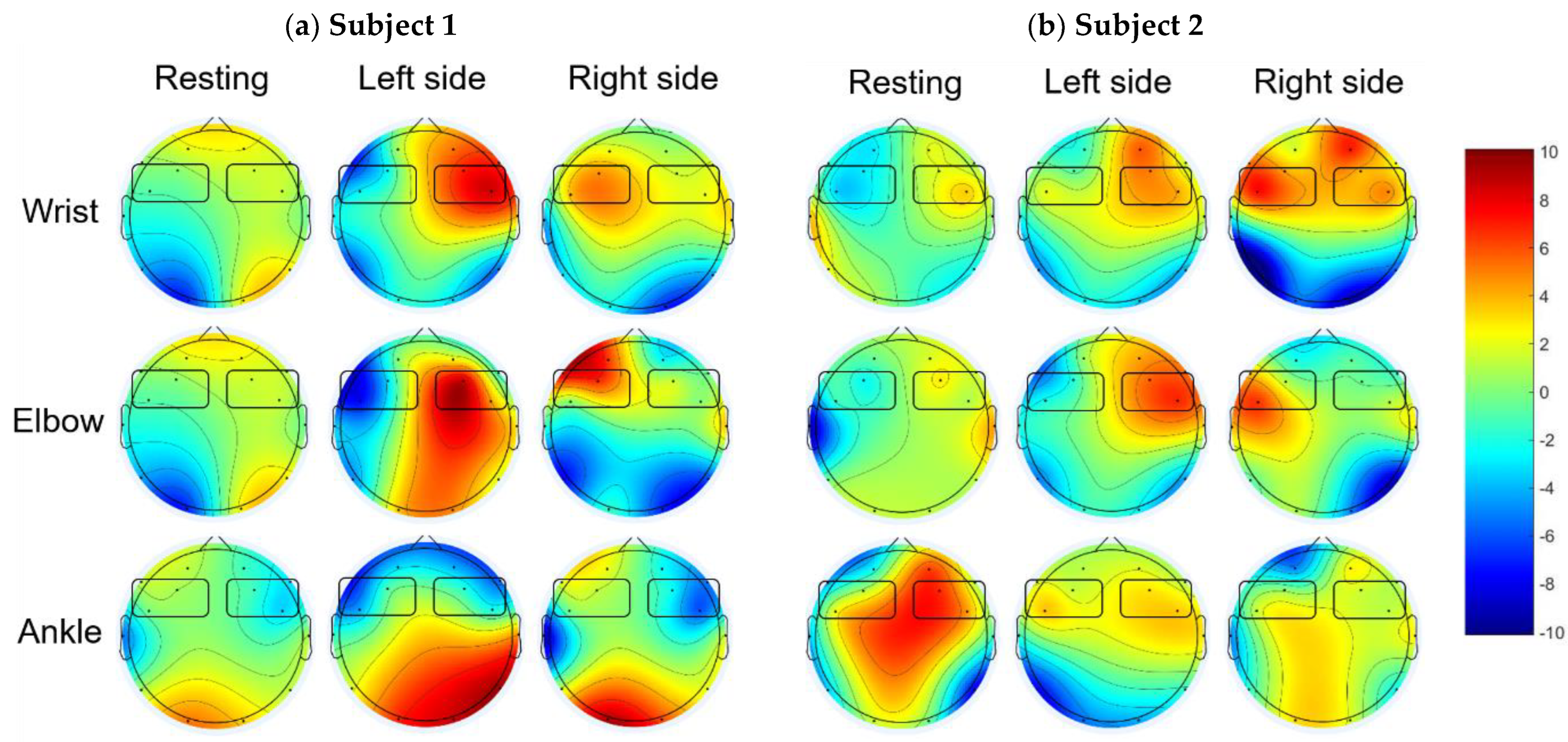

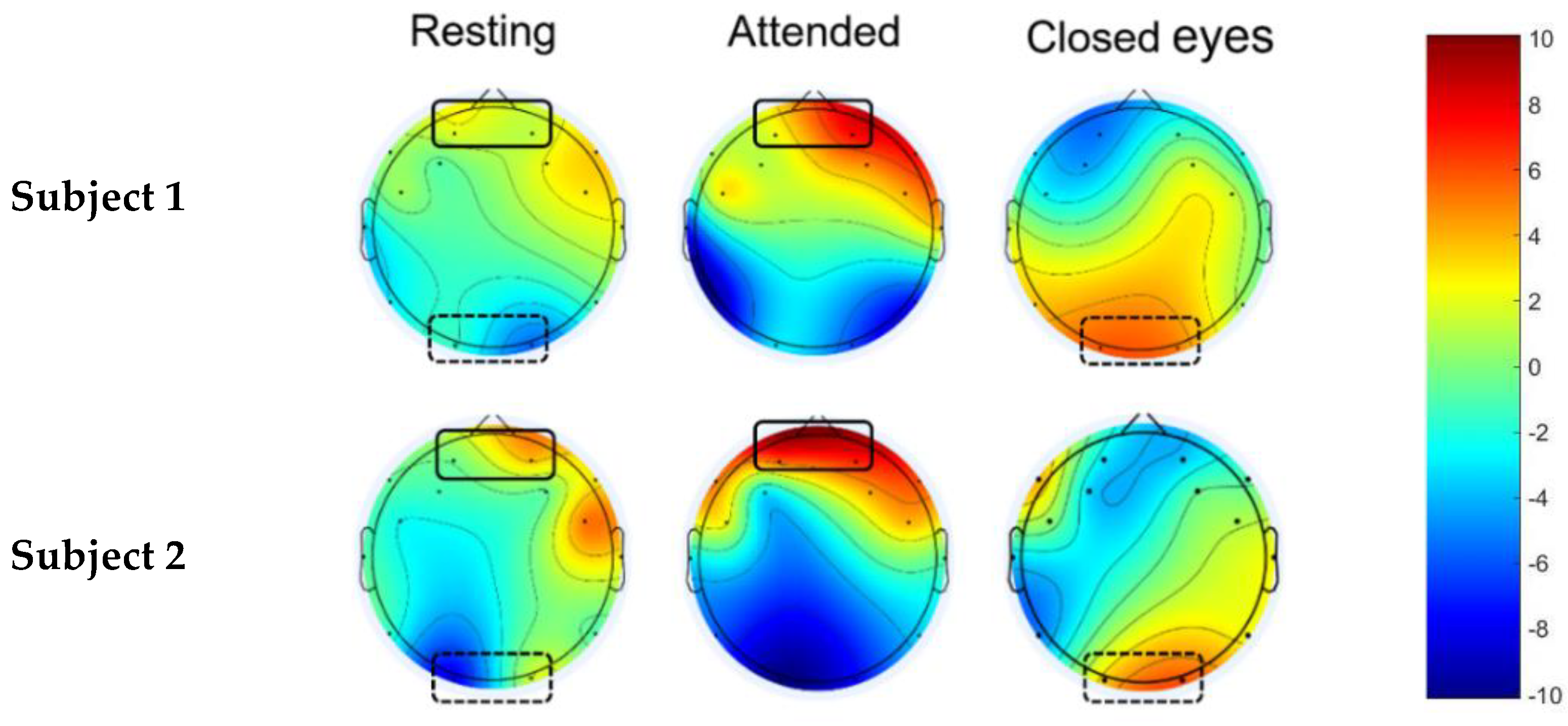

2.3. Observations of EEG Alpha Power with Different Tasks

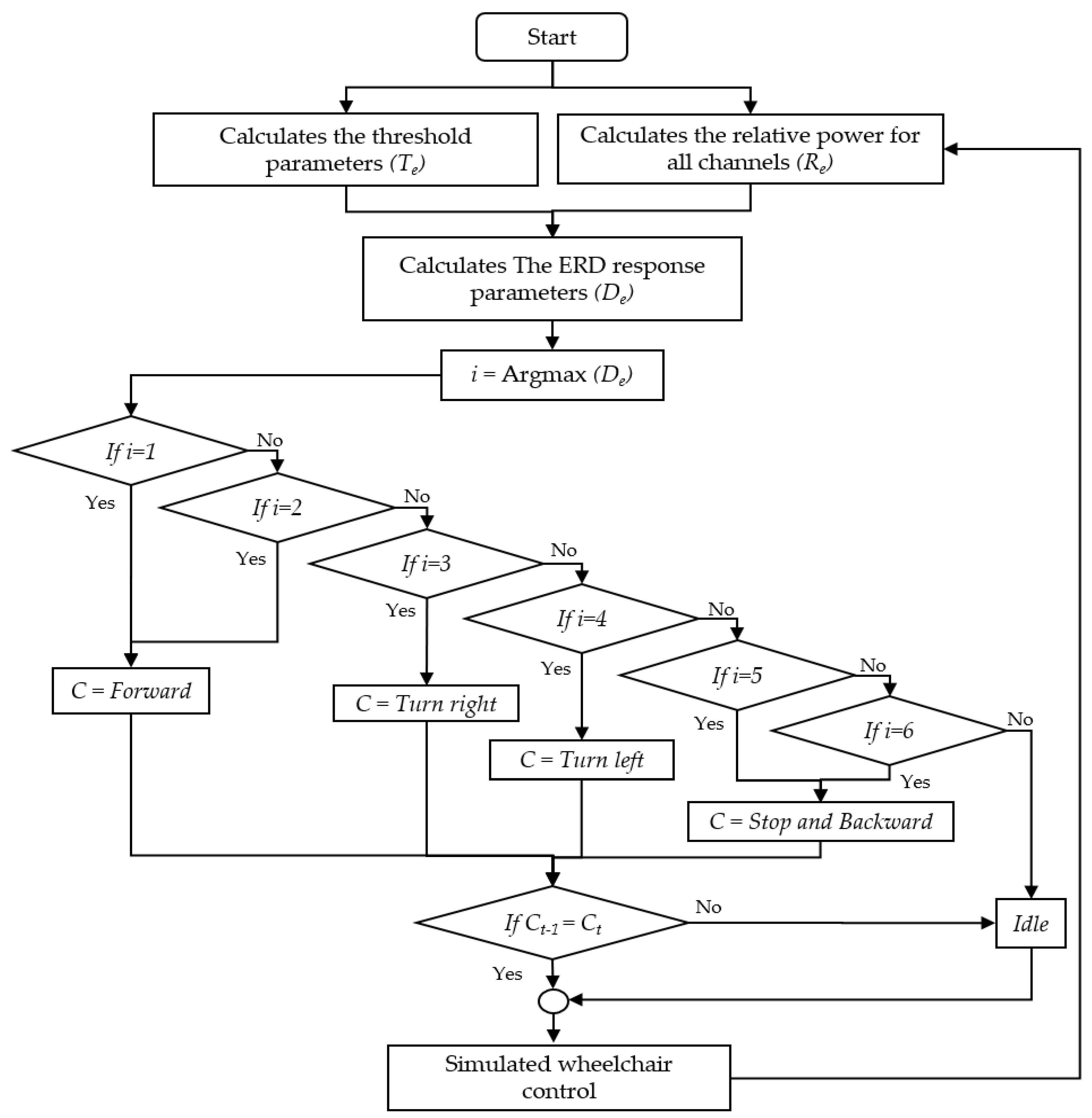

3. Proposed BCI for Simulated Wheelchair Control

- if = 1 or = 2, C = “Forward”

- if = 3, C = “Turn right”

- if = 4, C = “Turn left”

- if = 5 or = 6, C = “Stop and Backward”

- Otherwise, C = “Idle”
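The decision rules above amount to a lookup from an integer code to an output command C. The following is a minimal sketch of that lookup, not the authors' implementation. The symbol of the decision variable is missing from the source, so the name `k`, the function name `output_command`, and the table name `RULES` are all assumptions made for illustration.

```python
# Hypothetical names throughout: the source does not give the symbol of the
# decision variable, so it is called `k` here for illustration only.
RULES = {
    1: "Forward",
    2: "Forward",
    3: "Turn right",
    4: "Turn left",
    5: "Stop and Backward",
    6: "Stop and Backward",
}


def output_command(k: int) -> str:
    """Return the output command C for decision value k; any other value is Idle."""
    return RULES.get(k, "Idle")


if __name__ == "__main__":
    for k in range(0, 8):
        print(k, "->", output_command(k))
```

A dictionary with a default keeps the "Otherwise" branch explicit: any value outside 1 to 6 falls through to "Idle".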

4. Experiments and Results

4.1. Experiment I: Performance of Using Recommended Paradigm

4.2. Experiment II: Performance of the Proposed BCI System for Simulated Wheelchair Control

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Commands | Actions | Output Commands |
|---|---|---|
| 1 | Attention to the arrow | Forward |
| 2 | Imagined left limb movement | Turn Left |
| 3 | Imagined right limb movement | Turn Right |
| 4 | Closed eyes | Stop and Backward |

| Subjects | Recommended Limb Movement Paradigm (Left) | Recommended Limb Movement Paradigm (Right) |
|---|---|---|
| 1 | Elbow | Wrist |
| 2 | Elbow | Elbow |
| 3 | Elbow | Ankle |
| 4 | Elbow | Elbow |
| 5 | Wrist | Elbow |
| 6 | Elbow | Elbow |
| 7 | Elbow | Elbow |
| 8 | Elbow | Elbow |
| 9 | Ankle | Elbow |
| 10 | Wrist | Wrist |
| 11 | Wrist | Ankle |
| 12 | Elbow | Elbow |

| Sequence No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Commands | Left | Right | Right | Left | Left | Right | Right | Left | Left | Right | Right | Left |

Average Classification Accuracy (%), Left and Right Sides of Motor Imagery Paradigms

| Subjects | Wrists | Elbows | Ankles | Recommended (Table 2) |
|---|---|---|---|---|
| 1 | 83.3 | 83.3 | 75.0 | 87.5 |
| 2 | 79.2 | 87.5 | 70.8 | 87.5 |
| 3 | 70.8 | 75.0 | 79.2 | 83.3 |
| 4 | 75.0 | 79.2 | 83.3 | 83.3 |
| 5 | 70.8 | 91.7 | 75.0 | 91.7 |
| 6 | 87.5 | 83.3 | 83.3 | 87.5 |
| 7 | 62.5 | 75.0 | 70.8 | 79.2 |
| 8 | 83.3 | 75.0 | 62.5 | 79.2 |
| 9 | 75.0 | 79.2 | 75.0 | 83.3 |
| 10 | 75.0 | 75.0 | 75.0 | 79.2 |
| 11 | 75.0 | 83.3 | 79.2 | 83.3 |
| 12 | 66.7 | 79.2 | 70.8 | 79.2 |
| Mean ± S.D. | 75.3 ± 7.20 | 80.6 ± 5.43 | 75.0 ± 5.89 | 83.7 ± 4.14 |
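The Mean ± S.D. row can be recomputed from the twelve per-subject accuracies in the table. The sketch below does this with Python's standard library. It assumes the reported S.D. is the sample standard deviation (n − 1 denominator), which the source does not state. The names `accuracy` and `summarize` are illustrative only.

```python
from statistics import mean, stdev

# Per-subject accuracies (%) copied from the table above, subjects 1 to 12.
accuracy = {
    "Wrists": [83.3, 79.2, 70.8, 75.0, 70.8, 87.5, 62.5, 83.3, 75.0, 75.0, 75.0, 66.7],
    "Elbows": [83.3, 87.5, 75.0, 79.2, 91.7, 83.3, 75.0, 75.0, 79.2, 75.0, 83.3, 79.2],
    "Ankles": [75.0, 70.8, 79.2, 83.3, 75.0, 83.3, 70.8, 62.5, 75.0, 75.0, 79.2, 70.8],
    "Recommended": [87.5, 87.5, 83.3, 83.3, 91.7, 87.5, 79.2, 79.2, 83.3, 79.2, 83.3, 79.2],
}


def summarize(values):
    """Format mean and sample standard deviation (assumed n - 1 denominator)."""
    return f"{mean(values):.1f} ± {stdev(values):.2f}"


summary = {name: summarize(values) for name, values in accuracy.items()}

for name, text in summary.items():
    print(f"{name}: {text}")
```

Under that assumption the recomputed values agree with the reported row, with the recommended paradigm giving the highest mean and the smallest spread across subjects.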

| Subjects | Route 1 Joystick (s) | Route 1 Round 1 (s) | Route 1 Round 2 (s) | Route 1 Avg. (s) | Route 2 Joystick (s) | Route 2 Round 1 (s) | Route 2 Round 2 (s) | Route 2 Avg. (s) |
|---|---|---|---|---|---|---|---|---|
| 1 | 53 | 182 | 192 | 193 | 54 | 204 | 244 | 218 |
| 2 | 45 | 196 | 176 | 184 | 47 | 172 | 182 | 179 |
| 3 | 57 | 222 | 242 | 203 | 65 | 184 | 224 | 233 |
| 4 | 65 | 202 | 188 | 200 | 58 | 198 | 208 | 198 |
| 5 | 47 | 178 | 184 | 173 | 52 | 168 | 182 | 183 |
| 6 | 50 | 190 | 212 | 200 | 59 | 210 | 222 | 217 |
| 7 | 55 | 240 | 260 | 243 | 61 | 246 | 266 | 263 |
| 8 | 58 | 228 | 208 | 213 | 63 | 198 | 216 | 212 |
| 9 | 62 | 280 | 272 | 273 | 66 | 266 | 286 | 279 |
| 10 | 50 | 168 | 158 | 179 | 49 | 190 | 188 | 173 |
| 11 | 51 | 214 | 200 | 225 | 55 | 236 | 232 | 216 |
| 12 | 60 | 266 | 242 | 257 | 58 | 248 | 250 | 246 |
| Mean ± S.D. | 54.40 ± 6.16 | 213.83 ± 35.00 | 211.17 ± 35.46 | 211.92 ± 31.62 | 57.25 ± 6.06 | 210.00 ± 31.85 | 225.00 ± 32.87 | 218.08 ± 32.98 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Saichoo, T.; Boonbrahm, P.; Punsawad, Y. Investigating User Proficiency of Motor Imagery for EEG-Based BCI System to Control Simulated Wheelchair. Sensors 2022, 22, 9788. https://doi.org/10.3390/s22249788
