Virtual Reality Systems as an Orientation Aid for People Who Are Blind to Acquire New Spatial Information

Abstract

1. Introduction

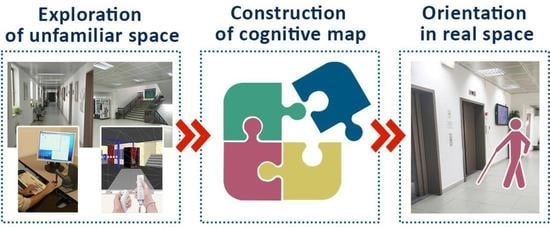

- How do people who are blind explore unfamiliar spaces using BlindAid or Virtual Cane, or in real space (RS)?

- What were the participants’ cognitive mapping characteristics after exploration using the BlindAid or Virtual Cane, or in RS?

- How did the control group and the two experimental groups (BlindAid and Virtual Cane) perform the RS orientation tasks?

2. Materials and Methods

2.1. Participants

2.2. Variables

2.3. Instrumentation

2.4. Data Analysis

2.5. Procedure

2.6. Research Limitation

3. Results

4. Discussion

4.1. The Impact of Multisensorial VE Systems on the Spatial Abilities of People Who Are Blind

4.2. The Impact of Unique Action Commands Embedded in Multisensorial VR Interface on the Spatial Abilities of People Who Are Blind

4.3. Implications for Researchers and Developers

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest


| Group | Age Mean (Range) | Gender: Female | Gender: Male | Age of Vision Loss: Congenitally | Age of Vision Loss: Adventitiously | O&M Aids: White Cane | O&M Aids: Guide Dog |
|---|---|---|---|---|---|---|---|
| BlindAid experimental group (n = 5) | 43 (28–59) | 2 | 3 | 5 | 0 | 4 | 1 |
| Virtual Cane experimental group (n = 5) | 30 (25–40) | 3 | 2 | 3 | 2 | 3 | 2 |
| Control group (n = 5) | 40 (27–56) | 1 | 4 | 2 | 3 | 3 | 2 |

| Group | n | Duration (min:s) | Look-Around Mode: Name | Look-Around Mode: Distance | Walk-Around Mode Spatial Strategy: Perimeter | Walk-Around Mode Spatial Strategy: Object-to-Object | Walk-Around Mode Spatial Strategy: Other | Pauses (min:s; %) | Second Hand |
|---|---|---|---|---|---|---|---|---|---|
| BlindAid experimental group | 1 | 31:00 | NA | NA | 100% | 0% | 0% | 0% | NA |
| | 2 | 24:30 | NA | NA | 99% | 0% | 0% | 0% | NA |
| | 3 | 4:56 | NA | NA | 98% | 0% | 1% | 0% | NA |
| | 4 | 28:02 | NA | NA | 90% | 0% | 0% | 02:31; 9% | NA |
| | 5 | 10:08 | NA | NA | 86% | 0% | 12% | 00:06; 1% | NA |
| | Mean | 19:43 | NA | NA | 95% | 0% | 3% | 00:24; 2% | NA |
| Virtual Cane experimental group | 1 | 29:24 | 37% | 33% | 2% | 6% | 0% | 06:46; 23% | NA |
| | 2 | 49:39 | 28% | 54% | 5% | 6% | 0% | 03:29; 7% | NA |
| | 3 | 38:32 | 41% | 33% | 0% | 3% | 0% | 08:29; 22% | NA |
| | 4 | 58:45 | 45% | 21% | 4% | 3% | 0% | 16:27; 28% | NA |
| | 5 | 32:18 | 50% | 28% | 6% | 8% | 0% | 02:16; 7% | NA |
| | Mean | 41:44 | 74% (Name + Distance) | | 3% | 5% | 0% | 07:06; 17% | NA |
| Control group | 1 | 3:44 | NA | NA | 87% | 0% | 3% | 00:22; 10% | 90% |
| | 2 | 11:27 | NA | NA | 62% | 2% | 29% | 00:48; 7% | 97% |
| | 3 | 2:01 | NA | NA | 81% | 0% | 6% | 00:16; 13% | 72% |
| | 4 | 1:26 | NA | NA | 100% | 0% | 0% | 0% | 7% |
| | 5 | 4:38 | NA | NA | 89% | 11% | 0% | 0% | 63% |
| | Mean | 4:39 | NA | NA | 84% | 3% | 8% | 00:17; 6% | 52% |

| Group | n | Duration (min:s) | Look-Around Mode: Name | Look-Around Mode: Distance | Walk-Around Mode Spatial Strategy: Perimeter | Walk-Around Mode Spatial Strategy: Object-to-Object | Walk-Around Mode Spatial Strategy: Other | Pauses (min:s; %) | Second Hand |
|---|---|---|---|---|---|---|---|---|---|
| BlindAid experimental group | 1 | 22:45 | NA | NA | 90% | 1% | 0% | 01:36; 7% | NA |
| | 2 | 73:04 | NA | NA | 99% | 0% | 0% | 0% | NA |
| | 3 | 13:16 | NA | NA | 100% | 0% | 0% | 0% | NA |
| | 4 | 55:34 | NA | NA | 92% | 0% | 3% | 01:07; 2% | NA |
| | 5 | 19:10 | NA | NA | 100% | 0% | 0% | 0% | NA |
| | Mean | 36:46 | NA | NA | 96% | 0% | 1% | 00:44; 2% | NA |
| Virtual Cane experimental group | 1 | 26:36 | 51% | 22% | 1% | 7% | 0% | 05:35; 21% | NA |
| | 2 | 63:11 | 26% | 49% | 3% | 13% | 0% | 03:47; 6% | NA |
| | 3 | 44:43 | 46% | 32% | 2% | 2% | 0% | 07:09; 16% | NA |
| | 4 | 86:53 | 43% | 23% | 5% | 6% | 0% | 19:07; 22% | NA |
| | 5 | 45:13 | 51% | 20% | 9% | 11% | 0% | 03:10; 7% | NA |
| | Mean | 53:19 | 73% (Name + Distance) | | 4% | 8% | 0% | 08:00; 15% | NA |
| Control group | 1 | 7:18 | NA | NA | 78% | 6% | 15% | 0% | 76% |
| | 2 | 19:23 | NA | NA | 82% | 0% | 18% | 0% | 100% |
| | 3 | 10:05 | NA | NA | 84% | 3% | 9% | 0% | 4% |
| | 4 | 3:01 | NA | NA | 100% | 0% | 0% | 0% | 4% |
| | 5 | 6:24 | NA | NA | 68% | 0% | 23% | 00:35; 9% | 60% |
| | Mean | 9:14 | NA | NA | 82% | 2% | 13% | 00:05; 1% | 43% |
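
In both exploration tables, the Virtual Cane group's Mean row reports a single look-around percentage rather than separate Name and Distance values. The sketch below checks one plausible reading: that this figure is the mean of each participant's Name plus Distance share. The function name `combined_look_around_mean` is hypothetical, and the interpretation is inferred from the numbers rather than stated in the tables.

```python
# Virtual Cane look-around percentages as (Name, Distance) pairs,
# copied from the per-participant rows of the two exploration tables.
first_table = [(37, 33), (28, 54), (41, 33), (45, 21), (50, 28)]
second_table = [(51, 22), (26, 49), (46, 32), (43, 23), (51, 20)]


def combined_look_around_mean(rows):
    """Mean of per-participant (Name + Distance) percentages.

    Assumption: the single Mean-row value combines both look-around columns.
    """
    totals = [name + distance for name, distance in rows]
    return sum(totals) / len(totals)


print(combined_look_around_mean(first_table))   # 74.0
print(combined_look_around_mean(second_table))  # 72.6
```

Rounded to whole percentages, these values agree with the 74% and 73% shown in the two Mean rows.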

| Group | n | Space Components | Spatial Strategy | Spatial Representation | Chronology |
|---|---|---|---|---|---|
| BlindAid experimental group | 1 | 65% | Perimeter | Route model | Structure |
| | 2 | 87% | Perimeter | Route model | Structure |
| | 3 | 26% | List | List | Content |
| | 4 | 78% | Perimeter | Route model | Structure |
| | 5 | 37% | Area | Route model | Structure |
| | Mean | 59% | | 4 Route model; 1 List | 4 Structure; 1 Content |
| Virtual Cane experimental group | 1 | 66% | List | Map model | Structure |
| | 2 | 79% | Starting point | Map model | Structure |
| | 3 | 68% | Perimeter | Route model | Structure |
| | 4 | 72% | Perimeter & object to object | Map model | Structure |
| | 5 | 62% | Starting point & object to object | Map model | Structure |
| | Mean | 69% | | 4 Map model; 1 Route model | 5 Structure |
| Control group | 1 | 36% | Perimeter | Route model | Structure |
| | 2 | 54% | Area | Map model | Structure |
| | 3 | 27% | Starting point | Route model | Structure |
| | 4 | 15% | List | List | Structure |
| | 5 | 70% | Starting point | Route model | Structure |
| | Mean | 40% | | 3 Route model; 1 Map model; 1 List | 5 Structure |
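
The Space Components means in the table above can be reproduced from the per-participant rows. The following is a minimal sketch, assuming a plain arithmetic mean rounded to the nearest whole percent; the rounding rule and the helper name `group_mean` are assumptions, not something the table states.

```python
# Space Components percentages per participant, copied from the table above.
space_components = {
    "BlindAid": [65, 87, 26, 78, 37],
    "Virtual Cane": [66, 79, 68, 72, 62],
    "Control": [36, 54, 27, 15, 70],
}


def group_mean(values):
    """Arithmetic mean of a group's percentages."""
    return sum(values) / len(values)


for group, values in space_components.items():
    mean = group_mean(values)
    # Show the unrounded mean next to the whole-percent value.
    print(f"{group}: {mean:.1f}% (rounded: {round(mean)}%)")
```

Under these assumptions the rounded results are 59%, 69%, and 40%, matching the three Mean rows.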

| Group | n | Space Components | Spatial Strategy | Spatial Representation | Chronology |
|---|---|---|---|---|---|
| BlindAid experimental group | 1 | 42% | Perimeter & list | Route model | Structure |
| | 2 | 63% | Perimeter | Route model | Structure |
| | 3 | 50% | Perimeter | Route model | Structure |
| | 4 | 53% | Perimeter & area | Route model | Content |
| | 5 | 28% | Area | List | Structure |
| | Mean | 47% | | 4 Route model; 1 List | 4 Structure; 1 Content |
| Virtual Cane experimental group | 1 | 20% | List | List | Structure |
| | 2 | 46% | Area | Route model | Structure |
| | 3 | 41% | Perimeter | Route model | Structure |
| | 4 | 53% | Perimeter & object to object | Map model | Structure |
| | 5 | 59% | Area | Map model | Structure |
| | Mean | 44% | | 2 Map model; 2 Route model; 1 List | 5 Structure |
| Control group | 1 | 30% | Starting point | Map model | Structure |
| | 2 | 50% | Area | Map model | Structure |
| | 3 | 30% | Object to object | Route model | Structure |
| | 4 | 14% | Perimeter & starting point | Route model | Content |
| | 5 | 27% | Perimeter & starting point | Route model | Structure |
| | Mean | 30% | | 3 Route model; 2 Map model | 4 Structure; 1 Content |

| Group | n | Object-Oriented: RTC (s) | Object-Oriented: Success | Object-Oriented: Direct Path | Perspective-Change: RTC (s) | Perspective-Change: Success | Perspective-Change: Direct Path | Point-to-the-Location |
|---|---|---|---|---|---|---|---|---|
| BlindAid experimental group | 1 | 9 | 67% | 67% | 37 | 100% | 100% | 83% |
| | 2 | 18 | 100% | 100% | 53 | 67% | 67% | 33% |
| | 3 | 26 | 100% | 100% | 25 | 100% | 100% | 100% |
| | 4 | 194 | 33% | 0% | 92 | 67% | 67% | 50% |
| | 5 | NA | 67% | 33% | NA | 100% | 100% | 67% |
| | Mean | 62 | 73% | 60% | 52 | 87% | 87% | 67% |
| Virtual Cane experimental group | 1 | 57 | 100% | 100% | 192 | 100% | 67% | 83% |
| | 2 | 24 | 67% | 67% | 129 | 33% | 0% | 67% |
| | 3 | 34 | 67% | 67% | 68 | 67% | 67% | 83% |
| | 4 | 39 | 67% | 67% | 74 | 67% | 33% | 67% |
| | 5 | 68 | 67% | 33% | 93 | 67% | 67% | 100% |
| | Mean | 44 | 74% | 67% | 111 | 67% | 47% | 80% |
| Control group | 1 | 7 | 33% | 33% | 27 | 33% | 33% | 67% |
| | 2 | 10 | 100% | 100% | 27 | 100% | 100% | 100% |
| | 3 | 17 | 100% | 100% | 9 | 100% | 100% | 100% |
| | 4 | 9 | 100% | 100% | 21 | 67% | 67% | 83% |
| | 5 | 10 | 33% | 33% | 22 | 67% | 67% | 17% |
| | Mean | 11 | 73% | 73% | 21 | 73% | 73% | 73% |
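
In the table above, BlindAid participant 5 has NA for both RTC (s) columns, yet the group means are still reported. The sketch below shows that the BlindAid RTC means are consistent with averaging only the available values. The helper `mean_ignoring_na` is hypothetical, and skipping NA entries is an inference from the numbers, not a documented analysis step.

```python
from typing import Optional, Sequence


def mean_ignoring_na(values: Sequence[Optional[float]]) -> float:
    """Arithmetic mean over the entries that are not None (NA)."""
    present = [v for v in values if v is not None]
    return sum(present) / len(present)


# BlindAid RTC (s) values from the table above; None stands for NA.
object_oriented_rtc = [9, 18, 26, 194, None]
perspective_change_rtc = [37, 53, 25, 92, None]

print(mean_ignoring_na(object_oriented_rtc))     # 61.75
print(mean_ignoring_na(perspective_change_rtc))  # 51.75
```

Rounded to whole seconds, these give 62 and 52, the values in the BlindAid Mean row.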

| Group | n | Object-Oriented: RTC (s) | Object-Oriented: Success | Object-Oriented: Direct Path | Perspective-Change: RTC (s) | Perspective-Change: Success | Perspective-Change: Direct Path | Point-to-the-Location |
|---|---|---|---|---|---|---|---|---|
| BlindAid experimental group | 1 | NA | 0% | 0% | NA | 50% | 50% | 67% |
| | 2 | NA | 100% | 100% | NA | 0% | 0% | 33% |
| | 3 | 122 | 50% | 50% | 25 | 100% | 100% | 100% |
| | 4 | 43 | 50% | 50% | 0 | 0% | 0% | 67% |
| | 5 | 237 | 100% | 100% | 185 | 100% | 50% | 83% |
| | Mean | 134 | 60% | 60% | 105 | 50% | 40% | 70% |
| Virtual Cane experimental group | 1 | 0 | 0% | 0% | 333 | 100% | 100% | 33% |
| | 2 | 0 | 0% | 0% | 132 | 50% | 0% | 17% |
| | 3 | 194 | 50% | 0% | 117 | 50% | 50% | 50% |
| | 4 | 185 | 100% | 50% | 320 | 100% | 50% | 0% |
| | 5 | 185 | 50% | 0% | 0 | 0% | 0% | 50% |
| | Mean | 188 | 40% | 10% | 180 | 60% | 40% | 38% |
| Control group | 1 | 67 | 50% | 0% | 72 | 50% | 50% | 33% |
| | 2 | 44 | 100% | 100% | 69 | 100% | 50% | 100% |
| | 3 | 19 | 50% | 50% | 42 | 100% | 100% | 67% |
| | 4 | 175 | 100% | 50% | 60 | 100% | 100% | 50% |
| | 5 | 0 | 0% | 0% | 95 | 50% | 0% | 17% |
| | Mean | 76 | 60% | 40% | 68 | 80% | 60% | 53% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lahav, O. Virtual Reality Systems as an Orientation Aid for People Who Are Blind to Acquire New Spatial Information. Sensors 2022, 22, 1307. https://doi.org/10.3390/s22041307
