3. Experimental Methods

3.1. Ethics

To evaluate the SSD described above, a quasi-experiment was designed and approved by the ethics committee of the faculty of medicine at the university hospital of the Eberhard-Karls-University Tübingen, in accordance with the 2013 version of the Declaration of Helsinki. All participants were informed about the study objectives, design and associated risks, and signed an informed consent form that included consent to the publication of pseudonymised case details. The individuals shown in photographs in this paper explicitly consented to the publication of these photographs by signing an additional informed consent form.

3.2. Hypotheses

The study included training and testing with the white long cane. This was not done with the intention of pitting the two aids against each other or of eventually replacing the cane with the SSD. Rather, the aim was to be able to discuss the SSD comparatively with respect to a controlled set of navigation tasks. In fact, the glove is designed so that it can be worn and used in combination with the cane held in the other hand. Testing not only both aids but also their combination would, however, introduce new unknown interactions and confounding factors. The main objective of this study thus reads: “The impact of the studied SSD on the performance of the population in both navigation and obstacle detection is comparable to that of the white long cane.” Deriving a testable hypothesis from this is complicated by one problem: blind subjects usually have at least basic experience with the white cane or have been using it on a daily basis for decades. A newly learned tool such as the SSD can therefore hardly be compared experimentally with an internalised device like the cane. A second group of naive sighted subjects was therefore included to test two separate sub-hypotheses:

Hypothesis 1. Performance.

Sub-Hypothesis H1a. Non-Inferiority of SSD Performance: after equivalent structured training with both aids, sighted subjects (no visual impairment but blindfolded, no experience with the cane) achieve a non-inferior performance in task completion time (25 percentage point margin) with the SSD compared to the cane when navigating an obstacle course.

Sub-Hypothesis H1b. Equivalence of Learning Progress across Groups: at the same time, blind subjects who have received identical training (here only with the SSD) show learning progress with the SSD equivalent to that of the sighted group (25 percentage point margin).

If both sub-hypotheses were confirmed, one could therefore, in simple terms, draw inferences about the effect of the SSD on the navigation of blind people compared to the cane, had both aids been learned in a similar way. In addition, two secondary aspects were to be investigated:

Hypothesis 2. Usability & Acceptance. The device is easy to learn, simple to use, achieves a high level of user enjoyment and satisfaction and thus strong acceptance rates.

Hypothesis 3. Distal Attribution. Users report unconscious processing of stimuli and locate the origin of these haptic stimuli distally in space, at the actual location of the observed object.

3.3. Study Population

A total of 14 participants were recruited mainly through calls at the university and through local associations for blind and visually impaired people in Cologne, Germany.

Appendix F contains a summary table with the subject data presented below. The complete data set is also available in the study data in Supplementary Materials S3.

Six of the subjects were normally sighted and had a visual acuity of 0.3 or higher; eight were blind (both congenitally and late blind), i.e., they had a visual acuity of less than 0.05 and/or a visual field of less than 10° (category 3–5 according to the ICD-10 H54.9 definition) in the better eye. Participants’ self-reports about their visual acuity were confirmed with a finger counting test (1 m distance) and, if passed, with the screen-based Landolt visual acuity test “FrACT” [62] (3 m distance) using a tactile input device (Figure 2A).

Two subjects were excluded from the evaluation despite having completed the study: on average, subject f (cane = 0.75, SSD = 2.393) and subject z (cane = 1.214, SSD = 1.500) caused a markedly higher number of contacts (roughly two- to four-fold) with both aids than the average of the remaining blind subjects (cane = 0.375, SSD = 0.643). For the former, this can be explained by a consistently high level of nervousness when walking through the course: with both aids, the subject changed course very erratically after a contact, causing further contacts or even collisions right away. The performance of the latter worsened considerably towards the end of the study, again with both aids, to the point that the subject was no longer able to fulfil the task of avoiding obstacles at all, citing “bad form on the day” and fatigue as reasons. In order not to distort the available data with these apparent deviations, the two subjects were excluded from all further analyses.
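The size of the deviation can be checked directly from the reported averages. The short Python sketch below is only an illustration (the original analysis was done in R); it divides each excluded subject's average number of contacts by the corresponding average of the remaining blind subjects, using only the values given in the text.

```python
# Average numbers of contacts reported in the text (cane, SSD).
remaining_blind = {"cane": 0.375, "SSD": 0.643}
excluded = {
    "f": {"cane": 0.75, "SSD": 2.393},
    "z": {"cane": 1.214, "SSD": 1.500},
}

# Fold increase of each excluded subject over the remaining blind subjects.
fold = {
    (subject, aid): round(values[aid] / remaining_blind[aid], 2)
    for subject, values in excluded.items()
    for aid in ("cane", "SSD")
}

for (subject, aid), ratio in fold.items():
    print(f"subject {subject}, {aid}: {ratio:.2f}x")
```

The ratios range from 2.0 (subject f, cane) to about 3.7 (subject f, SSD).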

The age of the remaining participants (six female, five male, one not specified) averaged 45 ± 16.65 years and ranged from 25 to 72 years. All were healthy—apart from visual impairments—and stated that they were able to assess and perform the physical effort of the task; none had prior experience with the Unfolding Space Glove or other visual SSDs.

All participants in the blind group had been using the white cane on a daily basis for at least five years and/or had completed Orientation and Mobility (O&M) training. Some occasionally use technical aids such as GPS-based navigation, and one even had prior experience with the feelspace belt (for navigation purposes only, not for augmentation with the Earth’s magnetic field). Two reported using Blind Square from time to time and one used a monocular.

None of the sighted group had prior experience with the white cane.

3.4. Experimental Setup

The total duration of the study per subject differed between the blind and the sighted group, as the sighted subjects had to complete the training with both aids and the blind subjects with the SSD only (since experience in using the cane was an inclusion criterion). The total length was thus about 4.5 h in the blind group and 5.5 h in the sighted group.

In addition to paperwork, introduction and breaks, participants of the sighted group received a 10-min introductory tutorial on each aid, had 45 min of training with each aid, spent about 60 min using each aid during the trials (varying slightly with the time required for completion) and thus reached a total wearing time of about 2 h with each aid. In the blind group, the wearing time of the SSD was identical, while the wearing time of the cane was lower due to the absence of tutorial and training sessions with it.
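As a worked check, the approximate wearing time per aid in the sighted group follows from the session durations, assuming the 45 min of training correspond to the three practice sessions of at most 15 min each described in Section 3.5.3:

$$
t_{\text{wear}} \approx \underbrace{10}_{\text{tutorial}} + \underbrace{3 \times 15}_{\text{practice}} + \underbrace{60}_{\text{trials}} = 115\ \text{min} \approx 2\ \text{h}
$$

Omitting the cane tutorial and cane practice (10 + 45 = 55 min) is also consistent with the roughly 1 h difference in total study length between the two groups.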

The study was divided into three study sessions, which took place at the Köln International School of Design (TH Köln, Cologne, Germany) over the span of six weeks. In the middle of a 130 m² room, a 4 m wide and 7 m long obstacle course was built (Figure 2B), bordered by 1.80 m high cardboard side walls and equipped with eight cardboard obstacles (35 × 31 × 171 cm) distributed on a 50 cm grid (Figure 2C) according to the predefined course layouts.

3.5. Procedure

Before the first test run (baseline), the participants received a 10-min Tutorial in which they were introduced to the handling of the devices. Directly afterwards, they had to complete the first Trial Session (TS). This was followed by a total of three Practice Sessions (PS), each followed by another TS, amounting to one Tutorial, four TS and three PS in total. The study concluded with a questionnaire at the end of the third study session, after completion of the fourth and final TS. An exemplary timetable of the study procedure can be found in the Supplementary Materials S4.

3.5.1. Tutorial

In the 10-min Tutorial, participants were introduced to the functional design of the device, its components and its basic usage such as body posture and movements while interacting with the device. At the end, the participants had the opportunity to experience one of the obstacles with the aid and to walk through a gap between two of these obstacles.

3.5.2. Trial Sessions

Each TS consisted of seven consecutive runs in the cane condition and seven runs in the SSD condition, with a coin flip in each TS deciding which condition to start with. The task, given verbally after the description of the obstacle course, read:

“You are one meter from the start line. You are not centered, but start from an unknown position. Your task is to cross the finish line seven meters behind the start line by using the aid. There are eight obstacles on the way which you should not touch. The time required for the run is measured and your contacts with the objects are counted. Contacts caused by the hand controlling the aid are not counted. Time and contacts are equally weighted—do not solely focus on one. You are welcome to think out loud and comment on your decisions, but you won’t get assistance with finishing the task.”

Contacts with the cane were not included in the statistics, as an essential aspect of its operation is the deliberate induction of contact with obstacles. In addition, for both aids, contacts caused by the hand guiding the aid were likewise excluded from the statistics, in order to encourage the subjects to interact freely with the aids. A clicking sound source was positioned at the end of the course (centred and 2 m behind the finish line) to roughly indicate the direction. There was no help or other interference while participants were completing the courses. Only when they accidentally turned more than 90 degrees away from the finish line were they reminded to pay attention to the origin of the clicking sound. Both task completion time and obstacle contacts (including a rating as mild or severe contacts) were entered into a macro-assisted Excel spreadsheet on a hand-held tablet by the experimenter, who followed the subjects at a non-distracting distance. The data of all runs can be found in the study data in Supplementary Materials S3.

A total of 14 different course layouts were used (Figure 3), seven of which were mirror images of the other seven about the longitudinal axis. The layout order within one aid condition (SSD/cane) across all TS was the same for all participants and was predetermined by drawing all 14 possible variations for each TS without replacement. This means that all participants went through each of the 14 layouts four times, but in a different order in each TS and with varying aids, so that a memory effect can be excluded.
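The two ideas in this procedure, mirroring a layout about the walking axis and drawing a fresh order of all layouts for every TS without replacement, can be sketched in a few lines of Python. This is a hypothetical illustration, not the study's tooling: the grid column count, the (column, row) coordinates, the layout IDs and the seed are all assumptions, and how each drawn order was split between the two aid conditions is not modelled.

```python
import random
from collections import Counter

N_TS = 4         # four trial sessions
GRID_COLS = 9    # hypothetical: a 4 m wide course on a 50 cm grid gives 9 columns (0..8)


def mirror(layout):
    """Mirror obstacle positions (col, row) about the course's longitudinal axis."""
    return [(GRID_COLS - 1 - col, row) for col, row in layout]


def draw_orders(layout_ids, n_sessions, seed=0):
    """Draw every layout exactly once per TS, in random order (without replacement).

    Using one fixed seed mimics drawing the orders once in advance and
    reusing them for all participants."""
    rng = random.Random(seed)
    orders = []
    for _ in range(n_sessions):
        order = list(layout_ids)
        rng.shuffle(order)  # a random permutation is a draw without replacement
        orders.append(order)
    return orders


# Hypothetical IDs: 1-7 are the base layouts, 8-14 their mirror images.
layout_ids = list(range(1, 15))
orders = draw_orders(layout_ids, N_TS)

# Each layout is used once per TS, i.e. four times over the whole study.
counts = Counter(lid for order in orders for lid in order)
print(all(n == N_TS for n in counts.values()))  # True
```

Shuffling a full list per session, rather than sampling layouts independently, is what guarantees that no layout repeats within a TS.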

The layouts were created in advance using an algorithm that distributed the obstacles over the 50 cm grid. A set of 20 of these layouts was then evaluated in self-tests and with pilot subjects, leaving the final seven layouts of equal difficulty (Figure 3).

The study design and the experimental setup were inspired by a proposal for a standardised obstacle course for the assessment of “visual function in ultra low vision and artificial vision” [63], but were adapted due to spatial constraints and selected study objectives (e.g., testing with two groups and a limited task scope only). Two further studies suggesting a very similar setup for testing sensory substitution devices [64,65] were not considered for the choice of this study design.

3.5.3. Practice Session

The practice sessions were limited to 15 min and followed a fixed sequence of topics and interaction patterns to be learned with the two aids (Figure 4A–C). In the training sessions, obstacles were arranged in varying patterns by the experimenter. Subjects received support from the experimenter as needed and were not only allowed to touch objects in their surroundings but even encouraged to do so, in order to compare the stimuli perceived through the aid with reality.

In the case of the SSD training, after initially learning the body posture and movements, the main objective was to understand exactly this relationship between stimuli and real objects. For this purpose, the subjects went, for example, through increasingly narrow passages with the aim of maintaining a safe distance from the obstacles on the left and right. Later, the tasks increasingly focused on developing strategies for finding paths through course layouts similar to the trial layouts.

While the training with the white cane (in the sighted group) took place in comparable spatial settings, here the subjects learned exercises from the cane programme of an O&M training (posture, swing of the cane, gait, etc.). The experimenter himself received basic cane training from an O&M trainer in order to be able to deliver it in the study. The sighted subjects were therefore not trained by an experienced trainer, but all by the same person. At the same time, the SSD was not trained “professionally” either, as there are no standardised training methods specifically for the device yet.

3.5.4. Qualitative Approaches

In addition to the quantitative measurements, the subjects were asked to think aloud, to comment on their actions and to describe why they made certain decisions, both during training and during breaks between trials. These statements were written down by hand by the experimenter.

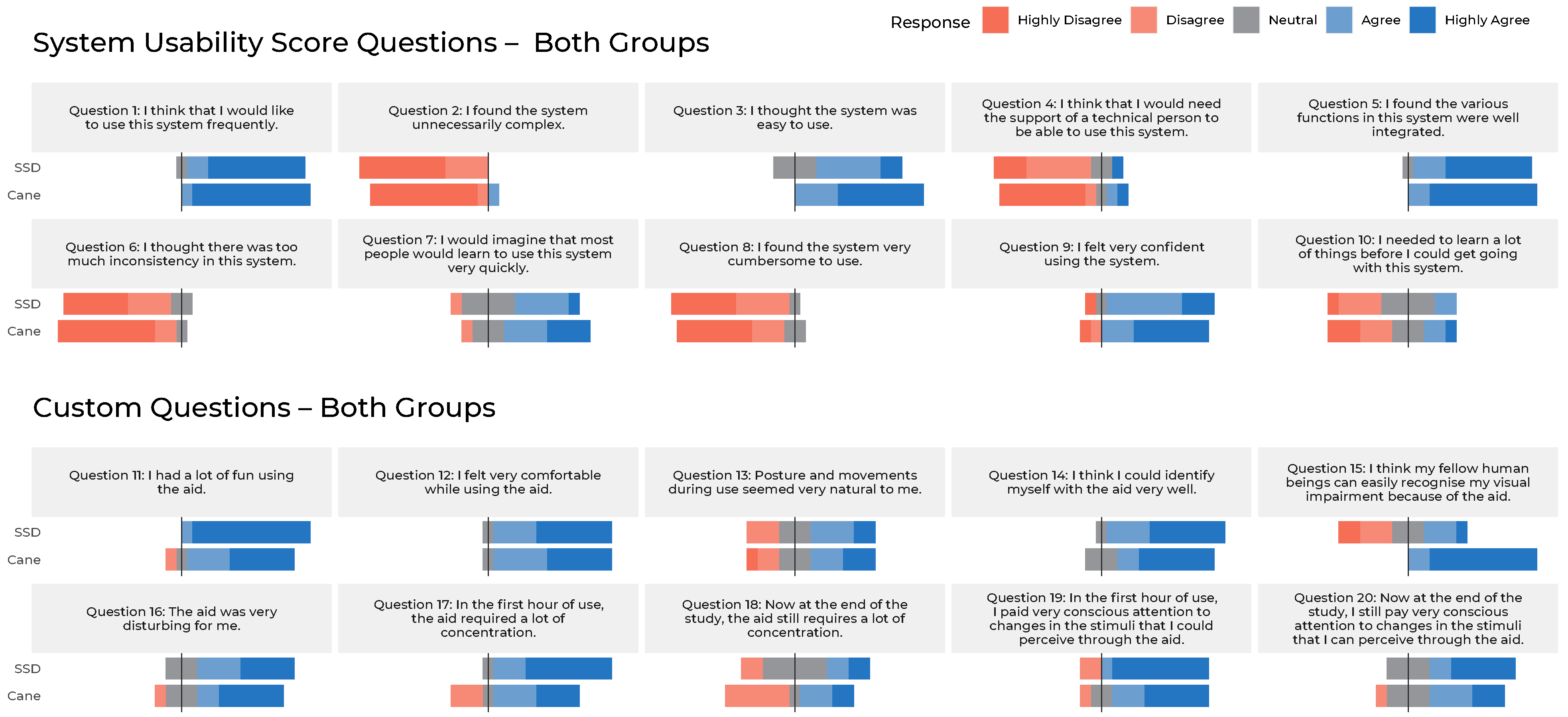

After completion of the last trial, the subjects were asked to fill out the final questionnaire. It consisted of three parts: firstly, the 10 statements of the System Usability Scale (SUS) [66] on a 0–4 Likert agreement scale; secondly, 10 further custom statements on handling and usability on the same 0–4 Likert scale; and finally, seven questions on different scales and in free text about perception and suggestions for improvement, including the possibility to leave a comment on the study. The questions of parts one and two were always asked twice: once for the SSD and once for the cane. The subjects could complete this part of the questionnaire either by hand or with the help of an audio survey with haptic keys on a computer. This allowed both sighted and blind subjects to answer the questions without being influenced by the presence of the investigator. The third part, on the other hand, was read out to the blind subjects by the investigator, who noted down the answers.
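The SUS condenses its ten statements into a single score between 0 and 100. A minimal sketch of the standard scoring rule, adapted to the 0–4 answer coding described above, is shown below. It assumes 0 = strongly disagree and 4 = strongly agree, and the standard SUS item order in which odd-numbered statements are worded positively and even-numbered ones negatively; the function name is hypothetical.

```python
def sus_score(responses):
    """Compute a System Usability Scale score (0-100) from ten answers on a 0-4 scale.

    Assumes 0 = strongly disagree ... 4 = strongly agree and the standard SUS
    item order (odd items positively, even items negatively worded)."""
    if len(responses) != 10 or any(not 0 <= r <= 4 for r in responses):
        raise ValueError("expected ten responses in the range 0-4")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items contribute their raw value, even items are reverse-scored.
        total += r if item % 2 == 1 else 4 - r
    # Ten items with at most 4 points each give 0-40; scale to 0-100.
    return total * 2.5


print(sus_score([2] * 10))  # 50.0: an all-neutral answer pattern
```

With this rule, a respondent who answers every statement neutrally ends up exactly in the middle of the scale.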

Due to the small number of participants, the results of the questionnaire are not suitable for drawing statistically significant conclusions, but should rather serve the qualitative comparison of the SSD with the cane and support further development of this or similar SSDs. The study data in Supplementary Materials S3 include a list of all questionnaire items and the Likert scale answers to items 1–20. Relevant statements made in the free-text questions are included in the Results section and in Appendix H.

3.6. Analysis and Statistical Methods

A total of 784 trials (14 subjects × 4 TS × 14 runs) in 14 different obstacle course layouts were performed over all sessions. The dependent variables were task completion time (time for short) and number of contacts (contacts for short).

Fixed effects were:

group: between-subject, binary (blind/sighted)

aid: within-subject, binary (SSD/cane)

TS: within-subject, numerical and discrete (the four levels of training)

Random effects were specified for layout and for the subjects themselves, the latter nested within their corresponding group.

The task completion time data were analysed by means of parametric statistics using a linear mixed model (LMM). To check whether the chosen model meets the assumptions of parametric tests, the data were analysed following the recommendations of Zuur et al. [67]. To meet those assumptions, the time variable was log-transformed beforehand (referred to in the following as log time). With the assumptions met, all variables and their interactions were then tested for their significance to the model. See Appendix G for details on the model, its fitting procedure, the assumption and interaction tests and corresponding plots.
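As a generic sketch only (the model actually fitted, including which interactions were retained, is documented in Appendix G), a random-intercept LMM with the fixed and random effects listed above and all of their interactions could be written as follows. In lme4 syntax, this would correspond roughly to log(time) ~ group * aid * TS + (1 | group:subject) + (1 | layout). Here s indexes subjects (each subject belongs to exactly one group, which makes the nesting implicit), l layouts and n runs; the coding G_s = 1 for blind subjects, A = 1 for the SSD and T ∈ {1, 2, 3, 4} for the TS is a hypothetical choice for illustration.

$$
\log t_{sln} = \beta_0 + \beta_1 G_s + \beta_2 A_{sn} + \beta_3 T_{sn} + \beta_4 G_s A_{sn} + \beta_5 G_s T_{sn} + \beta_6 A_{sn} T_{sn} + \beta_7 G_s A_{sn} T_{sn} + u_s + w_l + \varepsilon_{sln}
$$

$$
u_s \sim \mathcal{N}(0, \sigma_u^2), \qquad w_l \sim \mathcal{N}(0, \sigma_w^2), \qquad \varepsilon_{sln} \sim \mathcal{N}(0, \sigma^2)
$$

Because the response is on the log scale, contrasts back-transform to time ratios. With this coding, for example, the SSD-to-cane time ratio of the sighted group in TS t is exp(β2 + β6 t).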

Most statistical methods only test for the presence of differences between two treatments and not for their degree of similarity. To test the sub-hypotheses of H1, a non-inferiority test (H1a) and an equivalence test (H1b) were therefore carried out. These check whether the least squares (LS) mean of a selected contrast and its confidence interval (CI) stay within a given margin (here 25 percentage points in both sub-hypotheses) in the lower and/or the upper direction. To confirm equivalence, the tests in both directions must be significant; for non-inferiority, only the “worse” side (in this case the slower side) has to be significant, since it would not falsify the sub-hypothesis if the SSD were considerably faster [68].
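The logic of both tests can be illustrated with a small dependency-free Python sketch operating on a contrast of log times, log(time_SSD / time_cane). It is not the emmeans procedure used in the study. Two assumptions are built in: the 25 percentage point margin is expressed as a time ratio of 1.25, i.e. ±log(1.25) on the log scale, and a normal approximation replaces the t distribution that emmeans would use with model-based degrees of freedom. The function names and the standard error in the example are hypothetical.

```python
import math
from statistics import NormalDist

Z = NormalDist()             # standard normal (approximation to the t distribution)
MARGIN = math.log(1.25)      # assumed: 25% margin expressed as a time ratio of 1.25


def noninferiority_p(log_ratio, se, margin=MARGIN):
    """One-sided p-value for H0: log(time_SSD / time_cane) >= margin.

    Only the "slower" side is tested: a much faster SSD would not
    contradict non-inferiority."""
    return Z.cdf((log_ratio - margin) / se)


def equivalence_p(log_ratio, se, margin=MARGIN):
    """Two one-sided tests (TOST): equivalence needs both sides to be significant,
    so the larger of the two one-sided p-values is reported."""
    p_lower = 1.0 - Z.cdf((log_ratio + margin) / se)  # H0: log_ratio <= -margin
    p_upper = Z.cdf((log_ratio - margin) / se)        # H0: log_ratio >= +margin
    return max(p_lower, p_upper)


# Hypothetical contrast: an SSD that is 54% slower, with an assumed standard error.
log_ratio = math.log(1.54)
print(f"ratio = {math.exp(log_ratio):.2f}, "
      f"non-inferiority p = {noninferiority_p(log_ratio, se=0.08):.3f}")
```

For a time ratio of 1.54, the non-inferiority p-value is close to 1, because the estimate itself already lies beyond the 1.25 margin. A contrast near zero with a small standard error would pass both tests.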

No statistical tests were performed on the contact data. Since these data are zero-inflated and Poisson-distributed, count-data models such as a generalised linear mixed model would be required, which would have low statistical power given the sample size. Nevertheless, descriptive plots of these data are included in the next section alongside the analysis of the log time statistics.
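A quick descriptive way to see why such data are called zero-inflated is to compare the observed share of zero counts with the share a Poisson distribution of the same mean would predict, namely exp(−λ). The Python sketch below does this for a made-up list of contact counts. It does not use the study data and is a rough descriptive check, not a formal test; the function name is hypothetical.

```python
import math


def zero_share_vs_poisson(counts):
    """Return (observed share of zeros, share expected under a Poisson model).

    A Poisson distribution with mean lam puts probability exp(-lam) on zero;
    an observed share well above that hints at zero inflation."""
    lam = sum(counts) / len(counts)
    observed = sum(1 for c in counts if c == 0) / len(counts)
    return observed, math.exp(-lam)


# Hypothetical contact counts per run (not the study data).
contacts = [0] * 14 + [1, 1, 2, 3, 4, 5]
observed, expected = zero_share_vs_poisson(contacts)
print(f"zeros observed: {observed:.2f}, expected under Poisson: {expected:.2f}")
```

Here 70% of the runs have no contact, whereas a Poisson distribution with the same mean (0.8) would predict only about 45%.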

All analyses were carried out in the statistical computing environment R using the RStudio interface. The lme4 package was used to fit the LMMs. The emmeans package (including its emtrends function) was used to calculate LS means, their CIs and the non-inferiority/equivalence tests. In this paper, averages are reported as arithmetic means with the corresponding standard deviation. For all statistical tests, an alpha level of 0.05 was chosen.

5. Discussion

The results presented above demonstrate not only that 3D images can be perceived and processed by means of vibrotactile interfaces for the purpose of navigation, but also the feasibility, learnability and usefulness of the novel Unfolding Space Glove, a haptic spatio-visual sensory substitution system.

Before discussing the results, it has to be made explicitly clear that the study design and the experimental set-up do not yet allow generalisations to be made about real-life navigational tasks for blind people. In order to define the objective of the study precisely, many typical, everyday hazards and problems were deliberately excluded. These include objects close to the ground (thresholds, tripping hazards and steps) and the recognition of approaching staircases. Furthermore, auditory feedback from the cane, which allows conclusions to be drawn about the material and condition of the objects in question, was omitted. In addition, there is the risk of a technical failure or error, the limit of a single battery charge and other smaller everyday drawbacks (waterproofness, robustness, etc.) from which the prototype currently still suffers. Of course, many of the points listed here could be solved technically and integrated into the SSD at a later stage. However, they would require development time, would have to be evaluated separately and therefore cannot simply be taken for granted in the present state.

With that being said, it is possible to draw a number of conclusions from the data presented. First of all, some technical aspects: the prototype withstood the entire course of the study without technical problems and met the requirements placed on it, so that the sensory experience itself could be assessed as independently of the device as possible. These include, for example, intuitive operation of the available functions, sufficient wearing comfort, easy and quick donning and doffing, sufficient battery life and good heat management.

The experimental design also deserves mention: components such as data collection via tablet, the labelled grid system for placing the obstacles plus corresponding set-up index cards, and the interface for real-time monitoring of the prototype enabled the sessions to be carried out smoothly with only one experimenter. An assistant helped to set up and dismantle the room, provided additional support (e.g., by reconfiguring the courses) and documented the study in photos and videos, but neither had to be, nor was, present at every session. Observations, ratings and participant communication were carried out exclusively by the experimenter.

Turning now to the sensory experience under study, it can be deduced that 3D information about the environment is conveyed very directly and is easy to learn. Not only were the subjects able to successfully complete all 392 trials with the SSD, but they also showed good results as early as the first session, and thus after only a few minutes of wearing the device. To the best of our knowledge, this contrasts with many other SSDs in the literature, which require several hours of training before the new sensory information can be used meaningfully (in return usually offering a higher information density).

Nevertheless, the cane outperforms the SSD in time and contacts, in both groups, in the first TS and at every other stage of the study (also see Figure 5 and Figure 6). Apart from the measurements, the fact that the cane seems to be even easier to access than the glove is also shown in the questionnaire results of the sighted subjects, for whom both aids were new: while many answers for the SSD and the cane differed only slightly on average (Δ ≤ 0.5), the deviations are greatest in questions about learning progress. Compared to the SSD, sighted subjects found the cane easier to use (Q3, Δ = 1.0), agreed more strongly that “most people would learn to use this system” quickly (Q7, Δ = 0.8) and stated that they had to learn fewer things before they could use the system (Q10, Δ = 0.7).

5.1. Hypothesis 1 and Further Learning Progress

At the end of the study and after about 2 h of wearing time, the test of H1a shows that the SSD is still about 54% slower than the cane. Even though this difference in walking speed could be acceptable if (and only if) other factors gave the SSD an advantage, H1a had to be rejected: the deviation exceeds the predefined 25% tolerance range and thus can no longer be understood as a “non-inferior performance”. H1b, however, can be accepted, indicating that, given an “equivalent learning progress” between the groups, these results would also apply to blind people who have not yet learned either device.

Left unanswered, and holding potential for further research, is the question of what the further progression of learning would look like. Several aspects indicate that the cane (in comparison to the SSD) had already reached a certain saturation in the given task spectrum by the end of the study: looking at the performance of B&C, one can roughly estimate the time and average contacts that blind people need to complete the course with their well-trained aid. At the same time, the measurements of S&C are already quite close to those of B&C at the end of the study, so it can be assumed that only a few more hours of training would be necessary for the sighted subjects to match them (within the limited task spectrum of the experimental setup). The assumption that the learning curve of the SSD is less saturated than that of the cane at the end of the study is supported by sighted subjects stating that the cane required less concentration at the end of the study (Q18, 1.8) than the SSD (Q18, 2.7). Furthermore, they expected less learning progress for the cane with “another 3 h of training” (Q23, 2.2) than for the SSD (Q23, 3.5), and also less than the learning progress they had already made with the cane during the study (Q22, 3.3). Exciting research questions are therefore whether the learning progress of the SSD would continue in a similar way, at which threshold value it would level off and whether this value would eventually be equal to or better than that of the cane. Note that the training time of 2 h in this study is far below that of many other publications on SS; one often-cited study, for example, reached 20–40 h of training with most subjects and 150 h with one particular subject [32].

Another aspect that confounds the interpretation of the data is the correlation between the two dependent variables time/log time and contacts. The reason for this is quite simple: faster walking paces lead to a higher number of contacts. A slow walking pace, on the other hand, allows more time to interpret information and, when approaching an obstacle, to come to a halt in time or to correct the path and avoid colliding with the obstacle. The subjects were asked to weight time and contacts equally. Yet these variables lack any common inherent value that would allow, for example, a potential contact to be traded off against a loss of time. Personal preference may also play a role in the weighting of the two variables: fear of colliding with objects (possibly overrepresented in sighted people due to unfamiliarity with the task) may lead to slower speeds. At the same time, the motivation to complete the task particularly quickly may lead to faster speeds but higher collision rates. Several subjects reported that towards the end of the study, they felt they had learned the device to the point where contacts were completely avoidable for them given some focus. This attitude may have biased the data, as can be observed in the fact that time increased in the sighted group with both aids towards the end of the study while the collisions continued to fall. It seems difficult to solve this problem merely by rephrasing the task without expressing the concrete value of an obstacle contact in relation to time (e.g., a collision could add 10 s to the time or lead to the exclusion of the trial from the evaluation).
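The first option mentioned, charging each contact a fixed amount of time, turns the two dependent variables into a single score. The hypothetical Python sketch below uses the 10 s example from the text and two made-up runs. It shows that the ranking of a fast run with contacts versus a slow run without contacts depends on the chosen penalty, which is exactly the value the task formulation would have to make explicit.

```python
def penalised_time(time_s, contacts, penalty_s=10.0):
    """Single score in seconds: run time plus a fixed time penalty per contact."""
    return time_s + penalty_s * contacts


# Two hypothetical runs: a fast run with two contacts vs. a slow run without contacts.
fast_careless = (40.0, 2)
slow_careful = (55.0, 0)

for penalty in (5.0, 10.0):
    a = penalised_time(*fast_careless, penalty_s=penalty)
    b = penalised_time(*slow_careful, penalty_s=penalty)
    better = "fast run" if a < b else "slow run"
    print(f"penalty {penalty:.0f} s: {a:.0f} s vs {b:.0f} s -> {better} scores better")
```

With a 5 s penalty the fast run wins (50 s vs 55 s). With 10 s the careful run wins (60 s vs 55 s).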

5.2. Usability & Acceptance

While there is an ongoing debate about how to interpret the SUS and what value the scoring system has in the first place, the scores of the SSD (50) and the cane (53) are comparatively low. They can therefore only be interpreted as “OK” and, in the context of all systems tested with this score, tend to fall into the 20th or even 10th percentile [69]. It should be noted, however, that the score is rarely used to evaluate assistive devices at all, which may partly explain the generally poorer performance of such devices. The score is, however, suitable to “compare two versions of an application” [69]: the presented results therefore indicate that usability does not fundamentally differ between the two tested aids in this rather small experimental group. This equivalence can also be assessed from other questionnaire items, most of which show very small deviations:

Looking at the Likert scale averages of the entire study population (blind and sighted), the biggest deviations (Δ ≥ 0.5) can be observed in the expected recognisability of a “visual impairment because of the aid”, which is rated much lower (Q15, Δ = 2.0) for the SSD (Q15, 1.8) than for the cane (Q15, 3.8). Just as in the sighted group, the average of both groups stated that the cane was easier to use (Q3, Δ = 0.8) and required less concentration at the beginning of the study (Q17, Δ = 0.8), whereas this difference becomes negligible by the end of the study (Q18, Δ = 0.3). Last but not least, the two aids differed in Q11 (“I had a lot of fun using the aid”), in which the SSD scored 0.7 points better.

Exemplary statements have already been presented in the results section, summarised and classified into topics. They support the theses that the Unfolding Space Glove achieves its goal of being easier and quicker to learn than many other SSDs while providing users with a positive user experience. However, the sample size and the survey methods are not sufficient for more in-depth analyses. The presentation should rather serve the purpose of completeness and provide insights into how the learning process was perceived by the test persons in the course of the study.

5.3. Distal Attribution and Cognitive Processing

The phenomenon of distal attribution (sometimes called externalisation of the stimulus), in simplified terms, describes the case in which users of an SSD report that they no longer consciously perceive the stimulus at the application site on the body (here, e.g., the hand), but instead refer to the perceived objects in space (distal, i.e., outside the body). This can also be observed, for example, when sighted people describe visual stimuli: they do not describe the perception of stimuli on their retina, but instead the things they see at their position in space. Distal attribution was first mentioned in early publications by Paul Bach-y-Rita [31,33], in which participants received 20–40 h of training (one individual even 150 h) with a TVSS, and has also been described in other publications, e.g., on AVSS devices [40]. Ever since, it has been discussed in multifaceted and sometimes even philosophical discourses and has been the topic of many experimental investigations [70,71,72,73]. As already described in the Results section, the statements do not indicate the existence of this specific attribution. However, they do show a high degree of spatio-motor coupling of the stimuli and suggest the emergence of distal-like localisation patterns. The wearing time of only 2 h was, however, comparatively short, and studies with longer wearing times would be of great interest on this topic.

5.4. Compliance with the Criteria Set

In the introduction to this paper, 14 criteria were defined that are important for the successful development of an SSD (see Appendix A for a description of them). Chebat et al. [55], who collected most of them, originally did so to point out problems of known SSD proposals. These criteria played a crucial role in the design and development process of the Unfolding Space Glove from the very start. Now, with the findings of this study in mind, it is time to examine to what degree the glove meets these criteria by classifying six of its key aspects (with the numbers of the respective criteria in parentheses):

Open Source and Open Access. The research, the material, the code and all blueprints of the device are open source and open access. In the long run, this can lead to lower costs (1) of the SSD and wider dissemination (4), as development expenses are already eliminated; the device could theoretically even be reproduced by the users themselves.

Low Complexity of Information. The complexity, and thus the resolution, of the information provided by the SSD is deliberately kept low in order to offer low entry barriers when learning (1) the SSD. The user experience and enjoyment (13) is not affected much by the cognitive load (5). This requirement naturally conflicts with the problem of low resolution (9).

Usage of 3D Input. The use of spatial depth (7) images from a 3D camera inherently provides information suitable for locomotion, reduces the cognitive load (5) of extracting such information from conventional two-dimensional images and is independent of lighting conditions and poor contrast (8).

Using Responsive Vibratory Actuators as Output. Vibration patterns can be felt (in different qualities) all over the body, are non-invasive and non-critical, and have no medically proven harmful effects. Linear resonance actuators (LRAs) offer low latency (3), are durable, do not heat up too much and are still cost-effective (10), although they require special integrated circuits to drive them.

Positioning at the Back of the Hand. The site of stimulation on the back of the hand, although disadvantaged by other factors such as the total available surface or the density of vibrotactile receptors [74], proved to be suitable with regard to several aspects: a fairly natural posture of the hand when using the device enables a discreet body posture, does not interfere with the overall aesthetic appearance (14) and preserves sensory and motor habits (12). The orientation of the sensor (6) on the back of the hand is expected to be quite accurate, as we can use our hands for detailed motor actions and have a high proprioceptive precision in our elbow and shoulder [75]. Last but not least, the hand has a high motor potential (11) (rotation and movement in three axes), facilitating the sensorimotor coupling process.

Thorough Product & Interaction Design. Good design does not only consist of the visible shell. Functionality, interaction design and product design must be considered holistically and in depth; in the end, they pay off in many respects apart from the aesthetic appearance (14) itself, such as almost all of the key aspects discussed in this section and the user experience and joy of use (13).

6. Conclusions

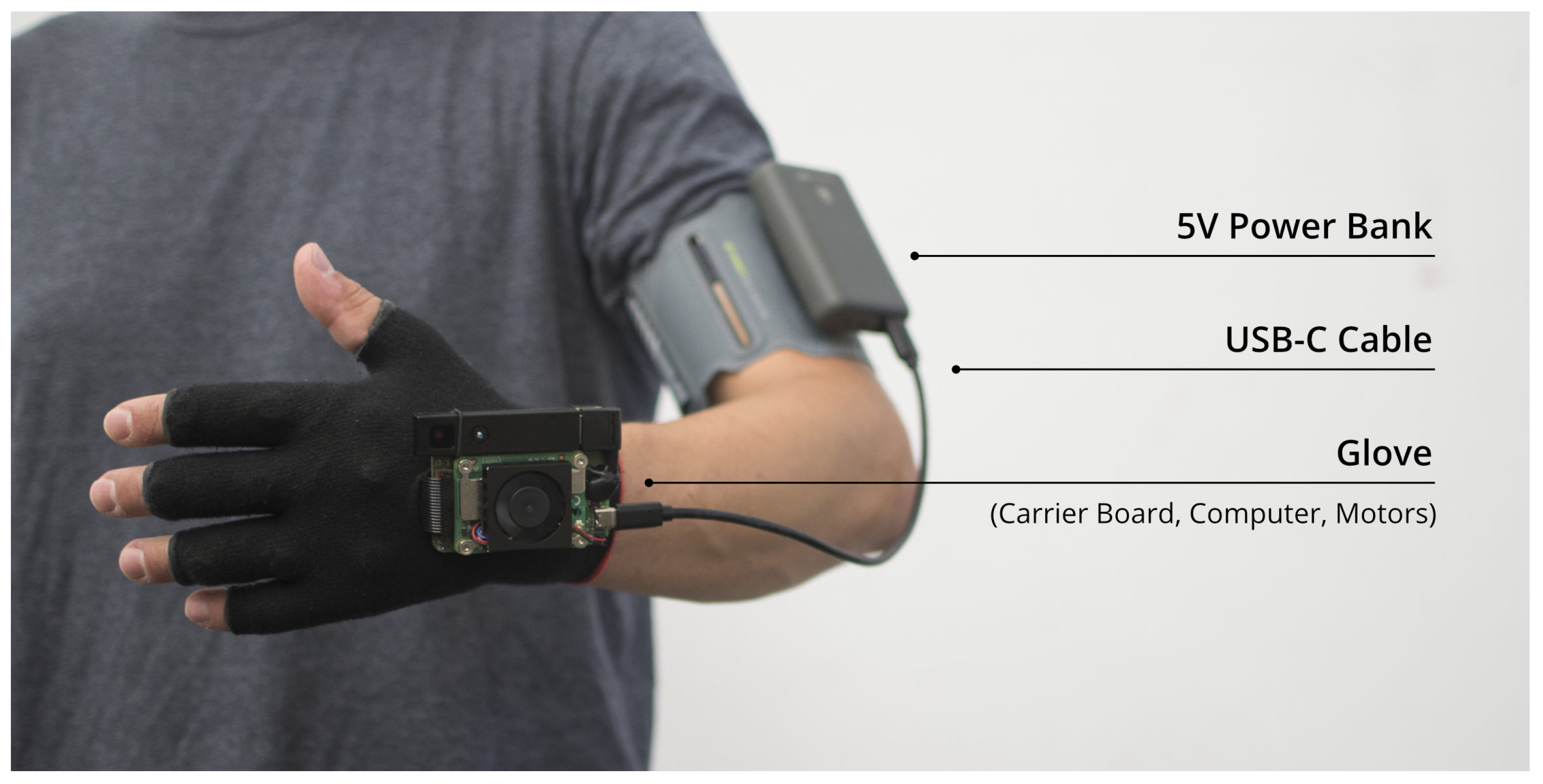

The Unfolding Space Glove, a novel wearable haptic spatio-visual sensory substitution system, has been presented in this paper. The glove transforms three-dimensional depth images from a time-of-flight camera into vibrotactile stimuli on the back of the hand. Blind users can thus haptically explore the depth of the space surrounding them and the obstacles contained therein by moving their hand. The device, in its somewhat limited functional scope, can already be used and tested without professional support and without the need for external hardware or specific premises. It is already highly portable and offers continuous and very immediate feedback, while its design is unobtrusive and discreet.

In a study with eight blind and six sighted (but blindfolded) subjects, the device was tested and evaluated in obstacle courses. It could be shown that all subjects were able to learn to use the device and successfully complete the courses presented to them. Handling has low entry barriers and can be learned almost intuitively within a few minutes, with the learning progress of blind and sighted subjects being fairly comparable. However, at the end of the study and after about 2 h of wearing the device, the sighted subjects were significantly slower (by about 54%) in solving the courses with the glove than with the white long cane, which they had worn and trained with for the same amount of time.

The device meets many basic requirements that a novel SSD has to fulfil in order to be accepted by the target group. This is also reflected in the fact that the participants reported a level of user satisfaction and usability that is—despite its different functions and complexity—quite comparable to that of the white long cane.

The results in the proposed experimental set-up are promising and confirm that depth information presented to the tactile system can be cognitively processed and used to strategically solve navigation tasks. It remains open how much improvement could be achieved with another two or more hours of training with the Unfolding Space Glove. On the other hand, the results are of limited applicability to real-world navigation for blind people: too many basic requirements for a navigation aid system (e.g., detection of ground-level objects) are not yet included in the functional spectrum of the device and would have to be implemented and tested in further research.