Identifying the Strength Level of Objects’ Tactile Attributes Using a Multi-Scale Convolutional Neural Network

Abstract

1. Introduction

- In real scenes, humans, who have a rich tactile perception system, obtain delicate sensory feedback by touching an object and form a specific sense of the strength level of each of its attributes. A network that is trained only on binary tactile labels, in contrast, reduces object attributes to a binary space and gains only a very rough understanding of the strength level of an object’s attribute [22].

2. Related Work

3. Materials

3.1. Robot Platform

- The ROS master is the core of ROS. It registers the names of nodes, services, and topics and maintains a parameter server.

- The Kinova node plans a reasonable path that moves the end of the Kinova manipulator to a fixed point, then commands the gripper to open and close on the object. The gripper continues to close after contacting the object and stops closing when the fixed force threshold is reached.

- The SynTouch node publishes the tactile data generated by the interaction between the tactile sensors and the object. During a complete exploration action, the two tactile sensors that move with the two-finger gripper physically interact with the object, and the node publishes the resulting sensor data to the ROS network at a frequency of 100 Hz.

- The judge determines whether the tactile sensor is in contact with the target object. When the ratio of the PAC signal value at the next moment to that at the previous moment is greater than 1.1 or less than 0.9, the initial touch is considered to have occurred and the data are published to the process node. The values 1.1 and 0.9 are experimentally measured thresholds for detecting the initial contact.

- The process node subscribes to the haptic data published by the SynTouch node and judges whether an initial contact has occurred. Once the condition is satisfied, it intercepts the data published by the SynTouch node and stacks them into a dual-channel haptic sample. Because the Kinova robotic arm must reach the preset position before performing the exploration action, the NumaTac returns useless data during this phase. To solve this problem, a sub-thread is established. The main thread continuously receives tactile data and judges whether the tactile sensor has made initial contact with the object. When the initial contact is made, the sub-thread waits for 3 s until all the sample data in the main thread have been received, where 3 s is the experimentally measured time required to collect a complete sample. The data are then intercepted to create haptic samples.
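
The contact test and the sample interception described in the list above can be sketched in a few lines. This is a minimal illustration and not the authors' code: the function names, the list-based buffers, the use of one sensor's PAC stream for detection, and the guard against a zero previous value are all assumptions. Only the 1.1 and 0.9 ratio thresholds, the 100 Hz rate, and the 3 s window come from the text.

```python
SAMPLE_RATE_HZ = 100      # publishing rate stated in the text
WINDOW_S = 3              # time needed to collect one complete sample
UPPER, LOWER = 1.1, 0.9   # experimentally measured ratio thresholds


def first_contact_index(pac):
    """Return the index of the first reading whose ratio to the previous
    reading is above UPPER or below LOWER, or None if there is none."""
    for i in range(1, len(pac)):
        prev = pac[i - 1]
        if prev == 0:          # assumption: skip readings with an undefined ratio
            continue
        ratio = pac[i] / prev
        if ratio > UPPER or ratio < LOWER:
            return i
    return None


def cut_sample(sensor_a, sensor_b, pac_a):
    """Cut the 3 s window that follows initial contact from both sensor
    streams and stack the two windows into one dual-channel sample."""
    start = first_contact_index(pac_a)
    n = SAMPLE_RATE_HZ * WINDOW_S          # 300 readings per window
    if start is None or start + n > min(len(sensor_a), len(sensor_b)):
        return None            # no contact yet, or window not fully received
    return [sensor_a[start:start + n], sensor_b[start:start + n]]
```

Under these assumptions, a window of 3 s at 100 Hz holds 300 readings per sensor, which is consistent with the 300-wide, two-channel network input listed in the parameter table.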

3.2. NumaTac Haptic Dataset

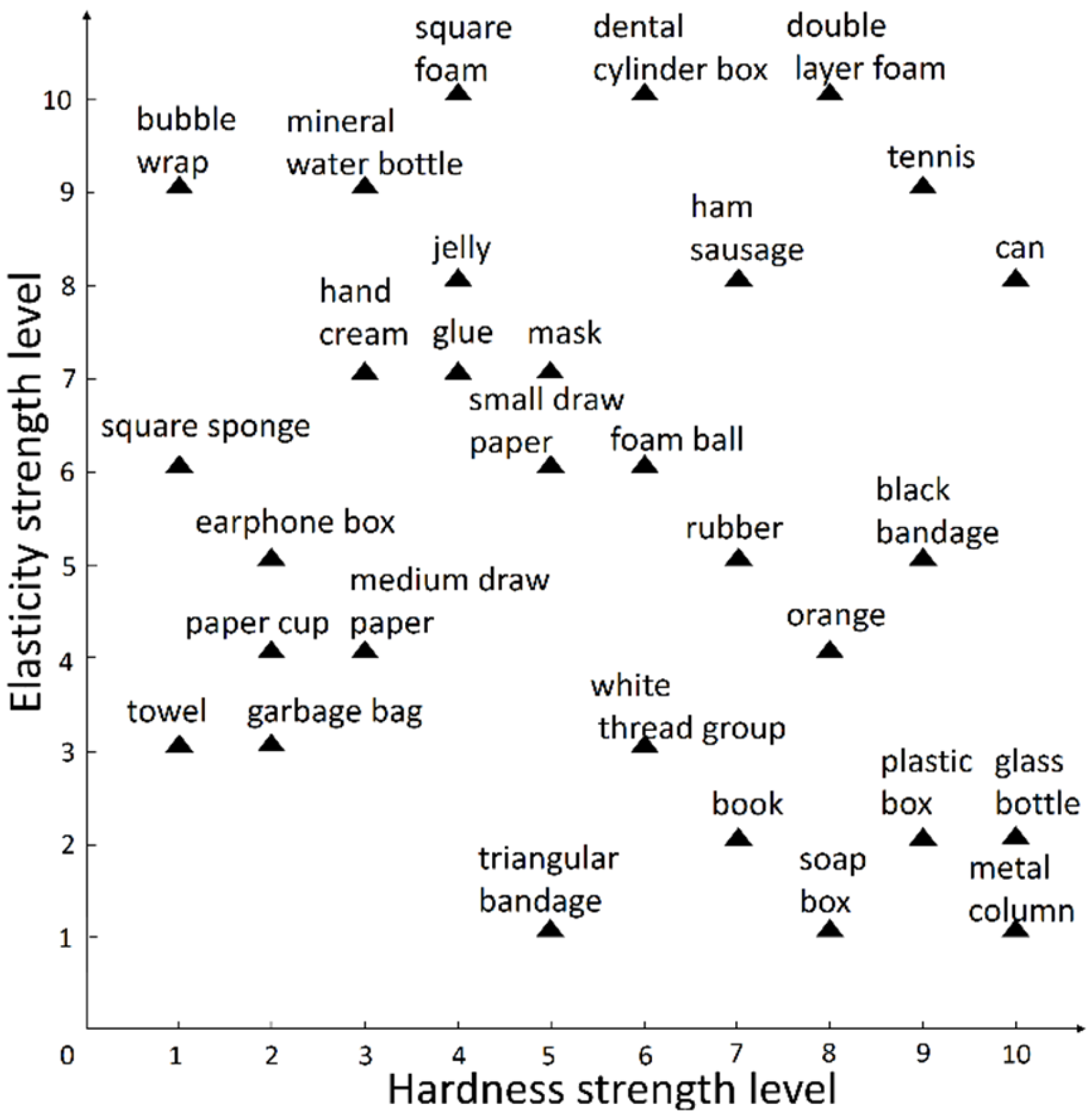

3.2.1. Objects

3.2.2. Data Collection Detail Settings

3.2.3. Data Preprocessing

3.2.4. Labels

4. Method

4.1. Multi-Scale Convolutional Neural Network Architecture

- The original feature map: the obtained feature map undergoes only a max-pooling operation with a kernel size of two, so that its size matches the outputs of the other two convolutional branches. No convolution is performed, which preserves the original information.

- The single-channel feature map: the output of the previous step is convolved by conv1_1, conv2_1, or conv3_1 (one per stage), max-pooled with a kernel size of two, and then multiplied by a weight coefficient. This coefficient is trained by the network and weights the single-channel output features.

- The multi-channel feature map: the output feature map of the previous step is convolved by conv1_2, conv2_2, or conv3_2 (one per stage), max-pooled with a kernel size of two, and then multiplied by a second weight coefficient. This coefficient is trained by the network and weights the multi-channel feature maps.
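
The three branches listed above can be sketched as one block in plain Python. This is an illustrative reading of the description, not the authors' implementation: the names `multi_scale_block`, `w_single`, and `w_multi` are hypothetical, the two weight coefficients are passed in as fixed numbers rather than trained, batch normalization and the Leaky_relu activation are omitted, and the pooling is assumed to move in steps of two because each stage halves the feature map.

```python
def max_pool2(ch):
    """2 x 2 max-pooling in steps of two (floor on odd sizes) of one 2-D channel."""
    h, w = len(ch) // 2, len(ch[0]) // 2
    return [[max(ch[2 * i][2 * j], ch[2 * i][2 * j + 1],
                 ch[2 * i + 1][2 * j], ch[2 * i + 1][2 * j + 1])
             for j in range(w)] for i in range(h)]


def conv3x3(x, kernels):
    """3 x 3 convolution with stride 1 and zero padding 1.
    x is a list of 2-D input channels; kernels[o][c] is the 3 x 3 kernel
    that maps input channel c to output channel o."""
    h, w = len(x[0]), len(x[0][0])
    out = []
    for per_input in kernels:
        ch = [[0.0] * w for _ in range(h)]
        for c, k in enumerate(per_input):
            for i in range(h):
                for j in range(w):
                    for di in (-1, 0, 1):
                        for dj in (-1, 0, 1):
                            ii, jj = i + di, j + dj
                            if 0 <= ii < h and 0 <= jj < w:
                                ch[i][j] += k[di + 1][dj + 1] * x[c][ii][jj]
        out.append(ch)
    return out


def scale(x, coeff):
    """Multiply every value of every channel by one weight coefficient."""
    return [[[coeff * v for v in row] for row in ch] for ch in x]


def multi_scale_block(x, k_single, k_multi, w_single, w_multi):
    """Concatenate the pooled input, the weighted single-channel branch,
    and the weighted multi-channel branch along the channel axis."""
    original = [max_pool2(ch) for ch in x]
    single = scale([max_pool2(ch) for ch in conv3x3(x, k_single)], w_single)
    multi = scale([max_pool2(ch) for ch in conv3x3(x, k_multi)], w_multi)
    return original + single + multi


# Toy input: 2 channels of size 4 x 6, with pass-through kernels (assumption).
ident = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
x = [[[float(r * 6 + c) for c in range(6)] for r in range(4)],
     [[1.0] * 6 for _ in range(4)]]
out = multi_scale_block(x, [[ident, ident]], [[ident, ident]] * 5, 0.5, 2.0)
print(len(out), len(out[0]), len(out[0][0]))  # 8 2 3
```

With two input channels, one single-channel output, and five multi-channel outputs, the block yields 2 + 1 + 5 = 8 channels at half the spatial size, which matches the channel count of the first concatenation layer in the parameter table.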

4.2. Multi-Scale Convolutional Neural Network Parameters

4.3. Calculation Process

5. Experiment and Results

5.1. Object Attribute Strength Level Recognition Experiment

5.2. Analysis of Results

5.2.1. Accuracy

5.2.2. Precision

5.2.3. Recall

5.2.4. F1_Score

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Li, F.-F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Bai, Z.X.; Zhang, X.L. Speaker recognition based on deep learning: An overview. Neural Netw. 2021, 140, 65–99.

- Li, Q.; Kroemer, O.; Su, Z.; Veiga, F.F.; Kaboli, M.; Ritter, H.J. A Review of Tactile Information: Perception and Action through Touch. IEEE Trans. Robot. 2020, 36, 1619–1634.

- Lederman, S.J.; Klatzky, R.L. Extracting object properties through haptic exploration. Acta Psychol. 1993, 84, 29–40.

- Komeno, N.; Matsubara, T. Tactile perception based on injected vibration in soft sensor. IEEE Robot. Autom. Lett. 2021, 6, 5365–5372.

- Zhang, Y.; Kan, Z.; Tse, A.Y.; Yang, Y.; Wang, M.Y. Fingervision tactile sensor design and slip detection using convolutional lstm network. arXiv 2018, arXiv:1810.02653.

- Siegel, D.; Garabieta, I.; Hollerbach, J.M. An integrated tactile and thermal sensor. In Proceedings of the IEEE International Conference on Robotics & Automation, San Francisco, CA, USA, 7–10 April 1986; pp. 1286–1291.

- Tsuji, S.; Kohama, T. Using a convolutional neural network to construct a pen-type tactile sensor system for roughness recognition. Sens. Actuators A-Phys. 2019, 291, 7–12.

- Kaboli, M.; Cheng, G. Robust Tactile Descriptors for Discriminating Objects from Textural Properties via Artificial Robotic Skin. IEEE Trans. Robot. 2018, 34, 985–1003.

- Fishel, J.A.; Loeb, G.E. Bayesian exploration for intelligent identification of textures. Front. Neurorobotics 2012, 6, 4.

- Spiers, A.J.; Liarokapis, M.V.; Calli, B.; Dollar, A.M. Single-Grasp Object Classification and Feature Extraction with Simple Robot Hands and Tactile Sensors. IEEE Trans. Haptics 2016, 9, 207–220.

- Lepora, N.F. Biomimetic Active Touch with Fingertips and Whiskers. IEEE Trans. Haptics 2016, 9, 170–183.

- Okamura, A.M.; Cutkosky, M.R. Feature detection for haptic exploration with robotic fingers. Int. J. Robot. Res. 2001, 20, 925–938.

- Chu, V.; McMahon, I.; Riano, L.; McDonald, C.G.; He, Q.; Perez-Tejada, J.M.; Arrigo, M.; Fitter, N.; Nappo, J.C.; Darrell, T.; et al. Using robotic exploratory procedures to learn the meaning of haptic adjectives. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 3048–3055.

- Chu, V.; McMahon, I.; Riano, L.; McDonald, C.G.; He, Q.; Perez-Tejada, J.M.; Arrigo, M.; Darrell, T.; Kuchenbecker, K.J. Robotic learning of haptic adjectives through physical interaction. Robot. Auton. Syst. 2015, 63, 279–292.

- Okatani, T.; Takahashi, H.; Noda, K.; Takahata, T.; Matsumoto, K.; Shimoyama, I. A Tactile Sensor Using Piezoresistive Beams for Detection of the Coefficient of Static Friction. Sensors 2016, 16, 718.

- Lin, W.J.; Lo, S.H.; Young, H.-T.; Hung, C.-L. Evaluation of Deep Learning Neural Networks for Surface Roughness Prediction Using Vibration Signal Analysis. Appl. Sci. 2019, 9, 1462.

- Sinapov, J.; Sukhoy, V.; Sahai, R.; Stoytchev, A. Vibrotactile Recognition and Categorization of Surfaces by a Humanoid Robot. IEEE Trans. Robot. 2011, 27, 488–497.

- Feng, J.H.; Jiang, Q. Slip and roughness detection of robotic fingertip based on FBG. Sens. Actuators A-Phys. 2019, 287, 143–149.

- Gandarias, J.M.; Gómez-de-Gabriel, J.M.; García-Cerezo, A.J. Enhancing perception with tactile object recognition in adaptive grippers for human-robot interaction. Sensors 2018, 18, 692.

- Lu, J. Multi-Task CNN Model for Attribute Prediction. IEEE Trans. Multimed. 2015, 17, 1949–1959.

- Richardson, B.A.; Kuchenbecker, K.J. Learning to predict perceptual distributions of haptic adjectives. Front. Neurorobotics 2020, 13, 116.

- Melchiorri, C. Slip detection and control using tactile and force sensors. IEEE/ASME Trans. Mechatron. 2000, 5, 235–243.

- Su, Z.; Hausman, K.; Chebotar, Y.; Molchanov, A.; Loeb, G.E.; Sukhatme, G.S.; Schaal, S. Force Estimation and slip detection/classification for grip control using a biomimetic tactile sensor. In Proceedings of the 2015 IEEE-RAS 15th International Conference on Humanoid Robots (Humanoids), Seoul, Korea, 3–5 November 2015; pp. 297–303.

- Luo, S.; Mou, W.X.; Althoefer, K.; Liu, H. Novel Tactile-SIFT Descriptor for Object Shape Recognition. IEEE Sens. J. 2015, 15, 5001–5009.

- Gandarias, J.M.; Gomez-de-Gabriel, J.M.; Garcia-Cerezo, A. Human and Object recognition with a high-resolution tactile sensor. In Proceedings of the 2017 IEEE Sensors, Glasgow, UK, 29 October–1 November 2017; pp. 981–983.

- Pastor, F.; Garcia-Gonzalez, J.; Gandarias, J.M.; Medina, D.; Closas, P.; Garcia-Cerezo, A.J.; Gomez-De-Gabriel, J.M. Bayesian and Neural Inference on LSTM-Based Object Recognition from Tactile and Kinesthetic Information. IEEE Robot. Autom. Lett. 2021, 6, 231–238.

- Okamura, A.M.; Turner, M.L.; Cutkosky, M.R. Haptic exploration of objects with rolling and sliding. In Proceedings of the International Conference on Robotics and Automation, Albuquerque, NM, USA, 25–25 April 1997; pp. 2485–2490.

- Dong, S.Y.; Yuan, W.Z.; Adelson, E.H. Improved gelsight tactile sensor for measuring geometry and slip. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 137–144.

- Schmitz, A.; Bansho, Y.; Noda, K.; Iwata, H.; Ogata, T.; Sugano, S. Tactile object recognition using deep learning and dropout. In Proceedings of the 2014 IEEE-RAS International Conference on Humanoid Robots, Madrid, Spain, 18–20 November 2014; pp. 1044–1050.

- Yuan, W.; Zhu, C.; Owens, A.; Srinivasan, M.A.; Adelson, E.H. Shape-independent hardness estimation using deep learning and a GelSight tactile sensor. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 951–958.

- Han, D.; Nie, H.; Chen, J.; Chen, M.; Deng, Z.; Zhang, J. Multi-modal haptic image recognition based on deep learning. Sens. Rev. 2018, 38, 486–493.

- Hui, W.; Li, H. Robotic tactile recognition and adaptive grasping control based on CNN-LSTM. Yi Qi Yi Biao Xue Bao/Chin. J. Sci. Instrum. 2019, 40, 211–218.

- Pastor, F.; Gandarias, J.M.; García-Cerezo, A.J.; Gómez-De-Gabriel, J.M. Using 3D convolutional neural networks for tactile object recognition with robotic palpation. Sensors 2019, 19, 5356.

- Tiest, W.M.B.; Kappers, A.M.L. Analysis of haptic perception of materials by multidimensional scaling and physical measurements of roughness and compressibility. Acta Psychol. 2006, 121, 1–20.

- Hollins, M.; Faldowski, R.; Rao, S.; Young, F. Perceptual dimensions of tactile surface texture: A multidimensional scaling analysis. Percept. Psychophys. 1993, 54, 697–705.

- Hollins, M.; Bensmaïa, S.; Karlof, K.; Young, F. Individual differences in perceptual space for tactile textures: Evidence from multidimensional scaling. Percept. Psychophys. 2000, 62, 1534–1544.

- Zhang, Z.H.; Mei, X.S.; Bian, X.; Cai, H.; Ti, J. Development of an intelligent interaction service robot using ROS. In Proceedings of the 2016 IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Xi’an, China, 3–5 October 2016; pp. 1738–1742.

- Tan, M.X.; Chen, B.; Pang, R.M.; Vasudevan, V.; Sandler, M.; Howard, A.; Le, Q.V. MnasNet: Platform-aware neural architecture search for mobile. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2815–2823.

- He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ma, N.N.; Zhang, X.Y.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In Proceedings of the European Conference on Computer Vision—ECCV, Munich, Germany, 8–14 September 2018; pp. 122–138.

- Sandler, M.; Howard, A.; Zhu, M.L. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

| Layers | Operation | Parameters (Size, Stride, Padding) | Next Operation | Output |
|---|---|---|---|---|
| Input | Input; max-pooling | 2 × 2, 0, 0 (max-pooling) | | 23 × 300 × 2; 11 × 150 × 2 |
| Conv1_1 | conv; max-pooling | 3 × 3 × 1, 1, 1; 2 × 2, 0, 0 | BN | 23 × 300 × 1; 11 × 150 × 1 |
| Conv1_2 | conv; max-pooling | 3 × 3 × 5, 1, 1; 2 × 2, 0, 0 | BN | 23 × 300 × 5; 11 × 150 × 5 |
| Cat_layer1 | | | Leaky_relu | 11 × 150 × 8 |
| Conv2_1 | conv; max-pooling | 3 × 3 × 1, 1, 1; 2 × 2, 0, 0 | BN | 11 × 150 × 1; 5 × 75 × 1 |
| Conv2_2 | conv; max-pooling | 3 × 3 × 15, 1, 1; 2 × 2, 0, 0 | BN | 11 × 150 × 15; 5 × 75 × 15 |
| Cat_layer2 | | | Leaky_relu | 5 × 75 × 24 |
| Conv3_1 | conv; max-pooling | 3 × 3 × 1, 1, 1; 2 × 2, 0, 0 | BN | 5 × 75 × 1; 2 × 37 × 1 |
| Conv3_2 | conv; max-pooling | 3 × 3 × 32, 1, 1; 2 × 2, 0, 0 | BN | 5 × 75 × 32; 2 × 37 × 32 |
| Cat_layer3 | | | Leaky_relu | 2 × 37 × 57 |
| Fc1 | | (4218, 512) | BN | 512 |
| Fc2 | | (512, 20) | BN | 20 |
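
The output sizes in the parameter table can be checked with a short piece of arithmetic. The sketch below is only a bookkeeping aid under two assumptions: each max-pooling halves height and width with floor rounding, and each concatenation layer stacks the channels carried over from the previous stage, one single-channel map, and the multi-channel maps. The function names are hypothetical.

```python
def pool(size):
    """Spatial size after 2 x 2 max-pooling with floor rounding."""
    h, w = size
    return (h // 2, w // 2)


def trace_shapes(height=23, width=300, in_channels=2, multi_channels=(5, 15, 32)):
    """Follow the feature-map size through the three concatenation layers."""
    size, channels = (height, width), in_channels
    stages = []
    for extra in multi_channels:
        size = pool(size)
        # carried-over channels + one single-channel map + multi-channel maps
        channels = channels + 1 + extra
        stages.append((size[0], size[1], channels))
    flat = size[0] * size[1] * channels    # input width of the first FC layer
    return stages, flat


stages, flat = trace_shapes()
print(stages)  # [(11, 150, 8), (5, 75, 24), (2, 37, 57)]
print(flat)    # 4218
```

The flattened size 2 × 37 × 57 = 4218 agrees with the input dimension listed for Fc1.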

| Net | Level | Elasticity Accuracy (%) | Elasticity Precision (%) | Elasticity Recall (%) | Elasticity F1_Score (%) | Hardness Accuracy (%) | Hardness Precision (%) | Hardness Recall (%) | Hardness F1_Score (%) |
|---|---|---|---|---|---|---|---|---|---|
| Multi-scale convolutional neural network | 1 | 90 | 95 | 77 | 85 | 95 | 95 | 89 | 92 |
| | 2 | | 85 | 98 | 91 | | 87 | 97 | 92 |
| | 3 | | 80 | 74 | 77 | | 93 | 95 | 94 |
| | 4 | | 85 | 89 | 87 | | 93 | 99 | 95 |
| | 5 | | 98 | 99 | 98 | | 96 | 100 | 98 |
| | 6 | | 98 | 82 | 89 | | 99 | 95 | 97 |
| | 7 | | 94 | 96 | 95 | | 95 | 96 | 95 |
| | 8 | | 82 | 97 | 89 | | 98 | 81 | 89 |
| | 9 | | 93 | 95 | 94 | | 97 | 99 | 98 |
| | 10 | | 95 | 94 | 94 | | 99 | 99 | 99 |
| Mnasnet1_0 | 1 | 81 | 98 | 79 | 87 | 81 | 99 | 83 | 91 |
| | 2 | | 98 | 77 | 87 | | 100 | 81 | 90 |
| | 3 | | 95 | 78 | 86 | | 35 | 99 | 52 |
| | 4 | | 98 | 81 | 89 | | 99 | 82 | 90 |
| | 5 | | 99 | 79 | 88 | | 99 | 76 | 86 |
| | 6 | | 99 | 79 | 88 | | 100 | 78 | 88 |
| | 7 | | 99 | 77 | 86 | | 99 | 83 | 91 |
| | 8 | | 99 | 78 | 87 | | 98 | 75 | 85 |
| | 9 | | 35 | 99 | 52 | | 100 | 75 | 85 |
| | 10 | | 100 | 80 | 89 | | 99 | 81 | 89 |
| Resnet18 | 1 | 86 | 98 | 91 | 94 | 81 | 100 | 76 | 86 |
| | 2 | | 65 | 99 | 78 | | 83 | 89 | 86 |
| | 3 | | 94 | 75 | 83 | | 93 | 75 | 83 |
| | 4 | | 83 | 91 | 87 | | 96 | 77 | 86 |
| | 5 | | 80 | 96 | 87 | | 98 | 79 | 87 |
| | 6 | | 99 | 81 | 89 | | 100 | 77 | 87 |
| | 7 | | 93 | 83 | 87 | | 39 | 99 | 56 |
| | 8 | | 80 | 84 | 82 | | 100 | 79 | 88 |
| | 9 | | 87 | 82 | 85 | | 100 | 81 | 89 |
| | 10 | | 100 | 73 | 85 | | 98 | 81 | 88 |
| Shufflenet_v2 | 1 | 85 | 76 | 81 | 78 | 85 | 88 | 86 | 87 |
| | 2 | | 85 | 93 | 89 | | 63 | 90 | 74 |
| | 3 | | 90 | 81 | 86 | | 90 | 81 | 85 |
| | 4 | | 88 | 91 | 89 | | 86 | 82 | 84 |
| | 5 | | 85 | 89 | 87 | | 86 | 93 | 90 |
| | 6 | | 88 | 87 | 88 | | 94 | 85 | 90 |
| | 7 | | 82 | 84 | 83 | | 83 | 75 | 79 |
| | 8 | | 79 | 71 | 75 | | 89 | 82 | 85 |
| | 9 | | 91 | 83 | 87 | | 93 | 95 | 94 |
| | 10 | | 85 | 88 | 86 | | 93 | 85 | 89 |
| Mobilenet_v2 | 1 | 89 | 96 | 87 | 91 | 90 | 90 | 95 | 92 |
| | 2 | | 97 | 95 | 96 | | 99 | 91 | 95 |
| | 3 | | 71 | 95 | 81 | | 96 | 77 | 86 |
| | 4 | | 97 | 69 | 83 | | 99 | 88 | 93 |
| | 5 | | 97 | 97 | 97 | | 97 | 85 | 90 |
| | 6 | | 99 | 83 | 91 | | 76 | 95 | 85 |
| | 7 | | 97 | 90 | 93 | | 68 | 97 | 80 |
| | 8 | | 73 | 92 | 81 | | 97 | 93 | 95 |
| | 9 | | 94 | 90 | 92 | | 98 | 87 | 92 |
| | 10 | | 97 | 93 | 95 | | 99 | 95 | 97 |
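
As a reading aid for the results table, the F1 score is the harmonic mean of precision and recall. The sketch below applies that standard definition to three precision and recall pairs taken from the table; the function name is hypothetical, and because the table values are already rounded to whole percentages, recomputed scores may differ slightly from the reported ones on other rows.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (percentages in, percentage out)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) for elasticity: multi-scale network
# levels 1 and 2, then Mnasnet1_0 level 9.
rows = [(95, 77, 85), (85, 98, 91), (35, 99, 52)]
for precision, recall, reported in rows:
    print(precision, recall, round(f1_score(precision, recall)), reported)
```

The third row shows why both metrics matter: a recall of 99% with a precision of 35% still yields an F1 score of only about 52%.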


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, P.; Yu, G.; Shan, D.; Chen, Z.; Wang, X. Identifying the Strength Level of Objects’ Tactile Attributes Using a Multi-Scale Convolutional Neural Network. Sensors 2022, 22, 1908. https://doi.org/10.3390/s22051908