Sensors and Data Processing in Robotics

Editors

Prof. Dr. Frantisek Duchon

Collection Editor

Slovak University of Technology, Slovakia

Interests: industrial robotics; mobile robotics; vision; Industry 4.0; manufacturing

Prof. Dr. Peter Hubinsky

Collection Editor

Slovak University of Technology, Slovakia

Dr. Andrej Babinec

Collection Editor

Slovak University of Technology in Bratislava, Slovakia

Topical Collection Information

Dear colleagues,

Recently, notable advances have been recorded in sensors that enhance the senses and abilities of robots. Visual systems offer contextually richer information; force-torque sensors provide robots with a sense of touch; multimodal perception is being created for human–robot interaction (HRI); and sensors are being miniaturized, making it possible to create unique solutions for robots, drones, and other robotic devices. AI is also used to evaluate the information from the sensors.

Such advances are relevant for the creation of smarter robots capable of processing large amounts of data and ensuring their autonomous operation in many areas.

Prof. Dr. Frantisek Duchon

Dr. Peter Hubinsky

Dr. Andrej Babinec

Collection Editors

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once you are registered, proceed to the submission form. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 250 words) can be sent to the Editorial Office for assessment.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for submission of manuscripts is available on the Instructions for Authors page. Sensors is an international peer-reviewed open access semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript.

The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs).

Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Published Papers (22 papers)

Open Access Article

Research on Structural Optimization of High-Sensitivity Torque Sensors for Robotic Joints

by

Yizhou Chen, Shenglin Yu and Jinjie Xu

Viewed by 238

Abstract

To address the urgent need for real-time and high-precision torque perception in robotic manipulators operating in complex environments, this study focuses on the structural optimization design of joint torque sensors. By proposing a novel hourglass-hole spoke-type elastic body structure, a systematic parametric optimization study was conducted with the objectives of improving material utilization and output sensitivity. To enhance optimization efficiency, single-factor experiments and explanatory notes on parameter selection ranges were incorporated to identify factors significantly influencing the target response and to determine their appropriate experimental ranges. Building upon this, the Box–Behnken experimental design method was employed, combined with response surface methodology, to perform multi-objective optimization on the key dimensions of the elastic body. Experimental results demonstrate that the optimized sensor structure achieved a 13.1% improvement in material utilization and an 11.9% increase in sensitivity. The baseline sensitivity of the final sensor reached 0.558 mV/N·m, representing a 19.2% enhancement compared to the optimized dumbbell-hole structure, while material utilization was also improved by 3.1%. This study proposes a novel high-sensitivity hourglass-hole spoke-type elastic body configuration and establishes an efficient response surface optimization framework applicable to the structural design of joint torque sensors fabricated from linear elastic materials, offering new insights for the design and optimization of high-sensitivity torque sensors.
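
The abstract names the Box–Behnken experimental design as the basis of the response surface study. As a minimal sketch (not the authors' code), the Python below generates a Box–Behnken design in coded units for three to five factors, using the pairwise construction that matches the standard tables in that range. The factor centres and half-ranges in the usage lines are hypothetical placeholders, not the elastic-body dimensions from the paper.

```python
from __future__ import annotations

from itertools import combinations, product


def box_behnken(k: int, n_center: int = 3) -> list[tuple[int, ...]]:
    """Box-Behnken design in coded units (-1, 0, +1) for k = 3, 4 or 5 factors.

    Each pair of factors takes the four (+/-1, +/-1) combinations while all
    other factors stay at the centre level 0; n_center centre runs follow.
    Standard designs for k >= 6 use larger blocks, so they are not built here.
    """
    if not 3 <= k <= 5:
        raise ValueError("this pairwise construction covers 3 to 5 factors")
    runs: list[tuple[int, ...]] = []
    for i, j in combinations(range(k), 2):
        for a, b in product((-1, 1), repeat=2):
            point = [0] * k
            point[i], point[j] = a, b
            runs.append(tuple(point))
    runs.extend([tuple([0] * k)] * n_center)
    return runs


def decode(run, centres, half_ranges):
    """Map a coded run to physical units: x = centre + coded * half_range."""
    return tuple(c + x * h for x, c, h in zip(run, centres, half_ranges))


# Hypothetical example: three dimensions (mm) given as centre +/- half-range.
design = box_behnken(3)
for run in design:
    print(run, decode(run, centres=(10.0, 5.0, 2.0), half_ranges=(2.0, 1.0, 0.5)))
```

Each run would then be evaluated and a second-order polynomial response surface fitted to the results; that step is not shown here.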

Open Access Article

Calibration of Mobile Robots Using ATOM

by

Bruno Silva, Diogo Vieira, Manuel Gomes, Miguel Riem Oliveira and Eurico Pedrosa

Viewed by 1613

Abstract

The calibration of mobile manipulators requires accurate estimation of both the transformations provided by the localization system and the transformations between sensors and the motion coordinate system. Current works offer limited flexibility when dealing with mobile robotic systems with many different sensor modalities. In this work, we propose a calibration approach that simultaneously estimates these transformations, enabling precise calibration even when the localization system is imprecise. This approach is integrated into Atomic Transformations Optimization Method (ATOM), a versatile calibration framework designed for multi-sensor, multi-modal robotic systems. By formulating calibration as an extended optimization problem, ATOM estimates both sensor poses and calibration pattern positions. The proposed methodology is validated through simulations and real-world case studies, demonstrating its effectiveness in improving calibration accuracy for mobile manipulators equipped with diverse sensor modalities.

Open Access Article

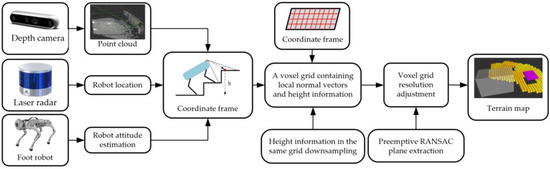

An Adaptive Two-Dimensional Voxel Terrain Mapping Method for Structured Environment

by

Hang Zhou, Peng Ping, Quan Shi and Hailong Chen

Cited by 4 | Viewed by 2553

Abstract

Accurate terrain mapping information is very important for foothold planning and motion control in legged robots. Therefore, this paper proposes a terrain mapping method suitable for indoor structured environments. First, by constructing a terrain mapping framework and adding estimation of the robot’s pose, the algorithm converts distance sensor measurements into terrain height information and maps them into a voxel grid, effectively reducing the influence of pose uncertainty in the robot system. Second, the height information mapped into the voxel grid is downsampled to reduce information redundancy. Finally, a preemptive random sample consensus (preemptive RANSAC) algorithm is used to segment planes from the height information of the environment, and the voxel grid is merged within each extracted plane to realize adaptive resolution 2D voxel terrain mapping (ARVTM) in the structured environment. Experiments show that the proposed mapping algorithm reduces the terrain mapping error by 62.7% and increases the terrain mapping speed by 25.1%. The algorithm can effectively identify and extract plane features in a structured environment, reducing the complexity of the terrain mapping information and improving the speed of terrain mapping.
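
The plane-extraction step relies on preemptive RANSAC. For orientation only, the Python sketch below shows plain (non-preemptive) RANSAC plane fitting; preemptive RANSAC additionally scores a fixed set of hypotheses on blocks of data and discards the weaker ones early, which is not reproduced here. The distance threshold, iteration count, and synthetic points are assumptions.

```python
import math
import random


def plane_from_points(p, q, r):
    """Plane through three points as (unit normal n, offset d) with n.x + d = 0.

    Returns None for (nearly) collinear samples, which define no unique plane.
    """
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-12:
        return None
    n = [c / norm for c in n]
    d = -sum(n[i] * p[i] for i in range(3))
    return n, d


def ransac_plane(points, threshold=0.01, iterations=200, seed=0):
    """Plain RANSAC: keep the 3-point plane hypothesis with the most inliers."""
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(iterations):
        model = plane_from_points(*rng.sample(points, 3))
        if model is None:
            continue
        n, d = model
        inliers = [p for p in points
                   if abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers


# Synthetic height data: a 10 x 10 floor patch at z = 0 plus 10 off-plane points.
floor = [(0.1 * i, 0.1 * j, 0.0) for i in range(10) for j in range(10)]
clutter = [(0.05 + 0.1 * k, 0.3, 0.5 + 0.05 * k) for k in range(10)]
model, inliers = ransac_plane(floor + clutter)
print(model, len(inliers))
```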

Open Access Article

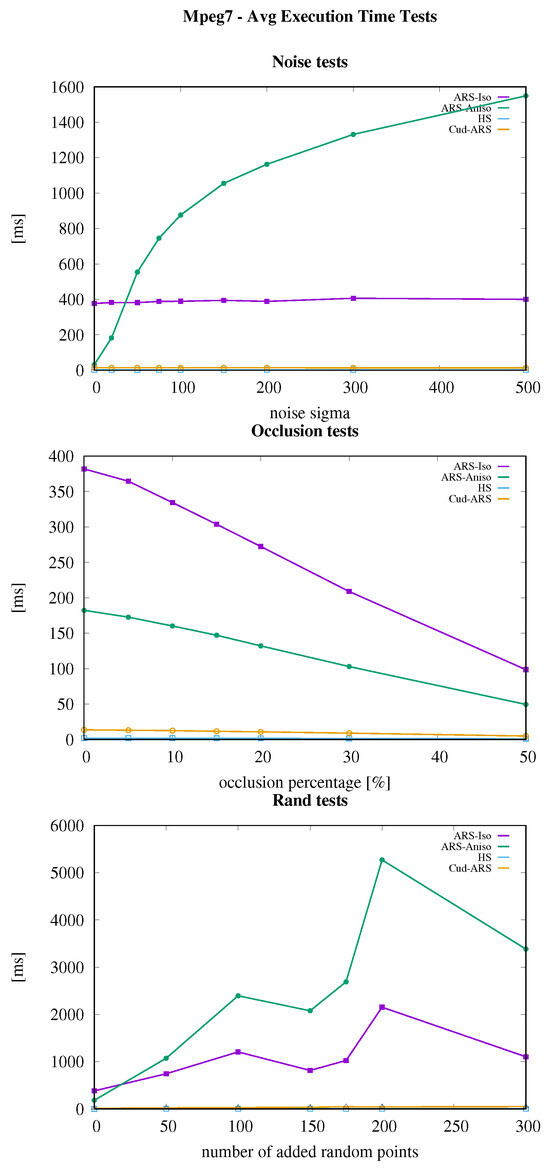

Accurate Global Point Cloud Registration Using GPU-Based Parallel Angular Radon Spectrum

by

Ernesto Fontana and Dario Lodi Rizzini

Cited by 2 | Viewed by 2465

Abstract

Accurate robot localization and mapping can be improved through the adoption of globally optimal registration methods, like the Angular Radon Spectrum (ARS). In this paper, we present Cud-ARS, an efficient variant of the ARS algorithm for 2D registration designed for parallel execution of the most computationally expensive steps on Nvidia™ Graphics Processing Units (GPUs). Cud-ARS is able to compute the ARS in parallel blocks, each associated with a subset of input points. We also propose a global branch-and-bound method for translation estimation. This novel parallel algorithm has been tested on multiple datasets. The proposed method is able to speed up the execution time by two orders of magnitude while obtaining more accurate results in rotation estimation than state-of-the-art correspondence-based algorithms. Our experiments also assess the potential of this novel approach in mapping applications, showing the contribution of GPU programming to efficient solutions of robotic tasks.

Full article

►▼

Show Figures

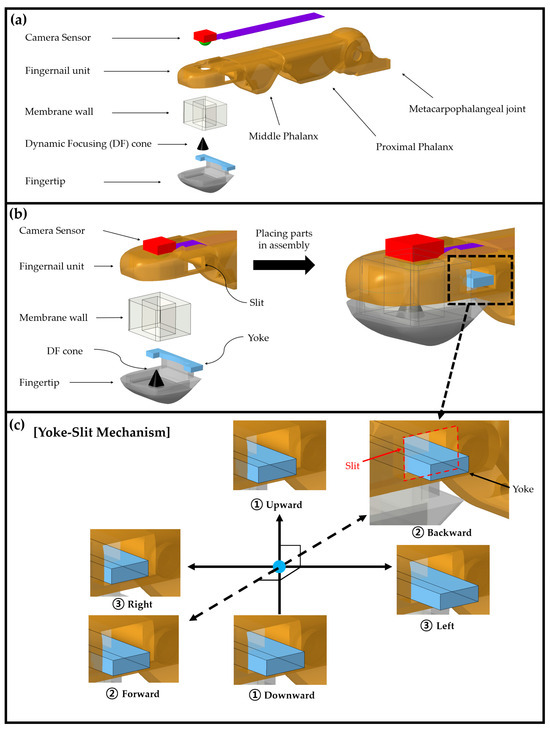

Open Access Article

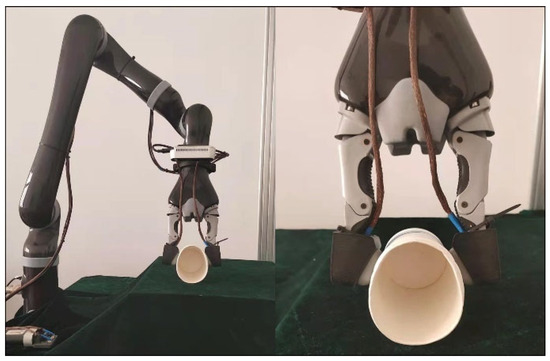

Dynamic Focusing (DF) Cone-Based Omnidirectional Fingertip Pressure Sensor with High Sensitivity in a Wide Pressure Range

by

Moo-Jung Seo and Jae-Chern Yoo

Cited by 1 | Viewed by 2087

Abstract

It is essential to detect pressure from a robot’s fingertip in every direction to ensure efficient and secure grasping of objects with diverse shapes. Nevertheless, creating a simply designed sensor that offers cost-effective, omnidirectional pressure sensing poses substantial difficulties, because it often requires more intricate mechanical solutions than designing non-omnidirectional fingertip pressure sensors. This paper introduces an innovative pressure sensor for fingertips. It uses a uniquely designed dynamic focusing cone to visually detect pressure with omnidirectional sensitivity. This approach enables cost-effective measurement of pressure from all sides of the fingertip. The experimental findings demonstrate the great potential of the newly introduced sensor. Its implementation is straightforward, and it offers high sensitivity (0.07 mm/N) in all directions and a broad pressure sensing range (up to 40 N) for robot fingertips.

Open Access Article

Analytical Models for Pose Estimate Variance of Planar Fiducial Markers for Mobile Robot Localisation

by

Roman Adámek, Martin Brablc, Patrik Vávra, Barnabás Dobossy, Martin Formánek and Filip Radil

Cited by 7 | Viewed by 4247

Abstract

Planar fiducial markers are commonly used to estimate the pose of a camera relative to the marker. This information can be combined with other sensor data to provide a global or local position estimate of the system in the environment using a state estimator such as the Kalman filter. To achieve accurate estimates, the observation noise covariance matrix must be properly configured to reflect the characteristics of the sensor output. However, the observation noise of the pose obtained from planar fiducial markers varies across the measurement range, and this must be taken into account during sensor fusion to provide a reliable estimate. In this work, we present experimental measurements of fiducial markers in real and simulated scenarios for 2D pose estimation. Based on these measurements, we propose analytical functions that approximate the variances of pose estimates. We demonstrate the effectiveness of our approach in a 2D robot localisation experiment, where we present a method for estimating covariance model parameters based on user measurements and a technique for fusing pose estimates from multiple markers.
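
The paper fits analytical functions to the variance of marker-based pose estimates and feeds them to a Kalman filter. The actual functions are given in the paper; the one-dimensional Python sketch below instead assumes a hypothetical quadratic growth of variance with marker distance (coefficients `a` and `b` are invented) to show how a distance-dependent measurement variance `r` changes the weight each marker observation receives.

```python
def marker_variance(distance_m, a=1e-4, b=4e-4):
    """Hypothetical variance model (m^2): grows quadratically with marker distance."""
    return a + b * distance_m ** 2


def kalman_update(x, p, z, r):
    """Scalar Kalman measurement update with prior (x, p) and measurement (z, r)."""
    k = p / (p + r)  # Kalman gain: near 1 when the measurement is trustworthy
    return x + k * (z - x), (1.0 - k) * p


def fuse_markers(x, p, observations):
    """Sequentially fuse (measured_position, marker_distance) observations."""
    for z, distance in observations:
        x, p = kalman_update(x, p, z, marker_variance(distance))
    return x, p


# A near and a far marker report slightly different positions (metres).
x, p = fuse_markers(0.0, 1.0, [(1.02, 0.5), (0.95, 3.0)])
print(x, p)
```

With these assumed coefficients the fused estimate stays much closer to the near marker's reading, because the far marker's larger variance yields a small gain.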

Open Access Article

Identifying the Strength Level of Objects’ Tactile Attributes Using a Multi-Scale Convolutional Neural Network

by

Peng Zhang, Guoqi Yu, Dongri Shan, Zhenxue Chen and Xiaofang Wang

Cited by 3 | Viewed by 2975

Abstract

Most existing research focuses on the binary tactile attributes of objects and ignores the strength level of those attributes. To address this problem, this paper establishes a tactile dataset of the strength levels of objects’ elasticity and hardness attributes to make up for the lack of relevant data, and proposes a multi-scale convolutional neural network to identify the strength level of object attributes. The network recognizes the different attributes and distinguishes the strength levels of the same attribute by fusing the original features of the data, i.e., its single-channel and multi-channel features. Several evaluation methods were used to compare the network with multiple models in terms of the strength levels of elasticity and hardness. The results show that our network performs better in terms of accuracy. Among the samples predicted as positive, a higher proportion are truly positive, that is, the precision is better. Among the truly positive samples, a higher proportion are correctly predicted, that is, the recall is better. Finally, the recognition rate for all classes is higher in terms of the F1 score. For the overall sample, the multi-scale convolutional neural network has a higher recognition rate, and its ability to recognize each strength level is more stable.
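
The precision, recall, and F1 comparisons in this abstract follow the standard per-class definitions. A small Python sketch, treating each strength level as a class label (the labels and predictions below are made up for illustration):

```python
from collections import Counter


def per_class_scores(y_true, y_pred):
    """Return {label: (precision, recall, f1)} for every label that occurs."""
    true_pos = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    predicted = Counter(y_pred)  # how often each label was predicted
    actual = Counter(y_true)     # how often each label truly occurs
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        precision = true_pos[label] / predicted[label] if predicted[label] else 0.0
        recall = true_pos[label] / actual[label] if actual[label] else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        scores[label] = (precision, recall, f1)
    return scores


# Hypothetical strength levels: 0 = low, 1 = medium, 2 = high.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
for level, (p, r, f1) in per_class_scores(y_true, y_pred).items():
    print(f"level {level}: precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

Precision divides correct predictions of a level by all predictions of that level; recall divides them by all true samples of that level; F1 is their harmonic mean.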

Open Access Article

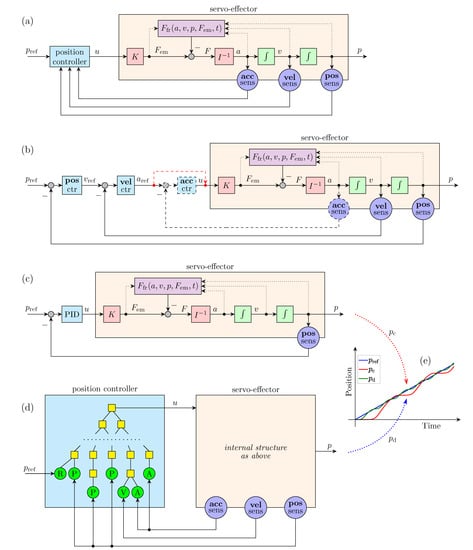

Discovering Stick-Slip-Resistant Servo Control Algorithm Using Genetic Programming

by

Andrzej Bożek

Cited by 3 | Viewed by 3437

Abstract

Stick-slip is one of the negative phenomena caused by friction in servo systems. It is a consequence of complicated nonlinear friction characteristics, especially the so-called Stribeck effect. Much research has been done on control algorithms that suppress stick-slip, but no simple solution has been found. In this work, a new approach based on genetic programming (GP) is proposed. GP is a machine learning technique that constructs symbolic representations of programs or expressions through an evolutionary process. In this way, a servo control algorithm that optimally suppresses stick-slip is discovered. GP training is conducted on a simulated servo system, as real-time experiments would take too long. The feedback for the control algorithm is based on position, velocity, and acceleration sensors. Variants with full and reduced sensor sets are considered. Ideal and quantized position measurements are also analyzed. The results reveal that GP can successfully discover a control algorithm that effectively suppresses stick-slip. However, this is not an easy task, and a relatively large population and a large number of generations are required. Real (quantized) measurement results in worse control quality. Acceleration feedback has no apparent impact on the algorithms’ performance, while velocity feedback is important.

Open Access Article

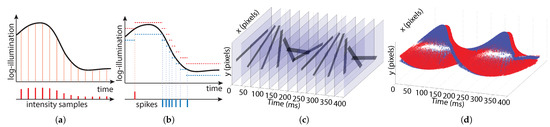

ESPEE: Event-Based Sensor Pose Estimation Using an Extended Kalman Filter

by

Fabien Colonnier, Luca Della Vedova and Garrick Orchard

Cited by 13 | Viewed by 4624

Abstract

Event-based vision sensors show great promise for use in embedded applications requiring low-latency passive sensing at a low computational cost. In this paper, we present an event-based algorithm that relies on an Extended Kalman Filter for six-degree-of-freedom (6-DoF) sensor pose estimation. The algorithm updates the sensor pose event by event with low latency (worst case of less than 2 μs on an FPGA). Using a single handheld sensor, we test the algorithm on multiple recordings, ranging from a high-contrast printed planar scene to a more natural scene consisting of objects viewed from above. The pose is accurately estimated under rapid motions, up to 2.7 m/s. Thereafter, an extension to multiple sensors is described and tested, highlighting the improved performance of such a setup, as well as the integration with an off-the-shelf mapping algorithm to allow point cloud updates with a 3D scene and enhance the potential applications of this visual odometry solution.

Open Access Article

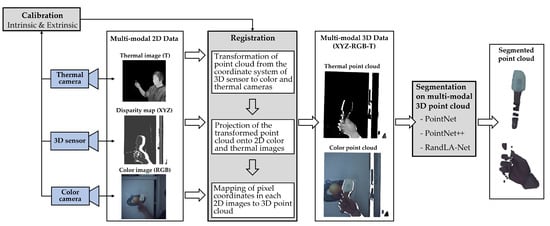

Point Cloud Hand–Object Segmentation Using Multimodal Imaging with Thermal and Color Data for Safe Robotic Object Handover

by

Yan Zhang, Steffen Müller, Benedict Stephan, Horst-Michael Gross and Gunther Notni

Cited by 16 | Viewed by 5067

Abstract

This paper presents an application of neural networks operating on multimodal 3D data (3D point cloud, RGB, thermal) to effectively and precisely segment human hands and objects held in hand to realize a safe human–robot object handover. We discuss the problems encountered in building a multimodal sensor system, with a focus on the calibration and alignment of a set of cameras including RGB, thermal, and NIR cameras. We propose the use of a copper–plastic chessboard calibration target with an internal active light source (near-infrared and visible light). After brief heating, the calibration target could be captured simultaneously and legibly by all cameras. Based on the multimodal dataset captured by our sensor system, PointNet, PointNet++, and RandLA-Net are used to verify the effectiveness of applying multimodal point cloud data to hand–object segmentation. These networks were trained on various data modes (XYZ, XYZ-T, XYZ-RGB, and XYZ-RGB-T). The experimental results show a significant improvement in the segmentation performance of XYZ-RGB-T (mean Intersection over Union: […] by RandLA-Net) compared with the other three modes ([…] by XYZ-RGB, […] by XYZ-T, […] by XYZ); notably, the Intersection over Union for the single class of hand achieves […].
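
The segmentation results are reported as Intersection over Union (IoU) and its mean over classes. A minimal Python sketch of the standard point-wise computation; the label convention (0 background, 1 hand, 2 object) and the example arrays are hypothetical.

```python
import math


def per_class_iou(y_true, y_pred, num_classes):
    """IoU per class = |pred ∩ true| / |pred ∪ true| over point labels (NaN if absent)."""
    ious = []
    for c in range(num_classes):
        inter = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        union = sum(1 for t, p in zip(y_true, y_pred) if t == c or p == c)
        ious.append(inter / union if union else math.nan)
    return ious


def mean_iou(ious):
    """Mean IoU over classes that appear in either labelling."""
    valid = [v for v in ious if not math.isnan(v)]
    return sum(valid) / len(valid) if valid else math.nan


# Hypothetical point labels: 0 = background, 1 = hand, 2 = held object.
y_true = [0, 0, 1, 1, 1, 2, 2, 2]
y_pred = [0, 1, 1, 1, 0, 2, 2, 0]
ious = per_class_iou(y_true, y_pred, num_classes=3)
print(ious, mean_iou(ious))
```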

Open Access Article

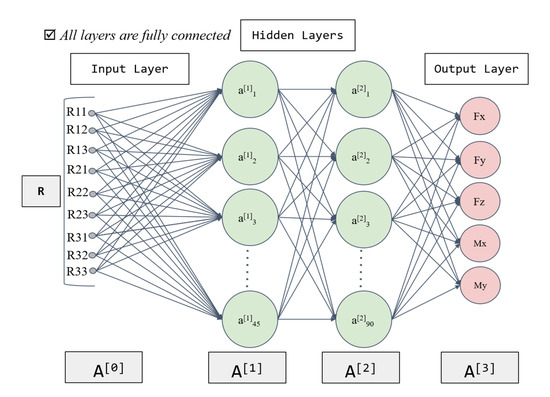

An Integrated Compensation Method for the Force Disturbance of a Six-Axis Force Sensor in Complex Manufacturing Scenarios

by

Lei Yao, Qingguang Gao, Dailin Zhang, Wanpeng Zhang and Youping Chen

Cited by 16 | Viewed by 4343

Abstract

As one of the key components for active compliance control and human–robot collaboration, a six-axis force sensor is often used for a robot to obtain contact forces. However, a significant problem is the distortion between the contact forces and the data conveyed by the six-axis force sensor because of its zero drift, system error, and the gravity of the robot end-effector. To eliminate these disturbances, an integrated compensation method is proposed, which uses a deep learning network and the least squares method to realize zero-point prediction and tool load identification, respectively. The proposed method can then automatically complete compensation for the six-axis force sensor in complex manufacturing scenarios. The experimental results demonstrate that the proposed method provides effective and robust compensation for force disturbance and achieves high measurement accuracy.
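
For the tool load identification step, which the abstract assigns to the least squares method, a simplified Python sketch follows. It assumes a hypothetical force-only model f_i = w·g_i + b, where g_i is the unit gravity direction in the sensor frame (assumed known from robot kinematics), w is the tool weight, and b is a constant offset. The paper's full model (torques, centre of mass) and its deep-learning zero-point prediction are not reproduced.

```python
def identify_tool_load(gravity_dirs, forces):
    """Least squares fit of f_i = w * g_i + b (hypothetical force-only model).

    gravity_dirs: unit gravity direction in the sensor frame for each pose
    forces: measured force vectors (N) in the same poses, without contact
    Returns the tool weight w (N) and the constant offset b (N, 3-vector).
    """
    n = len(forces)
    g_mean = [sum(g[k] for g in gravity_dirs) / n for k in range(3)]
    f_mean = [sum(f[k] for f in forces) / n for k in range(3)]
    # Closed form: w = sum (g_i - g_mean).(f_i - f_mean) / sum |g_i - g_mean|^2
    num = sum((g[k] - g_mean[k]) * (f[k] - f_mean[k])
              for g, f in zip(gravity_dirs, forces) for k in range(3))
    den = sum((g[k] - g_mean[k]) ** 2 for g in gravity_dirs for k in range(3))
    if den == 0:
        raise ValueError("need at least two different sensor orientations")
    w = num / den
    b = [f_mean[k] - w * g_mean[k] for k in range(3)]  # b = f_mean - w * g_mean
    return w, b


def compensate(force, gravity_dir, w, b):
    """Estimated contact force: subtract tool gravity and constant offset."""
    return [force[k] - w * gravity_dir[k] - b[k] for k in range(3)]


# Synthetic, noise-free calibration poses (hypothetical values).
poses = [(0, 0, -1), (0, 0, 1), (1, 0, 0), (0, -1, 0), (0.6, 0, -0.8)]
true_w, true_b = 14.7, (0.2, -0.1, 0.3)
readings = [[true_w * g[k] + true_b[k] for k in range(3)] for g in poses]
w, b = identify_tool_load(poses, readings)
print(w, b)
```

Eliminating b first (it equals the mean residual for any w) reduces the fit to a single ratio, so no matrix solver is needed for this simplified model.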

Open Access Article

A Two-Stage Data Association Approach for 3D Multi-Object Tracking

by

Minh-Quan Dao and Vincent Frémont

Cited by 18 | Viewed by 5651

Abstract

Multi-Object Tracking (MOT) is an integral part of any autonomous driving pipeline because it produces trajectories of other moving objects in the scene and predicts their future motion. Thanks to recent advances in 3D object detection enabled by deep learning, tracking-by-detection has become the dominant paradigm in 3D MOT. In this paradigm, an MOT system essentially consists of an object detector and a data association algorithm that establishes track-to-detection correspondence. While 3D object detection has been actively researched, association algorithms for 3D MOT have settled on bipartite matching formulated as a Linear Assignment Problem (LAP) and solved by the Hungarian algorithm. In this paper, we adapt a two-stage data association method that was successfully applied to image-based tracking to the 3D setting, thus providing an alternative for data association in 3D MOT. Our method outperforms the baseline using one-stage bipartite matching for data association, achieving 0.587 Average Multi-Object Tracking Accuracy (AMOTA) on the nuScenes validation set and 0.365 AMOTA (at level 2) on the Waymo test set.

Open Access Article

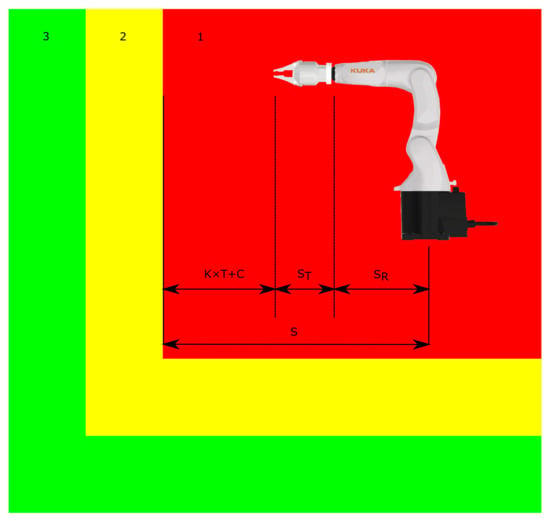

Vision and RTLS Safety Implementation in an Experimental Human–Robot Collaboration Scenario

by

Juraj Slovák, Markus Melicher, Matej Šimovec and Ján Vachálek

Cited by 10 | Viewed by 4826

Abstract

Human–robot collaboration is becoming ever more widespread in industry because of its adaptability. Conventional safety elements are used when converting a workplace into a collaborative one, although new technologies are becoming more widespread. This work proposes a safe robotic workplace that can adapt its operation and speed depending on the surrounding stimuli. The benefit lies in its use of promising technologies that combine safety and collaboration. Using a depth camera operating on the passive stereo principle, safety zones are created around the robotic workplace, and objects moving around the workplace are identified, including their distance from the robotic system. Passive stereo employs two colour streams that enable distance computation based on pixel shift. The colour stream is also used in the human identification process. Human identification is achieved using a Histogram of Oriented Gradients (HOG) detector pre-trained specifically for this purpose. The workplace also features autonomous trolleys for material supply. Unequivocal trolley identification is achieved using a real-time location system (RTLS) through tags placed on each trolley. The robotic workplace’s speed, and the halting of its work, depend on the positions of objects within the safety zones. A trolley with an exception does not affect the workplace speed when it enters a safety zone. This work simulates individual scenarios that may occur at a robotic workplace, with an emphasis on compliance with safety measures. The novelty lies in the integration of a real-time location system into a vision-based safety system: neither technology is new by itself, but interconnecting them to achieve exception handling that reduces downtime in the collaborative robotic system is innovative.
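
Two parts of this abstract lend themselves to a short illustration: depth from passive stereo, which follows the pinhole relation Z = f·B/d (focal length f in pixels, baseline B, disparity d), and zone-based speed scaling with an exception for tagged trolleys. The Python sketch below is hypothetical throughout: the zone radii, the 0.3 speed factor, and the `TrackedObject` type are invented for illustration and are not the authors' configuration.

```python
from dataclasses import dataclass


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Pinhole stereo relation Z = f * B / d, returned in metres."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


@dataclass
class TrackedObject:
    distance_m: float     # distance from the robot, e.g. from stereo depth
    exempt: bool = False  # True for a trolley whose RTLS tag grants an exception


def speed_factor(objects, stop_m=0.5, slow_m=1.5, slow_factor=0.3):
    """Fraction of nominal speed allowed, given the nearest non-exempt object."""
    distances = [o.distance_m for o in objects if not o.exempt]
    if not distances:
        return 1.0
    nearest = min(distances)
    if nearest < stop_m:
        return 0.0  # halt: an object is inside the stop zone
    if nearest < slow_m:
        return slow_factor  # reduced speed inside the warning zone
    return 1.0


person = TrackedObject(depth_from_disparity(32, focal_px=640, baseline_m=0.05))
trolley = TrackedObject(0.3, exempt=True)
print(person.distance_m, speed_factor([person, trolley]))
```

The exempt trolley sits inside the assumed stop zone yet does not halt the robot; only the person at about 1 m triggers the reduced speed.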

Open Access Article

A Deep Learning Framework for Recognizing Both Static and Dynamic Gestures

by

Osama Mazhar, Sofiane Ramdani and Andrea Cherubini

Cited by 15 | Viewed by 6152

Abstract

Intuitive user interfaces are indispensable for interacting with human-centric smart environments. In this paper, we propose a unified framework that recognizes both static and dynamic gestures using simple RGB vision (without depth sensing). This feature makes it suitable for inexpensive human–robot interaction in social or industrial settings. We employ a pose-driven spatial attention strategy, which guides our proposed Static and Dynamic gestures Network (StaDNet). From the image of the human upper body, we estimate his/her depth, along with the region of interest around his/her hands. The Convolutional Neural Network (CNN) in StaDNet is fine-tuned on a background-substituted hand gestures dataset. It is used to detect 10 static gestures for each hand as well as to obtain the hand image embeddings. These are subsequently fused with the augmented pose vector and then passed to the stacked Long Short-Term Memory blocks. Thus, human-centred frame-wise information from the augmented pose vector and from the left/right hand image embeddings is aggregated in time to predict the dynamic gestures of the performing person. In a number of experiments, we show that the proposed approach surpasses the state-of-the-art results on the large-scale ChaLearn 2016 dataset. Moreover, we transfer the knowledge learned through the proposed methodology to the Praxis gestures dataset, and the obtained results also outperform the state of the art on this dataset.

Open Access Perspective

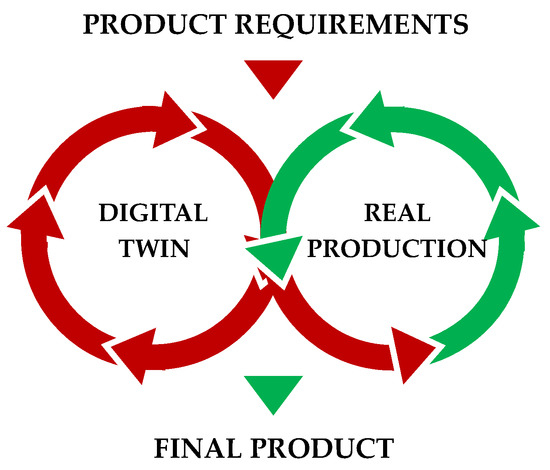

Design and Implementation of Universal Cyber-Physical Model for Testing Logistic Control Algorithms of Production Line’s Digital Twin by Using Color Sensor

by

Ján Vachálek, Dana Šišmišová, Pavol Vašek, Ivan Fiťka, Juraj Slovák and Matej Šimovec

Cited by 24 | Viewed by 3916

Abstract

This paper deals with the design and implementation of a universal cyber-physical model capable of simulating any production process in order to optimize its logistics systems. The basic idea is the direct possibility of testing and debugging advanced logistics algorithms using a digital twin outside the production line. Since the digital twin requires a physical connection to a real line for its operation, this connection is substituted by a modular cyber-physical system (CPS), which replicates the same physical inputs and outputs as a real production line. Especially in fully functional production facilities, there is a trend towards optimizing logistics systems in order to increase efficiency and reduce idle time. Virtualization techniques in the form of a digital twin are commonly used for this purpose. Whether proposed optimization changes can be physically tested before they are fully put into operation is a pragmatic question that still resonates on the production side. Such concerns are justified because changes to production logistics proposed on the basis of digital twin simulations tend to be initially costly and affect the existing functional production infrastructure. Therefore, we created a universal CPS based on requirements from our cooperating manufacturing companies. The model fully physically reproduces the real conditions of the simulated production and verifies in advance the quality of the optimization changes proposed virtually by the digital twin. Optimization costs are also significantly reduced, as the optimization impact does not need to be verified directly in production, only in the physical model. To demonstrate the versatility of deployment, we chose a configuration simulating a robotic assembly workplace and its logistics.

Open Access Article

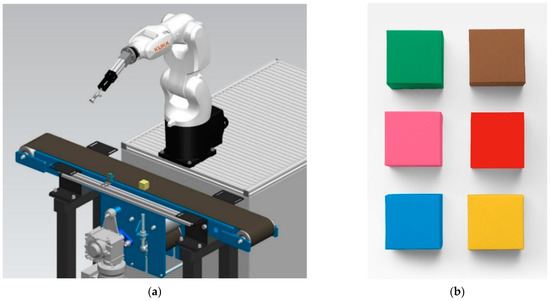

Intelligent Dynamic Identification Technique of Industrial Products in a Robotic Workplace

by

Ján Vachálek, Dana Šišmišová, Pavol Vašek, Jan Rybář, Juraj Slovák and Matej Šimovec

Cited by 2 | Viewed by 3221

Abstract

The article deals with aspects of identifying industrial products in motion based on their color. An automated robotic workplace with a conveyor belt, a robot, and an industrial color sensor is created for this purpose. Measured data are processed in a database and then statistically evaluated in the form of type A and type B standard uncertainties in order to obtain combined standard uncertainties. Based on the acquired data, control charts of the RGB color components are created for the identified products. The influence of product speed on the identification measurement process and on process stability is monitored. In case of identification uncertainty, i.e., when measured values fall outside the limits of the control charts, the K-nearest neighbors machine learning algorithm is used. Based on the Euclidean distances to the value being classified, this algorithm estimates its most likely class. The result is a comprehensive system for identifying products moving on a conveyor belt, whose reliability for industrial use is demonstrated through data collection and statistical analysis combined with machine learning.
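
The statistical and classification steps can be sketched in Python under stated assumptions: type A uncertainty as the experimental standard deviation of the mean, a type B uncertainty from an assumed rectangular distribution of half-width `a` (for example a sensor resolution limit), their root-sum-square combination for uncorrelated components, and a plain k-nearest-neighbours majority vote on RGB values. The value of `k`, the readings, and the training colours are hypothetical.

```python
import math
import statistics
from collections import Counter


def type_a_uncertainty(samples):
    """Type A: experimental standard deviation of the mean, s / sqrt(n)."""
    return statistics.stdev(samples) / math.sqrt(len(samples))


def type_b_uncertainty(half_width):
    """Type B under an assumed rectangular distribution of half-width a: a / sqrt(3)."""
    return half_width / math.sqrt(3)


def combined_uncertainty(u_a, u_b):
    """Combined standard uncertainty for uncorrelated components."""
    return math.sqrt(u_a ** 2 + u_b ** 2)


def knn_classify(sample, training, k=3):
    """Majority vote among the k training colours nearest in Euclidean RGB distance."""
    nearest = sorted(training, key=lambda item: math.dist(sample, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


red_readings = [201.0, 198.0, 203.0, 199.0, 200.0]  # hypothetical R-channel samples
u_c = combined_uncertainty(type_a_uncertainty(red_readings), type_b_uncertainty(0.5))
training = [((200, 30, 30), "red"), ((210, 40, 35), "red"), ((190, 25, 20), "red"),
            ((30, 200, 40), "green"), ((40, 210, 50), "green"), ((25, 190, 30), "green")]
print(round(u_c, 3), knn_classify((205, 35, 30), training))
```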

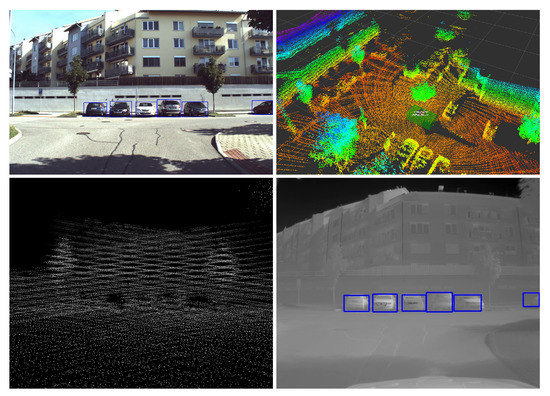

Open Access Article

Fully Automated DCNN-Based Thermal Images Annotation Using Neural Network Pretrained on RGB Data

by

Adam Ligocki, Ales Jelinek, Ludek Zalud and Esa Rahtu

Cited by 19 | Viewed by 6723

Abstract

One of the biggest challenges of training deep neural networks is the need for massive data annotation. To train a neural network for object detection, millions of annotated training images are required. However, there are currently no large-scale thermal image datasets that could be used to train state-of-the-art neural networks, while voluminous RGB image datasets are available. This paper presents a method for creating hundreds of thousands of annotated thermal images using an RGB pre-trained object detector. A dataset created in this way can be used to train object detectors with improved performance. The main contribution of this work is a novel method for fully automatic thermal image labeling. The proposed system uses an RGB camera, a thermal camera, a 3D LiDAR, and a pre-trained neural network that detects objects in the RGB domain. With this setup, a fully automated process can annotate the thermal images and create an automatically annotated thermal training dataset. As a result, we created a dataset containing hundreds of thousands of annotated objects. This approach makes it possible to train deep learning models with performance similar to that of common human-annotation-based methods. This paper also proposes several improvements for fine-tuning the results with minimal human intervention. Finally, the evaluation of the proposed solution shows that the method gives significantly better results than training the neural network on standard small-scale hand-annotated thermal image datasets.
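
One plausible way to carry an RGB detection over to the thermal image through the LiDAR has three steps:

1. Project the LiDAR points into the RGB camera.
2. Keep the points that fall inside the detected box.
3. Re-project the same 3D points into the thermal camera and take their extent.

This is our illustrative assumption, not the authors' published pipeline. It presumes calibrated pinhole cameras with known intrinsics `K` and LiDAR-to-camera extrinsics `R`, `t`. The function names and calibration values are hypothetical.

```python
import numpy as np


def project(points_lidar, K, R, t):
    """Pinhole projection of N x 3 LiDAR points into a camera.
    R (3x3) and t (3,) map LiDAR coordinates to camera coordinates.
    Returns N x 2 pixel coordinates and a mask of points in front of the camera."""
    cam = points_lidar @ R.T + t            # LiDAR frame -> camera frame
    in_front = cam[:, 2] > 0
    uvw = cam @ K.T                         # homogeneous pixel coordinates
    with np.errstate(divide="ignore", invalid="ignore"):
        uv = uvw[:, :2] / uvw[:, 2:3]
    return uv, in_front


def transfer_box(box_rgb, points_lidar, rgb_cam, thermal_cam):
    """box_rgb = (u_min, v_min, u_max, v_max) from the RGB detector.
    Each *_cam is a (K, R, t) tuple. Returns the thermal-image box spanned by
    the LiDAR points that support the detection, or None if there are none."""
    uv_rgb, front_rgb = project(points_lidar, *rgb_cam)
    u0, v0, u1, v1 = box_rgb
    inside = (front_rgb
              & (uv_rgb[:, 0] >= u0) & (uv_rgb[:, 0] <= u1)
              & (uv_rgb[:, 1] >= v0) & (uv_rgb[:, 1] <= v1))
    uv_th, front_th = project(points_lidar[inside], *thermal_cam)
    uv_th = uv_th[front_th]
    if len(uv_th) == 0:
        return None
    (tu0, tv0), (tu1, tv1) = uv_th.min(axis=0), uv_th.max(axis=0)
    return float(tu0), float(tv0), float(tu1), float(tv1)


# Hypothetical calibration: identical intrinsics, thermal camera offset by 10 cm.
K = np.array([[100.0, 0, 50], [0, 100.0, 50], [0, 0, 1]])
rgb_cam = (K, np.eye(3), np.zeros(3))
thermal_cam = (K, np.eye(3), np.array([0.1, 0.0, 0.0]))
points = np.array([[-0.5, -0.5, 5.0], [0.5, -0.5, 5.0],
                   [-0.5, 0.5, 5.0], [0.5, 0.5, 5.0], [3.0, 3.0, 5.0]])
print(transfer_box((35, 35, 65, 65), points, rgb_cam, thermal_cam))  # roughly (42, 40, 62, 60)
```

The box frustum also contains background points behind the object. A practical version would therefore need some depth filtering or clustering before taking the extent. That step is omitted here.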

Open Access Article

Shape-Based Alignment of the Scanned Objects Concerning Their Asymmetric Aspects

by

Andrej Lucny, Viliam Dillinger, Gabriela Kacurova and Marek Racev

Cited by 2 | Viewed by 3165

Abstract

We introduce an integrated method for processing depth maps measured by a laser profile sensor. It serves for the recognition and alignment of an object given by a single example. First, we look for potential object contours, mainly using the Retinex filter. Then, we select the actual object boundary via shape comparison based on Triangle Area Representation (TAR). We overcome the limitations of the TAR method by extending its shape descriptor. This is helpful mainly for objects whose outlines are symmetric but which have other asymmetric aspects, such as squares with asymmetric holes. Finally, we use the point-to-point pairing provided by the extended TAR method to calculate the 3D rigid affine transform that aligns the scanned object to the position of the given example. For the transform calculation, we design an algorithm that exceeds the accuracy of the Kabsch point-to-point algorithm and adapts it for precise contour-to-contour alignment. In this way, we have implemented a pipeline with features convenient for industrial use, namely, production inspection.
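
Two building blocks named in this abstract can be sketched in a few lines of NumPy:

- the basic TAR signature of a closed contour, which is the signed area of the triangle formed by each point and its neighbours `ts` steps away;
- the standard Kabsch algorithm, which the authors use as their point-to-point baseline.

This is not the authors' extended TAR descriptor or their improved contour-to-contour alignment. The function names and test shapes are our own.

```python
import numpy as np


def tar_signature(contour, ts=1):
    """Basic Triangle Area Representation of a closed 2D contour (N x 2):
    signed area of the triangle (p[i-ts], p[i], p[i+ts]) for each i.
    Positive values mark convex points on a counter-clockwise contour."""
    prev = np.roll(contour, ts, axis=0)   # prev[i] = p[i - ts]
    nxt = np.roll(contour, -ts, axis=0)   # nxt[i] = p[i + ts]
    a, b = contour - prev, nxt - prev
    return 0.5 * (a[:, 0] * b[:, 1] - a[:, 1] * b[:, 0])


def kabsch(P, Q):
    """Least-squares rotation R and translation t with R @ p + t ~= q for
    paired points P, Q (N x d), via SVD of the cross-covariance matrix."""
    cp, cq = P.mean(axis=0), Q.mean(axis=0)
    H = (P - cp).T @ (Q - cq)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    D = np.diag([1.0] * (P.shape[1] - 1) + [d])
    R = Vt.T @ D @ U.T
    return R, cq - R @ cp


square = np.array([[0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0]])
print(tar_signature(square))  # [0.5 0.5 0.5 0.5]

angle = np.radians(30)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0],
                   [np.sin(angle), np.cos(angle), 0],
                   [0, 0, 1]])
P = np.array([[0, 0, 0], [1, 0, 0], [0, 2, 0], [0, 0, 3], [1, 1, 1]], dtype=float)
Q = P @ R_true.T + np.array([0.5, -1.0, 2.0])
R, t = kabsch(P, Q)
print(np.allclose(R, R_true), np.allclose(t, [0.5, -1.0, 2.0]))  # True True
```

A plain TAR signature of a square is identical at every vertex, which shows why shape alone cannot fix the orientation of symmetric outlines. The abstract identifies exactly this limitation as the one the extended descriptor addresses.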

Open Access Article

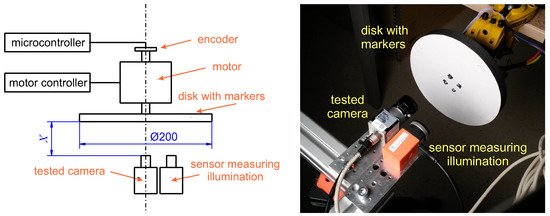

Experimental Comparison between Event and Global Shutter Cameras

by

Ondřej Holešovský, Radoslav Škoviera, Václav Hlaváč and Roman Vítek

Cited by 25 | Viewed by 7145

Abstract

We compare event-cameras with fast (global shutter) frame-cameras experimentally, asking: “What is the application domain in which an event-camera surpasses a fast frame-camera?” Surprisingly, finding the answer has been difficult. Our methodology was to test event- and frame-cameras on generic computer vision tasks where event-camera advantages should manifest. We used two methods: (1) a controlled, cheap, and easily reproducible experiment (observing a marker on a rotating disk at varying speeds); (2) one selected challenging practical ballistic experiment (observing a flying bullet, with ground truth provided by an expensive ultra-high-speed frame-camera). The experimental results include sampling/detection rates and position estimation errors as functions of illuminance and motion speed, and the minimum pixel latency of two commercial state-of-the-art event-cameras (ATIS, DVS240). Event-cameras respond more slowly to large, sudden positive contrast changes than to negative ones. They outperformed a frame-camera in bandwidth efficiency in all our experiments. Both camera types provide comparable position estimation accuracy. The better event-camera was limited by pixel latency when tracking small objects, resulting in motion blur effects. Sensor bandwidth limited the event-camera in object recognition. However, future generations of event-cameras might alleviate bandwidth limitations.
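
The bandwidth comparison rests on how an event-camera pixel reports data. Instead of sampling at a fixed frame rate, it emits an event only when its log intensity changes by more than a contrast threshold. The sketch below is an idealised pixel model with no latency, no noise, no refractory period, and one symmetric threshold. It therefore does not reproduce the asymmetric response the authors measured. The threshold value and function name are assumptions.

```python
import math


def pixel_events(times, intensities, threshold=0.2):
    """Idealised event-camera pixel. Emits (t, +1) or (t, -1) each time the
    log intensity moves `threshold` away from the level at the last event;
    a large jump produces several events with the same timestamp."""
    ref = math.log(intensities[0])
    events = []
    for t, value in zip(times[1:], intensities[1:]):
        level = math.log(value)
        while abs(level - ref) >= threshold:
            polarity = 1 if level > ref else -1
            ref += polarity * threshold
            events.append((t, polarity))
    return events


# A static pixel sampled 1000 times: a frame camera still reads 1000 values,
# while the idealised event pixel stays silent.
times = [i / 1000 for i in range(1000)]
print(len(pixel_events(times, [50.0] * 1000)))  # 0

# A brightness step up and back down produces bursts of ON and OFF events.
print([p for _, p in pixel_events([0, 1, 2], [1.0, math.exp(0.5), 1.0])])  # [1, 1, -1, -1]
```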

Open Access Article

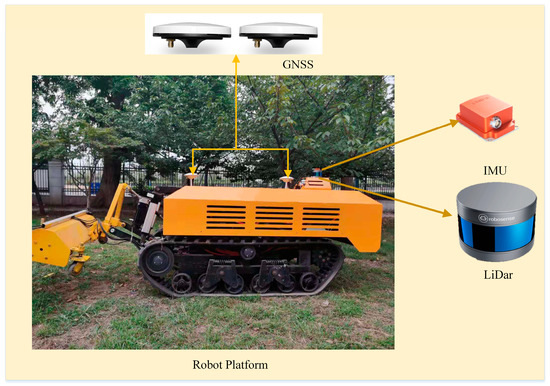

Mobile LiDAR Scanning System Combined with Canopy Morphology Extracting Methods for Tree Crown Parameters Evaluation in Orchards

by

Kai Wang, Jun Zhou, Wenhai Zhang and Baohua Zhang

Cited by 41 | Viewed by 5344

Abstract

To meet the demand for canopy morphological parameter measurements in orchards, a mobile scanning system based on a 3D Simultaneous Localization and Mapping (SLAM) algorithm is designed. The system uses a lightweight LiDAR-Inertial Measurement Unit (LiDAR-IMU) state estimator and a rotation-constrained optimization algorithm to reconstruct a point cloud map of the orchard. Then, Statistical Outlier Removal (SOR) filtering and Euclidean clustering algorithms are used to segment the orchard point cloud after the ground points have been separated, and the k-nearest neighbor (KNN) search algorithm is used to restore the filtered point cloud. Finally, the height of the fruit trees and the volume of the canopy are obtained by a point cloud statistical method and the 3D alpha-shape algorithm. To verify the algorithm, tracked robots equipped with a LiDAR and an IMU are used in a standardized orchard. Experiments show that the proposed system can reconstruct the orchard point cloud environment with high accuracy and can obtain the point cloud information of all fruit trees in the orchard. The accuracy of point cloud-based segmentation of fruit trees in the orchard is 95.4%. The R² and Root Mean Square Error (RMSE) values of crown height are 0.93682 and 0.04337, respectively, and the corresponding values of canopy volume are 0.8406 and 1.5738, respectively. In summary, this system achieves a good evaluation of orchard crown information and has important application value in the intelligent measurement of fruit trees.
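
The segmentation steps named here can be sketched on a small point cloud in three stages:

1. Statistical Outlier Removal: drop points whose mean distance to their k nearest neighbours is unusually large.
2. Euclidean cluster extraction: grow clusters from points that lie closer than a distance tolerance.
3. Tree height: take the highest point of each cluster above the ground.

This brute-force NumPy version is only a sketch for small clouds. It assumes the ground has already been removed and lies at z = 0, and its parameter values and test points are hypothetical. A practical implementation would use a k-d tree for the neighbour searches.

```python
from collections import deque

import numpy as np


def sor_filter(points, k=8, std_ratio=1.0):
    """Statistical Outlier Removal: drop points whose mean distance to their
    k nearest neighbours exceeds mean + std_ratio * std over the whole cloud."""
    d = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    d.sort(axis=1)
    mean_knn = d[:, 1:k + 1].mean(axis=1)   # column 0 is the point itself
    limit = mean_knn.mean() + std_ratio * mean_knn.std()
    return points[mean_knn <= limit]


def euclidean_clusters(points, radius=0.5, min_size=3):
    """Euclidean cluster extraction: grow each cluster from a seed by adding
    every point within `radius` of a point already in the cluster."""
    d = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue, members = deque([seed]), [seed]
        while queue:
            i = queue.popleft()
            for j in np.flatnonzero(d[i] <= radius):
                j = int(j)
                if j in unvisited:
                    unvisited.remove(j)
                    queue.append(j)
                    members.append(j)
        if len(members) >= min_size:
            clusters.append(points[members])
    return clusters


def tree_height(cluster, ground_z=0.0):
    """Height of one segmented tree above a flat ground plane at ground_z."""
    return float(cluster[:, 2].max() - ground_z)


tree_a = [(0, 0, 0.5), (0.2, 0, 1.0), (0, 0.2, 1.5), (0.1, 0.1, 2.0)]
tree_b = [(5, 0, 1.0), (5.2, 0, 2.0), (5, 0.2, 2.5), (5.1, 0.1, 3.0)]
cloud = np.array(tree_a + tree_b + [(2.5, 10, 1.0)])  # last point is an outlier
filtered = sor_filter(cloud, k=3, std_ratio=1.0)
clusters = euclidean_clusters(filtered, radius=1.6, min_size=3)
print(len(filtered), sorted(tree_height(c) for c in clusters))  # 8 [2.0, 3.0]
```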

Open Access Article

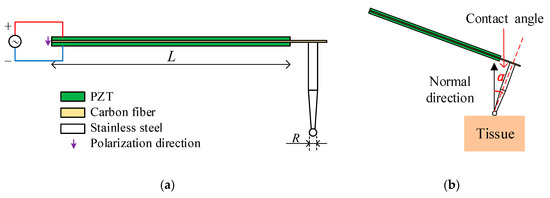

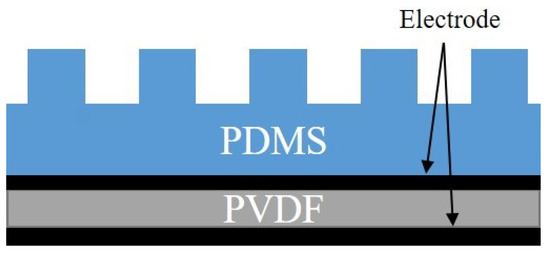

A Piezoelectric Tactile Sensor for Tissue Stiffness Detection with Arbitrary Contact Angle

by

Yingxuan Zhang, Feng Ju, Xiaoyong Wei, Dan Wang and Yaoyao Wang

Cited by 32 | Viewed by 5973

Abstract

In this paper, a piezoelectric tactile sensor for detecting tissue stiffness in robot-assisted minimally invasive surgery (RMIS) is proposed. It can detect stiffness not only when the probe is normal to the tissue surface, but also when there is a contact angle between the probe and the normal direction. It solves the problem that existing sensors can only measure in the normal direction to ensure accuracy, which is restrictive when the degrees of freedom (DOF) of surgical instruments are limited. The proposed sensor can distinguish samples with different stiffness and effectively distinguish lumps from normal tissue when the contact angle varies within [0°, 45°]. This is achieved by establishing a new detection model and optimizing the sensor. The influence of the contact angle on stiffness detection is derived, and the sensor parameters are designed and optimized accordingly. The detection performance of the sensor is confirmed by simulation and experiment using five samples with different stiffness (including lump and normal samples with close stiffness). In a blind recognition test in simulation, the recognition rate is 100% when the contact angle is randomly selected within 30° and 94.1% within 45°, which is 38.7% higher than that of the unoptimized sensor. In a blind classification test with automatic k-means clustering in experiment, the correct rate is 92% when the contact angle is randomly selected within 45°. These results show that the proposed sensor can recognize samples with different stiffness with high accuracy, which gives it broad application prospects in the medical field.
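
The automatic k-means clustering used in the blind classification test can be illustrated with a plain Lloyd's iteration on one scalar feature per measurement. The abstract does not state what the sensor actually feeds to the clustering. The readings below are therefore hypothetical stand-ins for stiffness-related values, and the deterministic initialisation is our own choice.

```python
def kmeans_1d(values, k, iters=100):
    """Lloyd's k-means on scalar features. Centres start at evenly spaced
    order statistics, so the result is deterministic; labels are ordered
    from the lowest to the highest initial centre."""
    if not 2 <= k <= len(values):
        raise ValueError("need 2 <= k <= number of values")
    data = sorted(values)
    centers = [data[round(i * (len(data) - 1) / (k - 1))] for i in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centers[c]))
            groups[nearest].append(v)
        # Keep the old centre if a cluster ends up empty.
        new = [sum(g) / len(g) if g else centers[c] for c, g in enumerate(groups)]
        if new == centers:
            break
        centers = new
    labels = [min(range(k), key=lambda c: abs(v - centers[c])) for v in values]
    return centers, labels


readings = [1.0, 1.1, 0.9, 5.0, 5.2, 4.8, 9.0, 9.1, 8.9]
centers, labels = kmeans_1d(readings, k=3)
print(labels)  # [0, 0, 0, 1, 1, 1, 2, 2, 2]
```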

Open Access Article

A Non-Array Type Cut to Shape Soft Slip Detection Sensor Applicable to Arbitrary Surface

by

Sung Joon Kim, Seung Ho Lee, Hyungpil Moon, Hyouk Ryeol Choi and Ja Choon Koo

Cited by 8 | Viewed by 3740

Abstract

The presence of a tactile sensor is essential to hold an object and manipulate it without damage. Tactile information helps determine whether an object is stably held. If a tactile sensor is installed wherever the robot and the object touch, the robot could interact with more objects. In this paper, a skin-type slip sensor that can be attached to robot surfaces with various curvatures is presented. A simple mechanical sensor structure enables the sensor to be cut and fitted according to the curvature. The sensor uses a non-array structure and can operate even if a part of it is cut off. Slip is distinguished using a simple vibration signal received from the sensor: the signal is transformed into the time-frequency domain, and slippage is determined using an artificial neural network. The accuracy of slip detection was compared across four artificial neural network models. In addition, the strengths and weaknesses of each neural network model were analyzed according to the data used for training. As a result, the developed sensor detected slip with an average accuracy of 95.73% at various curvatures and contact points.
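
A short-time Fourier transform is one common way to move a vibration signal into the time-frequency domain. The NumPy sketch below computes a magnitude spectrogram that could serve as network input. The Hann window, window length, hop size, and sampling rate are assumptions, because the abstract does not give the transform parameters. The neural networks themselves are not reproduced.

```python
import numpy as np


def spectrogram(signal, fs, window=64, hop=32):
    """Magnitude STFT with a Hann window.
    Returns (frequencies in Hz, frame start times in s, magnitudes[freq, frame])."""
    signal = np.asarray(signal, dtype=float)
    if len(signal) < window:
        raise ValueError("signal shorter than one window")
    starts = np.arange(0, len(signal) - window + 1, hop)
    taper = np.hanning(window)
    frames = np.stack([signal[s:s + window] * taper for s in starts], axis=1)
    mags = np.abs(np.fft.rfft(frames, axis=0))
    freqs = np.fft.rfftfreq(window, d=1.0 / fs)
    return freqs, starts / fs, mags


# Hypothetical 1 s recording sampled at 1 kHz containing a 125 Hz vibration.
fs = 1000
t = np.arange(fs) / fs
freqs, times, mags = spectrogram(np.sin(2 * np.pi * 125 * t), fs)
print(mags.shape)                  # (33, 30)
print(freqs[mags[:, 0].argmax()])  # 125.0
```

Each column of `mags` describes one short time slice. The whole array can therefore be treated as a small image or as a sequence of feature vectors, depending on the network architecture.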
