An Aggregated Data Integration Approach to the Web and Cloud Platforms through a Modular REST-Based OPC UA Middleware

Abstract

1. Introduction

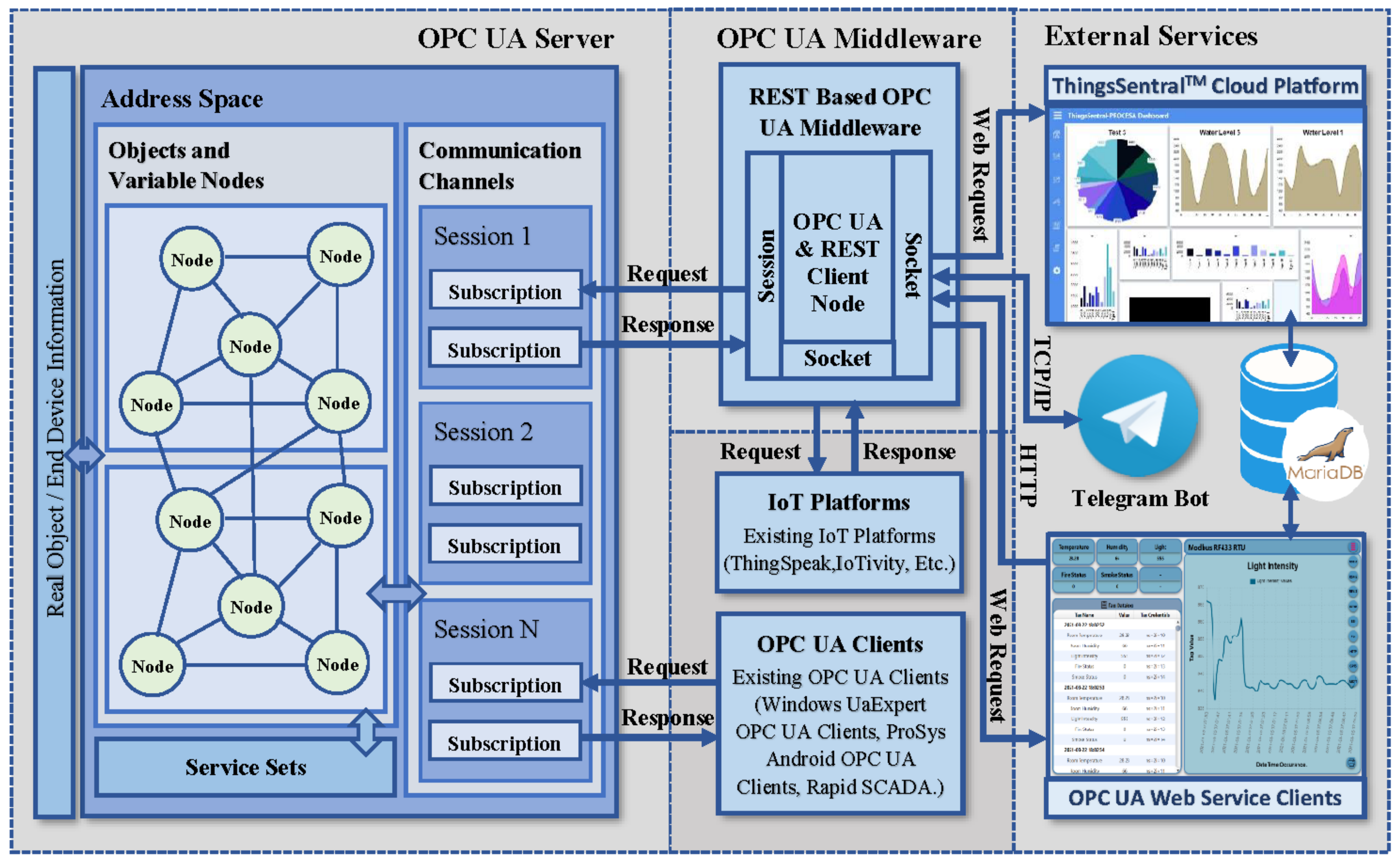

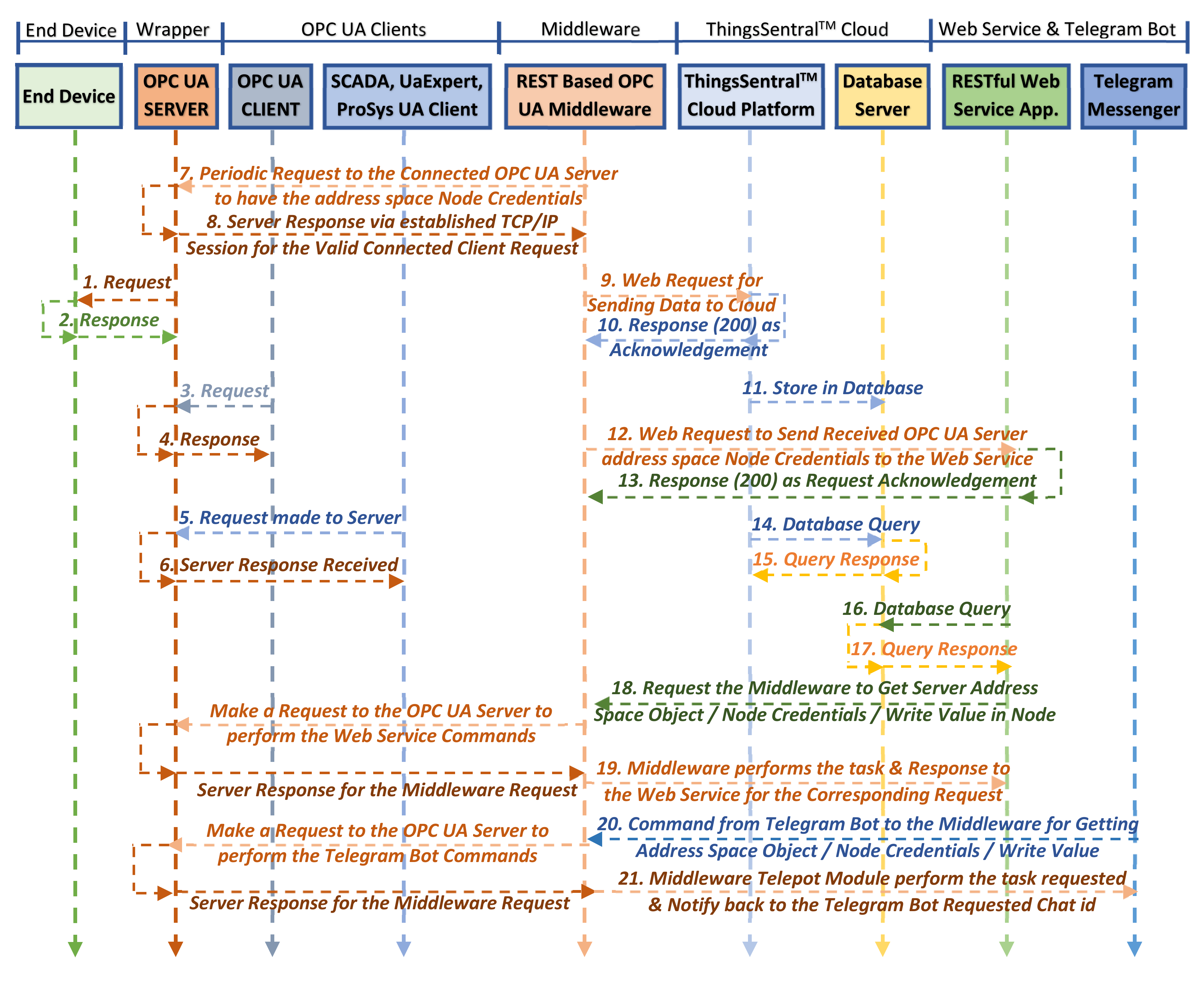

- We implemented an open-source, vendor-neutral, cross-platform OPC UA server to address communication interoperability and enable seamless exchange of aggregated data across heterogeneous distributed systems at different automation levels;

- We proposed an open-source REST-based OPC UA middleware that exposes OPC UA network capabilities, addresses semantic and syntactic interoperability, and integrates aggregated data with other domains such as the web, Telegram, and cloud services;

- We developed a standalone web service client that receives OPC UA resources from the middleware, enables remote supervision of the OPC UA address space, and provides end-user application support for interoperable M2M communication;

- We implemented a cloud platform named ThingsSentralTM (http://thingssentral.io:443/) to collect and store the middleware data in a dedicated database for further visual data analysis and aggregated bulk data management. The proposed framework also integrates Telegram Messenger as a remote commander unit that performs remote supervision and notifies users of event occurrences.

2. Materials and Methods

2.1. Proposed Framework

2.2. OPC UA Server Implementation

| Algorithm 1. OPC UA Server Development Algorithm. |
|---|
| Input: Request messages from the OPC UA clients/middleware module. |
| Output: OPC UA server endpoint URI and node credentials in UA Binary format. |
| 1: function StartOPCUAServer() |
| 2: Construct the server endpoint URI as opc.tcp://IP:port, where IP is the platform's IP address. |
| 3: Create the address space and define nine objects with their associated variable nodes. |
| 4: Start the OPC UA server with a discoverable endpoint URI. |
| 5: while (server.is_listening) do |
| 6: Call Rand() to generate simulated random values periodically. |
| 7: Update the variable nodes with node.set_value(variables). |
| 8: Wait 1000 ms before the next iteration. |
| 9: end while |
| 10: function Listening(Request) |
| 11: Listen continuously for OPC UA client requests. |
| 12: while (Request.available) do |
| 13: Parse the NodeID, value, and credentials from the request. |
| 14: root = server.get_root_node() |
| 15: Objects = len(root.get_children()) |
| 16: if (Request == “Read”) then |
| 17: for (i = 0; i < Objects; i++) do |
| 18: if (NodeID in root.get_children()[i]) then |
| 19: Name = root.get_children()[i].get_browse_name() |
| 20: Value = root.get_children()[i].get_value() |
| 21: Concatenate = Name + ‘=’ + Value |
| 22: TagCredentials += Concatenate + ‘,’ |
| 23: end if |
| 24: end for |
| 25: Send TagCredentials to the client |
| 26: elif (Request == “Write”) then |
| 27: for (j = 0; j < Objects; j++) do |
| 28: if (NodeID in root.get_children()[j]) then |
| 29: server.get_node(NodeID).set_value(Value) |
| 30: end if |
| 31: end for |
| 32: end if |
| 33: end while |
| Begin |
| 34: Start a thread calling StartOPCUAServer() to run the OPC UA server. |
| 35: Start another thread calling Listening() to handle OPC UA client requests. |
| Finish |
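The logic of Algorithm 1 can be sketched in plain Python as follows. This is a minimal, dependency-free illustration under stated assumptions, not the authors' server: a dictionary keyed by hypothetical string node IDs (`ns=2;s=Object.Variable`) stands in for the address space that an OPC UA stack such as FreeOpcUa python-opcua would provide, and `AddressSpace`, `handle`, and `run_updates` are names introduced here. It mirrors the two threads: a 1000 ms loop that writes random values (steps 5-9) and a handler that answers Read with `Name=Value,` pairs or applies Write (steps 13-31).

```python
import random
import threading
import time


class AddressSpace:
    """In-memory stand-in for an OPC UA address space (hypothetical, for illustration)."""

    def __init__(self, objects):
        # objects maps an object name to its variable names, e.g. {"Boiler": ["Temperature"]}
        self._lock = threading.Lock()
        # node_id -> [browse_name, value]; the string node-ID format is an assumption
        self.nodes = {
            f"ns=2;s={obj}.{var}": [var, 0.0]
            for obj, variables in objects.items()
            for var in variables
        }

    def update_random(self):
        """Steps 6-7: overwrite every variable with a simulated random reading."""
        with self._lock:
            for entry in self.nodes.values():
                entry[1] = round(random.uniform(0, 100), 2)

    def handle(self, request, node_ids, value=None):
        """Steps 13-31: answer 'Read' with 'Name=Value,' pairs or apply 'Write'."""
        with self._lock:
            if request == "Read":
                return "".join(
                    f"{self.nodes[n][0]}={self.nodes[n][1]},"
                    for n in node_ids if n in self.nodes
                )
            if request == "Write":
                for n in node_ids:
                    if n in self.nodes:
                        self.nodes[n][1] = value
                return "OK"
        raise ValueError(f"unsupported request: {request!r}")


def run_updates(space, stop_event, period_s=1.0):
    """Steps 5-9: refresh values every period_s seconds until stop_event is set."""
    while not stop_event.is_set():
        space.update_random()
        stop_event.wait(period_s)


if __name__ == "__main__":
    space = AddressSpace({"Boiler": ["Temperature", "Pressure"]})
    stop = threading.Event()
    updater = threading.Thread(target=run_updates, args=(space, stop), daemon=True)
    updater.start()
    time.sleep(1.5)  # let at least one 1000 ms update happen
    print(space.handle("Read", ["ns=2;s=Boiler.Temperature", "ns=2;s=Boiler.Pressure"]))
    stop.set()
    updater.join()
```

A real deployment would let the OPC UA stack handle sessions, security, and UA Binary encoding; the lock here only reflects that the update thread and the listening thread touch the same node values.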

2.3. REST-Based OPC UA Middleware Implementation

| Algorithm 2. REST-based OPC UA Middleware Module Development Algorithm. |
|---|
| Input: OPC UA Binary data, user query requests, and Telegram commands. |
| Output: Request URLs to the web and cloud platforms; notifications for Telegram commands. |
| 01: function ConnectServer() |
| 02: repeat: try client = Client.Connect(ServerURL) |
| 03: if (client) then break |
| 04: except pass (retry) |
| 05: function MakeReq(Platform, Address, Path as arguments) |
| 06: Get the children of the root node as Query = client.get_root_node().get_children() |
| 07: for (i = 0; i < len(Query); i++) do |
| 08: if (client.get_objects_node().get_children()[i] in Query) then |
| 09: Name = Query[i].get_browse_name() |
| 10: Value = Query[i].get_value(); Concatenate1 = Name + ‘=’ + Value |
| 11: ClientBuilder += “$” + ServerObject(i) + “*” + Concatenate1 |
| 12: CloudBuilder += Concatenate2; PCloudBuilder += Concatenate3 (platform-specific encodings of Name and Value) |
| 13: end if |
| 14: if (Platform == “web”) then |
| 15: ReqURL = Address + “/” + Path + “?” + ClientBuilder |
| 16: elif (Platform == “ThingsSentral”) then |
| 17: ReqURL = Address + “/” + Path + “?” + CloudBuilder |
| 18: elif (Platform == “PCloud”) then |
| 19: ReqURL = Address + “/” + Path + “?” + PCloudBuilder |
| 20: end for |
| 21: try Req = HTTP.GET(ReqURL) |
| 22: if (Req.Status == 200) then break |
| 23: except pass |
| 24: function StartTelegramWebHook() |
| 25: while (a message is available on the Telegram server) do |
| 26: Parse the message and store it in the chat_id, UserName, and command variables. |
| 27: end while |
| 28: if (command is valid) then |
| 29: Call the function associated with the requested command. |
| 30: Bot.sendMessage(chat_id, Response) |
| 31: end if |
| Begin |
| 32: Start a thread calling ConnectServer() to connect to the OPC UA server. |
| 33: Call MakeReq(web, localhost, OPC) to send OPC UA credentials to the web client. |
| 34: Call MakeReq(ThingsSentral, thingssentral.io, postlong) to send credentials to ThingsSentralTM. |
| 35: Call MakeReq(PCloud, Address, update) to send credentials to the cloud. |
| 36: Start a thread calling StartTelegramWebHook() to run the Telegram bot. |
| Finish |
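The request-building and retry steps of Algorithm 2 (steps 05-23) might look as follows. This sketch assumes the payload layouts that Algorithm 3 parses: `$Object*Name=Value|Name=Value` for the web client path and `SensorID|Value@SensorID|Value` for the ThingsSentralTM `postlong` path. The PCloud format is not specified, so it is omitted. All function names, the example address, and the retry policy are hypothetical, and standard-library `urllib` is used so the sketch has no third-party dependencies.

```python
import time
import urllib.request
from urllib.parse import quote


def build_web_payload(objects):
    """'$Object*Name=Value|Name=Value' for each object (layout parsed by Algorithm 3)."""
    parts = []
    for obj, tags in objects.items():
        pairs = "|".join(f"{name}={value}" for name, value in tags.items())
        parts.append(f"${obj}*{pairs}")
    return "".join(parts)


def build_cloud_payload(readings):
    """'SensorID|Value@SensorID|Value' for the ThingsSentral 'postlong' path (assumed)."""
    return "@".join(f"{sensor_id}|{value}" for sensor_id, value in readings.items())


def build_request_url(address, path, payload):
    """ReqURL = Address + '/' + Path + '?' + payload, percent-encoding anything unsafe."""
    return f"{address.rstrip('/')}/{path}?{quote(payload, safe='$*=|@.-_')}"


def http_get_status(url, timeout_s=5.0):
    """Issue one GET and return the status code (4xx/5xx raise HTTPError, an OSError)."""
    with urllib.request.urlopen(url, timeout=timeout_s) as resp:
        return resp.status


def send_with_retry(url, fetch=http_get_status, attempts=3, delay_s=0.5):
    """Steps 21-23: repeat the GET until it returns 200 or the attempts run out."""
    for attempt in range(attempts):
        try:
            if fetch(url) == 200:
                return True
        except OSError:
            pass  # connection refused, timeout, or HTTP error status: try again
        if attempt < attempts - 1:
            time.sleep(delay_s)
    return False
```

For example, `build_request_url("http://localhost:8080", "OPC", build_web_payload({"Boiler": {"Temperature": 25.0}}))` yields `http://localhost:8080/OPC?$Boiler*Temperature=25.0`. Passing `fetch` as a parameter keeps the retry logic testable without a live endpoint.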

2.4. Web Service Client Development

2.5. ThingsSentralTM Cloud Platform Development

| Algorithm 3. REST-Aware-Based Web Service and ThingsSentralTM Cloud Platform Development Algorithm. |
|---|
| Input: Web request from the middleware; sensor ID; command type (Statistics/Chart/View/Download); query type (Historical/N/csv/txt); from date; to date; number of records to query; NodeID and value to write. |
| Output: Present OPC UA server node credentials and store them in the MariaDB database; send commands to write values to OPC UA server nodes; present all or historical database records graphically and statistically by sensor ID. |
| 01: function HandleURL() |
| 02: Start the application in standalone mode and listen for middleware URL requests. |
| 03: while (Request.available) do |
| 04: Split the request on ‘?’ and store the parts in the URLRequest array. Split the first element of URLRequest on ‘/’ and store the resulting path segment in PathType. Store the last element of URLRequest in Data. |
| 05: if (PathType == “OPC”) then |
| 06: Split Data on the ‘$’ delimiter and store the parts in the ObjectNodeTags array. |
| 07: for (i = 0 to length(ObjectNodeTags), i++) do |
| 08: Split ObjectNodeTags[i] on the ‘*’ delimiter; store the 1st element in Object and the 2nd in Tags. |
| 09: if (Object in ObjectList) then |
| 10: Split Tags on the ‘\|’ delimiter and store the parts in the TagCredentials array. |
| 11: for (j = 0 to length(TagCredentials), j++) do |
| 12: Split TagCredentials[j] on the ‘=’ delimiter. |
| 13: Store the 1st element in Name and the 2nd in the Values array. |
| 14: Update the GUI, list box, and data logger file with these credentials. |
| 15: end for |
| 16: else break |
| 17: end for |
| 18: elif (PathType == “postlong”) then |
| 19: Split Data on the ‘@’ delimiter and store the parts in the SensorData array. |
| 20: for (i = 0 to length(SensorData), i++) do |
| 21: Split SensorData[i] on the ‘\|’ delimiter; store the 1st element in SensorID and the 2nd in the Value array. |
| 22: Build the query sql = “SELECT SensorID, SensorValue, DateTime FROM DatabaseTable” |
| 23: rs = mdb.SQLSelect(sql) |
| 24: if (rs not empty) then |
| 25: while (rs has not reached the last record) do |
| 26: row.column(SensorID, SensorValue, DateTime) = SensorID, Value, Time |
| 27: mdb.InsertRecord(DatabaseTableName, row); rs.MoveNext |
| 28: end while |
| 29: end if |
| 30: end for |
| 31: else pass |
| 32: end while |
| 33: function WriteServerTag(Value, id as arguments) |
| 34: Collect the middleware URL, node ID, and value from the GUI and store them in MiddlewareURL, NodeID, and Value. |
| 35: Payload = {‘Nodeid’: id, ‘value’: Value}; response = HTTP.POST(MiddlewareURL, data=Payload) |
| 36: function ExecuteCommand(Command, QueryType, Sensorid as arguments) |
| 37: Get the start date, end date, and limit from the GUI and store them in From, To, and Num. Connect to the MySQL server. |
| 38: if (QueryType == Historical) then |
| 39: Build a query in sql that selects the given sensor ID's data between From and To. |
| 40: elif (QueryType == N) then |
| 41: Build a query in sql that selects the latest Num records for the given sensor ID. |
| 42: rs = mdb.SQLSelect(sql) |
| 43: if (rs is not nil) then |
| 44: while (rs has not reached the last record) do |
| 45: Value = rs.Field(SensorValue); Time = rs.Field(DateTimeAcquired) |
| 46: Join Time and Value with ‘,’ into Concatenate; Builder += Concatenate + EndOfLine |
| 47: if (Command == “Statistics”) then Listbox.AddRow(Value, Time) |
| 48: elif (Command == “Chart”) then PlotGraph(Time, Value) |
| 49: else pass |
| 50: rs.MoveNext; end while |
| 51: end if |
| 52: function UserRequest(Command, QueryType, SensorID as arguments) |
| 53: if (Command == “Write”) then |
| 54: Call WriteServerTag(QueryType, SensorID) (QueryType carries the value to write) |
| 55: elif (Command == “Statistics”) then |
| 56: Call ExecuteCommand(Statistics, QueryType, SensorID) |
| 57: elif (Command == “Chart”) then |
| 58: Call ExecuteCommand(Chart, QueryType, SensorID) |
| Begin |
| 59: Start a thread calling HandleURL() to collect data from middleware requests. |
| 60: Call UserRequest(Write, Value, sensorid) to write data into the OPC UA server address space. |
| 61: Call UserRequest(Statistics, Historical, sensorid) to monitor historical sensor data in the application GUI. |
| 62: Call UserRequest(Statistics, N, sensorid) to monitor the latest N records in the application GUI. |
| 63: Call UserRequest(Chart, Historical, sensorid) to plot historical sensor data. |
| 64: Call UserRequest(Chart, N, sensorid) to plot the latest N records of the selected sensor. |
| Finish |
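The two server-side paths of Algorithm 3 can be sketched as follows. Parsing follows steps 04-13 and 19-21. Storage and the N/Historical queries use the standard-library `sqlite3` module as a stand-in for the MariaDB database, and the table name `readings` and its columns are hypothetical. The sketch deviates from the pseudocode in three deliberate ways: it skips the empty segment produced by the leading `$`, it skips unknown objects instead of breaking out of the loop, and it inserts exactly one row per reading rather than iterating over an existing record set.

```python
import sqlite3
from datetime import datetime, timezone
from urllib.parse import unquote


def open_db(path=":memory:"):
    """sqlite3 stand-in for the MariaDB table; the schema is hypothetical."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS readings (sensor_id TEXT, value REAL, acquired TEXT)")
    return conn


def parse_request(url):
    """Step 04: split '.../<PathType>?<Data>' into (PathType, Data)."""
    base, _, data = url.partition("?")
    return base.rstrip("/").split("/")[-1], unquote(data)


def parse_opc(data, known_objects):
    """Steps 06-13: '$Obj*Name=Value|Name=Value...' -> {Obj: {Name: Value}}."""
    result = {}
    for chunk in data.split("$"):
        if not chunk:
            continue  # the leading '$' produces an empty first element
        obj, _, tags = chunk.partition("*")
        if obj not in known_objects:
            continue  # deviation: skip unknown objects instead of breaking out
        result[obj] = dict(pair.split("=", 1) for pair in tags.split("|") if "=" in pair)
    return result


def store_postlong(conn, data, acquired=None):
    """Steps 19-27: insert one row per 'SensorID|Value' item of an '@'-separated list."""
    acquired = acquired or datetime.now(timezone.utc).isoformat(timespec="seconds")
    rows = [(*item.split("|", 1), acquired) for item in data.split("@") if "|" in item]
    conn.executemany("INSERT INTO readings (sensor_id, value, acquired) VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


def latest_n(conn, sensor_id, n):
    """QueryType == N: the newest n (time, value) pairs for one sensor."""
    return conn.execute(
        "SELECT acquired, value FROM readings WHERE sensor_id = ? "
        "ORDER BY acquired DESC LIMIT ?", (sensor_id, n)).fetchall()


def historical(conn, sensor_id, start, end):
    """QueryType == Historical: (time, value) pairs between start and end, oldest first."""
    return conn.execute(
        "SELECT acquired, value FROM readings WHERE sensor_id = ? "
        "AND acquired BETWEEN ? AND ? ORDER BY acquired", (sensor_id, start, end)).fetchall()


def to_csv(rows):
    """Step 46: one 'Time,Value' line per record (the Builder string)."""
    return "\n".join(f"{t},{v}" for t, v in rows)
```

Storing timestamps as ISO 8601 text keeps `BETWEEN` and `ORDER BY` correct through plain string comparison; a MariaDB version would more likely use a `DATETIME` column and parameterized queries through its own connector.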

2.6. Telegram Messenger as the Remote Commander Service

3. Performance Evaluation of the Proposed System

4. Performance Analysis and Results

4.1. Testbed Setup

4.2. Network Utilization Analysis

4.3. Power Consumption Analysis

4.4. CPU Utilization Analysis

4.5. Resource Update Time Analysis

4.6. Middleware Service Time Requirement

4.7. Middleware Response Time Analysis

5. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Criteria | Eclipse Milo | FreeOpcUa C++ | FreeOpcUa Python | Node-Opc-Ua | OPC UA.NET | Open62541 |
|---|---|---|---|---|---|---|
| Language | Java | C++ | Python | JavaScript | C# | C |
| Licensing | Eclipse | LGPL | LGPL | MIT | RCL, MIT | MPLv2.0 |
| Certificate | None | None | None | None | Yes | None |
| Implementation | Server and Client | Server and Client | Server and Client | Server and Client | Stack | Server and Client |
| UA-TCP UA-Binary | √ | √ | √ | √ | √ | √ |
| HTTPS UA-Binary | √ | √ | ||||
| HTTPS UA XML | √ | √ | ||||
| GetEndPoint | √ | √ | √ | √ | √ | √ |
| FindServer | √ | √ | √ | √ | √ | √ |
| AddNodes | √ | √ | √ | √ | √ | |
| AddReference | √ | √ | √ | √ | √ | |
| DeleteNodes | √ | √ | √ | √ | ||
| DeleteReference | √ | √ | √ | √ | ||
| Browse | √ | √ | √ | √ | √ | √ |
| Read | √ | √ | √ | √ | √ | √ |
| HistoryRead | √ | √ | √ | |||
| Write | √ | √ | √ | √ | √ | |
| Methods | √ | √ | √ | √ | ||
| CreateMonitoredItem | √ | √ | √ | √ | √ | √ |
| CreateSubscription | √ | √ | √ | √ | √ | √ |
| Publish | √ | √ | √ | √ | √ | √ |
| Basic128Rsa15 | √ | √ | √ | √ | ||
| Basic256 | √ | √ | √ | √ | ||
| Basic256Sha256 | √ | √ | √ | √ | ||
| Anonymous | √ | √ | √ | √ | √ | √ |
| Username Password | √ | √ | √ | √ | √ | |
| X.509 Certificate | √ | √ | √ | |||
| Platform | Linux, Windows | Linux, Windows | Linux, Windows | Linux, Mac, Windows | Windows | Linux, Windows |

| Method No. | Method Name | Method Scope |
|---|---|---|
| 1 | Client(Server Endpoint URI).connect() | Connect to the server through its endpoint URI. |
| 2 | get_root_node().get_children() | Get the server address space nodes under the root node. |
| 3 | get_objects_node().get_children() | List the available nodes under the server's Objects folder. |
| 4 | get_node(node_id) | Get a node object via the passed node ID. |
| 5 | get_node(node_id).get_browse_name() | Get the variable node name via the passed node ID. |
| 6 | get_node(node_id).get_value() | Read the variable node value via the passed node ID. |
| 7 | get_node(node_id).set_value(Value) | Write a value to the node via the passed node ID. |

| Commands | Command Scope in OPC UA Server |
|---|---|
| Commands? | Get the list of all scripted commands. |
| Server Terminals? | Get the names of all object nodes in the server address space. |
| Server Tags? | Get the names of all OPC UA server variable nodes. |
| Server nid? | Get the node IDs of all OPC UA server variable nodes. |
| ServerWritable | Get the list of writable OPC UA server variable nodes. |
| @Write.NodeName@Value | Write Value to the node named NodeName. |
| ClientDatalog | Get the client data log file. |
| CloudDatalog | Get the cloud data log file. |
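The Telegram command set in the table above could be handled by a simple text dispatcher on the middleware side. The sketch below is an assumption about how such a dispatcher might be structured, not the authors' code: `ServerView`, `handle_command`, and the `('text' | 'file', payload)` reply convention are hypothetical, and the Telegram transport is left out (in Algorithm 2 the reply goes out via `Bot.sendMessage(chat_id, Response)`).

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set, Tuple

HELP = ["Commands?", "Server Terminals?", "Server Tags?", "Server nid?",
        "ServerWritable", "@Write.NodeName@Value", "ClientDatalog", "CloudDatalog"]


@dataclass
class ServerView:
    """Middleware's snapshot of the OPC UA address space (hypothetical structure)."""
    objects: List[str]
    tags: Dict[str, str]                                    # variable node name -> node ID
    writable: Set[str] = field(default_factory=set)         # names that accept writes
    datalogs: Dict[str, str] = field(default_factory=dict)  # command -> log file path


def handle_command(text: str, view: ServerView,
                   write_tag: Callable[[str, str], None]) -> Tuple[str, str]:
    """Map one Telegram message to a ('text' or 'file', payload) reply."""
    text = text.strip()
    if text == "Commands?":
        return "text", "\n".join(HELP)
    if text == "Server Terminals?":
        return "text", ", ".join(view.objects)
    if text == "Server Tags?":
        return "text", ", ".join(view.tags)
    if text == "Server nid?":
        return "text", ", ".join(view.tags.values())
    if text == "ServerWritable":
        return "text", ", ".join(sorted(view.writable))
    if text in view.datalogs:
        return "file", view.datalogs[text]
    if text.startswith("@Write."):
        # '@Write.NodeName@Value' -> NodeName, Value
        name, sep, value = text[len("@Write."):].partition("@")
        if not (sep and name and value):
            return "text", "Usage: @Write.NodeName@Value"
        if name not in view.writable or name not in view.tags:
            return "text", f"{name} is not writable"
        write_tag(view.tags[name], value)  # caller forwards this to the OPC UA node write
        return "text", f"{name} set to {value}"
    return "text", "Unknown command. Send Commands? for the list."
```

Keeping the dispatcher free of any Telegram library calls means the same function can be exercised from a test or a console, and whichever bot client is used only has to turn the tuple into a text message or a document upload.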


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Habib, K.; Saad, M.H.M.; Hussain, A.; Sarker, M.R.; Alaghbari, K.A. An Aggregated Data Integration Approach to the Web and Cloud Platforms through a Modular REST-Based OPC UA Middleware. Sensors 2022, 22, 1952. https://doi.org/10.3390/s22051952
