Internal Parameters Calibration of Vision Sensor and Application of High Precision Integrated Detection in Intelligent Welding Based on Plane Fitting

Abstract

1. Introduction

2. Configuration and Detection Mathematical Model of Vision Sensor

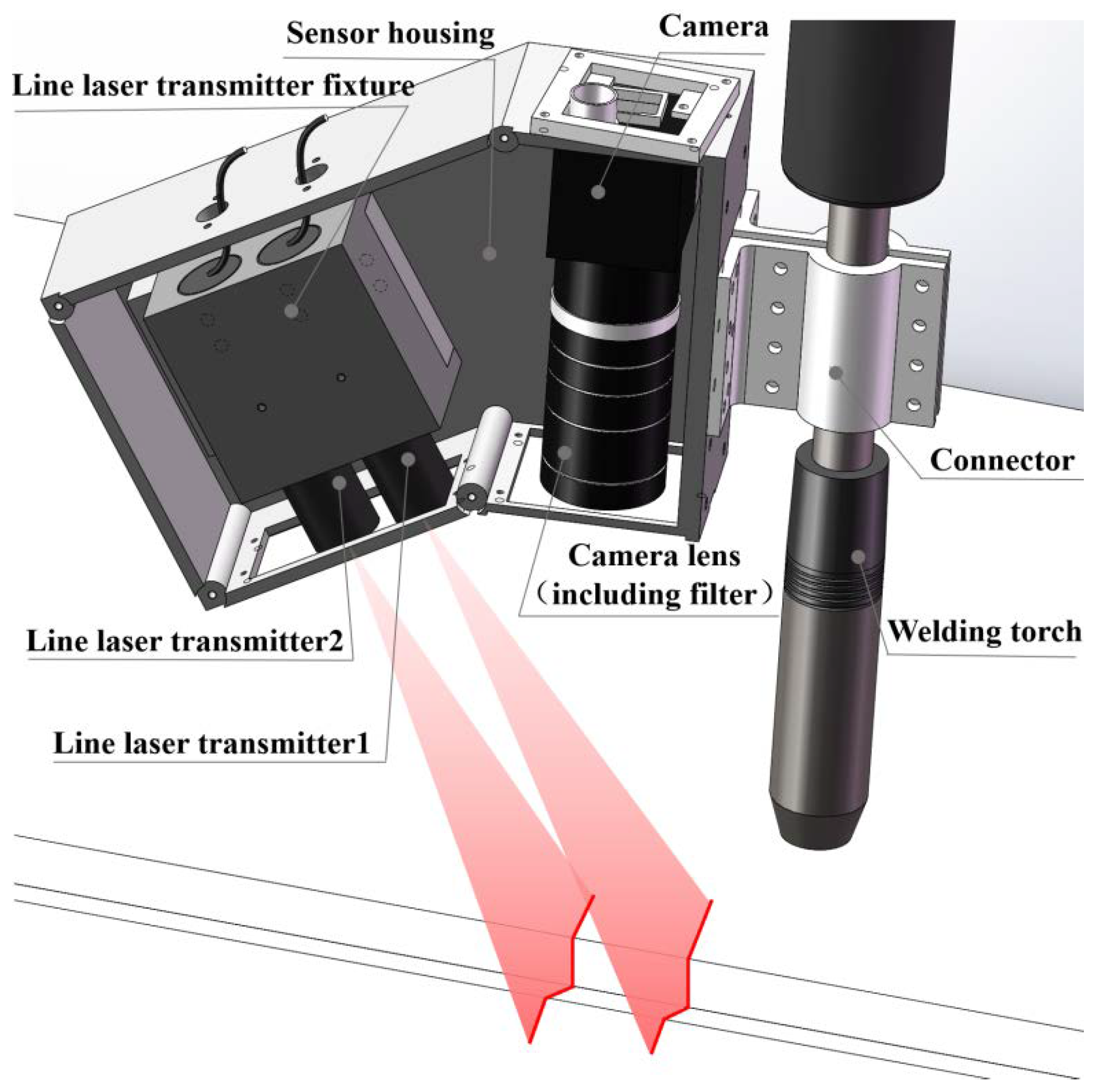

2.1. Configuration of Vision Sensor Based on Combined Laser Structured Lights

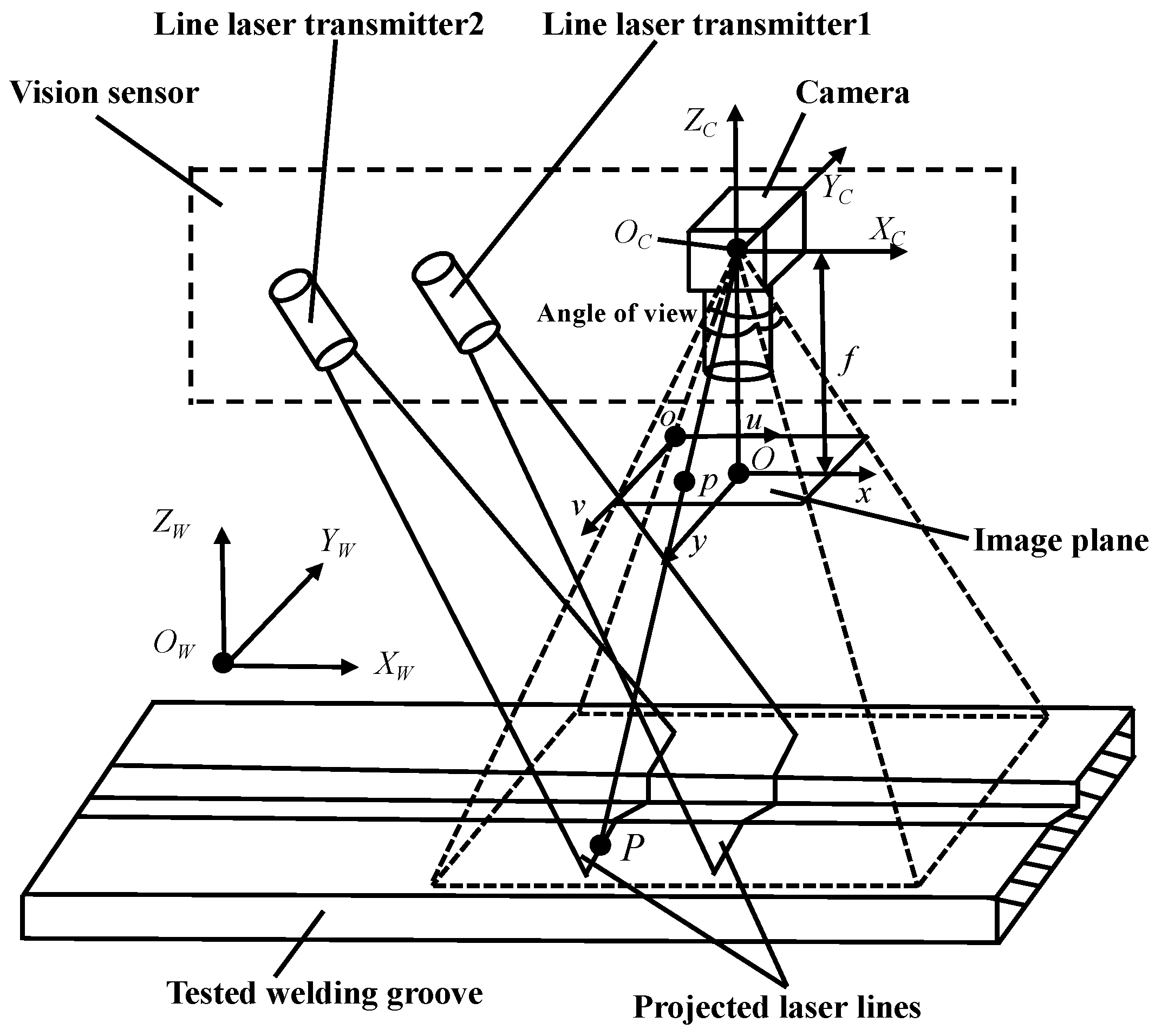

2.2. Detection Mathematical Model of Vision Sensor
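The detection model itself is not reproduced in this text. The following is only a minimal sketch. It assumes a standard pinhole camera with a two-term radial (Brown-style) distortion model built from the calibrated parameters fx, fy, u0, v0, k1 and k2. The function name `project_point` and the exact form of the distortion term are assumptions, and the authors' model may differ.

```python
def project_point(X, Y, Z, fx, fy, u0, v0, k1, k2):
    """Project a camera-frame point (X, Y, Z) with Z > 0 to pixel coordinates (u, v).

    Assumed model: pinhole projection followed by two-term radial distortion,
    x_d = x * (1 + k1*r^2 + k2*r^4), then u = fx*x_d + u0 and v = fy*y_d + v0.
    """
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    # Ideal (undistorted) normalized image coordinates on the plane Z = 1
    x, y = X / Z, Y / Z
    r2 = x * x + y * y
    # Radial distortion scale factor
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    # Map the distorted normalized coordinates to pixels
    return fx * x * scale + u0, fy * y * scale + v0


if __name__ == "__main__":
    # Calibrated camera values taken from the calibration-result table
    params = dict(fx=3544, fy=3543, u0=1239, v0=1225, k1=-0.0508, k2=0.0738)
    print(project_point(0.0, 0.0, 150.0, **params))  # point on the optical axis
    print(project_point(10.0, -5.0, 150.0, **params))
```

A point on the optical axis maps to the principal point (u0, v0) regardless of the distortion coefficients. This gives a quick sanity check on any implementation of the model.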

3. Integrated Calibration for Internal Parameters of Vision Sensor
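The integrated calibration procedure itself is not reproduced here. Checkerboard-based laser-plane calibration commonly includes one step in which the board plane is expressed in camera coordinates using the board pose (R, t) that Zhang-style camera calibration provides for each image. Stripe pixels lying on the board can then be intersected with that plane, and the resulting 3D points used to estimate the laser plane. The sketch below covers only the pose-to-plane step. The function name and the conventions X_c = R·X_w + t, with the board lying in its own plane Z_w = 0, are assumptions and do not describe the authors' documented procedure.

```python
def board_plane_from_pose(R, t):
    """Return (A, B, C, D) such that A*X + B*Y + C*Z + D = 0 is the board plane
    in camera coordinates.

    Assumes the board lies in its own plane Z_w = 0 and that board points map to
    camera coordinates as X_c = R @ X_w + t (R given as a list of three rows).
    """
    # The board normal (board Z axis) expressed in the camera frame is R's third column
    n = (R[0][2], R[1][2], R[2][2])
    # The board origin maps to t, which lies on the plane, so n . t + D = 0
    D = -(n[0] * t[0] + n[1] * t[1] + n[2] * t[2])
    return n[0], n[1], n[2], D


if __name__ == "__main__":
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    # Board facing the camera at Z = 200
    print(board_plane_from_pose(identity, (0, 0, 200)))  # (0, 0, 1, -200)
```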

4. Detection Algorithm of Welding Groove Sizes and Relative Position and Posture of Welding Torch

4.1. Image Processing of Modulated Laser Lines Projected on V-Groove of Planar Workpiece
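The image processing steps for the modulated laser lines are not detailed in this text. A widely used way to obtain sub-pixel stripe centres is to take the gray-level centroid across the stripe. The sketch below applies it column by column. It assumes a roughly horizontal stripe, only one stripe in the processed image region, and a fixed background threshold. The function name and the threshold handling are assumptions, and the sketch does not necessarily match the processing used by the authors.

```python
def stripe_centers(image, threshold):
    """Gray-level centroid of a roughly horizontal laser stripe, one value per column.

    image: 2D list of gray values indexed as image[row][col].
    Returns {col: sub-pixel row}; columns with no pixel above threshold are skipped.
    """
    centers = {}
    n_rows = len(image)
    n_cols = len(image[0]) if n_rows else 0
    for c in range(n_cols):
        weight_sum = 0.0
        weighted_rows = 0.0
        for r in range(n_rows):
            # Subtract the background threshold so weak pixels do not bias the centroid
            w = image[r][c] - threshold
            if w > 0:
                weight_sum += w
                weighted_rows += w * r
        if weight_sum > 0:
            centers[c] = weighted_rows / weight_sum
    return centers


if __name__ == "__main__":
    img = [[0, 0, 0], [10, 0, 0], [20, 0, 0], [10, 0, 20], [0, 0, 20]]
    print(stripe_centers(img, 5))  # {0: 2.0, 2: 3.5}
```

Because the image contains two laser lines, a real implementation would first restrict each centroid to the region of one line.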

4.2. Three-Dimensional Reconstruction for V-Groove Surfaces and Its Adjacent Workpiece Surfaces of Planar Workpiece

4.2.1. Two-Dimensional Image Data Segmentation of the Modulated Laser Lines
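The segmentation criterion used by the authors is not given here. As one hypothetical illustration, a profile of stripe points crossing a V-groove can be split into straight segments (workpiece surface, groove side, groove side, workpiece surface) using the Ramer-Douglas-Peucker rule. The profile is split recursively at the point farthest from the current chord until every point lies within a tolerance of its chord. The function names and the tolerance value are assumptions.

```python
import math


def _point_line_distance(p, a, b):
    """Perpendicular distance from 2D point p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0.0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * (px - ax) - dx * (py - ay)) / length


def breakpoints(points, tol):
    """Indices splitting a 2D polyline into straight segments (Ramer-Douglas-Peucker)."""
    def split(i, j):
        max_d, max_k = 0.0, None
        for k in range(i + 1, j):
            d = _point_line_distance(points[k], points[i], points[j])
            if d > max_d:
                max_d, max_k = d, k
        if max_k is None or max_d <= tol:
            return []
        return split(i, max_k) + [max_k] + split(max_k, j)

    if len(points) < 2:
        return list(range(len(points)))
    return [0] + split(0, len(points) - 1) + [len(points) - 1]


if __name__ == "__main__":
    # Synthetic V-groove profile: flat, down the left side, up the right side, flat
    profile = ([(x, 0.0) for x in range(5)]
               + [(5, 1.0), (6, 2.0), (7, 3.0), (8, 2.0), (9, 1.0)]
               + [(x, 0.0) for x in range(10, 15)])
    print(breakpoints(profile, tol=0.1))  # [0, 4, 7, 10, 14]
```

Consecutive breakpoint indices delimit the point groups that would each be passed on to 3D reconstruction and plane fitting.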

4.2.2. Three-Dimensional Coordinates Solution of Segmented Data in the Camera Coordinate System

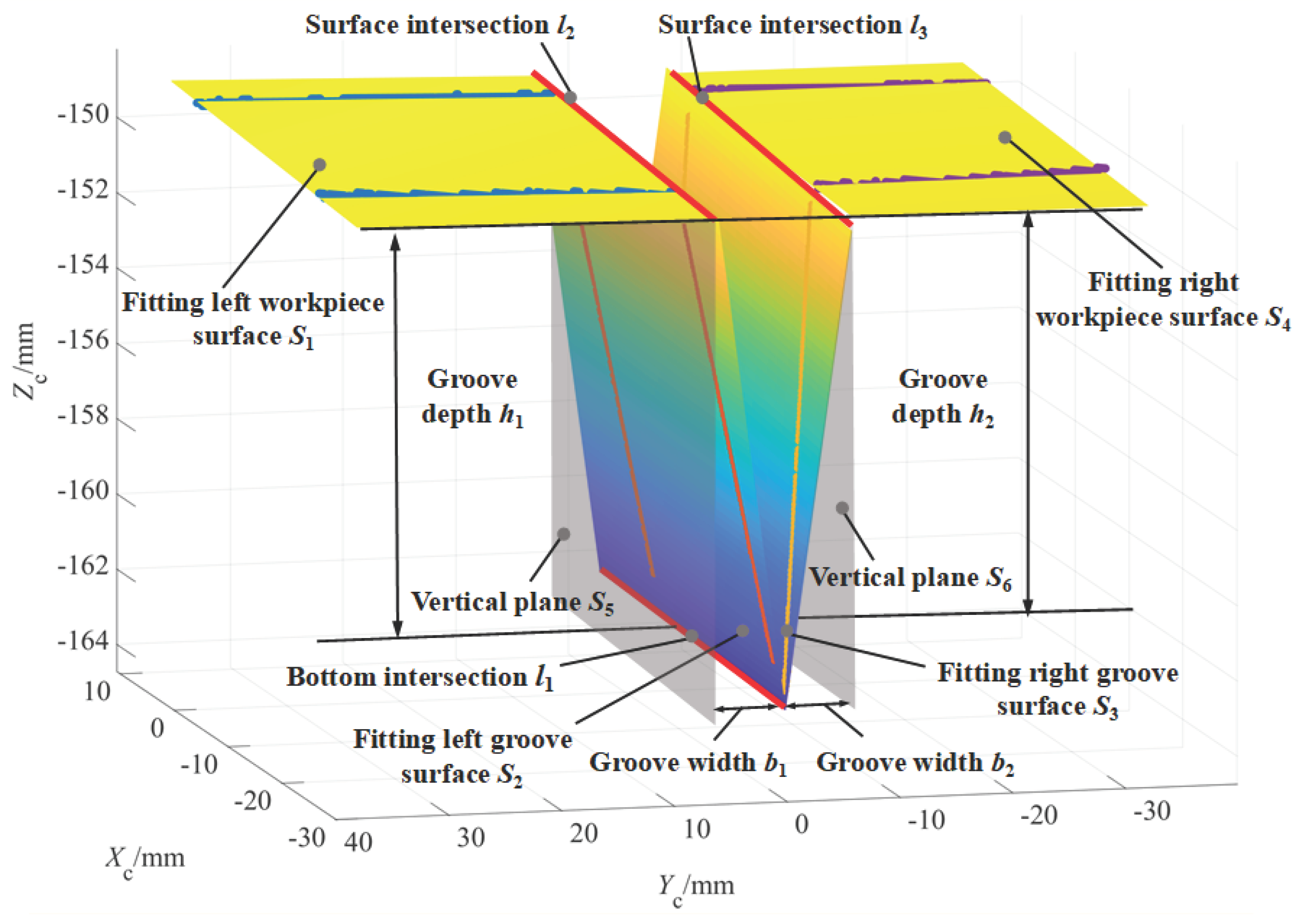
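The following minimal sketch shows how a stripe pixel can be turned into a 3D point in the camera coordinate system. It assumes a pinhole-plus-radial-distortion model and a laser plane written as A·X + B·Y + C·Z + D = 0. The fixed-point undistortion, the function names and the plane sign convention are all assumptions. The calibrated Dl1/Dl2 values may follow a different sign convention.

```python
def undistort_normalized(xd, yd, k1, k2, iterations=20):
    """Invert x_d = x * (1 + k1*r^2 + k2*r^4) by fixed-point iteration."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


def pixel_to_laser_point(u, v, fx, fy, u0, v0, k1, k2, plane):
    """Intersect the viewing ray of pixel (u, v) with the plane A*X + B*Y + C*Z + D = 0."""
    A, B, C, D = plane
    # Pixel -> distorted normalized coordinates -> undistorted normalized coordinates
    x, y = undistort_normalized((u - u0) / fx, (v - v0) / fy, k1, k2)
    # Viewing ray through the optical centre: (X, Y, Z) = t * (x, y, 1)
    denom = A * x + B * y + C
    if abs(denom) < 1e-12:
        raise ValueError("viewing ray is parallel to the laser plane")
    t = -D / denom
    if t <= 0:
        raise ValueError("intersection lies behind the camera")
    return t * x, t * y, t


if __name__ == "__main__":
    # Hypothetical plane Z = 150 mm, written as 0*X + 0*Y + 1*Z - 150 = 0
    plane = (0.0, 0.0, 1.0, -150.0)
    print(pixel_to_laser_point(1500.0, 1100.0, 3544, 3543, 1239, 1225, -0.0508, 0.0738, plane))
```

Applying this step to every stripe pixel in a segment yields the 3D point set that is used for plane fitting.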

4.2.3. Plane Fitting of Segmented 3D Data
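The following is a minimal least-squares plane fit for one segment of 3D points. It assumes the surface can be written as z = a·x + b·y + c in the camera frame. This is reasonable when the surface faces the camera, but it is a simplification and not an orthogonal (total) least-squares fit. The function names are hypothetical. The result is converted to the A·X + B·Y + C·Z + D = 0 form with a unit normal.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points):
    """Least-squares fit of z = a*x + b*y + c, returned as a unit-normal (A, B, C, D)."""
    if len(points) < 3:
        raise ValueError("need at least three points")
    sx = sy = sz = sxx = syy = sxy = sxz = syz = 0.0
    for x, y, z in points:
        sx += x
        sy += y
        sz += z
        sxx += x * x
        syy += y * y
        sxy += x * y
        sxz += x * z
        syz += y * z
    # Normal equations: M @ (a, b, c) = rhs
    M = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(len(points))]]
    rhs = [sxz, syz, sz]
    det = _det3(M)
    if abs(det) < 1e-12:
        raise ValueError("degenerate points (collinear, or surface parallel to the z axis)")
    # Cramer's rule: replace column k of M with rhs
    a, b, c = (
        _det3([[rhs[r] if j == k else M[r][j] for j in range(3)] for r in range(3)]) / det
        for k in range(3)
    )
    # z = a*x + b*y + c  <=>  a*x + b*y - z + c = 0; scale to a unit normal
    norm = math.sqrt(a * a + b * b + 1.0)
    return a / norm, b / norm, -1.0 / norm, c / norm


if __name__ == "__main__":
    pts = [(x, y, 0.5 * x - 0.2 * y + 3.0) for x in range(5) for y in range(4)]
    print(fit_plane(pts))
```

In this setting, one such plane would be fitted for each segmented surface: both groove faces and the workpiece surface on either side.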

4.3. Detection Algorithm of Welding Groove Size Parameters

4.3.1. Groove Depth h
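The depth formula is not reproduced in this text. The minimal sketch below works under an assumed definition: h is the distance from the groove bottom line (the intersection of the two fitted groove side planes) to the fitted workpiece surface plane. All planes use the A·X + B·Y + C·Z + D = 0 form. The function names are hypothetical. The point used on the bottom line is the one closest to the origin, which is the camera centre in camera coordinates.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def plane_intersection(p1, p2):
    """Line where planes (A, B, C, D) meet: returns (point closest to origin, direction)."""
    n1, n2 = p1[:3], p2[:3]
    u = cross(n1, n2)
    uu = dot(u, u)
    if uu < 1e-12:
        raise ValueError("planes are parallel")
    h1, h2 = -p1[3], -p2[3]  # rewrite each plane as n . x = h
    a, b = cross(n2, u), cross(u, n1)
    point = tuple((h1 * a[i] + h2 * b[i]) / uu for i in range(3))
    return point, u


def groove_depth(surface, side1, side2):
    """Distance from the groove bottom line (where side1 meets side2) to the surface plane."""
    point, _ = plane_intersection(side1, side2)
    n = surface[:3]
    return abs(dot(n, point) + surface[3]) / math.sqrt(dot(n, n))


if __name__ == "__main__":
    # Synthetic symmetric groove 10 mm deep below the surface plane z = 0
    print(groove_depth((0, 0, 1, 0), (1, 0, 1, 10), (-1, 0, 1, 10)))
```

If the fitted bottom line is not exactly parallel to the surface plane, the depth varies along the line. A practical implementation would therefore need to decide where to evaluate it.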

4.3.2. Groove Width b1 and b2
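The width definition is not reproduced here. This sketch uses an assumed definition: b1 and b2 are the distances, measured in the workpiece surface plane, from the projection of the groove bottom line onto that plane to each groove edge line, where an edge line is the intersection of a side plane with the surface plane. The helper names are hypothetical. The plane-intersection helper is included so the block runs on its own.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def plane_intersection(p1, p2):
    """Line where planes (A, B, C, D) meet: returns (point closest to origin, direction)."""
    n1, n2 = p1[:3], p2[:3]
    u = cross(n1, n2)
    uu = dot(u, u)
    if uu < 1e-12:
        raise ValueError("planes are parallel")
    h1, h2 = -p1[3], -p2[3]
    a, b = cross(n2, u), cross(u, n1)
    return tuple((h1 * a[i] + h2 * b[i]) / uu for i in range(3)), u


def project_to_plane(point, plane):
    """Orthogonal projection of a point onto the plane (A, B, C, D)."""
    n = plane[:3]
    s = (dot(n, point) + plane[3]) / dot(n, n)
    return tuple(point[i] - s * n[i] for i in range(3))


def point_line_distance(q, line_point, direction):
    d = tuple(q[i] - line_point[i] for i in range(3))
    c = cross(d, direction)
    return math.sqrt(dot(c, c) / dot(direction, direction))


def groove_widths(surface, side1, side2):
    """(b1, b2) under the assumed definition described in the lead-in."""
    bottom_point, _ = plane_intersection(side1, side2)
    q = project_to_plane(bottom_point, surface)
    widths = []
    for side in (side1, side2):
        edge_point, edge_dir = plane_intersection(surface, side)
        widths.append(point_line_distance(q, edge_point, edge_dir))
    return tuple(widths)


if __name__ == "__main__":
    # Synthetic asymmetric groove: edges at x = -10 and x = 5 on the surface z = 0
    print(groove_widths((0, 0, 1, 0), (1, 0, 1, 10), (-2, 0, 1, 10)))
```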

4.4. Detection Algorithm of Welding Torch Relative SPP Parameters

4.4.1. Relative SPP Parameters of Welding Torch

4.4.2. Solution of Relative Position Parameters of Welding Torch

1. Transverse deviation e
2. Angular deviation γ
3. Welding torch height H
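The formulas for e, γ and H are not reproduced in this text. The sketch below uses assumed, generic geometric definitions:

- H is the distance from a torch reference point T to the workpiece surface plane.
- e is the signed in-plane distance from the projection of T to the seam line.
- γ is the signed angle, measured in the surface plane, from a torch reference direction r to the seam direction.

T, r, the seam line and every function name are hypothetical inputs. In practice they would come from the sensor-to-torch relationship and from the fitted planes.

```python
import math


def _dot(a, b):
    return sum(a[i] * b[i] for i in range(3))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(v):
    length = math.sqrt(_dot(v, v))
    if length < 1e-12:
        raise ValueError("zero-length vector")
    return tuple(c / length for c in v)


def _in_plane(v, n):
    """Component of v perpendicular to the unit normal n (projection into the plane)."""
    k = _dot(v, n)
    return tuple(v[i] - k * n[i] for i in range(3))


def position_parameters(T, r, surface, seam_point, seam_dir):
    """Return (e, gamma_deg, H) under assumed definitions (see comments)."""
    n = _unit(surface[:3])
    # Signed distance from T to the plane A*X + B*Y + C*Z + D = 0 along the unit normal
    signed = (_dot(surface[:3], T) + surface[3]) / math.sqrt(_dot(surface[:3], surface[:3]))
    H = abs(signed)
    # Foot of the perpendicular from T on the surface plane
    q = tuple(T[i] - signed * n[i] for i in range(3))
    s = _unit(_in_plane(seam_dir, n))
    rp = _unit(_in_plane(r, n))
    # e: signed distance from q to the seam line, positive when (q - S) x s points along n
    qs = tuple(q[i] - seam_point[i] for i in range(3))
    e = _dot(_cross(qs, s), n)
    # gamma: signed angle from the projected reference direction rp to s, about n
    gamma = math.degrees(math.atan2(_dot(_cross(rp, s), n), _dot(rp, s)))
    return e, gamma, H


if __name__ == "__main__":
    r = (math.sin(math.radians(5)), math.cos(math.radians(5)), 0.0)
    print(position_parameters((3.0, 0.0, 150.0), r, (0.0, 0.0, 1.0, 0.0),
                              (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)))
```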

4.4.3. Solution of Relative Posture Parameters of Welding Torch

1. Front and rear tilt angle α of the welding torch
2. Left and right tilt angle β of the welding torch
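The tilt-angle formulas are not reproduced here. As a sketch under assumed definitions, α and β are taken as the tilt of the torch axis away from the workpiece normal. α is the tilt measured along the seam and β the tilt measured across it. Each is the angle of the axis projected into the plane spanned by the normal and the respective in-plane direction. The axis direction, the sign conventions and the function name are hypothetical.

```python
import math


def _dot(a, b):
    return sum(a[i] * b[i] for i in range(3))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(v):
    length = math.sqrt(_dot(v, v))
    if length < 1e-12:
        raise ValueError("zero-length vector")
    return tuple(c / length for c in v)


def posture_angles(axis, surface, seam_dir):
    """Return (alpha_deg, beta_deg) under assumed definitions.

    axis: torch axis direction, pointing from the torch towards the workpiece.
    surface: fitted workpiece surface plane (A, B, C, D).
    seam_dir: seam direction (it is projected into the surface plane).
    """
    n = _unit(surface[:3])
    # Orient the normal towards the torch, i.e. against the torch axis
    if _dot(n, axis) > 0:
        n = tuple(-c for c in n)
    # In-plane unit vectors along and across the seam
    k = _dot(seam_dir, n)
    along = _unit(tuple(seam_dir[i] - k * n[i] for i in range(3)))
    across = _cross(n, along)
    a = _unit(axis)
    down = -_dot(a, n)  # axis component pointing into the workpiece
    # alpha: front/rear tilt in the plane spanned by the seam direction and the normal
    alpha = math.degrees(math.atan2(_dot(a, along), down))
    # beta: left/right tilt in the plane spanned by the cross-seam direction and the normal
    beta = math.degrees(math.atan2(_dot(a, across), down))
    return alpha, beta


if __name__ == "__main__":
    t = math.radians(10)
    print(posture_angles((0.0, math.sin(t), -math.cos(t)), (0.0, 0.0, 1.0, 0.0), (0.0, 1.0, 0.0)))
```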

5. Experimental Verification and Discussion

6. Conclusions

(1) For the specially designed vision sensor based on combined laser structured lights, an integrated calibration method for the internal parameters of the vision sensor is proposed. The method requires only an ordinary planar checkerboard calibration board. It calibrates all internal parameters of the vision sensor together: the camera internal parameters fx, fy, u0, v0, k1 and k2, and the laser structured light plane equation parameters Al1, Bl1, Cl1, Dl1 and Al2, Bl2, Cl2, Dl2 in the camera coordinate system. This greatly relaxes the installation accuracy required of the two laser emitters. It also avoids the influence of non-parallelism between the two laser structured light planes on the detection results and eliminates the error accumulation inherent in stepwise calibration. The proposed integrated calibration method therefore improves the efficiency, accuracy and completeness of internal parameter calibration for line structured light vision sensors and provides a sound basis for their industrial application.

(2) The derived high precision integrated detection algorithms cover two groups of parameters: the V-groove size parameters of the planar workpiece (groove widths b1 and b2 and groove depth h), and the SPP parameters of the welding torch relative to the welding groove (position parameters e, γ and H and posture parameters α and β). These algorithms can be applied under any SPP of the welding torch (or vision sensor). The 3D data of the modulated laser lines are first obtained by processing a single image of the modulated laser lines. From these data, the algorithms reconstruct the V-groove surfaces and the adjacent surfaces of the planar workpiece in 3D through data segmentation and plane fitting. This makes fuller use of the modulated laser line image data and reduces the influence of image processing errors on parameter detection.

(3) Verification tests of the proposed integrated calibration method and the derived high precision integrated detection algorithms were carried out. The experimental results show that the derived integrated detection algorithms can be applied under any position and posture of the welding torch (or vision sensor) and have good detection accuracy and robustness. In the reported tests, the absolute errors did not exceed 0.074 mm for the groove size parameters, 0.006 mm for e, 0.040 mm for the torch height and 0.124° for the angular parameters γ, α and β. These results improve the applicability of the vision sensor and the integration of the detection algorithms. This work is of significant value for applying the vision sensor in intelligent welding production.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Component Designations | Model and Main Parameters |
|---|---|
| Industrial camera | CMOS: MER2-503-23GM |
| | Resolution: 2448 × 2048 |
| | Exposure mode: Global Shutter |
| | Exposure frequency: 23.5 fps |
| | Dimension of the sensor matrix: 2/3″ |
| | Pixel size: 3.45 × 3.45 μm |
| Line laser emitter | Wavelength: 660 nm |
| | Lens: glass lens |
| | Size: Φ16 × 70 mm |
| | Power: 200 mW |
| | Focal length: adjustable |
| Camera lens | Model: Computar, M1228-MPW3 |
| | Focal length: 12 mm |
| | Angle of view (D × H × V): 49.3° × 40.3° × 30.8° |
| | Working distance: 100 mm to ∞ |
| | Maximum compatible target size: 2/3″ |
| Filter | Filter wavelength: 660 ± 8 nm |

| Calibration Items | Calibration Parameters | Calibration Parameters Value |
|---|---|---|
| Internal parameters of the camera | fx (pixels) | 3544 |
| | fy (pixels) | 3543 |
| | u0 (pixels) | 1239 |
| | v0 (pixels) | 1225 |
| | k1 | −0.0508 |
| | k2 | 0.0738 |
| Structured light plane equation parameters of the two laser emitters | Al1/Al2 | 0.8771/0.8748 |
| | Bl1/Bl2 | 0.1340/0.0044 |
| | Cl1/Cl2 | 0.4801/0.4844 |
| | Dl1/Dl2 | 51.63/71.57 |
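Two quick plausibility checks can be run on the calibrated values; neither is part of the authors' procedure. First, the nominal focal length in pixels follows from the hardware table as f / pixel size = 12 mm / 3.45 μm ≈ 3478 px. The calibrated fx = 3544 px is about 1.9% above this value. Second, the angle between the two laser planes can be computed from (Al1, Bl1, Cl1) and (Al2, Bl2, Cl2). The sketch assumes that the first three coefficients of each plane equation form that plane's normal vector. The function names are hypothetical.

```python
import math

# Values from the hardware and calibration tables
FOCAL_LENGTH_MM = 12.0      # nominal lens focal length
PIXEL_SIZE_MM = 3.45e-3     # 3.45 um pixel pitch
CALIBRATED_FX = 3544.0      # calibrated fx in pixels

LASER_PLANE_1 = (0.8771, 0.1340, 0.4801)   # (Al1, Bl1, Cl1)
LASER_PLANE_2 = (0.8748, 0.0044, 0.4844)   # (Al2, Bl2, Cl2)


def nominal_fx(focal_length_mm, pixel_size_mm):
    """Focal length expressed in pixels from nominal lens and sensor data."""
    return focal_length_mm / pixel_size_mm


def angle_between_planes_deg(n1, n2):
    """Angle between two planes from their (not necessarily unit) normal vectors."""
    dot = sum(a * b for a, b in zip(n1, n2))
    norm1 = math.sqrt(sum(a * a for a in n1))
    norm2 = math.sqrt(sum(b * b for b in n2))
    # abs() ignores normal orientation; min() guards against rounding just above 1
    c = min(1.0, abs(dot) / (norm1 * norm2))
    return math.degrees(math.acos(c))


if __name__ == "__main__":
    fx0 = nominal_fx(FOCAL_LENGTH_MM, PIXEL_SIZE_MM)
    print(f"nominal fx = {fx0:.1f} px, calibrated/nominal = {CALIBRATED_FX / fx0:.3f}")
    angle = angle_between_planes_deg(LASER_PLANE_1, LASER_PLANE_2)
    print(f"angle between laser planes = {angle:.1f} deg")
```

For the tabulated coefficients, the two laser planes come out roughly 7.4° apart, so the calibrated planes are clearly not parallel. The normal (Al1, Bl1, Cl1) also has a norm of about 1.009 rather than exactly 1, which is why the sketch normalizes both vectors.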

| Groove Type | Size Parameters | Measured Value (mm) | Detected Value (mm) | Mean Detected Value (mm) | Standard Deviation (mm) | Absolute Error (mm) | Maximum Absolute Error (mm) | Relative Error | Maximum Relative Error |
|---|---|---|---|---|---|---|---|---|---|
| Symmetric V-groove | h | 13.065 | 13.070 | 13.093 | 0.033 | 0.005 | 0.074 | 0.04% | 0.57% |
| | | | 13.069 | | | 0.004 | | 0.03% | |
| | | | 13.139 | | | 0.074 | | 0.57% | |
| | b1 | 5.976 | 5.980 | 5.981 | 0.009 | 0.004 | 0.016 | 0.07% | 0.27% |
| | | | 5.992 | | | 0.016 | | 0.27% | |
| | | | 5.972 | | | 0.004 | | 0.07% | |
| | b2 | 6.022 | 6.002 | 6.021 | 0.021 | 0.020 | 0.029 | 0.33% | 0.48% |
| | | | 6.011 | | | 0.011 | | 0.18% | |
| | | | 6.051 | | | 0.029 | | 0.48% | |
| Asymmetric V-groove | h | 12.071 | 12.134 | 12.137 | 0.002 | 0.063 | 0.069 | 0.52% | 0.57% |
| | | | 12.137 | | | 0.066 | | 0.55% | |
| | | | 12.140 | | | 0.069 | | 0.57% | |
| | b1 | 6.996 | 6.990 | 7.000 | 0.010 | 0.006 | 0.017 | 0.09% | 0.24% |
| | | | 6.996 | | | 0.000 | | 0.00% | |
| | | | 7.013 | | | 0.017 | | 0.24% | |
| | b2 | 4.425 | 4.465 | 4.454 | 0.011 | 0.040 | 0.040 | 0.90% | 0.90% |
| | | | 4.458 | | | 0.033 | | 0.75% | |
| | | | 4.440 | | | 0.015 | | 0.34% | |
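The summary columns of the groove-size table can be recomputed from the three detected values of each parameter. The minimal sketch below does this; the function name is hypothetical. The relative error is the absolute error divided by the measured value. The tabulated standard deviations appear to be population (divide-by-n) values. For the symmetric groove depth h, for example, the population value rounds to the tabulated 0.033 mm, whereas the sample (n − 1) value would be 0.040 mm.

```python
import statistics


def summarize(measured, detected):
    """Mean, population standard deviation and maximum absolute/relative errors."""
    mean = statistics.mean(detected)
    sd = statistics.pstdev(detected)                          # divide-by-n standard deviation
    abs_errors = [abs(d - measured) for d in detected]
    rel_errors = [100.0 * err / measured for err in abs_errors]  # percent
    return mean, sd, max(abs_errors), max(rel_errors)


if __name__ == "__main__":
    # Symmetric V-groove, groove depth h (values in mm from the table)
    detected_h = [13.070, 13.069, 13.139]
    mean, sd, max_abs, max_rel = summarize(13.065, detected_h)
    print(f"mean={mean:.3f} sd={sd:.3f} max_abs={max_abs:.3f} max_rel={max_rel:.2f}%")
    print(f"sample sd={statistics.stdev(detected_h):.3f}")
```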

| Position Parameters | Measured Value | Detected Value | Absolute Error | Maximum Absolute Error | Relative Error | Maximum Relative Error |
|---|---|---|---|---|---|---|
| e/mm | 3 | 2.996 | 0.004 | 0.006 | 0.13% | 0.13% |
| | 5 | 5.006 | 0.006 | | 0.12% | |
| | 8 | 8.004 | 0.004 | | 0.05% | |
| γ/° | 1.6 | 1.613 | 0.013 | 0.085 | 0.81% | 1.77% |
| | 3.2 | 3.158 | 0.042 | | 1.31% | |
| | 4.8 | 4.715 | 0.085 | | 1.77% | |
| H1/mm | 143 | 143.011 | 0.011 | 0.040 | 0.01% | 0.03% |
| | 148 | 147.960 | 0.040 | | 0.03% | |
| | 153 | 153.009 | 0.009 | | 0.01% | |

| Posture Parameters | Measured Value | Detected Value | Absolute Error | Maximum Absolute Error | Relative Error | Maximum Relative Error |
|---|---|---|---|---|---|---|
| α/° | −4.94 | −4.862 | 0.078 | 0.124 | 1.58% | 4.40% |
| | −2.56 | −2.549 | 0.011 | | 0.43% | |
| | 2.82 | 2.696 | 0.124 | | 4.40% | |
| β/° | −10.06 | −10.131 | 0.071 | 0.071 | 0.71% | 2.75% |
| | 2.18 | 2.240 | 0.060 | | 2.75% | |
| | 4.36 | 4.372 | 0.012 | | 0.28% | |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, C.; Zhu, Z.; Ke, Z.; Zhang, T. Internal Parameters Calibration of Vision Sensor and Application of High Precision Integrated Detection in Intelligent Welding Based on Plane Fitting. Sensors 2022, 22, 2117. https://doi.org/10.3390/s22062117
