An Edge Computing and Ambient Data Capture System for Clinical and Home Environments

Abstract

1. Introduction

2. Related Work

2.1. Wearables and Body Area Networks

- BAN sensors perform localized measurements. For example, a wrist-worn accelerometer measures the acceleration of the hand/wrist (local) and does not reliably capture overall body movement (global). A network of accelerometers can alleviate this problem, but at the cost of inconvenience to patients, who must then wear multiple sensors on their body over long periods.

- Data from BAN sensors are often corrupted or missing because of motion artifacts [36] (noise introduced into physiological signals by the patient's voluntary or involuntary bodily movements) and low patient compliance [37]. For example, bodily movement introduces artifacts into the ECG captured by a wearable, degrading the recording. Further, the wearer has to comply with a data acquisition schedule and follow the instructions diligently to generate good-quality data.

2.2. Non-Contact Health Monitoring Systems

2.3. Applications of Non-Contact Monitoring Systems

3. Materials and Methods

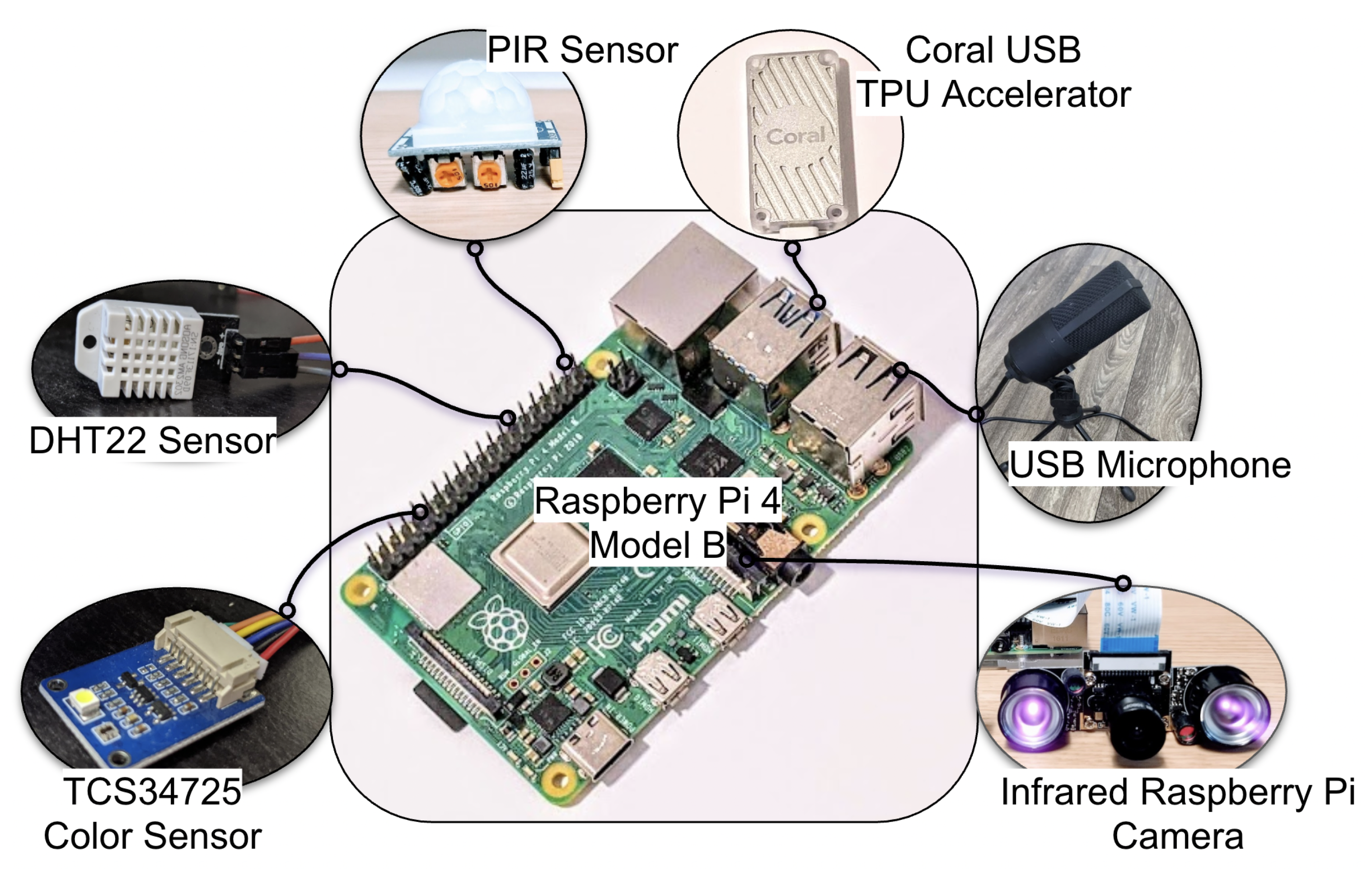

3.1. System Architecture

3.1.1. Raspberry Pi

3.1.2. PIR Sensor-Based Human Movement Detection

3.1.3. IR Camera-Based Human Movement Detection
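
The Abbreviations list includes global and local difference sums and pixel counts, which appear to be frame-differencing features for IR camera-based movement detection. Their exact definitions are not given here, so the following is only a minimal NumPy sketch under stated assumptions: a difference sum is the sum of absolute pixel changes between two consecutive grayscale frames, a pixel count is the number of pixels whose change exceeds a threshold, and the local versions repeat both quantities per cell of a grid. The function name `movement_features`, the threshold `thresh`, and the `grid` split are hypothetical.

```python
import numpy as np


def movement_features(prev_frame, curr_frame, thresh=25, grid=(2, 2)):
    """Frame-differencing movement features (hypothetical definitions).

    prev_frame, curr_frame: 2-D uint8 grayscale frames of equal shape.
    thresh: hypothetical per-pixel change threshold, in intensity levels.
    grid: hypothetical (rows, cols) split used for the local features.
    """
    # Absolute per-pixel change; cast to int32 to avoid uint8 wrap-around.
    diff = np.abs(curr_frame.astype(np.int32) - prev_frame.astype(np.int32))

    # Global features computed over the whole frame.
    global_diff_sum = int(diff.sum())
    global_pixel_count = int((diff > thresh).sum())

    # Local features: the same two quantities for each grid cell, row-major order.
    local_diff_sum, local_pixel_count = [], []
    for band in np.array_split(diff, grid[0], axis=0):
        for cell in np.array_split(band, grid[1], axis=1):
            local_diff_sum.append(int(cell.sum()))
            local_pixel_count.append(int((cell > thresh).sum()))

    return global_diff_sum, global_pixel_count, local_diff_sum, local_pixel_count
```

Splitting the frame into cells lets a downstream rule tell movement confined to one region (for example, near a bed) apart from movement across the whole scene; the grid size trades spatial detail against noise per cell.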

3.1.4. Human Pose and Activity Phenotyping

3.1.5. Privacy Preserving Audio Data Capture

3.1.6. Human Location Tracking via Bluetooth

3.1.7. Ambient Light Intensity Assessment

3.1.8. Temperature and Humidity Detection

3.1.9. Thermal Camera-Based Temperature Measurement

3.2. Data Fusion

3.3. Applications

3.3.1. Estimating Occupancy and Human Activity Phenotyping

3.3.2. Medical Equipment Alarm Classification Using Audio

3.3.3. Geolocation of Humans in a Built Environment

3.3.4. Ambient Light Logging

3.3.5. Ambient Temperature and Humidity Logging

4. Results

4.1. Estimating Occupancy and Human Activity Phenotyping

4.2. Medical Equipment Alarm Classification Using Audio

4.3. Geolocation of Humans in a Built Environment

4.4. Ambient Light Logging

4.5. Ambient Temperature and Humidity Logging

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| OR | Operating Room |
| ICU | Intensive Care Unit |
| PIR | Passive Infrared |
| USB | Universal Serial Bus |
| TPU | Tensor Processing Unit |
| RPi | Raspberry Pi |
| HIPAA | Health Insurance Portability and Accountability Act |
| IR | Infrared |
| ECG | Electrocardiogram |
| BAN | Body Area Network |
| RF | Radio Frequency |
| GPIO | General Purpose Input Output |
| NoIR | No Infrared (filter) |
| | Global Difference Sum |
| | Global -Pixel Count |
| | Local Difference Sum |
| | Local -Pixel Count |
| ISC | Internet Systems Consortium |
| STFT | Short-Time Fourier Transform |
| MFCC | Mel-Frequency Cepstral Coefficient |
| RSSI | Received Signal Strength Indicator |
| MAC | Media Access Control |
| LUX | Lumen per Square Meter |
| DC | Direct Current |
| RH | Relative Humidity |
| IQR | Interquartile Range |
| ISO | International Organization for Standardization |
| IEC | International Electrotechnical Commission |
| dBm | Decibels relative to one milliwatt |
| RMSE | Root Mean Square Error |
| SD | Standard Deviation |
| MCIEP | Mild Cognitive Impairment Executive Park |

References

1. Ma, A.J.; Rawat, N.; Reiter, A.; Shrock, C.; Zhan, A.; Stone, A.; Rabiee, A.; Griffin, S.; Needham, D.M.; Saria, S. Measuring patient mobility in the ICU using a novel noninvasive sensor. Crit. Care Med. 2017, 45, 630.

2. Davoudi, A.; Malhotra, K.R.; Shickel, B.; Siegel, S.; Williams, S.; Ruppert, M.; Bihorac, E.; Ozrazgat-Baslanti, T.; Tighe, P.J.; Bihorac, A.; et al. Intelligent ICU for autonomous patient monitoring using pervasive sensing and deep learning. Sci. Rep. 2019, 9, 8020.

3. Schmid, F.; Goepfert, M.S.; Reuter, D.A. Patient monitoring alarms in the ICU and in the operating room. Annu. Update Intensive Care Emerg. Med. 2013, 17, 359–371.

4. Ryherd, E.; Ackerman, J.; Zimring, C.; Okcu, S.; Persson Waye, K. Noise pollution in Hospitals: Impacts on staff. J. Clin. Outcomes Manag. 2012, 19, 1–10.

5. Choiniere, D.B. The effects of hospital noise. Nurs. Adm. Q. 2010, 34, 327–333.

6. Jaiswal, S.J.; Garcia, S.; Owens, R.L. Sound and light levels are similarly disruptive in ICU and non-ICU wards. J. Hosp. Med. 2017, 12, 798–804.

7. Wise, J. Higher nurse to patient ratio is linked to reduced risk of inpatient death. BMJ 2016, 352, i797.

8. Frisby, J.; Smith, V.; Traub, S.; Patel, V.L. Contextual computing: A Bluetooth based approach for tracking healthcare providers in the emergency room. J. Biomed. Inform. 2017, 65, 97–104.

9. Vankipuram, A.; Patel, V.L. Automated Location Tracking in Clinical Environments: A Review of Systems and Impact on Workflow Analysis. In Cognitive Informatics; Springer: Berlin/Heidelberg, Germany, 2019; pp. 235–253.

10. White, R.D. Recommended standards for the newborn ICU. J. Perinatol. 2007, 27, S4–S19.

11. Wilde-Frenz, J.; Schulz, H. Rate and distribution of body movements during sleep in humans. Percept. Mot. Ski. 1983, 56, 275–283.

12. Suresha, P.B.; Cakmak, A.S.; Da Poian, G.; Shah, A.J.; Vaccarino, V.; Bliwise, D.; Clifford, G.D. Obstructive Sleep Apnea Classification in a Mixed-Disorder Elderly Male Population Using a Low-Cost Off-Body Movement Sensor. In Proceedings of the 2019 IEEE-EMBS International Conference on Biomedical & Health Informatics, Chicago, IL, USA, 19–22 May 2019; pp. 1–4.

13. Liang, P.C.; Krause, P. Real-time indoor patient movement pattern telemonitoring with one-meter precision. In Proceedings of the 4th International Conference on Wireless Mobile Communication and Healthcare-Transforming Healthcare Through Innovations in Mobile and Wireless Technologies, Athens, Greece, 3–5 November 2014; pp. 141–144.

14. Baker, C.R.; Armijo, K.; Belka, S.; Benhabib, M.; Bhargava, V.; Burkhart, N.; Der Minassians, A.; Dervisoglu, G.; Gutnik, L.; Haick, M.B.; et al. Wireless sensor networks for home health care. In Proceedings of the 21st International Conference on Advanced Information Networking and Applications Workshops, Niagara Falls, ON, Canada, 21–23 May 2007; Volume 2, pp. 832–837.

15. Kim, S.H.; Jeong, J.M.; Hwang, M.T.; Kang, C.S. Development of an IoT-based atmospheric environment monitoring system. In Proceedings of the International Conference on Information and Communication Technology Convergence, Jeju Island, Korea, 18–20 October 2017; pp. 861–863.

16. Gederi, E.; Clifford, G.D. Fusion of image and signal processing for the detection of obstructive sleep apnea. In Proceedings of the 2012 IEEE-EMBS International Conference on Biomedical and Health Informatics, Hong Kong, China, 5–7 January 2012; pp. 890–893.

17. Wang, C.W.; Hunter, A.; Gravill, N.; Matusiewicz, S. Unconstrained video monitoring of breathing behavior and application to diagnosis of sleep apnea. IEEE Trans. Biomed. Eng. 2013, 61, 396–404.

18. Deng, F.; Dong, J.; Wang, X.; Fang, Y.; Liu, Y.; Yu, Z.; Liu, J.; Chen, F. Design and implementation of a noncontact sleep monitoring system using infrared cameras and motion sensor. IEEE Trans. Instrum. Meas. 2018, 67, 1555–1563.

19. Hegde, C.; Jiang, Z.; Suresha, P.B.; Zelko, J.; Seyedi, S.; Smith, M.A.; Wright, D.W.; Kamaleswaran, R.; Reyna, M.A.; Clifford, G.D. Autotriage—An open source edge computing raspberry pi-based clinical screening system. medRxiv 2020.

20. Rienzo, M.D.; Mukkamala, R. Wearable and Nearable Biosensors and Systems for Healthcare. Sensors 2021, 21, 1291.

21. Romdhane, R.; Mulin, E.; Derreumeaux, A.; Zouba, N.; Piano, J.; Lee, L.; Leroi, I.; Mallea, P.; David, R.; Thonnat, M.; et al. Automatic video monitoring system for assessment of Alzheimer’s disease symptoms. J. Nutr. Health Aging 2012, 16, 213–218.

22. Suzuki, S.; Matsui, T.; Kagawa, M.; Asao, T.; Kotani, K. An approach to a non-contact vital sign monitoring using dual-frequency microwave radars for elderly care. J. Biomed. Sci. Eng. 2013, 6, 704–711.

23. Matsui, T.; Yoshida, Y.; Kagawa, M.; Kubota, M.; Kurita, A. Development of a practicable non-contact bedside autonomic activation monitoring system using microwave radars and its clinical application in elderly people. J. Clin. Monit. Comput. 2013, 27, 351–356.

24. Tsumura, N.; Kanai, C.; Masui, K.; Mitsuhashi, R.; Nagasawa, T. Automatic classification of adult males with and without autism spectrum disorder by non-contact measurement of autonomic nervous system activation. Front. Psychiatry 2021, 12, 573.

25. Shajahan, A.; Culp, C.H.; Williamson, B. Effects of indoor environmental parameters related to building heating, ventilation, and air conditioning systems on patients’ medical outcomes: A review of scientific research on hospital buildings. Indoor Air 2019, 29, 161–176.

26. Melhado, M.A.; Hensen, J.; Loomans, M. Literature review of staff thermal comfort and patient thermal risks in operating rooms. In Proceedings of the 8th International healthy buildings conference, Lisboa, Portugal, 4–8 June 2006; pp. 11–14.

27. Tang, J.W. The effect of environmental parameters on the survival of airborne infectious agents. J. R. Soc. Interface 2009, 6, S737–S746.

28. DuBose, J.; Davis, R.G.; Campiglia, G.; Wilkerson, A.; Zimring, C. Lighting the patient room of the future: Evaluating different lighting conditions from the patient perspective. Health Environ. Res. Des. J. 2021, 14, 234–253.

29. Piwek, L.; Ellis, D.A.; Andrews, S.; Joinson, A. The Rise of Consumer Health Wearables: Promises and Barriers. PLoS Med. 2016, 13, e1001953.

30. Dunn, J.; Runge, R.; Snyder, M. Wearables and the medical revolution. Pers. Med. 2018, 15, 429–448.

31. Yetisen, A.K.; Martinez-Hurtado, J.L.; Ünal, B.; Khademhosseini, A.; Butt, H. Wearables in Medicine. Adv. Mater. 2018, 30, 1706910.

32. Chen, M.; Gonzalez, S.; Vasilakos, A.; Cao, H.; Leung, V.C.M. Body Area Networks: A Survey. Mob. Networks Appl. 2011, 16, 171–193.

33. Catherwood, P.A.; Bukhari, S.S.; Watt, G.; Whittow, W.G.; McLaughlin, J. Body-centric wireless hospital patient monitoring networks using body-contoured flexible antennas. IET Microwaves Antennas Propag. 2018, 12, 203–210.

34. Movassaghi, S.; Abolhasan, M.; Lipman, J.; Smith, D.; Jamalipour, A. Wireless Body Area Networks: A Survey. IEEE Commun. Surv. Tutor. 2014, 16, 1658–1686.

35. Monton, E.; Hernandez, J.; Blasco, J.; Herve, T.; Micallef, J.; Grech, I.; Brincat, A.; Traver, V. Body area network for wireless patient monitoring. IET Commun. 2008, 2, 215.

36. Gyselinckx, B.; Penders, J.; Vullers, R. Potential and challenges of body area networks for cardiac monitoring. J. Electrocardiol. 2007, 40, S165–S168.

37. Martin, L.R.; Williams, S.L.; Haskard, K.B.; DiMatteo, M.R. The challenge of patient adherence. Ther. Clin. Risk Manag. 2005, 1, 189.

38. Lin, F.; Zhuang, Y.; Song, C.; Wang, A.; Li, Y.; Gu, C.; Li, C.; Xu, W. SleepSense: A Noncontact and Cost-Effective Sleep Monitoring System. IEEE Trans. Biomed. Circuits Syst. 2017, 11, 189–202.

39. Liang, Q.; Xu, L.; Bao, N.; Qi, L.; Shi, J.; Yang, Y.; Yao, Y. Research on Non-Contact Monitoring System for Human Physiological Signal and Body Movement. Biosensors 2019, 9, 58.

40. Wang, F.; Skubic, M.; Rantz, M.; Cuddihy, P.E. Quantitative Gait Measurement With Pulse-Doppler Radar for Passive In-Home Gait Assessment. IEEE Trans. Biomed. Eng. 2014, 61, 2434–2443.

41. Lee, Y.S.; Pathirana, P.N.; Caelli, T.; Evans, R. Doppler radar in respiratory monitoring: Detection and analysis. In Proceedings of the International Conference on Control, Automation and Information Sciences, Nha Trang, Vietnam, 25–28 November 2013; pp. 224–228.

42. Gu, C.; Li, C.; Lin, J.; Long, J.; Huangfu, J.; Ran, L. Instrument-Based Noncontact Doppler Radar Vital Sign Detection System Using Heterodyne Digital Quadrature Demodulation Architecture. IEEE Trans. Instrum. Meas. 2010, 59, 1580–1588.

43. Drzymalski, D.; Ceruzzi, J.; Camann, W. Noise in the obstetric operating room. Int. J. Obstet. Anesth. 2017, 29, 87–88.

44. Keller, S.; Tschan, F.; Semmer, N.K.; Holzer, E.; Candinas, D.; Brink, M.; Beldi, G. Noise in the operating room distracts members of the surgical team. An observational study. World J. Surg. 2018, 42, 3880–3887.

45. Murthy, V.; Malhotra, S.; Bala, I.; Raghunathan, M. Detrimental effects of noise on anaesthetists. Can. J. Anaesth. 1995, 42, 608.

46. Shapiro, R.A.; Berland, T. Noise in the operating room. N. Engl. J. Med. 1972, 287, 1236–1238.

47. Way, T.J.; Long, A.; Weihing, J.; Ritchie, R.; Jones, R.; Bush, M.; Shinn, J.B. Effect of noise on auditory processing in the operating room. J. Am. Coll. Surg. 2013, 216, 933–938.

48. Guerlain, S.; Adams, R.B.; Turrentine, F.B.; Shin, T.; Guo, H.; Collins, S.R.; Calland, J.F. Assessing team performance in the operating room: Development and use of a “black-box” recorder and other tools for the intraoperative environment. J. Am. Coll. Surg. 2005, 200, 29–37.

49. Azevedo-Coste, C.; Pissard-Gibollet, R.; Toupet, G.; Fleury, É.; Lucet, J.C.; Birgand, G. Tracking Clinical Staff Behaviors in an Operating Room. Sensors 2019, 19, 2287.

50. Adib, F.; Kabelac, Z.; Katabi, D. Multi-person localization via RF body reflections. In Proceedings of the 12th USENIX Symposium on Networked Systems Design and Implementation (NSDI 15), Oakland, CA, USA, 4–6 May 2015; pp. 279–292.

51. Kianoush, S.; Savazzi, S.; Vicentini, F.; Rampa, V.; Giussani, M. Device-free RF human body fall detection and localization in industrial workplaces. IEEE Internet Things J. 2016, 4, 351–362.

52. Tian, Y.; Lee, G.H.; He, H.; Hsu, C.Y.; Katabi, D. RF-based fall monitoring using convolutional neural networks. Proc. ACM Interact. Mobile Wearable Ubiquitous Technol. 2018, 2, 1–24.

53. Aschoff, J. Circadian rhythms: Influences of internal and external factors on the period measured in constant conditions. Z. Tierpsychol. 1979, 49, 225–249.

54. Campbell, S.S.; Dawson, D. Enhancement of nighttime alertness and performance with bright ambient light. Physiol. Behav. 1990, 48, 317–320.

55. Honma, K.; Honma, S.; Kohsaka, M.; Fukuda, N. Seasonal variation in the human circadian rhythm: Dissociation between sleep and temperature rhythm. Am. J. Physiol. Regul. Integr. Comp. Physiol. 1992, 262, R885–R891.

56. Saper, C.B.; Lu, J.; Chou, T.C.; Gooley, J. The hypothalamic integrator for circadian rhythms. Trends Neurosci. 2005, 28, 152–157.

57. Okamoto-Mizuno, K.; Mizuno, K. Effects of thermal environment on sleep and circadian rhythm. J. Physiol. Anthropol. 2012, 31, 1–9.

58. Verceles, A.C.; Silhan, L.; Terrin, M.; Netzer, G.; Shanholtz, C.; Scharf, S.M. Circadian rhythm disruption in severe sepsis: The effect of ambient light on urinary 6-sulfatoxymelatonin secretion. Intensive Care Med. 2012, 38, 804–810.

59. Blume, C.; Garbazza, C.; Spitschan, M. Effects of light on human circadian rhythms, sleep and mood. Somnologie 2019, 23, 147–156.

60. Rashed, A.; Ibrahim, A.; Adel, A.; Mourad, B.; Hatem, A.; Magdy, M.; Elgaml, N.; Khattab, A. Integrated IoT medical platform for remote healthcare and assisted living. In Proceedings of the 2017 Japan-Africa Conference on Electronics, Communications and Computers (JAC-ECC), Alexandria, Egypt, 18–20 December 2017; pp. 160–163.

61. Tyndall, A.; Cardell-Oliver, R.; Keating, A. Occupancy estimation using a low-pixel count thermal imager. IEEE Sens. J. 2016, 16, 3784–3791.

62. Metwaly, A.; Queralta, J.P.; Sarker, V.K.; Gia, T.N.; Nasir, O.; Westerlund, T. Edge computing with embedded AI: Thermal image analysis for occupancy estimation in intelligent buildings. In Proceedings of the INTelligent Embedded Systems Architectures and Applications Workshop, New York, NY, USA, 13–18 October 2019; pp. 1–6.

63. Ahmad, J.; Larijani, H.; Emmanuel, R.; Mannion, M.; Javed, A. An intelligent real-time occupancy monitoring system using single overhead camera. In Proceedings of the SAI Intelligent Systems Conference, London, UK, 21–22 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 957–969.

64. Zerrouki, N.; Harrou, F.; Sun, Y.; Houacine, A. Vision-based human action classification using adaptive boosting algorithm. IEEE Sens. J. 2018, 18, 5115–5121.

65. Zhao, Y.; Tu, P.; Chang, M.C. Occupancy Sensing and Activity Recognition with Cameras and Wireless Sensors. In Proceedings of the 2nd Workshop on Data Acquisition To Analysis, New York, USA, 10 November 2019; pp. 1–6.

66. Park, B.R.; Choi, E.J.; Choi, Y.J.; Moon, J.W. Accuracy analysis of DNN-based pose-categorization model and activity-decision algorithm. Energies 2020, 13, 839.

67. Singh, S.; Aksanli, B. Non-intrusive presence detection and position tracking for multiple people using low-resolution thermal sensors. J. Sens. Actuator Netw. 2019, 8, 40.

68. Sato, A.; Nakajima, M.; Kohtake, N. Rapid BLE beacon localization with range-only EKF-SLAM using beacon interval constraint. In Proceedings of the 2019 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Pisa, Italy, 30 September–3 October 2019; pp. 1–8.

69. Martín, A.J.; Gordo, I.M.; Domínguez, J.J.G.; Torres-Sospedra, J.; Plaza, S.L.; Gómez, D.G. Affinity propagation clustering for older adults daily routine estimation. In Proceedings of the 2021 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Lloret de Mar, Spain, 29 November–2 December 2021; pp. 1–7.

70. Jain, D.; Mack, K.; Amrous, A.; Wright, M.; Goodman, S.; Findlater, L.; Froehlich, J.E. Homesound: An iterative field deployment of an in-home sound awareness system for deaf or hard of hearing users. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–12.

71. Cantarini, M.; Brocanelli, A.; Gabrielli, L.; Squartini, S. Acoustic features for deep learning-based models for emergency siren detection: An evaluation study. In Proceedings of the 2021 12th International Symposium on Image and Signal Processing and Analysis (ISPA), Zagreb, Croatia, 13–15 September 2021; pp. 47–53.

72. Wyatt, S.; Elliott, D.; Aravamudan, A.; Otero, C.E.; Otero, L.D.; Anagnostopoulos, G.C.; Smith, A.O.; Peter, A.M.; Jones, W.; Leung, S.; et al. Environmental sound classification with tiny transformers in noisy edge environments. In Proceedings of the 2021 IEEE 7th World Forum on Internet of Things (WF-IoT), New Orleans, LA, USA, 26–31 July 2021; pp. 309–314.

73. Suresha, P.B.; Clifford, G.D. Sedline-Root-EEG-Toolbox. Available online: https://github.com/cliffordlab/OS_Edge_Compute_Data_Capture_RPi (accessed on 24 February 2021).

74. Pi NoIR Camera V2. Available online: https://www.raspberrypi.org/products/pi-noir-camera-v2/ (accessed on 24 February 2021).

75. Papandreou, G.; Zhu, T.; Chen, L.C.; Gidaris, S.; Tompson, J.; Murphy, K. Personlab: Person pose estimation and instance segmentation with a bottom-up, part-based, geometric embedding model. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 269–286.

76. Papandreou, G.; Zhu, T.; Kanazawa, N.; Toshev, A.; Tompson, J.; Bregler, C.; Murphy, K. Towards accurate multi-person pose estimation in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4903–4911.

77. Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.E.; Sheikh, Y. OpenPose: Realtime multi-person 2D pose estimation using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 172–186.

78. Mathis, A.; Mamidanna, P.; Cury, K.M.; Abe, T.; Murthy, V.N.; Mathis, M.W.; Bethge, M. DeepLabCut: Markerless pose estimation of user-defined body parts with deep learning. Nat. Neurosci. 2018, 21, 1281–1289.

79. Python-Sounddevice. Available online: https://python-sounddevice.readthedocs.io/ (accessed on 24 February 2021).

80. Github: Python-Sounddevice. Available online: https://github.com/spatialaudio/python-sounddevice/ (accessed on 24 February 2021).

81. Librosa. Available online: https://librosa.org/doc/latest/index.html (accessed on 24 February 2021).

82. Multiscale Entropy. Available online: https://www.physionet.org/physiotools/mse/mse.c (accessed on 24 February 2021).

83. Bluepy. Available online: https://ianharvey.github.io/bluepy-doc/ (accessed on 24 February 2021).

84. Hegde, C.; Sameni, R.; Rad, A.B.; Clifford, G.D. Modeling Social Distancing and Quantifying Epidemic Disease Exposure in a Built Environment. IEEE J. Sel. Top. Signal Process. 2022.

85. Cheong, Y.Z.; Chew, W.J. The application of image processing to solve occlusion issue in object tracking. In MATEC Web of Conferences; EDP Sciences: Les Ulis, France, 2018; Volume 152, p. 3001.

86. Block, F.E., Jr.; Rouse, J.D.; Hakala, M.; Thompson, C.L. A proposed new set of alarm sounds which satisfy standards and rationale to encode source information. J. Clin. Monit. Comput. 2000, 16, 541.

87. ISO/IEC 60601-1-8; Patterson and Other Alarms in Medical Equipment. Available online: http://www.anaesthesia.med.usyd.edu.au/resources/alarms/ (accessed on 2 June 2020).

88. Chen, T.; Guestrin, C. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

89. XGBoost. Available online: https://xgboost.readthedocs.io/en/latest/index.html (accessed on 24 February 2021).

90. Takahashi, K.; Yamamoto, K.; Kuchiba, A.; Koyama, T. Confidence interval for micro-averaged F1 and macro-averaged F1 scores. Appl. Intell. 2021, 52, 4961–4972.

91. Afouras, T.; Chung, J.S.; Zisserman, A. The Conversation: Deep Audio-Visual Speech Enhancement. In Proceedings of the Interspeech 2018 19th Annual Conference of the International Speech Communication Association, Hyderabad, India, 2–6 September 2018.

92. Wallace, C.; Robins, J.; Alvord, L.; Walker, J. The effect of earplugs on sleep measures during exposure to simulated intensive care unit noise. Am. J. Crit. Care 1999, 8, 210–219.

93. Weinhouse, G.L.; Watson, P.L. Sedation and sleep disturbances in the ICU. Crit. Care Clin. 2009, 25, 539–549.

94. Mitchell, A.; Gudeczauskas, K.; Therrien, A.; Zauher, A. Bedside reporting is a key to communication. J. Healthc. Commun. 2018, 3, 13.

| Start Time (s) | End Time (s) | Duration (s) | Action |
|---|---|---|---|
| 0 | 30 | 30 | One person standing |
| 30 | 60 | 30 | Two people standing |
| 60 | 90 | 30 | Three people standing |
| 90 | 120 | 30 | Three people exercising |
| 120 | 150 | 30 | Two people exercising |
| 150 | 180 | 30 | One person exercising |
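
To score occupancy estimates against the scripted protocol in the table above, the protocol can be expanded into per-second ground-truth labels. The following is a minimal sketch, assuming one head-count prediction per second (for example, the number of people a pose estimator detects in the frame sampled at that second) and half-open [start, end) intervals; `ground_truth`, `occupancy_accuracy`, and the prediction format are hypothetical and are not the evaluation code used in the paper.

```python
# Scripted protocol from the table: (start_s, end_s, people, activity),
# interpreted as half-open [start, end) intervals.
PROTOCOL = [
    (0, 30, 1, "standing"),
    (30, 60, 2, "standing"),
    (60, 90, 3, "standing"),
    (90, 120, 3, "exercising"),
    (120, 150, 2, "exercising"),
    (150, 180, 1, "exercising"),
]


def ground_truth(t):
    """Return the scripted (people, activity) at time t in seconds."""
    for start, end, people, activity in PROTOCOL:
        if start <= t < end:
            return people, activity
    raise ValueError(f"t={t} s is outside the protocol")


def occupancy_accuracy(predicted_counts):
    """Fraction of per-second head-count predictions that match the script.

    predicted_counts[t] is the (hypothetical) predicted head count at second t.
    """
    hits = sum(1 for t, n in enumerate(predicted_counts) if n == ground_truth(t)[0])
    return hits / len(predicted_counts)
```

For instance, a predictor that always reports one person matches only the first and last 30 s segments, giving an accuracy of 60/180. The same lookup can return the scripted activity label for scoring standing versus exercising.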

| Musical Note | Fundamental Frequency (Hz) | Count |
|---|---|---|
| | | 207 |
| | | 43 |
| | | 32 |
| | | 46 |
| | | 27 |
| | | 66 |
| | | 45 |
| | | 17 |
| | | 81 |
| E | - | 969 |
| T | - | 432 |
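
The table above groups alarm sounds by musical note and fundamental frequency. As an illustration only, and not the alarm classifier described in the paper, the following NumPy sketch estimates a tone's dominant frequency from the peak of its windowed magnitude spectrum and maps it to the nearest equal-tempered note, assuming A4 = 440 Hz. The function names are hypothetical. Real alarm tones contain harmonics, so the strongest spectral peak is not guaranteed to be the fundamental.

```python
import numpy as np

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def estimate_f0(signal, fs):
    """Estimate the dominant frequency (Hz) as the peak of the magnitude spectrum."""
    windowed = signal * np.hanning(len(signal))  # taper to reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(windowed))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    return float(freqs[np.argmax(spectrum[1:]) + 1])  # skip the DC bin


def nearest_note(f0, a4=440.0):
    """Map a frequency to the nearest equal-tempered note name, e.g. 440 Hz -> 'A4'."""
    midi = int(round(69 + 12 * np.log2(f0 / a4)))  # MIDI note 69 is A4
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"
```

With a 1 s recording the FFT bins are 1 Hz apart, which is fine enough to separate adjacent semitones at alarm-tone frequencies; shorter windows coarsen the resolution and may need interpolation around the spectral peak.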

| Start Time (s) | End Time (s) | Duration (s) | Action |
|---|---|---|---|
| 0 | 146 | 146 | Stay in room 1 |
| 146 | 159 | 13 | Move from room 1 to room 2 |
| 159 | 268 | 109 | Stay in room 2 |
| 268 | 286 | 18 | Move from room 2 to room 3 |
| 286 | 470 | 184 | Stay in room 3 |
| 470 | 480 | 10 | Move from room 3 to room 1 |
| 480 | 600 | 120 | Stay in room 1 |
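
The method comparison table lists a softmax-based method for room-level BLE geolocation across three rooms. The following is a minimal sketch of one way such a classifier could work, not the paper's implementation. It assumes one BLE receiver per room reports the RSSI (in dBm) of the tracked person's device and that a stronger signal means closer proximity. The `temperature` scale, the receiver layout, and all function names are hypothetical. The walking protocol from the table above is encoded as ground truth so that predictions can be compared second by second.

```python
import math

# Room labels for the three-room walk; one BLE receiver per room is assumed.
ROOMS = ["room 1", "room 2", "room 3"]

# Scripted protocol from the table above, as half-open [start, end) intervals in seconds.
PROTOCOL = [
    (0, 146, "room 1"),
    (146, 159, "moving"),
    (159, 268, "room 2"),
    (268, 286, "moving"),
    (286, 470, "room 3"),
    (470, 480, "moving"),
    (480, 600, "room 1"),
]


def true_location(t):
    """Scripted location (room label or 'moving') at time t in seconds."""
    for start, end, label in PROTOCOL:
        if start <= t < end:
            return label
    raise ValueError(f"t={t} s is outside the protocol")


def room_probabilities(rssi_dbm, temperature=5.0):
    """Softmax over per-room RSSI values in dBm (hypothetical scoring rule).

    A stronger (less negative) RSSI yields a higher probability; `temperature`
    (in dB) is a hypothetical scale where smaller values give sharper outputs.
    """
    scaled = [r / temperature for r in rssi_dbm]
    peak = max(scaled)  # subtract the maximum before exp() for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def predict_room(rssi_dbm, temperature=5.0):
    """Most probable room for one set of RSSI readings, ordered as ROOMS."""
    probs = room_probabilities(rssi_dbm, temperature)
    return ROOMS[probs.index(max(probs))]
```

Dividing by `temperature` controls how strongly a few dB of difference translates into probability; when all receivers report the same RSSI, the output is uniform across rooms. Transit intervals labeled "moving" would typically be excluded or scored separately.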

| Day | Time (HH:MM) | Action |
|---|---|---|
| Clear Day | 07:32 | Sunrise |
| | 18:10 | Sunset |
| Night-1 | 18:55 | Lights-ON |
| | 23:45 | Lights-OFF |
| | 01:00 | Data upload start |
| | 02:00 | Restart data collection |
| Cloudy Day | 07:31 | Sunrise |
| | 16:58 | Lights-ON |
| | 18:11 | Sunset |
| Night-2 | 23:42 | Lights-OFF |
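
The timeline above lists sunrise, sunset, and lights-on/off events against which an ambient light log can be checked. The following is a minimal sketch, assuming the log is a time-ordered list of (time, lux) samples, that recovers ON/OFF transitions with a single lux threshold. The function name, log format, and threshold value are hypothetical, and the lux values in the usage below are synthetic.

```python
def light_transitions(samples, threshold=50.0):
    """Return (time, 'ON' or 'OFF') events where the lux level crosses a threshold.

    samples: time-ordered (time_hhmm, lux) pairs (hypothetical log format).
    threshold: hypothetical lux level separating dark from lit conditions.
    """
    events = []
    prev_lit = None
    for t, lux in samples:
        lit = lux >= threshold
        # Record an event only when the lit/dark state changes between samples.
        if prev_lit is not None and lit != prev_lit:
            events.append((t, "ON" if lit else "OFF"))
        prev_lit = lit
    return events


# Synthetic samples around the Night-1 lights-on and lights-off times.
log = [("18:50", 2.0), ("18:55", 300.0), ("23:40", 310.0), ("23:45", 1.0)]
print(light_transitions(log))
```

A single threshold cannot by itself distinguish sunrise from lights being switched on; separating daylight from artificial light would need additional cues, such as the time of day or the rate of change of the lux level.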

| Task | Reference | Sensor | Method | # People | Accuracy |
|---|---|---|---|---|---|
| Human occupancy estimation | Tyndall et al. [61] | Thermal Imager | KNN | 3 | |
| | Ahmad et al. [63] | RGB Camera | RNN | 4 | |
| | Metwaly et al. [62] | Thermal Imager | DNN | 5 | |
| | Our work | RGB Camera | RPi-PoseNet | 3 | |
| Human activity recognition | Zerrouki et al. [64] | RGB Camera | AdaBoost | 17 | (6-Activities) ¹ |
| | Singh et al. [67] | Thermal Imager | Random Forest | 3 | (4-Activities) |
| | Zhao et al. [65] | RGB Camera | CNN | NA | (3-Activities) ² |
| | Park et al. [66] | RGB Camera | DNN | 6 | (4-Activities) |
| | Our work | RGB Camera | RPi-PoseNet | 3 | (2-Activities) |
| Geolocation of humans in a built environment | Sato et al. [68] | BLE | RO-EKF-SLAM | 6 | Mean error (m) |
| | Martín et al. [69] | BLE+IMU | KNN | 4 | (6-Rooms) |
| | Our work | BLE | Softmax | 1 | (3-Rooms) |

| Setting | | |
|---|---|---|
| Without Speech | 0.98 | 0.97 |
| With Speech Mixing | 0.93 | 0.91 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Suresha, P.B.; Hegde, C.; Jiang, Z.; Clifford, G.D. An Edge Computing and Ambient Data Capture System for Clinical and Home Environments. Sensors 2022, 22, 2511. https://doi.org/10.3390/s22072511

Suresha PB, Hegde C, Jiang Z, Clifford GD. An Edge Computing and Ambient Data Capture System for Clinical and Home Environments. Sensors. 2022; 22(7):2511. https://doi.org/10.3390/s22072511

Chicago/Turabian Style: Suresha, Pradyumna Byappanahalli, Chaitra Hegde, Zifan Jiang, and Gari D. Clifford. 2022. "An Edge Computing and Ambient Data Capture System for Clinical and Home Environments" Sensors 22, no. 7: 2511. https://doi.org/10.3390/s22072511

APA Style: Suresha, P. B., Hegde, C., Jiang, Z., & Clifford, G. D. (2022). An Edge Computing and Ambient Data Capture System for Clinical and Home Environments. Sensors, 22(7), 2511. https://doi.org/10.3390/s22072511