Utility of Cognitive Neural Features for Predicting Mental Health Behaviors

Abstract

1. Introduction

2. Materials and Methods

2.1. Overview of the Dataset

2.2. Dataset Acquisition

2.3. Experimental Design

- Selective Attention & Response Inhibition. Participants accessed a game named Go Green, modeled after the standard test of variables of attention [34]. In this simple two-block task, colored rockets were presented in either the upper or lower central visual field. Participants were instructed to respond to green rocket targets and to withhold their response to distracting rockets of five other isoluminant colors (shades of cyan, blue, purple, pink, orange). Each trial consisted of a central fixation ‘+’ cue for 500 msec, followed by a target/non-target stimulus of 100 msec duration and a blank response window of up to 1 s. When the participant responded, or at the end of 1 s if no response was made, a happy or sad face emoticon was presented for 200 msec to signal response accuracy, followed by a 500 msec inter-trial interval (ITI). To reinforce fast and accurate responding, two happy face emoticons were presented simultaneously during the feedback period when an accurate response was made within 100–400 msec. Each task block had 90 trials and lasted 5 min, with target/non-target trials shuffled in each block. A brief practice period of 4 trials preceded the main task blocks. Summary total block accuracy was provided to participants at the end of each block as a series of happy face emoticons (up to 10 emoticons) in this and in all assessments described below. In the first task block, green rocket targets were sparse (33% of trials), hence selective attention was engaged as in a typical continuous performance attention task. In the second block, green rocket targets were frequent (67% of trials), hence participants developed a prepotent impulse to respond. Because individuals had to intermittently suppress a motor response to sparse non-targets (33% of trials), this block provided a metric of response inhibition.
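The block composition and feedback rule described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function names `make_block` and `feedback` are hypothetical, 33% and 67% of 90 trials are rounded to 30 and 60 targets, and the 100–400 msec window is treated as inclusive at both ends.

```python
import random

FAST_WINDOW_MS = (100, 400)  # fast-response window stated in the text (assumed inclusive)


def make_block(n_trials=90, target_fraction=1 / 3, seed=0):
    """Return a shuffled list of booleans; True marks a green (target) trial."""
    n_targets = round(n_trials * target_fraction)  # assumption: 33% of 90 -> 30 targets
    trials = [True] * n_targets + [False] * (n_trials - n_targets)
    random.Random(seed).shuffle(trials)
    return trials


def feedback(is_target, responded, rt_ms=None):
    """Map one trial outcome to the emoticon feedback described in the text."""
    correct = responded == is_target  # respond to targets, withhold to non-targets
    if not correct:
        return "sad"
    low, high = FAST_WINDOW_MS
    fast = responded and rt_ms is not None and low <= rt_ms <= high
    return "two_happy" if fast else "happy"


sparse_block = make_block(target_fraction=1 / 3)    # block 1: selective attention
frequent_block = make_block(target_fraction=2 / 3)  # block 2: response inhibition
print(sum(sparse_block), sum(frequent_block))
```

A correctly withheld response to a non-target yields a single happy face here, because the fast-response bonus only applies when a response was actually made.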

- Working Memory. Participants accessed a game named Lost Star, which is based on the standard visuo-spatial Sternberg task [35]. Participants were presented with a set of test objects (stars); they were instructed to maintain the visuo-spatial locations of the test objects in working memory for a 3 s delay period, and then responded whether a probe object (star) was or was not located in the same place as one of the objects in the original test set. We implemented this task at the threshold perceptual span for each individual, i.e., the number of star stimuli that the individual could correctly encode without any working memory delay. For this, a brief perceptual thresholding period preceded the main working memory task, allowing for an equivalent perceptual load to be investigated across participants. During thresholding, the set size of the test stars was progressively increased from 1 to 8 stars based on accurate performance: 4 trials were presented at each set size, and 100% performance accuracy led to an increment in set size; <100% performance led to one 4-trial repeat of the same set size, and any further inaccurate performance aborted the thresholding phase. The final set size at which 100% accuracy was obtained was designated as the individual’s perceptual threshold. Post-thresholding, the working memory task consisted of 48 trials presented over 2 blocks. Each trial began with a central fixation ‘+’ for 500 msec followed by a 1 s presentation of the test set of star objects located at various positions on the screen, then a 3 s working memory delay period, followed by a single probe star object for 1 s, and finally a response time window of up to 1 s in which participants made a yes/no choice whether the probe star had a matching location to the previously presented test set. A happy/sad face emoticon was used to provide accuracy feedback for 200 msec followed by a 500 msec ITI. Summary accuracy was also shown between blocks. The total task duration was 6 min.
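The thresholding procedure above is a small staircase, and a minimal sketch may make its stopping rule easier to follow. The code assumes one reading of the text: a single 4-trial repeat is allowed at each set size, and failing that repeat ends the procedure. The callback `run_trials` and the helper `simulated_participant` are hypothetical names used only for illustration.

```python
def perceptual_threshold(run_trials, max_set_size=8, trials_per_level=4):
    """Return the largest set size passed with 100% accuracy (0 if none).

    run_trials(set_size, n) is a hypothetical callback that presents n trials
    at the given set size and returns the number of correct responses.
    """
    threshold = 0
    for set_size in range(1, max_set_size + 1):
        passed = False
        for _attempt in range(2):  # first attempt plus one 4-trial repeat
            if run_trials(set_size, trials_per_level) == trials_per_level:
                passed = True
                break
        if not passed:
            break  # further inaccurate performance aborts thresholding
        threshold = set_size
    return threshold


def simulated_participant(true_span):
    """Toy participant: perfect up to true_span, one error per level beyond it."""
    def run_trials(set_size, n):
        return n if set_size <= true_span else n - 1
    return run_trials


print(perceptual_threshold(simulated_participant(5)))
```

The returned value is then the set size used for the main working memory task, so each participant is tested at an individually matched perceptual load.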

- Interference Processing. Participants accessed a game named Middle Fish, an adaptation of the Flanker task [36], which has been extensively used to study interference/distractor processing. Participants were instructed to respond to the direction of a centrally located target (middle fish) while ignoring all flanking distractor fish. In congruent trials the flanker fish faced the same direction as the central fish, whereas in incongruent trials they faced the opposite direction. A brief practice of 4 trials preceded the main task of 96 trials presented over two blocks. Half of the trials had congruent distractors and half had incongruent distractors. To retain attention, the array of fish was randomly presented in the upper or lower visual field in an equivalent number of trials. In each trial, a central fixation ‘+’ appeared for 500 msec followed by a 100 msec stimulus array of fish and up to a 1 s response window in which participants responded left/right as per the direction of the middle fish. Subsequently a happy/sad face emoticon was presented for 200 msec for accuracy feedback followed by a 500 msec ITI. Summary accuracy was shown between blocks and the total task duration was 8 min.
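The congruency rule and response scoring described above can be represented compactly. This is a hedged sketch: the class `FlankerTrial` and its field names are hypothetical, and a missed response window is modeled as `None`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlankerTrial:
    target: str    # direction of the middle fish: "left" or "right"
    flankers: str  # direction shared by all flanking fish
    field: str     # "upper" or "lower" visual field

    @property
    def congruent(self) -> bool:
        """Congruent trials have flankers facing the same way as the target."""
        return self.flankers == self.target

    def score(self, response) -> bool:
        """True for a correct response; None (no response within 1 s) is incorrect."""
        return response == self.target


trial = FlankerTrial(target="left", flankers="right", field="upper")
print(trial.congruent, trial.score("left"))
```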

- Emotional Interference Processing. We embedded this task in the BrainE assessment suite given ample evidence that emotions impact cognitive control processes [37,38,39]. Participants accessed a game named Face Off, adapted from prior studies of attention bias in emotional contexts [40,41,42]. We used a standardized set of culturally diverse faces from the NimStim database for this assessment [43]. We used an equivalent number of male and female faces, each shown with one of four emotional expressions (neutral, happy, sad, or angry) in an equivalent number of trials. An arrow was superimposed on the face in each trial, occurring either in the upper or lower central visual field in an equal number of trials, and participants responded to the direction of the arrow (left/right). Participants completed 144 trials presented over three equipartitioned blocks with a shuffled but equivalent number of emotion trials in each block; a practice set of 4 trials preceded the main task. Each trial was initiated with a central fixation ‘+’ for 500 msec followed by a face stimulus with a superimposed arrow of 300 msec duration. As in other tasks, participants responded within an ensuing 1 s response window, followed by a happy/sad emoticon feedback for accuracy (200 msec) and a 500 msec ITI. Summary block accuracy feedback was provided, and the total task duration was 10 min.
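One way to build trial lists that satisfy the balancing constraints above is sketched below. The code makes assumptions beyond the text: emotion, arrow position, and face sex are fully crossed within every block (the text only requires equal emotion counts per block), the arrow direction is drawn at random, and the name `make_face_off_blocks` is hypothetical.

```python
import itertools
import random
from collections import Counter

EMOTIONS = ("neutral", "happy", "sad", "angry")
FIELDS = ("upper", "lower")
SEXES = ("male", "female")


def make_face_off_blocks(n_blocks=3, trials_per_block=48, seed=0):
    """Return n_blocks shuffled blocks, each balanced over emotion x field x sex."""
    rng = random.Random(seed)
    cells = list(itertools.product(EMOTIONS, FIELDS, SEXES))  # 16 design cells
    reps, remainder = divmod(trials_per_block, len(cells))
    if remainder:
        raise ValueError("trials_per_block must be a multiple of 16")
    blocks = []
    for _ in range(n_blocks):
        block = [
            {
                "emotion": emotion,
                "field": field,
                "face_sex": sex,
                # Assumption: arrow direction is random, not balanced.
                "arrow": rng.choice(("left", "right")),
            }
            for (emotion, field, sex) in cells
            for _ in range(reps)
        ]
        rng.shuffle(block)  # shuffled order within each block
        blocks.append(block)
    return blocks


blocks = make_face_off_blocks()
print(Counter(t["emotion"] for t in blocks[0]))
```

With the defaults this gives 3 blocks of 48 trials (144 in total), with 12 trials per emotion in each block.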

2.4. Neural Processing Methods

2.5. Predicting Mental Health Symptoms Using Logistic Regression

2.6. Logistic Regression

- a. The model can mitigate overfitting or accidental fitting [54]. Furthermore, we apply stratified cross-validation during model testing, which also helps to avoid overfitting.

- b. The model can mitigate the error of estimated coefficients and make logistic regression predictions despite multicollinearity. The coefficient variance and prediction error of ridge regression are smaller than those of simple regression even when multicollinearity, i.e., a state in which multiple features are strongly correlated, occurs [55]. Therefore, we believe the model is able to predict stably even if many features are correlated.
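The effect of the ridge (L2) penalty on strongly correlated features can be illustrated with a small, self-contained sketch. It is not the pipeline used in this study: the solver is plain gradient descent, the data are made up for illustration, and the names `fit_ridge_logistic`, `predict_proba`, and the penalty weight `lam` are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_ridge_logistic(X, y, lam, lr=0.1, n_iter=2000):
    """Minimize mean log-loss + (lam / (2 n)) * ||w||^2 by gradient descent."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(n_iter):
        grad_w = [lam * wj / n for wj in w]  # gradient of the L2 penalty
        grad_b = 0.0                         # the intercept is not penalized
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j] / n
            grad_b += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict_proba(w, b, x):
    """Predicted probability of the positive class for one sample."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def norm(w):
    return math.sqrt(sum(v * v for v in w))


# Toy data (illustrative only): the second feature nearly duplicates the first.
x1 = [-3.0, -2.0, -1.0, -0.5, 0.5, 1.0, 2.0, 3.0]
X = [[v, v + 0.01 * (-1) ** i] for i, v in enumerate(x1)]
y = [0, 0, 0, 1, 0, 1, 1, 1]

w_plain, b_plain = fit_ridge_logistic(X, y, lam=0.0)
w_ridge, b_ridge = fit_ridge_logistic(X, y, lam=10.0)
print(norm(w_plain), norm(w_ridge))
```

In this toy setting the penalized fit ends with a smaller coefficient norm than the unpenalized one while still separating clearly positive from clearly negative inputs, which is the stabilizing behavior the text attributes to ridge regularization.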

2.7. Data Augmentation

2.7.1. Statistical Measures

- (1) We obtained 7 × 5 × 3 = 105 new features by computing these seven measures across ROIs.

- (2) We generated 7 × 68 × 3 = 1428 features by computing the measures across frequency bands.

- (3) We generated 7 × 68 × 5 = 2380 features by computing the measures across tasks.
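The three feature counts above follow from collapsing one axis of a 68 × 5 × 3 feature array at a time and applying seven summary measures to each collapsed vector. The sketch below reproduces the counts. The seven measures listed in `MEASURES` are hypothetical stand-ins, since this section does not name them, and the axes of size 5 and 3 are left unlabeled: following the counts in the list, item (2) collapses the axis of size 5 and item (3) the axis of size 3.

```python
import itertools
import statistics

# Hypothetical stand-ins for the seven statistical measures.
MEASURES = {
    "mean": statistics.fmean,
    "median": statistics.median,
    "stdev": statistics.pstdev,
    "variance": statistics.pvariance,
    "min": min,
    "max": max,
    "range": lambda values: max(values) - min(values),
}


def summarize_across(data, shape, axis):
    """Collapse one axis of an index-tuple -> value mapping with every measure."""
    kept_axes = [a for a in range(len(shape)) if a != axis]
    features = {}
    for kept in itertools.product(*(range(shape[a]) for a in kept_axes)):
        values = []
        for i in range(shape[axis]):
            index = list(kept)
            index.insert(axis, i)  # rebuild the full index tuple
            values.append(data[tuple(index)])
        for name, measure in MEASURES.items():
            features[(name, axis, kept)] = measure(values)
    return features


SHAPE = (68, 5, 3)  # 68 ROIs and two further axes of sizes 5 and 3
# Placeholder values; real inputs would be the spectral activation features.
data = {
    index: float((7 * index[0] + 3 * index[1] + index[2]) % 11)
    for index in itertools.product(*(range(s) for s in SHAPE))
}

counts = [len(summarize_across(data, SHAPE, axis)) for axis in range(3)]
print(counts)
```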

2.7.2. Product of Feature Pairs

2.7.3. Log Transform

2.8. Feature Selection

2.9. Oversampling

2.9.1. SMOTE

2.9.2. Adding Gaussian Noise

2.10. Evaluation

2.10.1. Stratified Cross-Validation

2.10.2. Sensitivity and Specificity

2.11. Assessing “Hub-like” Spectral Activations That Predict Mental Health Symptom Scores

2.12. Current Flow Centralities

3. Results

3.1. Prediction of Mental Health Symptoms

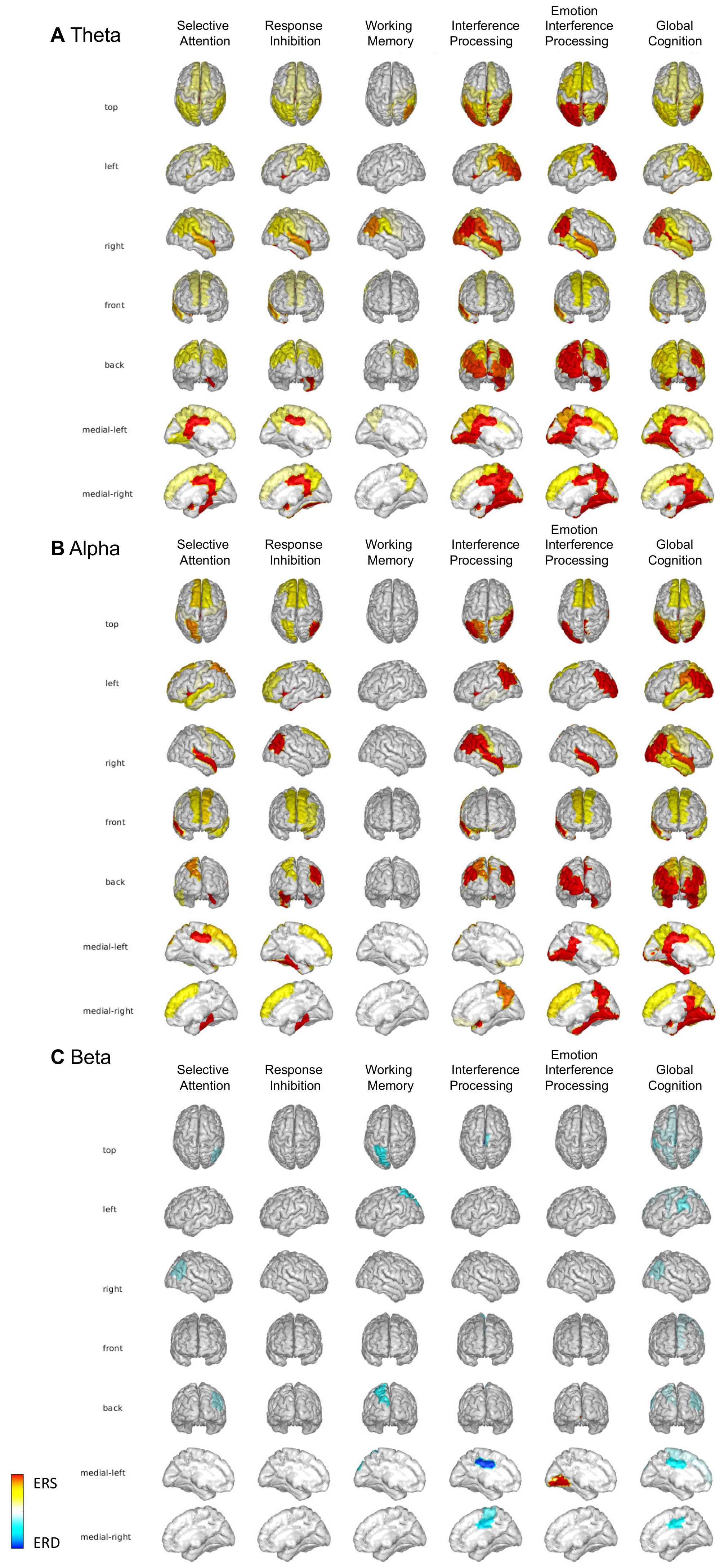

3.2. Different “Hub-like” Spectral Activations during Cognitive Tasks Predict Mental Health Symptoms

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix B

Appendix C

References

- Friedrich, M.J. Depression is the leading cause of disability around the world. JAMA 2017, 317, 1517.

- Kessler, R.C.; Adler, L.; Barkley, R.; Biederman, J.; Conners, C.K.; Demler, O.; Faraone, S.V.; Greenhill, L.L.; Howes, M.J.; Secnik, K.; et al. The Prevalence and Correlates of Adult ADHD in the United States: Results From the National Comorbidity Survey Replication. Am. J. Psychiatry 2006, 163, 716–723.

- Insel, T.R. Assessing the Economic Costs of Serious Mental Illness. Am. J. Psychiatry 2008, 165, 663–665.

- Mishra, J.; Gazzaley, A. Closed-Loop Rehabilitation of Age-Related Cognitive Disorders. In Seminars in Neurology; Thieme Medical Publishers: New York, NY, USA, 2014; pp. 584–590.

- Millan, M.J.; Agid, Y.; Brüne, M.; Bullmore, E.; Carter, C.S.; Clayton, N.; Connor, R.; Davis, S.; Deakin, J.; DeRubeis, R.; et al. Cognitive dysfunction in psychiatric disorders: Characteristics, causes and the quest for improved therapy. Nat. Rev. Drug Discov. 2012, 11, 141–168.

- Price, J.L.; Drevets, W.C. Neural circuits underlying the pathophysiology of mood disorders. Trends Cogn. Sci. 2012, 16, 61–71.

- Drysdale, A.T.; Grosenick, L.; Downar, J.; Dunlop, K.; Mansouri, F.; Meng, Y.; Fetcho, R.N.; Zebley, B.; Oathes, D.J.; Etkin, A.; et al. Resting-state connectivity biomarkers define neurophysiological subtypes of depression. Nat. Med. 2017, 23, 28–38.

- Wu, W.; Zhang, Y.; Jiang, J.; Lucas, M.V.; Fonzo, G.A.; Rolle, C.E.; Cooper, C.; Chin-Fatt, C.; Krepel, N.; Cornelssen, C.A.; et al. An electroencephalographic signature predicts antidepressant response in major depression. Nat. Biotechnol. 2020, 38, 439–447.

- Al-Ezzi, A.; Kamel, N.; Faye, I.; Gunaseli, E. Analysis of Default Mode Network in Social Anxiety Disorder: EEG Resting-State Effective Connectivity Study. Sensors 2021, 21, 4098.

- Shadli, S.M.; Glue, P.; McIntosh, J.; McNaughton, N. An improved human anxiety process biomarker: Characterization of frequency band, personality and pharmacology. Transl. Psychiatry 2015, 5, e699.

- McVoy, M.; Lytle, S.; Fulchiero, E.; Aebi, M.E.; Adeleye, O.; Sajatovic, M. A systematic review of quantitative EEG as a possible biomarker in child psychiatric disorders. Psychiatry Res. 2019, 279, 331–344.

- de Aguiar Neto, F.S.; Rosa, J.L.G. Depression biomarkers using non-invasive EEG: A review. Neurosci. Biobehav. Rev. 2019, 105, 83–93.

- Mehta, T.; Mannem, N.; Yarasi, N.K.; Bollu, P.C. Biomarkers for ADHD: The Present and Future Directions. Curr. Dev. Disord. Rep. 2020, 7, 85–92.

- Müller, A.; Vetsch, S.; Pershin, I.; Candrian, G.; Baschera, G.-M.; Kropotov, J.D.; Kasper, J.; Rehim, H.A.; Eich, D. EEG/ERP-based biomarker/neuroalgorithms in adults with ADHD: Development, reliability, and application in clinical practice. World J. Biol. Psychiatry 2019, 21, 172–182.

- de Bardeci, M.; Ip, C.T.; Olbrich, S. Deep learning applied to electroencephalogram data in mental disorders: A systematic review. Biol. Psychol. 2021, 162, 108117.

- Safayari, A.; Bolhasani, H. Depression diagnosis by deep learning using EEG signals: A Systematic Review. Med. Nov. Technol. Devices 2021, 12, 100102.

- Shatte, A.B.R.; Hutchinson, D.M.; Teague, S.J. Machine learning in mental health: A scoping review of methods and applications. Psychol. Med. 2019, 49, 1426–1448.

- Aftanas, L.; Golocheikine, S. Human anterior and frontal midline theta and lower alpha reflect emotionally positive state and internalized attention: High-resolution EEG investigation of meditation. Neurosci. Lett. 2001, 310, 57–60.

- Clayton, M.S.; Yeung, N.; Kadosh, R.C. The roles of cortical oscillations in sustained attention. Trends Cogn. Sci. 2015, 19, 188–195.

- Putman, P.; Verkuil, B.; Arias-Garcia, E.; Pantazi, I.; Van Schie, C. EEG theta/beta ratio as a potential biomarker for attentional control and resilience against deleterious effects of stress on attention. Cogn. Affect. Behav. Neurosci. 2014, 14, 782–791.

- Beltrán, D.; Morera, Y.; García-Marco, E.; de Vega, M. Brain Inhibitory Mechanisms Are Involved in the Processing of Sentential Negation, Regardless of Its Content. Evidence From EEG Theta and Beta Rhythms. Front. Psychol. 2019, 10, 1782.

- Chambers, C.D.; Garavan, H.; Bellgrove, M.A. Insights into the neural basis of response inhibition from cognitive and clinical neuroscience. Neurosci. Biobehav. Rev. 2009, 33, 631–646.

- Muralidharan, V.; Yu, X.; Cohen, M.X.; Aron, A.R. Preparing to Stop Action Increases Beta Band Power in Contralateral Sensorimotor Cortex. J. Cogn. Neurosci. 2019, 31, 657–668.

- Batabyal, T.; Muthukrishnan, S.; Sharma, R.; Tayade, P.; Kaur, S. Neural substrates of emotional interference: A quantitative EEG study. Neurosci. Lett. 2018, 685, 1–6.

- Gazzaley, A.; Nobre, A.C. Top-down modulation: Bridging selective attention and working memory. Trends Cogn. Sci. 2012, 16, 129–135.

- Hussain, I.; Young, S.; Park, S.-J. Driving-Induced Neurological Biomarkers in an Advanced Driver-Assistance System. Sensors 2021, 21, 6985.

- Hussain, I.; Park, S.-J. Quantitative Evaluation of Task-Induced Neurological Outcome after Stroke. Brain Sci. 2021, 11, 900.

- Lenartowicz, A.; Delorme, A.; Walshaw, P.D.; Cho, A.L.; Bilder, R.; McGough, J.J.; McCracken, J.T.; Makeig, S.; Loo, S.K. Electroencephalography Correlates of Spatial Working Memory Deficits in Attention-Deficit/Hyperactivity Disorder: Vigilance, Encoding, and Maintenance. J. Neurosci. 2014, 34, 1171–1182.

- Balasubramani, P.P.; Ojeda, A.; Grennan, G.; Maric, V.; Le, H.; Alim, F.; Zafar-Khan, M.; Diaz-Delgado, J.; Silveira, S.; Ramanathan, D.; et al. Mapping cognitive brain functions at scale. NeuroImage 2020, 231, 117641.

- Spitzer, R.L.; Kroenke, K.; Williams, J.B.; Löwe, B. A brief measure for assessing generalized anxiety disorder: The GAD-7. Arch. Intern. Med. 2006, 166, 1092–1097.

- Kroenke, K.; Spitzer, R.L.; Williams, J.B.W. The PHQ-9: Validity of a Brief Depression Severity Measure. J. Gen. Intern. Med. 2001, 16, 606–613.

- DuPaul, G.J.; Power, T.J.; Anastopoulos, A.D.; Reid, R. ADHD Rating Scale-IV: Checklist, Norms, and Clinical Interpretation; Guilford Press: New York, NY, USA, 1998.

- Desikan, R.S.; Ségonne, F.; Fischl, B.; Quinn, B.T.; Dickerson, B.C.; Blacker, D.; Buckner, R.L.; Dale, A.M.; Maguire, R.P.; Hyman, B.T.; et al. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage 2006, 31, 968–980.

- Greenberg, L.M.; Waldman, I.D. Developmental normative data on the test of variables of attention (T.O.V.A.). J. Child Psychol. Psychiatry 1993, 34, 1019–1030.

- Sternberg, S. High-speed scanning in human memory. Science 1966, 153, 652–654.

- Eriksen, B.; Eriksen, C.W. Effects of noise letters upon the identification of a target letter in a nonsearch task. Percept. Psychophys. 1974, 16, 143–149.

- Gray, J.R. Integration of Emotion and Cognitive Control. Curr. Dir. Psychol. Sci. 2004, 13, 46–48.

- Inzlicht, M.; Bartholow, B.D.; Hirsh, J.B. Emotional foundations of cognitive control. Trends Cogn. Sci. 2015, 19, 126–132.

- Pessoa, L. How do emotion and motivation direct executive control? Trends Cogn. Sci. 2009, 13, 160–166.

- López-Martín, S.; Albert, J.; Fernández-Jaén, A.; Carretié, L. Emotional distraction in boys with ADHD: Neural and behavioral correlates. Brain Cogn. 2013, 83, 10–20.

- López-Martín, S.; Albert, J.; Fernández-Jaén, A.; Carretié, L. Emotional response inhibition in children with attention-deficit/hyperactivity disorder: Neural and behavioural data. Psychol. Med. 2015, 45, 2057–2071.

- Thai, N.; Taber-Thomas, B.C.; Pérez-Edgar, K.E. Neural correlates of attention biases, behavioral inhibition, and social anxiety in children: An ERP study. Dev. Cogn. Neurosci. 2016, 19, 200–210.

- Tottenham, N.; Tanaka, J.W.; Leon, A.C.; McCarry, T.; Nurse, M.; Hare, T.A.; Marcus, D.J.; Westerlund, A.; Casey, B.J.; Nelson, C. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Res. 2009, 168, 242–249.

- Ojeda, A.; Kreutz-Delgado, K.; Mullen, T. Fast and robust Block-Sparse Bayesian learning for EEG source imaging. Neuroimage 2018, 174, 449–462.

- Ojeda, A.; Kreutz-Delgado, K.; Mishra, J. Bridging M/EEG Source Imaging and Independent Component Analysis Frameworks Using Biologically Inspired Sparsity Priors. Neural Comput. 2021, 33, 2408–2438.

- Nunez, P.L. REST: A good idea but not the gold standard. Clin. Neurophysiol. 2010, 121, 2177–2180.

- Pascual-Marqui, R.D.; Michel, C.M.; Lehmann, D. Low resolution electromagnetic tomography: A new method for localizing electrical activity in the brain. Int. J. Psychophysiol. 1994, 18, 49–65.

- Holmes, C.J.; Hoge, R.; Collins, L.; Woods, R.; Toga, A.W.; Evans, A.C. Enhancement of MR Images Using Registration for Signal Averaging. J. Comput. Assist. Tomogr. 1998, 22, 324–333.

- Jung, T.P.; Makeig, S.; Humphries, C.; Lee, T.W.; McKeown, M.J.; Iragui, V.; Sejnowski, T.J. Removing electroencephalographic artifacts by blind source separation. Psychophysiology 2000, 37, 163–178.

- Sohrabpour, A.; Lu, Y.; Kankirawatana, P.; Blount, J.; Kim, H.; He, B. Effect of EEG electrode number on epileptic source localization in pediatric patients. Clin. Neurophysiol. 2015, 126, 472–480.

- Ding, L.; He, B. Sparse source imaging in electroencephalography with accurate field modeling. Hum. Brain Mapp. 2008, 29, 1053–1067.

- Stopczynski, A.; Stahlhut, C.; Larsen, J.E.; Petersen, M.K.; Hansen, L.K. The smartphone brain scanner: A portable real-time neuroimaging system. PLoS ONE 2014, 9, e86733.

- Hoerl, A.E.; Kennard, R.W. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics 1970, 12, 55–67.

- Hautamäki, V.; Lee, K.A.; Kinnunen, T.; Ma, B.; Li, H. Regularized logistic regression fusion for speaker verification. In Proceedings of the Twelfth Annual Conference of the International Speech Communication Association 2011, Florence, Italy, 27–31 August 2011.

- Demir-Kavuk, O.; Kamada, M.; Akutsu, T.; Knapp, E.-W. Prediction using step-wise L1, L2 regularization and feature selection for small data sets with large number of features. BMC Bioinform. 2011, 12, 412.

- Kuhn, M.; Johnson, K. Feature Engineering and Selection: A Practical Approach for Predictive Models; CRC Press: New York, NY, USA, 2019.

- Zhang, Z. Model building strategy for logistic regression: Purposeful selection. Ann. Transl. Med. 2016, 4, 111.

- Ha, T.N.; Lubo-Robles, D.; Marfurt, K.J.; Wallet, B.C. An in-depth analysis of logarithmic data transformation and per-class normalization in machine learning: Application to unsupervised classification of a turbidite system in the Canterbury Basin, New Zealand, and supervised classification of salt in the Eugene Island minibasin, Gulf of Mexico. Interpretation 2021, 9, T685–T710.

- Manning, C.D.; Raghavan, P.; Schütze, H. Introduction to Information Retrieval; Cambridge University Press: Cambridge, UK, 2008.

- Kanter, J.M.; Veeramachaneni, K. Deep feature synthesis: Towards automating data science endeavors. In Proceedings of the 2015 IEEE International Conference on Data Science and Advanced Analytics (DSAA), Paris, France, 19–21 October 2015; pp. 1–10.

- Muller, M.; Lange, I.; Wang, D.; Piorkowski, D.; Tsay, J.; Liao, Q.V.; Dugan, C.; Erickson, T. How data science workers work with data: Discovery, capture, curation, design, creation. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–15.

- Brandes, U.; Fleischer, D. Centrality Measures based on Current Flow. In Annual Symposium on Theoretical Aspects of Computer Science; Springer: Berlin/Heidelberg, Germany, 2005; pp. 533–544.

- Ahmad, M.A.; Eckert, C.; Teredesai, A. Interpretable machine learning in healthcare. In Proceedings of the 2018 ACM International Conference on Bioinformatics, Computational Biology, and Health Informatics, Washington, DC, USA, 29 August 2018; pp. 559–560.

- Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 2019, 1, 206–215.

- Vellido Alcacena, A. The importance of interpretability and visualization in machine learning for applications in medicine and health care. Neural Comput. Appl. 2019, 32, 18069–18083.

- Stiglic, G.; Kocbek, P.; Fijacko, N.; Zitnik, M.; Verbert, K.; Cilar, L. Interpretability of machine learning-based prediction models in healthcare. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2020, 10, e1379.

- Clancy, K.J.; Andrzejewski, J.A.; Simon, J.; Ding, M.; Schmidt, N.B.; Li, W. Posttraumatic Stress Disorder Is Associated with α Dysrhythmia across the Visual Cortex and the Default Mode Network. eNeuro 2020, 7.

- Kartvelishvili, N. Interplay between Alpha Oscillations, Anxiety, and Sensory Processing. Master’s Thesis, Florida State University, Tallahassee, FL, USA, 2019.

- Knyazev, G.G.; Savostyanov, A.N.; Bocharov, A.V.; Rimareva, J.M. Anxiety, depression, and oscillatory dynamics in a social interaction model. Brain Res. 2016, 1644, 62–69.

- Mo, J.; Liu, Y.; Huang, H.; Ding, M. Coupling between visual alpha oscillations and default mode activity. NeuroImage 2013, 68, 112–118.

- Rolls, E.T.; Cheng, W.; Gilson, M.; Qiu, J.; Hu, Z.; Ruan, H.; Li, Y.; Huang, C.-C.; Yang, A.C.; Tsai, S.-J.; et al. Effective connectivity in depression. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 2018, 3, 187–197.

- Arns, M.; Etkin, A.; Hegerl, U.; Williams, L.M.; DeBattista, C.; Palmer, D.M.; Fitzgerald, P.B.; Harris, A.; de Beuss, R.; Gordon, E. Frontal and rostral anterior cingulate (rACC) theta EEG in depression: Implications for treatment outcome? Eur. Neuropsychopharmacol. 2015, 25, 1190–1200.

- Narushima, K.; McCormick, L.M.; Yamada, T.; Thatcher, R.W.; Robinson, R.G. Subgenual Cingulate Theta Activity Predicts Treatment Response of Repetitive Transcranial Magnetic Stimulation in Participants With Vascular Depression. JNP 2010, 22, 75–84.

- Pizzagalli, D.A.; Webb, C.A.; Dillon, D.G.; Tenke, C.E.; Kayser, J.; Goer, F.; Fava, M.; McGrath, P.; Weissman, M.; Parsey, R.; et al. Pretreatment Rostral Anterior Cingulate Cortex Theta Activity in Relation to Symptom Improvement in Depression: A Randomized Clinical Trial. JAMA Psychiatry 2018, 75, 547–554.

- Solomon, E.A.; Stein, J.M.; Das, S.; Gorniak, R.; Sperling, M.R.; Worrell, G.; Inman, C.S.; Tan, R.J.; Jobst, B.C.; Rizzuto, D.S.; et al. Dynamic Theta Networks in the Human Medial Temporal Lobe Support Episodic Memory. Curr. Biol. 2019, 29, 1100–1111.e4.

- Sheline, Y.I.; Barch, D.M.; Price, J.L.; Rundle, M.M.; Vaishnavi, S.N.; Snyder, A.Z.; Mintun, M.A.; Wang, S.; Coalson, R.S.; Raichle, M.E. The default mode network and self-referential processes in depression. Proc. Natl. Acad. Sci. USA 2009, 106, 1942–1947.

- Sheline, Y.I.; Price, J.L.; Yan, Z.; Mintun, M.A. Resting-state functional MRI in depression unmasks increased connectivity between networks via the dorsal nexus. Proc. Natl. Acad. Sci. USA 2010, 107, 11020–11025.

- Hocking, J.; Price, C.J. The Role of the Posterior Superior Temporal Sulcus in Audiovisual Processing. Cereb. Cortex 2008, 18, 2439–2449.

- Klein, J.T.; Shepherd, S.V.; Platt, M.L. Social Attention and the Brain. Curr. Biol. 2009, 19, R958–R962.

- Corbetta, M.; Shulman, G.L. Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 2002, 3, 201.

- Janssen, D.J.C.; Poljac, E.; Bekkering, H. Binary sensitivity of theta activity for gain and loss when monitoring parametric prediction errors. Soc. Cogn. Affect. Neurosci. 2016, 11, 1280–1289.

- Guo, J.; Luo, X.; Wang, E.; Li, B.; Chang, Q.; Sun, L.; Song, Y. Abnormal alpha modulation in response to human eye gaze predicts inattention severity in children with ADHD. Dev. Cogn. Neurosci. 2019, 38, 100671.

- Sanefuji, M.; Craig, M.; Parlatini, V.; Mehta, M.A.; Murphy, D.; Catani, M.; Cerliani, L.; de Schotten, M.T. Double-dissociation between the mechanism leading to impulsivity and inattention in Attention Deficit Hyperactivity Disorder: A resting-state functional connectivity study. Cortex 2017, 86, 290–302.

- Yerys, B.E.; Tunç, B.; Satterthwaite, T.D.; Antezana, L.; Mosner, M.G.; Bertollo, J.R.; Guy, L.; Schultz, R.T.; Herrington, J.D. Functional Connectivity of Frontoparietal and Salience/Ventral Attention Networks Have Independent Associations With Co-occurring Attention-Deficit/Hyperactivity Disorder Symptoms in Children With Autism. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 2019, 4, 343–351.

- Zarka, D.; Leroy, A.; Cebolla, A.M.; Cevallos, C.; Palmero-Soler, E.; Cheron, G. Neural generators involved in visual cue processing in children with attention-deficit/hyperactivity disorder (ADHD). Eur. J. Neurosci. 2021, 53, 1207–1224.

- Mishra, J.; Anguera, J.A.; Ziegler, D.A.; Gazzaley, A. A Cognitive Framework for Understanding and Improving Interference Resolution in the Brain. Progress Brain Res. 2013, 207, 351–377.

- Mishra, J.; de Villers-Sidani, E.; Merzenich, M.; Gazzaley, A. Adaptive training diminishes distractibility in aging across species. Neuron 2014, 84, 1091–1103.

- Dayan, E.; Censor, N.; Buch, E.R.; Sandrini, M.; Cohen, L.G. Noninvasive brain stimulation: From physiology to network dynamics and back. Nat. Neurosci. 2013, 16, 838–844.

- Mishra, J.; Anguera, J.A.; Gazzaley, A. Video games for neuro-cognitive optimization. Neuron 2016, 90, 214–218.

- Mishra, J.; Gazzaley, A. Closed-loop cognition: The next frontier arrives. Trends Cogn. Sci. 2015, 19, 242–243.

- Wagner, T.; Valero-Cabre, A.; Pascual-Leone, A. Noninvasive Human Brain Stimulation. Annu. Rev. Biomed. Eng. 2007, 9, 527–565.

- Weber, L.A.; Ethofer, T.; Ehlis, A.-C. Predictors of neurofeedback training outcome: A systematic review. NeuroImage Clin. 2020, 27, 102301.

| Rank | Log | Product | Feature 1 Freq Band | Feature 1 Task | Feature 1 ROI ID | Feature 2 Freq Band | Feature 2 Task | Feature 2 ROI ID |
|---|---|---|---|---|---|---|---|---|
| 1 | Yes | Yes | Alpha | 2 | 19 | Alpha | 2 | 41 |
| 2 | No | Yes | Alpha | 2 | 19 | Alpha | 2 | 41 |
| 3 | Yes | Yes | Alpha | 5 | 7 | Alpha | 5 | 43 |
| 4 | No | Yes | Theta | 2 | 39 | Theta | 5 | 7 |
| 5 | No | Yes | Alpha | 5 | 7 | Alpha | 5 | 43 |
| 6 | Yes | Yes | Theta | 1 | 45 | Theta | 5 | 6 |
| 7 | Yes | Yes | Theta | 2 | 39 | Theta | 5 | 7 |
| 8 | Yes | Yes | Theta | 1 | 45 | Theta | 5 | 7 |
| 9 | No | Yes | Theta | 5 | 9 | Theta | 5 | 19 |
| 10 | No | Yes | Theta | 1 | 7 | Theta | 5 | 22 |

| ID | Name | Closeness | Betweenness |
|---|---|---|---|
| Anxiety | | | |
| 8 | cuneus R | 6.272 | 0.339 |
| 7 | cuneus L | 6.171 | 0.268 |
| 44 | pericalcarine R | 5.866 | 0.145 |
| 24 | lateraloccipital R | 5.767 | 0.116 |
| 63 | supramarginal L | 5.356 | 0.135 |
| Depression | | | |
| 65 | temporalpole L | 2.281 | 0.220 |
| 9 | entorhinal L | 2.279 | 0.245 |
| 42 | parstriangularis R | 2.216 | 0.144 |
| 12 | frontalpole R | 2.212 | 0.145 |
| 33 | paracentral L | 2.157 | — |
| 67 | transversetemporal L | — | 0.099 |
| Inattention | | | |
| 2 | bankssts R | 3.357 | 0.271 |
| 24 | lateraloccipital R | 3.315 | 0.135 |
| 13 | fusiform L | 3.293 | 0.147 |
| 63 | supramarginal L | 3.187 | 0.119 |
| 35 | parahippocampal L | 3.118 | 0.098 |
| Hyperactivity | | | |
| 63 | supramarginal L | 3.240 | 0.194 |
| 8 | cuneus R | 3.202 | 0.183 |
| 9 | entorhinal L | 3.135 | 0.140 |
| 44 | pericalcarine R | 3.005 | 0.142 |
| 67 | transversetemporal L | 2.839 | 0.080 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kato, R.; Balasubramani, P.P.; Ramanathan, D.; Mishra, J. Utility of Cognitive Neural Features for Predicting Mental Health Behaviors. Sensors 2022, 22, 3116. https://doi.org/10.3390/s22093116