Intelligent Fusion Imaging Photonics for Real-Time Lighting Obstructions

Abstract

1. Introduction

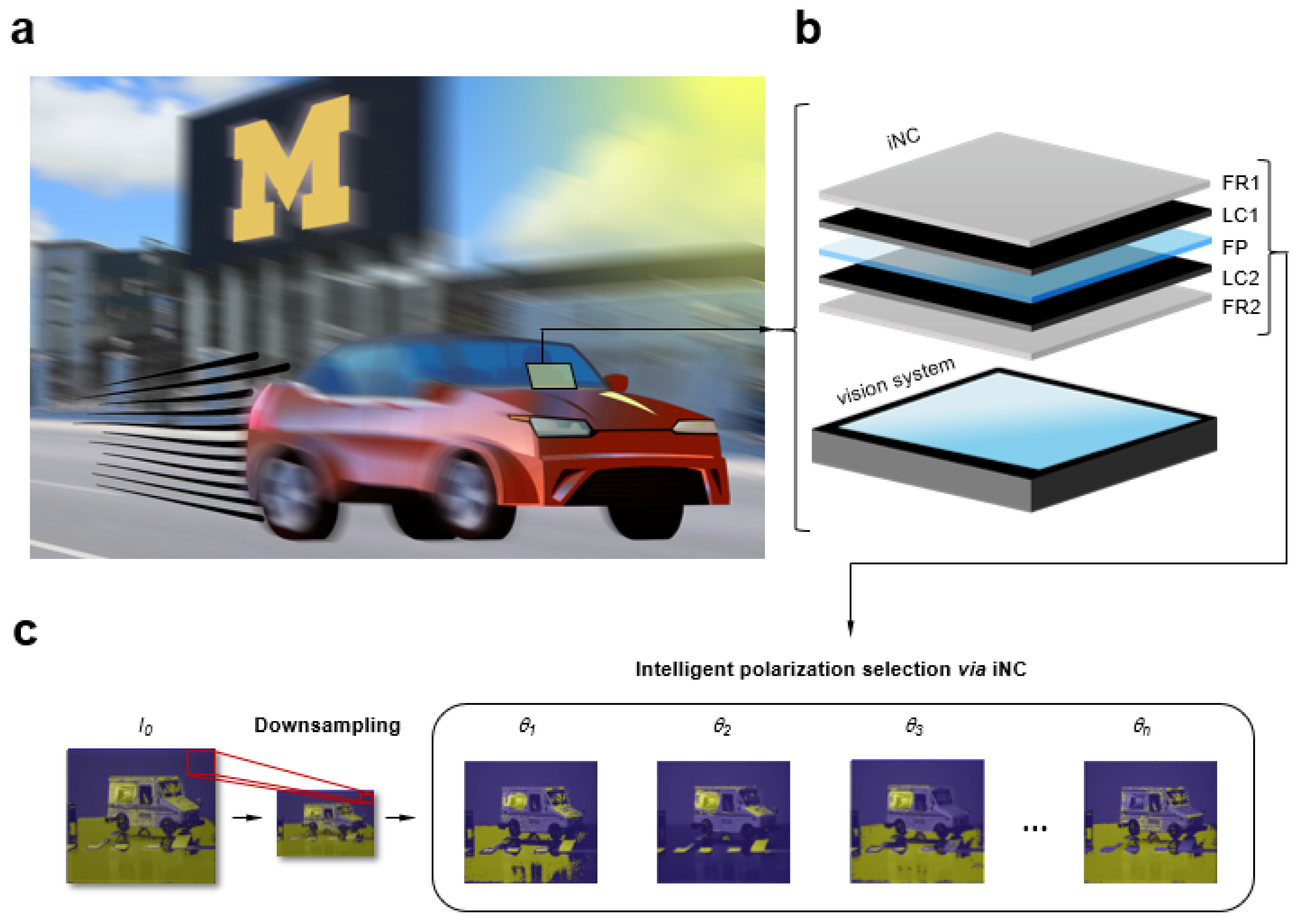

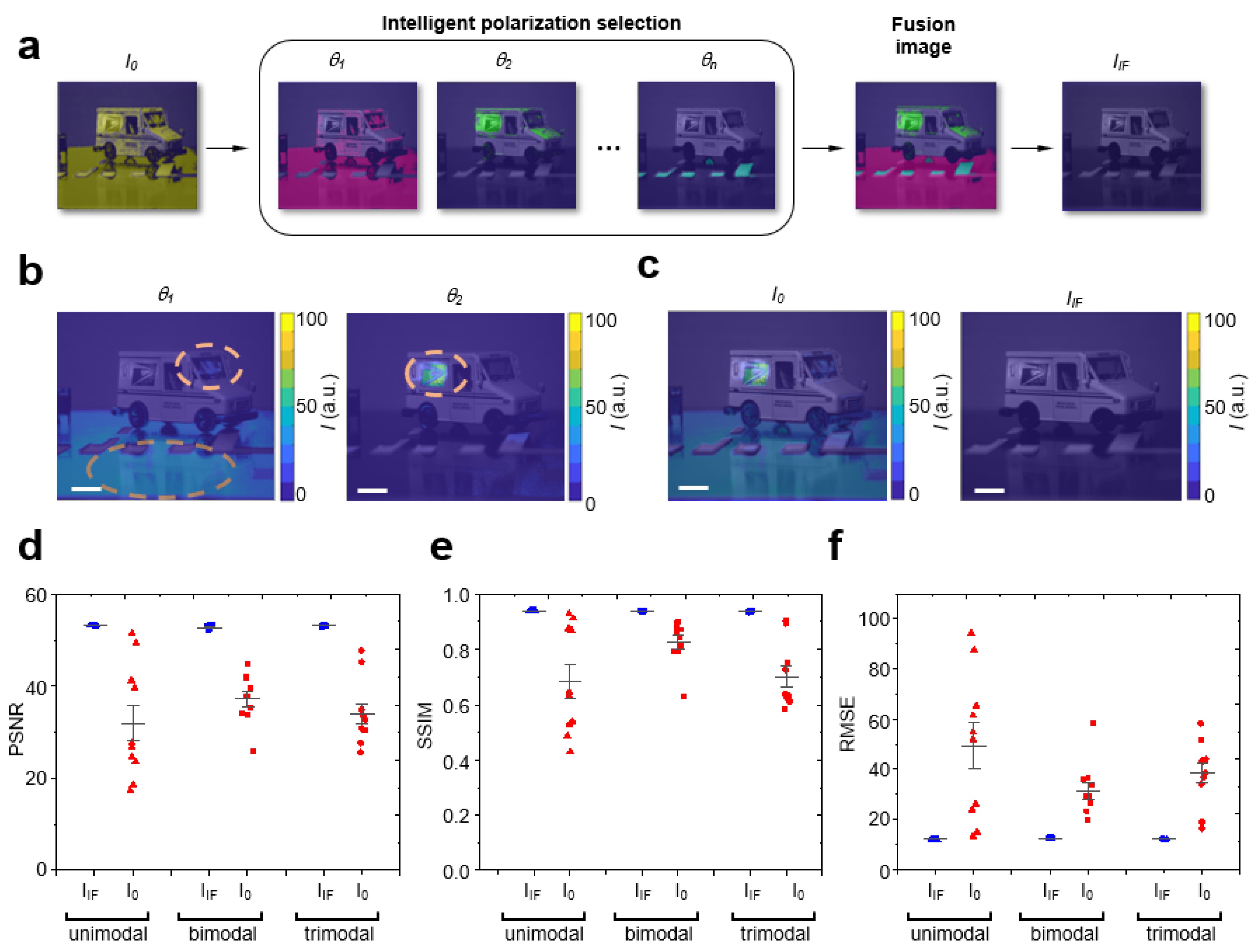

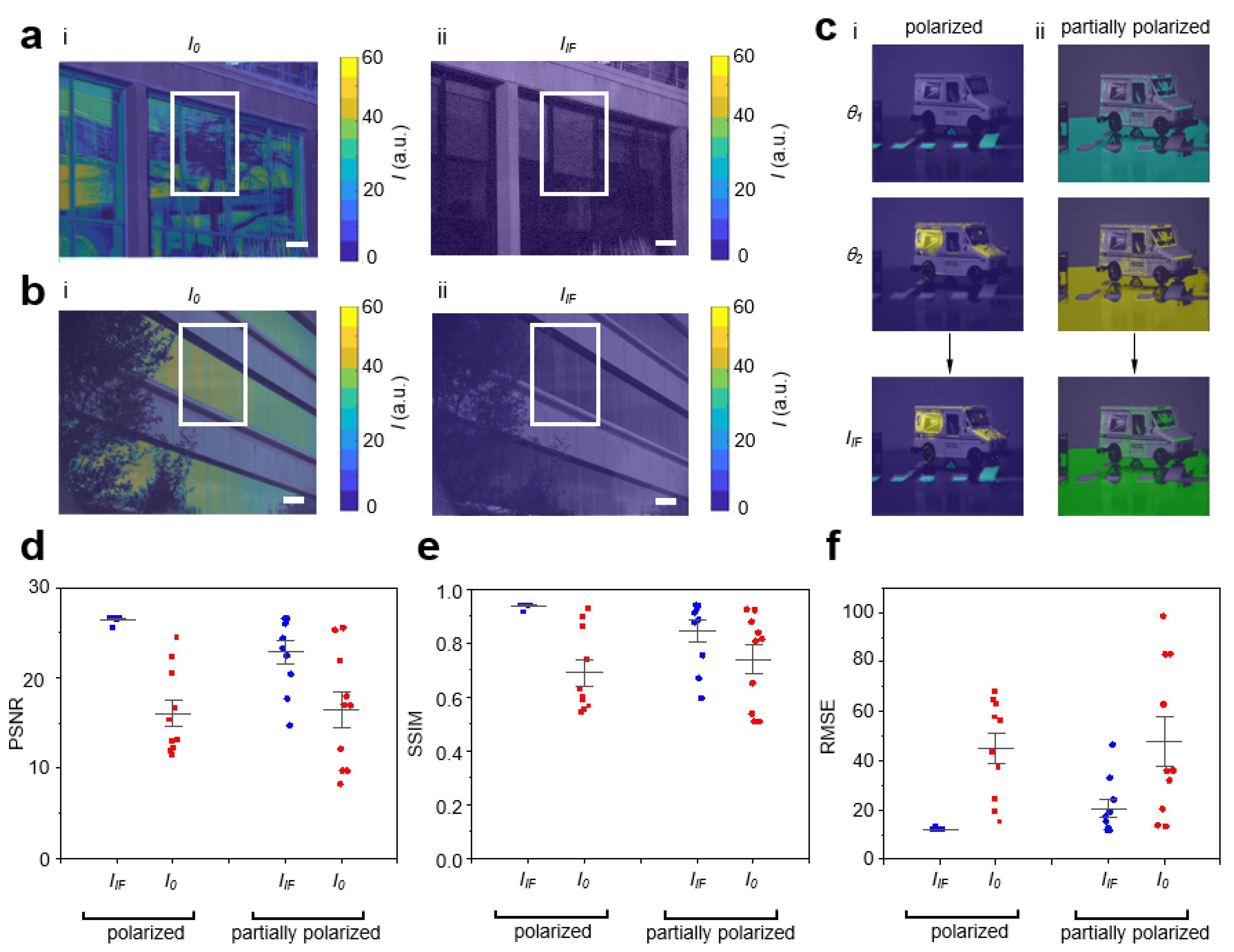

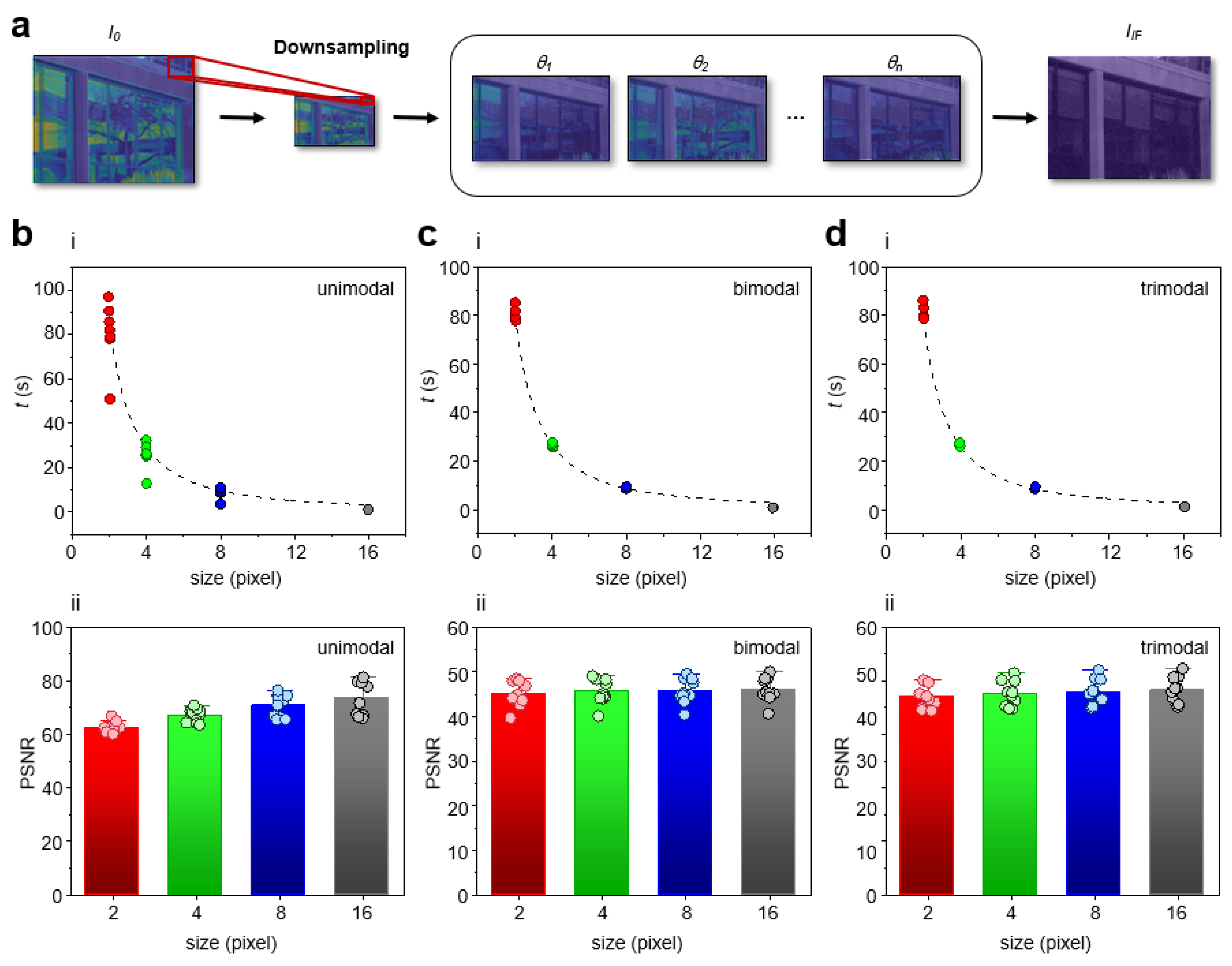

2. Results and Discussion

3. Conclusions

4. Methods

4.1. Experimental Setup

4.2. IF Algorithm

4.3. Intelligent Polarization Selection

4.4. Quantitative Metrics

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Do, H.; Yoon, C.; Liu, Y.; Zhao, X.; Gregg, J.; Da, A.; Park, Y.; Lee, S.E. Intelligent Fusion Imaging Photonics for Real-Time Lighting Obstructions. Sensors 2023, 23, 323. https://doi.org/10.3390/s23010323