A Query Language for Exploratory Analysis of Video-Based Tracking Data in Padel Matches

Abstract

1. Introduction

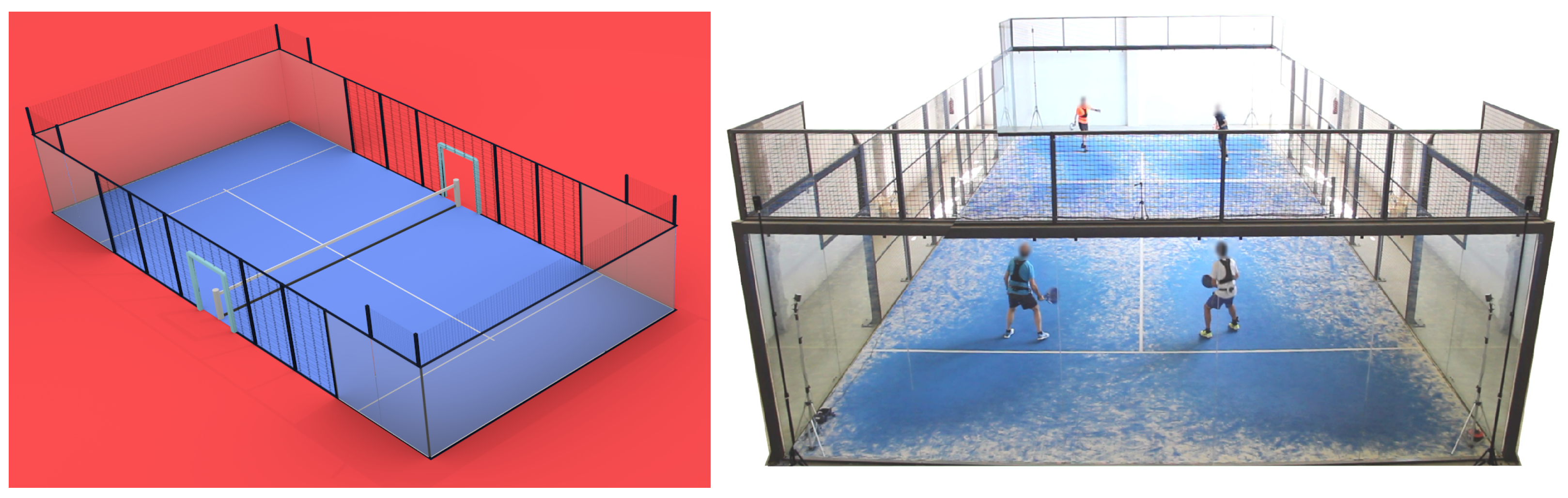

1.1. Padel Essential Characteristics

1.2. Video-Based Tracking in Sport Science

1.3. Structuring Tracking Data

1.4. Retrieving Data about Specific In-Game Situations from Tabular Data

- E1: “Players should try to win the net zone as much as they can; it is easier to score a point from the net zone than from the backcourt zone”.

- E2: “An effective way to win the net zone is to play a lob”.

- E3: “When a player is about to serve, his/her partner should be waiting in the net zone, at about 2 m from the net”.

- Q1: Retrieve all points, distinguishing them by the winning team and by the zone of the hitting player.

- Q2: Retrieve all lob shots, with an additional column indicating whether or not the players managed to win the net zone.

- Q3: Retrieve all frames immediately after a serve, along with the court-space position of the server’s partner.

1.5. Extracting Essential Information without Missing Relevant Details

1.6. Contributions

2. Design Principles for the Query Language

- Expressiveness: We want the language to support complex queries that combine arbitrary conditions on player positions, poses, distances, shot attributes, court zones, timing, scores, sequences, and any other fact that is present in the tabular data or can be derived from it (such as speed, acceleration, and motion paths).

- Compactness: Queries, even complex ones, should be short (e.g., a few lines of code).

- Expandability: Analysts should be able to extend the language easily with their own definitions of fuzzy concepts. For example, different analysts might want to define court zones using different criteria and reuse these concepts in queries. When processing data, many padel concepts (e.g., “forced error”, “good lob”) need a precise definition, and that definition might vary among analysts or depend on the player profiles (professional vs. amateur). Once defined, these concepts should integrate seamlessly into query definitions.

- Easy to write: Sports analysts should be able to write new queries, or at least to modify existing examples to suit their needs.

- Easy to read: Sports analysts should be able to understand queries after a brief introduction to the main concepts of the language.

3. Design of the Query Language

3.1. Domain Analysis

3.1.1. Domain Analysis of a Video-Recorded Padel Match

3.1.2. Domain Analysis of Queries about Padel Matches

3.2. Query Language Syntax

4. Components of a Query

- Query type: The output of every query is a table with the retrieved data (more precisely, a QueryResult object that holds a pandas DataFrame). The query type distinguishes queries by their expected output, that is, by what each row of the output DataFrame represents. Table 5 shows the query types supported by our language. From now on, we use the generic word “item” to refer collectively to the entities (points, shots, frames…) that form the rows of the output.

- Query definition: The query definition is a Boolean predicate that establishes which items should be retrieved (e.g., all shots of a specific shot type). In our language, it takes the form of a decorated Python function that receives an object of the intended class (e.g., a Shot object when defining a shot query) and returns True or False. The predicate acts as a filter: items for which it evaluates to False are discarded, and items for which it evaluates to True are collected. The output table therefore contains one row per item that satisfies the predicate.

- Attributes: This is the collection of attributes we want for every item in the output table; the output table has one column per attribute. For example, for every smash, we might be interested only in the name of the player, or also in the player’s court-space position, or just in the player’s distance to the net. A key ingredient of our language is that attributes are arbitrary Python expressions, the only requirement being that they can be evaluated on the item. For example, a shot has attributes such as hitter (the player that executed the shot) and frame (the frame where the shot occurs). Attributes can be simple expressions such as shot.hitter or more complex ones such as shot.next.hitter.distance_to_net < 3.

- Scope: Once the elements above are specified, we might want to execute the query on different collections of matches. The scope is the collection of matches that will be searched for items satisfying the predicate.
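To make the interplay of the four components concrete, the following is a minimal sketch of how a query decorator could bind a predicate to a scope and a list of attributes. It is not the authors' implementation (see Appendix A): the names `make_query`, `evaluate`, `volleys_near_net` and the toy `Player`, `Shot` and `Match` classes are hypothetical. The sketch only supports attributes that are dotted paths (plus the `(expression, column_name)` pairs used in later queries), not arbitrary expressions, and it returns a list of dicts instead of a pandas DataFrame to stay self-contained.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Any, Callable, Iterable, List


def evaluate(item: Any, path: str) -> Any:
    """Follow a dotted attribute path such as "hitter.last_name" starting at item."""
    return reduce(getattr, path.split("."), item)


def make_query(items_of: Callable[[Any], Iterable[Any]]):
    """Build a query decorator; items_of lists the candidate items of one match."""

    def decorator(predicate: Callable[[Any], bool]):
        def run(scope, attribs):
            # The scope is either a single match or a list of matches
            matches = scope if isinstance(scope, list) else [scope]
            rows = []
            for match in matches:
                for item in items_of(match):
                    if not predicate(item):
                        continue  # the predicate acts as a filter
                    row = {}
                    for attr in attribs:
                        # An attribute is "path" or ("path", "column name")
                        path, column = attr if isinstance(attr, tuple) else (attr, attr)
                        row[column] = evaluate(item, path)
                    rows.append(row)
            return rows

        return run

    return decorator


@dataclass
class Player:
    last_name: str
    distance_to_net: float


@dataclass
class Shot:
    code: str
    hitter: Player


@dataclass
class Match:
    shots: List[Shot]


# Hypothetical shot_query decorator: the candidate items of a match are its shots
shot_query = make_query(lambda match: match.shots)


@shot_query
def volleys_near_net(shot):
    return shot.code in ("vd", "vr") and shot.hitter.distance_to_net < 4


# Illustrative toy data, not taken from a real match
match = Match([Shot("s", Player("P1", 8.5)), Shot("vd", Player("P2", 2.1))])
rows = volleys_near_net(match, ["code", ("hitter.last_name", "player")])
print(rows)  # [{'code': 'vd', 'player': 'P2'}]
```

Passing a list of matches as the scope simply concatenates the rows found in each match, which mirrors the role of the scope described above.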

5. Key Features of the API

5.1. Navigation through Method Chaining

- point.shots[i] # the i-th shot of a point

- shot.point # the point the shot belongs to

- shot.next # the next shot within the point (or None if last shot)

- shot.prev # the previous shot within the point (or None if serve)

- shot.hitter # player that executed the shot

- shot.prev.hitter # hitter of the previous shot

- shot.next.next.hitter.distance_to_net # for two shots ahead, distance to net of the hitter

- shot.point.winner # team that won the point the shot belongs to

- shot.point.game.winner # team that won the game the shot belongs to

For example, the gender category of the match a frame belongs to can be written simply as frame.match.gender. Although implementation details are discussed in Appendix A, we note that the navigation methods above are implemented as Python properties (using the @property decorator). Therefore, instead of writing explicit method calls with parentheses, we can omit the parentheses and access them as plain attributes, which is a bit more compact. Since query definitions only require read-only access to all these objects, we consider using properties instead of methods to be safe.
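The navigation style above can be reproduced with plain Python properties. The sketch below is a hypothetical, heavily simplified model (classes `Point`, `Shot` and `Player` with only a few fields, and a helper method `Point.add`), not the library's actual class hierarchy. It shows how `next` and `prev` return `None` at the ends of a point and how chained access such as `shot.next.hitter` reads without parentheses.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Player:
    last_name: str
    distance_to_net: float  # meters


@dataclass(eq=False)
class Point:
    shots: List[Shot] = field(default_factory=list)

    def add(self, code: str, hitter: Player) -> Shot:
        """Append a shot to the point and return it."""
        shot = Shot(code, hitter, self, len(self.shots))
        self.shots.append(shot)
        return shot


@dataclass(eq=False)
class Shot:
    code: str
    hitter: Player
    point: Point = field(repr=False)  # back-reference to the owning point
    index: int = 0  # position of the shot within the point

    @property
    def next(self) -> Optional[Shot]:
        """The next shot within the point, or None for the last shot."""
        i = self.index + 1
        return self.point.shots[i] if i < len(self.point.shots) else None

    @property
    def prev(self) -> Optional[Shot]:
        """The previous shot within the point, or None for the serve."""
        return self.point.shots[self.index - 1] if self.index > 0 else None


point = Point()
serve = point.add("s", Player("P1", 8.4))
ret = point.add("d", Player("P2", 8.5))
volley = point.add("vd", Player("P3", 2.0))

print(serve.next.hitter.last_name)             # P2
print(serve.next.next.hitter.distance_to_net)  # 2.0
print(serve.prev, volley.next)                 # None None
```

Because `next` and `prev` are computed on access, no extra links need to be stored, and the read-only nature of properties matches the read-only use in query definitions.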

5.2. Binding Players to Frames

5.3. Tag Collections

- # Define a new tag describing a volley near the net

- @shot_tag

- def attack_volley(shot):

- return (shot.like("vd") or shot.like("vr")) and shot.hitter.distance_to_net < 1.5

- # Add the tag to a match

- attack_volley(match)

- # Query definitions can now use the new concept

- @shot_query

- def attack_volley_after_return_of_serve(shot):

- return shot.like("attack_volley") and shot.prev.like("return")
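A tag collection can be pictured as a set of labels attached to each shot, with like() matching either the shot code or one of the tags. The following sketch is an assumption about how @shot_tag could behave, not the authors' implementation: the decorator returns a function that, when applied to a match, adds a tag named after the decorated function to every shot that satisfies the predicate. The `Shot` and `Match` classes are simplified stand-ins; in particular, the hitter's distance to the net is stored directly on the shot, and one_of() is assumed to take a comma-separated list of names, as in the later examples.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass
class Shot:
    code: str               # shot code, e.g. "vd" (see the shot code table)
    distance_to_net: float  # hitter's distance to the net, in meters (simplified)
    tags: Set[str] = field(default_factory=set)

    def like(self, name: str) -> bool:
        """True if name is this shot's code or one of its tags."""
        return name == self.code or name in self.tags

    def one_of(self, names: str) -> bool:
        """True if the shot matches any name in a comma-separated list."""
        return any(self.like(n.strip()) for n in names.split(","))


@dataclass
class Match:
    shots: List[Shot]


def shot_tag(predicate: Callable[[Shot], bool]):
    """Turn a predicate into a function that tags the matching shots of a match."""
    def apply(match: Match) -> None:
        for shot in match.shots:
            if predicate(shot):
                shot.tags.add(predicate.__name__)  # tag name = function name
    return apply


@shot_tag
def attack_volley(shot):
    return shot.one_of("vd,vr") and shot.distance_to_net < 1.5


match = Match([Shot("s", 8.4), Shot("vd", 1.2), Shot("vr", 3.0)])
attack_volley(match)
print([s.like("attack_volley") for s in match.shots])  # [False, True, False]
```

Once a match has been tagged, the new concept is just another name accepted by like(), which is what lets query definitions use it seamlessly.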

6. A Complete Example

- # Define the query

- @shot_query

- def attack_drive_volley(shot):

- return shot.like("vd") and (shot.hitter.distance_to_net < 5)

- # Define the attributes

- attribs = ["tags", "frame.frame_number", "hitter.position.x",

- "hitter.distance_to_net", "hitter.last_name"]

- # Execute query on a match

- match = load("Estrella Damm Open’20 - Women’s Final")

- result = attack_drive_volley(match, attribs)

- # Analyze the results

- result.analyze()

- result.plot_positions()

7. Classes and Properties

7.1. Match, Set, Game, Point, Shot, Frame

7.2. Shot Types

7.3. Player

8. Evaluation

8.1. Test Dataset

8.2. Test Statements

- S1: “One generally volleys cross-court” (source: [43]).

- S2: “A very effective volley is a fast, down-the-line volley to the opponent’s feet” (source: [43]).

- S3: “An interesting aspect of women’s padel is that the game speed is close to a second and a half, to be exact 1.37 s” (source: [3], referring to a 2020 sample of padel matches).

- S4: “The serve should be a deep shot, targeted towards the glass, the T, or the receiving player’s feet” (source: basic padel tactics).

- S5: “When a player is about to serve, his/her partner should be waiting in the net zone, at about 2 m from the net” (source: basic padel tactics).

- S6: “The serve is an attempt to seize the initiative for the attack, therefore the serving team tries to maintain the initiative by approaching the net” (source: [5]).

- S7: “Players should try to win the net zone as much as they can; it is easier to score a point from the net zone than from the defense zone” (source: basic padel tactics).

- S8: “The (physiological) intensity developed during the practice of padel is close to that experienced in the practice of singles tennis (…). The real demands are different. This is probably due to the shorter distance covered by padel players in their actions. An aspect that can be compensated by a greater number of actions compared to tennis, due to the continuity allowed by the walls” (source: [5]).

- S9: “An effective way to win the net zone is to play a lob” (source: [43]).

- S10: “The data corroborate one of the maxims that surround this sport: first return and first volley inside, implying that no easy point should be given to the opponents” (source: [5]).

8.3. Queries

8.3.1. S1: “One Generally Volleys Cross-Court”

- Q1: “Retrieve all shots that are volleys (either forehand or backhand)”.

- @shot_tag

- def volley(shot):

- return shot.one_of("vd,vr")

- volley(match) # add “volley” tag to shots in this match

- @shot_query

- def volleys(shot):

- return shot.like("volley")

- volleys(match) # run the query on this match

- attribs = ["frame.frame_number", "hitter.position.x", "hitter.position.y", "hitter.last_name",

- "next.hitter.position.x", "next.hitter.position.y", "angle", "abs_angle"]

- q = volleys(match, attribs)

- q.plot_directions(color='angle')

- q.plot_distribution(density='angle', extent=[-30, 30], groupby='player')

- # Volleys shot from less than 4 m from the net

- @shot_query

- def volley_from_attack_zone(shot):

- return shot.like("volley") and shot.hitter.distance_to_net < 4

- # Volleys after a drive shot from the opponent

- @shot_query

- def volley_after_drive(shot):

- return shot.like("volley") and shot.prev.like("drive")
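The attribute list above includes angle and abs_angle, whose exact definition is not given here. One plausible definition, assumed purely for illustration, is the signed angle in degrees between the shot direction (approximated by the segment from the hitter's court position to the next hitter's position) and the court's long axis, so that 0° is a perfectly straight, down-the-line shot. The function name `shot_angle` and the sign convention are hypothetical; positions are assumed to be court coordinates in meters, with x across the 10 m width and y along the 20 m length.

```python
import math


def shot_angle(hitter_xy, receiver_xy):
    """Signed angle (degrees) between the shot direction and the court's long axis.

    Assumption: positions are (x, y) court coordinates in meters, y along the
    court length. 0 means parallel to the side walls; the sign tells whether
    the ball travels towards increasing x (positive) or decreasing x (negative).
    """
    dx = receiver_xy[0] - hitter_xy[0]
    dy = abs(receiver_xy[1] - hitter_xy[1])  # the ball always crosses the net
    return math.degrees(math.atan2(dx, dy))


angle = shot_angle((2.5, 1.6), (2.5, 18.5))
print(angle, abs(angle) < 8)                         # 0.0 True
print(round(shot_angle((2.0, 2.0), (6.0, 6.0)), 1))  # 45.0
```

Under this convention, abs_angle is simply the absolute value, so a threshold on abs_angle selects straight shots regardless of which side of the court they travel towards.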

8.3.2. S2: “Effectiveness of a Fast, Down-the-Line Volley to the Opponent’s Feet”

- Q2: “Extract all forehand or backhand volleys whose duration is below some threshold (1 s) and that are down-the-line”.

- @shot_query

- def fast_down_the_line_volley(shot):

- return shot.like("volley") and shot.duration < 1 and shot.abs_angle < 8

- q = fast_down_the_line_volley(match, attribs)

- q.plot_directions(color='angle')

- q.plot_directions(color='winning')

8.3.3. S3: “The Game Speed Is Close to a Second and a Half”

- Q3: “For each shot, get its duration (the time interval between a shot and the next shot)”.

- @shot_query

- def all_non_last_shots(shot):

- return shot.next is not None # the last shot of a point has no duration

- attribs = ["hitter.position.x", "hitter.position.y", "id", "duration"]

- q = all_non_last_shots(match, attribs)

- q.plot_distribution("duration")

- q.plot_positions(color='duration')
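As a worked example of the duration attribute, assume (as Q3 states) that a shot's duration is the time between that shot and the next one, and that the video runs at 30 frames per second. The frame rate is an assumption inferred from the point table, where, e.g., 214 frames correspond to 7.1 s; the helper name `shot_durations` is hypothetical. Applied to the five shot frames listed in the shot table, the sketch gives intervals of 29, 23, 20 and 20 frames; the last shot has no duration, which is why Q3 filters it out.

```python
FPS = 30  # assumed frame rate, inferred from the point table (214 frames ≈ 7.1 s)


def shot_durations(shot_frames, fps=FPS):
    """Duration in seconds of every shot except the last one of the point."""
    return [(b - a) / fps for a, b in zip(shot_frames, shot_frames[1:])]


frames = [13604, 13633, 13656, 13676, 13696]  # shot frames from the shot table
durations = shot_durations(frames)
print([round(d, 2) for d in durations])  # [0.97, 0.77, 0.67, 0.67]
```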

8.3.4. S4: “The Serve Should Be a Deep Shot”

- Q4: “Retrieve all serves; for each serve, get the serving player’s position and the serve direction”.

- @shot_query

- def serves(shot):

- return shot.like("serve") and shot.next

- q = serves(match, attribs)

- q.plot_directions(color="angle")

- q.plot_directions(color="player")

8.3.5. S5: “Serving Player’s Partner Should Be Waiting in the Net Zone”

- Q5: “Retrieve all serves; for each serve, get the position of the partner of the serving player”.

- q = serves(match, attribs + ["hitter.partner.last_name", "hitter.partner.position.x",

- "hitter.partner.position.y"])

- q.plot_positions(color='player')

8.3.6. S6: “After Serving, the Player Should Move Quickly to the Net”

- Q6: “Retrieve all frames immediately after a serve (e.g., for 1 s); for each frame, get the position of the serving player”.

- @frame_query

- def all_frames_after_serve(frame):

- return (frame.time - frame.point.start_time) < 1

- q = all_frames_after_serve(match, fattribs)

- q.plot_player_positions()
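The predicate in Q6 compares the time of a frame with the start time of its point. Assuming that both times are frame numbers divided by the frame rate (30 fps, inferred from the point table), the condition reduces to a difference of frame numbers. For the first point of the point table (start frame 13,606), the selected frames are those numbered 13,606 to 13,635. The helper `frames_after_serve` is a hypothetical stand-in for the query predicate.

```python
FPS = 30  # assumed frame rate, inferred from the point table


def frames_after_serve(frame_numbers, point_start_frame, window_s=1.0, fps=FPS):
    """Frames whose time since the start of the point is below window_s seconds.

    frame.time - point.start_time equals (frame - start_frame) / fps when both
    times are derived from frame numbers; integer differences avoid rounding.
    """
    return [f for f in frame_numbers if 0 <= (f - point_start_frame) / fps < window_s]


selected = frames_after_serve(range(13606, 13820), point_start_frame=13606)
print(selected[0], selected[-1], len(selected))  # 13606 13635 30
```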

8.3.7. S7: “Players Should Try to Win the Net Zone as Much as They Can”

- Q7a: “For each point, compute the total time both players were in the net zone, for the team that wins the point, and also for the team that loses the point”.

- Q7b: “For each winning shot, retrieve the position (and distance to the net) of the player that hit that ball”.

- @frame_query

- def time_on_net_for_winning_team(frame):

- winner = frame.shot.point.winner

- return winner and (frame.distance_to_net(winner.forehand_player) < 4

- and frame.distance_to_net(winner.backhand_player) < 4)

- q1 = time_on_net_for_winning_team(match, ["duration", ("point.id", "point")])

- q1.sum(“point”) # Group by point and sum

Here, we compute for how long both players of a team are in the net zone (here, within 4 m of the net). The table q2 is obtained analogously for the team that loses the point.

- q1.addColumn("Team", "Point Winner")

- q2.addColumn("Team", "Point Loser")

- q = concat([q1, q2], "Time on the net")

- q.plot_bar_chart(x='point', y='duration', color='Team')
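The quantity behind Q7a can also be illustrated without the query language. The sketch assumes a 20 m long court with the net at y = 10 m (consistent with the court coordinates in the position table), counts a frame as “at the net” for a team when both of its players are within 4 m of the net, and converts the number of such frames into seconds at an assumed 30 fps. The helper names are hypothetical, and the positions in the usage example are illustrative values, not measured data.

```python
FPS = 30        # assumed frame rate
NET_Y = 10.0    # assumed net position on a 20 m long court (meters)
NET_ZONE = 4.0  # net-zone threshold used in Q7a (meters)


def distance_to_net(y):
    """Distance (meters) from a court position with coordinate y to the net."""
    return abs(y - NET_Y)


def time_at_net(team_positions, fps=FPS, threshold=NET_ZONE):
    """Seconds during which both players of a team are within threshold of the net.

    team_positions holds one ((x1, y1), (x2, y2)) pair per frame of the point.
    """
    frames_at_net = sum(
        1
        for p1, p2 in team_positions
        if distance_to_net(p1[1]) < threshold and distance_to_net(p2[1]) < threshold
    )
    return frames_at_net / fps


# Illustrative positions: 45 frames with both players at the net, then 15 frames apart
positions = [((3.0, 7.5), (7.0, 8.0))] * 45 + [((3.0, 2.0), (7.0, 7.0))] * 15
print(time_at_net(positions))  # 1.5
```

Summing this value per point and per team gives the two series that the bar chart above compares.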

- @shot_query

- def winning_shots(shot):

- if not shot.hitter.from_point_winning_team:

- return False # not from the winning team

- return not shot.next or not shot.next.next # just winning shots

- attribs = ["hitter.last_name", "hitter.position.x", "hitter.position.y",

- "frame.frame_number", ("hitter.distance_to_net", "distance")]

- q = winning_shots(match, attribs)

- q.plot_positions()

- q.plot_histogram("distance")

8.3.8. S8: “Distance Covered by the Players”

- Q8: “Retrieve all frames, together with the position of the players and the distance they traversed for each point”.

- @frame_query

- def all_frames(frame):

- return True

- q = all_frames(match, fattribs)

- q.plot_player_positions()

- q1 = all_frames(match, [("salazar.distance_from_prev_frame", "distance"), …])

- q1.sum("point")

- q2 = all_frames(match, [("sanchez.distance_from_prev_frame", "distance"), …])

- q2.sum("point")

- q1.addColumn("Player", "Salazar")

- q2.addColumn("Player", "Sanchez")

- q = concat([q1, q2], "Distance traversed")

- q.plot_bar_chart(x='point', y='distance', color='Player')
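The attribute distance_from_prev_frame can be understood as the Euclidean displacement of a player between consecutive frames, so summing it over a point gives the distance traversed. The sketch below assumes exactly that definition (any smoothing of noisy tracked positions that the library might apply is not modeled) and uses a hypothetical helper name. Applied to the court positions of player TL in the five frames of the position table, it yields about 0.35 m.

```python
import math


def distance_traversed(positions):
    """Sum of Euclidean displacements between consecutive (x, y) positions (meters)."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


# Court positions (meters) of player TL in frames 13,614-13,618 (position table)
tl = [(1.89, 18.53), (1.87, 18.52), (1.89, 18.43), (1.94, 18.35), (1.96, 18.21)]
print(round(distance_traversed(tl), 2))  # 0.35
```

Because small per-frame jitter in the tracked positions adds up, the raw sum can overestimate the true distance over long points.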

8.3.9. S9: “Good Lobs”

- Q9: “Retrieve all lobs followed by a defensive shot”.

- @shot_tag

- def defensive(shot):

- return shot.one_of("d,r,ad,ar,pld,plr,spd,spr,bpd,bpr,dpa,dpc,dpag,cp,cpld,cplr")

- @shot_query

- def lobs(shot):

- return shot.like("lob") and shot.next is not None and shot.next.like("defensive")
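The logic of Q9 can be checked by hand on the shot table. The sketch represents each shot as a (shot code, is_lob) pair, mirroring the Shot Type and Lob columns of that table (with the serve mapped to its code "s"), and reuses the defensive code list of the tag above; the pair representation and the function name are assumptions for illustration. In the five listed shots, the only lob (a drive at frame 13,633) is followed by a bandeja ("b"), which is not in the defensive list, so it is not retrieved.

```python
DEFENSIVE = set("d,r,ad,ar,pld,plr,spd,spr,bpd,bpr,dpa,dpc,dpag,cp,cpld,cplr".split(","))


def lobs_followed_by_defensive(shots):
    """Indices of lobs whose next shot has a defensive shot code.

    shots is a list of (code, is_lob) pairs in playing order; the last shot of
    a point has no next shot and is therefore never retrieved.
    """
    return [
        i
        for i, ((_, is_lob), (next_code, _)) in enumerate(zip(shots, shots[1:]))
        if is_lob and next_code in DEFENSIVE
    ]


# Shot Type and Lob columns of the shot table (codes lowercased)
shots = [("s", False), ("d", True), ("b", False), ("d", False), ("vd", False)]
print(lobs_followed_by_defensive(shots))                          # []
print(lobs_followed_by_defensive([("r", True), ("spd", False)]))  # [0]
```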

8.3.10. S10: “First Volley”

- Q10: “Retrieve all serves directed to the T, followed by any shot, followed by a volley to the side wall of the same player that returned the serve”.

- @shot_query

- def serve_return_volley(shot):

- return (shot.like("serve") and shot.next.hitter.distance_to_side_wall > 2.5 and

- shot.next.next.like("volley") and shot.next.next.next.hitter.distance_to_side_wall < 2.5

- and shot.next.hitter == shot.next.next.next.hitter)

- where shot is the serve, shot.next is the return, shot.next.next is the volley, and shot.next.next.next is the volley’s return.

8.4. Comparison with State-of-the-Art Analysis Tools

- def first_volley(df):

- df = df.reset_index(drop=True) # make sure index labels match row positions

- df["Filter"] = False # rows are not selected unless they match the pattern

- for index, row in df.iterrows():

- if index + 3 >= len(df): break # not enough subsequent shots for the pattern

- if (row["shot_code"] == "serve" and

- df["hitter.distance_to_side_wall"][index + 1] > 2.5 and

- df["shot_code"][index + 2] in ["vd", "vr"] and

- df["hitter.distance_to_side_wall"][index + 3] < 2.5 and

- df["hitter.id"][index + 1] == df["hitter.id"][index + 3]):

- df.loc[index, "Filter"] = True

- return df[df["Filter"]]

- @shot_query

- def first_volley(shot):

- return (shot.like("serve") and shot.next.hitter.distance_to_side_wall > 2.5 and

- shot.next.next.like("volley") and shot.next.next.next.hitter.distance_to_side_wall < 2.5

- and shot.next.hitter == shot.next.next.next.hitter)

9. Discussion

10. Applications

- Analyze the frequency and success rate of the different technical actions (types of shots, their direction and speed) according to the in-game situation (preceding technical actions, position, and speed of the partner and the opponents).

- Analyze the distance covered by the players, their positions, displacements, and coordinated movements, and relate them to the other variables [45].

- Analyze how all the variables above vary between women’s matches and men’s matches.

- Analyze how other external factors (e.g., outdoor match) might affect the variables above.

- Retrieve specific parts of the video (e.g., certain shot sequences) to quickly inspect, visually, other aspects that are not captured by the input tabular data.

- Compare the technical actions adopted by the trainee in particular scenarios against those adopted by professional players.

- Show trainees specific segments of professional padel videos to provide visual evidence and representative examples of strategic recommendations.

- If multiple videos are available, compare the different variables defining the game profile of a trainee with those of other amateur or professional players.

- Help to monitor the progress and performance improvement of the trainees.

11. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Implementation Details

References

- Priego, J.I.; Melis, J.O.; Belloch, S.L.; Soriano, P.P.; García, J.C.G.; Almenara, M.S. Padel: A Quantitative study of the shots and movements in the high-performance. J. Hum. Sport Exerc. 2013, 8, 925–931. [Google Scholar] [CrossRef] [Green Version]

- Escudero-Tena, A.; Sánchez-Alcaraz, B.J.; García-Rubio, J.; Ibáñez, S.J. Analysis of Game Performance Indicators during 2015–2019 World Padel Tour Seasons and Their Influence on Match Outcome. Int. J. Environ. Res. Public Health 2021, 18, 4904. [Google Scholar] [CrossRef] [PubMed]

- Almonacid Cruz, B.; Martínez Pérez, J. Esto es Pádel; Editorial Aula Magna; McGraw-Hill: Sevilla, Spain, 2021. (In Spanish) [Google Scholar]

- Demeco, A.; de Sire, A.; Marotta, N.; Spanò, R.; Lippi, L.; Palumbo, A.; Iona, T.; Gramigna, V.; Palermi, S.; Leigheb, M.; et al. Match analysis, physical training, risk of injury and rehabilitation in padel: Overview of the literature. Int. J. Environ. Res. Public Health 2022, 19, 4153. [Google Scholar] [CrossRef] [PubMed]

- Almonacid Cruz, B. Perfil de Juego en pádel de Alto Nivel. Ph.D. Thesis, Universidad de Jaén, Jaén, Spain, 2011. [Google Scholar]

- Santiago, C.B.; Sousa, A.; Estriga, M.L.; Reis, L.P.; Lames, M. Survey on team tracking techniques applied to sports. In Proceedings of the 2010 International Conference on Autonomous and Intelligent Systems, AIS 2010, Povoa de Varzim, Portugal, 21–23 June 2010; pp. 1–6. [Google Scholar]

- Shih, H.C. A survey of content-aware video analysis for sports. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 1212–1231. [Google Scholar] [CrossRef] [Green Version]

- Mukai, R.; Araki, T.; Asano, T. Quantitative Evaluation of Tennis Plays by Computer Vision. IEEJ Trans. Electron. Inf. Syst. 2013, 133, 91–96. [Google Scholar] [CrossRef]

- Lara, J.P.R.; Vieira, C.L.R.; Misuta, M.S.; Moura, F.A.; de Barros, R.M.L. Validation of a video-based system for automatic tracking of tennis players. Int. J. Perform. Anal. Sport 2018, 18, 137–150. [Google Scholar] [CrossRef]

- Pingali, G.; Opalach, A.; Jean, Y. Ball tracking and virtual replays for innovative tennis broadcasts. In Proceedings of the 15th International Conference on Pattern Recognition. ICPR-2000, Barcelona, Spain, 3–7 September 2000; Volume 4, pp. 152–156. [Google Scholar]

- Mao, J. Tracking a Tennis Ball Using Image Processing Techniques. Ph.D. Thesis, University of Saskatchewan, Saskatoon, SK, Canada, 2006. [Google Scholar]

- Qazi, T.; Mukherjee, P.; Srivastava, S.; Lall, B.; Chauhan, N.R. Automated ball tracking in tennis videos. In Proceedings of the 2015 Third International Conference on Image Information Processing (ICIIP), Waknaghat, India, 21–24 December 2015; pp. 236–240. [Google Scholar]

- Kamble, P.R.; Keskar, A.G.; Bhurchandi, K.M. Ball tracking in sports: A survey. Artif. Intell. Rev. 2019, 52, 1655–1705. [Google Scholar] [CrossRef]

- Zivkovic, Z.; van der Heijden, F.; Petkovic, M.; Jonker, W. Image segmentation and feature extraction for recognizing strokes in tennis game videos. In Proceedings of the ASCI, Heijen, The Netherlands, 30 May–1 June 2001. [Google Scholar]

- Dahyot, R.; Kokaram, A.; Rea, N.; Denman, H. Joint audio visual retrieval for tennis broadcasts. In Proceedings of the 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP’03), Hong Kong, China, 6–10 April 2003; Volume 3, p. III-561. [Google Scholar]

- Yan, F.; Christmas, W.; Kittler, J. A tennis ball tracking algorithm for automatic annotation of tennis match. In Proceedings of the British Machine Vision Conference, Oxford, UK, 5–8 September 2005; Volume 2, pp. 619–628. [Google Scholar]

- Ramón-Llin, J.; Guzmán, J.; Martínez-Gallego, R.; Muñoz, D.; Sánchez-Pay, A.; Sánchez-Alcaraz, B.J. Stroke Analysis in Padel According to Match Outcome and Game Side on Court. Int. J. Environ. Res. Public Health 2020, 17, 7838. [Google Scholar] [CrossRef]

- Mas, J.R.L.; Belloch, S.L.; Guzmán, J.; Vuckovic, G.; Muñoz, D.; Martínez, B.J.S.A. Análisis de la distancia recorrida en pádel en función de los diferentes roles estratégicos y el nivel de juego de los jugadores (Analysis of distance covered in padel based on level of play and number of points per match). Acción Mot. 2020, 25, 59–67. [Google Scholar]

- Vučković, G.; Perš, J.; James, N.; Hughes, M. Measurement error associated with the SAGIT/Squash computer tracking software. Eur. J. Sport Sci. 2010, 10, 129–140. [Google Scholar] [CrossRef]

- Ramón-Llin, J.; Guzmán, J.F.; Llana, S.; Martínez-Gallego, R.; James, N.; Vučković, G. The Effect of the Return of Serve on the Server Pair’s Movement Parameters and Rally Outcome in Padel Using Cluster Analysis. Front. Psychol. 2019, 10, 1194. [Google Scholar] [CrossRef] [PubMed]

- Javadiha, M.; Andujar, C.; Lacasa, E.; Ric, A.; Susin, A. Estimating Player Positions from Padel High-Angle Videos: Accuracy Comparison of Recent Computer Vision Methods. Sensors 2021, 21, 3368. [Google Scholar] [CrossRef] [PubMed]

- Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J.; et al. MMDetection: Open MMLab Detection Toolbox and Benchmark. arXiv 2019, arXiv:1906.07155. [Google Scholar]

- Xiao, B.; Wu, H.; Wei, Y. Simple baselines for human pose estimation and tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 466–481. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef] [Green Version]

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: High Quality Object Detection and Instance Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1483–1498. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Wu, Y.; Kirillov, A.; Massa, F.; Lo, W.Y.; Girshick, R. Detectron2. 2019. Available online: https://github.com/facebookresearch/detectron2 (accessed on 1 November 2021).

- Chen, K.; Pang, J.; Wang, J.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Shi, J.; Ouyang, W.; et al. Hybrid task cascade for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019. [Google Scholar]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520. [Google Scholar]

- Newell, A.; Yang, K.; Deng, J. Stacked hourglass networks for human pose estimation. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 483–499. [Google Scholar]

- Huang, J.; Zhu, Z.; Guo, F.; Huang, G. The Devil Is in the Details: Delving Into Unbiased Data Processing for Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 14–19 June 2020. [Google Scholar]

- Zhang, F.; Zhu, X.; Dai, H.; Ye, M.; Zhu, C. Distribution-aware coordinate representation for human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 7093–7102. [Google Scholar]

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 5693–5703. [Google Scholar]

- Cheng, B.; Xiao, B.; Wang, J.; Shi, H.; Huang, T.S.; Zhang, L. HigherHRNet: Scale-Aware Representation Learning for Bottom-Up Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 5386–5395. [Google Scholar]

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649. [Google Scholar] [CrossRef] [Green Version]

- Zhang, D.; Guo, G.; Huang, D.; Han, J. PoseFlow: A Deep Motion Representation for Understanding Human Behaviors in Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018. [Google Scholar]

- Bergmann, P.; Meinhardt, T.; Leal-Taixe, L. Tracking without bells and whistles. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 941–951. [Google Scholar]

- Šajina, R.; Ivašić-Kos, M. 3D Pose Estimation and Tracking in Handball Actions Using a Monocular Camera. J. Imaging 2022, 8, 308. [Google Scholar] [CrossRef]

- Soto-Fernández, A.; Camerino, O.; Iglesias, X.; Anguera, M.T.; Castañer, M. LINCE PLUS software for systematic observational studies in sports and health. Behav. Res. Methods 2022, 54, 1263–1271. [Google Scholar] [CrossRef]

- Mishra, P.; Eich, M.H. Join processing in relational databases. ACM Comput. Surv. 1992, 24, 63–113. [Google Scholar] [CrossRef] [Green Version]

- Fister, I.; Fister, I.; Mernik, M.; Brest, J. Design and implementation of domain-specific language easytime. Comput. Lang. Syst. Struct. 2011, 37, 151–167. [Google Scholar] [CrossRef]

- Van Deursen, A.; Klint, P. Domain-specific language design requires feature descriptions. J. Comput. Inf. Technol. 2002, 10, 1–17. [Google Scholar] [CrossRef] [Green Version]

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500. [Google Scholar]

- Remohi-Ruiz, J.J. Pádel: Lo Esencial. Nivel Iniciación y Medio; NPQ Editores: Valencia, Spain, 2019. (In Spanish) [Google Scholar]

- Mellado-Arbelo, Ó.; Vidal, E.B.; Usón, M.V. Análisis de las acciones de juego en pádel masculino profesional (Analysis of game actions in professional male padel). Cult. Cienc. Deporte 2019, 14, 191–201. [Google Scholar]

- Ramón-Llin, J.; Guzmán, J.F.; Belloch, S.L.; Vuckovic, G.; James, N. Comparison of distance covered in paddle in the serve team according to performance level. J. Hum. Sport Exerc. 2013, 8, S738–S742. [Google Scholar] [CrossRef]

| Start (f) | End (f) | Duration (f) | Duration (s) | Winner | Points A | Points B | Top Left | Top Right | Bottom Left | Bottom Right |
|---|---|---|---|---|---|---|---|---|---|---|
| 13,606 | 13,820 | 214 | 7.1 | B | 0 | 15 | L Sainz | G Triay | A Sánchez | A Salazar |
| 14,093 | 14,785 | 692 | 23.1 | T | 15 | 15 | L Sainz | G Triay | A Sánchez | A Salazar |
| 15,332 | 16,204 | 872 | 29.1 | T | 30 | 15 | L Sainz | G Triay | A Sánchez | A Salazar |
| 16,932 | 17,004 | 72 | 2.4 | B | 30 | 30 | L Sainz | G Triay | A Sánchez | A Salazar |
| 17,378 | 17,661 | 283 | 9.4 | B | 30 | 40 | L Sainz | G Triay | A Sánchez | A Salazar |

| Frame | Player | Shot Type | Lob |
|---|---|---|---|
| 13,604 | TL | Serve | F |
| 13,633 | BR | D | T |
| 13,656 | TL | B | F |
| 13,676 | BR | D | F |
| 13,696 | TL | VD | F |

| Frame | TL i | TL j | TR i | TR j | BL i | BL j | BR i | BR j | TL x | TL y | TR x | TR y | BL x | BL y | BR x | BR y |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 13,614 | 478 | 202 | 785 | 266 | 401 | 554 | 911 | 553 | 1.89 | 18.53 | 7.49 | 13.60 | 2.50 | 1.60 | 7.87 | 1.66 |
| 13,615 | 477 | 202 | 785 | 266 | 401 | 555 | 912 | 554 | 1.87 | 18.52 | 7.49 | 13.60 | 2.50 | 1.59 | 7.88 | 1.62 |
| 13,616 | 477 | 203 | 785 | 266 | 400 | 553 | 914 | 555 | 1.89 | 18.43 | 7.49 | 13.60 | 2.49 | 1.63 | 7.89 | 1.60 |
| 13,617 | 479 | 204 | 785 | 266 | 399 | 550 | 915 | 556 | 1.94 | 18.35 | 7.49 | 13.59 | 2.47 | 1.70 | 7.90 | 1.57 |
| 13,618 | 480 | 206 | 785 | 266 | 398 | 549 | 918 | 556 | 1.96 | 18.21 | 7.49 | 13.59 | 2.46 | 1.74 | 7.93 | 1.58 |

| Application Domain Concepts | Python Language Translation |
|---|---|
| Match, Set, Game, Point, Shot, Frame | Python classes corresponding to temporal play units. |
| First name, Last name, Gender | Python class properties referring to a player. |
| Start, End, Duration | Python class properties of a play unit. |
| Winner, Player | Python classes (Team, Player). |
| Score | Python class property. |
| Query definition | Python function (decorated). |
| Query type | Python function decorator. |
| Query filter | Python function definition. |
| Query name | Python identifier. |
| Query predicate | Body of a Python function that returns a Boolean value. |
| Query execution | Invocation of the function identified by the Query name. The function takes two parameters: the scope and the attributes. |
| Query scope | Python expression that evaluates to a match or a collection of matches. |
| Query attributes | Python list containing strings representing Python expressions. |
| Query result | Python class that holds the output table. |

| Query Type | Python Decorator | Output DataFrame |
|---|---|---|
| Match query | @match_query | One row for every match that meets the query definition. |
| Set query | @set_query | One row for every set that meets the query definition. |
| Game query | @game_query | One row for every game that meets the query definition. |
| Point query | @point_query | One row for every point that meets the query definition. |
| Shot query | @shot_query | One row for every shot that meets the query definition. |
| Frame query | @frame_query | One row for every frame that meets the query definition. |

| Shot Code | Spanish Name | English Translations |
|---|---|---|
| “s” | “servicio” | “serve” |
| “d” | “derecha” | “drive”, “forehand” |
| “r” | “revés” | “backhand” |
| “ad” | “alambrada derecha” | “right mesh”, “right fence” |
| “ar” | “alambrada revés” | “left mesh”, “left fence” |
| “pld” | “pared lateral de derecha” | “side wall drive” |
| “plr” | “pared lateral de revés” | “side wall backhand” |
| “spd” | “salida de pared de derecha” | “off the wall forehand” |
| “spr” | “salida de pared de revés” | “off the wall backhand” |
| “bpd” | “bajada de pared de derecha” | “off the wall forehand smash” |
| “bpr” | “bajada de pared de revés” | “off the wall backhand smash” |
| “dpa” | “doble pared que abre” | “double wall opening” |
| “dpag” | “doble pared que abre con giro” | “double wall opening with rotation” |
| “dpc” | “doble pared que cierra” | “double wall closing” |
| “cp” | “contrapared” | “back wall boast” |
| “vd” | “volea de derecha” | “drive volley”, “forehand volley” |
| “vr” | “volea de revés” | “backhand volley” |
| “b” | “bandeja” | “defensive smash” |
| “djd” | “dejada” | “stop volley”, “drop shot” |
| “r1” | “remate” | “smash” |
| “r2” | “finta de remate” | “fake smash” |
| “r3” | “remate por 3” | “smash out by 3” |
| “r4” | “remate por 4” | “smash out by 4” |
| “cd” | “contra-ataque de derecha” | “forehand counter-attack” |
| “cr” | “contra-ataque de revés” | “backhand counter-attack” |
| “cpld” | “contrapared lateral derecha” | “right wall boast” |
| “cplr” | “contrapared lateral izquierda” | “left wall boast” |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Javadiha, M.; Andujar, C.; Lacasa, E. A Query Language for Exploratory Analysis of Video-Based Tracking Data in Padel Matches. Sensors 2023, 23, 441. https://doi.org/10.3390/s23010441

Javadiha M, Andujar C, Lacasa E. A Query Language for Exploratory Analysis of Video-Based Tracking Data in Padel Matches. Sensors. 2023; 23(1):441. https://doi.org/10.3390/s23010441

Chicago/Turabian Style: Javadiha, Mohammadreza, Carlos Andujar, and Enrique Lacasa. 2023. "A Query Language for Exploratory Analysis of Video-Based Tracking Data in Padel Matches" Sensors 23, no. 1: 441. https://doi.org/10.3390/s23010441

APA Style: Javadiha, M., Andujar, C., & Lacasa, E. (2023). A Query Language for Exploratory Analysis of Video-Based Tracking Data in Padel Matches. Sensors, 23(1), 441. https://doi.org/10.3390/s23010441