Obstacle Detection Method Based on RSU and Vehicle Camera Fusion

Abstract

1. Introduction

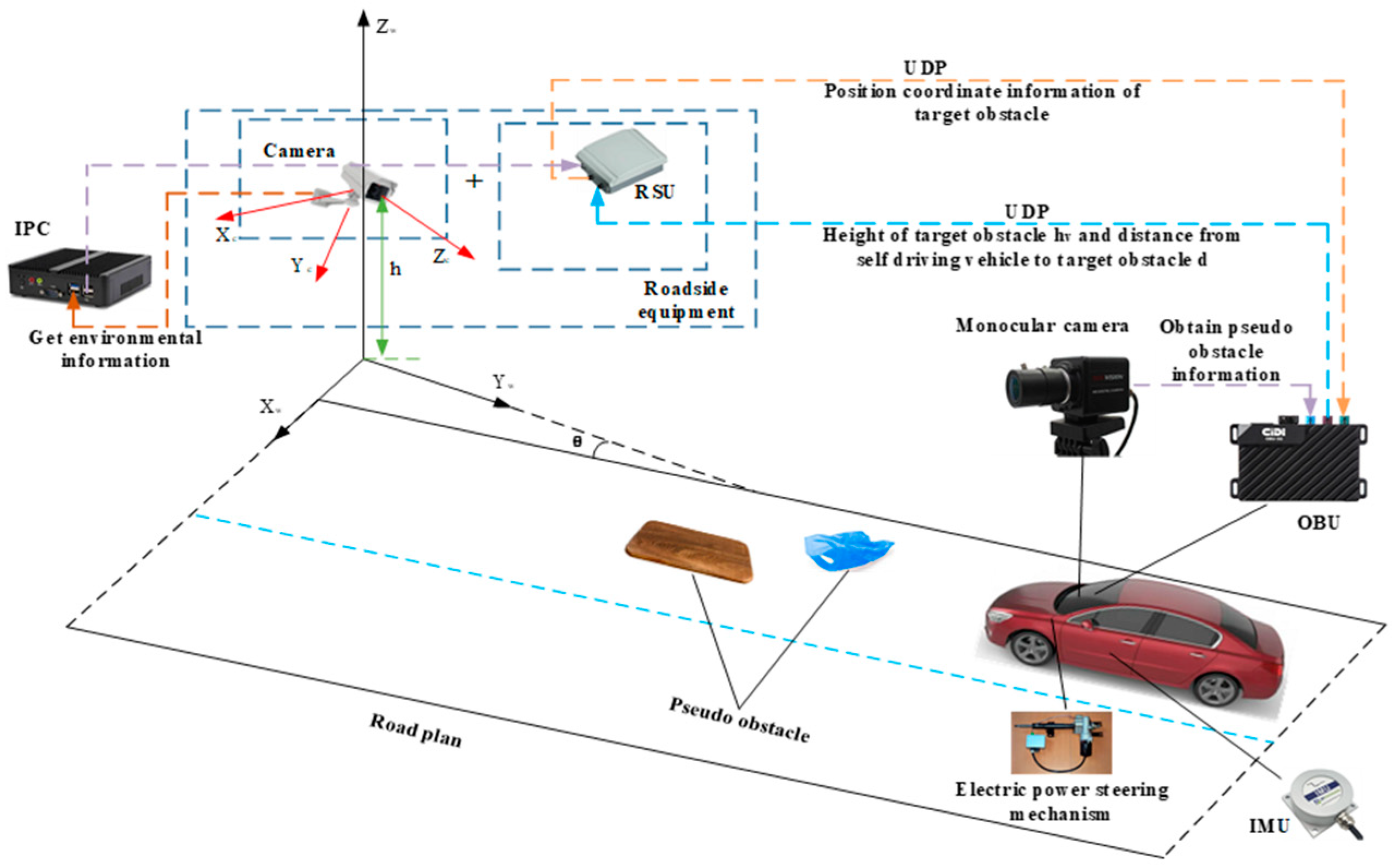

2. Related Works

3. Method

3.1. RSU Obstacle Extraction

- Video framing. To select a test scene suitable for this study, the video is decomposed into an image sequence by sampling frames at a fixed time interval or period.

- Image preprocessing. Each image in the sequence is converted to grayscale by taking a weighted average of the sample values of all its channels. The Gaussian function is then discretized, and its values are used as weights to average the pixels of the grayscale image, which suppresses image noise. To smooth the edge contours, a closing operation is applied to the preprocessed image, which adaptively repairs breaks in the contours of the grayscale image.

- Build the background model. From the image sequence obtained in step (1), a scene image suitable for this study is selected as the initial background image, that is, the background model.

- Calculate the pixel difference and threshold the image. The difference between the gray value of each pixel in the current image frame and the gray value of the corresponding pixel in the background model is calculated. According to Equation (1), each difference is compared with a preset threshold to identify the dynamic pixels in the region. If the difference is greater than the threshold, the pixel belongs to the dynamic target area. If the difference is less than the threshold, the pixel belongs to the background area. Finally, the dynamic target area pixels are combined to obtain the background difference image.

- Calculate the obstacle position coordinates and locate the obstacle. External influences (light changes, wind, etc.) can cause pixels to be falsely recognized as dynamic. To filter these out, a threshold is set on the area by which an object changes between two frames separated by the period step of the image sequence, and a generalized obstacle is identified by comparing its change area with this threshold. The vertical coordinate of the obstacle is the vertical coordinate of the lowest point of the target obstacle. The horizontal coordinate is the arithmetic mean of the maximum and minimum horizontal coordinates at the vertical coordinate of that lowest point. Finally, the points with the minimum abscissa, the maximum abscissa, the minimum ordinate, and the maximum ordinate of the obstacle in the background difference image are extracted. These four points define a frame, and the area inside the frame is the obstacle position area. Figure 4 shows the overall flowchart of RSU generalized obstacle extraction.
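The RSU extraction steps above can be sketched roughly as follows. This is a minimal NumPy illustration, not the authors' implementation: the function names, the equal channel weights, the kernel size and sigma, the use of a pixel count as the change area, and the treatment of all dynamic pixels as a single obstacle are assumptions made for the example, and the closing operation is omitted for brevity. The lowest point is taken to be the largest row index, which assumes image coordinates with the origin at the top left.

```python
import numpy as np

def to_gray(img):
    """Average the channel samples of an H x W x C image (equal weights assumed)."""
    return img.astype(np.float64).mean(axis=2)

def gaussian_kernel(size=5, sigma=1.0):
    """Discretize a 1-D Gaussian and normalize the weights so they sum to 1."""
    x = np.arange(size) - size // 2
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()

def smooth(gray, size=5, sigma=1.0):
    """Gaussian-weighted average, applied along rows and then along columns."""
    k = gaussian_kernel(size, sigma)
    padded = np.pad(gray, size // 2, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def background_difference(frame_gray, background_gray, threshold):
    """Mark pixels whose absolute gray difference exceeds the threshold as dynamic."""
    return np.abs(frame_gray - background_gray) > threshold

def locate_obstacle(mask, min_area):
    """Return ((x, y), (x_min, y_min, x_max, y_max)), or None if the area is too small."""
    ys, xs = np.nonzero(mask)
    if ys.size < min_area:  # hypothetical area test: number of dynamic pixels
        return None
    y_low = ys.max()  # lowest point of the obstacle in image coordinates
    xs_low = xs[ys == y_low]
    x_pos = (xs_low.max() + xs_low.min()) / 2.0  # mean of extreme abscissas on that row
    frame = (int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max()))
    return (float(x_pos), int(y_low)), frame

# Synthetic example: a bright block on a uniform background.
background = np.full((40, 60, 3), 100, dtype=np.uint8)
frame = background.copy()
frame[10:30, 20:35] = 200
mask = background_difference(smooth(to_gray(frame)), smooth(to_gray(background)), threshold=40)
result = locate_obstacle(mask, min_area=20)
```

In this synthetic case the returned frame coincides with the inserted block, and the position is the midpoint of its bottom row.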

3.2. Vehicle-Side Obstacle Detection Method Based on VIDAR

3.2.1. An Obstacle Region Extraction Method Based on MSER Fast Image Matching

- The MSER algorithm is used to extract the maximally stable extremal regions.

- Calculate the region range difference for the two frames of images collected in the experiment. Given the MSER region sets of the two frames, the set of differences between the range of the i-th MSER region in the previous frame and the ranges of the unmatched regions in the next frame is formed. This set is then normalized.

- Calculate the region set spacing. Given the centroid sets of the MSER regions in the two frames, the set of distances from each MSER region in the previous frame to the unmatched regions in the next frame is formed. This set is then normalized.

- Extract the matching region. For each MSER in the previous frame, a set of matching values is formed, and the MSER with the minimum matching value is extracted as the matching region.

- An object is selected as the clustering center [32]. The Euclidean distance between each obstacle feature point and the cluster center is calculated according to Equation (4), and the region to which the point belongs is determined from the distances between the clustering center and the regions. A distance threshold is set, and the data whose distance from the clustering center is less than the threshold are grouped into one class.

- The cluster center of each class is recalculated according to Equation (5).

- Steps 5 and 6 are repeated for the obstacle feature points until the set of cluster centroids no longer changes. Figure 5 shows the overall flowchart of obstacle extraction.
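The clustering loop in steps 5 to 7 can be sketched as below. This is an illustrative approximation only: the function name `threshold_cluster`, the rule used to seed the centers, and the use of the class mean as the recalculated center are assumptions, since Equations (4) and (5) are not reproduced here. The MSER matching of steps 1 to 4 is not covered.

```python
import numpy as np

def threshold_cluster(points, threshold, max_iter=100):
    """Group 2-D feature points around centers using a Euclidean distance threshold."""
    points = np.asarray(points, dtype=float)
    # Seeding (assumption): a point at least `threshold` away from every
    # existing center starts a new class.
    centers = [points[0]]
    for p in points[1:]:
        if min(np.linalg.norm(p - c) for c in centers) >= threshold:
            centers.append(p)
    centers = np.array(centers)
    labels = np.full(len(points), -1)
    for _ in range(max_iter):
        # Euclidean distance from every point to every center.
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        nearest = dists.argmin(axis=1)
        # Points closer than the threshold join the nearest class; others stay unassigned (-1).
        labels = np.where(dists.min(axis=1) < threshold, nearest, -1)
        # Recalculate each center as the mean of its members (assumed form of the update).
        new_centers = centers.copy()
        for k in range(len(centers)):
            members = points[labels == k]
            if len(members):
                new_centers[k] = members.mean(axis=0)
        if np.allclose(new_centers, centers):  # centroid set no longer changes
            break
        centers = new_centers
    return labels, centers

feature_points = [(0, 0), (1, 0), (0, 1), (10, 10), (11, 10), (10, 11)]
labels, centers = threshold_cluster(feature_points, threshold=3.0)
```

With these six hypothetical points and a threshold of 3, the loop settles on two classes whose centers are the means of the two groups.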

3.2.2. Static Obstacle Detection

3.2.3. Dynamic Obstacle Detection

3.3. Data Interaction Based on UDP Protocol

3.3.1. Data Acquisition at RSU Side and OBU Side

3.3.2. UDP Protocol Transmission

UDP adopts a connectionless mode.

3.3.3. UDP-Based Data Transfer
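As a rough illustration of a connectionless exchange of obstacle data over UDP, the sketch below sends one datagram over the loopback interface. The JSON payload layout, the helper names, and the obstacle record fields are hypothetical and are not taken from the paper. In a setup like the one tabulated for the experiment, the loopback address would be replaced by the RSU-side or on-board IPv4 address, with a port chosen by the user.

```python
import json
import socket

def send_obstacles(sock, address, obstacles):
    """Serialize obstacle records as JSON and send them in a single UDP datagram."""
    payload = json.dumps({"obstacles": obstacles}).encode("utf-8")
    sock.sendto(payload, address)  # no connection setup: UDP is connectionless

def receive_obstacles(sock, bufsize=4096):
    """Block until one datagram arrives and decode the obstacle records."""
    data, sender = sock.recvfrom(bufsize)
    return json.loads(data.decode("utf-8"))["obstacles"], sender

# Loopback demonstration; port 0 lets the operating system pick a free port.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(2.0)  # avoid blocking forever if the datagram is lost
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

send_obstacles(sender, receiver.getsockname(), [{"x": 27.0, "y": 29}])
received, _ = receive_obstacles(receiver)

sender.close()
receiver.close()
```

Because UDP gives no delivery guarantee, a real receiver would need to tolerate lost or reordered datagrams; the timeout above is only a minimal safeguard.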

4. Experiment and Result Analysis

4.1. RSU-End Obstacle Detection Test

4.2. VIDAR-Based Vehicle-End Obstacle Detection Test

4.3. Experiment of Obstacle Detection Based on RSU and Vehicle Camera

5. Analysis of the Effect of Obstacle Detection Method Based on the Fusion of RSU and Vehicle Camera

5.1. Detection Accuracy Analysis

5.2. Detection Speed Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Xu, Y.; Gao, S.; Li, S.; Tan, D.; Guo, D.; Wang, Y.; Chen, Q. Vision-IMU Based Obstacle Detection Method. In Green Intelligent Transportation Systems GITSS 2017; Lecture Notes in Electrical Engineering; Springer: Singapore, 2019; Volume 503, pp. 475–487.

- Sabir, Z.; Dafrallah, S.; Amine, A. A Novel Solution to Prevent Accidents using V2I in Moroccan Smart Cities. In Proceedings of the International Conference on Computational Intelligence and Knowledge Economy (ICCIKE), Dubai, United Arab Emirates, 11–12 December 2019; pp. 621–625.

- Rateke, T.; von Wangenheim, A. Road obstacles positional and dynamic features extraction combining object detection, stereo disparity maps and optical flow data. Int. J. Comput. Appl. 2020, 31, 73.

- Song, T.-J.; Jeong, J.; Kim, J.-H. End-to-End Real-Time Obstacle Detection Network for Safe Self-Driving via Multi-Task Learning. IEEE Trans. Intell. Transp. Syst. 2022, 23, 16318–16329.

- Gholami, F.; Khanmirza, E.; Riahi, M. Real-time obstacle detection by stereo vision and ultrasonic data fusion. Measurement 2022, 190, 110718.

- Weon, I.-S.; Lee, S.-G.; Ryu, J.-K. Object Recognition Based Interpolation With 3D LIDAR and Vision for Autonomous Driving of an Intelligent Vehicle. IEEE Access 2020, 8, 65599–65608.

- Bansal, V.; Balasubramanian, K.; Natarajan, P. Obstacle avoidance using stereo vision and depth maps for visual aid devices. SN Appl. Sci. 2020, 2, 1131.

- Xu, C.; Hu, X. Real time detection algorithm of parking slot based on deep learning and fisheye image. In Proceedings of the Journal of Physics: Conference Series, Sanya, China, 20–22 February 2020; p. 012037.

- Huu, P.N.; Thi, Q.P.; Quynh, P.T.T. Proposing Lane and Obstacle Detection Algorithm Using YOLO to Control Self-Driving Cars on Advanced Networks. Adv. Multimedia 2022, 2022, 3425295.

- Dhouioui, M.; Frikha, T. Design and implementation of a radar and camera-based obstacle classification system using machine learning techniques. JRTIP 2021, 18, 2403–2415.

- Xue, J.; Cheng, F.; Li, Y.; Song, Y.; Mao, T. Detection of Farmland Obstacles Based on an Improved YOLOv5s Algorithm by Using CIoU and Anchor Box Scale Clustering. Sensors 2022, 22, 1790.

- Sengar, S.S.; Mukhopadhyay, S. Moving object detection based on frame difference and W4. IJIGSP 2017, 11, 1357–1364.

- Jiang, G.; Xu, Y.; Gong, X.; Gao, S.; Sang, X.; Zhu, R.; Wang, L.; Wang, Y. An Obstacle Detection and Distance Measurement Method for Sloped Roads Based on VIDAR. J. Robot. 2022, 2022, 5264347.

- Jiang, G.; Xu, Y.; Sang, X.; Gong, X.; Gao, S.; Zhu, R.; Wang, L.; Wang, Y. An Improved VM Obstacle Identification Method for Reflection Road. J. Robot. 2022, 2022, 3641930.

- Steinbaeck, J.; Druml, N.; Herndl, T.; Loigge, S.; Marko, N.; Postl, M.; Kail, G.; Hladik, R.; Hechenberger, G.; Fuereder, H. ACTIVE—Autonomous Car to Infrastructure Communication Mastering Adverse Environments. In Proceedings of the Sensor Data Fusion: Trends, Solutions, Applications (SDF) Conference, Bonn, Germany, 15–17 October 2019; pp. 1–6.

- Mouawad, N.; Mannoni, V.; Denis, B.; da Silva, A.P. Impact of LTE-V2X Connectivity on Global Occupancy Maps in a Cooperative Collision Avoidance (CoCA) System. In Proceedings of the IEEE 93rd Vehicular Technology Conference (VTC2021-Spring), Helsinki, Finland, 25–28 April 2021; pp. 1–5.

- Ghovanlooy Ghajar, F.; Salimi Sratakhti, J.; Sikora, A. SBTMS: Scalable Blockchain Trust Management System for VANET. Appl. Sci. 2021, 11, 11947.

- Ağgün, F.; Çibuk, M.; UR-Rehman, S. ReMAC: A novel hybrid and reservation-based MAC protocol for VANETs. Turk. J. Electr. Eng. Comput. Sci. 2020, 28, 1886–1904.

- Ke, Q.; Kanade, T. Transforming camera geometry to a virtual downward-looking camera: Robust ego-motion estimation and ground-layer detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Madison, WI, USA, 18–20 June 2003; Volume 1.

- Boyoon, J.; Sukhatme, G.S. Detecting Moving Objects Using a Single Camera on a Mobile Robot in an Outdoor Environment. In Proceedings of the 8th Conference on Intelligent Autonomous Systems, Amsterdam, The Netherlands, 10–13 March 2004; pp. 980–987.

- Wybo, S.; Bendahan, R.; Bougnoux, S.; Vestri, C.; Abad, F.; Kakinami, T. Movement Detection for Safer Backward Maneuvers. In Proceedings of the IEEE Intelligent Vehicles Symposium, Meguro-Ku, Japan, 13–15 June 2006; pp. 453–459.

- Nakasone, R.; Nagamine, N.; Ukai, M.; Mukojima, H.; Deguchi, D.; Murase, H. Frontal Obstacle Detection Using Background Subtraction and Frame Registration. Q. Rep. RTRI 2017, 58, 298–302.

- Zhang, L.; Li, D. Research on Mobile Robot Target Recognition and Obstacle Avoidance Based on Vision. J. Int. Technol. 2018, 19, 1879–1892.

- Qi, G.; Wang, H.; Haner, M.; Weng, C.; Chen, S.; Zhu, Z. Convolutional neural network based detection and judgement of environmental obstacle in vehicle operation. CAAI TIT 2019, 4, 80–91.

- Li, J.; Shi, X.; Wang, J.; Yan, M. Adaptive road detection method combining lane line and obstacle boundary. IET Image Process. 2020, 14, 2216–2226.

- Lan, J.; Jiang, Y.; Fan, G.; Yu, D.; Zhang, Q. Real-time automatic obstacle detection method for traffic surveillance in urban traffic. J. Signal Process. Syst. 2016, 82, 357–371.

- He, D.; Zou, Z.; Chen, Y.; Liu, B.; Miao, J. Rail Transit Obstacle Detection Based on Improved CNN. IEEE Trans. Instrum. Meas. 2021, 70, 2515114.

- Yuxi, F.; Guotian, H.; Qizhou, W. A New Motion Obstacle Detection Based Monocular-Vision Algorithm. In Proceedings of the International Conference on Computational Intelligence and Applications (ICCIA), Chongqing, China, 20–29 August 2016; pp. 31–35.

- Bertozzi, M.; Broggi, A.; Medici, P.; Porta, P.P.; Vitulli, R. Obstacle detection for start-inhibit and low speed driving. In Proceedings of the Intelligent Vehicles Symposium, Las Vegas, NV, USA, 6–8 June 2005; pp. 569–574.

- Cui, M. Introduction to the k-means clustering algorithm based on the elbow method. Account. Audit. Financ. 2020, 1, 5–8.

- Syakur, M.A.; Khotimah, B.K.; Rochman, E.M.S.; Satoto, B.D. Integration k-means clustering method and elbow method for identification of the best customer profile cluster. In Proceedings of the IOP Conference Series: Materials Science and Engineering, 2nd International Conference on Vocational Education and Electrical Engineering (ICVEE), Surabaya, Indonesia, 9 November 2017; IOP Publishing: Bristol, UK, 2018; Volume 336, pp. 1–6.

- Xu, J.; Zhang, Y.; Miao, D. Three-way confusion matrix for classification: A measure driven view. Inf. Sci. 2020, 507, 772–794.

- Patel, A.K.; Chatterjee, S.; Gorai, A.K. Development of machine vision-based ore classification model using support vector machine (SVM) algorithm. Arab. J. Geosci. 2017, 10, 107.

- Abdat, F.; Amouroux, M.; Guermeur, Y.; Blondel, W. Hybrid feature selection and SVM-based classification for mouse skin precancerous stages diagnosis from bimodal spectroscopy. Opt. Express 2012, 20, 228.

| Camera Parameter Name | Parameter Symbol | Numerical Value | Unit |
|---|---|---|---|
| Pixel size of the photosensitive chip | p | 1.4 | μm |
| Camera mounting height | h | 6.572 | cm |
| Camera pitch angle | γ | 0.132 | rad |
| Effective focal length of camera | f | 6.779 | mm |
| Minimum height of vehicle chassis | hc | 14 | cm |

| Feature Point | d1/cm | d2/cm | ∆d/cm | ∆l/cm | hv/cm |
|---|---|---|---|---|---|
| 1 | 20.05 | 19.21 | 2.00 | 1.16 | 22.21 |
| 2 | 19.99 | 19.07 | 2.00 | 1.08 | 20.89 |
| 3 | 19.97 | 18.98 | 2.00 | 1.01 | 19.90 |
| 4 | 15.77 | 15.55 | 2.00 | 1.78 | 10.05 |
| 5 | 15.75 | 15.59 | 2.00 | 1.84 | 10.02 |
| 6 | 15.74 | 15.59 | 2.00 | 1.85 | 9.98 |
| 7 | 15.73 | 15.62 | 2.00 | 1.89 | 9.95 |
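The ∆l column in the table above is numerically consistent with ∆l = ∆d − (d1 − d2). This relation is inferred from the tabulated numbers rather than quoted from the text, and the helper name `delta_l` is hypothetical. A short check of the seven rows, with all values in centimetres:

```python
# Rows copied from the table: (d1, d2, delta_d, tabulated delta_l), all in cm.
rows = [
    (20.05, 19.21, 2.00, 1.16),
    (19.99, 19.07, 2.00, 1.08),
    (19.97, 18.98, 2.00, 1.01),
    (15.77, 15.55, 2.00, 1.78),
    (15.75, 15.59, 2.00, 1.84),
    (15.74, 15.59, 2.00, 1.85),
    (15.73, 15.62, 2.00, 1.89),
]

def delta_l(d1, d2, delta_d):
    """Inferred relation: delta_l = delta_d - (d1 - d2)."""
    return delta_d - (d1 - d2)

# Round to the two decimals used in the table before comparing.
computed = [round(delta_l(d1, d2, dd), 2) for d1, d2, dd, _ in rows]
tabulated = [dl for *_, dl in rows]
max_gap = max(abs(c - t) for c, t in zip(computed, tabulated))
```

The hv column is not reproduced here, since it depends on a height formula that this excerpt does not give.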

| Name | Specific Description |
|---|---|
| RSU-end laptop | Intel Core i5-6200U (Lenovo Co., Ltd., Beijing, China) |
| On-board unit-end laptop | Intel Core i7-6500U (Lenovo Co., Ltd., Beijing, China) |
| Camera | SONY IMX179 (Kexun Limited, Hong Kong, China) |
| RSU | MOKAR I-Classic (Huali Zhixing, Wuhan, China) |
| IMU | HEC295 (Weite Intelligent Technology Co., Ltd., Shenzhen, China) |
| STM32 single chip microcomputer | STM32F103VET6 CAN RS485 (Jiaqin Electronics, Shenzhen, China) |
| OBU | VSC-305-00D (Huali Zhixing, Wuhan, China) |
| System support | Windows 7/10 |
| Applicable operating temperature | 24 °C |
| IPv4 address of RSU side | 192.168.1.103 |
| IPv4 address of on-board equipment | 192.168.1.106 |

| Feature Point | d1/cm | d2/cm | ∆d/cm | ∆l/cm | hv/cm |
|---|---|---|---|---|---|
| 1 | 10.93 | 10.80 | 2.00 | 1.87 | 9.97 |
| 2 | 11.02 | 10.87 | 2.00 | 1.85 | 9.99 |
| 3 | 11.02 | 10.83 | 2.00 | 1.81 | 10.02 |
| 4 | 11.01 | 10.82 | 2.00 | 1.81 | 10.02 |

| Detection Result | Actual Obstacle Category: True | Actual Obstacle Category: None or False |
|---|---|---|
| True | | |
| None or false | | |

| Detection Method | Detection Result | Actual Category: True | Actual Category: None or False | Accuracy: DT | Accuracy: RT | Accuracy: OT |
|---|---|---|---|---|---|---|
| Obstacle detection method based on VIDAR | True | 6828 | 106 | 0.984 | 0.980 | 0.968 |
| | None or false | 140 | 282 | | | |
| RSU-based obstacle detection method | True | 5976 | 89 | 0.985 | 0.826 | 0.817 |
| | None or false | 1258 | 33 | | | |
| Obstacle detection method based on RSU and vehicle camera | True | 6934 | 13 | 0.998 | 0.976 | 0.991 |
| | None or false | 164 | 245 | | | |
| YOLOv5 | True | 6798 | 104 | 0.985 | 0.974 | 0.961 |
| | None or false | 180 | 274 | | | |
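The DT, RT, and OT columns can be related to the confusion-matrix counts with a short calculation. The sketch below assumes DT = TP / (TP + FP), RT = TP / (TP + FN), and OT = (TP + TN) / total, where TP, FP, FN, and TN name the four cells of each method's block. These definitions are an interpretation, not quoted from the text. Under them, the RSU-only and YOLOv5 rows are reproduced to three decimals, while not every entry in the other two rows is reproduced exactly.

```python
def detection_metrics(tp, fp, fn, tn):
    """Assumed definitions: DT as precision, RT as recall, OT as overall accuracy."""
    total = tp + fp + fn + tn
    return {
        "DT": tp / (tp + fp),      # share of reported obstacles that are real
        "RT": tp / (tp + fn),      # share of real obstacles that are reported
        "OT": (tp + tn) / total,   # share of all samples classified correctly
    }

# Counts copied from the table (detected True / actual True = tp, and so on).
rsu = detection_metrics(tp=5976, fp=89, fn=1258, tn=33)
yolo = detection_metrics(tp=6798, fp=104, fn=180, tn=274)

rsu_rounded = {name: round(value, 3) for name, value in rsu.items()}
yolo_rounded = {name: round(value, 3) for name, value in yolo.items()}
```

Read this way, the low RT of the RSU-only method comes from its 1258 missed obstacles, which is the gap that fusing RSU and vehicle camera data is meant to close.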

| Method | Obstacle Detection Method Based on VIDAR | Obstacle Detection Method Based on RSU | RSU and Vehicle Camera Integration | YOLOv5 |
|---|---|---|---|---|
| Detection Time/s | 0.364 | 0.371 | 0.367 | 0.359 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ding, S.; Xu, Y.; Zhang, Q.; Yu, J.; Sun, T.; Ni, J.; Shi, S.; Kong, X.; Zhu, R.; Wang, L.; et al. Obstacle Detection Method Based on RSU and Vehicle Camera Fusion. Sensors 2023, 23, 4920. https://doi.org/10.3390/s23104920
