Surgical Instrument Detection Algorithm Based on Improved YOLOv7x

Abstract

1. Introduction

- A surgical instrument detection model was proposed to help hospitals automate the counting of surgical instruments.

- The model accurately identifies 26 commonly used surgical instruments and performs well on instruments with similar shapes and under dense occlusion.

- The OSI26 surgical instrument data set was created and made public (address: https://aistudio.baidu.com/aistudio/datasetdetail/198164, accessed on 4 April 2023). It contains 452 images covering 26 types of surgical instruments, with 60 instances of each type. To improve the model’s recognition accuracy and generalization ability, the instruments were arranged with varying degrees of dense occlusion, and images were collected under multiple lighting conditions. In addition, several data augmentation techniques were applied to expand the data set.

- The RepLK Block and ODConv structures were introduced into YOLOv7x to further improve its recognition accuracy, and experiments were conducted on the OSI26 data set. The results show that the improved model performs substantially better than the original model (e.g., AP rises from 87.2% to 92.0% in the ablation experiments) and also outperforms other advanced object detection algorithms.
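The data set description does not name the augmentation techniques that were used. As a minimal sketch, assuming images stored as H × W × 3 uint8 arrays and YOLO-style labels (class, normalized x-centre, y-centre, width, height), two common augmentations are shown: a brightness change, which loosely mimics varying lighting conditions, and a horizontal flip, which must also mirror the box coordinates. The functions `hflip` and `adjust_brightness` and the example label are hypothetical.

```python
import numpy as np

def hflip(image, boxes):
    """Mirror an H x W x C image left-right and update YOLO-style boxes.

    boxes: array of rows (class_id, x_center, y_center, width, height),
    with coordinates normalized to [0, 1] (an assumed label format).
    """
    flipped = image[:, ::-1, :].copy()
    out = boxes.copy()
    out[:, 1] = 1.0 - out[:, 1]  # only the x-centre changes under a horizontal flip
    return flipped, out

def adjust_brightness(image, factor):
    """Scale pixel intensities to imitate a different lighting condition."""
    return np.clip(image.astype(np.float32) * factor, 0, 255).astype(np.uint8)

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(480, 640, 3)).astype(np.uint8)
boxes = np.array([[3, 0.25, 0.5, 0.1, 0.3]])  # one hypothetical instrument of class 3

aug_img, aug_boxes = hflip(adjust_brightness(img, 1.3), boxes)
# aug_boxes keeps class, y-centre, width and height; the x-centre becomes 0.75
```

A horizontal flip only moves the normalized x-centre, so width and height carry over unchanged; geometric augmentations such as rotation or cropping would need more careful label updates.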

2. Related Work

3. Materials and Methods

3.1. Image Acquisition

3.2. Construction of Surgical Instrument Detection Model

3.2.1. YOLOv7x

3.2.2. RepLKNet

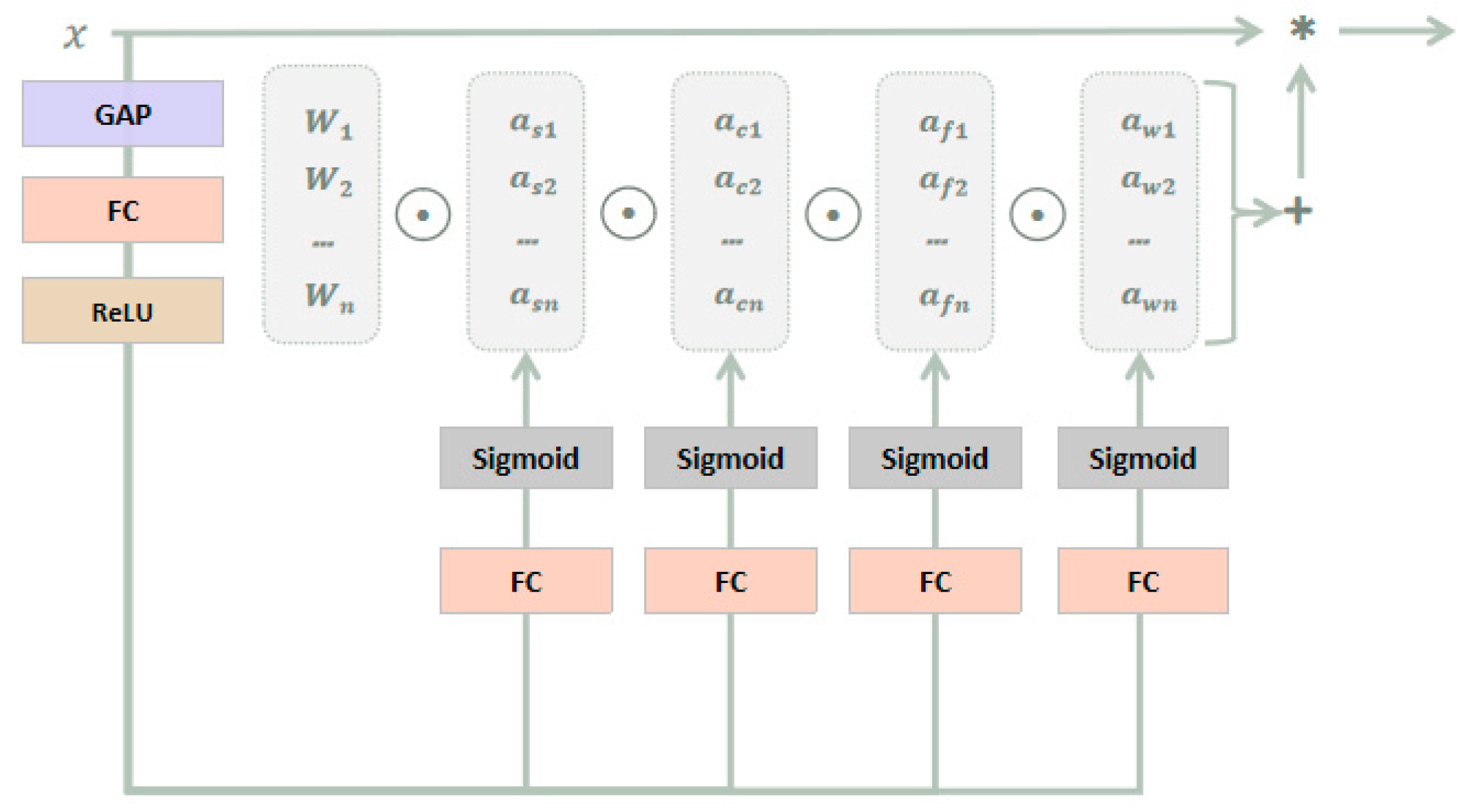
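RepLKNet trains a large depthwise kernel alongside a parallel small kernel and merges the two into a single large kernel for inference (structural re-parameterization). Because a depthwise convolution treats each channel independently, one channel is enough to illustrate the idea. The sketch below, assuming no BatchNorm, stride 1 and zero "same" padding, checks numerically that the two-branch output equals the output of the merged kernel; the kernel sizes (31 and 5) and the helper names are illustrative choices, not details of this paper's RepLK Block.

```python
import numpy as np

def conv2d_same(x, k):
    """Single-channel 2D cross-correlation with zero 'same' padding (odd square kernel)."""
    p = k.shape[0] // 2
    xp = np.pad(x, p)  # zero padding on every side
    h, w = x.shape
    out = np.empty_like(x, dtype=float)
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(xp[i:i + k.shape[0], j:j + k.shape[1]] * k)
    return out

def merge_small_into_large(k_large, k_small):
    """Zero-pad the small kernel to the large kernel's size, centred, and add them."""
    pad = (k_large.shape[0] - k_small.shape[0]) // 2
    return k_large + np.pad(k_small, pad)

rng = np.random.default_rng(0)
x = rng.standard_normal((16, 16))
k31 = rng.standard_normal((31, 31))  # large depthwise kernel
k5 = rng.standard_normal((5, 5))     # parallel small kernel used during training

y_train = conv2d_same(x, k31) + conv2d_same(x, k5)          # two branches
y_infer = conv2d_same(x, merge_small_into_large(k31, k5))   # one merged kernel
print(np.allclose(y_train, y_infer))
```

The merge works because convolution is linear in the kernel: centring the small kernel inside the large one aligns both receptive fields, so summing kernels is the same as summing branch outputs.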

3.2.3. ODConv
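ODConv (omni-dimensional dynamic convolution) replaces a static kernel with an input-dependent combination of n candidate kernels, weighted by four attentions computed from the globally pooled input: a spatial attention over the k × k positions, an input-channel attention, an output-filter attention, and a softmax attention over the n kernels. The NumPy sketch below only builds the aggregated kernel for one input. The projection matrices are random stand-ins for learned weights, normalization layers are omitted, and the spatial, channel and filter attentions are shared across the n kernels as a simplification. The aggregated kernel would then be applied as an ordinary convolution.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def odconv_kernel(x, weights, fc, heads):
    """Aggregate n candidate kernels into one input-dependent kernel.

    x:       input feature map, shape (C_in, H, W)
    weights: candidate kernels, shape (n, C_out, C_in, k, k)
    fc:      squeeze projection, shape (r, C_in)   (hypothetical parameters)
    heads:   projections from the r-dim descriptor to each attention
    """
    k = weights.shape[-1]
    z = np.maximum(fc @ x.mean(axis=(1, 2)), 0.0)      # global average pool -> FC -> ReLU
    a_s = sigmoid(heads["spatial"] @ z).reshape(k, k)  # spatial attention (k x k)
    a_c = sigmoid(heads["in_ch"] @ z)                  # input-channel attention (C_in)
    a_f = sigmoid(heads["out_ch"] @ z)                 # output-filter attention (C_out)
    a_w = softmax(heads["kernel"] @ z)                 # kernel-number attention (n)
    shared = a_f[:, None, None, None] * a_c[None, :, None, None] * a_s[None, None, :, :]
    return shared * np.einsum("n,noikl->oikl", a_w, weights)

rng = np.random.default_rng(0)
c_in, c_out, k, n, r = 8, 16, 3, 4, 4
x = rng.standard_normal((c_in, 20, 20))
weights = rng.standard_normal((n, c_out, c_in, k, k))
fc = rng.standard_normal((r, c_in))
heads = {
    "spatial": rng.standard_normal((k * k, r)),
    "in_ch": rng.standard_normal((c_in, r)),
    "out_ch": rng.standard_normal((c_out, r)),
    "kernel": rng.standard_normal((n, r)),
}
w = odconv_kernel(x, weights, fc, heads)
print(w.shape)  # (16, 8, 3, 3)
```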

3.3. Improved Model

4. Results and Discussion

4.1. Evaluation Indicators
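The results tables report AP together with AP50, AP75 and APL. Assuming these follow the COCO convention, a detection counts as a true positive at a given threshold only if its intersection over union (IoU) with a ground-truth box of the same class reaches that threshold; AP50 and AP75 use thresholds of 0.50 and 0.75, AP averages over thresholds from 0.50 to 0.95 in steps of 0.05, and APL restricts the evaluation to large objects. The sketch below shows how a single prediction can count at AP50 but not at AP75; the box coordinates are made up for illustration.

```python
def iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

gt = (0, 0, 10, 10)
pred = (2, 0, 12, 10)        # shifted 2 units to the right
overlap = iou(gt, pred)      # intersection 80, union 120

# COCO-style thresholds 0.50, 0.55, ..., 0.95
thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]
matched = {t: overlap >= t for t in thresholds}
print(round(overlap, 3))                # 0.667
print(matched[0.5], matched[0.75])      # True False
```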

4.2. Ablation Experiments

4.3. Model Performance Comparison

4.4. Confusion Matrix Evaluation

4.5. Limitation and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| RepLK Block | ODConv | P | R | F1 | AP | AP50 | AP75 | APL |
|---|---|---|---|---|---|---|---|---|
| - | - | 83.9% | 94.2% | 88.8% | 87.2% | 94.6% | 93.0% | 87.2% |
| - | √ | 91.9% | 93.5% | 92.7% | 89.9% | 97.8% | 95.3% | 89.9% |
| √ | - | 91.9% | 97.6% | 94.7% | 90.3% | 98.3% | 96.7% | 90.3% |
| √ | √ | 95.1% | 97.6% | 96.3% | 92.0% | 99.8% | 98.3% | 92.0% |
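The F1 column is the harmonic mean of precision and recall, F1 = 2PR/(P + R). A quick arithmetic check against the four rows of the ablation table above reproduces every reported F1 value to one decimal place:

```python
rows = {
    "baseline": (83.9, 94.2, 88.8),
    "ODConv only": (91.9, 93.5, 92.7),
    "RepLK Block only": (91.9, 97.6, 94.7),
    "RepLK Block + ODConv": (95.1, 97.6, 96.3),
}

def f1(precision, recall):
    """Harmonic mean of precision and recall (inputs and output in percent)."""
    return 2 * precision * recall / (precision + recall)

for name, (p, r, reported) in rows.items():
    print(f"{name}: computed F1 = {f1(p, r):.1f}%, reported {reported}%")
```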

| RepLK Block | ODConv | P | R | F1 | AP | AP50 | AP75 | APL |
|---|---|---|---|---|---|---|---|---|
| - | - | 88.4% | 91.9% | 90.1% | 88.4% | 95.5% | 94.3% | 88.4% |
| - | √ | 89.4% | 97.3% | 93.2% | 91.0% | 98.3% | 97.7% | 91.0% |
| √ | - | 94.3% | 95.4% | 94.9% | 91.0% | 98.2% | 97.4% | 91.0% |
| √ | √ | 92.6% | 97.0% | 94.7% | 91.5% | 99.1% | 98.2% | 91.5% |

| Models | Model Backbone | Image Size | AP | AP50 | AP75 | APL | Iteration Number |
|---|---|---|---|---|---|---|---|
| YOLOv5l | CSPDarknet53 | 640 × 640 | 91.5% | 98.9% | 97.1% | 91.5% | 150 |
| YOLOv7 | CSPDarknet53 | 640 × 640 | 82.7% | 90.7% | 87.5% | 82.7% | 150 |
| YOLOv7x | CSPDarknet53 | 640 × 640 | 87.2% | 94.6% | 93.0% | 87.2% | 150 |
| YOLOX-tiny | Darknet53 | 640 × 640 | 79.2% | 96.6% | 92.3% | 79.2% | 150 |
| YOLOv6n | EfficientRep | 640 × 640 | 90.1% | 98.7% | 96.0% | 90.1% | 150 |
| Faster RCNN | ResNet101 | 640 × 640 | 87.3% | 97.9% | 96.6% | 87.3% | 150 |
| DETR | ResNet50 | 640 × 640 | 86.1% | 94.8% | 93.6% | 86.1% | 150 |
| Dynamic RCNN | ResNet50 | 640 × 640 | 86.4% | 98.4% | 96.0% | 86.4% | 150 |
| Improved YOLOv7x | CSPDarknet53 | 640 × 640 | 92.0% | 99.8% | 98.3% | 92.0% | 150 |

| Models | Image Size | Params | FLOPs | AP | AP50 | AP75 | APL |
|---|---|---|---|---|---|---|---|
| YOLOv5l | 640 × 640 | 46.2 M | 108.1 G | 90.7% | 97.0% | 96.1% | 90.7% |
| YOLOv7 | 640 × 640 | 36.9 M | 104.7 G | 83.5% | 90.5% | 89.4% | 83.5% |
| YOLOv7x | 640 × 640 | 71.3 M | 189.9 G | 88.4% | 95.5% | 94.3% | 88.4% |
| YOLOX-tiny | 640 × 640 | 5.06 M | 6.45 G | 77.6% | 96.0% | 90.8% | 77.6% |
| YOLOv6n | 640 × 640 | 4.63 M | 11.36 G | 88.0% | 97.9% | 94.1% | 88.0% |
| Faster RCNN | 640 × 640 | 60.5 M | 283.1 G | 88.0% | 98.5% | 97.5% | 88.0% |
| DETR | 640 × 640 | 41.3 M | 91.6 G | 86.9% | 95.8% | 92.6% | 86.9% |
| Dynamic RCNN | 640 × 640 | 41.3 M | 206.8 G | 81.5% | 95.6% | 93.2% | 81.5% |
| Improved YOLOv7x | 640 × 640 | 80.4 M | 204.0 G | 91.5% | 99.1% | 98.2% | 91.5% |
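The comparison table above also shows what the accuracy gain costs. Using only the YOLOv7x and Improved YOLOv7x rows, the relative growth in parameters and FLOPs and the absolute AP gain work out as follows:

```python
baseline = {"params_M": 71.3, "flops_G": 189.9, "ap": 88.4}   # YOLOv7x row
improved = {"params_M": 80.4, "flops_G": 204.0, "ap": 91.5}   # Improved YOLOv7x row

param_growth = improved["params_M"] / baseline["params_M"] - 1
flop_growth = improved["flops_G"] / baseline["flops_G"] - 1
ap_gain = improved["ap"] - baseline["ap"]  # percentage points

print(f"params +{param_growth:.1%}, FLOPs +{flop_growth:.1%}, AP +{ap_gain:.1f} points")
# params +12.8%, FLOPs +7.4%, AP +3.1 points
```

On these figures, the improved model trades roughly 13% more parameters and 7% more FLOPs for a 3.1-point AP gain over YOLOv7x.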


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ran, B.; Huang, B.; Liang, S.; Hou, Y. Surgical Instrument Detection Algorithm Based on Improved YOLOv7x. Sensors 2023, 23, 5037. https://doi.org/10.3390/s23115037
