Multi-Sensor Platform for Predictive Air Quality Monitoring

Abstract

1. Introduction

2. Materials and Methods

2.1. Hardware Architecture

- Wireless air quality sensor that acquires the following environmental parameters: particulate matter (PM1, PM2.5, PM10), CO2, volatile organic compounds (VOC), atmospheric pressure, temperature and humidity;

- Sensorized T-shirt to monitor torso movements, breathing and heart rate;

- Embedded Personal Computer (PC) for data collection and processing.

2.2. Data Acquisition

- Ten days of smart working, averaging about 8 h per day;

- Twelve days of physical activity, averaging about 2 h per day;

- For the rest of the recording period, the room was uninhabited.
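The input-layer description in Section 2.4 states one sample per minute of acquisition. Under that assumption, the durations above convert into approximate sample counts. The short sketch below only does this arithmetic. The condition labels are illustrative and not taken from the paper. The uninhabited period is omitted because its duration is not stated here.

```python
# Approximate recording volume per inhabited condition, assuming one sample
# per minute of acquisition. Day counts and average hours come from the list
# above; the dictionary keys are illustrative labels, not names from the paper.
MINUTES_PER_HOUR = 60

CONDITIONS = {
    "smart_working": (10, 8),      # (days, average hours per day)
    "physical_activity": (12, 2),
}


def approx_samples(days: int, hours_per_day: float) -> int:
    """Approximate number of one-minute samples recorded for one condition."""
    return round(days * hours_per_day * MINUTES_PER_HOUR)


for name, (days, hours) in CONDITIONS.items():
    print(f"{name}: about {days * hours} h, about {approx_samples(days, hours)} samples")
```

This gives roughly 4,800 one-minute samples of smart working and 1,440 of physical activity.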

2.3. Methodology

2.4. Neural-Network Architectures

The 1D-CNN model consists of the following layers:

- Input layer: each sample contains the values of the input variables (CO2, temperature, humidity and activity level) for each minute of acquisition. The inputs are normalised before being fed to the network. Each variable is scaled to the range [0, 1] using the minimum and maximum values observed on the training set [35], according to

  $$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

  where $x_{\min}$ and $x_{\max}$ are the minimum and maximum training-set values of the variable $x$ being normalised.

- One-dimensional convolutional layer: extracts features along the temporal axis of the inputs. The standard rectified linear unit (ReLU) activation is applied so that non-linear patterns in the data can be captured.

- Max pooling layer: downsamples the feature maps produced by the previous layer by keeping the maximum value within each pooling window. This retains the most salient features while reducing their size. The layer has no trainable parameters.

- Flatten layer: reshapes the pooled feature maps into a one-dimensional feature vector that feeds the output layer.

- Output layer: a fully connected layer with a single linear neuron that forecasts the CO2 value for the next minute.

The RNN model consists of the following layers:

- Input layer: as for the 1D-CNN.

- Three RNN layers: three stacked recurrent layers follow the input layer. They were introduced to improve the model's performance with respect to conventional neural-network models.

- Three dropout layers: a dropout layer follows each RNN layer to reduce overfitting and thereby improve forecast accuracy.

- Output layer: as for the 1D-CNN.

The LSTM model consists of the following layers:

- Input layer: as for the two previous architectures.

- Three LSTM layers: as for the RNN, three stacked LSTM layers follow the input layer to improve CO2 forecasting performance.

- Three dropout layers: as for the RNN, a dropout layer follows each LSTM layer to reduce overfitting.

- Output layer: as for the two previous architectures.
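The input preprocessing described for the input layer can be sketched in plain Python. It has three parts: one row per minute, min-max scaling with training-set statistics, and a next-minute CO2 target. Reference [35] points to scikit-learn's `MinMaxScaler`, whose default `feature_range` is (0, 1). The sketch below reimplements the same formula without dependencies rather than calling that class.

Several details are assumptions, because this section does not give them. The `window` length and the column order are hypothetical, and the data are toy values.

```python
from typing import List, Sequence, Tuple

Row = List[float]  # one minute of data; assumed order: [CO2, temperature, humidity, activity]


def fit_min_max(train: Sequence[Row]) -> Tuple[Row, Row]:
    """Per-feature minimum and maximum, computed on the training set only."""
    n_features = len(train[0])
    mins = [min(row[j] for row in train) for j in range(n_features)]
    maxs = [max(row[j] for row in train) for j in range(n_features)]
    return mins, maxs


def min_max_scale(rows: Sequence[Row], mins: Row, maxs: Row) -> List[Row]:
    """Apply x' = (x - x_min) / (x_max - x_min); a constant feature maps to 0.

    Values outside the training range (e.g. in test data) fall outside [0, 1].
    """
    return [
        [(x - lo) / (hi - lo) if hi > lo else 0.0 for x, lo, hi in zip(row, mins, maxs)]
        for row in rows
    ]


def make_windows(rows: Sequence[Row], window: int, target_col: int = 0):
    """Pair each run of `window` consecutive minutes with the next minute's target value."""
    inputs, targets = [], []
    for t in range(len(rows) - window):
        inputs.append(list(rows[t:t + window]))
        targets.append(rows[t + window][target_col])
    return inputs, targets


# Hypothetical toy training data (not from the study): CO2 [ppm], T [°C], RH [%], activity.
train = [[400, 20, 40, 0], [420, 21, 42, 1], [440, 22, 44, 2], [460, 23, 46, 1]]
mins, maxs = fit_min_max(train)
scaled = min_max_scale(train, mins, maxs)
X, y = make_windows(scaled, window=2)
print(len(X), y)
```

The minimum and maximum are fitted on the training rows only and then reused for any later data. This mirrors the fit/transform split of a scaler and avoids leaking test-set statistics into training.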

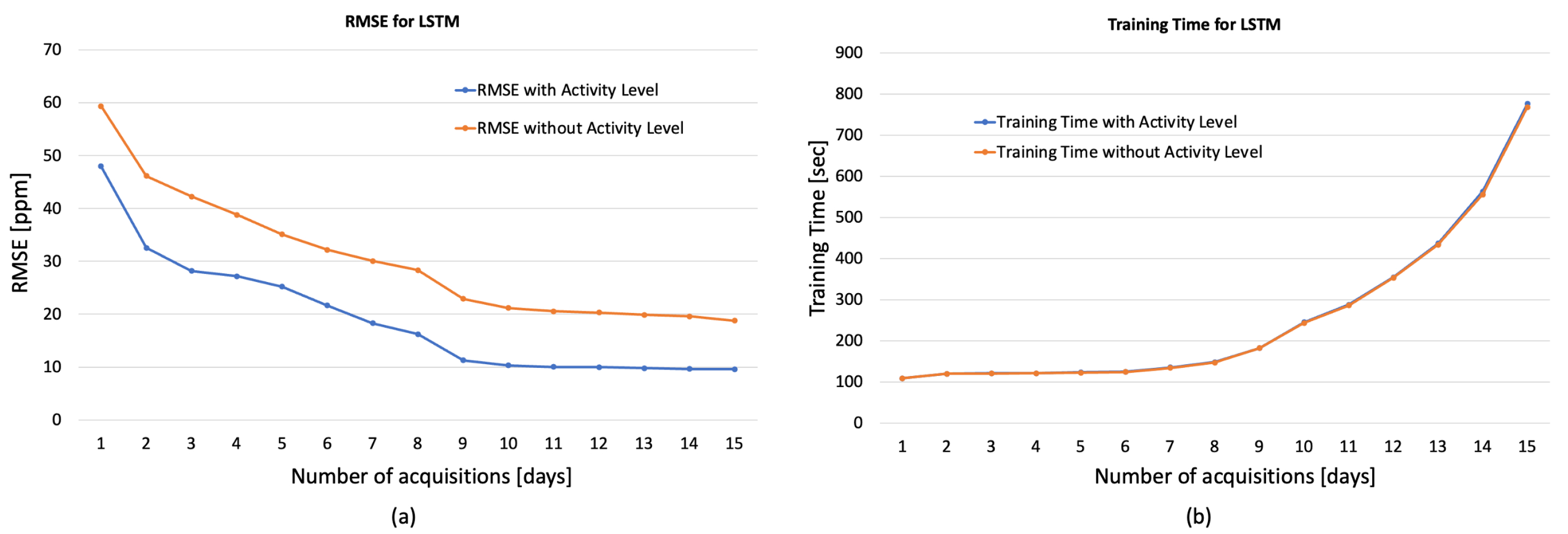
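A plain-Python forward pass can illustrate the 1D-CNN layer stack described in Section 2.4: convolution with ReLU, max pooling, flattening and a single linear output neuron. This is a sketch, not the authors' implementation.

Several values are hypothetical toy choices: the kernel size, the pool size, the number of kernels and all weights. In the trained network the weights are learned. The dense hidden layer and dropout listed among the tuned hyperparameters are left out, because the layer list does not say where they sit.

```python
from typing import List

Matrix = List[List[float]]  # indexed as [time step][channel]


def conv1d_relu(x: Matrix, kernels: List[Matrix], biases: List[float]) -> Matrix:
    """'Valid' 1D convolution with stride 1, followed by ReLU.

    x has shape [T][C_in]; each kernel has shape [K][C_in];
    the output has shape [T - K + 1][number of kernels].
    """
    k = len(kernels[0])
    n_in = len(x[0])
    out = []
    for t in range(len(x) - k + 1):
        row = []
        for w, b in zip(kernels, biases):
            s = b + sum(w[i][c] * x[t + i][c] for i in range(k) for c in range(n_in))
            row.append(max(0.0, s))  # ReLU keeps positive responses only
        out.append(row)
    return out


def max_pool1d(x: Matrix, pool: int = 2) -> Matrix:
    """Non-overlapping max pooling along time, per channel; leftover steps are dropped."""
    n_ch = len(x[0])
    return [
        [max(x[t + i][c] for i in range(pool)) for c in range(n_ch)]
        for t in range(0, len(x) - pool + 1, pool)
    ]


def flatten(x: Matrix) -> List[float]:
    """Concatenate the rows of a [time][channel] matrix into one feature vector."""
    return [value for row in x for value in row]


def dense_linear(v: List[float], weights: List[float], bias: float) -> float:
    """Single fully connected neuron with linear (identity) activation."""
    return bias + sum(w * a for w, a in zip(weights, v))


def cnn_forward(x, kernels, conv_biases, dense_weights, dense_bias, pool=2):
    """Conv1D + ReLU -> MaxPool -> Flatten -> one linear output (next-minute forecast)."""
    h = conv1d_relu(x, kernels, conv_biases)
    h = max_pool1d(h, pool)
    return dense_linear(flatten(h), dense_weights, dense_bias)


# Toy input: 4 minutes of a single scaled feature; two hypothetical kernels of size 2.
x = [[1.0], [2.0], [3.0], [4.0]]
kernels = [[[1.0], [1.0]], [[-1.0], [0.0]]]
print(cnn_forward(x, kernels, [0.0, 0.0], [0.5, 2.0], 1.0))  # prints 3.5
```

Because the second kernel only produces negative sums on this input, ReLU zeroes its whole feature map. Only the first channel then contributes to the forecast.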

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Myers, I.; Maynard, R.L. Polluted air–outdoors and indoors. Occup. Med. 2005, 55, 432–438.

2. Marć, M.; Tobiszewski, M.; Zabiegała, B.; de la Guardia, M.; Namieśnik, J. Current air quality analytics and monitoring: A review. Anal. Chim. Acta 2015, 853, 116–126.

3. Hattori, S.; Iwamatsu, T.; Miura, T.; Tsutsumi, F.; Tanaka, N. Investigation of Indoor Air Quality in Residential Buildings by Measuring CO2 Concentration and a Questionnaire Survey. Sensors 2022, 22, 7331.

4. Apte, M.G.; Fisk, W.J.; Daisey, J.M. Association between indoor CO2 concentrations and Sick Building Syndrome in U.S. office buildings: An analysis of the 1994–1996 base study data. Indoor Air 2000, 10, 246–257.

5. Azuma, K.; Kagi, N.; Yanagi, U.; Osawa, H. Effects of low-level inhalation exposure to carbon dioxide in indoor environments: A short review on human health and psychomotor performance. Environ. Int. 2018, 121, 51–56.

6. Persily, A.; de Jonge, L. Carbon dioxide generation rates for building occupants. Indoor Air 2017, 27, 868–879.

7. Folk, A.L.; Wagner, B.E.; Hahn, S.L.; Larson, N.; Barr-Anderson, D.J.; Neumark-Sztainer, D. Changes to Physical Activity during a Global Pandemic: A Mixed Methods Analysis among a Diverse Population-Based Sample of Emerging Adults. Int. J. Environ. Res. Public Health 2021, 18, 3674.

8. Wei, W.; Ramalho, O.; Malingre, L.; Sivanantham, S.; Little, J.C.; Mandin, C. Machine learning and statistical models for predicting indoor air quality. Indoor Air 2019, 29, 704–726.

9. Candanedo, L.M.; Feldheim, V. Accurate occupancy detection of an office room from light, temperature, humidity and CO2 measurements using statistical learning models. Energy Build. 2016, 112, 28–39.

10. Khazaei, B.; Shiehbeigi, A.; Kani, A.H.M.A. Modeling indoor air carbon dioxide concentration using artificial neural network. Int. J. Environ. Sci. Technol. 2019, 16, 729–736.

11. Ahn, J.; Shin, D.; Kim, K.; Yang, J. Indoor air quality analysis using deep learning with sensor data. Sensors 2017, 17, 2476.

12. Putra, J.C.P.; Safrilah; Ihsan, M. The prediction of indoor air quality in office room using artificial neural network. In Proceedings of the 4th International Conference on Engineering, Technology, and Industrial Application (ICETIA), Surakarta, Indonesia, 13–14 December 2017.

13. Yu, T.C.; Lin, C.C. An intelligent wireless sensing and control system to improve indoor air quality: Monitoring, prediction, and preaction. Int. J. Distrib. Sens. Netw. 2015, 11, 140978.

14. Kallio, J.; Tervonen, J.; Räsänen, P.; Mäkynen, R.; Koivusaari, J.; Peltola, J. Forecasting office indoor CO2 concentration using machine learning with a one-year dataset. Build. Environ. 2021, 187, 107409.

15. Segala, G.; Doriguzzi-Corin, R.; Peroni, C.; Gazzini, T.; Siracusa, D. A Practical and Adaptive Approach to Predicting Indoor CO2. Appl. Sci. 2021, 11, 10771.

16. Available online: https://www.upsens.com/ (accessed on 9 March 2023).

17. Available online: https://www.upsens.com/images/pdf/QuAirLite_datasheetRevC_EN.pdf (accessed on 20 March 2023).

18. Available online: https://www.smartex.it/ (accessed on 9 March 2023).

19. Leone, A.; Rescio, G.; Diraco, G.; Manni, A.; Siciliano, P.; Caroppo, A. Ambient and Wearable Sensor Technologies for Energy Expenditure Quantification of Ageing Adults. Sensors 2022, 22, 4893.

20. Leone, A.; Rescio, G.; Caroppo, A.; Siciliano, P.; Manni, A. Human Postures Recognition by Accelerometer Sensor and ML Architecture Integrated in Embedded Platforms: Benchmarking and Performance Evaluation. Sensors 2023, 23, 1039.

21. Wang, Z.; Yan, W.; Oates, T. Time series classification from scratch with deep neural networks: A strong baseline. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 1578–1585.

22. Greff, K.; Srivastava, R.K.; Koutník, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A search space odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 2222–2232.

23. Lin, T.; Horne, B.G.; Tino, P.; Giles, C.L. Learning long-term dependencies in NARX recurrent neural networks. IEEE Trans. Neural Netw. 1996, 7, 1329–1338.

24. Zhao, B.; Lu, H.; Chen, S.; Liu, J.; Wu, D. Convolutional neural networks for time series classification. J. Syst. Eng. Electron. 2017, 28, 162–169.

25. Tzoumpas, K.; Estrada, A.; Miraglio, P.; Zambelli, P. A data filling methodology for time series based on CNN and (Bi) LSTM neural networks. arXiv 2022, arXiv:2204.09994.

26. Umeda, Y. Time series classification via topological data analysis. Inf. Media Technol. 2017, 12, 228–239.

27. Shu, P.; Sun, Y.; Zhao, Y.; Xu, G. Spatial-temporal taxi demand prediction using LSTM-CNN. In Proceedings of the 2020 IEEE 16th International Conference on Automation Science and Engineering (CASE), Hong Kong, China, 20–21 August 2020; pp. 1226–1230.

28. Lara-Benítez, P.; Carranza-García, M.; Riquelme, J.C. An experimental review on deep learning architectures for time series forecasting. Int. J. Neural Syst. 2021, 31, 2130001.

29. Lipton, Z.C.; Berkowitz, J.; Elkan, C. A critical review of recurrent neural networks for sequence learning. arXiv 2015, arXiv:1506.00019.

30. Bengio, Y.; Frasconi, P.; Simard, P. The problem of learning long-term dependencies in recurrent networks. In Proceedings of the 1993 IEEE International Conference on Neural Networks (ICNN ’93), San Francisco, CA, USA, 28 March–1 April 1993; pp. 1183–1188.

31. Moghar, A.; Hamiche, M. Stock market prediction using LSTM recurrent neural network. Procedia Comput. Sci. 2020, 170, 1168–1173.

32. Graves, A.; Jaitly, N.; Mohamed, A.R. Hybrid speech recognition with deep bidirectional LSTM. In Proceedings of the 2013 IEEE Workshop on Automatic Speech Recognition and Understanding, Olomouc, Czech Republic, 8–13 December 2013; pp. 273–278.

33. Vadyala, S.R.; Betgeri, S.N.; Sherer, E.A.; Amritphale, A. Prediction of the number of COVID-19 confirmed cases based on K-means-LSTM. Array 2021, 11, 100085.

34. Xu, L.; Magar, R.; Farimani, A.B. Forecasting COVID-19 new cases using deep learning methods. Comput. Biol. Med. 2022, 144, 105342.

35. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.MinMaxScaler.html (accessed on 13 March 2023).

36. Bergstra, J.; Bengio, Y. Random Search for Hyper-Parameter Optimization. J. Mach. Learn. Res. 2012, 13, 281–305.

37. Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

| Related Work | Learning Dataset Size | Method | Input Parameters | Adaptive | Forecasting Window | RMSE |
|---|---|---|---|---|---|---|
| Khazaei et al. [10] | About seven days | Multilayer Perceptron | CO2, humidity, temperature | No | 1 min | 17 ppm |
| Kallio et al. [14] | One year | Ridge regression, Decision Tree, Random Forest, Multilayer Perceptron | CO2, humidity, temperature, PIR | No | 15 min | 12–13 ppm |
| Segala et al. [15] | Thirty days | 1D Convolutional Neural Network | CO2, humidity, temperature | Yes | 15 min | 15 ppm |
| Presented Work | Ten days | 1D Convolutional Neural Network, Recurrent Neural Network, Long Short-Term Memory | CO2, humidity, temperature, wearable accelerometer | Yes | 15 min | 10–11 ppm |

| Model | Hyperparameter | Candidate values |
|---|---|---|
| 1D-CNN | hidden_layer_conv1d | [16, 32, 64, 128, 256] |
| | hidden_layer_dense | [10, 20, 30, 40, 50, 60] |
| | number_epochs | [20, 30, 40, 50, 60, 70, 80, 90] |
| | batch_size | [4, 8, 16, 32, 64, 128] |
| | dropout | [0.0001, 0.0005, 0.001, 0.005, 0.01, 0.05] |
| RNN | hidden_layer_simple_rnn | [10, 20, 30, 40, 50, 60] |
| | hidden_layer_simple_rnn_1 | [10, 20, 30, 40, 50, 60] |
| | hidden_layer_simple_rnn_2 | [10, 20, 30, 40, 50, 60] |
| | number_epochs | [20, 30, 40, 50, 60, 70, 80, 90, 100] |
| | batch_size | [4, 8, 16, 32, 64] |
| | dropout | [0.1, 0.2, 0.3, 0.4, 0.5] |
| | dropout_1 | [0.1, 0.2, 0.3, 0.4, 0.5] |
| | dropout_2 | [0.1, 0.2, 0.3, 0.4, 0.5] |
| LSTM | hidden_layer_lstm | [10, 20, 30, 40, 50, 60] |
| | hidden_layer_lstm_1 | [10, 20, 30, 40, 50, 60] |
| | hidden_layer_lstm_2 | [10, 20, 30, 40, 50, 60] |
| | number_epochs | [20, 30, 40, 50, 60, 70, 80] |
| | batch_size | [4, 8, 16, 32, 64] |
| | dropout | [0.1, 0.2, 0.3, 0.4, 0.5] |
| | dropout_1 | [0.1, 0.2, 0.3, 0.4, 0.5] |
| | dropout_2 | [0.1, 0.2, 0.3, 0.4, 0.5] |
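The reference list includes random search for hyper-parameter optimisation [36]. Assuming the grids above were explored that way, the sketch below samples configurations uniformly from the RNN grid and keeps the one with the lowest score. The `toy_score` function is a hypothetical stand-in. In practice the evaluation callback would train a model with the sampled configuration and return, for example, its validation RMSE. Exhaustive search would be costly here, because the RNN grid alone contains 6³ × 9 × 5 × 5³ = 1,215,000 combinations.

```python
import math
import random
from typing import Callable, Dict, List, Tuple

Config = Dict[str, float]

# RNN search space copied from the table above.
RNN_SPACE: Dict[str, List[float]] = {
    "hidden_layer_simple_rnn": [10, 20, 30, 40, 50, 60],
    "hidden_layer_simple_rnn_1": [10, 20, 30, 40, 50, 60],
    "hidden_layer_simple_rnn_2": [10, 20, 30, 40, 50, 60],
    "number_epochs": [20, 30, 40, 50, 60, 70, 80, 90, 100],
    "batch_size": [4, 8, 16, 32, 64],
    "dropout": [0.1, 0.2, 0.3, 0.4, 0.5],
    "dropout_1": [0.1, 0.2, 0.3, 0.4, 0.5],
    "dropout_2": [0.1, 0.2, 0.3, 0.4, 0.5],
}


def grid_size(space: Dict[str, List[float]]) -> int:
    """Number of configurations an exhaustive grid search would have to train."""
    return math.prod(len(values) for values in space.values())


def random_search(space: Dict[str, List[float]],
                  evaluate: Callable[[Config], float],
                  n_trials: int,
                  seed: int = 0) -> Tuple[Config, float]:
    """Draw n_trials configurations uniformly from the grid; keep the lowest score."""
    rng = random.Random(seed)
    best_cfg: Config = {}
    best_score = math.inf
    for _ in range(n_trials):
        cfg = {name: rng.choice(values) for name, values in space.items()}
        score = evaluate(cfg)
        if score < best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


def toy_score(cfg: Config) -> float:
    """Hypothetical stand-in for a validation RMSE; replace with real training."""
    return abs(cfg["batch_size"] - 4) + cfg["dropout"]


best, score = random_search(RNN_SPACE, toy_score, n_trials=50, seed=1)
print(grid_size(RNN_SPACE), best, score)
```

Random search spends a fixed budget of trials regardless of grid size. Each trial varies every hyperparameter at once, which is the main argument made for it in [36].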

| Model | Hyperparameter | Selected value |
|---|---|---|
| 1D-CNN | optimizer | Adam [37] |
| | loss_function | mean squared error |
| | epochs | 80 |
| | batch_size | 128 |
| | hidden_layer_conv1d | 128 |
| | hidden_layer_dense | 20 |
| | dropout | 0.005 |
| RNN | optimizer | Adam [37] |
| | loss_function | mean squared error |
| | epochs | 80 |
| | batch_size | 4 |
| | hidden_layer_simple_rnn | 10 |
| | hidden_layer_simple_rnn_1 | 10 |
| | hidden_layer_simple_rnn_2 | 20 |
| | dropout | 0.1 |
| | dropout_1 | 0.3 |
| | dropout_2 | 0.3 |
| LSTM | optimizer | Adam [37] |
| | loss_function | mean squared error |
| | epochs | 50 |
| | batch_size | 8 |
| | hidden_layer_lstm | 60 |
| | hidden_layer_lstm_1 | 60 |
| | hidden_layer_lstm_2 | 30 |
| | dropout | 0.1 |
| | dropout_1 | 0.5 |
| | dropout_2 | 0.1 |
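All three models use the Adam optimiser [37] with a mean-squared-error loss. The plain-Python sketch below illustrates that combination by fitting a toy linear model with the Adam update rule. It is not the authors' training code.

The defaults β1 = 0.9, β2 = 0.999 and ε = 1e-8 are the values recommended in [37]. The learning rate of 0.05, the number of steps and the data are assumptions, chosen so that the toy example converges quickly.

```python
import math
from typing import List, Tuple


def mse_and_grads(w: float, b: float, xs: List[float], ys: List[float]) -> Tuple[float, float, float]:
    """Mean squared error of y_hat = w * x + b and its gradients w.r.t. w and b."""
    n = len(xs)
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    loss = sum(e * e for e in errors) / n
    grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
    grad_b = 2.0 * sum(errors) / n
    return loss, grad_w, grad_b


def adam_fit(xs, ys, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8, steps=3000):
    """Minimise the MSE with the Adam update rule; returns the fitted (w, b)."""
    params = [0.0, 0.0]  # [w, b]
    m = [0.0, 0.0]       # moving average of gradients (first moment)
    v = [0.0, 0.0]       # moving average of squared gradients (second moment)
    for t in range(1, steps + 1):
        # Both gradients are taken at the current parameters before any update.
        _, grad_w, grad_b = mse_and_grads(params[0], params[1], xs, ys)
        for i, g in enumerate((grad_w, grad_b)):
            m[i] = beta1 * m[i] + (1 - beta1) * g
            v[i] = beta2 * v[i] + (1 - beta2) * g * g
            m_hat = m[i] / (1 - beta1 ** t)  # bias-corrected first moment
            v_hat = v[i] / (1 - beta2 ** t)  # bias-corrected second moment
            params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return params[0], params[1]


# Toy data from y = 2x + 1 (hypothetical, not from the study).
xs = [-1.0, 0.0, 1.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b = adam_fit(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

On this toy problem the fit ends close to w = 2 and b = 1. A deep-learning framework applies the same per-parameter rule to every network weight, with gradients obtained by backpropagation.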

| Model | Uninhabited Room RMSE [ppm] | Uninhabited Room NRMSE | Work RMSE [ppm] | Work NRMSE | Physical Activity RMSE [ppm] | Physical Activity NRMSE |
|---|---|---|---|---|---|---|
| 1D-CNN | 5.89 | 0.90 | 6.87 | 1.39 | 10.42 | 0.06 |
| RNN | 12.25 | 1.48 | 15.56 | 3.89 | 15.87 | 0.09 |
| LSTM | 4.85 | 0.80 | 5.38 | 1.34 | 10.31 | 0.06 |
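This section does not state how NRMSE was normalised. The sketch below assumes normalisation by the range (maximum minus minimum) of the observed values, which is one common convention. Normalising by the mean or by the standard deviation of the observations are other options, and the choice changes the numbers. The sketch only shows the computation and does not attempt to reproduce the table. The data are hypothetical.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error, in the units of the target (e.g. ppm)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def nrmse_range(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """RMSE divided by the range of the observed values (assumed convention)."""
    span = max(y_true) - min(y_true)
    if span == 0:
        raise ValueError("observed values are constant: range normalisation is undefined")
    return rmse(y_true, y_pred) / span


# Hypothetical observed and forecast CO2 values [ppm], not taken from the study.
observed = [400.0, 410.0, 420.0, 430.0]
forecast = [402.0, 408.0, 423.0, 427.0]
print(rmse(observed, forecast), nrmse_range(observed, forecast))
```

With range normalisation, a small RMSE can still give a large NRMSE when the observed concentration barely varies. This is one reason NRMSE values are only comparable under the same normalisation.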

| Model | RMSE [ppm] with Activity Level | RMSE [ppm] without Activity Level |
|---|---|---|
| 1D-CNN | 5.17 | 9.78 |
| RNN | 13.23 | 18.54 |
| LSTM | 3.50 | 7.86 |
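To read the table in relative terms, the sketch below computes the fractional RMSE reduction obtained by adding the activity level as an input. The percentages it prints are about 47% for the 1D-CNN, 29% for the RNN and 55% for the LSTM. They are derived here from the tabulated values and are not figures reported by the authors.

```python
# RMSE [ppm] values copied from the table above.
RMSE_PPM = {
    "1D-CNN": {"with_activity": 5.17, "without_activity": 9.78},
    "RNN": {"with_activity": 13.23, "without_activity": 18.54},
    "LSTM": {"with_activity": 3.50, "without_activity": 7.86},
}


def relative_reduction(rmse_without: float, rmse_with: float) -> float:
    """Fraction of the RMSE removed when the activity level is added as an input."""
    return (rmse_without - rmse_with) / rmse_without


for model, r in RMSE_PPM.items():
    pct = 100 * relative_reduction(r["without_activity"], r["with_activity"])
    print(f"{model}: {pct:.1f}% lower RMSE with the activity level")
```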

| Model | RMSE [ppm] without Temperature | RMSE [ppm] without Humidity | RMSE [ppm] without Temperature and Humidity |
|---|---|---|---|
| 1D-CNN | 24.08 | 23.61 | 28.36 |
| RNN | 28.07 | 27.68 | 32.73 |
| LSTM | 24.43 | 23.54 | 27.84 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rescio, G.; Manni, A.; Caroppo, A.; Carluccio, A.M.; Siciliano, P.; Leone, A. Multi-Sensor Platform for Predictive Air Quality Monitoring. Sensors 2023, 23, 5139. https://doi.org/10.3390/s23115139
