Transformer-Based Semantic Segmentation for Extraction of Building Footprints from Very-High-Resolution Images

Abstract

:1. Introduction

2. Related Work

2.1. Building Footprint Extraction Methods

2.2. Foundations of Transformers in Vision

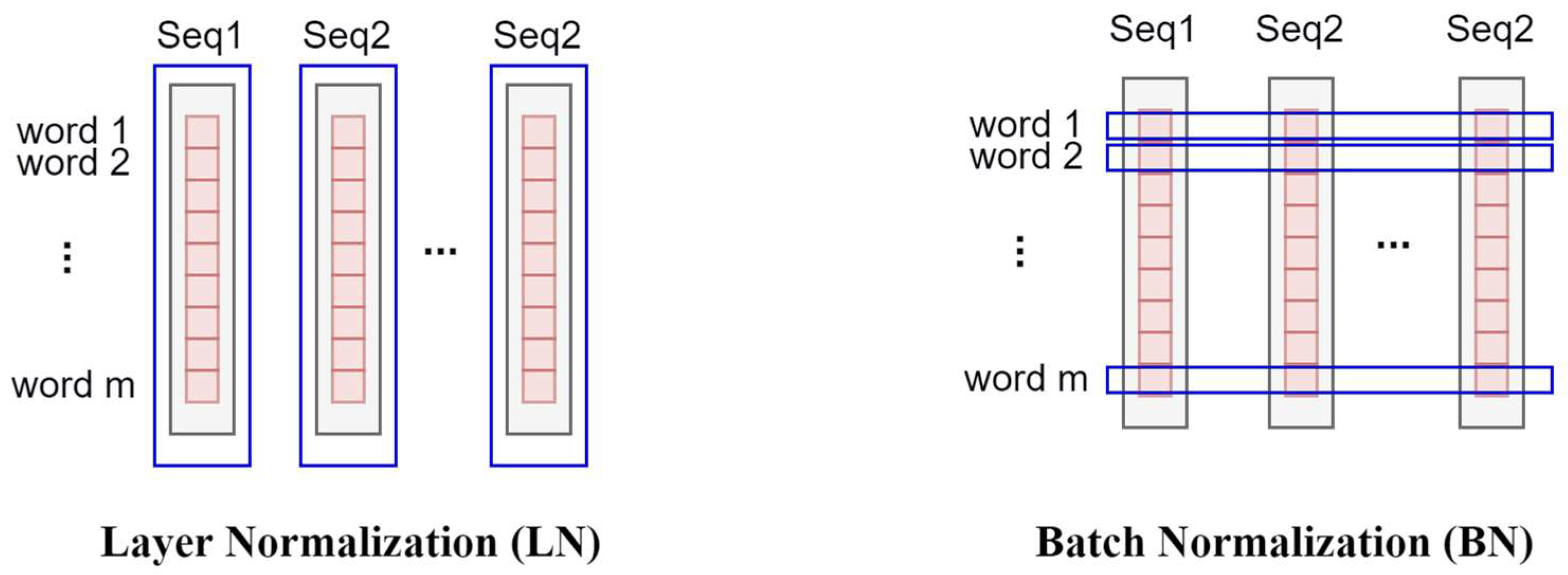

2.3. Layer Normalization (LN)

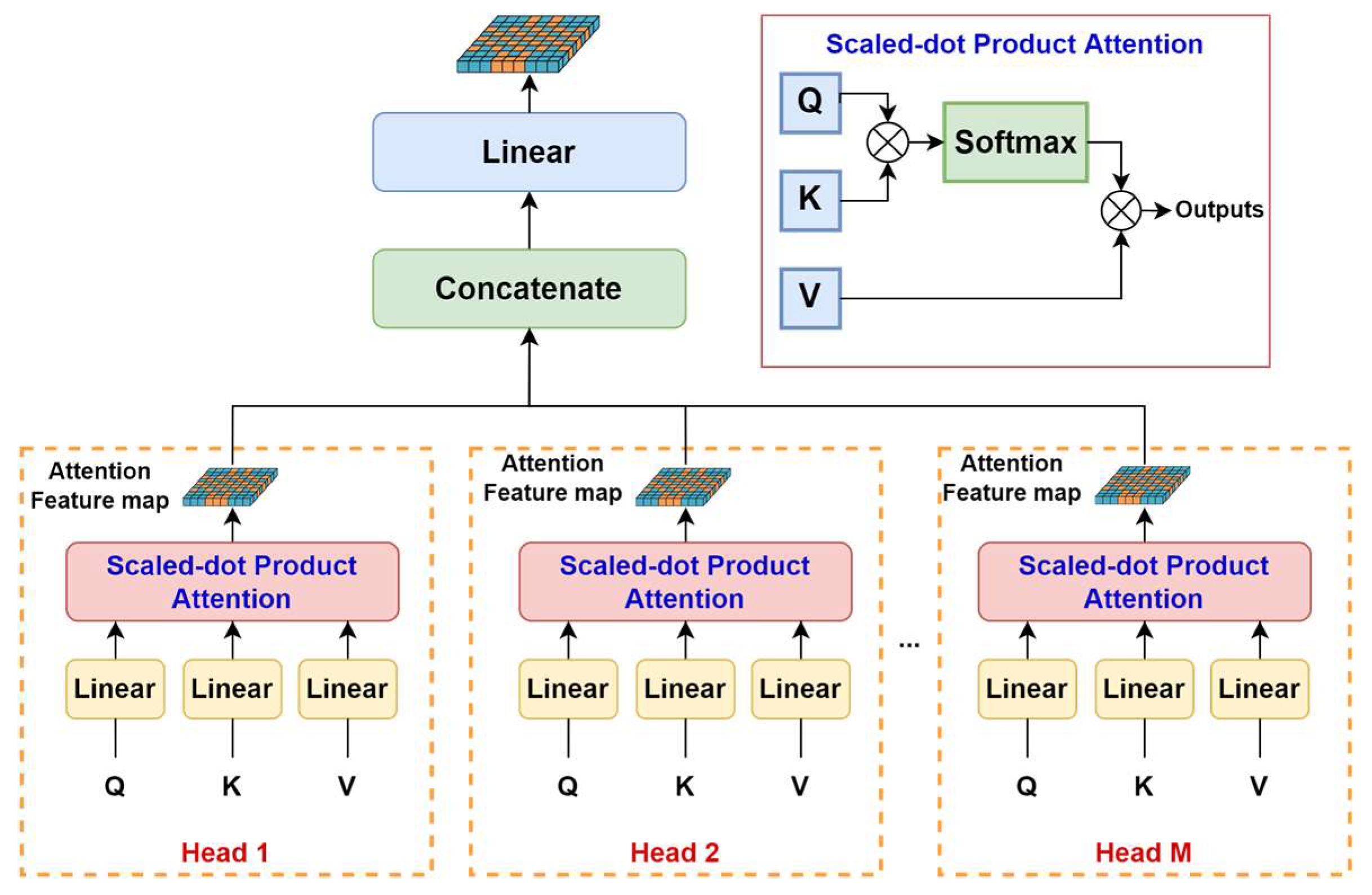

2.4. Multi-Head Self Attention (MSA)

2.5. Multi-Layer Perceptron (MLP)

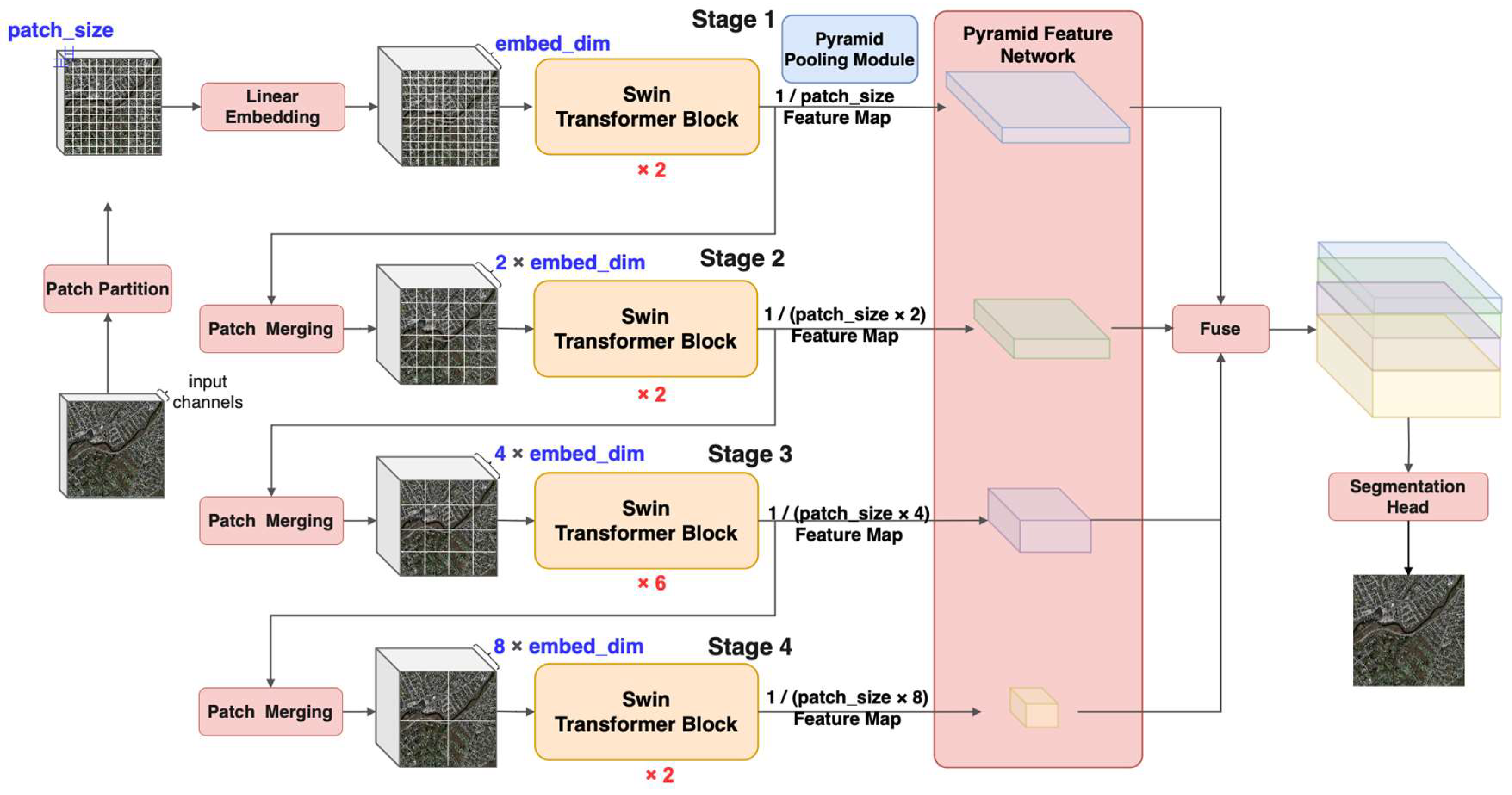

3. Transformer-Based Network for Extraction of Build Footprints from VHR Images

3.1. Network Architecture

3.2. Network Modules

3.2.1. Patch Partition

3.2.2. Linear Embedding

3.2.3. Swin Transformer Block

3.2.4. Pyramid Pooling

3.2.5. Feature Pyramid Fusion

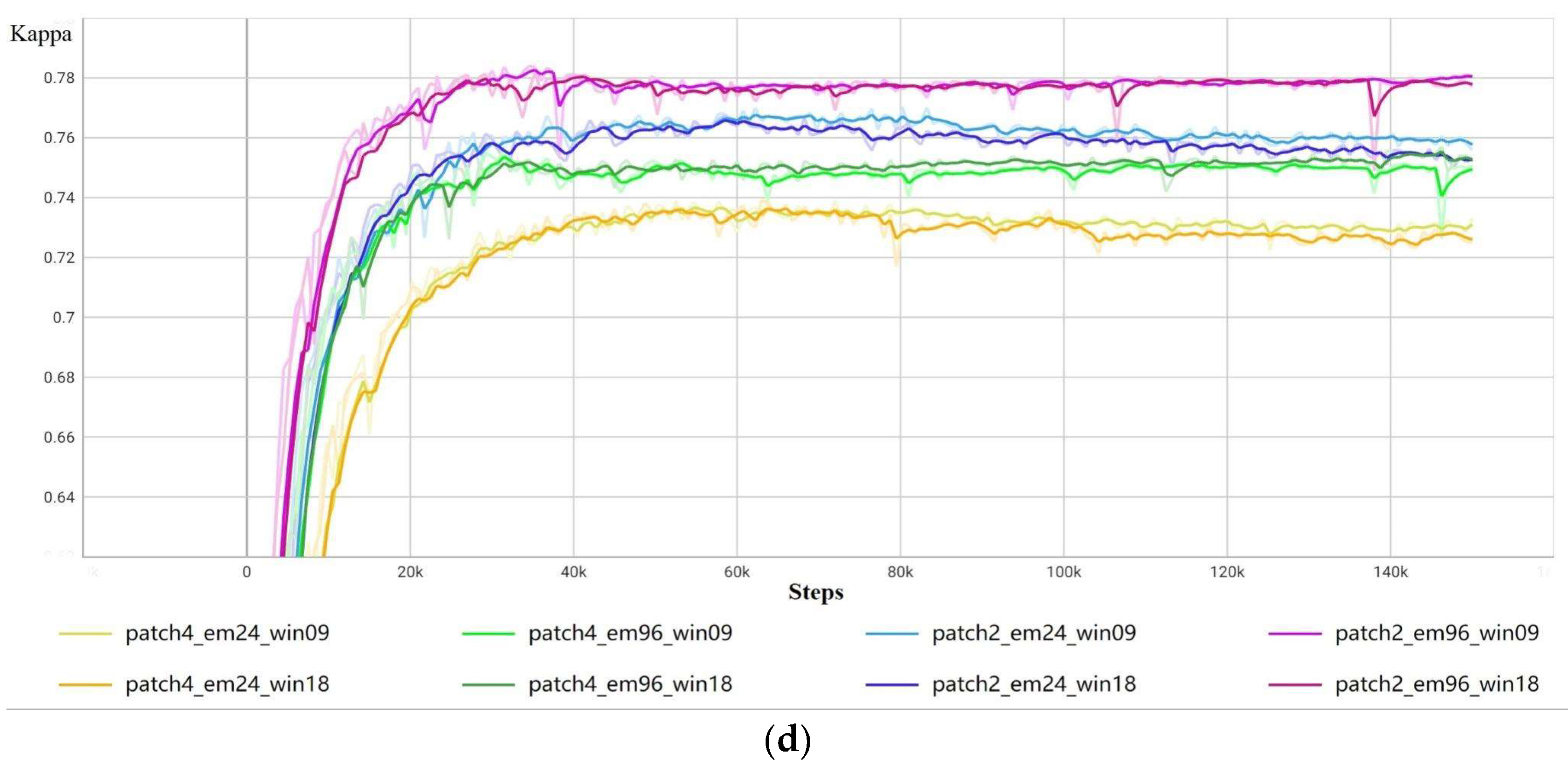

3.3. Transformer-Specific Hyperparameters

- (1)

- Patch size

- (2)

- Dimension of embeddings

- (3)

- Window size

4. Experimental Section

4.1. Datasets

4.2. Hyperparameter Settings

4.3. Training Settings

4.4. Evaluation Metrics

- (1)

- Overall accuracy (OA)

- (2)

- Intersection over union (IoU)

- (3)

- F1-score

- (4)

- Kappa

5. Results and Discussion

5.1. Accuracy Evaluation

5.2. Model Size and Training Time

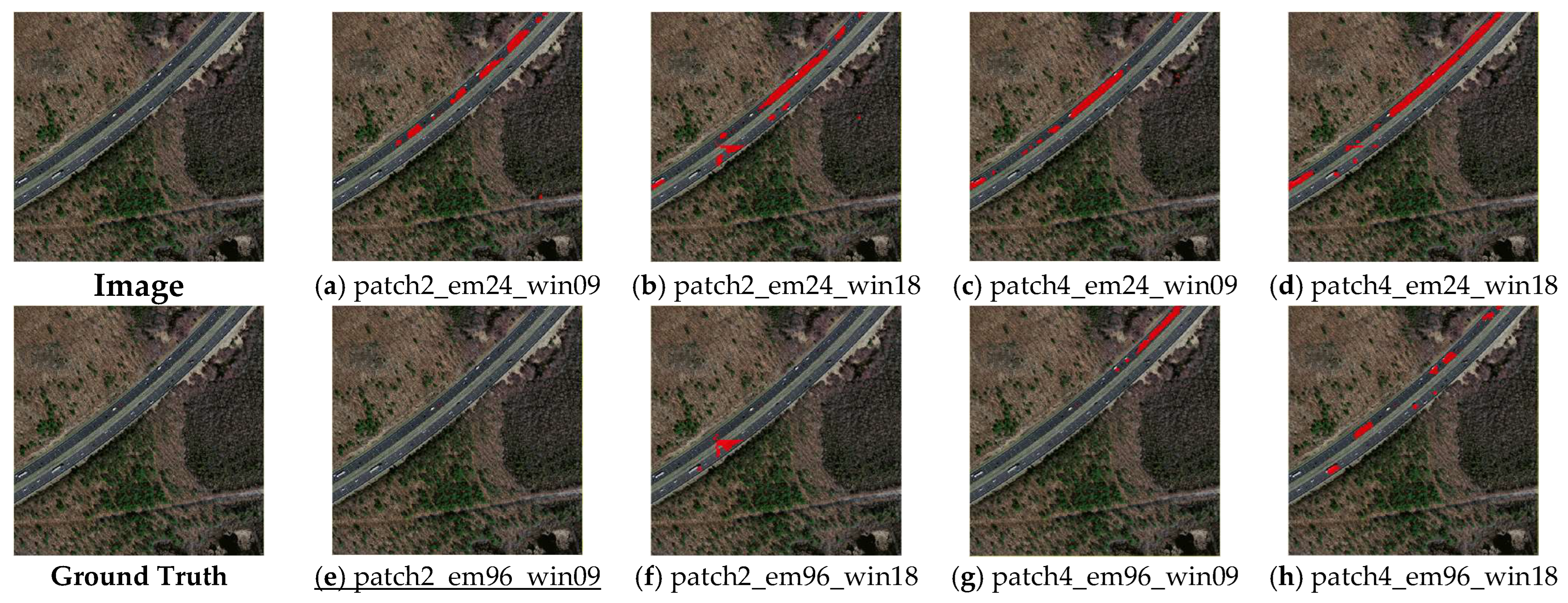

5.3. Prediction Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yuan, X.; Shi, J.; Gu, L. A review of deep learning methods for semantic segmentation of remote sensing imagery. Expert Syst. Appl. 2021, 169, 114417. [Google Scholar] [CrossRef]

- Guo, Y.; Liu, Y.; Georgiou, T.; Lew, M.S. A review of semantic segmentation using deep neural networks. Int. J. Multimedia Inf. Retr. 2018, 7, 87–93. [Google Scholar] [CrossRef]

- Baatz, M.; Hoffmann, C.; Willhauck, G. Progressing from object-based to object-oriented image analysis. In Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 29–42. [Google Scholar]

- Lang, S. Object-based image analysis for remote sensing applications: Modeling reality—Dealing with complexity. In Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Blaschke, T., Lang, S.H.G.J., Eds.; Springer: Heidelberg/Berlin, Germany; New York, NY, USA, 2008. [Google Scholar]

- Dong, L.; Du, H.; Mao, F.; Han, N.; Li, X.; Zhou, G.; Zhu, D.; Zheng, J.; Zhang, M.; Xing, L.; et al. Very High Resolution Remote Sensing Imagery Classification Using a Fusion of Random Forest and Deep Learning Technique—Subtropical Area for Example. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 113–128. [Google Scholar] [CrossRef]

- Guo, H.; Shi, Q.; Marinoni, A.; Du, B.; Zhang, L. Deep building footprint update network: A semi-supervised method for updating existing building footprint from bi-temporal remote sensing images. Remote Sens. Environ. 2021, 264, 112589. [Google Scholar] [CrossRef]

- Zhu, Q.; Zhang, Y.; Wang, L.; Zhong, Y.; Guan, Q.; Lu, X.; Zhang, L.; Li, D. A Global Context-aware and Batch-independent Network for road extraction from VHR satellite imagery. ISPRS J. Photogramm. Remote Sens. 2021, 175, 353–365. [Google Scholar] [CrossRef]

- Guo, H.; Du, B.; Zhang, L.; Su, X. A coarse-to-fine boundary refinement network for building footprint extraction from remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2022, 183, 240–252. [Google Scholar] [CrossRef]

- Hosseinpour, H.; Samadzadegan, F.; Javan, F.D. CMGFNet: A deep cross-modal gated fusion network for building extraction from very high-resolution remote sensing images. ISPRS J. Photogramm. Remote Sens. 2022, 184, 96–115. [Google Scholar] [CrossRef]

- Alam, M.; Wang, J.-F.; Guangpei, C.; Yunrong, L.; Chen, Y. Convolutional Neural Network for the Semantic Segmentation of Remote Sensing Images. Mob. Netw. Appl. 2021, 26, 200–215. [Google Scholar] [CrossRef]

- Dong, S.; Quan, Y.; Feng, W.; Dauphin, G.; Gao, L.; Xing, M. A Pixel Cluster CNN and Spectral-Spatial Fusion Algorithm for Hyperspectral Image Classification with Small-Size Training Samples. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4101–4114. [Google Scholar] [CrossRef]

- Pan, X.; Zhao, J. High-Resolution Remote Sensing Image Classification Method Based on Convolutional Neural Network and Restricted Conditional Random Field. Remote Sens. 2018, 10, 920. [Google Scholar] [CrossRef]

- Jia, Z.; Lu, W. An End-to-End Hyperspectral Image Classification Method Using Deep Convolutional Neural Network with Spatial Constraint. IEEE Geosci. Remote Sens. Lett. 2021, 18, 1786–1790. [Google Scholar] [CrossRef]

- Tong, X.-Y.; Xia, G.-S.; Lu, Q.; Shen, H.; Li, S.; You, S.; Zhang, L. Land-cover classification with high-resolution remote sensing images using transferable deep models. Remote Sens. Environ. 2020, 237, 111322. [Google Scholar] [CrossRef]

- Längkvist, M.; Kiselev, A.; Alirezaie, M.; Loutfi, A. Classification and Segmentation of Satellite Orthoimagery Using Convolutional Neural Networks. Remote Sens. 2016, 8, 329. [Google Scholar] [CrossRef]

- Liu, J.; Wang, S.; Hou, X.; Song, W. A deep residual learning serial segmentation network for extracting buildings from remote sensing imagery. Int. J. Remote Sens. 2020, 41, 5573–5587. [Google Scholar] [CrossRef]

- Huang, H.; Chen, P.; Xu, X.; Liu, C.; Wang, J.; Liu, C.; Clinton, N.; Gong, P. Estimating building height in China from ALOS AW3D30. ISPRS-J. Photogramm. Remote Sens. 2022, 185, 146–157. [Google Scholar] [CrossRef]

- Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of machine-learning classification in remote sensing: An applied review. Int. J. Remote Sens. 2018, 39, 2784–2817. [Google Scholar] [CrossRef]

- Norman, M.; Shahar, H.M.; Mohamad, Z.; Rahim, A.; Mohd, F.A.; Shafri, H.Z.M. Urban building detection using object-based image analysis (OBIA) and machine learning (ML) algorithms. IOP Conf. Ser. Earth Environ. Sci. 2021, 620, 012010. [Google Scholar] [CrossRef]

- Qian, Y.; Zhou, W.; Yan, J.; Li, W.; Han, L. Comparing Machine Learning Classifiers for Object-Based Land Cover Classification Using Very High Resolution Imagery. Remote Sens. 2015, 7, 153–168. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010. [Google Scholar]

- Conneau, A.; Lample, G. Cross-Lingual Language Model Pretraining. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 7059–7069. [Google Scholar]

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MI, USA, 2–7 June 2019; Volume 1, pp. 4171–4186. [Google Scholar]

- Yu, P.; Fei, H.; Li, P. Cross-lingual Language Model Pretraining for Retrieval. In Proceedings of the Web Conference, Ljubljana, Slovenia, 12–16 April 2021; pp. 1029–1039. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Virtual Event, 3–7 May 2021; p. 11929. [Google Scholar] [CrossRef]

- Niu, Z.; Zhong, G.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62. [Google Scholar] [CrossRef]

- Ghaffarian, S.; Valente, J.; van der Voort, M.; Tekinerdogan, B. Effect of Attention Mechanism in Deep Learning-Based Remote Sensing Image Processing: A Systematic Literature Review. Remote Sens. 2021, 13, 2965. [Google Scholar] [CrossRef]

- Lindsay, G.W. Attention in Psychology, Neuroscience, and Machine Learning. Front. Comput. Neurosci. 2020, 14, 29. [Google Scholar] [CrossRef] [PubMed]

- Chen, K.; Zou, Z.; Shi, Z. Building Extraction from Remote Sensing Images with Sparse Token Transformers. Remote Sens. 2021, 13, 4441. [Google Scholar] [CrossRef]

- Chen, C.F.R.; Fan, Q.; Panda, R. Crossvit: Cross-Attention Multi-Scale Vision Transformer for Image Classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 357–366. [Google Scholar]

- Arkin, E.; Yadikar, N.; Xu, X.; Aysa, A.; Ubul, K. A survey: Object detection methods from CNN to transformer. Multimedia Tools Appl. 2022, 27, 1–31. [Google Scholar] [CrossRef]

- Cao, F.; Lu, X. Self-Attention Technology in Image Segmentation. In Proceedings of the International Conference on Intelligent Traffic Systems and Smart City, Zhengzhou, China, 19–21 November 2021; p. 1216511. [Google Scholar] [CrossRef]

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in Vision: A Survey. ACM Comput. Surv. 2021, 54, 200. [Google Scholar] [CrossRef]

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A Survey on Vision Transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 87–110. [Google Scholar] [CrossRef]

- Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid Vision Transformer: A Versatile Backbone for Dense Prediction without Convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 568–578. [Google Scholar] [CrossRef]

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. In Proceedings of the 35th Conference on Neural Information Processing Systems (NeurIPS 2021), Montreal, QC, Canada, 6–14 December 2021; Volume 34, pp. 12077–12090. [Google Scholar] [CrossRef]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. 2021. Available online: https://arxiv.org/abs/2103.14030 (accessed on 15 July 2022).

- Dong, X.; Bao, J.; Chen, D.; Zhang, W.; Yu, N.; Yuan, L.; Chen, D.; Guo, B. CSWin Transformer: A General Vision Transformer Backbone with Cross-Shaped Windows. arXiv 2022, arXiv:2107.00652. [Google Scholar] [CrossRef]

- Bazi, Y.; Bashmal, L.; Al Rahhal, M.M.; Al Dayil, R.; Al Ajlan, N. Vision Transformers for Remote Sensing Image Classification. Remote Sens. 2021, 13, 516. [Google Scholar] [CrossRef]

- Reedha, R.; Dericquebourg, E.; Canals, R.; Hafiane, A. Transformer Neural Network for Weed and Crop Classification of High Resolution UAV Images. Remote Sens. 2022, 14, 592. [Google Scholar] [CrossRef]

- Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jegou, H. Training Data-Efficient Image Transformers & Distillation through Attention. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; Volume 139, pp. 10347–10357. [Google Scholar] [CrossRef]

- Gu, J.; Tresp, V.; Qin, Y. Are Vision Transformers Robust to Patch Perturbations? In Computer Vision–ECCV 2022; Lecture Notes in Computer Science; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer: Cham, Germany, 2022; p. 13672. [Google Scholar] [CrossRef]

- Chen, J.; Liu, Y. Locally linear embedding: A survey. Artif. Intell. Rev. 2011, 36, 29–48. [Google Scholar] [CrossRef]

- Zhou, Y.; Wang, F.; Zhao, J.; Yao, R.; Chen, S.; Ma, H. Spatial-Temporal Based Multihead Self-Attention for Remote Sensing Image Change Detection. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6615–6626. [Google Scholar] [CrossRef]

- Yuan, L.; Chen, Y.; Wang, T.; Yu, W.; Shi, Y.; Jiang, Z.; Tay, F.E.H.; Feng, J.; Yan, S. Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 538–547. [Google Scholar] [CrossRef]

- Yuan, W.; Xu, W. MSST-Net: A Multi-Scale Adaptive Network for Building Extraction from Remote Sensing Images Based on Swin Transformer. Remote Sens. 2021, 13, 4743. [Google Scholar] [CrossRef]

- Zhu, Q.; Li, Z.; Zhang, Y.; Li, J.; Du, Y.; Guan, Q.; Li, D. Global-Local-Aware conditional random fields based building extraction for high spatial resolution remote sensing images. Natl. Remote Sens. Bull. 2020, 25, 1422–1433. [Google Scholar] [CrossRef]

- Liu, T.; Yao, L.; Qin, J.; Lu, N.; Jiang, H.; Zhang, F.; Zhou, C. Multi-scale attention integrated hierarchical networks for high-resolution building footprint extraction. Int. J. Appl. Earth Obs. 2022, 109, 102768. [Google Scholar] [CrossRef]

- Ji, S.; Wei, S.; Lu, M. A scale robust convolutional neural network for automatic building extraction from aerial and satellite imagery. Int. J. Remote Sens. 2018, 40, 3308–3322. [Google Scholar] [CrossRef]

- Pesaresi, M.; Gerhardinger, A. Improved Textural Built-Up Presence Index for Automatic Recognition of Human Settlements in Arid Regions with Scattered Vegetation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 16–26. [Google Scholar] [CrossRef]

- Sirmacek, B.; Unsalan, C. Urban Area Detection Using Local Feature Points and Spatial Voting. IEEE Geosci. Remote Sens. Lett. 2010, 7, 146–150. [Google Scholar] [CrossRef]

- Li, Y.; Tan, Y.; Deng, J.; Wen, Q.; Tian, J. Cauchy Graph Embedding Optimization for Built-Up Areas Detection From High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2078–2096. [Google Scholar] [CrossRef]

- Wang, J.; Yang, X.; Qin, X.; Ye, X.; Qin, Q. An Efficient Approach for Automatic Rectangular Building Extraction From Very High Resolution Optical Satellite Imagery. IEEE Geosci. Remote Sens. Lett. 2015, 12, 487–491. [Google Scholar] [CrossRef]

- Du, S.; Du, S.; Liu, B.; Zhang, X. Incorporating DeepLabv3+ and object-based image analysis for semantic segmentation of very high resolution remote sensing images. Int. J. Digit. Earth 2021, 14, 357–378. [Google Scholar] [CrossRef]

- Chen, H.; Lu, S. Building Extraction from Remote Sensing Images Using SegNet. In Proceedings of the 2019 IEEE 4th International Conference on Image, Vision and Computing (ICIVC), Xiamen, China, 5–7 July 2019; pp. 227–230. [Google Scholar] [CrossRef]

- Chen, D.-Y.; Peng, L.; Li, W.-C.; Wang, Y.-D. Building Extraction and Number Statistics in WUI Areas Based on UNet Structure and Ensemble Learning. Remote Sens. 2021, 13, 1172. [Google Scholar] [CrossRef]

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Convolutional Neural Networks for Large-Scale Remote-Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 645–657. [Google Scholar] [CrossRef]

- Tong, Z.; Li, Y.; Li, Y.; Fan, K.; Si, Y.; He, L. New Network Based on Unet++ and Densenet for Building Extraction from High Resolution Satellite Imagery. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 2268–2271. [Google Scholar] [CrossRef]

- Yu, S.; Xie, Y.; Liu, C. Building extraction from remote sensing image based on improved segnet neural network and image pyramid. J. Phys. Conf. Ser. 2020, 1651, 012145. [Google Scholar] [CrossRef]

- Angelis, G.-E.; Domi, A.; Zamichos, A.; Tsourma, M.; Drosou, A.; Tzovaras, D. On The Exploration of Vision Transformers in Remote Sensing Building Extraction. In Proceedings of the 2022 IEEE International Symposium on Multimedia (ISM), Naples, Italy, 5 December 2022; pp. 208–215. [Google Scholar] [CrossRef]

- Cui, L.; Jing, X.; Wang, Y.; Huan, Y.; Xu, Y.; Zhang, Q. Improved Swin Transformer-Based Semantic Segmentation of Postearthquake Dense Buildings in Urban Areas Using Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 369–385. [Google Scholar] [CrossRef]

- Yuan, W.; Zhang, X.; Shi, J.; Wang, J. LiteST-Net: A Hybrid Model of Lite Swin Transformer and Convolution for Building Extraction from Remote Sensing Image. Remote Sens. 2023, 15, 1996. [Google Scholar] [CrossRef]

- Sun, Z.; Zhou, W.; Ding, C.; Xia, M. Multi-Resolution Transformer Network for Building and Road Segmentation of Remote Sensing Image. ISPRS Int. J. Geo-Inf. 2022, 11, 165. [Google Scholar] [CrossRef]

- Wang, L.; Fang, S.; Meng, X.; Li, R. Building Extraction with Vision Transformer. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–11. [Google Scholar] [CrossRef]

- Xiao, X.; Guo, W.; Chen, R.; Hui, Y.; Wang, J.; Zhao, H. A Swin Transformer-Based Encoding Booster Integrated in U-Shaped Network for Building Extraction. Remote Sens. 2022, 14, 2611. [Google Scholar] [CrossRef]

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer Normalization. arXiv 2016, arXiv:1607.06450. Available online: http://arxiv.org/abs/1607.06450 (accessed on 30 July 2022).

- Xiao, T.; Liu, Y.; Zhou, B.; Jiang, Y.; Sun, J. Unified Perceptual Parsing for Scene Understanding. In Proceedings of the Lecture Notes in Computer Science, Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; Springer International Publishing: Cham, Switzerland, 2018; pp. 432–448. [Google Scholar] [CrossRef]

- Lin, T.Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944. [Google Scholar] [CrossRef]

- Cohen, J. A Coefficient of Agreement for Nominal Scales. Educ. Psychol. Meas. 1960, 20, 37–46. [Google Scholar] [CrossRef]

| Input Image Size (Pixels) | Utilization for the Original 1500 × 1500 Pixel Image | Patch Size (Pixels) | Patch Numbers of the Four Stages | Window Size (Patches) |

|---|---|---|---|---|

| 224 | 89.6% | 2 | [1122, 562, 282, 142] | 7 or 14 |

| 4 | [562, 282, 142, 72] | 7 | ||

| 256 | 85.3% | 2 | [1282, 642, 322, 162] | 8 or 16 |

| 4 | [642, 322, 162, 82] | 8 | ||

| 288 | 96.0% | 2 | [1442, 722, 362, 182] | 9 or 18 |

| 4 | [722, 362, 182, 92] | 9 | ||

| 320 | 93.9% | 2 | [1602, 802, 402, 202] | 10 or 20 |

| 4 | [802, 402, 202, 102] | 10 | ||

| 352 | 93.9% | 2 | [1762, 882, 442, 222] | 11 or 22 |

| 4 | [882, 442, 222, 112] | 11 | ||

| 384 | 76.8% | 2 | [1922, 962, 482, 242] | 12 or 24 |

| 4 | [962, 482, 242, 122] | 12 |

| Experiment Group | Patch Size (Pixels) | Embedding Dimension | Window Size (Patches) |

|---|---|---|---|

| patch2_em24_win09 | 2 | 24 | 9 |

| patch2_em96_win09 | 2 | 96 | 9 |

| patch2_em24_win18 | 2 | 24 | 18 |

| patch2_em96_win18 | 2 | 96 | 18 |

| patch4_em24_win09 | 4 | 24 | 9 |

| patch4_em96_win09 | 4 | 96 | 9 |

| patch4_em24_win18 | 4 | 24 | 18 |

| patch4_em96_win18 | 4 | 96 | 18 |

| Experiment Group | Top-3 | OA | mIoU | F1-Score | Kappa | Epoch |

|---|---|---|---|---|---|---|

| patch4_em24_win09 | 1 | 0.8663 | 0.7822 | 0.8696 | 0.7393 | 96 |

| 2 | 0.8675 | 0.7821 | 0.8695 | 0.7391 | 85 | |

| 3 | 0.8627 | 0.7820 | 0.8694 | 0.7388 | 77 | |

| Avg | 0.8655 | 0.7821 | 0.8695 | 0.7391 | - | |

| patch4_em24_win18 | 1 | 0.8673 | 0.7822 | 0.8697 | 0.7393 | 84 |

| 2 | 0.8642 | 0.7815 | 0.8691 | 0.7382 | 85 | |

| 3 | 0.8682 | 0.7814 | 0.8691 | 0.7382 | 65 | |

| Avg | 0.8666 | 0.7817 | 0.8693 | 0.7386 | - | |

| patch4_em96_win09 | 1 | 0.8838 | 0.7945 | 0.8785 | 0.7569 | 42 |

| 2 | 0.8743 | 0.7933 | 0.8775 | 0.7550 | 39 | |

| 3 | 0.8730 | 0.7925 | 0.8769 | 0.7538 | 40 | |

| Avg | 0.8770 | 0.7934 | 0.8776 | 0.7552 | - | |

| patch4_em96_win18 | 1 | 0.8804 | 0.7947 | 0.8786 | 0.7571 | 195 |

| 2 | 0.8775 | 0.7937 | 0.8778 | 0.7557 | 186 | |

| 3 | 0.8782 | 0.7936 | 0.8778 | 0.7555 | 189 | |

| Avg | 0.8787 | 0.7940 | 0.8781 | 0.7561 | - | |

| patch2_em24_win09 | 1 | 0.8864 | 0.8038 | 0.8851 | 0.7703 | 107 |

| 2 | 0.8818 | 0.8038 | 0.8850 | 0.7701 | 111 | |

| 3 | 0.8855 | 0.8037 | 0.8850 | 0.7700 | 83 | |

| Avg | 0.8846 | 0.8038 | 0.8850 | 0.7701 | - | |

| patch2_em24_win18 | 1 | 0.8802 | 0.8019 | 0.8837 | 0.7674 | 78 |

| 2 | 0.8827 | 0.8013 | 0.8834 | 0.7668 | 81 | |

| 3 | 0.8872 | 0.8011 | 0.8833 | 0.7666 | 91 | |

| Avg | 0.8834 | 0.8014 | 0.8835 | 0.7669 | - | |

| patch2_em96_win09 | 1 | 0.8931 | 0.8139 | 0.8920 | 0.7839 | 46 |

| 2 | 0.8909 | 0.8138 | 0.8919 | 0.7837 | 42 | |

| 3 | 0.8898 | 0.8138 | 0.8918 | 0.7837 | 47 | |

| Avg | 0.8913 | 0.8138 | 0.8919 | 0.7838 | - | |

| patch2_em96_win18 | 1 | 0.8900 | 0.8124 | 0.8909 | 0.7819 | 47 |

| 2 | 0.8898 | 0.8121 | 0.8907 | 0.7814 | 53 | |

| 3 | 0.8940 | 0.8119 | 0.8906 | 0.7813 | 39 | |

| Avg | 0.8913 | 0.8121 | 0.8907 | 0.7815 | - |

| Methods | Parameters (Million) | OA | mIoU | F1-Score | Kappa |

|---|---|---|---|---|---|

| U-Net | 7.7 | 0.8271 | 0.7412 | 0.8390 | 0.6780 |

| DeepLabV3 | 39.6 | 0.8339 | 0.7471 | 0.8440 | 0.6881 |

| Swin Transformer+PFN (patch2_em96_win09) | 62.3 | 0.8913 | 0.8138 | 0.8919 | 0.7838 |

| Experiment Group | Training Time (Hours) | Model Parameters (Million) | Model Size (MB) |

|---|---|---|---|

| patch4_em24_win09 | 8.8 | 8.9 | 35 |

| patch2_em24_win09 | 9.0 | ||

| patch4_em24_win18 | 9.4 | 8.9 | 35.6 |

| patch2_em24_win18 | 9.8 | ||

| patch4_em96_win09 | 10.4 | 62.3 | 249.2 |

| patch2_em96_win09 | 10.8 | ||

| patch4_em96_win18 | 12.4 | 62.3 | 249.2 |

| patch2_em96_win18 | 12.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Song, J.; Zhu, A.-X.; Zhu, Y. Transformer-Based Semantic Segmentation for Extraction of Building Footprints from Very-High-Resolution Images. Sensors 2023, 23, 5166. https://doi.org/10.3390/s23115166

Song J, Zhu A-X, Zhu Y. Transformer-Based Semantic Segmentation for Extraction of Building Footprints from Very-High-Resolution Images. Sensors. 2023; 23(11):5166. https://doi.org/10.3390/s23115166

Chicago/Turabian StyleSong, Jia, A-Xing Zhu, and Yunqiang Zhu. 2023. "Transformer-Based Semantic Segmentation for Extraction of Building Footprints from Very-High-Resolution Images" Sensors 23, no. 11: 5166. https://doi.org/10.3390/s23115166

APA StyleSong, J., Zhu, A.-X., & Zhu, Y. (2023). Transformer-Based Semantic Segmentation for Extraction of Building Footprints from Very-High-Resolution Images. Sensors, 23(11), 5166. https://doi.org/10.3390/s23115166