Multimodal Emotion Detection via Attention-Based Fusion of Extracted Facial and Speech Features

Abstract

1. Introduction

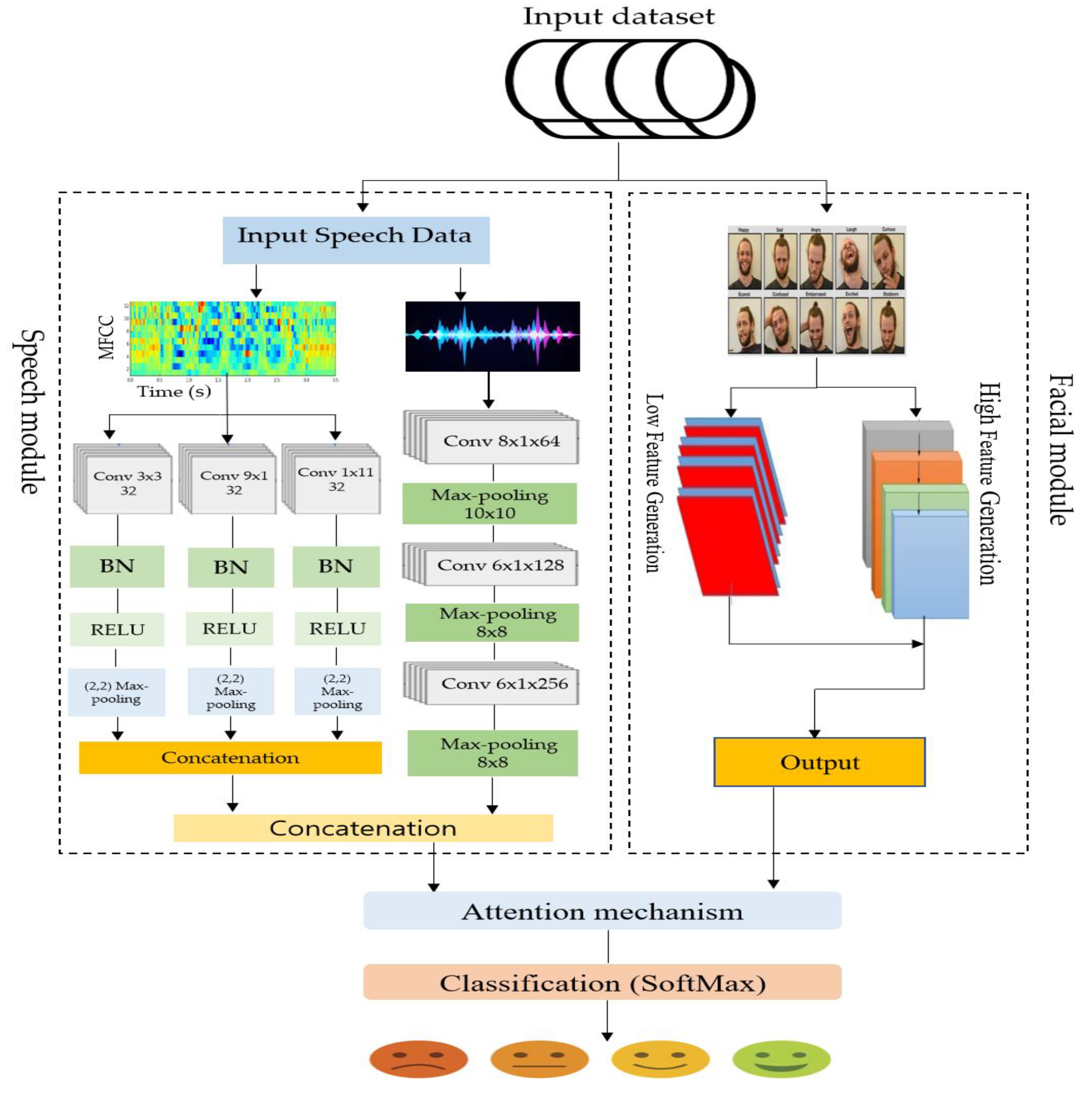

- This study proposes a novel approach to multimodal emotion recognition that combines facial and speech cues through an attention mechanism. By exploiting complementary information from both modalities, the method addresses the shortcomings of unimodal systems and improves emotion recognition accuracy.

- Both time-domain (raw waveform) and spectral (MFCC) information are used to cope with speech inputs of varying length. This allows the model to focus on the most informative parts of the speech signal, reducing the loss of important information.

- For the facial modality, low- and high-level facial features are generated with a pretrained CNN. Low-level features capture local facial details, whereas high-level features capture the global facial expression. Using both levels improves emotion recognition accuracy because together they provide a more comprehensive representation of facial expressions.

- This study improves the generalization of the multimodal emotion recognition system by reducing overfitting.

- Finally, an attention mechanism is used to focus on the most informative parts of the input data and to handle speech and image features of different sizes.
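The bullets above describe attention that focuses on the most informative parts of the input and copes with speech and image features of different sizes. As a minimal sketch of that idea (not the authors' architecture, which this section does not specify), the pure-Python code below uses softmax attention pooling to collapse a variable-length set of feature vectors into one fixed-size vector per modality and then concatenates the two. The scoring vectors `w_speech` and `w_face`, the function names, and concatenation as the fusion step are hypothetical choices for illustration; in a trained model the scoring weights would be learned.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def softmax(scores: Sequence[float]) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(items: Sequence[Sequence[float]], w: Sequence[float]) -> Tuple[Vector, Vector]:
    """Pool a variable-length list of D-dim feature vectors into one D-dim vector.

    Each item (e.g. a speech frame or a facial-feature region) is scored by a
    dot product with the hypothetical scoring vector w. Softmax turns the
    scores into weights summing to 1, and the output is the weighted sum, so
    its size does not depend on how many items there are.
    """
    scores = [sum(x * wi for x, wi in zip(item, w)) for item in items]
    weights = softmax(scores)
    dim = len(items[0])
    pooled = [sum(a * item[d] for a, item in zip(weights, items)) for d in range(dim)]
    return pooled, weights


def fuse(speech: Sequence[Sequence[float]], face: Sequence[Sequence[float]],
         w_speech: Sequence[float], w_face: Sequence[float]) -> Vector:
    """Hypothetical fusion: attention-pool each modality, then concatenate."""
    speech_vec, _ = attention_pool(speech, w_speech)
    face_vec, _ = attention_pool(face, w_face)
    return speech_vec + face_vec


# Usage: utterances of different lengths yield pooled vectors of the same size.
short_utt = [[0.1, 0.2, 0.3], [0.5, 0.1, 0.0]]
long_utt = [[0.1, 0.2, 0.3]] * 7 + [[0.9, 0.9, 0.9]]
face_feats = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

pooled_short, _ = attention_pool(short_utt, [1.0, 1.0, 1.0])
pooled_long, long_weights = attention_pool(long_utt, [1.0, 1.0, 1.0])
fused = fuse(long_utt, face_feats, [1.0, 1.0, 1.0], [0.0, 0.0])
print(len(pooled_short), len(pooled_long), len(fused))  # 3 3 5
```

Because the weights are normalized by softmax, a single salient frame (the last row of `long_utt`) receives the largest weight no matter how many ordinary frames surround it, while an all-zero scoring vector reduces attention pooling to a plain average.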

2. Related Works

3. The Proposed System

3.1. Facial Module

Low Feature Generation Pyramid (LFGP)

3.2. Speech Module

3.2.1. MFCC Feature Extractor

- increasing the CNN’s depth by adding more layers

- using average pooling or a larger stride

- using dilated (expanded) convolutions

- applying a separate convolution to each channel of the input (depthwise convolution)
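The items listed above are commonly cited ways of enlarging a CNN's receptive field; reading the list that way is an assumption, since its introductory sentence is missing here. The sketch below computes the receptive field of a 1D convolutional stack (for example, along the time axis of an MFCC matrix) with the standard recurrence, so the effect of depth, stride or pooling, and dilation can be compared directly. All layer configurations are hypothetical.

```python
from typing import Iterable, Tuple

# (kernel_size, stride, dilation) for one layer along a single axis
Layer = Tuple[int, int, int]


def receptive_field(layers: Iterable[Layer]) -> int:
    """Receptive field, in input samples, of the last layer of a 1D stack.

    Standard recurrence: rf <- rf + (k - 1) * d * jump, then jump <- jump * s,
    where jump is the cumulative stride of the layer's input.
    """
    rf, jump = 1, 1
    for k, s, d in layers:
        rf += (k - 1) * d * jump
        jump *= s
    return rf


baseline = [(3, 1, 1)] * 3                             # three 3-tap convolutions
deeper = [(3, 1, 1)] * 5                               # more layers
strided = [(3, 2, 1), (3, 1, 1), (3, 1, 1)]            # stride 2 in the first layer
pooled = [(3, 1, 1), (2, 2, 1), (3, 1, 1), (3, 1, 1)]  # 2x average pooling after layer 1
dilated = [(3, 1, 1), (3, 1, 2), (3, 1, 4)]            # dilation rates 1, 2, 4

for name, stack in [("baseline", baseline), ("deeper", deeper), ("strided", strided),
                    ("pooled", pooled), ("dilated", dilated)]:
    print(name, receptive_field(stack))
# baseline 7, deeper 11, strided 11, pooled 12, dilated 15
```

A depthwise (per-channel) convolution has the same receptive field as a standard convolution with the same kernel size; its contribution is lower computational cost, which makes larger kernels or deeper stacks affordable.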

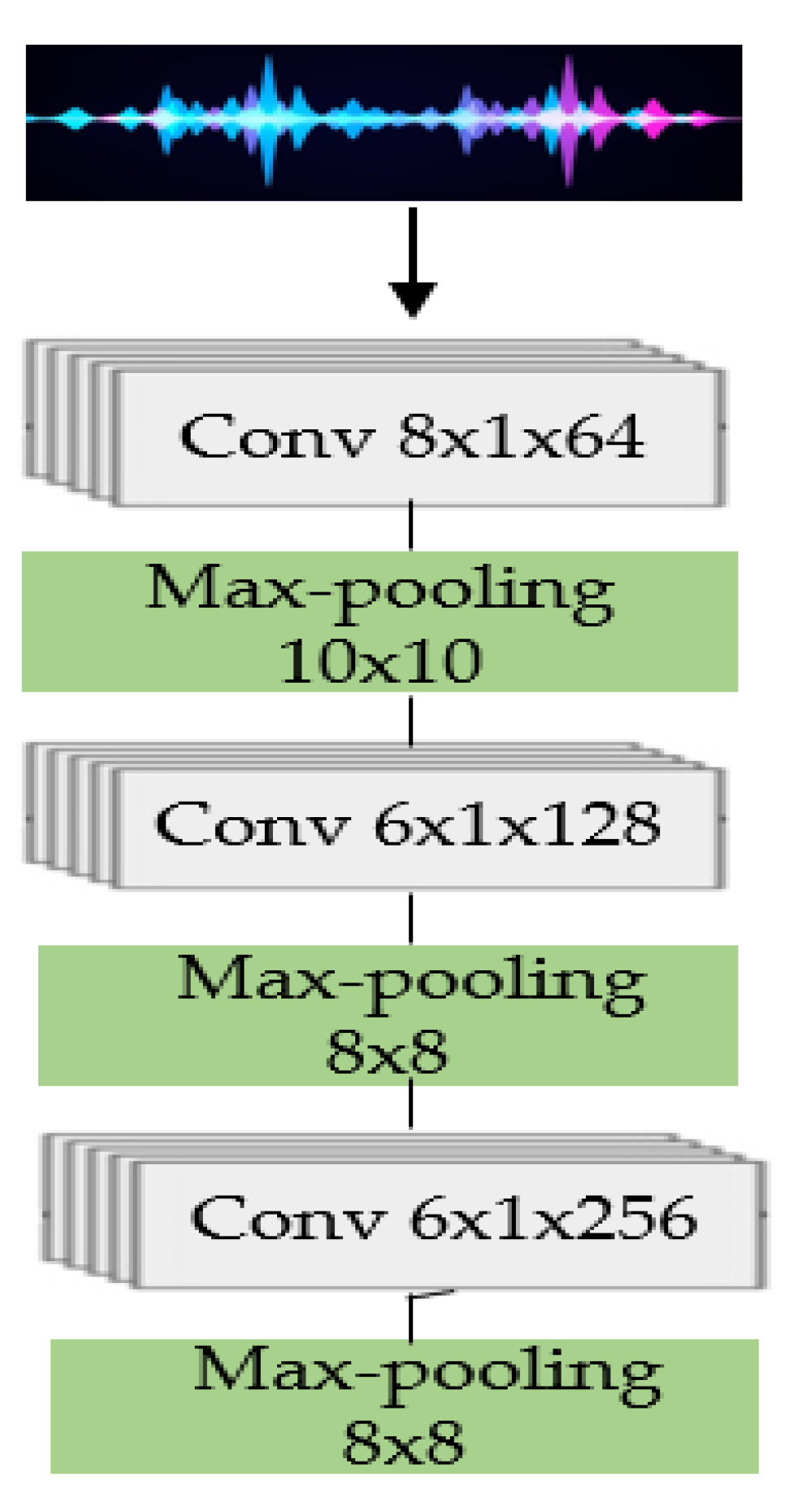

3.2.2. Waveform Feature Extractor

3.3. Attention Mechanism

4. Experiment

4.1. Datasets

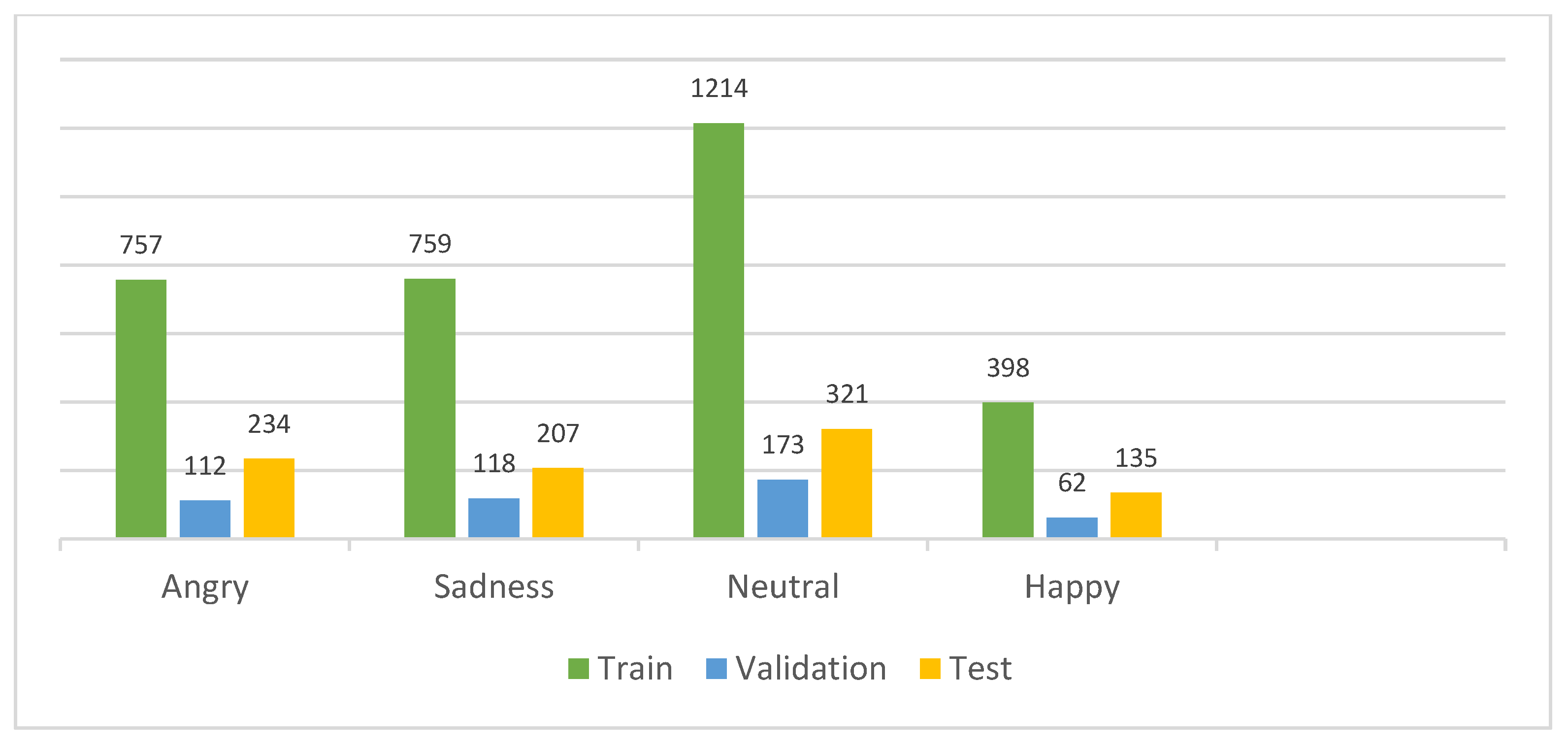

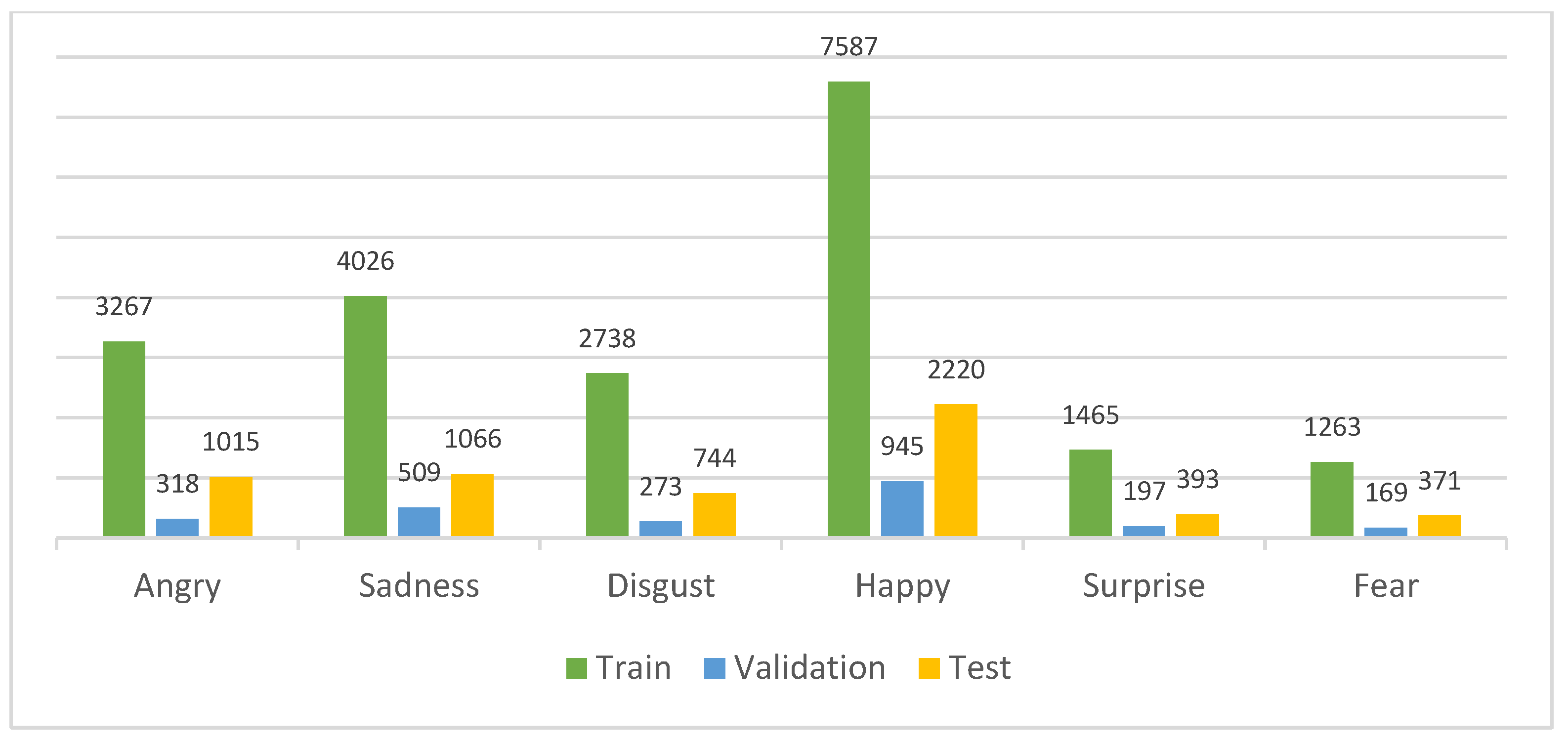

4.1.1. The Interactive Emotional Dyadic Motion Capture (IEMOCAP) [49]

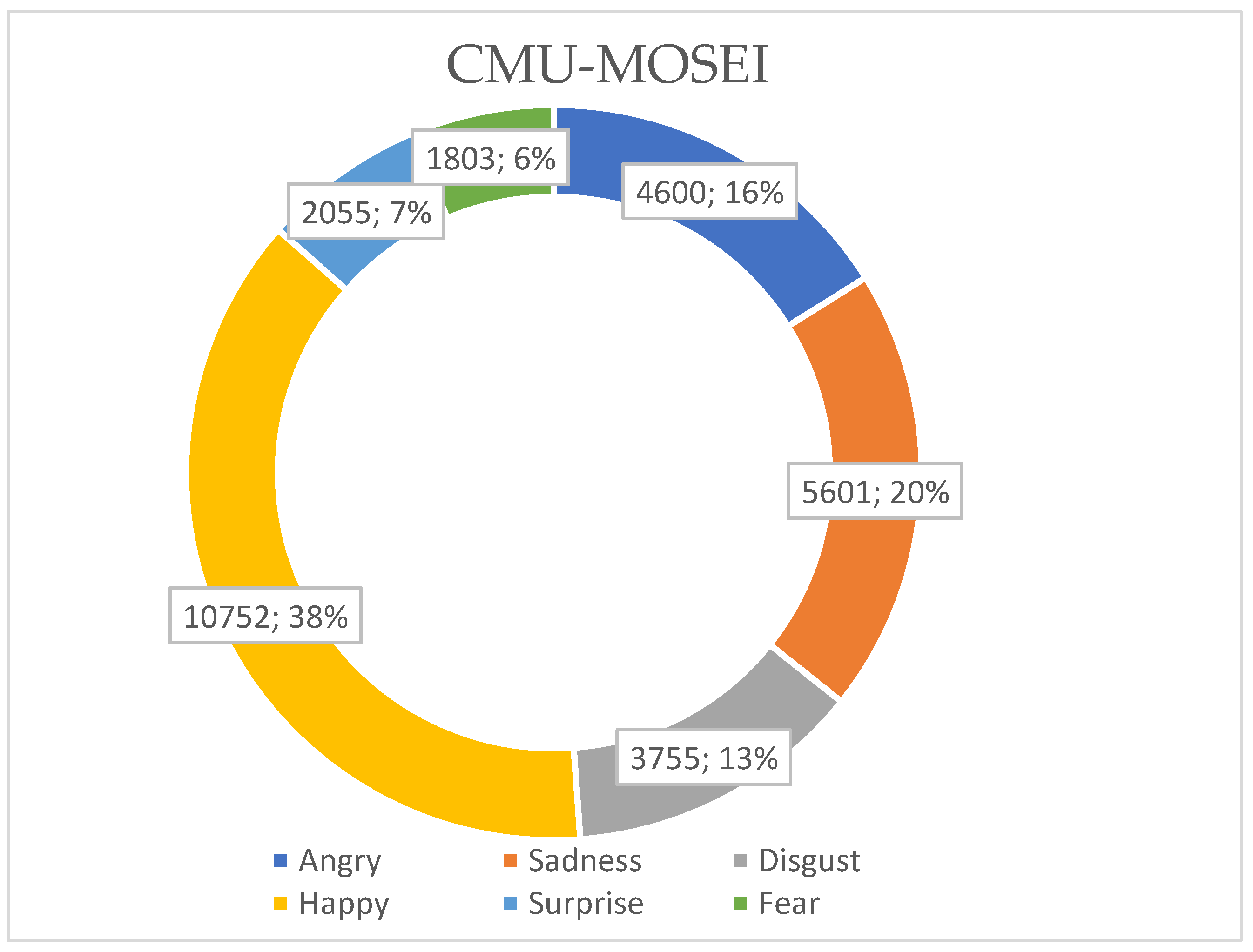

4.1.2. The CMU Multimodal Opinion Sentiment and Emotion Intensity (CMU-MOSEI) [51]

4.2. Evaluation Metrics

4.3. Implementation Details

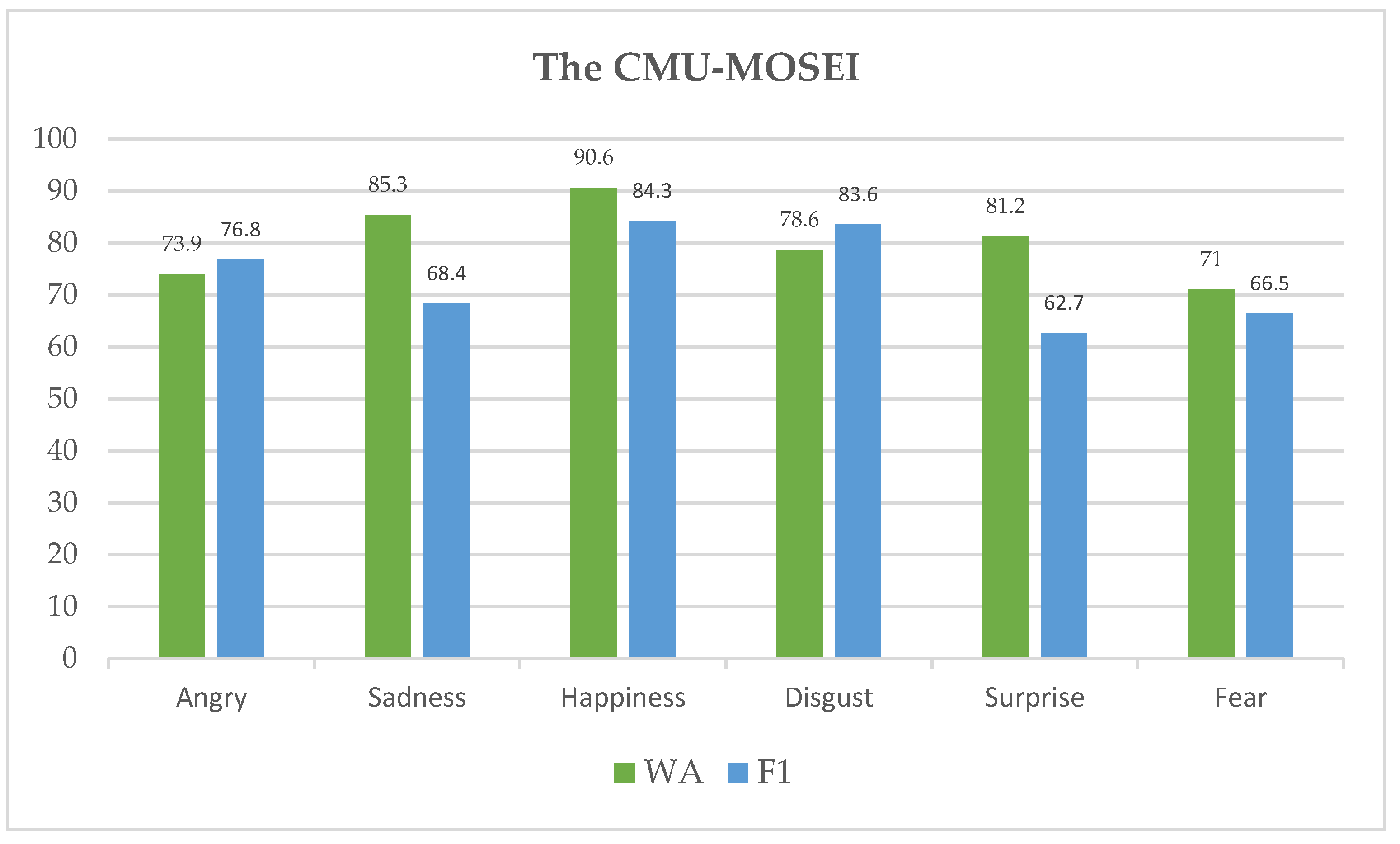

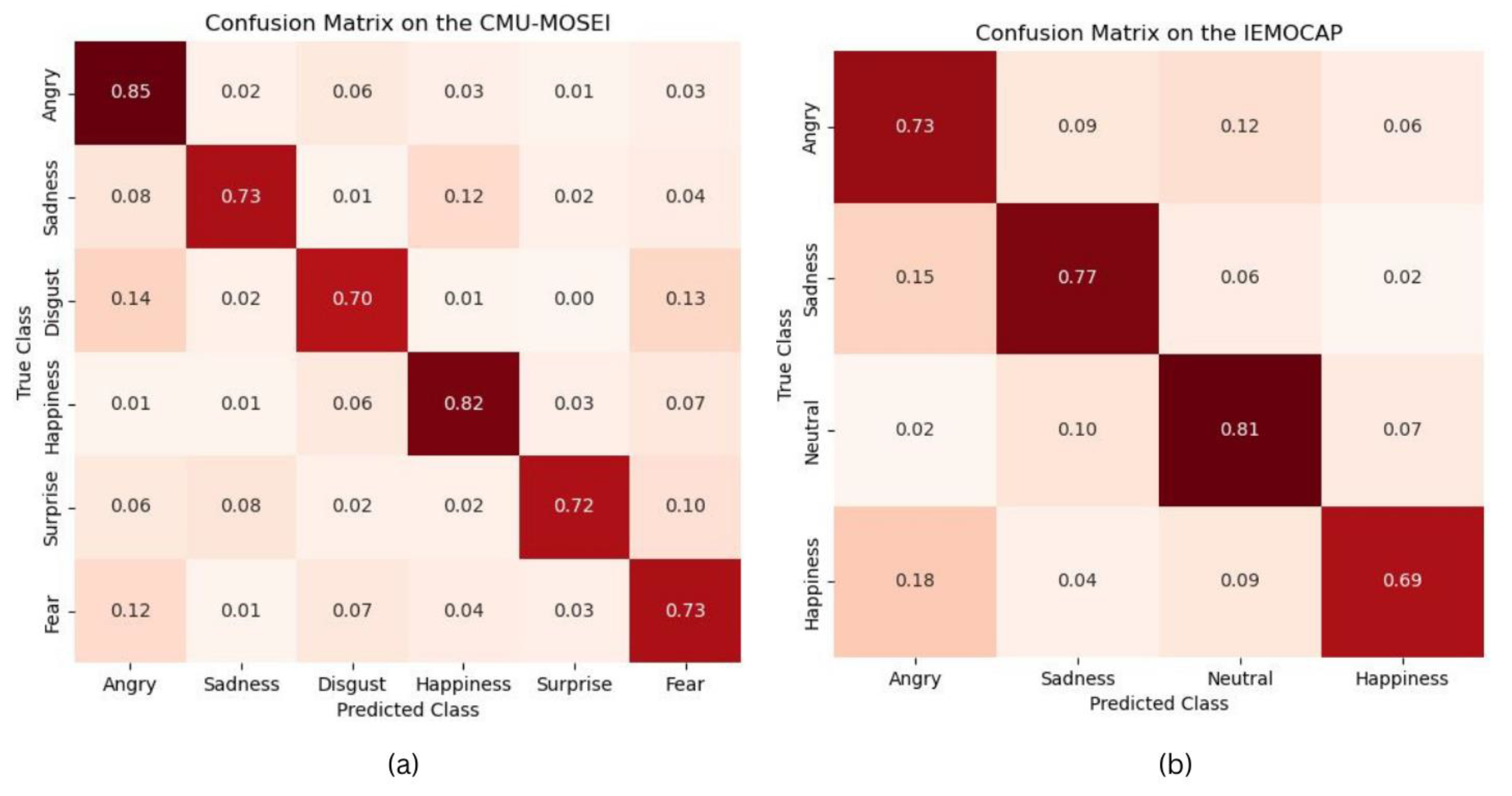

4.4. Experimental Performance and Its Comparison

- Dai et al. [20] built a fully end-to-end multimodal emotion recognition model that links the two stages of the conventional pipeline (unimodal feature extraction and multimodal learning) and optimizes them jointly.

- Xia et al. [21] proposed a video emotion recognition model with a semantic improvement module that guides the audio/visual encoder with text information, followed by a multimodal bottleneck transformer that reinforces the audio and visual representations through cross-modal dynamic interactions.

- Multimodal transformer [22]: this technique aims to improve emotion recognition accuracy by deploying a cross-modal translator that can translate among three distinct modalities.

4.5. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Balci, S.; Demirci, G.M.; Demirhan, H.; Sarp, S. Sentiment Analysis Using State of the Art Machine Learning Techniques. In Digital Interaction and Machine Intelligence, 9th Machine Intelligence and Digital Interaction Conference, Warsaw, Poland, 9–10 December 2021; Lecture Notes in Networks and Systems; Biele, C., Kacprzyk, J., Kopeć, W., Owsiński, J.W., Romanowski, A., Sikorski, M., Eds.; Springer: Cham, Switzerland, 2022; Volume 440.

2. Ahmed, N.; Al Aghbari, Z.; Girija, S. A systematic survey on multimodal emotion recognition using learning algorithms. Intell. Syst. Appl. 2023, 17, 200171.

3. Gu, X.; Shen, Y.; Xu, J. Multimodal Emotion Recognition in Deep Learning: A Survey. In Proceedings of the 2021 International Conference on Culture-Oriented Science & Technology (ICCST), Beijing, China, 18–21 November 2021; pp. 77–82.

4. Tang, G.; Xie, Y.; Li, K.; Liang, R.; Zhao, L. Multimodal emotion recognition from facial expression and speech based on feature fusion. Multimed. Tools Appl. 2022, 82, 16359–16373.

5. Luna-Jiménez, C.; Griol, D.; Callejas, Z.; Kleinlein, R.; Montero, J.M.; Fernández-Martínez, F. Multimodal Emotion Recognition on RAVDESS Dataset Using Transfer Learning. Sensors 2021, 21, 7665.

6. Sajjad, M.; Ullah, F.U.M.; Ullah, M.; Christodoulou, G.; Cheikh, F.A.; Hijji, M.; Muhammad, K.; Rodrigues, J.J. A comprehensive survey on deep facial expression recognition: Challenges, applications, and future guidelines. Alex. Eng. J. 2023, 68, 817–840.

7. Song, Z. Facial Expression Emotion Recognition Model Integrating Philosophy and Machine Learning Theory. Front. Psychol. 2021, 12, 759485.

8. Abdusalomov, A.B.; Safarov, F.; Rakhimov, M.; Turaev, B.; Whangbo, T.K. Improved Feature Parameter Extraction from Speech Signals Using Machine Learning Algorithm. Sensors 2022, 22, 8122.

9. Hsu, J.H.; Su, M.H.; Wu, C.H.; Chen, Y.H. Speech emotion recognition considering nonverbal vocalization in affective conversations. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 1675–1686.

10. Ayvaz, U.; Gürüler, H.; Khan, F.; Ahmed, N.; Whangbo, T.; Bobomirzaevich, A.A. Automatic speaker recognition using mel-frequency cepstral coefficients through machine learning. Comput. Mater. Contin. 2022, 71, 5511–5521.

11. Makhmudov, F.; Mukhiddinov, M.; Abdusalomov, A.; Avazov, K.; Khamdamov, U.; Cho, Y.I. Improvement of the end-to-end scene text recognition method for “text-to-speech” conversion. Int. J. Wavelets Multiresolution Inf. Process. 2020, 18, 2050052.

12. Vijayvergia, A.; Kumar, K. Selective shallow models strength integration for emotion detection using GloVe and LSTM. Multimed. Tools Appl. 2021, 80, 28349–28363.

13. Farkhod, A.; Abdusalomov, A.; Makhmudov, F.; Cho, Y.I. LDA-Based Topic Modeling Sentiment Analysis Using Topic/Document/Sentence (TDS) Model. Appl. Sci. 2021, 11, 11091.

14. Pan, J.; Fang, W.; Zhang, Z.; Chen, B.; Zhang, Z.; Wang, S. Multimodal Emotion Recognition based on Facial Expressions, Speech, and EEG. IEEE Open J. Eng. Med. Biol. 2023, 1–8.

15. Liu, X.; Xu, Z.; Huang, K. Multimodal Emotion Recognition Based on Cascaded Multichannel and Hierarchical Fusion. Comput. Intell. Neurosci. 2023, 2023, 1–18.

16. Farkhod, A.; Abdusalomov, A.B.; Mukhiddinov, M.; Cho, Y.-I. Development of Real-Time Landmark-Based Emotion Recognition CNN for Masked Faces. Sensors 2022, 22, 8704.

17. Chaudhari, A.; Bhatt, C.; Krishna, A.; Travieso-González, C.M. Facial Emotion Recognition with Inter-Modality-Attention-Transformer-Based Self-Supervised Learning. Electronics 2023, 12, 288.

18. Krishna, N.D.; Patil, A. Multimodal Emotion Recognition Using Cross-Modal Attention and 1D Convolutional Neural Networks. Interspeech 2020, 2020, 4243–4247.

19. Xu, Y.; Su, H.; Ma, G.; Liu, X. A novel dual-modal emotion recognition algorithm with fusing hybrid features of audio signal and speech context. Complex Intell. Syst. 2023, 9, 951–963.

20. Dai, W.; Cahyawijaya, S.; Liu, Z.; Fung, P. Multimodal end-to-end sparse model for emotion recognition. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics, Online, 6–11 June 2021; pp. 5305–5316.

21. Xia, X.; Zhao, Y.; Jiang, D. Multimodal interaction enhanced representation learning for video emotion recognition. Front. Neurosci. 2022, 16, 1086380.

22. Yoon, Y.C. Can We Exploit All Datasets? Multimodal Emotion Recognition Using Cross-Modal Translation. IEEE Access 2022, 10, 64516–64524.

23. Yang, K.; Wang, C.; Gu, Y.; Sarsenbayeva, Z.; Tag, B.; Dingler, T.; Wadley, G.; Goncalves, J. Behavioral and Physiological Signals-Based Deep Multimodal Approach for Mobile Emotion Recognition. IEEE Trans. Affect. Comput. 2021, 14, 1082–1097.

24. Tashu, T.M.; Hajiyeva, S.; Horvath, T. Multimodal Emotion Recognition from Art Using Sequential Co-Attention. J. Imaging 2021, 7, 157.

25. Kutlimuratov, A.; Abdusalomov, A.; Whangbo, T.K. Evolving Hierarchical and Tag Information via the Deeply Enhanced Weighted Non-Negative Matrix Factorization of Rating Predictions. Symmetry 2020, 12, 1930.

26. Dang, X.; Chen, Z.; Hao, Z.; Ga, M.; Han, X.; Zhang, X.; Yang, J. Wireless Sensing Technology Combined with Facial Expression to Realize Multimodal Emotion Recognition. Sensors 2023, 23, 338.

27. Nguyen, D.; Nguyen, D.T.; Sridharan, S.; Denman, S.; Nguyen, T.T.; Dean, D.; Fookes, C. Meta-transfer learning for emotion recognition. Neural Comput. Appl. 2023, 35, 10535–10549.

28. Dresvyanskiy, D.; Ryumina, E.; Kaya, H.; Markitantov, M.; Karpov, A.; Minker, W. End-to-End Modeling and Transfer Learning for Audiovisual Emotion Recognition in-the-Wild. Multimodal Technol. Interact. 2022, 6, 11.

29. Wei, W.; Jia, Q.; Feng, Y.; Chen, G.; Chu, M. Multi-modal facial expression feature based on deep-neural networks. J. Multimodal User Interfaces 2019, 14, 17–23.

30. Gupta, S.; Kumar, P.; Tekchandani, R.K. Facial emotion recognition based real-time learner engagement detection system in online learning context using deep learning models. Multimed. Tools Appl. 2023, 82, 11365–11394.

31. Chowdary, M.K.; Nguyen, T.N.; Hemanth, D.J. Deep learning-based facial emotion recognition for human–computer interaction applications. Neural Comput. Appl. 2021, 1–18.

32. Li, J.; Zhang, X.; Huang, L.; Li, F.; Duan, S.; Sun, Y. Speech Emotion Recognition Using a Dual-Channel Complementary Spectrogram and the CNN-SSAE Neutral Network. Appl. Sci. 2022, 12, 9518.

33. Kutlimuratov, A.; Abdusalomov, A.B.; Oteniyazov, R.; Mirzakhalilov, S.; Whangbo, T.K. Modeling and Applying Implicit Dormant Features for Recommendation via Clustering and Deep Factorization. Sensors 2022, 22, 8224.

34. Zou, H.; Si, Y.; Chen, C.; Rajan, D.; Chng, E.S. Speech Emotion Recognition with Co-Attention Based Multi-Level Acoustic Information. In Proceedings of the ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022.

35. Kanwal, S.; Asghar, S.; Ali, H. Feature selection enhancement and feature space visualization for speech-based emotion recognition. PeerJ Comput. Sci. 2022, 8, e1091.

36. Du, X.; Yang, J.; Xie, X. Multimodal emotion recognition based on feature fusion and residual connection. In Proceedings of the 2023 IEEE 2nd International Conference on Electrical Engineering, Big Data and Algorithms (EEBDA), Changchun, China, 24–26 February 2023; pp. 373–377.

37. Huddar, M.G.; Sannakki, S.S.; Rajpurohit, V.S. Attention-based Multi-modal Sentiment Analysis and Emotion Detection in Conversation using RNN. Int. J. Interact. Multimed. Artif. Intell. 2021, 6, 112.

38. Zhao, Y.; Guo, M.; Sun, X.; Chen, X.; Zhao, F. Attention-based sensor fusion for emotion recognition from human motion by combining convolutional neural network and weighted kernel support vector machine and using inertial measurement unit signals. IET Signal Process. 2023, 17, e12201.

39. Dobrišek, S.; Gajšek, R.; Mihelič, F.; Pavešić, N.; Štruc, V. Towards Efficient Multi-Modal Emotion Recognition. Int. J. Adv. Robot. Syst. 2013, 10, 53.

40. Mamieva, D.; Abdusalomov, A.B.; Mukhiddinov, M.; Whangbo, T.K. Improved Face Detection Method via Learning Small Faces on Hard Images Based on a Deep Learning Approach. Sensors 2023, 23, 502.

41. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

42. Makhmudov, F.; Kutlimuratov, A.; Akhmedov, F.; Abdallah, M.S.; Cho, Y.-I. Modeling Speech Emotion Recognition via Attention-Oriented Parallel CNN Encoders. Electronics 2022, 11, 4047.

43. Araujo, A.; Norris, W.; Sim, J. Computing Receptive Fields of Convolutional Neural Networks. Distill 2019, 4, e21.

44. Wang, C.; Sun, H.; Zhao, R.; Cao, X. Research on Bearing Fault Diagnosis Method Based on an Adaptive Anti-Noise Network under Long Time Series. Sensors 2020, 20, 7031.

45. Hsu, S.-M.; Chen, S.-H.; Huang, T.-R. Personal Resilience Can Be Well Estimated from Heart Rate Variability and Paralinguistic Features during Human–Robot Conversations. Sensors 2021, 21, 5844.

46. Mirsamadi, S.; Barsoum, E.; Zhang, C. Automatic speech emotion recognition using recurrent neural networks with local attention. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 2227–2231.

47. Ayetiran, E.F. Attention-based aspect sentiment classification using enhanced learning through CNN-BiLSTM networks. Knowl.-Based Syst. 2022, 252.

48. Poria, S.; Cambria, E.; Hazarika, D.; Mazumder, N.; Zadeh, A.; Morency, L.-P. Multi-level Multiple Attentions for Contextual Multimodal Sentiment Analysis. In Proceedings of the 2017 IEEE International Conference on Data Mining (ICDM), New Orleans, LA, USA, 18–21 November 2017; pp. 1033–1038.

49. Busso, C.; Bulut, M.; Lee, C.-C.; Kazemzadeh, A.; Mower, E.; Kim, S.; Chang, J.N.; Lee, S.; Narayanan, S.S. IEMOCAP: Interactive emotional dyadic motion capture database. Lang. Resour. Eval. 2008, 42, 335–359.

50. Poria, S.; Majumder, N.; Hazarika, D.; Cambria, E.; Gelbukh, A.; Hussain, A. Multimodal Sentiment Analysis: Addressing Key Issues and Setting Up the Baselines. IEEE Intell. Syst. 2018, 33, 17–25.

51. Zadeh, A.; Liang, P.P.; Poria, S.; Cambria, E.; Morency, L.-P. Multimodal language analysis in the wild: CMU-MOSEI dataset and interpretable dynamic fusion graph. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Long Papers), Melbourne, VIC, Australia, 15–20 July 2018; pp. 2236–2246.

52. Ilyosov, A.; Kutlimuratov, A.; Whangbo, T.-K. Deep-Sequence–Aware Candidate Generation for e-Learning System. Processes 2021, 9, 1454.

53. Safarov, F.; Kutlimuratov, A.; Abdusalomov, A.B.; Nasimov, R.; Cho, Y.-I. Deep Learning Recommendations of E-Education Based on Clustering and Sequence. Electronics 2023, 12, 809.

| Category | Item | Specification |
|---|---|---|
| Software | Programming tools | Python, Pandas, OpenCV, Librosa, Keras-TensorFlow |
| | OS | Windows 10 |
| Hardware | CPU | AMD Ryzen Threadripper 1900X 8-Core Processor 3.80 GHz, TSMC, South Korea |
| | GPU | Titan Xp 32 GB |
| | RAM | 128 GB |
| Parameters | Epochs | 100 |
| | Batch size | 32 |
| | Learning rate | 0.001, Adam optimizer |
| | Regularization | L2 regularization, batch normalization |
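The configuration table lists Adam with a learning rate of 0.001 and L2 regularization. To make concrete what one optimizer step does under that configuration, the following is a textbook Adam update in pure Python with an L2 penalty folded into the gradient. It is an illustration, not the Keras implementation the authors used: β1 = 0.9, β2 = 0.999, ε = 1e-8, and the L2 coefficient are assumed values because the table does not give them, and batch normalization is not modeled.

```python
import math
from typing import Dict, List, Sequence


def init_adam_state(n_params: int) -> Dict:
    """Zero-initialised first/second moment estimates and a step counter."""
    return {"t": 0, "m": [0.0] * n_params, "v": [0.0] * n_params}


def adam_step(params: Sequence[float], grads: Sequence[float], state: Dict,
              lr: float = 0.001, beta1: float = 0.9, beta2: float = 0.999,
              eps: float = 1e-8, l2: float = 0.0) -> List[float]:
    """One Adam update with an L2 penalty l2 * p**2 added to the loss.

    The penalty contributes 2 * l2 * p to each gradient, pulling weights
    toward zero; with l2 = 0 this is plain Adam.
    """
    state["t"] += 1
    t = state["t"]
    updated = []
    for i, (p, g) in enumerate(zip(params, grads)):
        g = g + 2.0 * l2 * p                                         # add L2 gradient
        state["m"][i] = beta1 * state["m"][i] + (1 - beta1) * g      # 1st moment
        state["v"][i] = beta2 * state["v"][i] + (1 - beta2) * g * g  # 2nd moment
        m_hat = state["m"][i] / (1 - beta1 ** t)                     # bias correction
        v_hat = state["v"][i] / (1 - beta2 ** t)
        updated.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
    return updated


# Usage: on the first step each weight moves by roughly lr, whatever the
# gradient scale, because m_hat / sqrt(v_hat) equals sign(g) when t = 1.
state = init_adam_state(2)
params = adam_step([1.0, -2.0], [0.5, 0.0], state, lr=0.001, l2=0.01)
print(params)  # approximately [0.999, -1.999]
```

The second weight has a zero data gradient, so its move toward zero comes entirely from the L2 term, which is the mechanism by which L2 regularization discourages large weights.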

| Dataset | Model | WA (%) | F1 (%) |
|---|---|---|---|
| IEMOCAP | Xia et al. [21] | − | 64.6 |
| | Multimodal transformer [22] | 72.8 | 59.9 |
| | Dai et al. [20] | − | 57.4 |
| | Our system | 74.6 | 66.1 |
| CMU-MOSEI | Xia et al. [21] | 69.6 | 50.9 |
| | Multimodal transformer [22] | 80.4 | 67.4 |
| | Dai et al. [20] | 66.8 | 46.8 |
| | Our system | 80.7 | 73.7 |
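The comparison table reports WA and F1. Since the metric definitions are not reproduced in this section, the sketch below assumes the convention common in IEMOCAP work, where WA (weighted accuracy) is overall accuracy across all test samples, and shows both support-weighted and macro-averaged F1 because the averaging used is not stated here. The toy labels and function names are made up for illustration.

```python
from collections import Counter
from typing import Hashable, Sequence


def weighted_accuracy(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Overall accuracy: correct predictions / all samples, so each class is
    implicitly weighted by its frequency."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def f1_for_class(y_true, y_pred, label) -> float:
    """One-vs-rest F1 for a single class; 0.0 when there are no true positives."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def weighted_f1(y_true, y_pred) -> float:
    """Per-class F1 averaged with weights proportional to class support."""
    support = Counter(y_true)
    n = len(y_true)
    return sum(f1_for_class(y_true, y_pred, c) * k / n for c, k in support.items())


def macro_f1(y_true, y_pred) -> float:
    """Unweighted mean of per-class F1 over the classes present in y_true."""
    classes = set(y_true)
    return sum(f1_for_class(y_true, y_pred, c) for c in classes) / len(classes)


y_true = ["angry", "angry", "happy", "neutral"]
y_pred = ["angry", "happy", "happy", "neutral"]
print(weighted_accuracy(y_true, y_pred))  # 0.75
print(round(weighted_f1(y_true, y_pred), 4), round(macro_f1(y_true, y_pred), 4))  # 0.75 0.7778
```

On imbalanced data such as IEMOCAP the two F1 variants can diverge noticeably, so a comparison across papers is only meaningful when they use the same averaging.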

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mamieva, D.; Abdusalomov, A.B.; Kutlimuratov, A.; Muminov, B.; Whangbo, T.K. Multimodal Emotion Detection via Attention-Based Fusion of Extracted Facial and Speech Features. Sensors 2023, 23, 5475. https://doi.org/10.3390/s23125475
