An Improved YOLOv7 Lightweight Detection Algorithm for Obscured Pedestrians

Abstract

1. Introduction

2. Materials and Methods

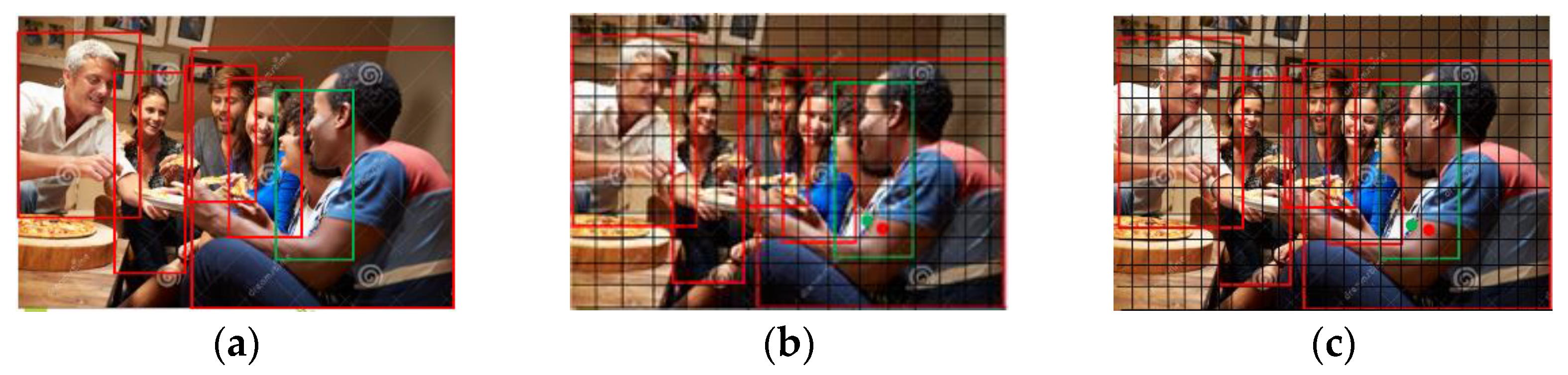

2.1. Dataset

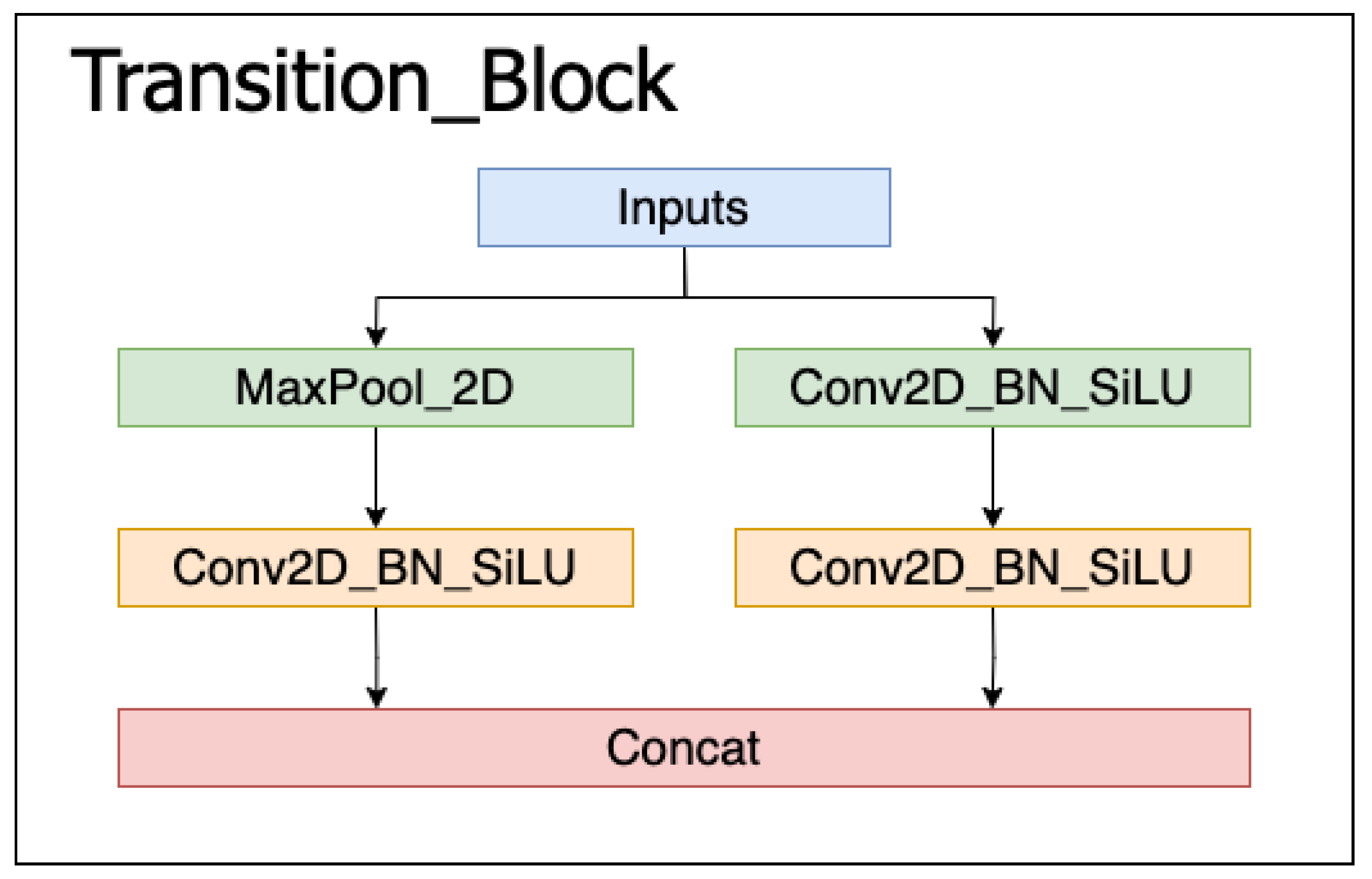

2.2. Structure of YOLOv7

3. Improved YOLOv7

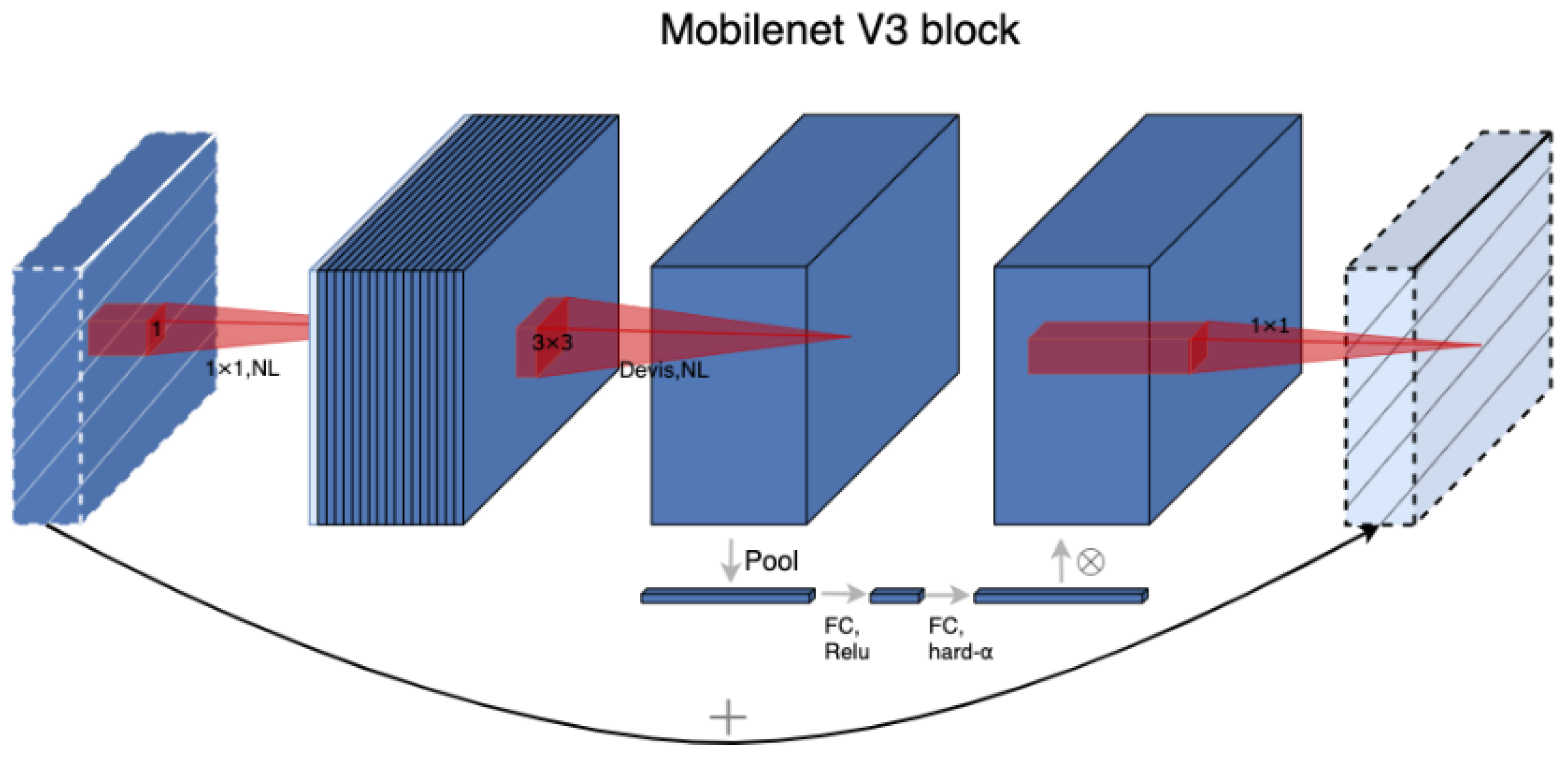

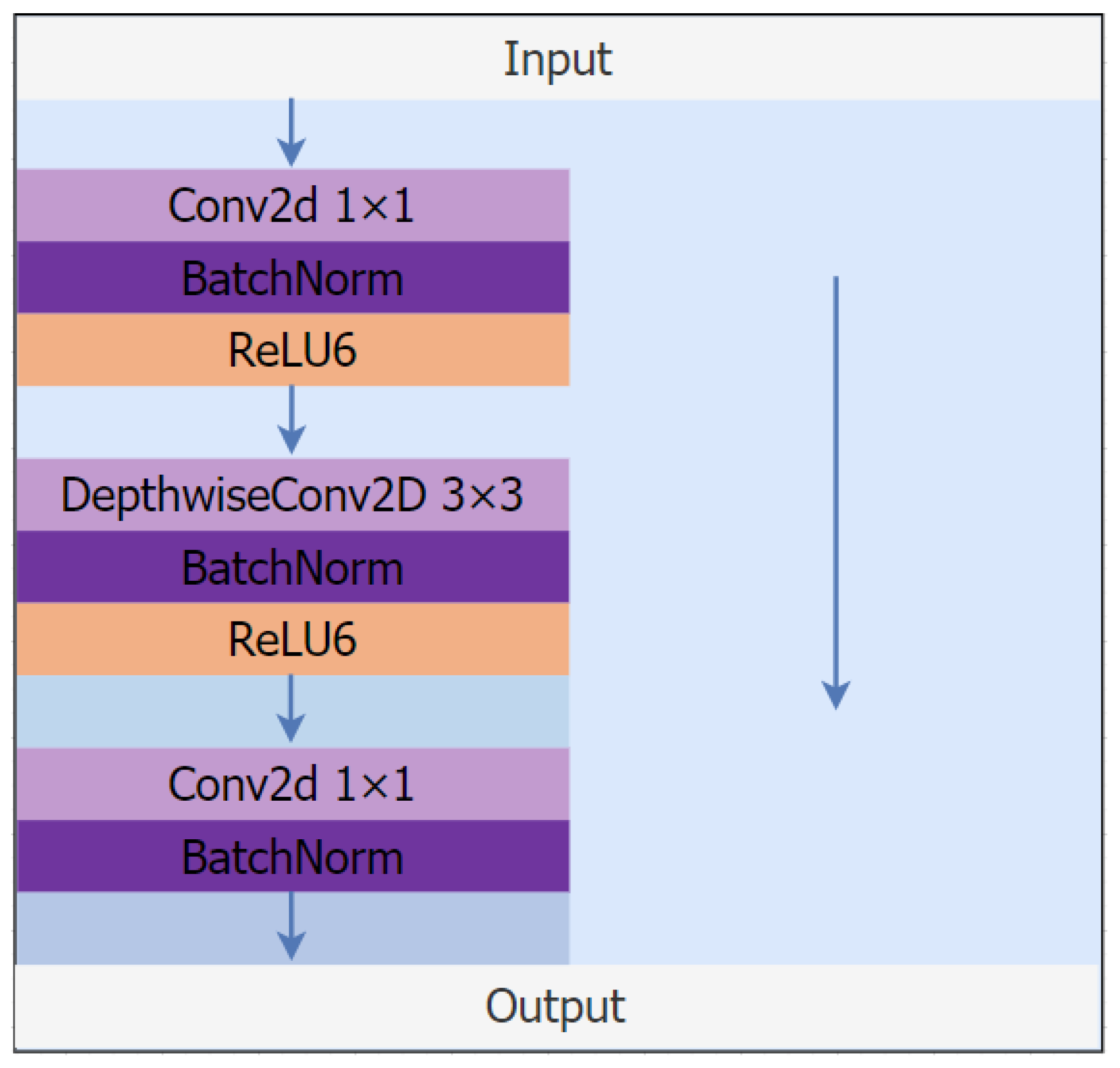

3.1. The Lightweight Backbone MobileNetV3

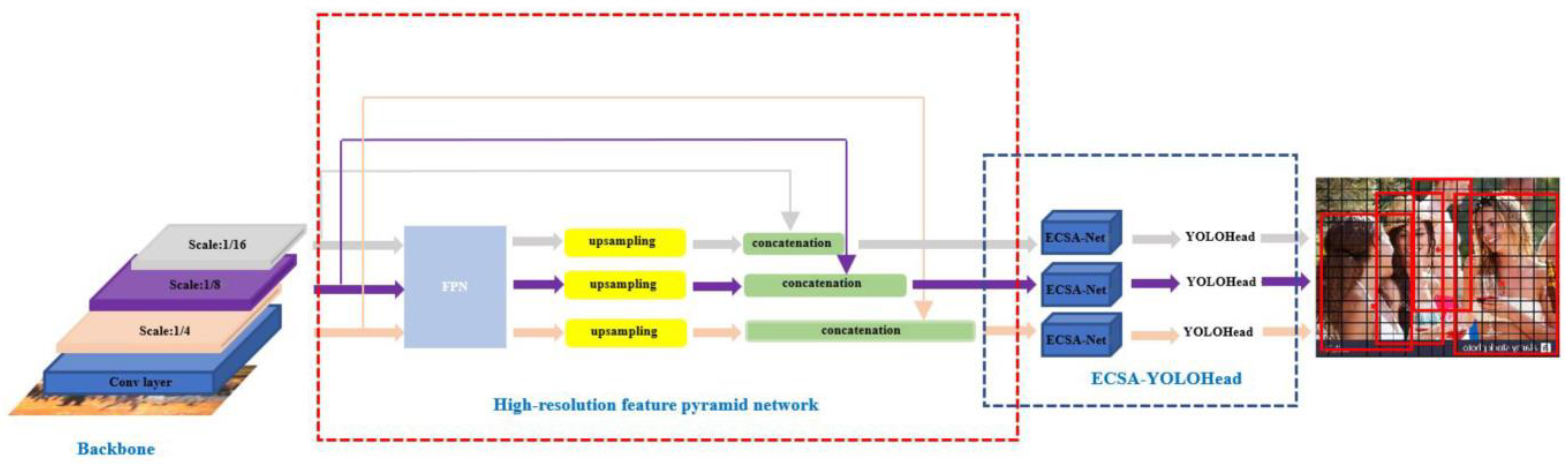

3.2. High-Resolution Feature Pyramid Network

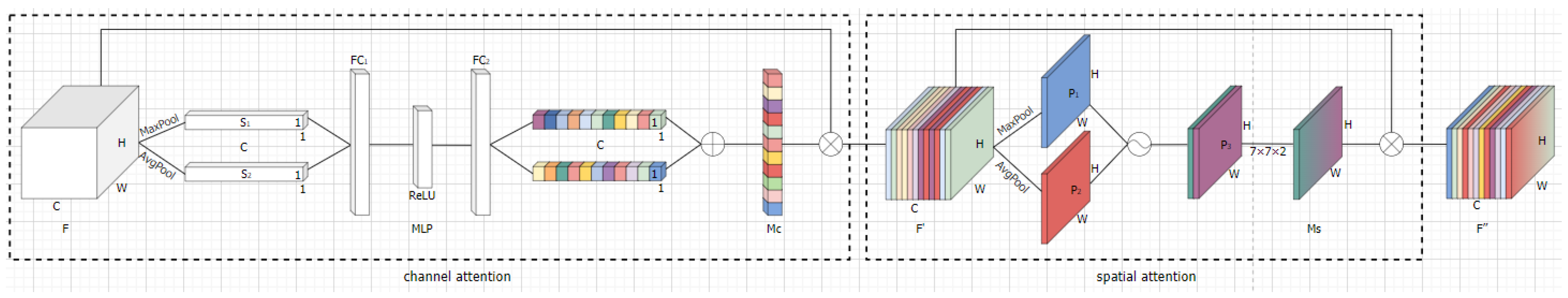

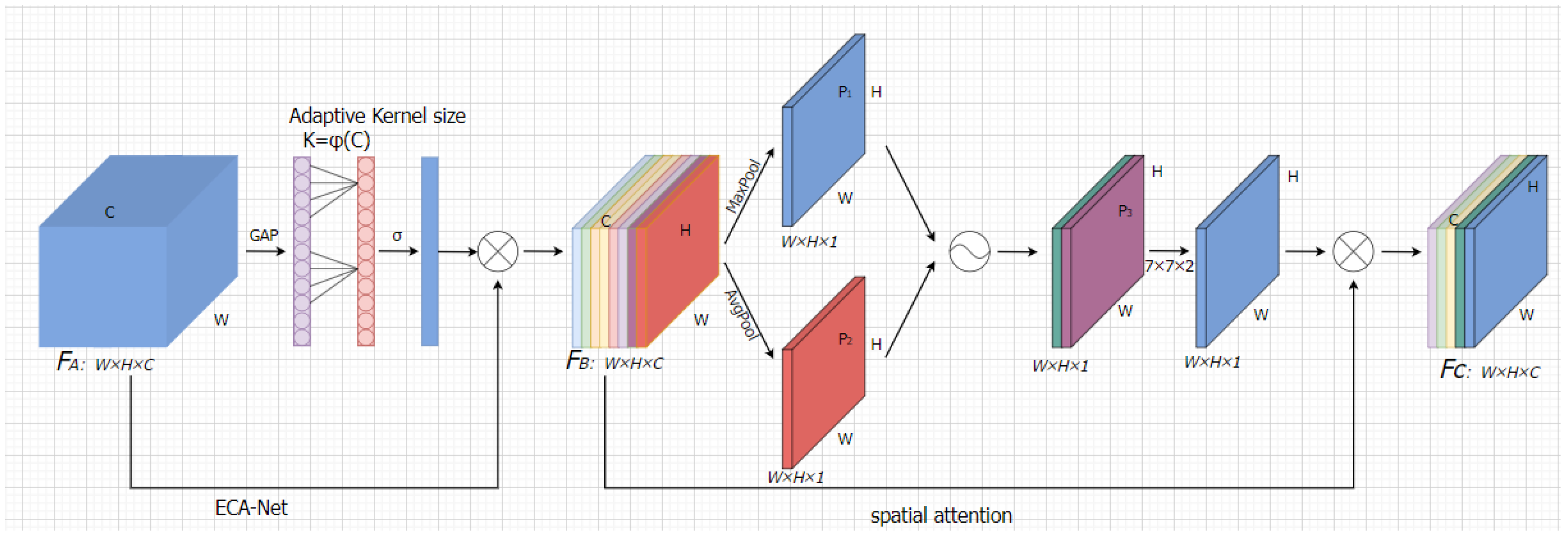

3.3. Improved Attention Mechanism

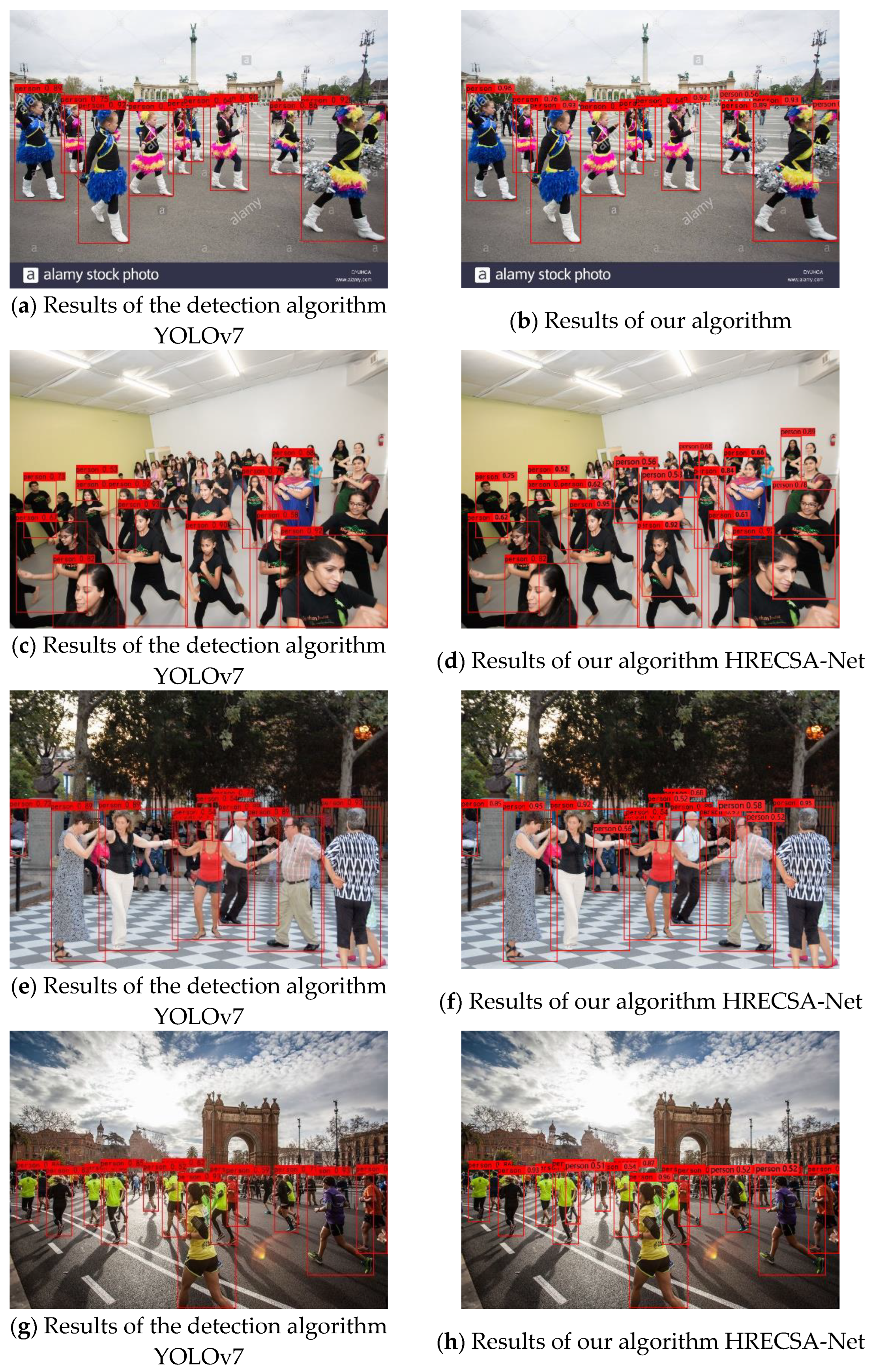

4. Experimental Results and Analysis

4.1. Experimental Environment

4.2. Experimental Evaluation Criteria

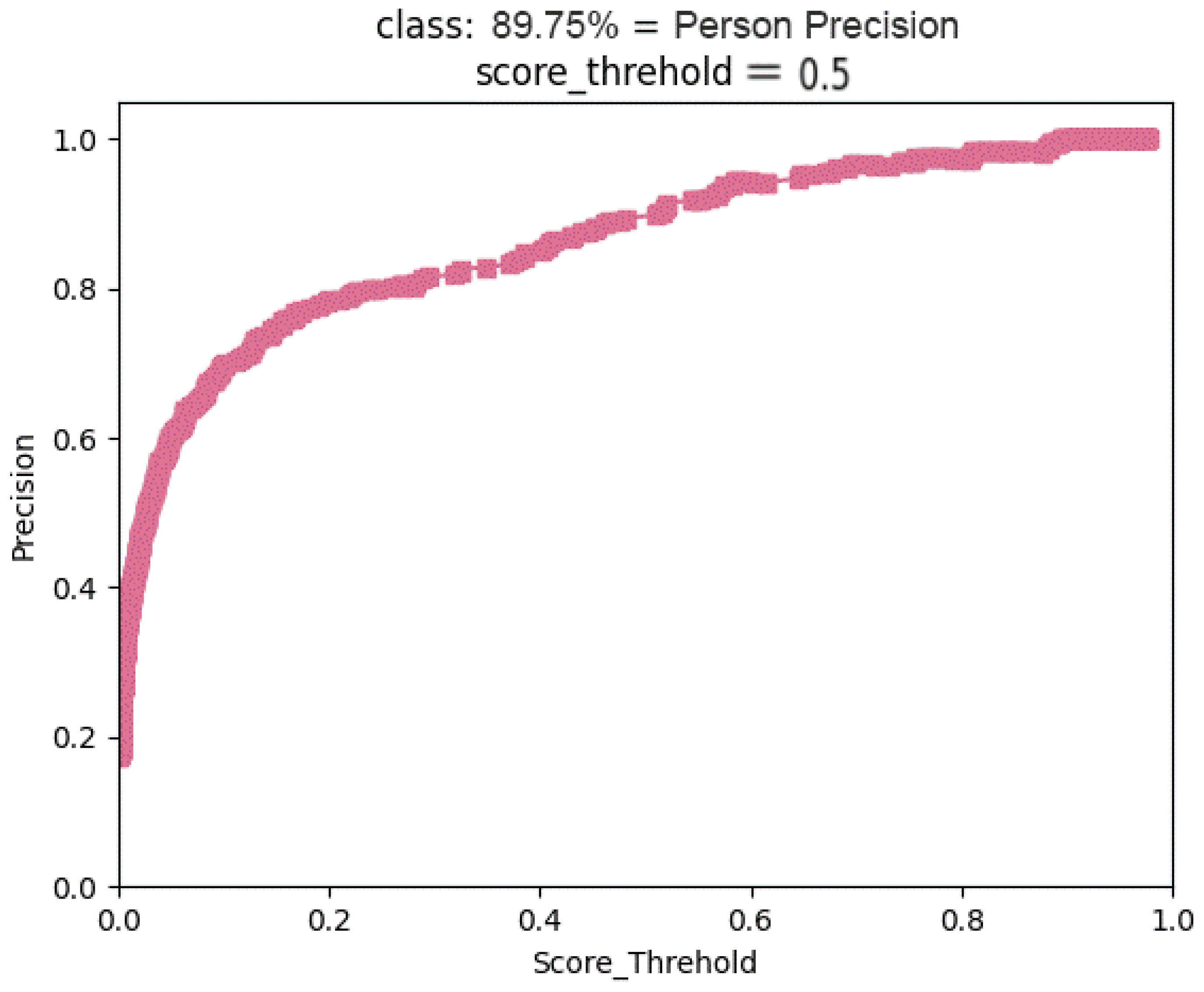

4.3. Ablation Experiments

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Sun, Y.C.; Pan, S.G.; Zhao, T. Traffic light detection based on optimized YOLOv3 algorithm. Acta Opt. Sin. 2020, 40, 1215001.

- Wu, B.; Nevatia, R. Detection of multiple, partially occluded humans in a single image by Bayesian combination of edgelet part detectors. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05), Beijing, China, 17–21 October 2005.

- Sabzmeydani, P.; Mori, G. Detecting pedestrians by learning shapelet features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.Q.; He, K.M.; Girshick, R. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D. SSD: Single shot multiBox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE Press: New York, NY, USA, 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE Press: New York, NY, USA, 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-Captured Scenarios. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 2778–2788.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. In Proceedings of the 2022 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the 2022 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–27 October 2017; pp. 2980–2988.

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as Points. arXiv 2019, arXiv:1904.07850.

- Neubeck, A.; Van Gool, L. Efficient Non-Maximum Suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR), Hong Kong, China, 20–24 August 2006; pp. 850–855.

- Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS: Improving Object Detection with One Line of Code. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–27 October 2017; pp. 5561–5569.

- Liu, S.; Huang, D.; Wang, Y. Adaptive NMS: Refining Pedestrian Detection in a Crowd. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 6459–6468.

- Ma, J.; Wan, H.L.; Wang, J.X. An Improved one-stage pedestrian detection method based on multi-scale attention feature extraction. J. Real-Time Image Process. 2021, 18, 1965–1978.

- Zou, Z.Y.; Gai, S.Y.; Da, F.P. Occluded pedestrian detection algorithm based on attention mechanism. Acta Opt. Sin. 2021, 41, 1515001.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Shao, S.; Zhao, Z.; Li, B.; Xiao, T.; Yu, G.; Zhang, X.; Sun, J. CrowdHuman: A Benchmark for Detecting Human in a Crowd. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Loy, C.C.; Lin, D.; Ouyang, W.; Xiong, Y.; Yang, S.; Huang, Q.; Zhou, D.; Xia, W.; Li, Q.; Luo, P.; et al. WIDER Face and Pedestrian Challenge 2018: Methods and Results. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 22–24 June 2009; pp. 248–255.

- Chen, X.; Fang, H.; Lin, T.-Y.; Vedantam, R.; Gupta, S.; Dollár, P.; Zitnick, C.L. Microsoft COCO Captions: Data Collection and Evaluation Server. arXiv 2015, arXiv:1504.00325.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 11534–11542.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. Scaled-YOLOv4: Scaling Cross Stage Partial Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13029–13038.

| Algorithm | mAP | Speed (frames/s) |
|---|---|---|
| YOLOv7 | 80.25% | 16 |
| YOLOv7 + MobileNetV3 | 82.86% | 190 |
| YOLOv7 + MobileNetV3 + HR | 85.27% | 126 |
| Ours (YOLOv7 + MobileNetV3 + HR + ECSA) | 89.75% | 138 |
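The contribution of each added component can be read off the ablation rows above by differencing consecutive configurations. The following is a minimal sketch of that arithmetic, not code from the paper: the names `ABLATION` and `incremental_gains` are hypothetical, the values are copied from the table, and the speed column is assumed to be in frames per second.

```python
# Ablation rows copied from the table above: (configuration, mAP in %, speed).
# The speed unit is assumed to be frames per second.
ABLATION = [
    ("YOLOv7", 80.25, 16),
    ("YOLOv7 + MobileNetV3", 82.86, 190),
    ("YOLOv7 + MobileNetV3 + HR", 85.27, 126),
    ("YOLOv7 + MobileNetV3 + HR + ECSA", 89.75, 138),
]


def incremental_gains(rows):
    """Return (configuration, mAP change, speed change) relative to the previous row."""
    gains = []
    for (_, prev_map, prev_fps), (name, cur_map, cur_fps) in zip(rows, rows[1:]):
        # Round to two decimals to hide floating-point noise in the differences.
        gains.append((name, round(cur_map - prev_map, 2), cur_fps - prev_fps))
    return gains


if __name__ == "__main__":
    for name, d_map, d_fps in incremental_gains(ABLATION):
        print(f"{name}: {d_map:+.2f} mAP points, {d_fps:+d} frames/s")
    total = round(ABLATION[-1][1] - ABLATION[0][1], 2)
    print(f"Total: {total:+.2f} mAP points over the YOLOv7 baseline")
```

Under these figures, the final attention step accounts for the largest single mAP increase (4.48 points), while the high-resolution pyramid is the only step that lowers speed.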

| Algorithm | mAP | Speed (frames/s) |
|---|---|---|
| Faster R-CNN | 71.5% | 14 |
| SSD | 88.9% | 20 |
| Scaled-YOLOv4 [34] | 85.7% | 108 |
| YOLOv5 | 83.1% | 126 |
| YOLOv7 | 80.25% | 16 |
| HRECSA-Net | 89.75% | 138 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, C.; Wang, Y.; Liu, X. An Improved YOLOv7 Lightweight Detection Algorithm for Obscured Pedestrians. Sensors 2023, 23, 5912. https://doi.org/10.3390/s23135912
