Abstract

With the maturity of Unmanned Aerial Vehicle (UAV) technology and the development of the Industrial Internet of Things, drones have become an indispensable part of intelligent transportation systems. Due to the absence of an effective identification scheme, most commercial drones are vulnerable to impersonation attacks during flight. Some pioneering works have attempted to validate the pilot's legal status at the beginning of and during the flight. However, off-the-shelf pilot identification schemes lack extensibility and therefore cannot adapt to dynamic pilot membership management. To address this challenge, we propose an incremental learning-based drone pilot identification scheme to protect drones from impersonation attacks. By exploiting the temporal traits of pilot operational behavior, the proposed scheme validates the pilot's legal status and dynamically incorporates newly registered pilots into a well-constructed identification model, enabling dynamic pilot membership management. In systematic experiments, the proposed scheme achieved the best average identification accuracy: 95.71% on P450 and 94.23% on S500. As the number of registered pilots increases, the proposed scheme maintains high identification performance for both newly added and previously registered pilots. Owing to its minimal system overhead, the scheme shows high potential for protecting drones from impersonation attacks.

1. Introduction

With the continuous advancement of the Industrial Internet of Things and 5G technology, drones have been integrated with various emerging technologies, such as Software-Defined Networking (SDN) and Blockchain, to provide reliable services [1,2]. Owing to its high mobility and operability, a drone can act as an individual switch for traffic forwarding in an SDN-based drone communication network. Blockchain technology, in turn, has been applied to drone swarms to keep transactions between drones and pilots secure, cost-effective, and privacy-preserving.

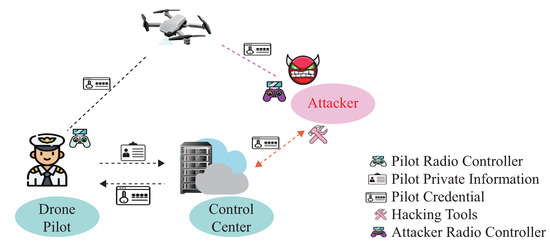

Since drones have become indispensable to intelligent traffic systems, many adversaries try to compromise flying drones for malicious purposes, and several vulnerabilities have already been found in commercial drones. For example, the authors of [3] revealed that GPS spoofing attacks could mislead flying drones to a manipulated destination. Son et al. [4] found that resonance effects could amplify the estimation bias of Micro-Electro-Mechanical Systems (MEMS) gyroscopes, preventing drones from passing pre-flight checks. Furthermore, network-based attacks, such as Denial-of-Service [5] and Man-in-the-Middle [6] attacks, also pose significant threats to flying drones. Compared with these vulnerabilities, pilot impersonation attacks pose an even more severe threat to drone security. In such an attack, an adversary holding compromised credentials impersonates the victim pilot and sends malicious control instructions to the flying drone. Because the adversary obtains legitimate control privileges without generating forged radio signals, pilot impersonation attacks are much harder to detect and prevent.

Many works [7,8,9,10] have investigated validating the pilot's legal status to protect drones from pilot impersonation attacks. For example, Zhang et al. [7] used a one-way hash function and bitwise XOR operations for authentication and key agreement at the beginning of the flight. Through formal security analysis, they proved the scheme secure under the random oracle model and showed that it meets the security requirements of the Internet of Drones environment against various attacks. Alladi et al. [8] proposed SecAuthUAV, a Physical Unclonable Function-based mutual authentication scheme between a UAV and its ground control station (GCS). By comparing it with state-of-the-art authentication protocols [9,10], the authors verified that SecAuthUAV resists masquerade, replay, node tampering, and cloning attacks over the drone communication channel. In parallel, many machine learning (ML)-based identification schemes [11,12,13] have been designed to verify the drone pilot's legal status during the flight. For example, Shoufan et al. [11,12] first verified the pilot's legal status by monitoring the instructions sent from the remote radio controller. They applied Linear Discriminant (LD) [14], Quadratic Discriminant (QD) [15], Support Vector Machine (SVM) [16], weighted k-Nearest Neighbors (k-NN) [17], and Random Forest (RF) [18] classifiers to the extracted control commands. RF performed best, with an accuracy of approximately 89%. Alkadi et al. [13] further combined onboard sensor measurements with received control instructions to improve drone pilot identification. After comparing Long Short-Term Memory (LSTM), feature-based, and majority voting-based classification algorithms [19,20,21], the authors concluded that combining these data sources enhances pilot identification performance.
Because automobile driving and drone piloting share similarities, algorithms such as XGBoost [22] and SVM, which have been applied to automobile driver identification [23,24], can also achieve impressive performance in drone pilot identification.

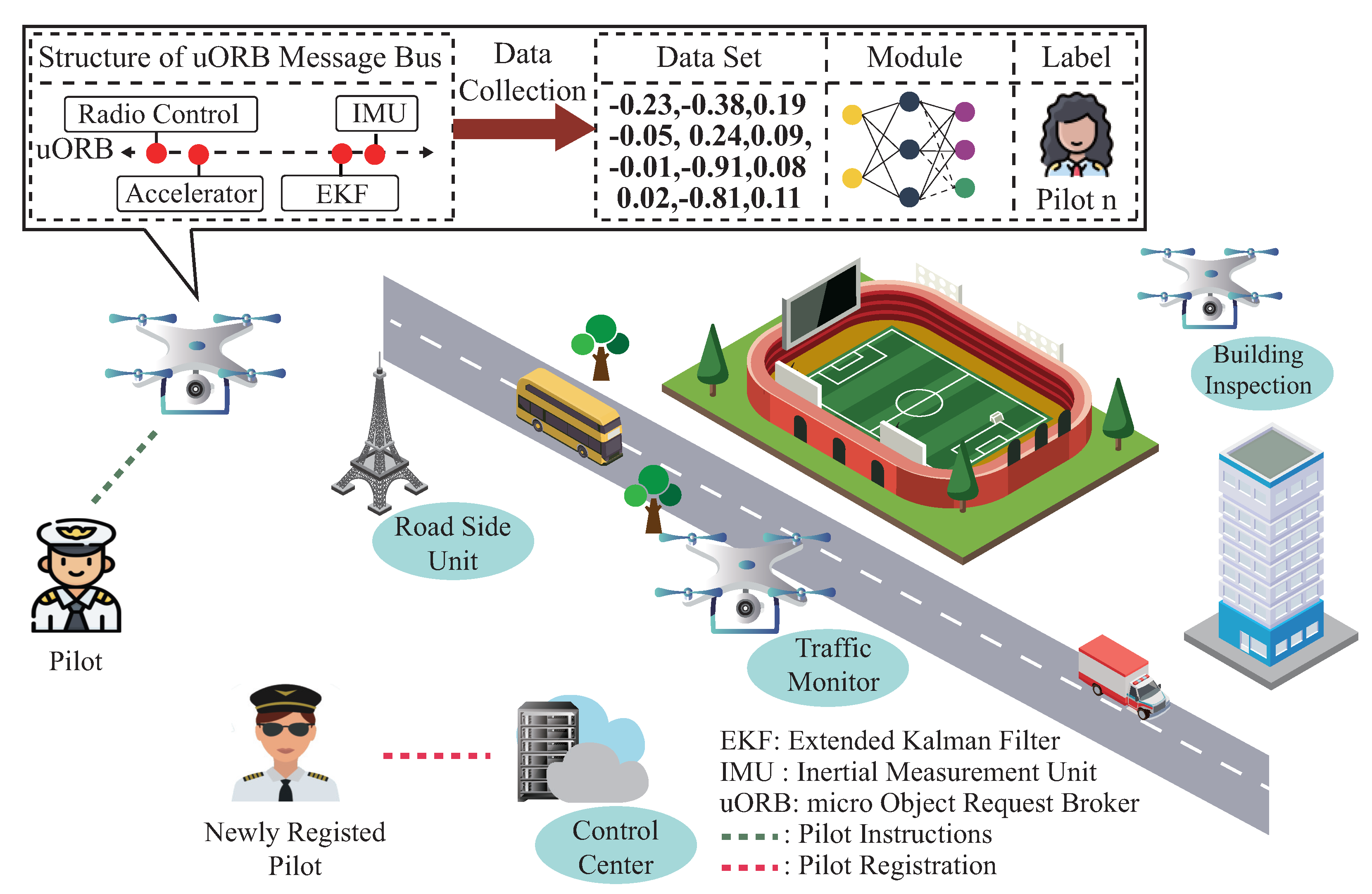

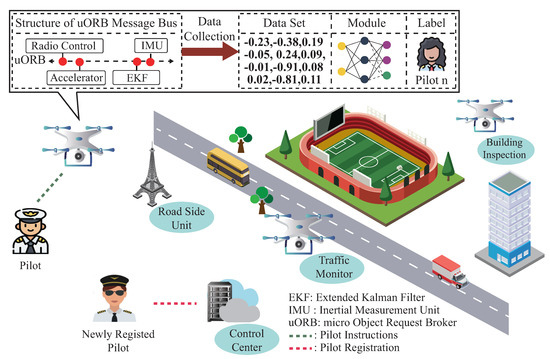

Despite the significant progress in mitigating drone pilot impersonation attacks, a critical challenge remains: the dynamic management of drone pilot membership. Unlike protocol-based authentication schemes, the ML-based identification schemes of previous works [11,12,13] cannot accommodate newly joined pilots. They can identify only the pilots whose flight data were used for training. As illustrated in Figure 1, once a well-established pilot identification scheme is deployed on the flying drone, only the legal status of previously registered pilots can be verified during the flight. To maintain high identification performance, these ML-based schemes require periodic retraining to update their internal parameters. The flight data of previously registered pilots are inaccessible because of pilot operation privacy. Updating an ML-based scheme with only the newly joined pilots' flight data therefore causes catastrophic forgetting, a significant accuracy degradation on the previously registered pilots. Even if the previously registered pilots' flight data were accessible, storing them would require a significant amount of memory. Retraining the scheme from scratch for every newly joined pilot would also incur substantial system overhead.

Figure 1.

Application scenario.

To address this challenge, we design a novel task-incremental learning-based drone pilot identification scheme. Motivated by previous works [12,13,23], we first design a background service that collects drone flight data by subscribing to topics on the micro object request broker (uORB) message bus. We then construct a module that extracts pilot behavioral traits from the flight data and maps the extracted traits to the pilots' provided identities. To identify newly joined pilots, we design an updating mechanism that adjusts the internal structure and trainable parameters using the newly registered pilots' flight data. The proposed scheme achieves high identification accuracy for both newly and previously registered pilots with minimal system overhead, so it shows strong potential for protecting drones from pilot impersonation attacks.

The main contributions of this paper are summarized as follows:

- We propose a novel incremental learning-based drone pilot identification scheme for protecting drones from impersonation attacks.

- To obtain high-quality drone flight data, we design a background service that collects the subscribed topics from the uORB message bus without altering the hardware or software architecture.

- To support dynamic pilot membership management, we construct an extensible framework and propose an updating mechanism that incorporates newly joined pilots into the well-established identification scheme.

- Extensive experiments demonstrate that the proposed scheme maintains high identification accuracy for both newly and previously registered pilots with minimal system overhead.

The rest of this paper is organized as follows. In Section 2, we first present the PX4 internal communication mechanism and the adversary model, and then describe the proposed drone pilot identification scheme and its updating mechanism. In Section 3, we conduct systematic experiments to demonstrate the effectiveness of the proposed scheme under different environmental settings. In Section 4, we discuss the advantages and disadvantages of the proposed scheme and outline future research directions. Finally, Section 5 concludes the paper.

2. Materials and Methods

2.1. UAV Inner Communication Mechanism

With the development of aerial technology and integrated circuit manufacturing, most flight functionalities have been integrated into flight controllers such as PX4 [25]. Using onboard sensor measurements, PX4 monitors the drone's flight status and provides navigation and mission planning services, reducing the pilot's operational burden. According to [25], PX4 runs on the NuttX [26] real-time operating system. In this environment, hardware modules are abstracted into functionalities that provide reliable services to the flight control stack. PX4 also acts as middleware that intercepts the pilot's instructions and converts them into target flight attitudes.

Thanks to this internal communication mechanism, the modules within PX4 cooperate by publishing and subscribing to predefined topics. A module that needs drone flight attitudes for computation first subscribes to the relevant topic and then creates a listener that receives onboard measurements at fixed intervals. Conversely, a module that publishes its computation results must first advertise a topic on the uORB message bus and then publish its results on that topic. PX4 also uses the Extended Kalman Filter [27] to fuse onboard sensor measurements and publishes high-precision flight attitude estimates on the uORB message bus, reducing the side effects of external environmental conditions. Because this high-precision flight data passes through the uORB message bus, we design a background service that subscribes to the predefined topics listed in Table 1 and uses them for drone pilot identification.
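The publish/subscribe pattern described above can be illustrated with a toy, in-process message bus. This is a minimal sketch under stated assumptions, not the PX4 uORB API. `MessageBus` and `BackgroundLogger` are hypothetical names, and the payload fields are illustrative. Real uORB subscribers poll for updates and copy the latest message, whereas this sketch pushes each message to a callback.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List, Set

Message = Dict[str, Any]


class MessageBus:
    """Toy in-process publish/subscribe bus mimicking the uORB pattern.

    Not the PX4 uORB API: real uORB subscribers poll and copy the latest
    message, whereas this sketch pushes each message to registered callbacks.
    """

    def __init__(self) -> None:
        self._advertised: Set[str] = set()
        self._subscribers: Dict[str, List[Callable[[Message], None]]] = defaultdict(list)

    def advertise(self, topic: str) -> None:
        # A publisher registers its topic on the bus before publishing.
        self._advertised.add(topic)

    def subscribe(self, topic: str, callback: Callable[[Message], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, msg: Message) -> None:
        if topic not in self._advertised:
            raise KeyError(f"topic {topic!r} has not been advertised")
        for callback in self._subscribers[topic]:
            callback(msg)


class BackgroundLogger:
    """Hypothetical background service that records every message on selected topics."""

    def __init__(self, bus: MessageBus, topics: List[str]) -> None:
        self.records: Dict[str, List[Message]] = {t: [] for t in topics}
        for topic in topics:
            # Bind `topic` as a default argument so each callback logs to its own list.
            bus.subscribe(topic, lambda msg, t=topic: self.records[t].append(dict(msg)))


if __name__ == "__main__":
    bus = MessageBus()
    bus.advertise("vehicle_attitude")
    logger = BackgroundLogger(bus, ["vehicle_attitude"])
    bus.publish("vehicle_attitude", {"timestamp": 1_000_000, "q": [1.0, 0.0, 0.0, 0.0]})
    print(len(logger.records["vehicle_attitude"]))  # 1
```

Decoupling the logger from the publishers is the point of the design: the logging service only needs topic names and does not require any change to the modules that produce the data.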

Table 1.

Selected topics and their physical meanings.

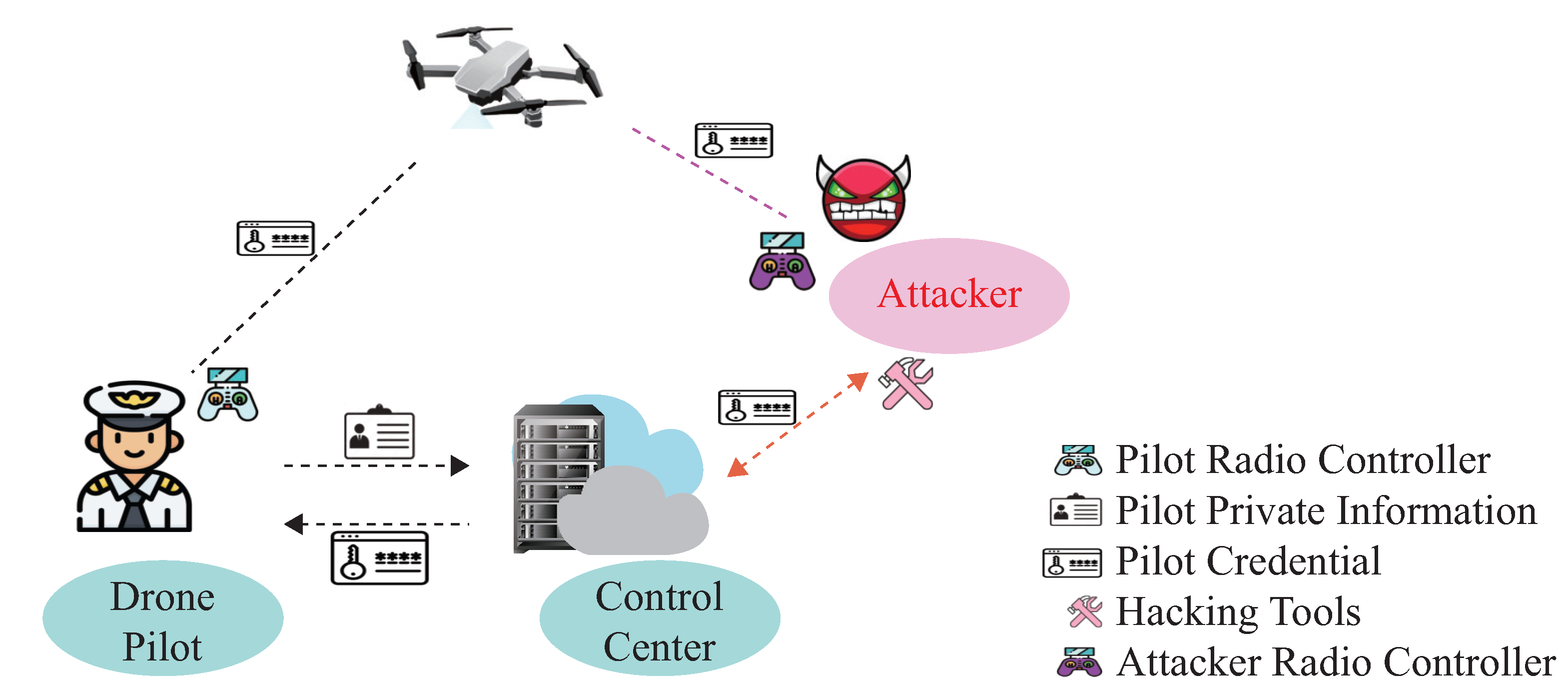

2.2. UAV Pilot Impersonation Attack

Because drones deployed in open environments carry sensitive information, many adversaries try to capture flying drones for malicious purposes. Unlike other electronic attacks, such as GPS spoofing or man-in-the-middle attacks, an impersonation attack only requires the adversary to hold a compromised credential, as illustrated in Figure 2. This paper assumes that all pilots and drones must first complete registration at the ground control center. After registration, a pilot uses their credentials for mutual authentication at the beginning of communication. Once the pilot's legal status is verified on the drone side, the pilot uses a remote radio controller to send instructions to the flying drone. The adversary, in turn, uses hacking tools to steal the pilot's credentials from the ground control center. With the victim pilot's credentials, the adversary can obtain control privileges over the flying drone. Knowledge-based authentication cannot verify the pilot's legal status during the flight. The adversary can therefore impersonate the victim pilot and use a radio controller to send malicious instructions to the drone.

Figure 2.

Impersonation attack.

To protect drones from impersonation attacks, we propose a pilot identification scheme that verifies the pilot's legal status during the flight. The operation profiles of the victim pilot and the adversary differ distinctly, so the proposed scheme can distinguish the adversary's flight data from those of the legitimate pilot. If the estimated identity does not match the pilot-provided credential, the identification system triggers an alert. At the same time, the PX4 flight controller stops executing the received instructions and switches to automatic mode, guiding the drone to land at its take-off location and thereby preventing further impersonation.
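The response logic above can be sketched as a small state machine. This sketch assumes that one mismatch between the predicted identity and the credential-provided identity is enough to trigger the alert. It also assumes that the guard then stays latched in the automatic landing mode. Both are simplifying assumptions. `ImpersonationGuard` and `FlightMode.RETURN_TO_LAUNCH` are hypothetical names, not PX4 identifiers.

```python
from enum import Enum


class FlightMode(Enum):
    MANUAL = "manual"
    RETURN_TO_LAUNCH = "return_to_launch"  # hypothetical label for the automatic landing mode


class ImpersonationGuard:
    """Compare each predicted identity with the identity bound to the credential.

    Assumption: after the first mismatch the guard stays latched in the automatic
    mode, so no further pilot instructions are executed.
    """

    def __init__(self, claimed_id: int) -> None:
        self.claimed_id = claimed_id
        self.mode = FlightMode.MANUAL
        self.alerts = 0

    def on_prediction(self, predicted_id: int) -> FlightMode:
        if self.mode is FlightMode.MANUAL and predicted_id != self.claimed_id:
            self.alerts += 1                        # raise the alert once
            self.mode = FlightMode.RETURN_TO_LAUNCH  # stop obeying the radio controller
        return self.mode

    def accept_instruction(self) -> bool:
        # Pilot instructions are executed only while the drone is in manual mode.
        return self.mode is FlightMode.MANUAL
```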

2.3. Incremental Learning

Incremental learning was first proposed by Schlimmer [28] to enable machine learning models to continuously adjust their structure to newly generated data and thereby adapt to dynamically changing environments. In recent years, incremental learning has become an important research topic and has been applied to many scenarios, such as wireless device identification, smartphone counterfeit detection, and natural language processing [29,30,31]. To cope with changing environments, many researchers have used regularization approaches to mitigate the catastrophic forgetting problem. For example, Kirkpatrick et al. [29] proposed elastic weight consolidation (EWC), which estimates parameter importance with the diagonal of the Fisher Information Matrix. EWC computes this importance only after each task is learned and ignores the influence of the parameters along the learning trajectory in weight space. To address the resulting importance overestimation, the authors of [30] proposed memory aware synapses (MAS). MAS fuses a Fisher Information Matrix approximation and an online path integral in a single algorithm to compute the importance of each parameter. The authors of [32] proposed learning without forgetting (LwF), which regularizes output drift with temperature-scaled logits during training. Beyond these regularization approaches [29,30,32], rehearsal approaches [32,33,34] have also proved effective in improving incremental learning performance. To our knowledge, the proposed scheme is the first work to use incremental learning for drone pilot identification. We compare our updating mechanism with state-of-the-art incremental learning algorithms [29,30,32] to illustrate its effectiveness.
Systematic experiments in natural and constrained environments demonstrate that the proposed updating strategy achieves higher identification accuracy than the compared algorithms for both previously and newly registered pilots.
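To make the EWC baseline concrete, the sketch below computes its quadratic penalty in plain Python. It uses the standard form $(\lambda/2)\sum_i F_i(\theta_i-\theta^*_i)^2$, with the diagonal Fisher approximated by the mean of squared per-sample gradients, a common approximation. This is an illustrative sketch, not the implementation used in the compared experiments. The gradients and parameter values are toy numbers.

```python
from typing import List, Sequence


def diagonal_fisher(per_sample_grads: Sequence[Sequence[float]]) -> List[float]:
    """Empirical diagonal Fisher: mean over samples of squared log-likelihood gradients."""
    n = len(per_sample_grads)
    dim = len(per_sample_grads[0])
    return [sum(g[i] ** 2 for g in per_sample_grads) / n for i in range(dim)]


def ewc_penalty(theta: Sequence[float], theta_star: Sequence[float],
                fisher: Sequence[float], lam: float) -> float:
    """EWC regularizer (lam / 2) * sum_i F_i * (theta_i - theta_star_i)^2.

    theta_star holds the parameters learned on the previous task; parameters with a
    large Fisher value are pulled back toward theta_star more strongly.
    """
    return 0.5 * lam * sum(f * (t - s) ** 2 for t, s, f in zip(theta, theta_star, fisher))


if __name__ == "__main__":
    F = diagonal_fisher([[1.0, 0.0], [3.0, 2.0]])           # [5.0, 2.0]
    print(ewc_penalty([1.0, 1.0], [0.0, 0.0], F, lam=2.0))  # 7.0
```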

2.4. Problem Definition

We define learning from a newly joined pilot's drone flight data as a new task in our drone pilot identification scheme. When training on the $t$-th pilot's drone flight dataset $\mathcal{D}_t$, we assume no direct access to the previous datasets $\mathcal{D}_1, \dots, \mathcal{D}_{t-1}$. This leads to the following training objective:

$$\min_{w} \sum_{(x_i, y_i) \in \mathcal{D}_t} \ell\big(f(x_i; w), y_i\big) \qquad (1)$$

where $x_i \in \mathbb{R}^N$ and $y_i$ denote the $N$-dimensional drone flight data and the corresponding pilot-provided identity, respectively. $f(\cdot; w)$ represents the well-established pilot identification model parameterized by a vector $w$, and $\ell$ is the objective function quantifying drone pilot identification performance. A regularizer can be added to Equation (1) to gain resistance to catastrophic forgetting. For evaluation, we measure the performance of $f(\cdot; w)$ on the held-out test sets of all tasks seen so far:

$$\frac{1}{t} \sum_{k=1}^{t} \mathrm{Acc}\big(f(\cdot; w), \mathcal{T}_k\big) \qquad (2)$$

where $\mathcal{T}_k$ is the test set of the $k$-th task. An ideal drone pilot identification scheme should identify all newly joined pilots well while mitigating catastrophic forgetting of the previously registered pilots.
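The evaluation protocol (performance on the held-out test sets of all tasks seen so far) can be sketched as follows. The sketch assumes the per-task metric is accuracy and that every task is weighted equally. The threshold "model" and the two tiny tasks are hypothetical and only illustrate the arithmetic.

```python
from typing import Callable, List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]       # (flight-data window, pilot identity)
Model = Callable[[Sequence[float]], int]   # returns a predicted pilot identity


def accuracy(model: Model, test_set: List[Sample]) -> float:
    correct = sum(1 for x, y in test_set if model(x) == y)
    return correct / len(test_set)


def average_accuracy(model: Model, test_sets: List[List[Sample]]) -> float:
    """Mean accuracy over the held-out test sets T_1, ..., T_t of all tasks seen so far."""
    return sum(accuracy(model, ts) for ts in test_sets) / len(test_sets)


if __name__ == "__main__":
    # Hypothetical threshold "model" and two tiny tasks, for illustration only.
    model = lambda x: 0 if x[0] < 0.5 else 1
    tasks = [[([0.1], 0), ([0.9], 1)], [([0.2], 0), ([0.4], 1)]]
    print(average_accuracy(model, tasks))  # 0.75
```

Averaging per task, rather than pooling all samples, keeps a large new task from masking forgetting on a small earlier one.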

2.5. Pilot Identification Based on UAV Flight Data

2.5.1. Data Collection and Preprocessing

To collect high-precision drone flight data and minimize the side effects of the external environment, we designed a background service that subscribes to the selected topics on the onboard uORB message bus rather than collecting data at the ground control center. Weather conditions and drone mobility make connections unstable, so data transmitted to the ground control center over the MAVLink protocol may be incomplete, which degrades drone pilot identification performance. According to the PX4 documentation, 54 topics designed specifically for the quadcopter framework run through the uORB message bus. To select the attributes that best describe pilot behavioral traits, we used an embedded feature selection algorithm [35] to filter out irrelevant topics and attributes. We first selected five pilots' flight data to construct a mini dataset containing drone flight attitudes, internal communication messages, and pilot-provided identities. We then used RF as a classifier to identify the drone pilot from each attribute within the subscribed uORB topics. Finally, we sorted the attributes by identification accuracy in descending order and kept the top 28 attributes for constructing the identification scheme. Table 1 provides details about the selected topics and preserved attributes.
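The attribute-ranking step can be sketched as: score each attribute by how well a classifier identifies the pilot from that attribute alone, sort in descending order, and keep the top *k* (28 in the text). To stay dependency-free, this sketch swaps in a one-dimensional nearest-centroid classifier for the random forest. It also scores on the training data for brevity. Both substitutions are assumptions for illustration only.

```python
from statistics import mean
from typing import Dict, List, Sequence


def single_attribute_accuracy(values: Sequence[float], labels: Sequence[int]) -> float:
    """Accuracy of a 1-D nearest-centroid classifier using one attribute.

    Stand-in for the per-attribute random forest; evaluated on the same data for brevity.
    """
    centroids = {c: mean(v for v, l in zip(values, labels) if l == c) for c in set(labels)}
    correct = 0
    for v, l in zip(values, labels):
        pred = min(centroids, key=lambda c: abs(v - centroids[c]))
        correct += pred == l
    return correct / len(labels)


def rank_attributes(table: Dict[str, Sequence[float]], labels: Sequence[int],
                    keep: int) -> List[str]:
    """Return the `keep` attribute names with the highest single-attribute accuracy."""
    scores = {name: single_attribute_accuracy(col, labels) for name, col in table.items()}
    ranked = sorted(scores, key=lambda name: scores[name], reverse=True)
    return ranked[:keep]
```

On the real data, `rank_attributes(columns, pilot_ids, keep=28)` would return the 28 attributes to preserve. Here `columns` and `pilot_ids` are hypothetical names for the mini dataset.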

As shown in Table 1, the selected topics mainly cover the received pilot instructions, the drone flight attitudes, and the internal control commands sent from the flight controller to the actuators. Due to internal hardware errors and external factors, the selected attributes contain significant deviations. To address this problem, we first used the Kalman filter algorithm to detect abnormal deviations in the selected topics and replaced each deviation with the previous observation. Note that each selected topic keeps its own publishing frequency, and each preserved attribute has its own units and scale. We therefore applied a one-second sliding window to resample the selected attributes to 1 Hz, using the average value within each window as the current observation. We then applied the following standard (z-score) normalization to the observations before feeding them into the drone pilot identification scheme:

$$\hat{x} = \frac{x - \mu}{\sigma} \qquad (3)$$

where $x$ represents the previously generated observations, and $\mu$ and $\sigma$ are the mean and standard deviation of the current observations. After preprocessing, we concatenated the selected attributes chronologically to generate a 28-dimensional input sequence. Each input sequence map consists of 64 consecutive observations, and consecutive maps overlap by 32 observations. We also digitized the pilot-provided credentials into unique numbers and used these numbers as ground truth when constructing the identification scheme.
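The preprocessing chain (Kalman-based outlier replacement, 1 Hz averaging, z-score normalization, and 64-step windows with a 32-step overlap) can be sketched for a single attribute. The paper does not state its filter settings. The scalar random-walk model, the noise variances `q` and `r`, and the 3-sigma innovation gate are assumptions, and all function names are hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def kalman_replace_outliers(xs: Sequence[float], q: float = 1e-3, r: float = 1e-2,
                            gate: float = 3.0) -> List[float]:
    """Scalar random-walk Kalman filter (assumed model). A reading whose innovation
    exceeds `gate` standard deviations is replaced by the previous observation."""
    est, p = xs[0], 1.0
    out = [xs[0]]
    for z in xs[1:]:
        p += q                               # predict step of the random-walk model
        s = p + r                            # innovation variance
        if abs(z - est) > gate * s ** 0.5:   # abnormal deviation detected
            z = out[-1]                      # replace it with the previous observation
        k = p / s                            # Kalman gain
        est += k * (z - est)                 # update step
        p *= 1 - k
        out.append(z)
    return out


def resample_1hz(samples: Sequence[Tuple[float, float]]) -> List[float]:
    """Average (timestamp_in_seconds, value) pairs within each one-second window."""
    buckets = {}
    for t, v in samples:
        buckets.setdefault(int(t), []).append(v)
    return [mean(buckets[sec]) for sec in sorted(buckets)]


def zscore(xs: Sequence[float]) -> List[float]:
    """Equation (3): (x - mu) / sigma; a constant signal is mapped to zeros."""
    mu, sigma = mean(xs), pstdev(xs)
    if sigma == 0:
        return [0.0 for _ in xs]
    return [(x - mu) / sigma for x in xs]


def make_windows(rows: Sequence[Sequence[float]], width: int = 64, stride: int = 32):
    """Cut a (time x attributes) sequence into maps of `width` steps;
    consecutive maps overlap by width - stride = 32 steps."""
    return [rows[i:i + width] for i in range(0, len(rows) - width + 1, stride)]
```

In use, each attribute would pass through `kalman_replace_outliers`, `resample_1hz`, and `zscore`. The 28 normalized attributes would then be stacked per second into rows before `make_windows` is applied.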

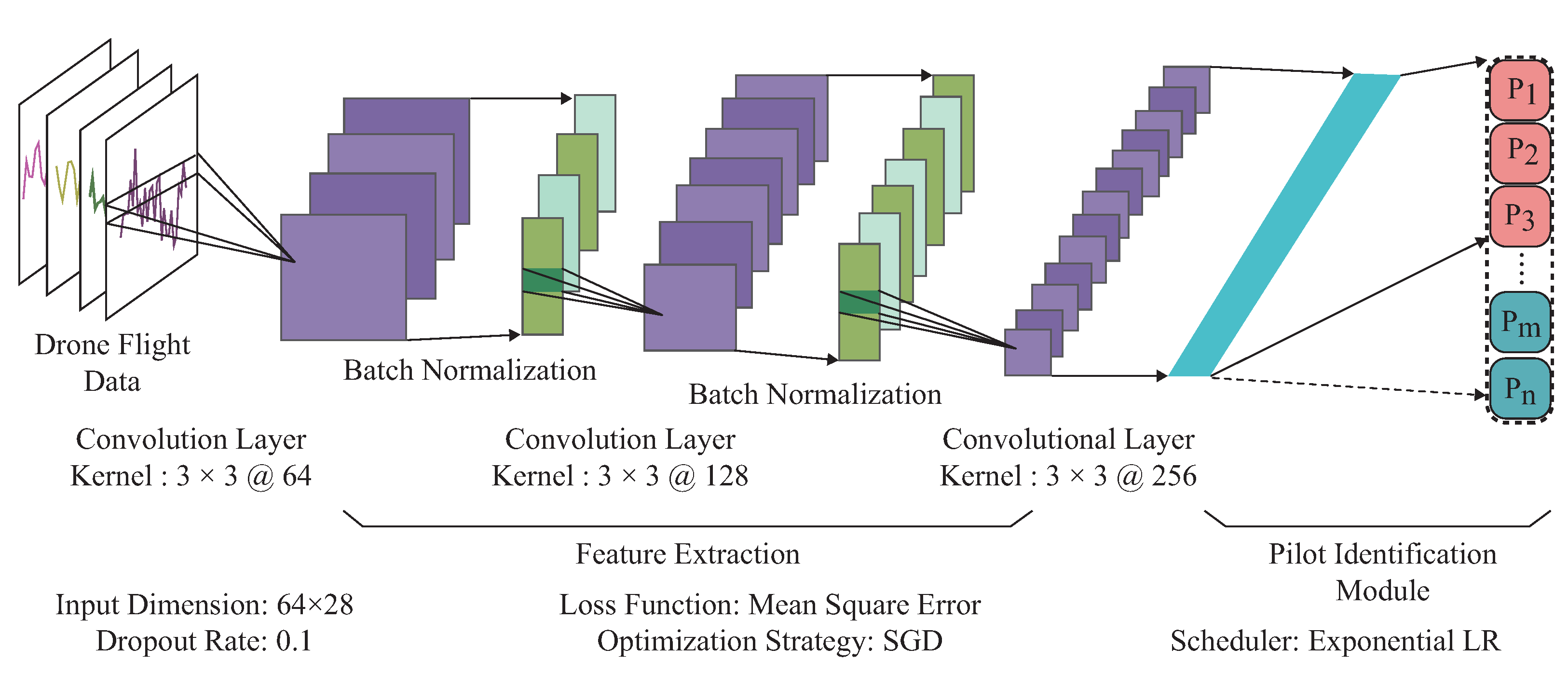

2.5.2. Drone Pilot Identification

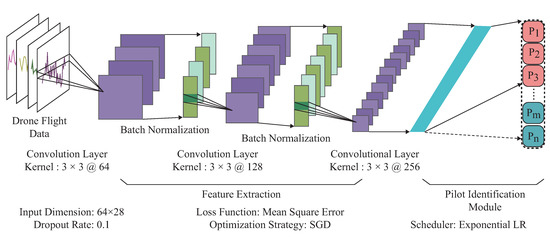

To make the drone pilot identification scheme adaptable to dynamic membership management, we first designed a foundation framework that estimates pilot identity from drone flight data. Motivated by previous works [36,37], this framework consists of a feature extraction module and a pilot identification module, as illustrated in Figure 3. In the feature extraction module, we stacked three convolutional layers sequentially, each followed by batch normalization, to extract pilot behavioral traits. The convolutional layers use local parameter sharing to extract spatial and temporal relationships within the input drone flight data. Batch normalization recenters and rescales the output features of each convolutional layer, facilitating gradient backpropagation and reducing the likelihood of vanishing gradients. The negative values of the representations extracted by each convolutional layer carry physical meaning for drone flight data, so we omitted ReLU [38] activations from the foundation framework.
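The size of the flattened feature vector, which later determines how many connections each new pilot adds to the output layer, follows from standard convolution shape arithmetic. The actual hyperparameters are given in Figure 3 and are not reproduced here. The sketch assumes 2-D convolutions over the 64 × 28 input map with one input channel and hypothetical (channels, kernel, stride, padding) settings.

```python
from typing import List, Tuple

# Hypothetical layer settings (out_channels, kernel, stride, padding) per convolution;
# the paper's real values are listed in Figure 3 and may differ.
LAYERS: List[Tuple[int, int, int, int]] = [(16, 3, 2, 1), (32, 3, 2, 1), (64, 3, 2, 1)]


def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length along one dimension: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def flattened_features(height: int = 64, width: int = 28, layers=LAYERS) -> int:
    """Feature count after (conv -> batch norm) x 3, no ReLU, then flatten.

    Batch normalization only recenters and rescales each channel, so it leaves the
    shape unchanged; only the convolutions shrink the 64 x 28 input map.
    """
    channels = 1  # assumed single-channel input map
    for out_channels, k, s, p in layers:
        height, width = conv_out(height, k, s, p), conv_out(width, k, s, p)
        channels = out_channels
    return channels * height * width


if __name__ == "__main__":
    n_features = flattened_features()
    print(n_features)      # 2048 = 64 channels x 8 x 4 for the hypothetical settings
    print(2 * n_features)  # 4096 new weights if two pilots are added to the output layer
```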

Figure 3.

Incremental learning-based UAV pilot identification.

In the pilot identification module, we used one fully connected layer to map the extracted hidden representations to the registered pilot identities. Like an affine transformation, the fully connected layer assigns an appropriate weight to each extracted hidden representation. Through backpropagation, it learns to approximate the pilot's real identity by adjusting these trainable weights. To make the scheme adaptable to dynamic pilot membership management, the shape of the fully connected layer can be altered according to the number of currently registered pilots. As illustrated in Figure 3, the drone flight data are first fed into the pilot identification scheme. A series of convolution and batch normalization operations then extracts pilot behavioral traits step by step. The purple blocks represent convolutional features, and the green blocks represent batch-normalized features. We then flatten the hidden representations into one dimension (cyan blocks) and connect them to the predicted pilot identities. Here, the red blocks represent previously registered pilots, and the green blocks represent newly joined pilots. Once newly registered pilots are integrated into the drone system, the green blocks are appended after the red blocks of the previously constructed identification scheme. During updating, the previously constructed scheme guides the newly generated scheme using only the newly registered pilots' flight data. Section 2.5.3 details the updating mechanism.
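Growing the output layer for new pilots can be sketched as appending one weight row per new pilot while leaving the old rows untouched. This plain-Python sketch represents the fully connected layer as a list of rows. It draws the new weights from a Gaussian with an assumed standard deviation of 0.01 and initializes the new biases to zero, also an assumption. The function names are hypothetical.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]


def expand_head(weights: Matrix, bias: List[float], n_new: int, std: float = 0.01,
                seed: int = 0) -> Tuple[Matrix, List[float]]:
    """Append one output row per newly registered pilot to a fully connected layer.

    Rows for previously registered pilots are copied unchanged. Each new row has one
    weight per extracted feature (n_new x n_features new connections), drawn from
    N(0, std^2); new biases start at zero (assumption).
    """
    rng = random.Random(seed)
    n_features = len(weights[0])
    new_rows = [[rng.gauss(0.0, std) for _ in range(n_features)] for _ in range(n_new)]
    return [row[:] for row in weights] + new_rows, bias[:] + [0.0] * n_new


def head_forward(weights: Matrix, bias: List[float], features: List[float]) -> List[float]:
    """One score per registered pilot: W @ h + b."""
    return [sum(w * h for w, h in zip(row, features)) + b for row, b in zip(weights, bias)]
```

Because old rows are copied rather than reinitialized, the scores for previously registered pilots are unchanged right after expansion, which is what lets the old model's outputs serve as a distillation target.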

For optimization, we used the least mean square error to measure the distance between the predicted pilot identity and the ground truth. The loss function is given in Equation (4):

$$\mathcal{L} = \frac{1}{n} \sum_{i=1}^{n} d\big(\hat{y}_i, y_i\big)^2 \qquad (4)$$

where $n$ is the number of identities the proposed scheme handles each time, $\hat{y}_i$ is the identity predicted by the proposed scheme, and $y_i$ is the ground truth corresponding to the pilot-provided credential. $d(\cdot, \cdot)$ denotes the Hamming distance: zero means that the estimated identity is consistent with the ground truth, and one means that it deviates from it. Figure 3 lists the hyperparameters used to construct the pilot identification scheme. We applied stochastic gradient descent [39] to find the parameters with the best identification performance.

2.5.3. Drone Pilot Identification Updating Mechanism

Once a pilot completes registration at the ground control center, the pilot is granted a credential for obtaining drone control privileges. To validate this pilot's legal status during flight, we first required the pilot to use a remote radio controller to send instructions to the drone and complete some basic flight missions. Meanwhile, we used the background service described above to extract the flight data $X_n$. We digitized the pilot's credential into a unique number representing their identity $Y_n$ in our identification scheme. The other registered pilots' flight data are inaccessible because of operation privacy. We therefore used only $X_n$ and the previously well-constructed identification scheme to optimize the parameters of the altered network structure, aiming at high identification accuracy for all registered pilots.

As illustrated in Figure 3, we parameterized the identification scheme with $\theta_s$ and $\theta_o$. Here, $\theta_s$ denotes the parameters of the feature extraction module, and $\theta_o$ denotes the parameters of the pilot identification module. When updating the pilot identification module for newly registered pilots, we first added nodes to the output layer (the green blocks in Figure 3) and connected the feature extraction module to the newly added nodes. We denote the parameters of these new connections by $\theta_n$. Their number equals the number of newly added pilots multiplied by the number of output features of the feature extraction module. We initialized $\theta_n$ from a random Gaussian distribution and then updated the identification scheme as follows. First, we froze $\theta_s$ and $\theta_o$ and trained $\theta_n$ on the newly registered pilots' flight data $X_n$ until convergence. We then used $X_n$ to train all network parameters, including $\theta_s$, $\theta_o$, and $\theta_n$, until convergence. Only the newly registered pilots' data are available for updating. The optimization target for the new pilots is therefore to minimize the distance between the predicted identity and the pilot-provided ground truth, as described by Equation (5):

$$\mathcal{L}_{\text{new}} = \mathcal{L}\big(\hat{\mathbf{y}}_n, \mathbf{y}_n\big) \qquad (5)$$

where $\hat{\mathbf{y}}_n$ is the output of the pilot identification scheme for the pilot-provided data and $\mathbf{y}_n$ is the corresponding ground truth. For the previously registered pilots, we used the Knowledge Distillation loss [40] to encourage the outputs of the current identification scheme to approximate those of the previous scheme:

$$\mathcal{L}_{\text{old}}\big(\mathbf{y}_o, \hat{\mathbf{y}}_o\big) = -\sum_{i=1}^{l} y_o'^{(i)} \log \hat{y}_o'^{(i)} \qquad (6)$$

where $l$ is the number of previously registered pilots in each iteration. $\mathbf{y}_o'$ denotes the temperature-scaled probabilities predicted by the previous well-constructed identification scheme, and $\hat{\mathbf{y}}_o'$ denotes those estimated by the newly constructed scheme. We also regularized $\theta_s$, $\theta_o$, and $\theta_n$ with a weight decay of 0.005 to force the newly constructed scheme to focus on the newly registered pilots' behavioral traits.

To reduce the distance between the output probabilities of the current and previous identification schemes, we used Equation (7) to amplify small probabilities:

$$y_o'^{(i)} = \frac{\big(y_o^{(i)}\big)^{1/T}}{\sum_{j} \big(y_o^{(j)}\big)^{1/T}}, \qquad \hat{y}_o'^{(i)} = \frac{\big(\hat{y}_o^{(i)}\big)^{1/T}}{\sum_{j} \big(\hat{y}_o^{(j)}\big)^{1/T}} \qquad (7)$$

where the hyperparameter $T$ controls the scale factor that amplifies the small probabilities of each predicted pilot identity. Since drone pilot identification is a multi-class classification problem, we summed the losses of the old and new tasks in each iteration. Algorithm 1 details the updating procedure of the proposed drone pilot identification scheme. To further improve identification performance, we preserved 100 flight-data samples for each previously registered pilot. We merged them with the newly registered pilots' flight data to train the newly generated scheme. In Algorithm 1, Pilot Identification denotes the previous well-constructed identification scheme, and $\lambda_o$ controls the weight between the old and current tasks. Systematic experiments showed that $\lambda_o = 0.1$ achieves the best identification performance for all registered pilots.
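Equation (7) and the distillation loss for the previously registered pilots can be sketched in plain Python on probability vectors. The sketch applies the same temperature rescaling to both the old and the new scheme's outputs and then takes the cross-entropy between them. The default $T = 2$ is an assumption, since the text does not report its value.

```python
import math
from typing import List, Sequence


def temperature_scale(probs: Sequence[float], T: float) -> List[float]:
    """Equation (7): p_i^(1/T) / sum_j p_j^(1/T). With T > 1, small probabilities grow."""
    powered = [p ** (1.0 / T) for p in probs]
    total = sum(powered)
    return [p / total for p in powered]


def distillation_loss(old_probs: Sequence[float], new_probs: Sequence[float],
                      T: float = 2.0, eps: float = 1e-12) -> float:
    """Cross-entropy between temperature-scaled outputs of the previous scheme (target)
    and of the newly constructed scheme, over the l previously registered pilots."""
    target = temperature_scale(old_probs, T)
    pred = temperature_scale(new_probs, T)
    return -sum(t * math.log(p + eps) for t, p in zip(target, pred))


if __name__ == "__main__":
    print(temperature_scale([0.9, 0.1], T=2.0))  # approximately [0.75, 0.25]
```

The loss is smallest when the new scheme reproduces the old scheme's scaled outputs, so it penalizes drift on previously registered pilots without needing their raw flight data.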

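| Algorithm 1: Drone Pilot Identification Updating Algorithm |
|---|
| 1: Start: $\theta_s$: feature extraction parameters; $\theta_o$: identification parameters for the previously registered pilots; $\theta_n$: added parameters for the newly registered pilots; $X_n$, $Y_n$: the newly registered pilots' drone flight data and their identities |
| 2: Initialize: $Y_o \leftarrow \text{Pilot Identification}(X_n, \theta_s, \theta_o)$; $\theta_n \leftarrow \text{RANDINIT}(\lvert\theta_n\rvert)$ |
| 3: Train: Define $\hat{Y}_o \equiv \text{Pilot Identification}(X_n, \hat{\theta}_s, \hat{\theta}_o)$ ▹ previously registered pilot identity estimation |
| Define $\hat{Y}_n \equiv \text{Pilot Identification}(X_n, \hat{\theta}_s, \hat{\theta}_n)$ ▹ newly registered pilot identity estimation |
| $\theta_s^{*}, \theta_o^{*}, \theta_n^{*} \leftarrow \arg\min_{\hat{\theta}_s, \hat{\theta}_o, \hat{\theta}_n} \big(\lambda_o \mathcal{L}_{\text{old}}(Y_o, \hat{Y}_o) + \mathcal{L}_{\text{new}}(Y_n, \hat{Y}_n) + \mathcal{R}(\hat{\theta}_s, \hat{\theta}_o, \hat{\theta}_n)\big)$ |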
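The objective minimized in step 3 of Algorithm 1, together with the two-stage schedule (train only $\theta_n$ first, then all parameters), can be sketched as follows. The weights $\lambda_o = 0.1$ and weight decay 0.005 come from the text. Modeling $\mathcal{R}$ as an L2 penalty $\tfrac{1}{2}\cdot 0.005\,\lVert\theta\rVert^2$, whose gradient matches SGD-style weight decay, is an assumption. The group names are hypothetical.

```python
from typing import Dict, List


def total_loss(loss_new: float, loss_old: float, params: Dict[str, List[float]],
               lambda_o: float = 0.1, weight_decay: float = 0.005) -> float:
    """lambda_o * L_old + L_new + R(theta_s, theta_o, theta_n).

    R is assumed to be 0.5 * weight_decay * sum of squared parameters, whose gradient
    weight_decay * w matches SGD-style weight decay.
    """
    l2 = sum(w * w for group in params.values() for w in group)
    return lambda_o * loss_old + loss_new + 0.5 * weight_decay * l2


def trainable_groups(stage: str) -> List[str]:
    """Warm-up stage: only the new output weights theta_n are trained;
    joint stage: theta_s, theta_o, and theta_n are all trained."""
    if stage == "warmup":
        return ["theta_n"]
    if stage == "joint":
        return ["theta_s", "theta_o", "theta_n"]
    raise ValueError(f"unknown stage: {stage}")
```

The warm-up stage lets the randomly initialized $\theta_n$ settle before the shared feature extractor is allowed to move, which limits early damage to the previously learned features.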

3. Results

3.1. Environmental Setting

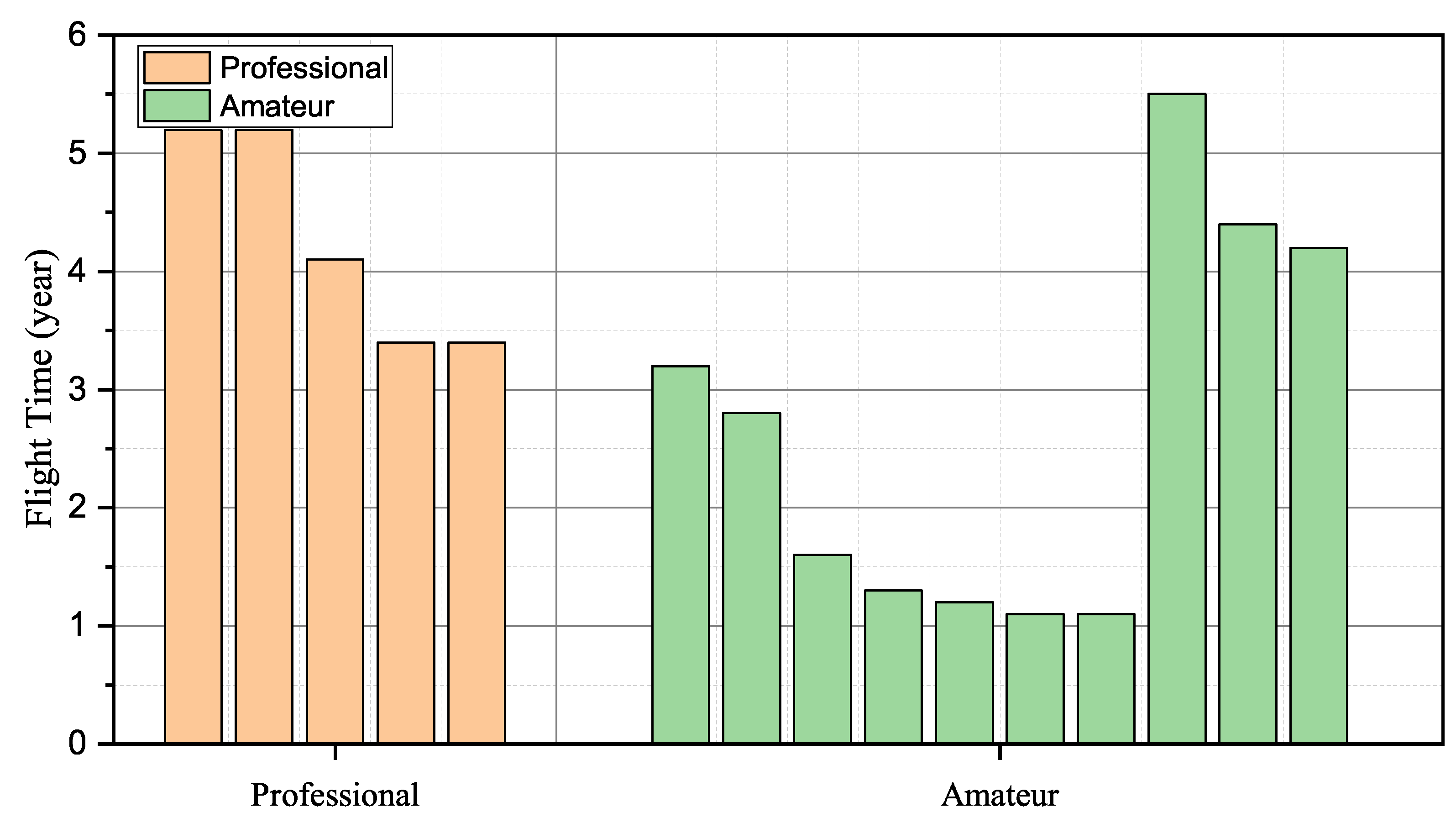

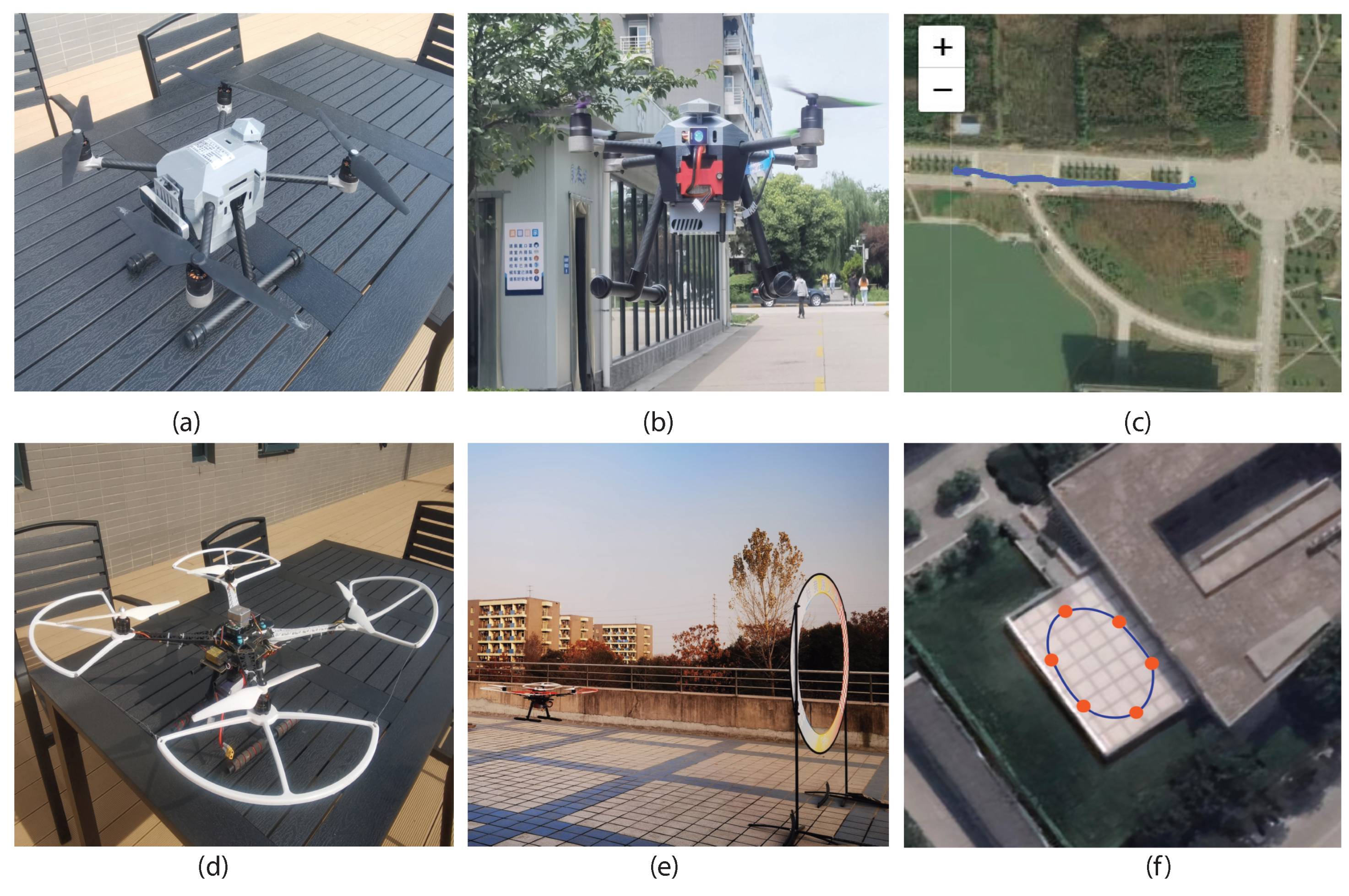

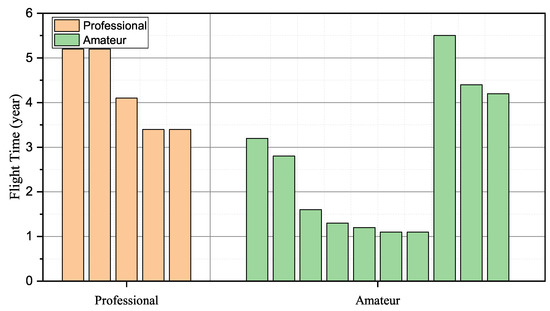

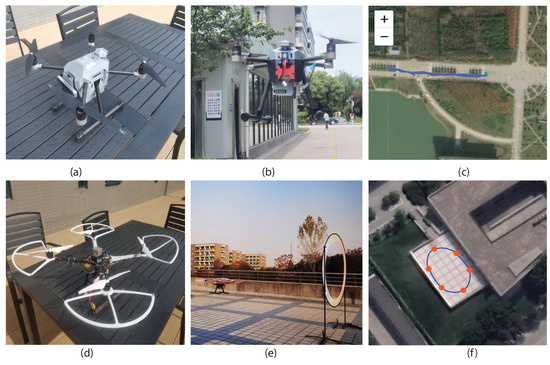

Because quadcopters have received wide attention for their easy operation and broad applications, we conducted experiments on two quadcopters, P450 and S500, to validate the effectiveness of the proposed identification scheme. For data collection, 15 students were asked to fly the quadcopters in natural and constrained environments. The P450, produced by AmovLab, is equipped with depth and optical flow sensors that provide reliable flight performance. The S500 was built from scratch under the guidance of the CUAV flight stack. We used a Futaba remote radio controller to capture the pilots' instructions and send control signals to the flying drone. Among the invited pilots, five students from the AeroModelling Team acted as professional pilots, and the remaining ten, from our laboratory, were amateurs. Figure 4 details the participants' operation proficiency, using the number of flights to represent their operation experience.

Figure 4.

Pilot operation proficiency.

To validate the effectiveness of the proposed scheme, we conducted experiments on P450 and S500, as illustrated in Figure 5. We first asked participants to fly P450 in the natural environment on a traffic monitoring mission, the most common flight task for quadcopters. The pilots used a remote radio controller to send instructions to the drone to take off, hover in the air, and land at the predefined destination. Meanwhile, the pilots were asked to record three-minute videos of the traffic conditions. We also conducted experiments in the constrained environment, following the settings of [12,13]: we preset the flight trajectories and asked the pilots to fly the drones through the waypoints without collision. To reduce the side effects of weather conditions, all data in the constrained environment were collected on sunny days.

Figure 5.

Experimental settings. All the experiments have been conducted on drone P450 (a) and S500 (d). We have invited all the pilots to fly the drone in natural (b) and constrained (e) environments. The flight trajectories are illustrated in (c,f).

We also conducted experiments on S500 in natural and constrained environments with similar settings to further illustrate the effectiveness of the proposed scheme. To collect sufficient data, all participants were asked to fly the drone ten times, and we used the designed background service to monitor the drone flight status. To generate ground truth, we digitized each pilot-provided credential into a unique number. Table 2 gives more details about the experimental settings. A portion of the collected dataset is available at https://github.com/FRTeam2017/DronePilotIdentification.git (as of 15 June 2023).

Table 2.

Environmental settings.

3.2. The Hardware and Software Architecture

To collect high-precision drone flight data, we first designed a background service that subscribes to the selected topics on the uORB message bus. For data preprocessing, Anaconda is used to create an isolated Python environment. In this environment, the pyulog package converts the collected flight logs into CSV format, and Pandas computes statistical features. The drone pilot identification scheme is implemented with the PyTorch framework to extract pilot behavioral traits from the flight data and estimate pilot identities in real time.

For hardware, we built a workstation with an Intel i7-8700 CPU and 24 GB of memory. To accelerate training, an NVIDIA GeForce RTX 3080 graphics card was installed for parallel computing. The workstation runs Ubuntu 20.04, and all results presented below were obtained with this configuration.

3.3. Drone Pilot Identification Based on P450

In this section, 15 pilots were asked to use P450 to complete flight missions in the natural and constrained environments, as illustrated in Figure 5. To collect sufficient drone flight data, we asked them to repeat the flight mission ten times in each environment. Following common practice in machine learning and pattern recognition, we used the first eight trajectories for training and the remaining two for testing, with no overlap between training and testing trajectories. After data preprocessing, we obtained 54,123 training and 11,254 testing samples in the natural environment, and 49,938 training and 11,432 testing samples in the constrained environment. Table 3 details the drone pilot identification performance in different environmental settings based on P450, where  represents the sample to be classified by the drone pilot identification scheme and  indicates the estimation result based on the given flight data.
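
The chronological, non-overlapping split can be sketched at the flight level. The data layout (a dict from pilot label to that pilot's flights in recording order, each flight a list of preprocessed samples) is a hypothetical assumption; the point shown is that whole flights, not individual samples, are assigned to one side, so windows cut from the same trajectory never appear in both sets.

```python
from typing import Any, Dict, List, Tuple

Labeled = List[Tuple[Any, int]]


def split_by_flight(flights: Dict[int, List[List[Any]]], n_train: int = 8) -> Tuple[Labeled, Labeled]:
    """Chronological, flight-level train/test split.

    `flights` maps each pilot label to that pilot's flights in recording
    order (hypothetical layout). The first `n_train` flights of every pilot
    go to training and the remaining flights to testing.
    """
    train: Labeled = []
    test: Labeled = []
    for label, pilot_flights in flights.items():
        for idx, flight in enumerate(pilot_flights):
            # A whole flight goes to one side, so no trajectory is shared.
            target = train if idx < n_train else test
            target.extend((sample, label) for sample in flight)
    return train, test
```

With ten flights per pilot and the default `n_train=8`, this reproduces the eight-for-training, two-for-testing partition described above.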

Table 3.

Drone pilot identification in natural and constrained environment based on P450.

This table demonstrates that the proposed identification scheme achieves high performance using the selected topics on the uORB message bus. The average identification accuracy is approximately 94.87% in the natural environment and about 95.71% in the constrained environment, slightly higher than in the natural environment. One possible explanation is that external conditions, such as wind and magnetic interference, may affect the pilots' operating behaviors and thus decrease identification performance. Note that the worst identification accuracy is 73.54%. Overall, these results suggest that the proposed identification scheme can be deployed on the drone for real-time pilot identification.
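
Per-pilot, average, and worst-case accuracies of the kind discussed above can be read off a confusion matrix. This sketch assumes the "average identification accuracy" is the unweighted mean of per-pilot accuracies; the paper may instead pool accuracy over all test samples, which weights pilots by their sample counts.

```python
import numpy as np


def per_pilot_accuracy(y_true, y_pred, n_pilots):
    """Per-pilot accuracy, their unweighted mean, and the index of the worst pilot.

    Row i of the confusion matrix counts the test samples of pilot i; column j
    counts how many of them were predicted as pilot j. Every pilot is assumed
    to have at least one test sample.
    """
    cm = np.zeros((n_pilots, n_pilots), dtype=int)
    # Accumulate one count per (true, predicted) pair; add.at handles repeated pairs.
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    per_pilot = cm.diagonal() / cm.sum(axis=1)
    return per_pilot, float(per_pilot.mean()), int(per_pilot.argmin())
```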

3.4. Drone Pilot Identification Based on S500

To further illustrate the effectiveness of the proposed identification scheme, we also conducted the same experiments on S500. All pilots were required to use S500 for traffic monitoring in the natural environment and to pass through the arch doors placed along the preset waypoints in the constrained environment. To collect sufficient drone flight data, all the pilots were asked to repeat the mission ten times. We used the flight data from the first eight flights for training and the last two for testing. After data preprocessing, we obtained 62,118 flight samples in the natural environment and 58,921 in the constrained environment. Table 4 provides details about the performance of the proposed drone pilot identification scheme; the notation follows that of Table 3.

Table 4.

Drone pilot identification in natural and constrained environment based on S500.

The proposed drone pilot identification scheme achieves similar accuracy on S500. According to Table 4, the identification accuracy of eight pilots exceeds 95%. The lowest identification accuracies are 82.17% (constrained environment) and 88.47% (natural environment), which are likely also caused by external weather conditions. As the average identification accuracy on S500 is 93.95% and 94.23% in the natural and constrained environments, respectively, we conclude that the proposed scheme maintains high identification performance across different quadcopters.

3.5. Performance Comparison

Since no public drone pilot identification dataset is available and this is the first work to apply the incremental learning paradigm to drone pilot identification, we first compare the proposed scheme with the most related works [11,12,13] in terms of objective, number of participants, signals used, and other aspects in Section 3.5.1. We then compare its identification performance with the algorithms used in those works in Section 3.5.2. Finally, in Section 3.5.3, we compare our updating mechanism with off-the-shelf incremental learning algorithms [29,30,32] to illustrate the effectiveness of the proposed identification scheme.

3.5.1. Comparison with the Related Works

In this section, we compare the proposed identification scheme with the most related works [11,12,13] in terms of experimental setting, objective, number of participants, signals used, and identification performance. Table 5 summarizes the comparison. As the compared works do not provide their source code or drone flight data, we use their reported results for the comparison.

Table 5.

Comparison with the related works.

As illustrated in Table 5, we employed experimental settings similar to those of the related works; for example, we used a quadcopter to collect drone flight data and verify the pilot's legal status. Unlike the related works, we verified the effectiveness of the proposed identification scheme in both natural and constrained environments, and we also considered extensibility when constructing the drone pilot identification scheme. Although the identification accuracies are not directly comparable owing to the lack of a public dataset, the proposed scheme achieves a best identification accuracy of 95.24% over 15 pilots, close to the best result reported in the related work [13].

3.5.2. Comparison with the Algorithms Mentioned in Related Works

We also compared our identification scheme with the algorithms used in the related works [11,12,13,23]. Specifically, we compared the QD, LD ( = SGD), Bagging, RF ( = 100), AdaBoost, and DT algorithms used in [12], and the LSTM ( = 2), Feature ( = RF, = 5), and Voted (=SVC, = 5) methods used in [13]. Furthermore, SVM, XGB, and RF from [23] are included owing to their competitive performance. Because no public dataset is available, all the compared algorithms were evaluated on our collected dataset. The hyperparameters of the compared algorithms were tuned with grid search [41]. To reduce side effects caused by data preprocessing, all data preprocessing procedures follow the original works. Table 6 provides more details about the drone pilot identification performance.
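
Grid search simply evaluates every combination in a parameter grid and keeps the best-scoring one. The dependency-free sketch below shows the idea; in practice scikit-learn's `GridSearchCV` is a common off-the-shelf choice. The scoring callback is a placeholder assumption: it stands for "train the baseline with these parameters and return its validation accuracy", which the paper does not detail.

```python
from itertools import product
from typing import Any, Callable, Dict, List, Optional, Tuple


def grid_search(param_grid: Dict[str, List[Any]],
                score_fn: Callable[..., float]) -> Tuple[Optional[Dict[str, Any]], float]:
    """Exhaustively evaluate every parameter combination and return the best one.

    `score_fn(**params)` is a placeholder that should train a model with
    `params` and return a validation score where higher is better.
    """
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    # product() enumerates the Cartesian product of all candidate values.
    for values in product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:  # strict ">" keeps the first best on ties
            best_params, best_score = params, score
    return best_params, best_score
```

The cost grows multiplicatively with the number of candidate values per parameter, which is why grids are usually kept small.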

Table 6.

Comparison with the most related works on P450 in natural and constrained environments.

Tables 6 and 7 give details about the identification performance of the proposed identification scheme and the related works with 15 participants. Compared with the related algorithms, our proposed identification scheme achieves the best identification performance over the 15 participants. Specifically, four of the fifteen pilots are identified with 100% accuracy, and the lowest accuracy, 95.42%, is obtained for the fifteenth pilot. One plausible reason is that the proposed identification scheme effectively exploits the spatial and temporal relationships within the subscribed UAV flight data.

Table 7.

Comparison with the most related works on S500 in natural and constrained environments.

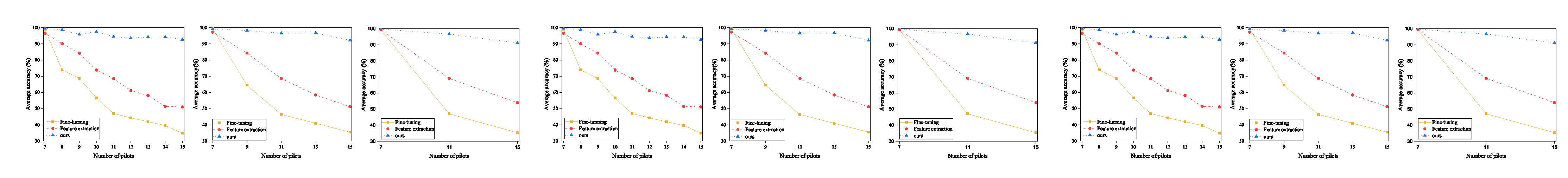

3.5.3. Comparison with the SOTA Incremental Learning Algorithms

To validate the effectiveness of the proposed updating mechanism, we conducted experiments on the S500 quadcopter flight data. We first compared the proposed updating mechanism with feature extraction and fine-tuning [42,43]. Feature extraction fixes the shared parameters  and  and uses the flight data of newly joined pilots as a new task for training , whereas fine-tuning updates  and  to learn the new task while keeping the previous task-specific parameters fixed. At each incremental step, we updated the proposed identification scheme with the flight data of a fixed number of newly joined pilots and evaluated it by the average accuracy over all currently registered pilots (Equation (2)). Figure 6 shows that the proposed mechanism maintains high identification performance as the number of registered pilots grows across incremental steps, whereas fine-tuning and feature extraction suffer from catastrophic forgetting. Feature extraction performs somewhat better than fine-tuning, likely because it fixes the shared parameters  and , which preserves more historical information during updating.
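
The difference between the two baselines is which parameter groups receive gradient updates when a new pilot task arrives. The sketch below is an illustration, not the paper's code: the group names `shared`, `old_head`, and `new_head` are hypothetical, each group is a NumPy array, and the update is one plain SGD step. In PyTorch the same effect is usually obtained by setting `requires_grad=False` on the frozen parameters.

```python
import numpy as np

# Parameter groups each baseline trains when a new pilot task arrives
# (hypothetical names: "shared", "old_head", "new_head").
TRAINABLE = {
    "feature_extraction": {"new_head"},         # shared and old head stay frozen
    "fine_tuning": {"shared", "new_head"},      # only the old head stays frozen
}


def sgd_step(params, grads, strategy, lr=0.01):
    """Apply one SGD step, updating only the groups the strategy trains.

    Frozen groups are returned unchanged.
    """
    trainable = TRAINABLE[strategy]
    return {name: value - lr * grads[name] if name in trainable else value
            for name, value in params.items()}
```

Because fine-tuning moves the shared parameters that earlier pilots also rely on, it is more exposed to forgetting, which matches the ordering observed in Figure 6.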

Figure 6.

Performance comparison across different incremental steps.

We also compared the proposed updating mechanism with the state-of-the-art incremental learning algorithms lwf [32], ewc [29], and mas [30], which have served as standard baselines for incremental learning-based identification frameworks in IoT device recognition and identification [44,45]. As illustrated in Table 8, we first used the flight data of seven pilots to initialize the proposed identification scheme. We then updated the trained scheme with flight data from different numbers of newly joined pilots and compared the algorithms by their average accuracy over all currently registered pilots. For the compared algorithms, identification performance clearly decreases as the number of newly joined pilots increases. Take mas as an example: its accuracy drops from 98.11% with seven registered pilots to 61.52% with 15 registered pilots. One explanation is that mas needs a large amount of flight data from the current pilots to estimate the importance of each shared parameter, which conflicts with the drone pilot identification setting. In contrast, our updating mechanism maintains high identification performance as the number of registered pilots increases. Based on this analysis, the updating mechanism enables the drone pilot identification scheme to adapt to newly registered pilots in dynamic pilot membership management.
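
To make the data dependence of importance-based methods concrete, the sketch below computes MAS-style parameter importance for a toy linear model F(x) = Wx: the importance of each weight is the mean absolute gradient of the squared L2 norm of the output over a set of samples, and the new-task loss is then regularized by λ Σ Ω (W − W*)². EWC [29] uses the same quadratic form with the diagonal Fisher information (and a factor λ/2) in place of Ω. The linear model, the samples, and λ are illustrative assumptions, not the configuration behind Table 8.

```python
import numpy as np


def mas_importance(W, X):
    """MAS-style importance for a linear model F(x) = W @ x.

    For one sample x, the gradient of ||W x||_2^2 with respect to W is
    2 (W x) x^T; importance is its mean absolute value over the rows of X.
    """
    omega = np.zeros_like(W)
    for x in X:
        omega += np.abs(2.0 * np.outer(W @ x, x))
    return omega / len(X)


def quadratic_penalty(W, W_star, importance, lam):
    """Regularizer lam * sum(importance * (W - W_star)^2), added to the new-task loss."""
    return lam * np.sum(importance * (W - W_star) ** 2)
```

Because Ω is an average over samples, it becomes a noisy estimate when only a few flights per pilot are available, which is consistent with the explanation given above.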

Table 8.

Comparison with the standard algorithms at different incremental steps.

3.6. Time and Space Complexity

Because a drone is a resource-constrained system, time and space overhead are critical for protecting it from impersonation attacks in a timely manner. According to Figure 3, the proposed identification scheme consists of a pilot behavioral trait extraction module and a pilot identification module. The behavioral trait extraction module comprises convolution layers and batch normalization operations. Following [46], the time complexity of each convolution layer is $O(M^2 K^2 C_{in} C_{out})$, where M is the dimension of the input feature map, K is the size of the convolutional kernel, and $C_{in}$ and $C_{out}$ are the numbers of input and output channels. The time complexity of batch normalization depends only on the input feature maps, i.e., $O(M^2 C_{in})$. A fully connected layer maps the hidden representation to the pilot identity, and its time complexity is the same as that of batch normalization. Because the scheme extracts behavioral traits and estimates identity sequentially, its overall time complexity is determined by the depth of the identification module: $O\left(\sum_{l=1}^{D} M_l^2 K_l^2 C_{l-1} C_l\right)$, where D is the depth of the proposed identification module and $M_l$, $K_l$, $C_{l-1}$, and $C_l$ are the corresponding quantities of the l-th layer. In terms of run time, measurements with Python's built-in time module show that the proposed scheme needs only 0.031 s per identification. Furthermore, counting the model parameters in PyTorch shows that our identification scheme has only 13 M parameters, indicating a reasonable storage overhead.
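
The cost model can be turned into a small calculator for multiply-accumulate operations and parameter counts. This is a sketch under assumptions: it uses the standard per-layer convolution cost M²·K²·C_in·C_out for square 2D feature maps and kernels, and the layer sizes in the usage example are hypothetical, since this section does not give the architecture; the numbers therefore do not reproduce the reported 13 M parameters. For a real PyTorch model, `sum(p.numel() for p in model.parameters())` gives the parameter count, and wrapping the forward pass with `time.perf_counter()` gives the per-identification latency.

```python
def conv_layer_macs(m: int, k: int, c_in: int, c_out: int) -> int:
    """Multiply-accumulates of one 2D conv layer: M^2 * K^2 * C_in * C_out."""
    return m * m * k * k * c_in * c_out


def conv_layer_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Weights K^2 * C_in * C_out, plus one bias per output channel."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def batchnorm_params(c: int) -> int:
    """Learnable scale and shift per channel (running statistics are buffers)."""
    return 2 * c


def linear_params(n_in: int, n_out: int) -> int:
    """Fully connected layer: weight matrix plus bias vector."""
    return n_in * n_out + n_out


def total_macs(layers) -> int:
    """Sum the per-layer conv cost over D layers given as (M, K, C_in, C_out)."""
    return sum(conv_layer_macs(*layer) for layer in layers)
```

For instance, two hypothetical layers (8, 3, 1, 16) and (8, 3, 16, 32) cost 9,216 and 294,912 multiply-accumulates, so the deeper, wider layer dominates the total.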

4. Discussion

In this paper, we propose a novel drone pilot identification scheme that protects drones against impersonation attacks, together with an updating mechanism that adapts to dynamic pilot membership management. To validate the effectiveness of the proposed identification scheme, we conducted experiments on P450 and S500 in different environmental settings. Despite the promising results, several challenges remain in our proposed identification scheme.

First, the identification performance can be further improved. The numerical results in Table 3 and Table 4 show that the proposed identification scheme identifies most pilots with high accuracy. However, some pilots, such as the tenth pilot in Table 3, are still not well identified. One plausible explanation is that external weather conditions, such as wind, could change the pilot's behavioral traits as the pilot works to keep the drone stable during flight, and the proposed identification scheme does not model the pilot's behavioral traits under different weather conditions. In future work, we will design a more intelligent identification scheme that uses features robust to weather conditions for drone pilot identification.

Second, the proposed updating mechanism should be further verified in different application scenarios. This paper validates the proposed updating mechanism for accommodating newly joined pilots under similar experimental settings. In real application scenarios, the flight data of newly joined pilots could come from different environments, which may degrade the performance of the proposed identification scheme. Furthermore, the scenario in which registered pilots leave should also be considered to enhance dynamic drone pilot membership management.

Third, more experiments should be conducted on different types of drones to validate the effectiveness of the proposed identification scheme. This paper only verified its effectiveness on P450 and S500 in the preset natural and constrained environments. As more types of drones are designed and deployed in application scenarios, a more general and robust identification scheme will be needed on the drone side to further reduce pilot impersonation attacks.

With an increasing number of drones being deployed in real application scenarios, pilot legal status verification will become an indispensable part of the drone system. In the near future, we will conduct more research on drone-related attacks and design a more lightweight and robust pilot identification scheme to enhance drone flight security.

5. Conclusions

This paper has presented a novel task incremental learning-based drone pilot identification scheme to protect drones from pilot impersonation attacks and adapt to dynamic pilot membership management. To verify the effectiveness of the proposed identification scheme, we conducted systematic experiments on P450 and S500 in different environmental settings. The numerical results show that the proposed identification scheme achieves best identification accuracies of 95.71% on P450 and 94.23% on S500 over 15 pilots. Furthermore, the proposed scheme has only 13 M parameters and completes drone pilot identification within 0.031 s. Owing to its high identification accuracy and low system overhead, the proposed drone pilot identification scheme demonstrates great potential to protect drones from impersonation attacks. In the future, we will consider more factors in designing an intelligent drone pilot identification scheme and conduct comprehensive experiments with different types of drones and environmental settings to verify its robustness.

Author Contributions

Conceptualization, Y.Z.; methodology, L.H.; validation, L.H. and X.Z.; investigation, L.H.; data curation, L.H. and X.Z.; writing—original draft preparation, L.H.; writing—review and editing, L.H.; visualization, X.Z.; supervision, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are unavailable due to privacy.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| UAV | Unmanned Aerial Vehicle |
| GPS | Global Positioning System |
| GCS | Ground Control Station |
| MEMS | Micro-Electro-Mechanical Systems |
| LD | Linear Discriminant |
| QD | Quadratic Discriminant |
| SVM | Support Vector Machine |
| kNN | k-Nearest Neighbors |
| RF | Random Forest |
| DT | Decision Tree |
| LSTM | Long Short-Term Memory |
| SGD | Stochastic Gradient Descent |
| ESC | Electronic Speed Controller |
| NED | North East Down |
| ReLU | Rectified Linear Unit |
| lwf | Learning without Forgetting |
| ewc | Elastic Weight Consolidation |
| mas | Memory Aware Synapses |
| SOTA | State of the Art |

References

- Shakeri, R.; Al-Garadi, M.A.; Badawy, A.; Mohamed, A.; Khattab, T.; Al-Ali, A.K.; Harras, K.A.; Guizani, M. Design challenges of multi-UAV systems in cyber-physical applications: A comprehensive survey and future directions. IEEE Commun. Surv. Tutorials 2019, 21, 3340–3385.

- Hassija, V.; Chamola, V.; Agrawal, A.; Goyal, A.; Luong, N.C.; Niyato, D.; Yu, F.R.; Guizani, M. Fast, reliable, and secure drone communication: A comprehensive survey. IEEE Commun. Surv. Tutorials 2021, 23, 2802–2832.

- Eldosouky, A.; Ferdowsi, A.; Saad, W. Drones in distress: A game-theoretic countermeasure for protecting UAVs against GPS spoofing. IEEE Internet Things J. 2019, 7, 2840–2854.

- Son, Y.; Shin, H.; Kim, D.; Park, Y.; Noh, J.; Choi, K.; Choi, J.; Kim, Y. Rocking drones with intentional sound noise on gyroscopic sensors. In Proceedings of the 24th USENIX Security Symposium (USENIX Security 15), Washington, DC, USA, 12–14 August 2015; pp. 881–896.

- Alladi, T.; Chamola, V.; Zeadally, S. Industrial control systems: Cyberattack trends and countermeasures. Comput. Commun. 2020, 155, 1–8.

- Choudhary, G.; Sharma, V.; Gupta, T.; Kim, J.; You, I. Internet of drones (IoD): Threats, vulnerability, and security perspectives. arXiv 2018, arXiv:1808.00203.

- Zhang, Y.; He, D.; Li, L.; Chen, B. A lightweight authentication and key agreement scheme for Internet of Drones. Comput. Commun. 2020, 154, 455–464.

- Alladi, T.; Naren; Bansal, G.; Chamola, V.; Guizani, M. SecAuthUAV: A Novel Authentication Scheme for UAV-Ground Station and UAV-UAV Communication. IEEE Trans. Veh. Technol. 2020, 69, 15068–15077.

- Wazid, M.; Das, A.K.; Kumar, N.; Vasilakos, A.V.; Rodrigues, J.J. Design and analysis of secure lightweight remote user authentication and key agreement scheme in internet of drones deployment. IEEE Internet Things J. 2018, 6, 3572–3584.

- Srinivas, J.; Das, A.K.; Kumar, N.; Rodrigues, J.J. TCALAS: Temporal credential-based anonymous lightweight authentication scheme for Internet of drones environment. IEEE Trans. Veh. Technol. 2019, 68, 6903–6916.

- Shoufan, A. Continuous authentication of UAV flight command data using behaviometrics. In Proceedings of the 2017 IFIP/IEEE International Conference on Very Large Scale Integration (VLSI-SoC), Abu Dhabi, Saudi Arabia, 23–25 October 2017; pp. 1–6.

- Shoufan, A.; Al-Angari, H.M.; Sheikh, M.F.A.; Damiani, E. Drone pilot identification by classifying radio-control signals. IEEE Trans. Inf. Forensics Secur. 2018, 13, 2439–2447.

- Alkadi, R.; Al-Ameri, S.; Shoufan, A.; Damiani, E. Identifying drone operator by deep learning and ensemble learning of IMU and control data. IEEE Trans. Hum. Mach. Syst. 2021, 51, 451–462.

- Balakrishnama, S.; Ganapathiraju, A. Linear discriminant analysis-a brief tutorial. Inst. Signal Inf. Process. 1998, 18, 1–8.

- Tharwat, A. Linear vs. quadratic discriminant analysis classifier: A tutorial. Int. J. Appl. Pattern Recognit. 2016, 3, 145–180.

- Suthaharan, S.; Suthaharan, S. Support vector machine. In Machine Learning Models and Algorithms for Big Data Classification: Thinking With Examples for Effective Learning; Springer: Berlin/Heidelberg, Germany, 2016; pp. 207–235.

- Tan, S. Neighbor-weighted k-nearest neighbor for unbalanced text corpus. Expert Syst. Appl. 2005, 28, 667–671.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote. Sens. 2016, 114, 24–31.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Nanopoulos, A.; Alcock, R.; Manolopoulos, Y. Feature-based classification of time-series data. Int. J. Comput. Res. 2001, 10, 49–61.

- Gopinath, B.; Gupt, B. Majority voting based classification of thyroid carcinoma. Procedia Comput. Sci. 2010, 2, 265–271.

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Kwak, B.I.; Han, M.L.; Kim, H.K. Driver identification based on wavelet transform using driving patterns. IEEE Trans. Ind. Inform. 2020, 17, 2400–2410.

- Hallac, D.; Sharang, A.; Stahlmann, R.; Lamprecht, A.; Huber, M.; Roehder, M.; Leskovec, J.; Sosic, R. Driver identification using automobile sensor data from a single turn. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 953–958.

- Meier, L.; Honegger, D.; Pollefeys, M. PX4: A node-based multithreaded open source robotics framework for deeply embedded platforms. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 6235–6240.

- Wei, H.; Shao, Z.; Huang, Z.; Chen, R.; Guan, Y.; Tan, J.; Shao, Z. RT-ROS: A real-time ROS architecture on multi-core processors. Future Gener. Comput. Syst. 2016, 56, 171–178.

- Willner, D.; Chang, C.; Dunn, K. Kalman filter algorithms for a multi-sensor system. In Proceedings of the 1976 IEEE Conference on Decision and Control Including the 15th Symposium on Adaptive Processes, Clearwater, FL, USA, 1–3 December 1976; pp. 570–574.

- Schlimmer, J.C.; Fisher, D. A case study of incremental concept induction. Proc. AAAI 1986, 86, 496–501.

- Kirkpatrick, J.; Pascanu, R.; Rabinowitz, N.; Veness, J.; Desjardins, G.; Rusu, A.A.; Milan, K.; Quan, J.; Ramalho, T.; Grabska-Barwinska, A.; et al. Overcoming catastrophic forgetting in neural networks. Proc. Natl. Acad. Sci. USA 2017, 114, 3521–3526.

- Aljundi, R.; Babiloni, F.; Elhoseiny, M.; Rohrbach, M.; Tuytelaars, T. Memory aware synapses: Learning what (not) to forget. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 139–154.

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Transformers: State-of-the-art natural language processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020; pp. 38–45.

- Li, Z.; Hoiem, D. Learning without forgetting. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2935–2947.

- Rebuffi, S.A.; Kolesnikov, A.; Sperl, G.; Lampert, C.H. iCaRL: Incremental classifier and representation learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2001–2010.

- Wu, Y.; Chen, Y.; Wang, L.; Ye, Y.; Liu, Z.; Guo, Y.; Fu, Y. Large scale incremental learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 374–382.

- Chandrashekar, G.; Sahin, F. A survey on feature selection methods. Comput. Electr. Eng. 2014, 40, 16–28.

- Xun, Y.; Liu, J.; Kato, N.; Fang, Y.; Zhang, Y. Automobile driver fingerprinting: A new machine learning based authentication scheme. IEEE Trans. Ind. Inform. 2019, 16, 1417–1426.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Li, Y.; Yuan, Y. Convergence analysis of two-layer neural networks with ReLU activation. Adv. Neural Inf. Process. Syst. 2017, 30.

- Bottou, L. Stochastic gradient descent tricks. In Neural Networks: Tricks of the Trade: Second Edition; Springer: Berlin/Heidelberg, Germany, 2012; pp. 421–436.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

- Syarif, I.; Prugel-Bennett, A.; Wills, G. SVM parameter optimization using grid search and genetic algorithm to improve classification performance. TELKOMNIKA (Telecommun. Comput. Electron. Control) 2016, 14, 1502–1509.

- Donahue, J.; Jia, Y.; Vinyals, O.; Hoffman, J.; Zhang, N.; Tzeng, E.; Darrell, T. DeCAF: A deep convolutional activation feature for generic visual recognition. In Proceedings of the International Conference on Machine Learning, PMLR, Beijing, China, 21–26 June 2014; pp. 647–655.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Liu, M.; Wang, J.; Zhao, N.; Chen, Y.; Song, H.; Yu, F.R. Radio frequency fingerprint collaborative intelligent identification using incremental learning. IEEE Trans. Netw. Sci. Eng. 2021, 9, 3222–3233.

- Liu, Y.; Wang, J.; Li, J.; Niu, S.; Song, H. Class-incremental learning for wireless device identification in IoT. IEEE Internet Things J. 2021, 8, 17227–17235.

- Chua, L.O. CNN: A vision of complexity. Int. J. Bifurc. Chaos 1997, 7, 2219–2425.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).