Efficient Fine Tuning for Fashion Object Detection

Abstract

1. Introduction

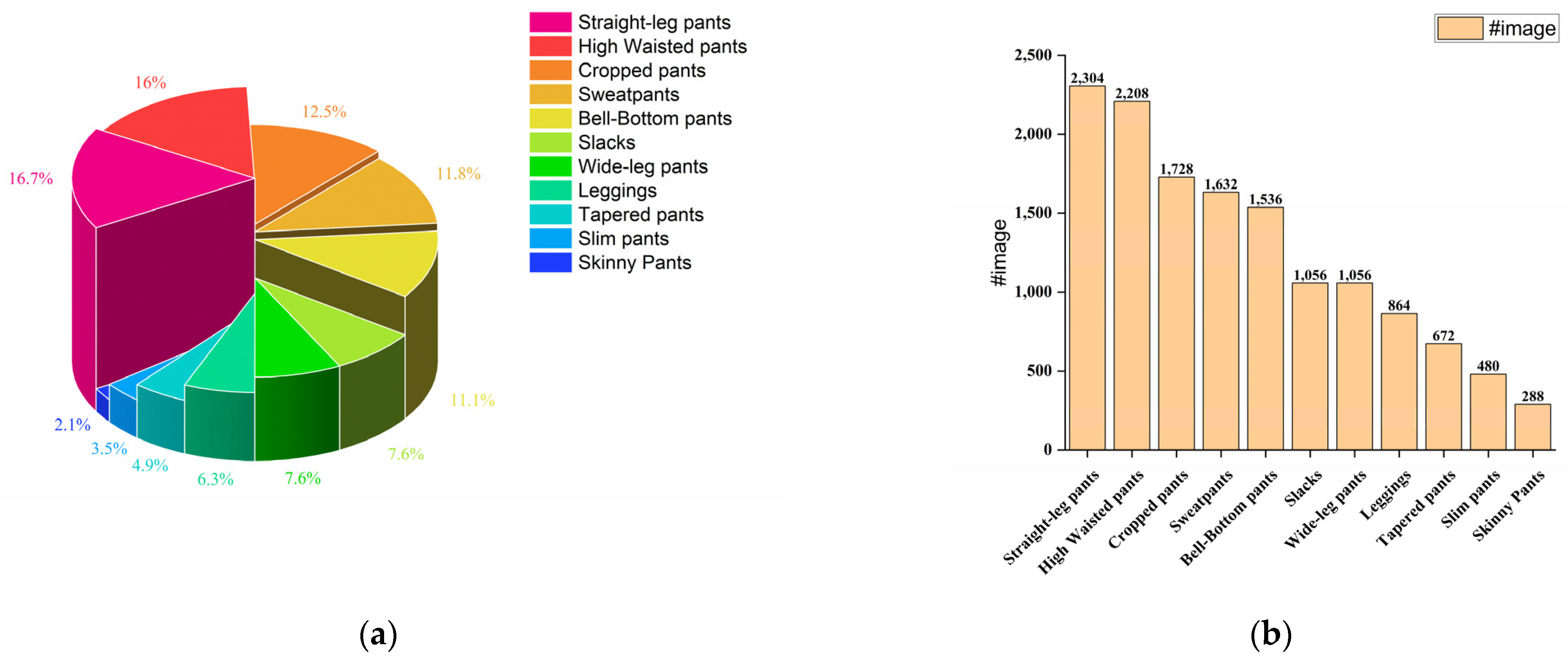

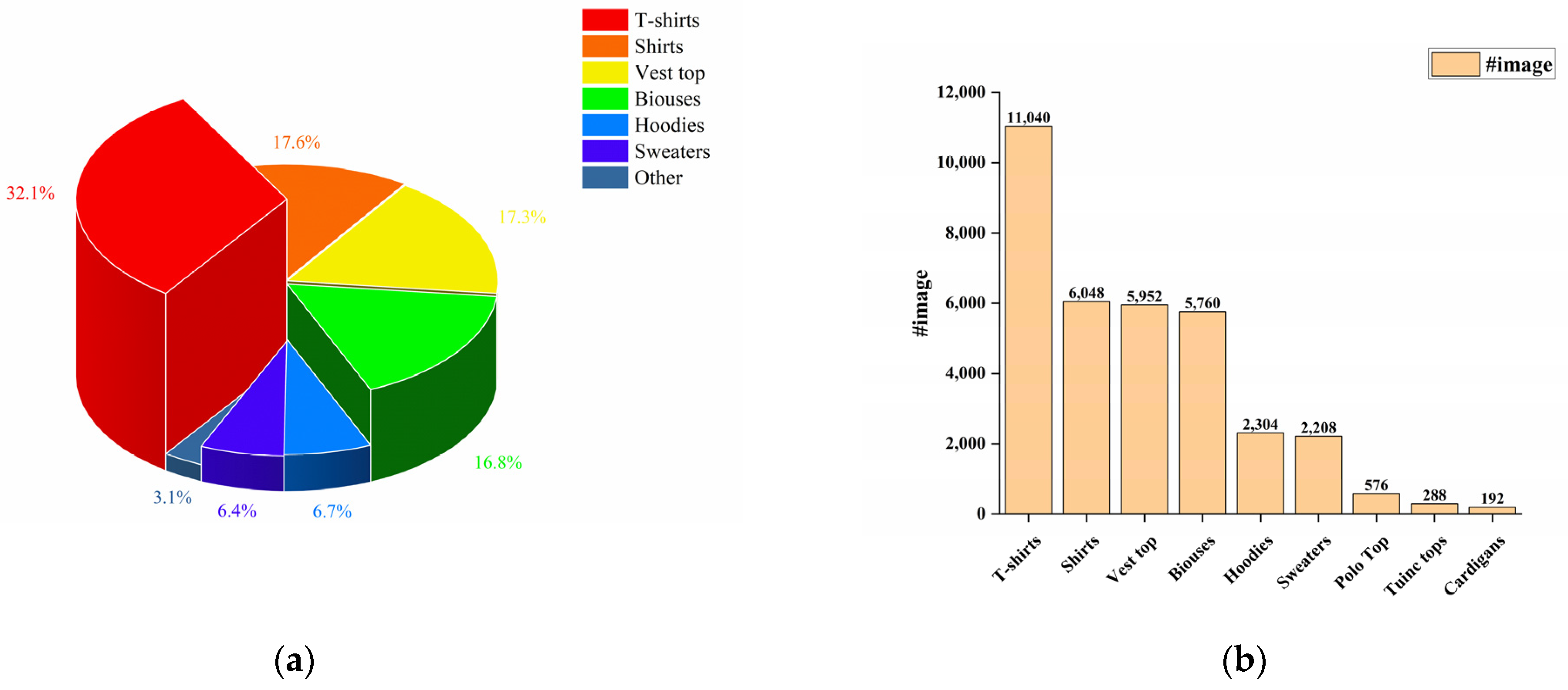

- Comprehensiveness: The images in Garment40K are extensively annotated with categories, text descriptions, and bounding boxes. The dataset covers 15 fine-grained clothing categories and contains human images captured from a wide variety of viewpoints. Annotations this comprehensive are uncommon in existing datasets, which makes Garment40K useful for a range of computer vision tasks related to clothing analysis.

- Scale: Garment40K is potentially the largest standalone dataset designed specifically for clothing object detection. Its 40,000 labeled clothing images provide a large and diverse pool of data for training and evaluating clothing detection models, and this scale supports the development of more robust and accurate algorithms for detecting and recognizing garments in images.

- Availability: We intend to make Garment40K publicly accessible to the research community to foster advances in clothing object detection. Open availability encourages collaboration and innovation and allows researchers worldwide to build on the dataset.

- Potential: Garment40K could also serve as a high-quality dataset for human image generation tasks. As a future research direction, the dataset could be extended with human parsing labels and human pose representations. Such an extension would turn Garment40K into a multimodal clothing dataset, supporting research on fashion recommendation systems, virtual try-on technologies, and other applications that combine detailed clothing analysis with human pose estimation.

- We introduce Garment40K, a novel open-set clothing object detection benchmark, providing a valuable resource for the fashion domain.

- We fine-tune Grounding DINO with parameter-efficient transfer learning, inserting adapter modules so that the model can learn new vocabulary effectively.

- We introduce an additional similarity loss that provides a supplementary supervision signal for the object detection task.

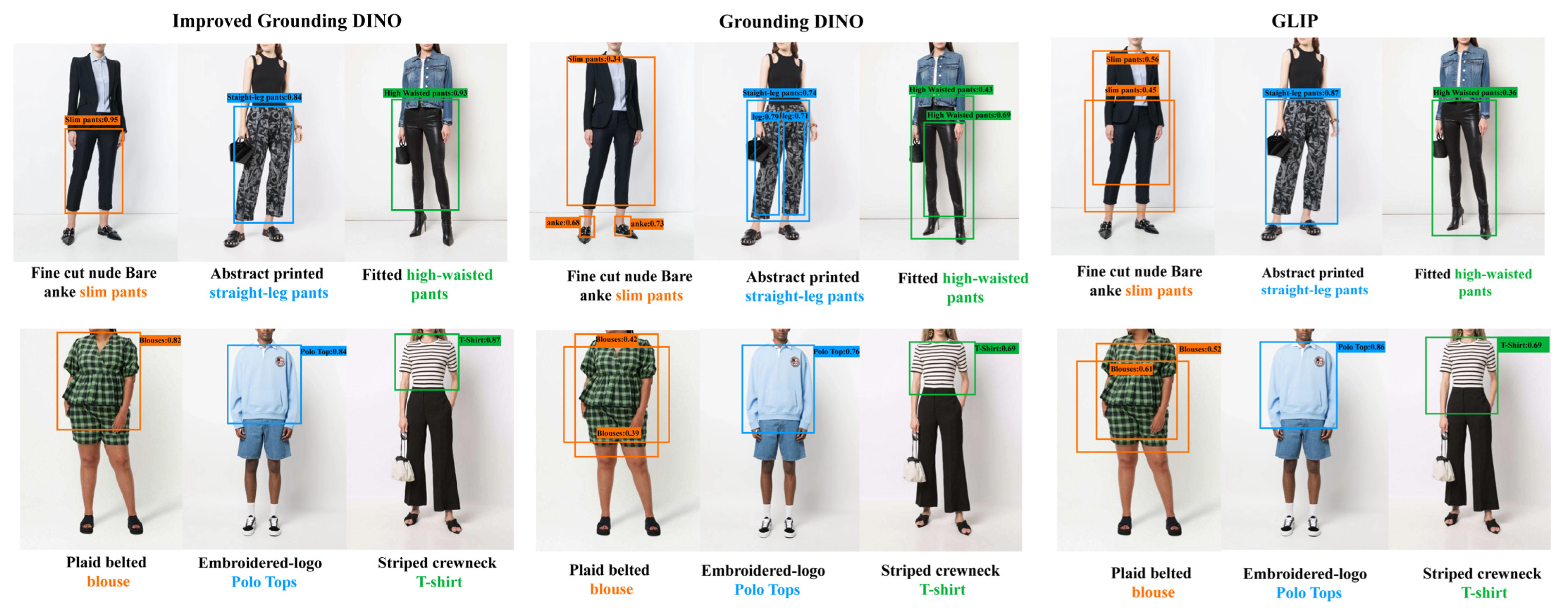

- We address the limitations of state-of-the-art open-set object detection models in the fashion image domain by adapting Grounding DINO for improved performance.
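The adapter-based fine-tuning named in the contributions can be illustrated with a generic bottleneck adapter in the style of Houlsby et al. This is a minimal sketch under stated assumptions, not the authors' Feature Enhancer–Adapter or Decoder–Adapter layer: the names `BottleneckAdapter`, `matvec`, `relu`, and `adapter_params`, the ReLU nonlinearity, the zero-initialized up-projection, and all sizes are hypothetical, illustrative choices. It uses plain Python so it runs without a tensor library; a real implementation would use a deep learning framework.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector, b: Vector) -> Vector:
    """Affine map y = W x + b, with W stored row-wise (out_dim rows of in_dim)."""
    return [sum(wij * xj for wij, xj in zip(row, x)) + bi
            for row, bi in zip(w, b)]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


class BottleneckAdapter:
    """Hypothetical Houlsby-style adapter: y = x + W_up * relu(W_down * x).

    Only these weights would be trained; the layer the adapter is attached
    to keeps its frozen, pre-trained weights.
    """

    def __init__(self, d_model: int, bottleneck: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Down-projection d_model -> bottleneck with a small random init.
        self.w_down = [[rng.gauss(0.0, 0.02) for _ in range(d_model)]
                       for _ in range(bottleneck)]
        self.b_down = [0.0] * bottleneck
        # Up-projection bottleneck -> d_model, zero init (an assumption):
        # the adapter then starts as the identity map, so inserting it
        # leaves the pre-trained model's outputs unchanged before training.
        self.w_up = [[0.0] * bottleneck for _ in range(d_model)]
        self.b_up = [0.0] * d_model

    def __call__(self, x: Vector) -> Vector:
        h = relu(matvec(self.w_down, x, self.b_down))
        delta = matvec(self.w_up, h, self.b_up)
        return [xi + di for xi, di in zip(x, delta)]  # residual connection


def adapter_params(d_model: int, bottleneck: int) -> int:
    """Trainable parameters: (d*r + r) for W_down, b_down plus (r*d + d) for W_up, b_up."""
    return 2 * d_model * bottleneck + bottleneck + d_model
```

Because only the adapter weights are updated, each adapter adds 2dr + r + d trainable parameters for model width d and bottleneck width r; with the illustrative values d = 256 and r = 64, `adapter_params(256, 64)` returns 33,088. These values are examples, not the configuration used in the paper.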

2. Related Work

2.1. Clothing Datasets

2.2. Open-Set Object Detection

2.3. Parameter-Efficient Transfer Learning

3. The Garment40K Dataset

Image Collection

4. Method

4.1. Grounding DINO

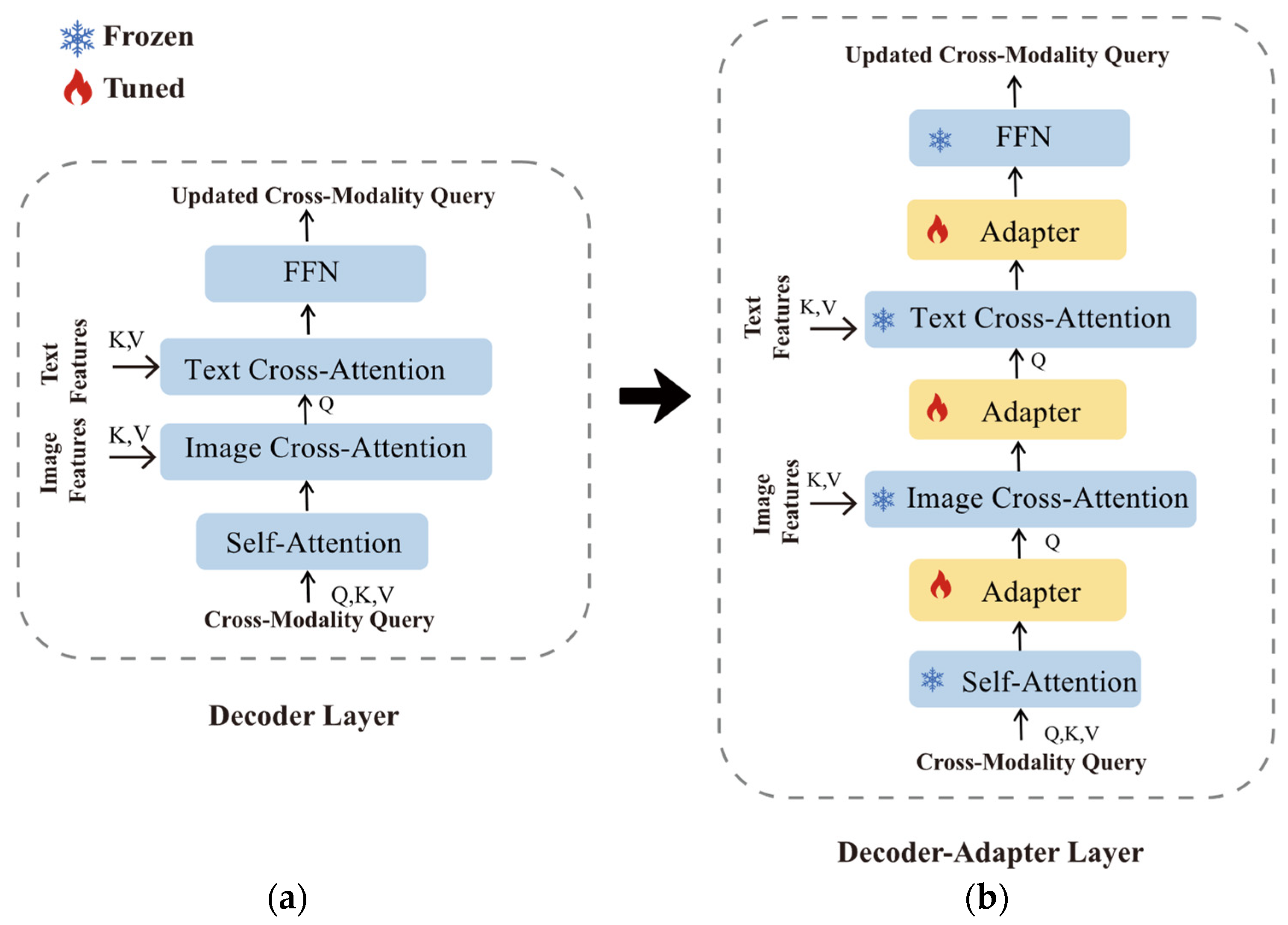

4.2. Feature Enhancer–Adapter Layer

4.3. Decoder–Adapter Layer

4.4. Loss Function

5. Experiments

5.1. Setup

5.2. Metric

- Precision: Precision is the ratio of true positive predictions to all instances the model flags as positive, i.e., TP / (TP + FP). It measures how trustworthy the model's positive identifications are.

- Recall: In contrast, recall is the ratio of true positive predictions to all instances that are actually positive, i.e., TP / (TP + FN). It measures how completely the model finds the positive instances.

- Precision–recall curve: In object detection, each detection is usually accompanied by a confidence score. Sweeping the threshold on this score yields a range of precision and recall values, and plotting these pairs over all thresholds gives the precision–recall (PR) curve.

- Average precision (AP): AP summarizes the shape of the precision–recall curve in a single number. It is the weighted mean of the precision values at each threshold, where the weight is the increase in recall from the previous threshold:

$$\mathrm{AP} = \sum_{n} \left(R_n - R_{n-1}\right) P_n,$$

where $P_n$ and $R_n$ are the precision and recall at the $n$-th threshold, with thresholds ordered from highest to lowest confidence so that recall never decreases.
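The metric definitions above can be sketched in a few lines of Python. This is an illustrative implementation, not the evaluation code behind the reported results. It assumes each detection has already been matched to the ground truth and labeled a true or false positive (for example, with an IoU threshold), and it computes the plain, non-interpolated AP as the recall-weighted sum of precisions, sum over n of (R_n - R_{n-1}) * P_n. The function names `pr_points` and `average_precision` are hypothetical. Standard toolkits such as the COCO evaluation code additionally interpolate precision and average over several IoU thresholds, so their numbers are not directly comparable with this sketch.

```python
from typing import List, Sequence, Tuple


def pr_points(scores: Sequence[float], is_tp: Sequence[bool],
              num_gt: int) -> List[Tuple[float, float]]:
    """Return (precision, recall) pairs, one per confidence threshold.

    The threshold is swept from the highest score down to the lowest, so
    each step admits one more detection. Ties keep their input order
    (Python's sort is stable) and are treated as separate steps.
    """
    if len(scores) != len(is_tp):
        raise ValueError("scores and is_tp must have the same length")
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    points = []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precision = tp / (tp + fp)  # TP / (TP + FP)
        recall = tp / num_gt        # TP / (TP + FN), since num_gt = TP + FN
        points.append((precision, recall))
    return points


def average_precision(scores: Sequence[float], is_tp: Sequence[bool],
                      num_gt: int) -> float:
    """Non-interpolated AP: sum over n of (R_n - R_{n-1}) * P_n, with R_0 = 0."""
    ap = 0.0
    prev_recall = 0.0
    for precision, recall in pr_points(scores, is_tp, num_gt):
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.7, 0.6]
    is_tp = [True, False, True, True]
    print(pr_points(scores, is_tp, num_gt=4))
    print(average_precision(scores, is_tp, num_gt=4))  # 29/48, about 0.604
```

In this toy example the PR points are (1.0, 0.25), (0.5, 0.25), (2/3, 0.5), and (0.75, 0.75). The false positive at the second step adds no recall, so its precision receives zero weight, and AP = 0.25 * 1 + 0.25 * 2/3 + 0.25 * 0.75 = 29/48.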

5.3. Supervised Transfer on Garment40K

5.4. Zero-Shot Transfer on COCO and LVIS

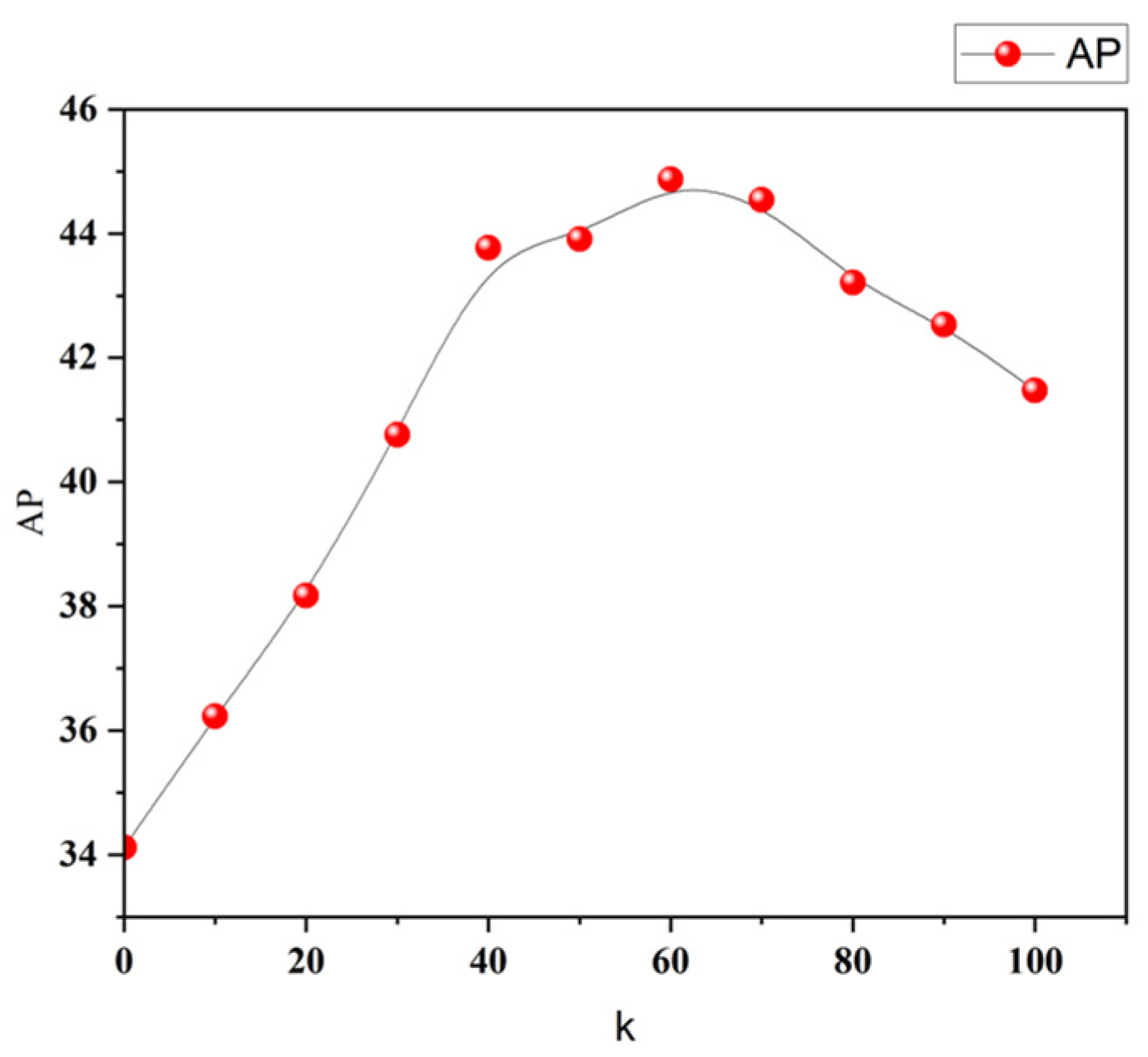

5.5. Ablations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Pre-Training Data | Fine-Tuning Data | Tunable Param (M) | Inference: Garment40K-Test | Inference: Garment40K-Val |
|---|---|---|---|---|---|
| GLIP-T | O365, GoldG, Cap4M | Garment40K | 232 | 42.5 | 42.8 |
| GLIP-T | O365, GoldG | Garment40K | 232 | 41.9 | 42.3 |
| Grounding DINO-T | O365 | Garment40K | 172 | 41.5 | 41.6 |
| Grounding DINO-T | O365, GoldG | Garment40K | 172 | 42.4 | 42.7 |
| Grounding DINO-T | O365, GoldG, Cap4M | Garment40K | 172 | 42.8 | 42.6 |
| Our model | O365 | Garment40K | 1.3 | 41.7 | 41.8 |
| Our model | O365, GoldG | Garment40K | 1.3 | 42.8 | 42.9 |
| Our model | O365, GoldG, Cap4M | Garment40K | 1.3 | 43.2 | 43.5 |
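As a worked example of what the Tunable Param (M) column implies, the share of tunable parameters relative to full fine-tuning follows directly from the table, with 1.3 M for our model against 172 M for Grounding DINO-T and 232 M for GLIP-T:

$$\frac{1.3\,\text{M}}{172\,\text{M}} \approx 0.0076 \approx 0.76\%, \qquad \frac{1.3\,\text{M}}{232\,\text{M}} \approx 0.0056 \approx 0.56\%.$$

With O365, GoldG, Cap4M pre-training, our model scores 43.2 on Garment40K-Test against 42.8 for fully fine-tuned Grounding DINO-T, while tuning under 1% as many parameters.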

| Model | Pre-Training Data | Fine-Tuning Data | Zero-Shot COCO 2017val |
|---|---|---|---|
| Our model | O365 | Garment40K | 46.4 |
| Our model | O365, GoldG | Garment40K | 47.9 |
| Our model | O365, GoldG, Cap4M | Garment40K | 48.5 |
| Grounding DINO-T | O365 | None | 46.7 |
| Grounding DINO-T | O365, GoldG | None | 48.1 |
| Grounding DINO-T | O365, GoldG, Cap4M | None | 48.4 |

| Model | Pre-Training Data | Fine-Tuning Data | LVIS MiniVal APr | LVIS MiniVal APc | LVIS MiniVal APf | LVIS MiniVal AP |
|---|---|---|---|---|---|---|
| Our model | O365, GoldG | Garment40K | 12.9 | 18.3 | 31.3 | 24.6 |
| Our model | O365, GoldG, Cap4M | Garment40K | 46.5 | 17.5 | 22.8 | 31.6 |
| Grounding DINO-T | O365, GoldG | None | 14.4 | 19.6 | 32.2 | 25.6 |
| Grounding DINO-T | O365, GoldG, Cap4M | None | 48.4 | 18.1 | 23.3 | 32.7 |

| Model | Inference: Garment40K-Test |
|---|---|
| Feature enhancer–adapter layer | 41.4 |
| Decoder–adapter layer | 41.6 |
| | 42.1 |
| | 41.9 |
| Feature enhancer–adapter + decoder–adapter layer | 42.3 |
| Improved Grounding DINO (our model) | 43.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, B.; Xu, W. Efficient Fine Tuning for Fashion Object Detection. Sensors 2023, 23, 6083. https://doi.org/10.3390/s23136083


Ma, Benjiang, and Wenjin Xu. 2023. "Efficient Fine Tuning for Fashion Object Detection." Sensors 23, no. 13: 6083. https://doi.org/10.3390/s23136083