A Compact and Low-Power SoC Design for Spiking Neural Network Based on Current Multiplier Charge Injector Synapse

Abstract

1. Introduction

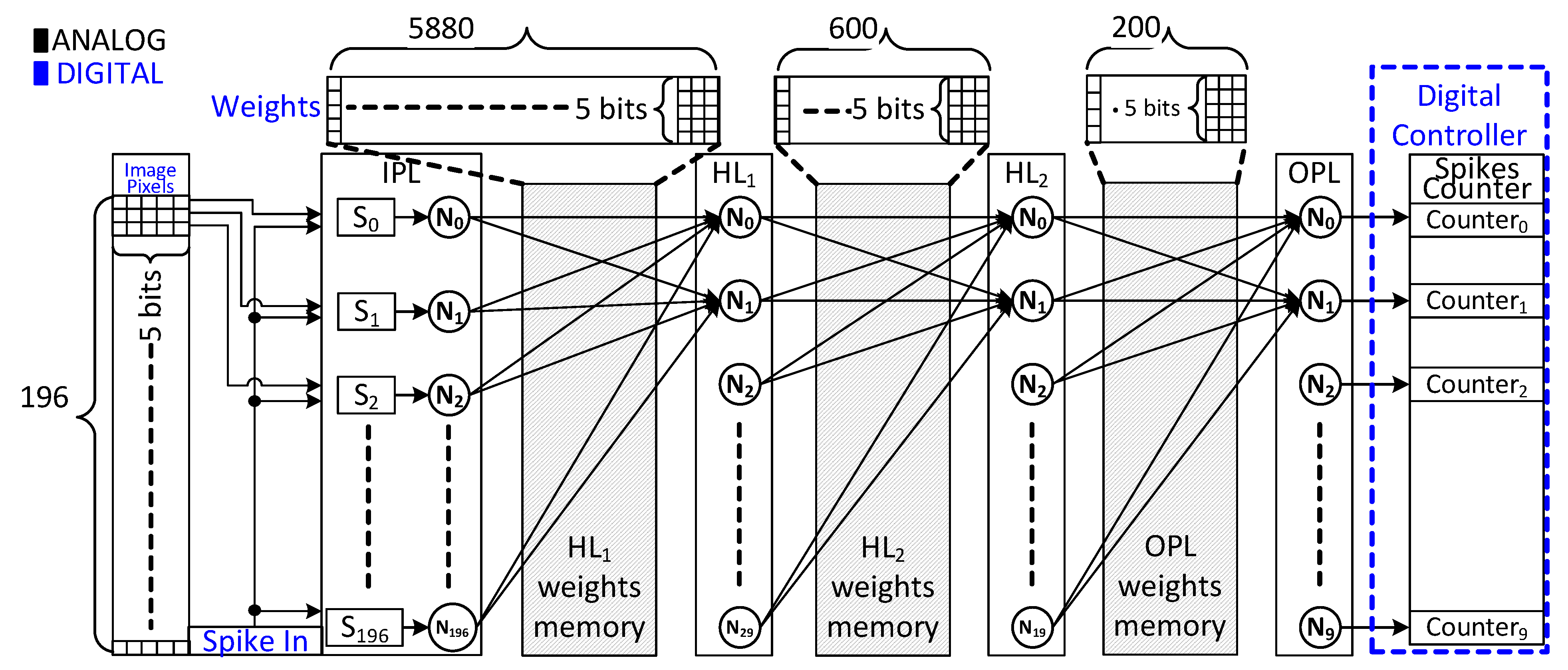

2. Neural Network Architecture

2.1. Spiking Neural Network Model

2.2. Leaky Integrate and Fire Model

2.3. Optimization of SNN Architecture

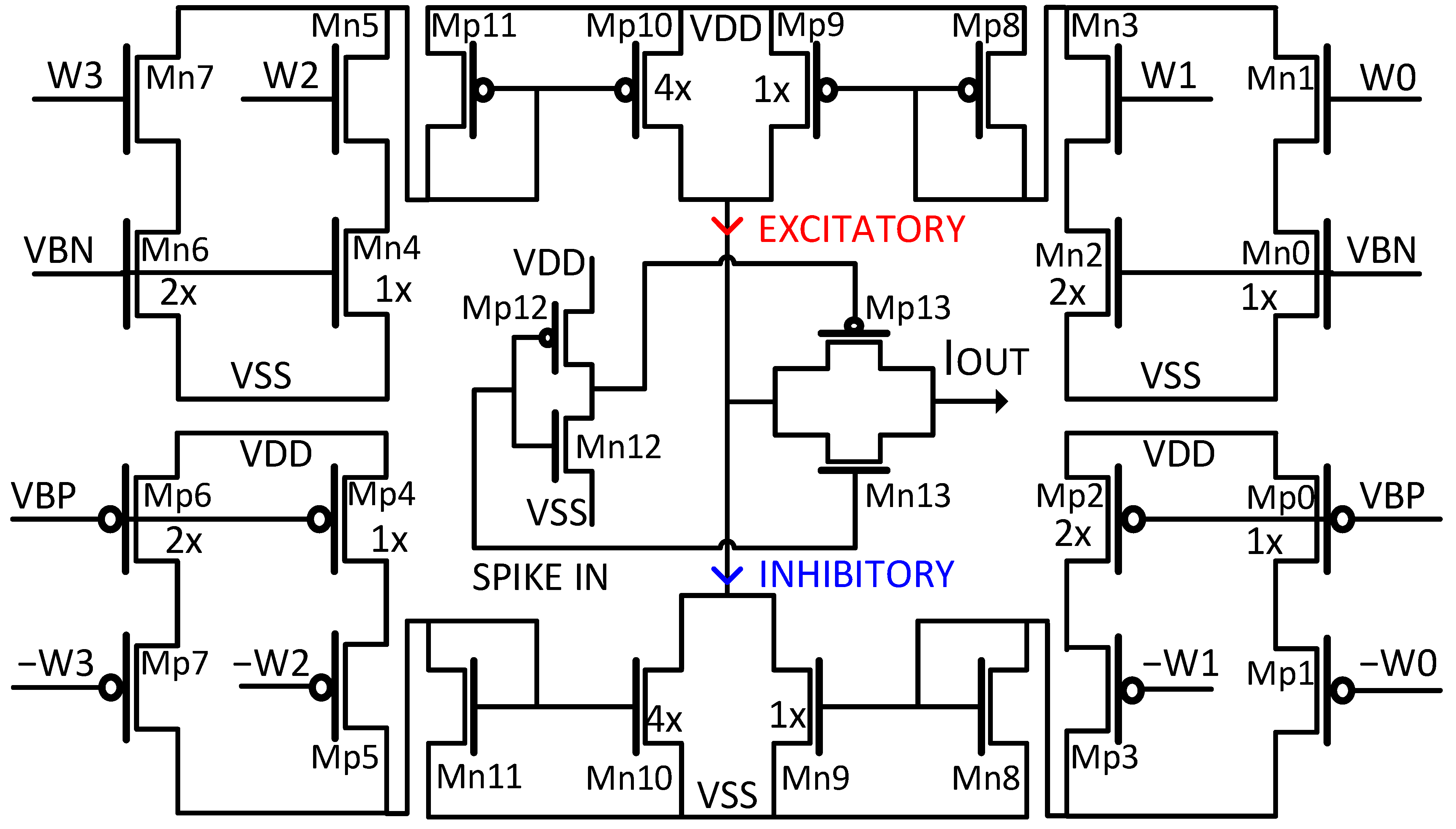

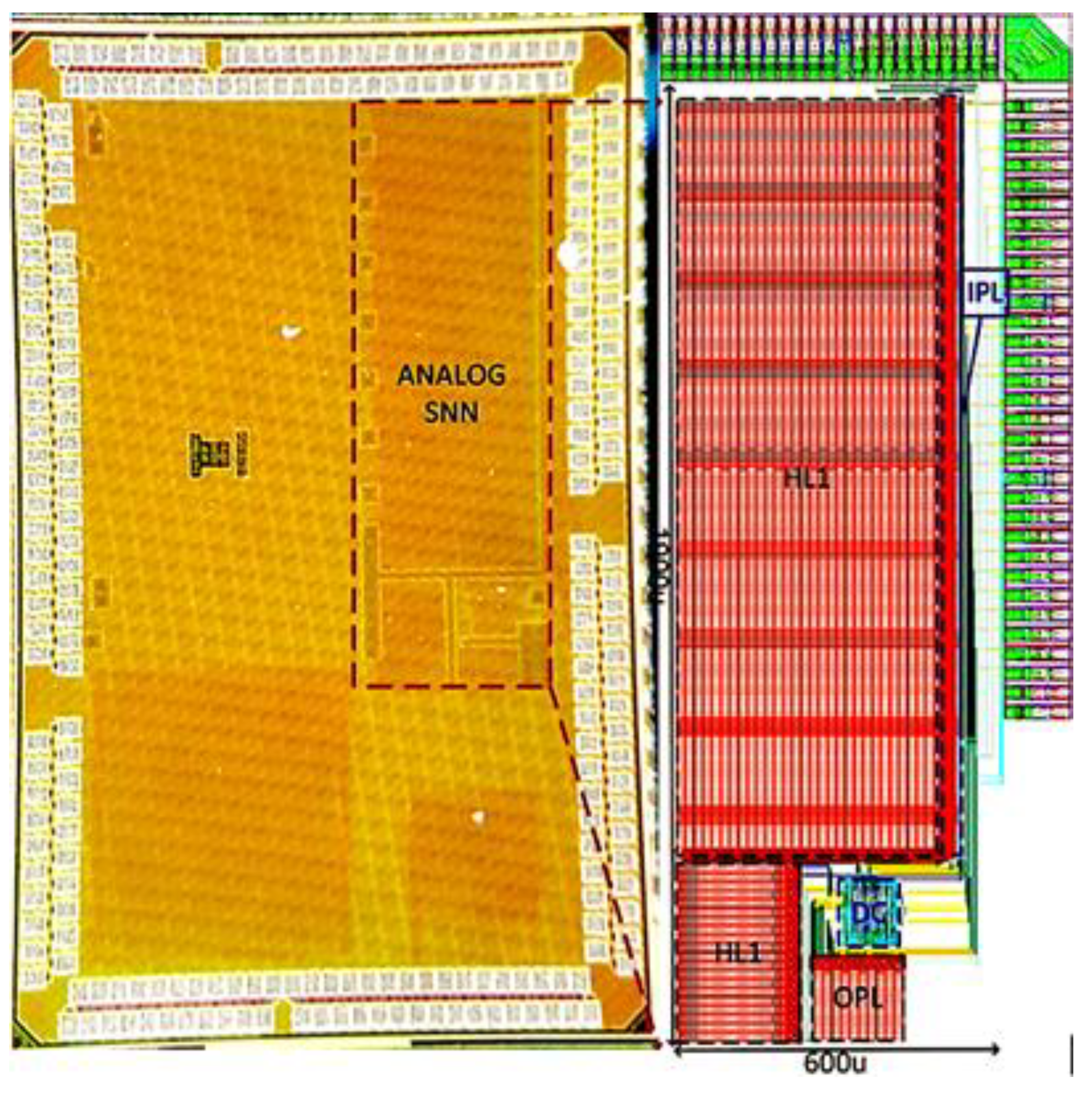

3. Implementation of SNN SoC

3.1. Analog SNN SoC Implementation

3.2. Digital SNN SoC Implementation

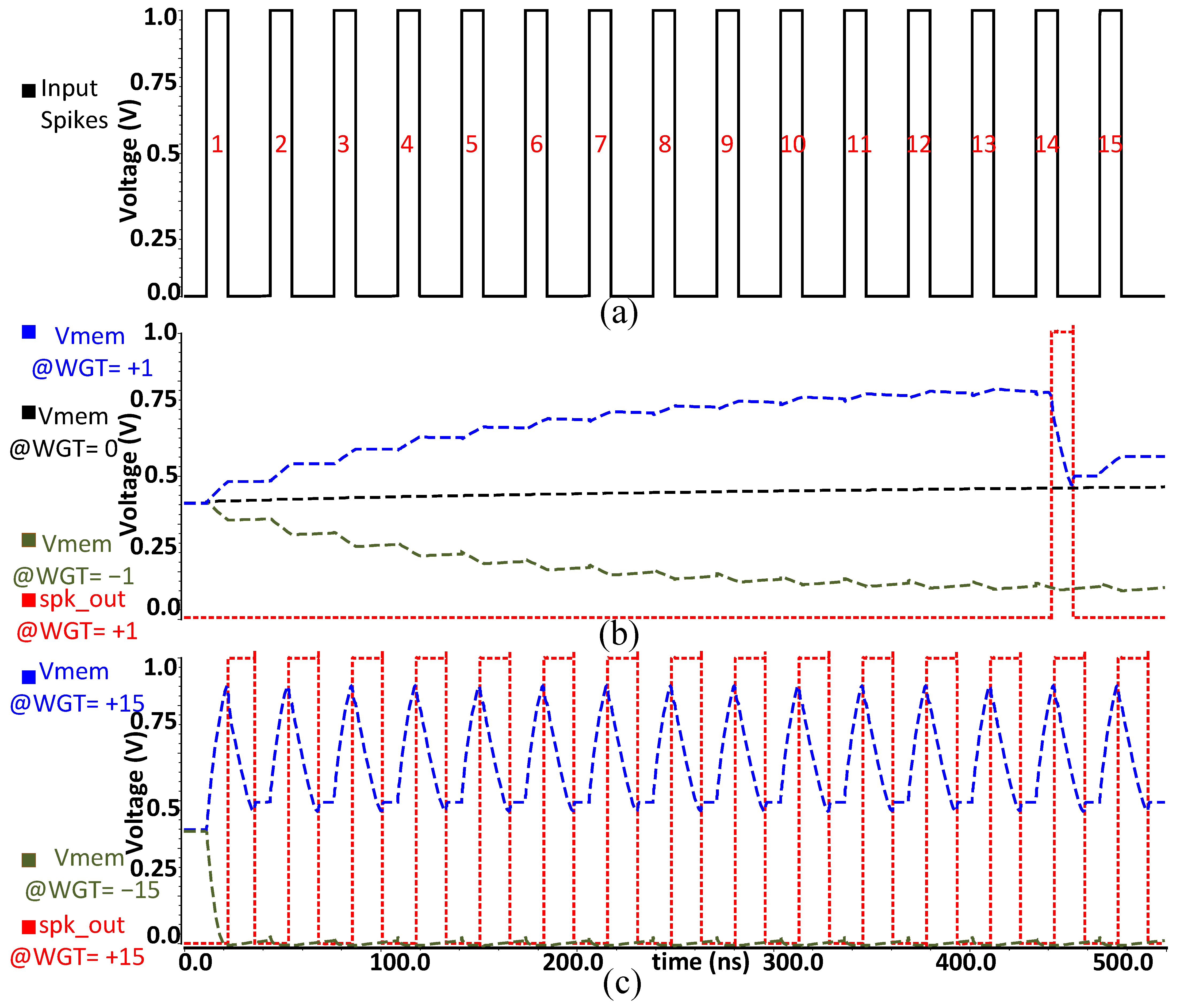

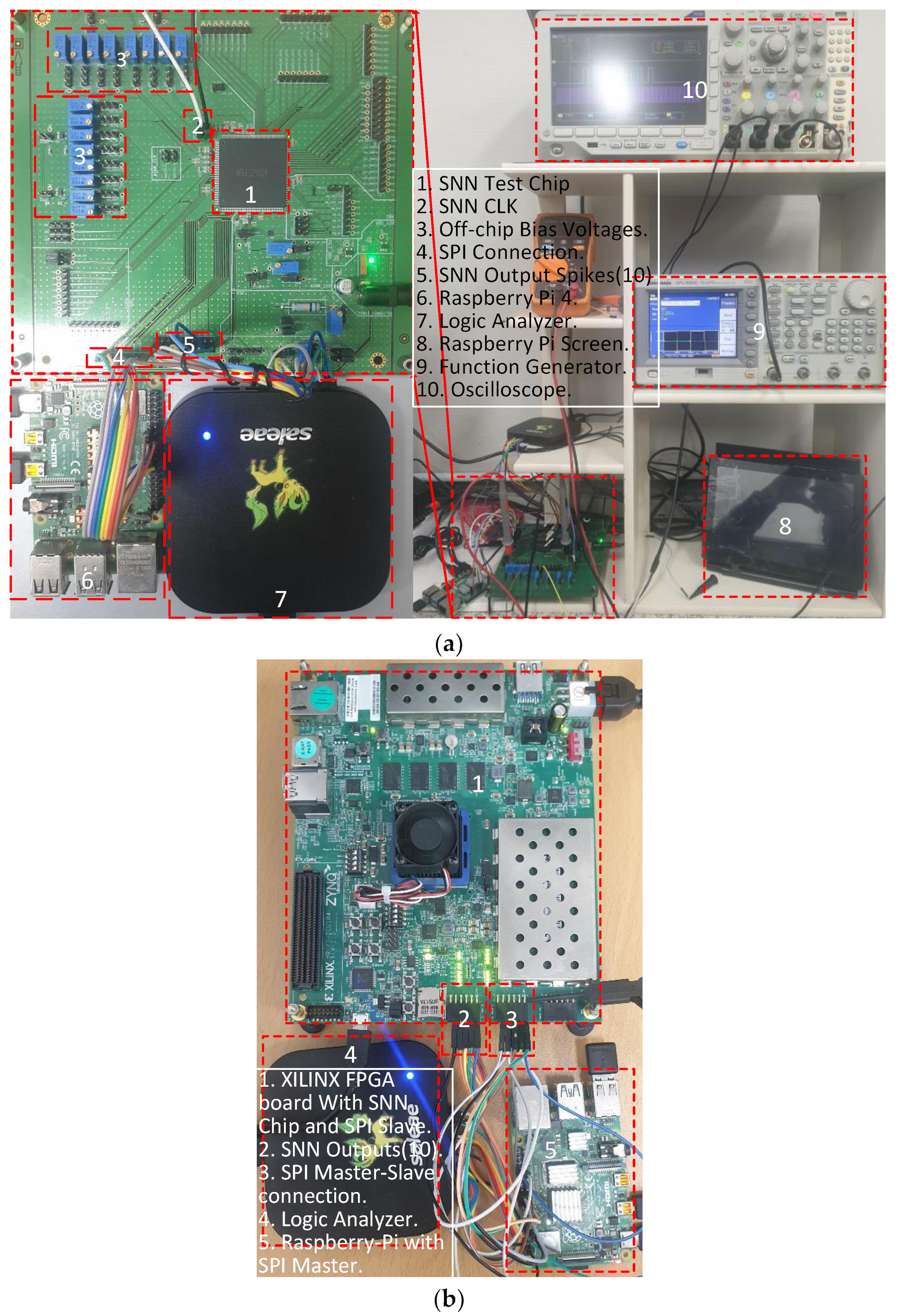

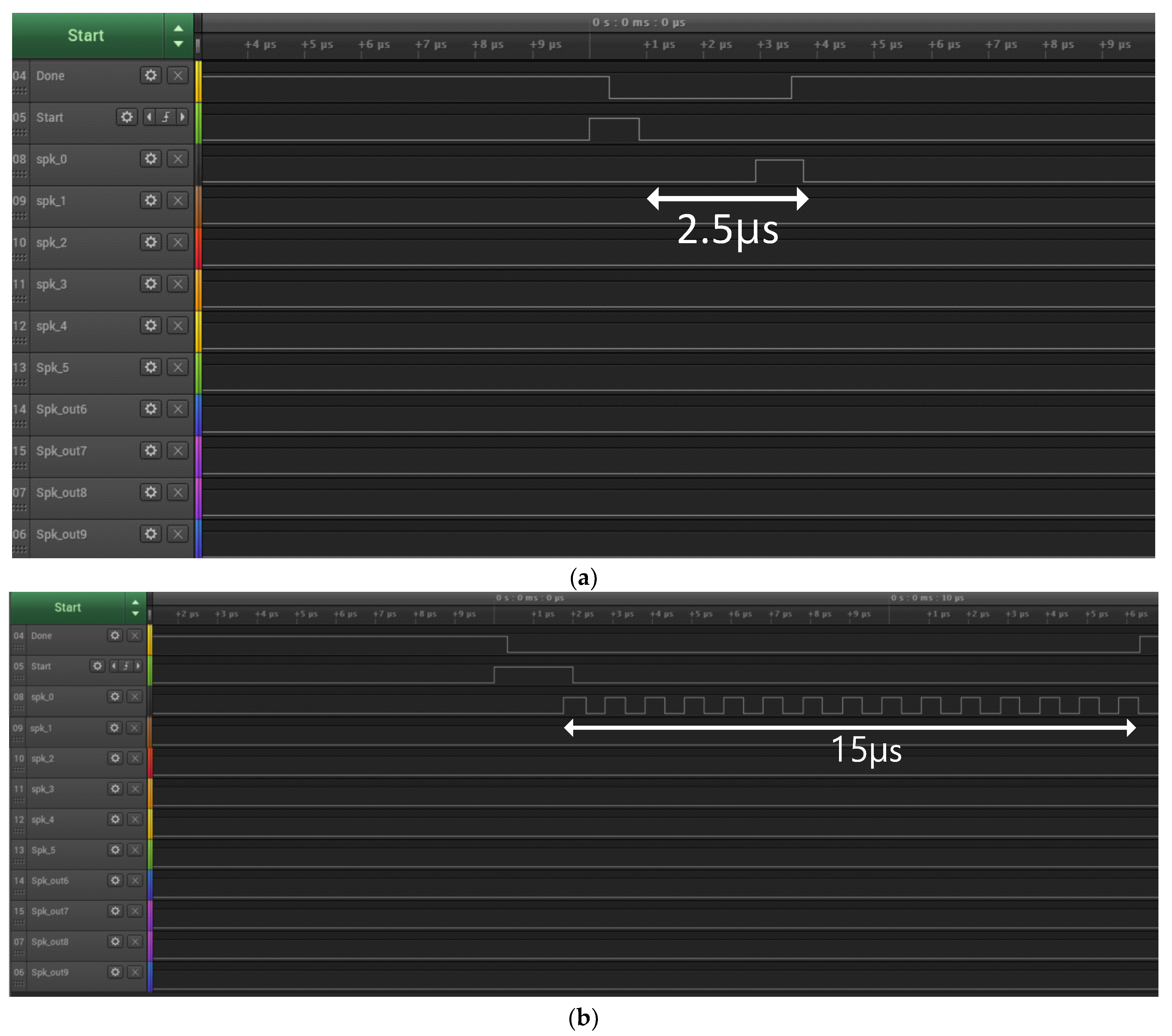

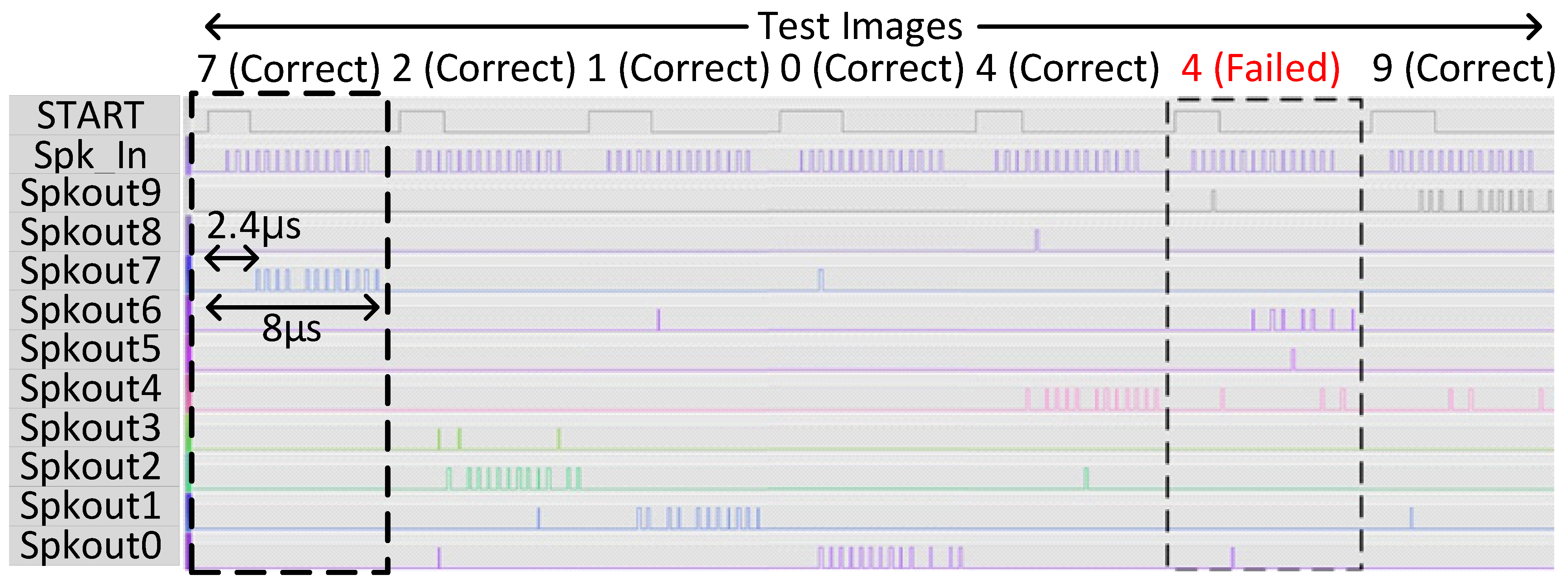

4. Measurement Results

4.1. Analyzing Analog SNN

4.2. Comparison with Digital SNN Chip

5. Performance Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Kankanhalli, A.; Charalabidis, Y.; Mellouli, S. IoT and AI for smart government: A research agenda. Gov. Inf. Q. 2019, 36, 304–309.

- [2] Mead, C. Neuromorphic electronic systems. Proc. IEEE 1990, 78, 1629–1636.

- [3] Prezioso, M.; Merrikh-Bayat, F.; Hoskins, B.D.; Adam, G.C.; Likharev, K.K.; Strukov, D.B. Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 2015, 521, 61–64.

- [4] Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in NIPS 25, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- [5] Lee, K.; Park, J.; Yoo, H. A low-power, mixed-mode neural network classifier for robust scene classification. J. Semicond. Technol. Sci. 2019, 19, 129–136.

- [6] von Neumann, J. First draft of a report on the EDVAC. IEEE Ann. Hist. Comput. 1993, 15, 27–75.

- [7] Meier, K. Special report: Can we copy the brain?—The brain as computer. IEEE Spectr. 2017, 54, 28–33.

- [8] Mead, C. Analog VLSI and Neural Systems, 1st ed.; Addison-Wesley: Reading, MA, USA, 1989.

- [9] Kim, S.; Kim, H.; Hwang, S.; Kim, M.-H.; Chang, Y.-F.; Park, B.-G. Analog synaptic behavior of a silicon nitride memristor. ACS Appl. Mater. Interfaces 2017, 9, 40420–40427.

- [10] Miyashita, D.; Kousai, S.; Suzuki, T.; Deguchi, J. Time-Domain Neural Network: A 48.5 TSOp/s/W neuromorphic chip optimized for deep learning and CMOS technology. In Proceedings of the IEEE Asian SSC Conference, Toyama, Japan, 7–9 November 2016; pp. 25–28.

- [11] Aamir, S.A.; Stradmann, Y.; Müller, P.; Pehle, C.; Hartel, A.; Grübl, A.; Schemmel, J.; Meier, K. An Accelerated LIF Neuronal Network Array for a Large-Scale Mixed-Signal Neuromorphic Architecture. IEEE TCAS I Regul. Pap. 2018, 65, 4299–4312.

- [12] Li, C.; Belkin, D.; Li, Y.; Yan, P.; Hu, M.; Ge, N.; Jiang, H.; Montgomery, E.; Lin, P.; Wang, Z.; et al. Efficient and self-adaptive in-situ learning in multilayer memristor neural networks. Nat. Commun. 2018, 9, 2385.

- [13] Asghar, M.S.; Arslan, S.; Kim, H. Current multiplier based synapse and neuron circuits for compact SNN chip. In Proceedings of the IEEE ISCAS, Daegu, Republic of Korea, 22–28 May 2021; pp. 1–4.

- [14] Asghar, M.S.; Arslan, S.; Kim, H. A Low-Power Spiking Neural Network Chip Based on a Compact LIF Neuron and Binary Exponential Charge Injector Synapse Circuits. Sensors 2021, 21, 4462.

- [15] Camuñas-Mesa, L.A.; Linares-Barranco, B.; Serrano-Gotarredona, T. Neuromorphic Spiking Neural Networks and Their Memristor-CMOS Hardware Implementations. Materials 2019, 12, 2745.

- [16] Jolivet, R.; Rauch, A.; Lüscher, H.-R.; Gerstner, W. Integrate-and-fire models with adaptation are good enough: Predicting spike times under random current injection. In Proceedings of the NIPS 18, Vancouver, BC, Canada, 5–8 December 2005; pp. 595–602. Available online: https://proceedings.neurips.cc/paper/2005 (accessed on 30 March 2023).

- [17] Izhikevich, E.M. Simple model of spiking neurons. IEEE Trans. Neural Netw. 2003, 14, 1569–1572.

- [18] Al-Hamid, A.A.; Kim, H. Optimization of Spiking Neural Networks Based on Binary Streamed Rate Coding. Electronics 2020, 9, 1599.

- [19] Indiveri, G.; Chicca, E.; Douglas, R. A VLSI Array of Low-Power Spiking Neurons and Bistable Synapses with Spike-Timing Dependent Plasticity. IEEE Trans. Neural Netw. 2006, 17, 211–221.

| SNN Chip | Area (mm²) | Energy/Spike (nJ) | Energy/Image (nJ) |
|---|---|---|---|
| Analog SNN | 0.96 | 1.325 | 261 |
| Digital SNN | 0.75 | 4.660 | 936 |
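The analog-versus-digital comparison is easiest to read as ratios. The short Python sketch below recomputes them directly from the table values; the `CHIPS` dictionary and the `digital_to_analog` helper are illustrative assumptions for this example, not part of the authors' design flow.

```python
# Figures transcribed from the analog vs. digital SNN table above.
CHIPS = {
    "analog": {"area_mm2": 0.96, "energy_per_spike_nJ": 1.325, "energy_per_image_nJ": 261.0},
    "digital": {"area_mm2": 0.75, "energy_per_spike_nJ": 4.660, "energy_per_image_nJ": 936.0},
}


def digital_to_analog(metric: str) -> float:
    """Hypothetical helper: digital/analog ratio for one metric (> 1 means digital is larger)."""
    return CHIPS["digital"][metric] / CHIPS["analog"][metric]


spike_ratio = digital_to_analog("energy_per_spike_nJ")  # about 3.52
image_ratio = digital_to_analog("energy_per_image_nJ")  # about 3.59
area_ratio = 1 / digital_to_analog("area_mm2")          # analog/digital, about 1.28

print(f"Energy per spike: digital / analog = {spike_ratio:.2f}")
print(f"Energy per image: digital / analog = {image_ratio:.2f}")
print(f"Area: analog / digital = {area_ratio:.2f}")
```

Read this way, the analog chip spends roughly 3.5 times less energy per spike and per image while occupying about 1.28 times the silicon area of the digital chip.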

| Parameters | [11] | [5] | [19] | [14] | This Work |
|---|---|---|---|---|---|
| CMOS technology (nm) | 65 | 130 | 800 | 180 | 65 |
| Architecture | Analog | Analog NNC | Mixed-Mode | Analog | Analog |
| Classifier type | SNN | MLP/RBFN | SNN | SNN | SNN |
| Neuron model | LIF | - | LIF | LIF | LIF |
| Neuron cell area (μm²) | 2352 | 68,400 | - | 2022.7 | 228 |
| Neuron cell power (μW) | 14.4 | 723 | - | 25 | 3 |
| Chip area (mm²) | 3.6 | 0.140 | 1.6 | 3.6 | 0.96 |
| Power | 48.62 mW | 2.20 mW | 40 μW | 1.06 mW | 530 μW |
| Energy per spike (pJ) | 790 | - | 900 | 900 | 1325 |
| MNIST accuracy (%) | - | 92 | - | 94.60 | 96.56 |
| Complexity (total no. of weights) | 1024 | 750 | 256 | 3311 | 6680 |
| Area η (complexity/area, weights/mm²) | 284.5 | 5360 | 160 | 920 | 6958 |
| Power η (complexity/power, weights/mW) | 21.06 | 341 | - | 3123 | 12,603 |
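The last two rows of the comparison table are derived figures: Area η divides the total number of weights by chip area, and Power η divides it by power. The Python sketch below recomputes both and checks them against the reported values within a 0.5% relative tolerance, which absorbs the table's rounding. It assumes power is read in mW, so the "40 μ" and "530 μ" entries become 0.040 mW and 0.530 mW; the `WORKS` data layout and the `efficiency` and `check` functions are hypothetical helpers written only for this illustration. Because the table gives no Power η for [19], that one comparison is skipped.

```python
# Values transcribed from the comparison table above.
# Assumption: power is expressed in mW ("40 μ" -> 0.040 mW, "530 μ" -> 0.530 mW).
# None marks a Power η entry that the table reports as "-".
WORKS = {
    "[11]": {"weights": 1024, "area_mm2": 3.6, "power_mW": 48.62, "area_eta": 284.5, "power_eta": 21.06},
    "[5]": {"weights": 750, "area_mm2": 0.140, "power_mW": 2.20, "area_eta": 5360, "power_eta": 341},
    "[19]": {"weights": 256, "area_mm2": 1.6, "power_mW": 0.040, "area_eta": 160, "power_eta": None},
    "[14]": {"weights": 3311, "area_mm2": 3.6, "power_mW": 1.06, "area_eta": 920, "power_eta": 3123},
    "This Work": {"weights": 6680, "area_mm2": 0.96, "power_mW": 0.530, "area_eta": 6958, "power_eta": 12603},
}


def efficiency(weights: int, area_mm2: float, power_mW: float) -> tuple[float, float]:
    """Return (Area η, Power η), i.e. weights per mm² and weights per mW."""
    return weights / area_mm2, weights / power_mW


def check(tol: float = 0.005) -> dict[str, tuple[float, float, bool]]:
    """Recompute both metrics and flag whether they match the table within a relative tolerance."""
    results = {}
    for name, w in WORKS.items():
        area_eta, power_eta = efficiency(w["weights"], w["area_mm2"], w["power_mW"])
        area_ok = abs(area_eta - w["area_eta"]) / w["area_eta"] <= tol
        # Skip the Power η comparison where the table gives no value.
        power_ok = w["power_eta"] is None or abs(power_eta - w["power_eta"]) / w["power_eta"] <= tol
        results[name] = (area_eta, power_eta, area_ok and power_ok)
    return results


for name, (area_eta, power_eta, ok) in check().items():
    print(f"{name:<10} Area eta = {area_eta:8.1f}/mm^2  Power eta = {power_eta:8.1f}/mW  matches: {ok}")
```

Every reported entry agrees with the recomputed value, which also confirms that the "This Work" power figure is 0.53 mW.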

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Asghar, M.S.; Arslan, S.; Al-Hamid, A.A.; Kim, H. A Compact and Low-Power SoC Design for Spiking Neural Network Based on Current Multiplier Charge Injector Synapse. Sensors 2023, 23, 6275. https://doi.org/10.3390/s23146275

Asghar MS, Arslan S, Al-Hamid AA, Kim H. A Compact and Low-Power SoC Design for Spiking Neural Network Based on Current Multiplier Charge Injector Synapse. Sensors. 2023; 23(14):6275. https://doi.org/10.3390/s23146275

Chicago/Turabian Style: Asghar, Malik Summair, Saad Arslan, Ali A. Al-Hamid, and HyungWon Kim. 2023. "A Compact and Low-Power SoC Design for Spiking Neural Network Based on Current Multiplier Charge Injector Synapse" Sensors 23, no. 14: 6275. https://doi.org/10.3390/s23146275

APA Style: Asghar, M. S., Arslan, S., Al-Hamid, A. A., & Kim, H. (2023). A Compact and Low-Power SoC Design for Spiking Neural Network Based on Current Multiplier Charge Injector Synapse. Sensors, 23(14), 6275. https://doi.org/10.3390/s23146275