1. Introduction

Motion capture systems are widely used to track human movement and provide important scientific data. Among the technologies that capture body motion, marker-based motion capture has been the standard and the most spatiotemporally accurate, although it requires attaching physical markers. Markerless video-based motion capture is a promising alternative for situations where physical contact may not be possible [1,2,3]. It works well for classifying movements but does not yet match the spatial precision of marker systems.

A third situation has received little discussion: cases in which a hybrid of the two approaches provides the best performance, such as ball sports, where active markers cannot be placed on the ball and passive markers may interfere with its handling and flight. In such tasks, humans interact with physical objects, and thus, both human and object movements must be recorded precisely. While coarse video-based estimation of human movements has been used in sports such as basketball [4], volleyball [5], soccer [6], and baseball [7], our study of the neural dynamics and biomechanics of juggling needed the high spatiotemporal precision of an active marker-based system. The ball motion therefore had to be tracked separately using video, which required developing a coregistration system that could be applied to any hybrid motion capture scenario.

Specifically, we apply the hybrid system to understanding the motor control of juggling, a new paradigm for the computational neuroscience of complex motor skill learning that builds on the simpler tasks that have dominated the field. Its study is possible via Mobile Brain/Body Imaging (MoBI) [8], which uses freely moving participants and wireless EEG to avoid the physical constraints of stationary brain imaging methods. Three-ball cascade juggling is a basic juggling pattern that has been extensively studied behaviorally [9,10,11] and that we propose as a new model for studying the neural dynamics of motor control during complex skill learning. Juggling involves the cyclic movement of hands and balls; the ball cycle is commonly divided into a free-flight phase and an in-hand phase (commonly termed the dwell or hold phase). The ratio between the amount of time the hand holds a ball and the amount of time the hand is empty has been referred to as the dwell ratio, which has been treated as an important parameter in the analysis of juggling. A second critical parameter for our neural analysis is the location of the ball’s apex relative to the hands, a key descriptor of juggling stability that is needed to understand the neural control of throws as well as how the visual estimation of ball trajectory influences the motor control of the catch [12,13,14]. Thus, it is imperative to accurately estimate the movement of the hands and balls in order to define the catch, throw, and apex positions of the ball in the world coordinate space of the hands. In this experiment, the body location is collected in a real-world coordinate system using motion capture equipment, whereas the positions of the balls are captured on video only, and thus, there is no ground truth for the ball position. The true hand position is also unknown, which necessitates a way to reconstruct it jointly with the unknown ball position. Beyond our application to juggling, we expect use cases in areas that are already active topics of research, such as the relation between a professional tennis player’s serve and ball velocity [15] or between elbow stress and ball trajectory [16]. These are all scenarios in which information about either object kinematics or body kinematics alone is not sufficient.

In this study, we seek to develop a practical system for integrating commodity video cameras into a marker-based motion capture system that relies neither on a priori calibration nor on precise knowledge of the camera position and lens characteristics. Such a system could be computationally efficient enough to learn the coregistration in real time, which is especially important when the participant is moving relative to the video image plane. When objects are alternately held and thrown, two primary constraints are available for coregistration: a positional constraint during holding (ball and hand positions coincide) and gravitational dynamics during ball flight. Several additional challenges of motion capture during juggling had to be overcome. Ball position estimates from the video may be inaccurate because of partial occlusion of the ball by the hand. In addition, the actual hand position could not be measured because active markers on the hand would be occluded, and thus, hand position was estimated from forearm and wrist markers. Our coregistration method had the secondary benefit of enabling us to estimate the correct hand position, including the wrist bending movements that are important for throwing and catching.

Here, we compare multiple methods for the coregistration of video and marker-based motion capture, which differ in their use of hand positions and gravitational constraints. Coregistration methods were evaluated by (1) the difference (error) between the estimated hand position and the estimated ball position during the hold phase and (2) the vertical acceleration of the balls. We chose linear methods for simplicity, assuming that the video plane was spatially linear, but these methods could easily be generalized to use nonlinear scaling. The proposed algorithms differed in their use of physical constraints and assumptions to reconstruct the ball trajectory from video, producing trajectories in body space. We provide guidance on the optimal reconstruction of object positions from video that is widely applicable to hybrid video/motion capture scenarios. We expect this discussion to be useful to researchers who need to use marker-based and vision-based motion capture simultaneously, even in a limited hardware environment.

2. Methods

2.1. Data Acquisition

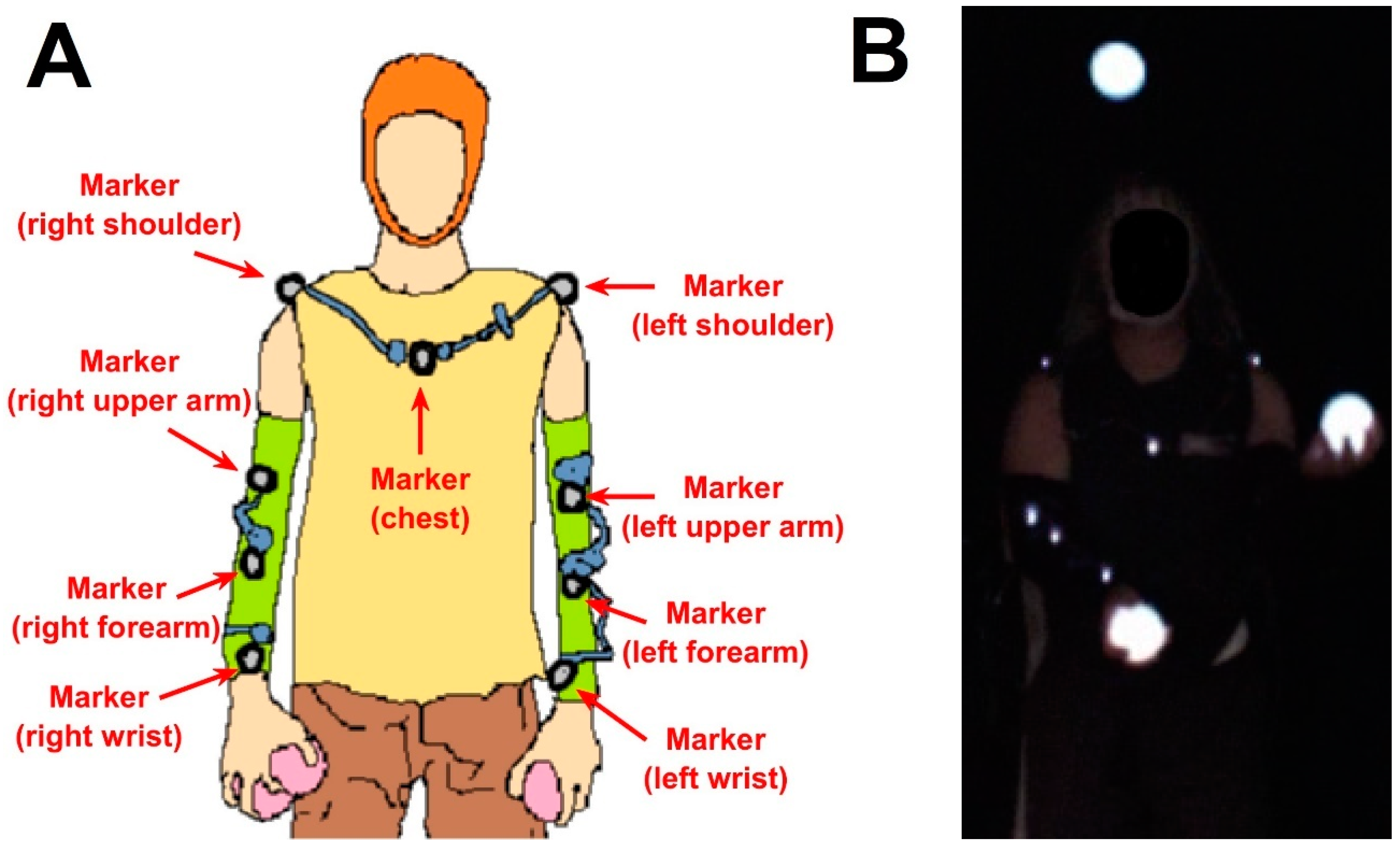

We recruited healthy subjects who could already juggle. Their age was 28.69 ± 5.66 years (mean ± standard deviation). The subjects performed three-ball cascade juggling with internally illuminated balls with a diameter of approximately 7 cm for five 10 min sessions with a 3 min break between sessions. All participants provided informed consent, and this study was approved by the IRB of UCSD and carried out in compliance with the Declaration of Helsinki. We used a PhaseSpace motion capture system (PhaseSpace, San Leandro, CA, USA) with a sampling rate of 480 Hz to track nine markers on the left/right shoulder, left/right upper arm, left/right forearm, left/right wrist, and chest, as shown in Figure 1A. Each marker was a 1 LED emitter mounted on a small circuit board with dimensions 20 mm × 14 mm × 3.2 mm. As the balls could not be instrumented with markers, we recorded video of the illuminated balls using a Flea2 video camera (Point Grey, BC, Canada) with a frame rate of 60 Hz (Figure 1B). The motion capture system cameras had a resolution of 3600 × 3600, and the video camera had a resolution of 640 × 480. In addition, participants wore an EEG cap to record EEG data, which were not used in the present study. We used the Lab Streaming Layer (LSL) protocol for temporal synchronization of all recordings [17].

2.2. Preprocessing

For motion capture data, juggling trials were first isolated, and all coordinate signals were manually inspected to eliminate abnormal discontinuous points (noise) and to fill short missing intervals using spline interpolation. Missing intervals that lasted over 0.2 s were not corrected and were discarded from further processing. Then, using the interpolation function interp1() in MATLAB, the motion capture data were downsampled and aligned with the 60 Hz video sample times for the purpose of finding the ball coregistration transformation. Ball positions on a pixel scale were estimated from the video frames by applying an automatic circle-finding algorithm based on the circular Hough transform [18], which can detect circles of varying size simultaneously. Video coordinates ran in the opposite direction to the motion capture coordinates and were, thus, first inverted. As the recorded video contained only two-dimensional data in a plane approximately parallel to the body plane, it captured only vertical and lateral movements (see Figure 2). The ball depth position was reconstructed by adopting the hand depth position during the hold phase and, during the flight phase, by linearly interpolating between the ball depth at throw and at catch, assuming a constant horizontal ball speed during flight. By construction, this yields real-world scaling, and thus, ball depth was not further considered in coregistration.
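As an illustration of the resampling and depth reconstruction steps, the following is a minimal Python sketch rather than the MATLAB code used in the study. The function names `interp_linear` and `flight_depth` are hypothetical, linear interpolation is assumed for the resampling (the default behavior of interp1), and the toy ramp signal stands in for a real marker coordinate.

```python
from bisect import bisect_right

def interp_linear(t_src, x_src, t_query):
    """Linearly interpolate the samples (t_src, x_src) at the times in t_query."""
    out = []
    for t in t_query:
        # Source sample at or just before t, clamped so that i + 1 is valid.
        i = min(max(bisect_right(t_src, t) - 1, 0), len(t_src) - 2)
        w = (t - t_src[i]) / (t_src[i + 1] - t_src[i])
        out.append((1 - w) * x_src[i] + w * x_src[i + 1])
    return out

def flight_depth(z_throw, z_catch, n_frames):
    """Ball depth over a flight of n_frames frames, assuming constant depth speed."""
    step = (z_catch - z_throw) / (n_frames - 1)
    return [z_throw + step * k for k in range(n_frames)]

# Toy example: a 480 Hz mocap signal resampled at 60 Hz video frame times.
t_mocap = [k / 480 for k in range(481)]
x_mocap = [2.0 * t for t in t_mocap]
t_video = [k / 60 for k in range(61)]
x_at_video = interp_linear(t_mocap, x_mocap, t_video)
```

During the hold phase no interpolation is needed in this scheme, since the ball depth is simply copied from the hand depth at the same video frame.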

To define the body plane, we tracked a rigid body formed by the coordinates of the two shoulder markers and the chest marker. The position and angle of the body plane relative to the video image plane can be used to trigonometrically correct ball coordinates so that they lie in the body plane (Figure 2A). In practice, in this experiment, participants stood on a clear marker, and thus, movement of the body relative to the camera was minimal. The mean ± standard deviation of translational movement across subjects was 1.57 ± 0.62 [cm], 0.54 ± 0.23 [cm], and 2.16 ± 0.66 [cm] for the lateral, vertical, and depth coordinates, respectively. These shifts, when projected to the video plane, were less than a quarter of the ball’s diameter. The standard deviation of the body-to-video-plane angle was less than one degree (0.24 ± 0.13 [degree]) and similarly of no importance. Since all of these values were small, we neglected their variation in further analysis. We also ignored lens distortions and assumed that pixel space was linear. In situations where these assumptions do not hold, these factors could readily be accounted for via a video de-warping transformation followed by a simple trigonometric, time-varying rotation of ball coordinates into the body plane prior to proceeding with coregistration.

It was not possible to track the hand using active markers due to occlusion of palm-side markers by the ball and invisibility of markers on the back of the hand. While this could be solved in future studies using inertial sensors or by placing mocap cameras below the hands, it was a limitation inherent in these data, and thus, recovery of hand position was a secondary aim of our method. We proceeded by estimating the hand position from the wrist and forearm markers, extending the line connecting the two markers by 10 cm (the average distance from the wrist marker to the palm center, as shown in Figure 2B). Our simple estimate of hand position neglects flexions/extensions, which are typically on the order of 5 cm, or about a ball diameter, in the vertical direction, as well as lateral deviations, which are generally smaller, on the order of 2 cm. Thus, most of the remaining variation seen after the coregistration can be reasonably ascribed to wrist movements. The balls and hands are, thus, mutually constraining, which provides a method not only to coregister the balls into the body space but also to estimate the true hand position, incorporating wrist bending.
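The hand estimate described above amounts to extrapolating along the forearm-to-wrist direction. A minimal sketch follows, assuming marker positions are given as coordinate lists in cm; the function name `estimate_hand` and its `extension_cm` parameter are hypothetical names chosen for illustration.

```python
import math

def estimate_hand(forearm, wrist, extension_cm=10.0):
    """Extend the forearm-to-wrist line past the wrist by extension_cm."""
    direction = [w - f for f, w in zip(forearm, wrist)]
    length = math.sqrt(sum(d * d for d in direction))
    if length == 0.0:
        raise ValueError("forearm and wrist markers coincide")
    # Step along the unit direction vector, starting from the wrist marker.
    return [w + extension_cm * d / length for w, d in zip(wrist, direction)]

# Forearm marker at the origin, wrist marker 20 cm further along the y axis.
hand = estimate_hand([0.0, 0.0, 0.0], [0.0, 20.0, 0.0])
```

Because the extension follows the forearm axis only, any wrist flexion or lateral deviation is absent from this estimate and shows up as residual ball-to-hand error.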

To determine the hold and flight phases of the balls, throw and catch events were manually inserted based on inspection of the video and verified by a second observer. The hold phase was defined as the interval from catch to throw, and the flight phase was defined as the interval from throw to catch. As the frame rate was 60 Hz, the times of catch and throw could each be wrong by a maximum of 16.7 ms. We did not interpolate the exact time between frames, which could maximally distort the duration of the hold and flight phases by approximately 6.2% (the phase duration was approximately 520 ms, averaged across subjects). Since misalignment of events could misclassify the phase of motion samples near the throw and catch, we removed 3 samples (~50 ms) at the beginning and end of each phase. This had the side effect of eliminating any frames in which ball occlusion by the hand may have led to ball position estimation errors.
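The segmentation and trimming can be sketched as follows. This is an illustrative Python sketch, not the study's code: the event format (a frame index paired with a label, for one ball) and the function name `segment_phases` are assumptions, and each phase is taken to include both of its bounding event frames before trimming.

```python
def segment_phases(events, trim=3):
    """Split one ball's event list into trimmed hold and flight frame ranges.

    events: list of (frame_index, "catch" or "throw"), sorted by frame.
    trim: number of frames removed at the start and end of each phase.
    """
    phases = {"hold": [], "flight": []}
    for (f0, kind0), (f1, kind1) in zip(events, events[1:]):
        if kind0 == "catch" and kind1 == "throw":
            name = "hold"      # ball is in the hand
        elif kind0 == "throw" and kind1 == "catch":
            name = "flight"    # ball is in free flight
        else:
            continue           # repeated label: skip the malformed interval
        frames = list(range(f0 + trim, f1 - trim + 1))
        if frames:
            phases[name].append(frames)
    return phases

# Toy example at 60 Hz: a hold of about 520 ms followed by a flight.
phases = segment_phases([(0, "catch"), (31, "throw"), (62, "catch")])
```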

2.3. Coregistration Methods

Ball coordinates derived from the video data on the lateral and vertical axes are on a pixel scale, and the aim of coregistration is to match these to the real-world scale. Given the linearity assumptions justified in the previous section, the units can be converted using a linear equation. A linear mapping also makes the methods easy to compare and readily generalizable.

As there is no a priori best method for reconstructing the ball position from video, we explored the following task-dependent constraints: (1) the ball position during the hold phase should be identical to the hand position; (2) during free flight, the ball is subject to a gravitational acceleration of approximately 9.8 [m/s²] at our latitude [19]. The first constraint was only approximate, given the need to estimate the hand position discussed above, and thus, a secondary outcome of coregistration is to recover the hand position. Prior to registration, ball and hand coordinate data were segmented into hold phase segments for each hand and flight phase segments for each ball. These were separately concatenated. This was justified by our determination that body movement relative to the camera was a negligible source of error, so we could fit the entire recording at once. In situations where body movement is larger, the coregistration fit would be preceded by a transformation of ball coordinates into the 2D plane of the body using continuously time-varying shifts and rotations of the body relative to the camera.

We considered four possible approaches for coregistration, varying in their use of two task constraints (hold vs. flight phases) and whether vertical and lateral axes were scaled uniformly or separately.

(1) Ind: the naïve approach, which minimizes the distance between hand and ball during the hold phase in a linear least squares sense, Independently for the vertical and lateral axes.

(2) L: deriving a Lateral scale designed to match the ball and hand positions at the extreme lateral ends of the movement range, which typically lie near the point of ball catching. This approach reasons that scaling fits are most accurate when using extreme values, as slope errors are greater for points that are more closely spaced. Extreme values were defined as the maximal 2.5% of absolute lateral hand coordinates for both the right and left hand. The mean of the ball position was matched to the mean of the hand position.

(3) V: deriving a Vertical scale such that the vertical acceleration of the ball while in flight is 9.8 [m/s²] and applying this same scale to the horizontal axis.

(4) LV: combining the results obtained from (2) for the lateral axis scaling and from (3) for the vertical axis scaling.

Note that Ind and LV (methods 1 and 4) permit different scaling for the vertical and horizontal axes, while L and V (methods 2 and 3) use a common scale factor for both axes. Satisfying the theoretical acceleration of gravity constraint using the ball flight phase is trivial, but there are various ways to try to satisfy the hold phase constraint. Thus, we applied least squares fitting to take advantage of the entire hold-phase information (Ind, method 1).
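To make the four approaches concrete, the sketch below shows one way the scale factors could be computed in Python. It is an interpretation under stated assumptions, not the authors' implementation: all function names are hypothetical, gains are expressed in cm per pixel (the reciprocal of the pixel/cm scales reported in the Results), the L method is read as a slope between the mean positions of the most extreme samples on each side, the pixel acceleration is taken from second differences rather than a trajectory fit, and the video vertical axis is assumed to be already inverted so that up is positive.

```python
from statistics import mean

G_CM_S2 = 980.0  # 9.8 m/s^2 expressed in cm/s^2

def offset_for(gain, ball_px, hand_cm):
    """Offset that matches the mean ball position to the mean hand position."""
    return mean(hand_cm) - gain * mean(ball_px)

def gain_ind(ball_px, hand_cm):
    """Ind: least-squares slope of hand_cm on ball_px for one axis (hold phase)."""
    mb, mh = mean(ball_px), mean(hand_cm)
    sxy = sum((b - mb) * (h - mh) for b, h in zip(ball_px, hand_cm))
    sxx = sum((b - mb) ** 2 for b in ball_px)
    return sxy / sxx

def gain_lateral_extremes(ball_px, hand_cm, frac=0.025):
    """L: slope between the most extreme lateral hold samples on each side."""
    order = sorted(range(len(hand_cm)), key=lambda i: hand_cm[i])
    k = max(1, round(frac * len(order)))
    lo, hi = order[:k], order[-k:]
    d_hand = mean(hand_cm[i] for i in hi) - mean(hand_cm[i] for i in lo)
    d_ball = mean(ball_px[i] for i in hi) - mean(ball_px[i] for i in lo)
    return d_hand / d_ball

def gain_gravity(flights_px, fps=60.0):
    """V: gain that makes the mean vertical flight acceleration equal to -g."""
    acc = []
    for y in flights_px:
        # Central second difference in pixel/s^2 for each interior sample.
        acc += [(y[i + 1] - 2 * y[i] + y[i - 1]) * fps ** 2
                for i in range(1, len(y) - 1)]
    return -G_CM_S2 / mean(acc)

# Toy data generated with a true gain of 1/3 cm per pixel (3 pixel/cm).
hand_x = [float(v) for v in range(-30, 31)]
ball_x = [3.0 * v + 100.0 for v in hand_x]
flight = [3.0 * (300.0 * k / 60 - 490.0 * (k / 60) ** 2) + 50.0 for k in range(30)]

# LV: lateral gain from the hold-phase extremes, vertical gain from gravity.
lv_gains = {"lateral": gain_lateral_extremes(ball_x, hand_x),
            "vertical": gain_gravity([flight])}
```

In this reading, L and V each apply their single gain to both axes, Ind calls `gain_ind` once per axis, and LV keeps the two gains separate.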

The performance of each method in matching the two essential constraints was assessed using a ball–hand distance metric as well as by quantifying the vertical acceleration of the balls during flight. The difference (error) between the estimated hand position and the estimated ball position during the hold phase was calculated as follows:

$$\mathrm{Error} = \frac{1}{T}\sum_{t=1}^{T}\sqrt{\left(X_{hand}(t)-X_{ball}(t)\right)^{2}+\left(Y_{hand}(t)-Y_{ball}(t)\right)^{2}+\left(Z_{hand}(t)-Z_{ball}(t)\right)^{2}}$$

where T means the number of samples in the hold phase for all the trials, and X(t), Y(t), and Z(t) represent the lateral, vertical, and depth positions, with the subscripts denoting the estimated hand position and ball position, respectively. The error was averaged across subjects for group-level comparison. Note that the depth term $Z_{hand}(t)-Z_{ball}(t)$ was always zero in all four methods because of the way the Z coordinate was defined by hand coordinates. The vertical acceleration of the balls was computed by applying a one-sided finite difference twice, using all points of the flight phase after trimming both ends. All computation and statistical tests in the present study were performed using MATLAB R2022b (MathWorks, Inc., Natick, MA, USA).
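Both evaluation metrics can be sketched in a few lines of Python. This sketch assumes that the position error is the mean Euclidean hand-to-ball distance over hold samples and that the one-sided difference is a forward difference whose result is averaged over the flight; the function names `hold_error` and `vertical_acceleration` are hypothetical.

```python
import math

def hold_error(hand, ball):
    """Mean Euclidean hand-ball distance; each sample is an (x, y, z) tuple in cm."""
    return sum(math.dist(h, b) for h, b in zip(hand, ball)) / len(hand)

def vertical_acceleration(y_cm, fps=60.0):
    """Apply a forward difference twice to a flight trajectory, then average."""
    dt = 1.0 / fps
    vel = [(b - a) / dt for a, b in zip(y_cm, y_cm[1:])]
    acc = [(b - a) / dt for a, b in zip(vel, vel[1:])]
    return sum(acc) / len(acc)

# Toy checks: a 5 cm miss on one of two samples, and an ideal parabola in cm.
err = hold_error([(0, 0, 0), (0, 0, 0)], [(3, 4, 0), (0, 0, 0)])
flight = [300.0 * k / 60 - 490.0 * (k / 60) ** 2 for k in range(30)]
acc = vertical_acceleration(flight)
```

For a noise-free parabola the second difference is exact, so `acc` recovers -980 cm/s² here; on real video data the double difference amplifies pixel noise, which is one reason to average over all flight samples.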

3. Results

As the true trajectory of the ball in real-world coordinates and units is unknown, we reconstructed the trajectories with the four proposed methods from coordinates in the video image plane, enabling us to make practical suggestions of the best approach to reconstructing real-world coordinates from the video.

Figure 3 shows an example of the estimated hand trajectory and coregistered ball trajectory during juggling using the proposed methods. Differences between the methods were clearly observed in the lateral registration of the ball and hand during the hold phases and at the ball flight apex in the vertical trajectory.

Figure 4 shows the vertical and lateral scaling factors obtained from the four methods. The mean of the lateral scales across individuals was 2.79, 2.84, 2.96, and 2.84 [pixel/cm] for Ind, L, V, and LV, respectively. The mean of the vertical scales across individuals was 3.38, 2.84, 2.96, and 2.96 [pixel/cm] for Ind, L, V, and LV, respectively. The Ind and LV fits revealed that lateral scales were notably more variable across individuals than vertical scales.

To compare the performance of the four coregistration methods, we first assessed the position error between the ball and hand during the hold phase.

Figure 5 shows the position errors for the four methods for each subject. The mean errors across subjects were 2.75, 3.28, 3.58, and 3.09 [cm] for Ind, L, V, and LV, respectively. The Ind method resulted in the lowest error of the methods and differed significantly from each of the others (t(12) = −3.48, p < 0.01 for the comparison between Ind and L; t(12) = −2.88, p < 0.05 for the comparison between Ind and V; t(12) = −3.02, p < 0.01 for the comparison between Ind and LV). The other comparisons did not show any significant differences.

To establish the relative contribution of errors in the vertical and horizontal axes for each method, we examined the error distribution, as shown in Figure 6. The plot indicates that the vertical error contributed more to the total error than the lateral error, as the mean lies above the diagonal identity line. In addition, while the across-subject variance of V was dominated mainly by lateral error, the across-subject variance of Ind, L, and LV was more balanced and similar, indicating that the L method did not preferentially optimize lateral errors. In contrast, the result for the V method indicated that the gravitational constraint did poorly at fitting the lateral position. As expected, Ind had the best compromise between bias and variance since it merely found the best fit without incorporating other constraints.

For evaluation of vertical axis coregistration accuracy, we measured the acceleration of gravity from the ball flight trajectories estimated by each method (Figure 7). By construction, vertical scaling in V and LV was determined by fitting the flight trajectories to known gravity, so naturally, these fulfilled the constraint without error. The estimate using the Ind method (8.65 [m/s²]) was significantly lower than those of the other methods (t(12) = −3.61, p < 0.01 for the comparison between Ind and L; t(12) = −5.202, p < 0.001 for the comparison between Ind and V/LV), while the estimate for the L method was higher (10.35 [m/s²]) but not significantly so; that is, the L and V methods did not differ significantly. Clearly, the methods that minimized ball–hand distance during the hold phase failed to properly match gravity during the flight phase.

Taken together, the two evaluation indicators revealed that the Ind method had the lowest error and yet the poorest matching of ball flight to known gravitational acceleration. The V method based on the theoretical acceleration of gravity showed the highest position error during the hold phase, indicating that a uniform scaling of vertical and horizontal coordinates is not optimal.

Knowing that our target hand position is an estimate, it may not be surprising that merely fitting the ball-to-hand estimate yielded poor global performance. In order to understand this as well as explain the presence of residual position error seen in all coregistration methods, we examined the temporal variation of the ball-to-hand error during the hold phase.

Figure 8 shows relative ball trajectories with respect to the estimated hand position. The overall position errors shown in Figure 5 can be regarded as time averages of these curves. As the hold phase had a different length in every cycle and across individuals, the interval between catch and throw was temporally normalized, and the normalized trajectories were averaged across subjects. Rows in Figure 8 correspond to the direction (lateral/vertical), and columns correspond to the hand (right/left). The absolute displacements of the ball from the hand estimate are small (<3 cm) but systematic and likely reflect wrist flexion. Indeed, an analysis of these errors allows us to recover a time series for wrist flexion that was not directly measured. For the vertical position, the ball estimate is above the hand estimate at the catch, dips down before the throw, and rises again at the throw, consistent with the expected wrist extension and flexion during the catch/throw cycle. The total amplitude of motion, ~5 cm, is within the flexural range of motion of the wrist. For the lateral position, the largest error emerged immediately before the throw. This likely reflects a medial rotation of the wrist such that the wrist flexion used to accurately throw the ball across the midline was visible in both vertical and horizontal coordinates. For the lateral coordinates, since we fit the left and right hands together, left/right symmetry is expected to be enforced, which explains the zero bias of the lateral ball position relative to the left and right hands. The specific shape of the trajectories was not symmetric, however, suggesting an average left/right asymmetry in wrist motion. The small bias (~1 cm) around the catch time may be attributed to the use of the most extreme lateral values for fitting in the L method, as it is possible that the wrist marker, which was attached at the wrist of a neoprene body suit, was systematically shifted at those positions.
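The temporal normalization described above can be sketched as a resampling of each hold-phase error trace onto a common normalized time axis before averaging. The Python sketch below is illustrative only: the function names, the default of 101 points, and the use of linear interpolation are assumptions, and each trace must contain at least two samples.

```python
def normalize_time(values, n_points=101):
    """Resample one hold-phase trace onto n_points evenly spaced in [0, 1]."""
    last = len(values) - 1
    out = []
    for k in range(n_points):
        pos = last * k / (n_points - 1)   # fractional index into values
        i = min(int(pos), last - 1)       # keep i + 1 inside the trace
        w = pos - i
        out.append((1 - w) * values[i] + w * values[i + 1])
    return out

def average_traces(traces, n_points=101):
    """Average traces of different lengths after time normalization."""
    resampled = [normalize_time(tr, n_points) for tr in traces]
    return [sum(col) / len(col) for col in zip(*resampled)]

# Two hold phases of different duration, from catch (0) to throw (1).
avg = average_traces([[0.0, 2.0, 4.0], [2.0, 4.0, 6.0, 8.0, 10.0]], n_points=3)
```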

5. Conclusions

In the present study, we demonstrated methods that successfully coregister free-flying thrown and caught objects in video with marker-based motion capture. The best method (the LV model) combined physical constraints during flight and ball holding with hand motion constraints, yielding a coregistration that allowed us to accurately quantify ball flight, with the additional benefit of enabling the accurate recovery of unmeasured wrist movements. The methods (L and V) that used only part of the available constraints and applied homogeneous scaling across dimensions were suboptimal. The methods developed here, which combine the fitting of constraints with the reverse reconstruction of unobserved variables, could be broadly applicable to other studies of movement dynamics in which not all objects can be instrumented with active markers. A number of general lessons can be drawn from this work. First, simple least squares fitting is not the best approach because the object/body ‘error’ will often, in fact, contain important behavioral information. Because least squares fitting is likely to be the default choice in general, defining task constraints should be prioritized. From this work, we developed several recommendations for future experimental studies of complex object/body interactions that are difficult to study using marker-based motion capture alone and for which currently available markerless motion capture is not accurate enough. Such cases can benefit from a hybrid approach that adds video capture to marker-based systems.
The recommendations are as follows: (1) identify task constraints (e.g., in the case of juggling, intervals for free flight of an object in a task that can be matched with the known gravitational acceleration and intervals of physical interaction between a performer and an object); (2) design a method to maximize the use of constraints, such as the LV model in our case, considering the importance of scaling different dimensions using different constraints; (3) avoid simple error minimization as fitting errors may contain behaviorally relevant information; (4) examine and extract meaningful information from the errors.