TDFusion: When Tensor Decomposition Meets Medical Image Fusion in the Nonsubsampled Shearlet Transform Domain

Abstract

1. Introduction

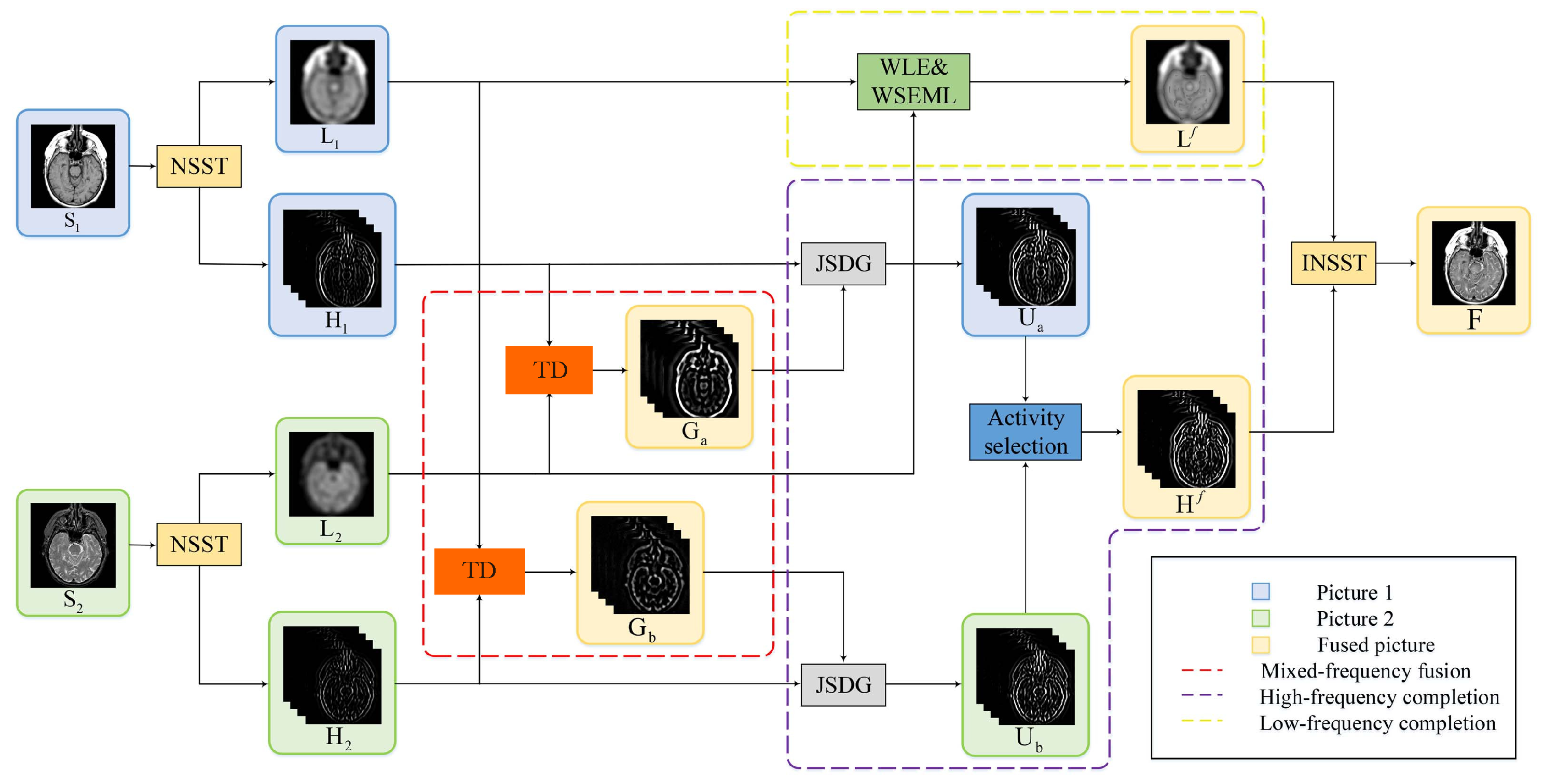

- TDFusion is a unified optimization model. Building on the NSST and tensor decomposition, it fuses the high-frequency and low-frequency components of two source images to obtain a mixed-frequency fused image.

- Because high-frequency and low-frequency components differ in structure, some information is lost during fusion. We embed the framework into the guided filter to refine the result and complete the information from low frequencies to high frequencies.

- We combine the ADMM algorithm with gradient descent to improve the quality of the fused image. Extensive experiments on five benchmark medical image fusion tasks (T1 and T2, T2 and PD, CT and MRI, MRI and PET, and MR and SPECT) verify the effectiveness of our model, which also achieves better results than five other medical image fusion methods.
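The decompose, fuse, and reconstruct pattern described in the contributions above can be illustrated with a minimal sketch. It is not the TDFusion method: a box filter stands in for the NSST, and the fusion rules (averaging the low-frequency bands, keeping the larger-magnitude high-frequency coefficient) are common baseline rules assumed here in place of the tensor decomposition model. The names `box_blur`, `split_bands`, and `fuse` are hypothetical.

```python
import numpy as np


def box_blur(img, radius=2):
    """Mean filter with edge padding (assumed stand-in for an NSST low-pass band)."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    out = np.zeros(img.shape, dtype=float)
    # Sum the k*k shifted copies of the padded image, then normalise.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)


def split_bands(img, radius=2):
    """Split an image into a low-frequency band and a high-frequency residual."""
    low = box_blur(img, radius)
    high = img - low  # low + high reproduces img
    return low, high


def fuse(img_a, img_b, radius=2):
    """Fuse two registered images band by band (baseline rules, not TDFusion)."""
    low_a, high_a = split_bands(img_a, radius)
    low_b, high_b = split_bands(img_b, radius)
    low_f = 0.5 * (low_a + low_b)  # assumed rule: average the low frequencies
    # Assumed rule: keep the high-frequency coefficient with larger magnitude.
    high_f = np.where(np.abs(high_a) >= np.abs(high_b), high_a, high_b)
    return low_f + high_f  # inverse of split_bands


rng = np.random.default_rng(0)
a = rng.random((32, 32))  # synthetic stand-ins for two source images
b = rng.random((32, 32))
fused = fuse(a, b)
```

Fusing an image with itself returns the image unchanged, which is a quick sanity check that the split and reconstruction steps are consistent.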

2. Related Work

3. Notation and Preliminaries of Tensors

4. The Proposed Method

4.1. Nonsubsampled Shearlet Transform (NSST)

4.2. Tensor Decomposition Based Fusion

4.3. The Optimization Solution

4.3.1. Solution of I

4.3.2. Solution of J

4.3.3. Solution of D

4.3.4. Solution of C

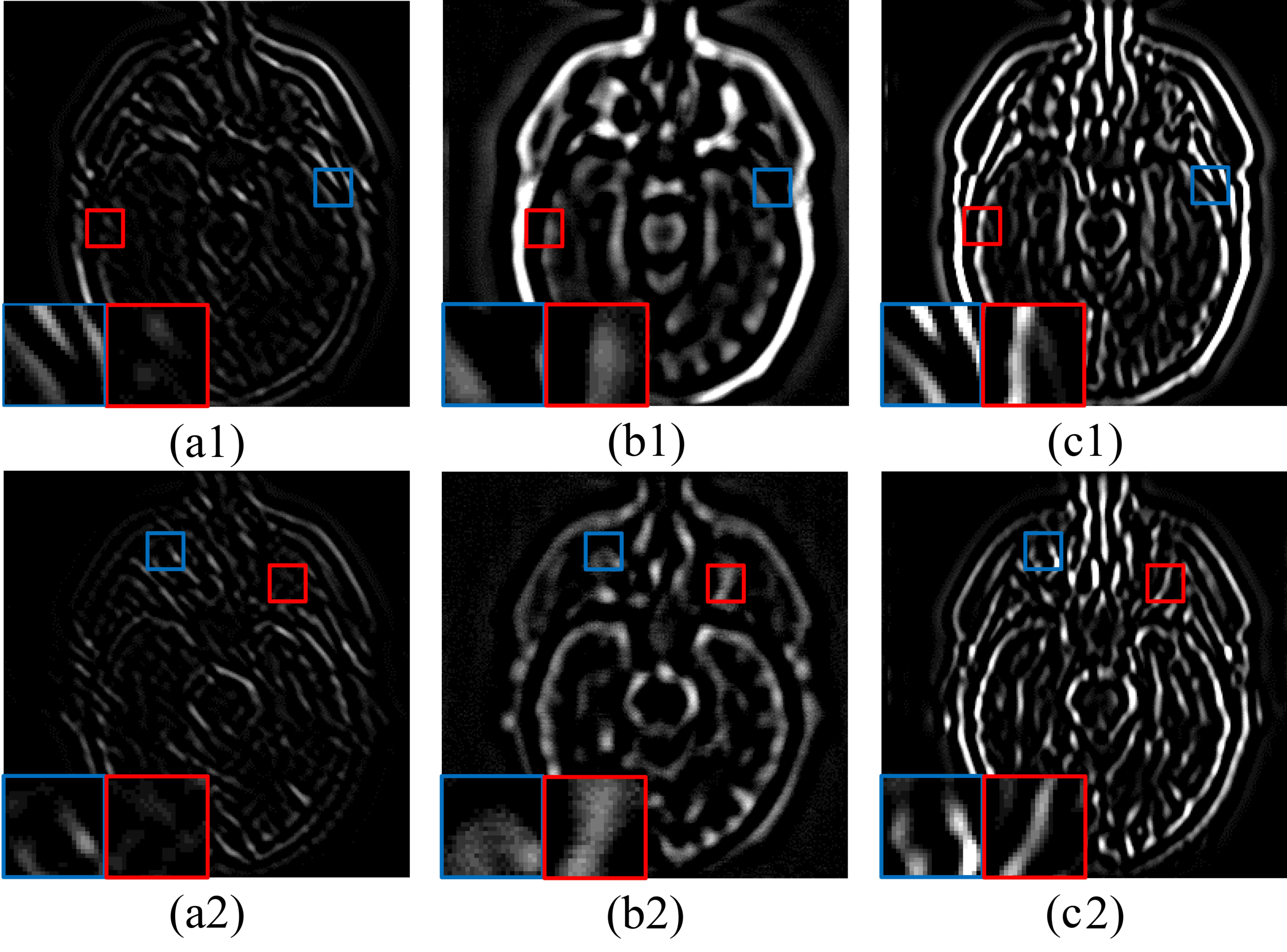

4.4. High-Frequency Completion

4.4.1. Joint Static and Dynamic Guidance

4.4.2. Fusion of Complete Mixed-Frequency Maps

4.5. Low-Frequency Completion

4.6. Reconstruction of the Fused Image by the INSST

5. Experiments

5.1. Experimental Settings

5.1.1. Experimental Images

5.1.2. Objective Metrics

5.1.3. Comparison Methods

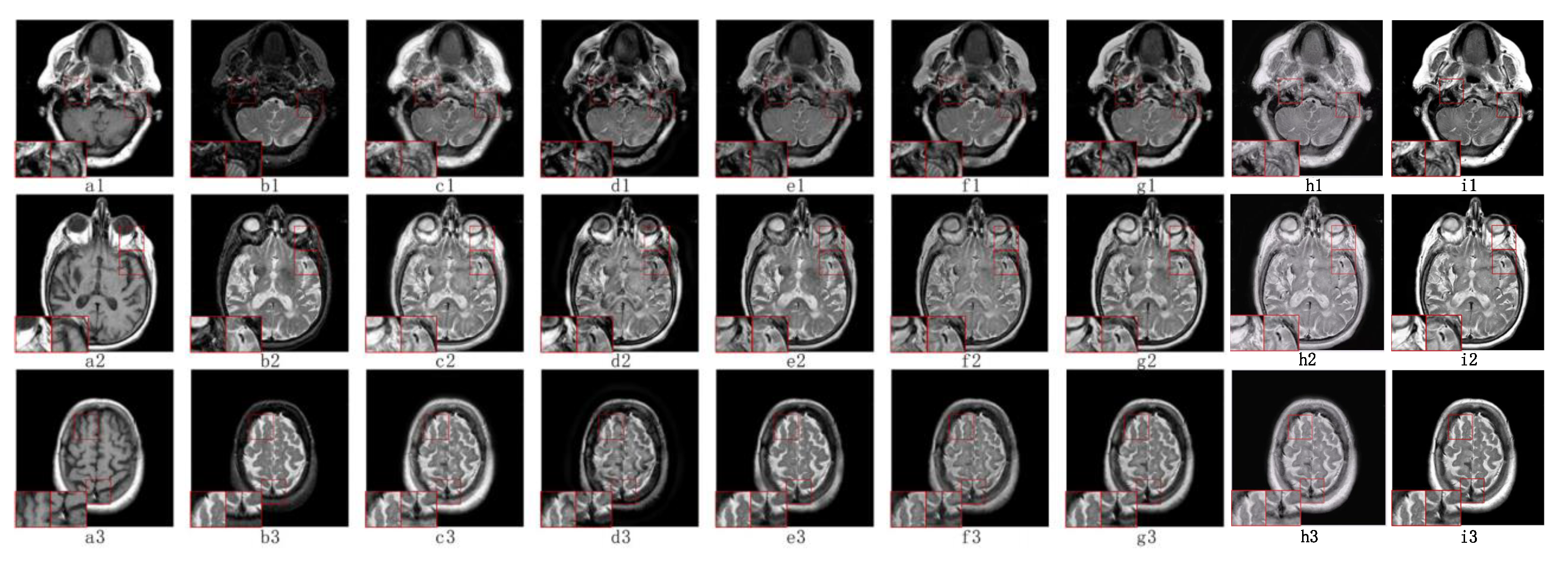

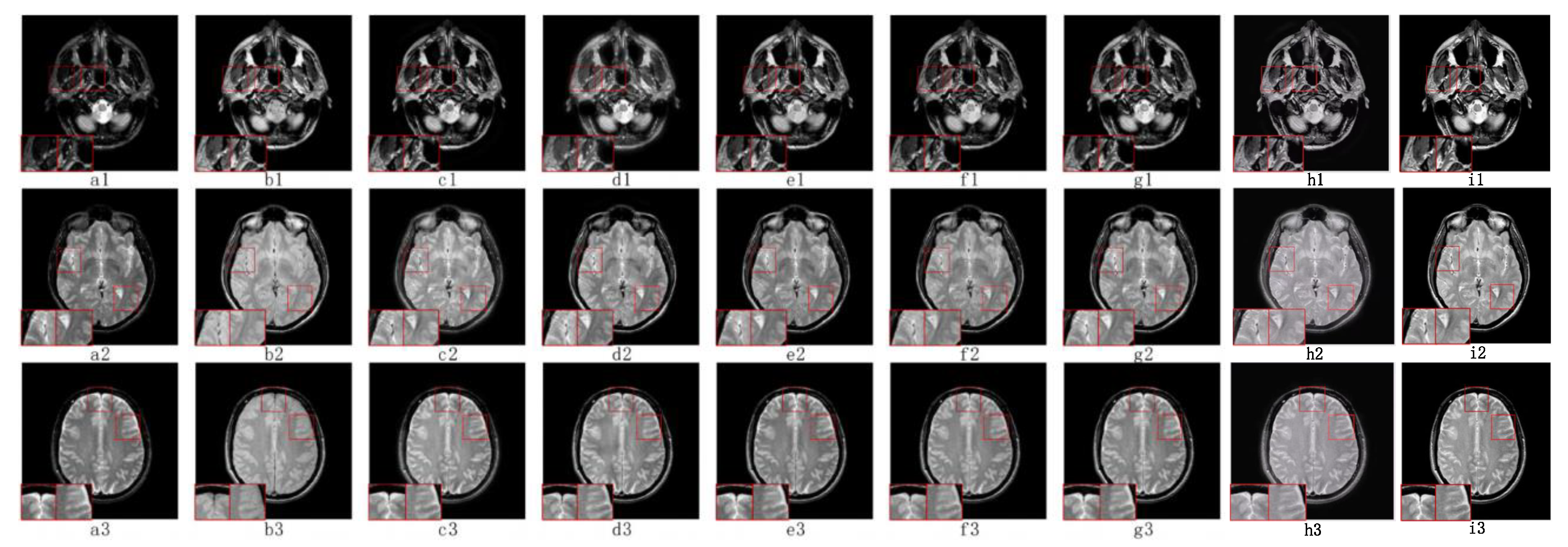

5.2. Visual Effects Analysis

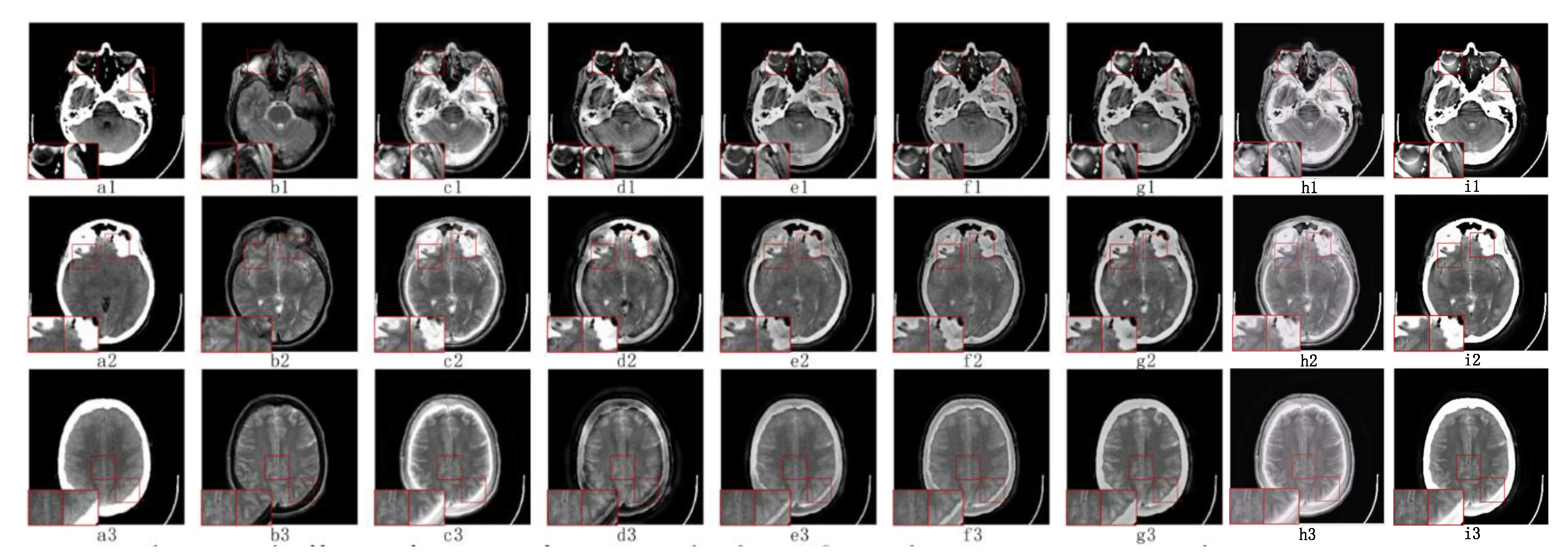

5.2.1. Fusion Analysis on T1-T2

5.2.2. Fusion Analysis on T2-PD

5.2.3. Fusion Analysis on CT-MRI

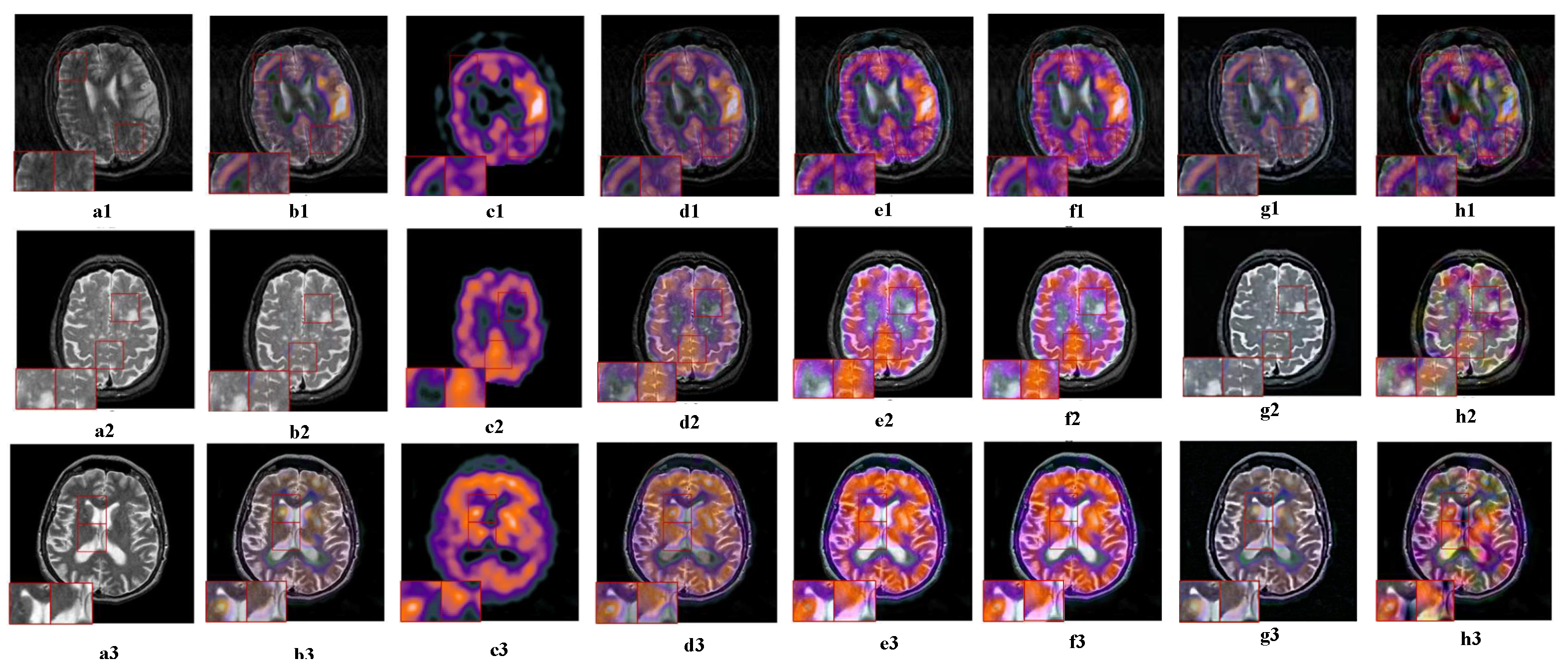

5.2.4. Fusion Analysis on MRI-PET

5.2.5. Fusion Analysis on MR-SPECT

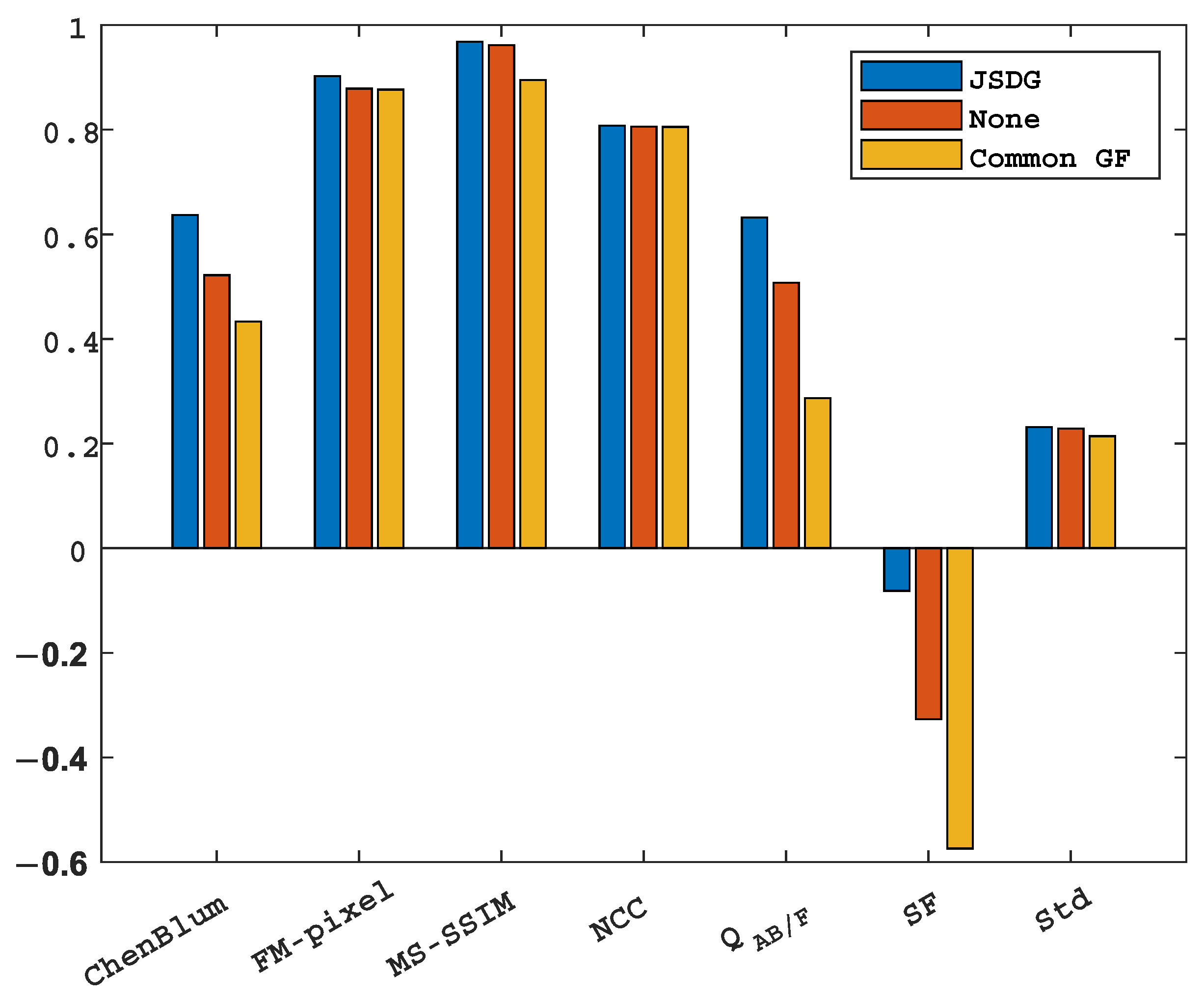

5.3. Objective Metrics Analysis

5.4. Analysis and Discussion

5.4.1. Analysis of Computational Running Time

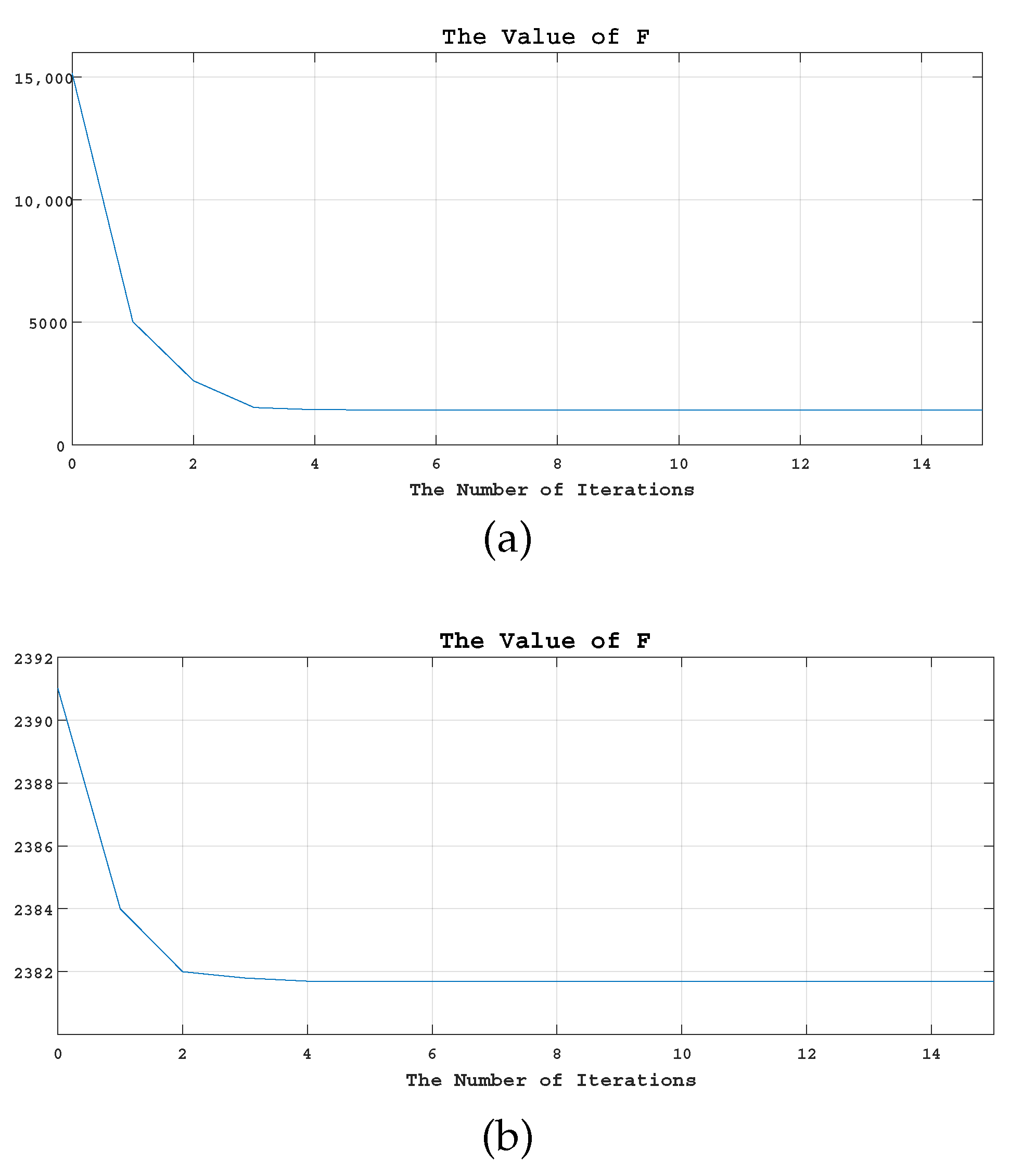

5.4.2. Convergence Analysis

5.4.3. Ablation Analysis

5.4.4. Parameter Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Symbols | Meanings |
|---|---|
| | Scalar |
| A | Matrix |
| | Conjugate transpose of a matrix |
| | Third-order tensor |
| | Horizontal slice of the tensor A |
| | Lateral slice of the tensor A |
| | Frontal slice of the tensor A |
| | Tube of the tensor A |
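The slices and tubes listed in the table can be made concrete with array indexing. The sketch below assumes the common convention for a third-order tensor of size n1 × n2 × n3, in which horizontal, lateral, and frontal slices fix the first, second, and third index respectively, and a tube fixes the first two. The symbols used in the original table did not survive extraction, so this index convention is an assumption.

```python
import numpy as np

# A small third-order tensor with n1 = 2, n2 = 3, n3 = 4.
A = np.arange(24).reshape(2, 3, 4)

horizontal = A[0, :, :]  # horizontal slice: first index fixed, shape (3, 4)
lateral = A[:, 1, :]     # lateral (side) slice: second index fixed, shape (2, 4)
frontal = A[:, :, 2]     # frontal slice: third index fixed, shape (2, 3)
tube = A[0, 1, :]        # tube: first two indices fixed, shape (4,)

print(horizontal.shape, lateral.shape, frontal.shape, tube.shape)
```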

| Experimental Environment | Parameters |
|---|---|
| Experimental equipment | Intel Core i5 dual-core processor, 8 GB 1600 MHz DDR3 |
| Compiling software | MATLAB 2016b |

| Methods | ChenBlum | FMI-Pixel | MS-SSIM | NCC | SF | Std | |
|---|---|---|---|---|---|---|---|
| TDFusion | 0.6514 | 0.8676 | 0.9667 | 0.8106 | 0.6605 | −0.0776 | 0.3277 |
| FCFusion | 0.6260 | 0.8422 | 0.9146 | 0.8080 | 0.6390 | −0.1547 | 0.2533 |
| ASR | 0.6370 | 0.8351 | 0.9261 | 0.8054 | 0.5960 | −0.2086 | 0.2292 |
| CS-MCA | 0.6261 | 0.8430 | 0.9525 | 0.8058 | 0.6399 | −0.1457 | 0.2550 |
| GFF | 0.6106 | 0.8477 | 0.9234 | 0.8067 | 0.6438 | −0.1526 | 0.2606 |
| NSCT-PCDC | 0.5449 | 0.8275 | 0.8792 | 0.8042 | 0.5467 | −0.1383 | 0.2427 |
| NSST-PAPCNN | 0.4459 | 0.8064 | 0.8679 | 0.8050 | 0.4031 | −0.3536 | 0.2956 |
| TDFusion | 0.7622 | 0.8924 | 0.9813 | 0.8069 | 0.6450 | −0.0882 | 0.2249 |
| FCFusion | 0.7486 | 0.8790 | 0.9622 | 0.8054 | 0.6330 | −0.1447 | 0.1933 |
| ASR | 0.7606 | 0.8745 | 0.9669 | 0.8054 | 0.6167 | −0.2392 | 0.1842 |
| CS-MCA | 0.7480 | 0.8821 | 0.9785 | 0.8055 | 0.6447 | −0.1495 | 0.1976 |
| GFF | 0.7325 | 0.8793 | 0.9584 | 0.8056 | 0.6332 | −0.1951 | 0.1881 |
| NSCT-PCDC | 0.6627 | 0.8674 | 0.9441 | 0.8046 | 0.5675 | −0.1309 | 0.1838 |
| NSST-PAPCNN | 0.5787 | 0.8608 | 0.9520 | 0.8051 | 0.5434 | −0.2473 | 0.2126 |
| TDFusion | 0.7122 | 0.9101 | 0.9328 | 0.8065 | 0.5957 | −0.1014 | 0.3282 |
| FCFusion | 0.6860 | 0.8922 | 0.9146 | 0.8063 | 0.5390 | −0.2547 | 0.2533 |
| ASR | 0.7114 | 0.9033 | 0.9081 | 0.8058 | 0.5468 | −0.2691 | 0.2621 |
| CS-MCA | 0.6936 | 0.9080 | 0.9256 | 0.8059 | 0.5468 | −0.2342 | 0.2887 |
| GFF | 0.6956 | 0.9054 | 0.8263 | 0.8061 | 0.5835 | −0.2906 | 0.2529 |
| NSCT-PCDC | 0.6075 | 0.8961 | 0.8369 | 0.8052 | 0.5350 | −0.1789 | 0.2695 |
| NSST-PAPCNN | 0.5449 | 0.8850 | 0.8911 | 0.8058 | 0.5168 | −0.2833 | 0.3264 |
| TDFusion | 0.6398 | 0.8970 | 0.999994 | 0.8115 | 0.8188 | −0.0148 | 0.2526 |
| FCFusion | 0.6365 | 0.8852 | 0.99994 | 0.8114 | 0.8090 | −0.0127 | 0.2536 |
| ASR | 0.6386 | 0.8533 | 0.999947 | 0.8043 | 0.7545 | −0.1419 | 0.1656 |
| GFF | 0.6569 | 0.8928 | 0.999996 | 0.8112 | 0.8136 | −0.0263 | 0.2427 |
| NSCT-PCDC | 0.5970 | 0.8952 | 0.999996 | 0.8106 | 0.8146 | −0.0136 | 0.2470 |
| NSST-PAPCNN | 0.6438 | 0.8958 | 0.999995 | 0.8113 | 0.7811 | −0.0120 | 0.2524 |
| TDFusion | 0.6588 | 0.8972 | 0.999983 | 0.8094 | 0.7719 | −0.0302 | 0.2627 |
| FCFusion | 0.6510 | 0.8962 | 0.999980 | 0.8086 | 0.7310 | −0.0547 | 0.2413 |
| ASR | 0.6511 | 0.8655 | 0.999955 | 0.8049 | 0.6826 | −0.1884 | 0.1803 |
| GFF | 0.6845 | 0.8959 | 0.999986 | 0.8091 | 0.7682 | −0.0469 | 0.2438 |
| NSCT-PCDC | 0.6261 | 0.8967 | 0.999992 | 0.8084 | 0.7315 | −0.0310 | 0.2521 |
| NSST-PAPCNN | 0.6454 | 0.8960 | 0.999987 | 0.8088 | 0.7215 | −0.0278 | 0.2618 |

| Methods | CS-MCA | GFF | NSCT-RPCNN | NSCT-PCDC | NSST-PAPCNN | FCFusion | TDFusion |
|---|---|---|---|---|---|---|---|
| Times | 137.38 | 0.06 | 8.43 | 15.14 | 6.86 | 56.89 | 20.66 |

| - | - | | | | | | |
|---|---|---|---|---|---|---|---|
| 0.6167 | 0.89140 | 0.965741 | 0.80886 | 0.5990 | −0.0855 | 0.25175 | |
| 0.6117 | 0.89124 | 0.965731 | 0.80874 | 0.6065 | −0.0871 | 0.25173 | |
| 0.6054 | 0.89081 | 0.965602 | 0.80850 | 0.6295 | −0.0911 | 0.25164 | |
| 0.5981 | 0.89045 | 0.965476 | 0.80815 | 0.6309 | −0.0978 | 0.25149 | |
| 0.5927 | 0.89007 | 0.965344 | 0.80793 | 0.6321 | −0.1043 | 0.25132 | |
| 0.5919 | 0.89053 | 0.965139 | 0.80802 | 0.6145 | −0.0941 | 0.25155 | |
| 0.5867 | 0.89038 | 0.964926 | 0.80791 | 0.6115 | −0.0957 | 0.25150 | |
| 0.5788 | 0.89026 | 0.964749 | 0.80778 | 0.6052 | −0.0980 | 0.25142 | |
| 0.5704 | 0.89008 | 0.964429 | 0.80766 | 0.6022 | −0.1003 | 0.25132 | |
| 0.5702 | 0.89006 | 0.964499 | 0.80765 | 0.6009 | −0.1008 | 0.25130 | |
| 0.5603 | 0.88983 | 0.964045 | 0.80752 | 0.5985 | −0.1036 | 0.25117 | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, R.; Wang, Z.; Sun, H.; Deng, L.; Zhu, H. TDFusion: When Tensor Decomposition Meets Medical Image Fusion in the Nonsubsampled Shearlet Transform Domain. Sensors 2023, 23, 6616. https://doi.org/10.3390/s23146616