Vision-Based Jigsaw Puzzle Solving with a Robotic Arm

Abstract

1. Introduction

- (1)

- A proposed algorithm that is fast and accurate for solving puzzle reconstruction when the original image is available.

- (2)

- The presentation of a lightweight and systematic algorithm for solving puzzle reconstruction without relying on the availability of the original image.

- (3)

- Improved accuracy was observed when dealing with more complex textural images.

- (4)

- The algorithm maintained a linear complexity, regardless of the complexity of the test image.

2. The Proposed Algorithms

2.1. The Algorithm with the Original Image

2.1.1. Feature Point Extraction

2.1.2. Determining the Direction of Feature Point Gradients

2.1.3. Building a SIFT Descriptor

2.1.4. RANSAC Algorithm

- Step 1.

- Three matching pairs in S were randomly sampled, and the transform matrix was calculated using a selected pair.

- Step 2.

- The feature points in a target image were transformed, and a newer was obtained.

- Step 3.

- The distance in was calculated, and a check was performed to determine whether this distance was less than the threshold .

- Step 4.

- Steps 1–3 were repeated k times, and the maximum inlier pairs were selected as a result.

2.1.5. Transformation Matrix

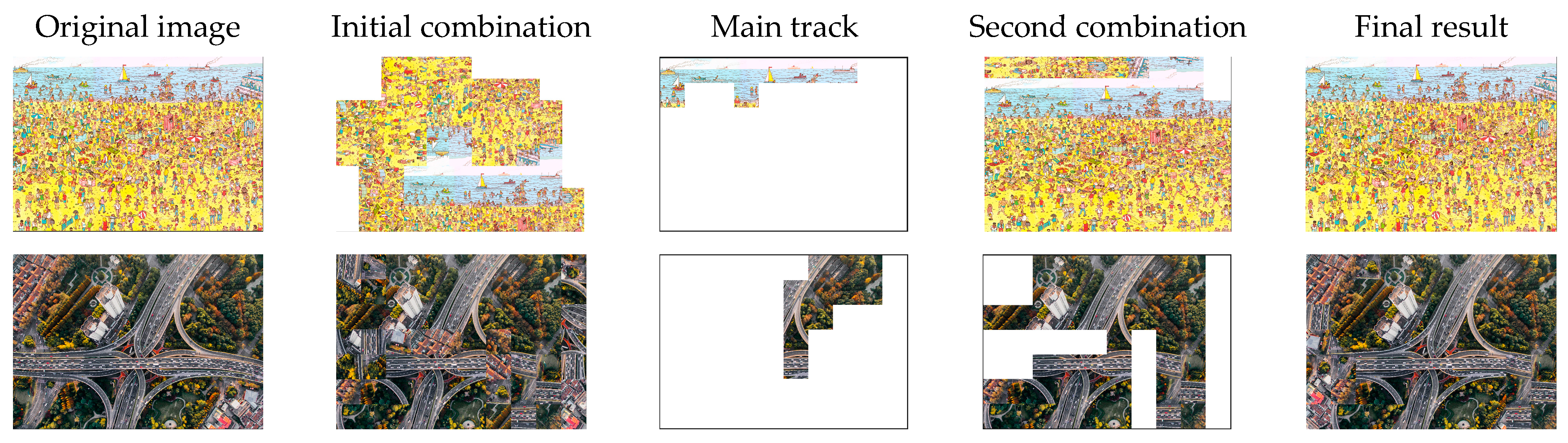

2.2. The Algorithm without the Original Image

2.2.1. Best Piece (BP)

2.2.2. Initial Combination

2.2.3. Main Track

2.2.4. Second Combination

2.2.5. Third Combination

3. Experimental Results

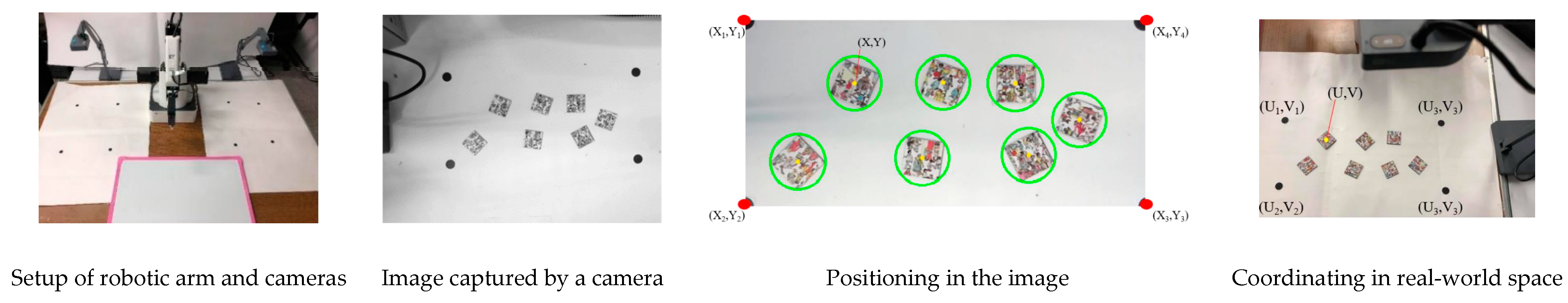

3.1. Experimental Setup

3.2. Result with the Original Image

3.3. Result without the Original Image

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

- Yao, F.-H.; Shao, G.-F. A shape and image merging technique to solve jigsaw puzzles. Pattern Recognit. Lett. 2003, 24, 1819–1835. [Google Scholar] [CrossRef]

- Li, H.; Zheng, Y.; Zhang, S.; Cheng, J. Solving a Special Type of Jigsaw Puzzles: Banknote Reconstruction From a Large Number of Fragments. IEEE Trans. Multimed. 2014, 16, 571–578. [Google Scholar] [CrossRef]

- Shih, H.-C.; Yu, K.-C. SPiraL Aggregation Map (SPLAM): A new descriptor for robust template matching with fast algorithm. Pattern Recognit. 2015, 48, 1707–1723. [Google Scholar] [CrossRef]

- Demaine, E.D.; Demaine, M.L. Jigsaw Puzzles, Edge Matching, and Polyomino Packing: Connections and Complexity. Graphs Comb. 2007, 23, 195–208. [Google Scholar] [CrossRef]

- Chung, M.G.; Fleck, M.M.; Forsyth, D.A. Jigsaw puzzle solver using shape and color. In Proceedings of the ICSP’98. 1998 Fourth International Conference on Signal Processing, Beijing, China, 12–16 October 1998; IEEE: New York City, NY, USA, 1998; Volume 2, pp. 877–880. [Google Scholar]

- Sagiroglu, M.S.; Ercil, A. A Texture Based Matching Approach for Automated Assembly of Puzzles. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 3, pp. 1036–1041. [Google Scholar]

- Nielsen, T.R.; Drewsen, P.; Hansen, K. Solving jigsaw puzzles using image features. Pattern Recognit. Lett. 2008, 29, 1924–1933. [Google Scholar] [CrossRef]

- Lin, H.-Y.; Fan-Chiang, W.-C. Reconstruction of shredded document based on image feature matching. Expert Syst. Appl. 2012, 39, 3324–3332. [Google Scholar] [CrossRef]

- Pimenta, A.; Justino, E.; Oliveira, L.S.; Sabourin, R. Document reconstruction using dynamic programming. In Proceedings of the 2009 IEEE International Conference on Acoustics, Speech and Signal Processing, Taipei, Taiwan, 19–24 April 2009; pp. 1393–1396. [Google Scholar]

- Willis, A.R.; Cooper, D.B. Computational reconstruction of ancient artifacts. IEEE Signal Process. Mag. 2008, 25, 65–83. [Google Scholar] [CrossRef]

- Leitao, H.C.D.G.; Stolfi, J. A multiscale method for the reassembly of two-dimensional fragmented objects. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 1239–1251. [Google Scholar] [CrossRef]

- Andaló, F.A.; Taubin, G.; Goldenstein, S. PSQP: Puzzle Solving by Quadratic Programming. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 385–396. [Google Scholar] [CrossRef] [PubMed]

- Cho, T.S.; Avidan, S.; Freeman, W.T. A probabilistic image jigsaw puzzle solver. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 183–190. [Google Scholar]

- Gallagher, A.C. Jigsaw puzzles with pieces of unknown orientation. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 382–389. [Google Scholar]

- Mondal, D.; Wang, Y.; Durocher, S. Robust Solvers for Square Jigsaw Puzzles. In Proceedings of the 2013 International Conference on Computer and Robot Vision, Regina, SK, Canada, 28–31 May 2013; pp. 249–256. [Google Scholar]

- Pomeranz, D.; Shemesh, M.; Ben-Shahar, O. A fully automated greedy square jigsaw puzzle solver. CVPR 2011, 2011, 9–16. [Google Scholar]

- Moussa, A. Jigsopu: Square Jigsaw Puzzle Solver with Pieces of Unknown Orientation. Int. J. Adv. Comput. Sci. Appl. 2015, 6, 77–80. [Google Scholar]

- Sholomon, D.; David, O.; Netanyahu, N.S. A Genetic Algorithm-Based Solver for Very Large Jigsaw Puzzles. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1767–1774. [Google Scholar]

- Paumard, M.-M.; Picard, D.; Tabia, H. Deepzzle: Solving Visual Jigsaw Puzzles With Deep Learning and Shortest Path Optimization. IEEE Trans. Image Process. 2020, 29, 3569–3581. [Google Scholar] [CrossRef] [PubMed]

- Song, X.; Jin, J.; Yao, C.; Wang, S.; Ren, J.; Bai, R. Siamese-Discriminant Deep Reinforcement Learning for Solving Jigsaw Puzzles with Large Eroded Gaps. Proc. AAAI Conf. Artif. Intell. 2023, 37, 2303–2311. [Google Scholar] [CrossRef]

- Bridger, D.; Danon, D.; Tal, A. Solving Jigsaw Puzzles with Eroded Boundaries. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3523–3532. [Google Scholar]

- Song, X.; Yang, X.; Ren, J.; Bai, R.; Jiang, X. Solving Jigsaw Puzzle of Large Eroded Gaps Using Puzzlet Discriminant Network. In Proceedings of the ICASSP 2023–2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023. [Google Scholar]

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359. [Google Scholar] [CrossRef]

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Ein-Gedi, Israel, 29 May–2 June 2011; pp. 2564–2571. [Google Scholar]

- Huang, C.L.; Shih, H.C.; Chen, C.L. Shot and Scoring Events Identification of Basketball Videos. In Proceedings of the 2006 IEEE International Conference on Multimedia and Expo, Toronto, ON, Canada, 9–12 July 2006; pp. 1885–1888. [Google Scholar]

- Ma, C.H.; Shih, H.C. Human Skin Segmentation Using Fully Convolutional Neural Networks. In Proceedings of the 2018 IEEE 7th Global Conference on Consumer Electronics (GCCE), Nara, Japan, 9–12 October 2018; pp. 168–170. [Google Scholar]

- DOBOT. DOBOT Magician Lite Robotic Arm [Online]. Available online: https://www.dobot-robots.com/products/education/magician.html (accessed on 11 April 2023).

| (Start Angle) | (End Angle) | |

|---|---|---|

| 0 (as default) | ||

| #Pieces (#Neighbors) | Method | Puzzle Size (Pixel) | #Correct Neighbors | #Wrong Neighbors |

|---|---|---|---|---|

| 35 (58) | Ours | >100 | 53 | 5 |

| 60~100 | 33 | 25 | ||

| Cho et al. [15] | >100 | 17 | 41 | |

| 60~100 | 18 | 40 | ||

| 70 (123) | Ours | 60~100 | 76 | 47 |

| <60 | 73 | 50 | ||

| Cho et al. [15] | 60~100 | 28 | 95 | |

| <60 | 26 | 97 |

| Method | Threshold Ratio | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | |

| 35 pcs | Ours | Iteration | 7 | 19 | 23 | 29 | 30 | 32 | 33 | 33 | 34 |

| Puzzle completion accuracy | 87.1% | 80% | 80% | 69.1% | 69.1% | 69.1% | 76.8% | 76.8% | 80% | ||

| Cho et al. [15] | Iteration | 19 | 22 | 27 | 27 | 28 | 31 | 33 | 35 | 37 | |

| Puzzle completion accuracy | 11.1% | 11.1% | 21.7% | 53.3% | 66.3% | 74.6% | 86% | 77.8% | 77.8% | ||

| 70 pcs | Ours | Iteration | 12 | 20 | 23 | 28 | 29 | 32 | 32 | 33 | 35 |

| Puzzle completion accuracy | 61.9% | 64.4% | 77.8% | 50.1% | 62.5% | 62.5% | 62.5% | 50 | 62.5% | ||

| Cho et al. [15] | Iteration | 27 | 45 | 56 | 56 | 61 | 62 | 65 | 68 | 71 | |

| Puzzle completion accuracy | 12.6% | 12.5% | 12.6% | 46.3% | 62.5% | 62.5% | 73.6% | 73.6% | 71.6% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, C.-H.; Lu, C.-L.; Shih, H.-C. Vision-Based Jigsaw Puzzle Solving with a Robotic Arm. Sensors 2023, 23, 6913. https://doi.org/10.3390/s23156913

Ma C-H, Lu C-L, Shih H-C. Vision-Based Jigsaw Puzzle Solving with a Robotic Arm. Sensors. 2023; 23(15):6913. https://doi.org/10.3390/s23156913

Chicago/Turabian StyleMa, Chang-Hsian, Chien-Liang Lu, and Huang-Chia Shih. 2023. "Vision-Based Jigsaw Puzzle Solving with a Robotic Arm" Sensors 23, no. 15: 6913. https://doi.org/10.3390/s23156913

APA StyleMa, C.-H., Lu, C.-L., & Shih, H.-C. (2023). Vision-Based Jigsaw Puzzle Solving with a Robotic Arm. Sensors, 23(15), 6913. https://doi.org/10.3390/s23156913