Cyclic Generative Attention-Adversarial Network for Low-Light Image Enhancement

Abstract

1. Introduction

- (1) We present CGAAN, an unsupervised low-light image-enhancement method, and demonstrate experimentally that it performs well;

- (2) Building on the cyclic generative adversarial network, we propose a novel attention mechanism that directs the network to enhance different regions to different degrees, depending on whether they are in low or normal light;

- (3) To make the generated images more realistic, we add a style region loss function and a new regularization function to the cyclic adversarial network.
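Contribution (2) describes attention that enhances dark regions more strongly than well-lit ones. This excerpt does not specify CGAAN's adaptive feature-attention module, so the sketch below shows a simpler, related idea: an illumination-guided attention map in the spirit of the self-regularized attention used by EnlightenGAN [17], where the weight is one minus the normalized illumination, so darker pixels get larger weights. The function names, the max-over-RGB illumination estimate, and the residual blending rule are illustrative assumptions, not the authors' implementation.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # RGB values normalized to [0, 1]


def attention_map(image: List[Pixel]) -> List[float]:
    """Illumination-guided attention: darker pixels get weights closer to 1.

    Assumption for illustration: illumination is approximated as max(R, G, B).
    """
    return [1.0 - max(p) for p in image]


def attend_enhancement(image: List[Pixel], enhanced: List[Pixel]) -> List[Pixel]:
    """Blend input and enhanced pixels with the attention weights.

    out = x + a * (enhanced - x): dark pixels (a near 1) receive most of the
    enhancement, bright pixels (a near 0) stay close to the input.
    """
    weights = attention_map(image)
    out = []
    for a, x, e in zip(weights, image, enhanced):
        out.append(tuple(xc + a * (ec - xc) for xc, ec in zip(x, e)))
    return out


if __name__ == "__main__":
    # Hypothetical 2-pixel image: one dark pixel, one well-lit pixel.
    img = [(0.1, 0.1, 0.1), (0.9, 0.8, 0.8)]
    # Hypothetical output of some global enhancer applied to the whole image.
    enh = [(0.5, 0.5, 0.5), (1.0, 1.0, 1.0)]
    print(attention_map(img))
    print(attend_enhancement(img, enh))
```

The dark pixel moves most of the way toward its enhanced value (0.1 to about 0.46), while the bright pixel barely changes, which is the region-dependent behavior the contribution describes.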

2. Related Work

2.1. Traditional Methods

| Method | Advantage | Disadvantage |
|---|---|---|
| EnlightenGAN [17] | Introduces a global–local discriminator, which reduces overfitting and improves the model's generalization ability. | Noise is difficult to remove. |
| MAGAN [18] | Uses a hybrid attention layer to model the relationship between each pixel and the image features, enhancing low-light images while removing latent noise. | Producing the final output by pixel-wise addition has limitations. |
| HE [20] | Increases image contrast. | Ignores each pixel's neighborhood information. |
| Agrawal [3] | Fully exploits the relationship between each pixel and its neighbors, improving image quality. | Enhancement can introduce artifacts and color distortion. |
| Literature [4] | Partially addresses artifacts and color distortion. | Handling of regions with different exposure levels is still suboptimal. |
| ERMHE [6] | Multi-histogram equalization improves the contrast of non-uniformly illuminated images. | Can still produce enhancement artifacts. |
| Literature [7] | Splits the histogram into three sub-histograms and equalizes each separately. | May over-enhance. |
| Literature [8] | Contrast-limited quadri-histogram equalization compensates for over-enhancement and over-smoothing. | Does not fully address color shift and noise artifacts when enhancing low-light images. |
| Literature [21] | Uses the reflectance component as the final output. | Poor detail preservation, halos, and over-enhancement. |
| Wang [9] | Combines Gabor filtering with Retinex theory to enhance images in the HSI and RGB color spaces. | Results can look unnatural. |
| Chen [10] | Improves the Retinex method with a total variation model and an adaptive gamma transformation, producing better visuals while preserving image details. | May introduce artifacts. |
| Lin [11] | Uses Retinex theory to decompose the input image into reflectance and illumination components, then adds edge preservation to the illumination component. | Introduces noise. |
| Yang [23] | Combines Retinex theory with a fast and robust fuzzy C-means clustering algorithm to estimate an initial illumination image, then applies segmentation and fusion to enrich image details. | Weak constraints on the reflectance component may introduce artifacts and noise. |
| LACN [24] | Introduces a parameter-free attention module and proposes a new attention module that preserves color information while improving brightness and contrast. | Does not consider global information. |
| PRIEN [12] | Extracts features iteratively with a recurrent module composed of recurrent layers and residual blocks. | Neglects image details. |
| Literature [13] | Builds an end-to-end enhancement network from stacked modules and attention blocks, then enhances images through fusion. | Neglects image details. |
| DELLIE [14] | Combined with a detail-component prediction model, extracts and fuses image detail features. | Cannot balance illumination and detail information. |
| Liu [15] | Enhances low-light images with adaptive feature selection and attention that perceives both global and local details. | Enhancement is inadequate under varying lighting conditions. |
| Kandula [16] | Enhances images in two stages with a context-guided adaptive canonical unsupervised enhancement network. | Does not take the image's texture and semantic information into account. |
| FLA-Net [25] | Uses an LBP module to focus on the image's texture information. | Suffers from color distortion. |
| Literature [26] | Uses a structure-texture aware network and a color loss function to address color distortion. | Keeping results natural is difficult. |
| Retinex-Net [27] | Adjusts the illumination component based on Retinex theory. | Neglects image details. |
| R2RNet [28] | Uses three sub-networks and frequency information to enhance images while retaining details. | Limited adaptability. |
| URetinex-Net [29] | Formulates the decomposition problem as an implicit-prior regularization problem for adaptive low-light enhancement. | Some distortion may appear when enhancement is ineffective. |
| Zero-DCE [30] | Uses deep networks to recast image enhancement as image-specific curve estimation. | Noise suppression is ineffective. |
| Literature [31] | Enhances images in two stages with separate light-enhancement and noise-suppression networks. | Image quality could be further improved. |
| Proposed method | Adding feature attention, a style region loss, and adaptive normalization functions improves image quality. | The network design may increase runtime. |
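Several rows above, HE [20] and the multi-histogram variants [6–8], build on global histogram equalization. As a reference point, here is a minimal sketch of plain global HE for an 8-bit grayscale image stored as a flat list of integers; it uses the standard CDF remapping and is not any of the cited variants. The function name and the flat-list representation are illustrative choices.

```python
from typing import List


def equalize_histogram(pixels: List[int], levels: int = 256) -> List[int]:
    """Global histogram equalization of a flat list of integer gray levels in [0, levels)."""
    if not pixels:
        return []

    # Histogram: number of pixels at each gray level.
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1

    # Cumulative distribution function, kept as raw counts.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    n = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)  # CDF at the darkest occupied level
    if n == cdf_min:
        return list(pixels)  # constant image: nothing to redistribute

    # Standard remapping h(v) = round((cdf(v) - cdf_min) / (n - cdf_min) * (L - 1)),
    # clamped at 0 for unoccupied levels below the darkest pixel.
    lut = [max(0, round((c - cdf_min) / (n - cdf_min) * (levels - 1))) for c in cdf]
    return [lut[v] for v in pixels]


if __name__ == "__main__":
    dark = [10, 10, 20, 20, 20, 30, 40, 40]  # toy 8-pixel low-contrast image
    print(equalize_histogram(dark))  # -> [0, 0, 128, 128, 128, 170, 255, 255]
```

The four occupied levels are spread across the full 0–255 range. Because the mapping depends only on global counts, it ignores each pixel's neighborhood, which is the limitation listed for HE [20].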

2.2. Deep Learning Methods

2.3. Attention Mechanism

2.4. Generative Adversarial Networks

3. Proposed Method

3.1. Network Architectures

3.2. Adaptive Feature-Attention Module

3.3. Loss Functions

4. Experimental Results and Discussion

4.1. Experiment Details

4.2. Datasets and Evaluation Metrics

4.2.1. Datasets

4.2.2. Evaluation Metrics

4.3. Comparison with State-of-the-Art Methods

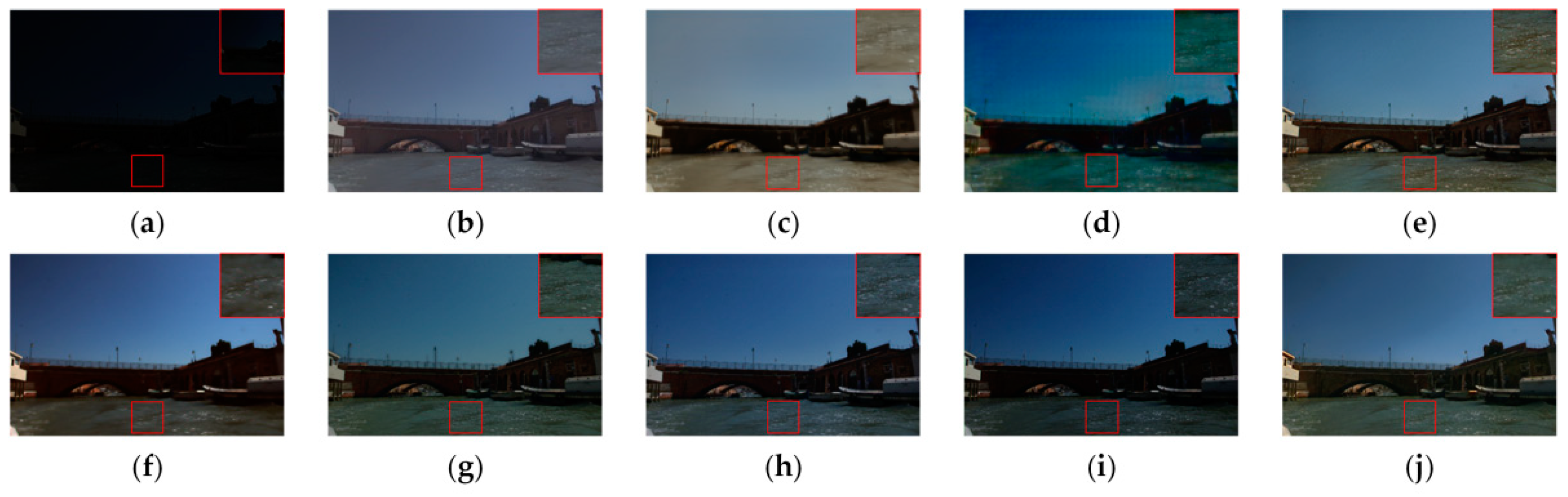

4.3.1. Qualitative Comparisons

4.3.2. Quantitative Comparisons

4.4. Ablation Experiment

4.5. Application

5. Future Work

- (1) Combining enhancement with specific network structures. An appropriate network structure can significantly improve the quality of the enhanced image. Although most previous methods build on the U-Net structure, this does not guarantee that they suit every low-light image enhancement scenario. Because low-light images have low contrast and small pixel values, the network structure used for enhancement must be chosen to suit them;

- (2) Integrating semantic information. Semantic information includes image features such as color that allow the network to distinguish regions of different brightness, which is extremely useful for detail restoration. Combining semantic information with low-light image enhancement is therefore likely to become an active research topic;

- (3) Given the complexity of low-light image enhancement tasks, investigating how to adjust the enhancement degree adaptively based on user input, and how to combine enhancement with sensors, is also a promising future research direction.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Paul, A.; Bhattacharya, P.; Maity, S.P. Histogram modification in adaptive bi-histogram equalization for contrast enhancement on digital images. Optik 2022, 259, 168899.

2. Paul, A. Adaptive tri-plateau limit tri-histogram equalization algorithm for digital image enhancement. Visual Comput. 2023, 39, 297–318.

3. Agrawal, S.; Panda, R.; Mishro, P.K.; Abraham, A. A novel joint histogram equalization based image contrast enhancement. J. King Saud Univ. Comput. Inform. Sci. 2022, 34, 1172–1182.

4. Jebadass, J.R.; Balasubramaniam, P. Low light enhancement algorithm for color images using intuitionistic fuzzy sets with histogram equalization. Multimed. Tools Applicat. 2022, 81, 8093–8106.

5. Mayathevar, K.; Veluchamy, M.; Subramani, B. Fuzzy color histogram equalization with weighted distribution for image enhancement. Optik 2020, 216, 164927.

6. Tan, S.F.; Isa, N.A.M. Exposure based multi-histogram equalization contrast enhancement for non-uniform illumination images. IEEE Access 2019, 7, 70842–70861.

7. Rahman, H.; Paul, G.C. Tripartite sub-image histogram equalization for slightly low contrast gray-tone image enhancement. Pattern Recognit. 2023, 134, 109043.

8. Huang, Z.; Wang, Z.; Zhang, J.; Li, Q.; Shi, Y. Image enhancement with the preservation of brightness and structures by employing contrast limited dynamic quadri-histogram equalization. Optik 2021, 226, 165877.

9. Wang, P.; Wang, Z.; Lv, D.; Zhang, C.; Wang, Y. Low illumination color image enhancement based on Gabor filtering and Retinex theory. Multimed. Tools Applicat. 2021, 80, 17705–17719.

10. Chen, L.; Liu, Y.; Li, G.; Hong, J.; Li, J.; Peng, J. Double-function enhancement algorithm for low-illumination images based on retinex theory. JOSA A 2023, 40, 316–325.

11. Lin, Y.H.; Lu, Y.C. Low-light enhancement using a plug-and-play Retinex model with shrinkage mapping for illumination estimation. IEEE Trans. Image Process. 2022, 31, 4897–4908.

12. Li, J.; Feng, X.; Hua, Z. Low-light image enhancement via progressive-recursive network. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 4227–4240.

13. Li, M.; Zhao, L.; Zhou, D.; Nie, R.; Liu, Y.; Wei, Y. AEMS: An attention enhancement network of modules stacking for low-light image enhancement. Visual Comput. 2022, 38, 4203–4219.

14. Hui, Y.; Wang, J.; Shi, Y.; Li, B. Low Light Image Enhancement Algorithm Based on Detail Prediction and Attention Mechanism. Entropy 2022, 24, 815.

15. Liu, X.; Ma, W.; Ma, X.; Wang, J. LAE-Net: A locally-adaptive embedding network for low-light image enhancement. Pattern Recognit. 2023, 133, 109039.

16. Kandula, P.; Suin, M.; Rajagopalan, A.N. Illumination-adaptive Unpaired Low-light Enhancement. IEEE Trans. Circuits Syst. Video Technol. 2023, 1.

17. Jiang, Y.; Gong, X.; Liu, D.; Cheng, Y.; Fang, C.; Shen, X.; Yang, J.; Zhou, P.; Wang, Z. EnlightenGAN: Deep light enhancement without paired supervision. IEEE Trans. Image Process. 2021, 30, 2340–2349.

18. Wang, R.; Jiang, B.; Yang, C.; Li, Q.; Zhang, B. MAGAN: Unsupervised low-light image enhancement guided by mixed-attention. Big Data Min. Analyt. 2022, 5, 110–119.

19. Nguyen, H.; Tran, D.; Nguyen, K.; Nguyen, R. PSENet: Progressive Self-Enhancement Network for Unsupervised Extreme-Light Image Enhancement. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–7 January 2023; pp. 1756–1765.

20. Abdullah-Al-Wadud, M.; Kabir, M.H.; Dewan, M.A.A.; Chae, O. A dynamic histogram equalization for image contrast enhancement. IEEE Trans. Consum. Electron. 2007, 53, 593–600.

21. Jobson, D.J.; Rahman, Z.; Woodell, G.A. Properties and performance of a center/surround retinex. IEEE Trans. Image Process. 1997, 6, 451–462.

22. Jobson, D.J.; Rahman, Z.; Woodell, G.A. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 1997, 6, 965–976.

23. Yang, J.; Wang, J.; Dong, L.L.; Chen, S.; Wu, H.; Zhong, Y. Optimization algorithm for low-light image enhancement based on Retinex theory. IET Image Process. 2023, 17, 505–517.

24. Fan, S.; Liang, W.; Ding, D.; Yu, H. LACN: A lightweight attention-guided ConvNeXt network for low-light image enhancement. Eng. Applicat. Artific. Intell. 2023, 117, 105632.

25. Yu, N.; Li, J.; Hua, Z. FLA-Net: Multi-stage modular network for low-light image enhancement. Visual Comput. 2022, 39, 1–20.

26. Xu, K.; Chen, H.; Xu, C.; Jin, Y.; Zhu, C. Structure-texture aware network for low-light image enhancement. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 4983–4996.

27. Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. arXiv 2018, arXiv:1808.04560.

28. Hai, J.; Xuan, Z.; Yang, R.; Hao, Y.; Zou, F.; Lin, F.; Han, S. R2RNet: Low-light image enhancement via real-low to real-normal network. J. Visual Communicat. Image Represent. 2023, 90, 103712.

29. Wu, W.; Weng, J.; Zhang, P.; Wang, X.; Yang, W.; Jiang, J. URetinex-Net: Retinex-based deep unfolding network for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5901–5910.

30. Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-reference deep curve estimation for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1780–1789.

31. Xiong, W.; Liu, D.; Shen, X.; Fang, C.; Luo, J. Unsupervised low-light image enhancement with decoupled networks. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; pp. 457–463.

32. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

33. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

34. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

35. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

36. Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

37. Dai, T.; Cai, J.; Zhang, Y.; Xia, S.T.; Zhang, L. Second-order attention network for single image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–19 June 2019; pp. 11065–11074.

38. Yang, L.; Zhang, R.Y.; Li, L.; Xie, X. SimAM: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning (ICML), PMLR 2021, 139, 11863–11874.

39. Yu, T.; Li, X.; Cai, Y.; Sun, M.; Li, P. S2-MLPv2: Improved Spatial-Shift MLP Architecture for Vision. arXiv 2021, arXiv:2108.01072.

40. Liu, Y.; Shao, Z.; Teng, Y.; Hoffmann, N. NAM: Normalization-based attention module. arXiv 2021, arXiv:2111.12419.

41. Zhang, Q.L.; Yang, Y.B. SA-Net: Shuffle attention for deep convolutional neural networks. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 2235–2239.

42. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

43. Kupyn, O.; Budzan, V.; Mykhailych, M.; Mishkin, D.; Matas, J. DeblurGAN: Blind motion deblurring using conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8183–8192.

44. Chen, J.; Chen, J.; Chao, H.; Yang, M. Image blind denoising with generative adversarial network based noise modeling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3155–3164.

45. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

46. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; Part III 18; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

47. Liu, D.; Wen, B.; Liu, X.; Wang, Z.; Huang, T.S. When image denoising meets high-level vision tasks: A deep learning approach. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 842–848.

48. Lee, C.; Lee, C.; Kim, C.S. Contrast enhancement based on layered difference representation of 2D histograms. IEEE Trans. Image Process. 2013, 22, 5372–5384.

49. Guo, X.; Li, Y.; Ling, H. LIME: Low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 2016, 26, 982–993.

50. Lee, C.; Lee, C.; Lee, Y.Y.; Kim, C.S. Power-constrained contrast enhancement for emissive displays based on histogram equalization. IEEE Trans. Image Process. 2011, 21, 80–93.

51. Wang, S.; Zheng, J.; Hu, H.M.; Li, B. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Trans. Image Process. 2013, 22, 3538–3548.

52. Kwon, D.; Kim, G.; Kwon, J. DALE: Dark region-aware low-light image enhancement. arXiv 2020, arXiv:2008.12493.

53. Yang, W.; Wang, S.; Fang, Y.; Wang, Y.; Liu, J. From fidelity to perceptual quality: A semi-supervised approach for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3063–3072.

54. Lim, S.; Kim, W. DSLR: Deep stacked Laplacian restorer for low-light image enhancement. IEEE Trans. Multimed. 2020, 23, 4272–4284.

55. Liu, R.; Ma, L.; Zhang, J.; Fan, X.; Luo, Z. Retinex-inspired unrolling with cooperative prior architecture search for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10561–10570.

56. Zheng, S.; Gupta, G. Semantic-guided zero-shot learning for low-light image/video enhancement. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 581–590.

57. Ma, L.; Ma, T.; Liu, R.; Fan, X.; Luo, Z. Toward fast, flexible, and robust low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5637–5646.

| Dataset | Scale | Synthetic/Real | Paired/Unpaired |
|---|---|---|---|
| EnlightenGAN | 914 low-light images, 1016 normal-light images | Synthetic | Unpaired |
| DICM | 69 images | Real | Unpaired |
| LIME | 10 images | Real | Unpaired |
| MEF | 17 images | Real | Unpaired |
| NPE | 85 images | Real | Unpaired |
| VV | 24 images | Real | Unpaired |

| Method | PSNR | SSIM |
|---|---|---|
| DALE | 27.1563 | 0.7770 |
| DRBN | 29.3280 | 0.6661 |
| DSLR | 28.9918 | 0.9536 |
| EnlightenGAN | 27.5032 | 0.8090 |
| RUAS | 30.8243 | 0.9242 |
| Zero-DCE | 31.1470 | 0.9097 |
| SGZ | 29.8204 | 0.8213 |
| SCI | 31.4300 | 0.9501 |
| Ours | 31.5482 | 0.9504 |
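The PSNR column is the standard peak signal-to-noise ratio, PSNR = 10 · log10(MAX² / MSE), in dB; higher is better. Below is a minimal sketch for two equally sized images flattened to sequences, assuming 8-bit data (MAX = 255) by default. The paper's exact evaluation settings (color space, data range, cropping) are not stated in this excerpt, and SSIM is omitted because its windowed computation does not fit a short example.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], test: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two same-length pixel sequences."""
    if len(reference) != len(test):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    ref = [0, 0, 0, 0]
    out = [0, 0, 0, 255]  # one pixel off by the full 8-bit range
    print(round(psnr(ref, out), 4))  # MSE = 255**2 / 4, so PSNR = 10 * log10(4)
```

For images normalized to [0, 1], pass `max_value=1.0`; using the wrong data range shifts every PSNR value by a constant, so it must match across all compared methods.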

| Method | DICM | LIME | MEF | NPE | VV | Average |
|---|---|---|---|---|---|---|
| DALE | 3.4665 | 2.9993 | 3.7530 | 3.5869 | 3.3174 | 3.4246 |
| DRBN | 3.8223 | 3.6287 | 4.5318 | 4.6310 | 4.1312 | 4.1490 |
| DSLR | 3.2065 | 3.8683 | 3.4878 | 3.6515 | 3.5184 | 3.5465 |
| EnlightenGAN | 3.4095 | 2.9206 | 2.8039 | 3.5371 | 3.6619 | 3.2666 |
| RUAS | 3.1667 | 3.3964 | 4.0510 | 3.8972 | 6.1626 | 4.1348 |
| Zero-DCE | 2.6201 | 3.1936 | 3.5447 | 3.1849 | 3.4984 | 3.2083 |
| SGZ | 2.6263 | 3.5883 | 3.8319 | 3.8319 | 3.6173 | 3.4991 |
| SCI | 3.1113 | 3.6580 | 3.1131 | 3.2434 | 4.2304 | 3.4712 |
| Ours | 3.2299 | 3.9004 | 3.8583 | 2.8435 | 3.2824 | 3.4229 |
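The Average column is the arithmetic mean of the five per-dataset scores (DICM, LIME, MEF, NPE, VV). The short script below recomputes it from the table's per-dataset values as a consistency check; the dictionary simply copies those values, and no new measurements are involved.

```python
from statistics import mean

# Per-dataset scores copied from the table (order: DICM, LIME, MEF, NPE, VV).
SCORES = {
    "DALE": [3.4665, 2.9993, 3.7530, 3.5869, 3.3174],
    "DRBN": [3.8223, 3.6287, 4.5318, 4.6310, 4.1312],
    "DSLR": [3.2065, 3.8683, 3.4878, 3.6515, 3.5184],
    "EnlightenGAN": [3.4095, 2.9206, 2.8039, 3.5371, 3.6619],
    "RUAS": [3.1667, 3.3964, 4.0510, 3.8972, 6.1626],
    "Zero-DCE": [2.6201, 3.1936, 3.5447, 3.1849, 3.4984],
    "SGZ": [2.6263, 3.5883, 3.8319, 3.8319, 3.6173],
    "SCI": [3.1113, 3.6580, 3.1131, 3.2434, 4.2304],
    "Ours": [3.2299, 3.9004, 3.8583, 2.8435, 3.2824],
}

# Mean over the five datasets, rounded to the table's four decimal places.
averages = {method: round(mean(values), 4) for method, values in SCORES.items()}

for method, avg in averages.items():
    print(f"{method:>12}: {avg:.4f}")
```

Comparing its output line by line with the Average column is a quick way to catch transcription errors in a results table.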

| Method | EnlightenGAN | DICM | LIME | MEF | NPE | VV |
|---|---|---|---|---|---|---|
| DALE | 0.2409 | 0.2605 | 0.2898 | 0.2562 | 0.2304 | 0.2876 |
| DRBN | 0.3069 | 0.3126 | 0.3689 | 0.2980 | 0.2220 | 0.2403 |
| DSLR | 0.2594 | 0.2199 | 0.3053 | 0.2095 | 0.2686 | 0.2143 |
| EnlightenGAN | 0.2453 | 0.2216 | 0.2449 | 0.2475 | 0.2234 | 0.2526 |
| RUAS | 0.1570 | 0.2269 | 0.2230 | 0.2559 | 0.2314 | 0.1544 |
| Zero-DCE | 0.2708 | 0.1842 | 0.2235 | 0.2629 | 0.2472 | 0.2450 |
| SGZ | 0.1450 | 0.1993 | 0.2649 | 0.2513 | 0.1565 | 0.2133 |
| SCI | 0.2322 | 0.2318 | 0.1970 | 0.2198 | 0.1919 | 0.2125 |
| Ours | 0.1831 | 0.2207 | 0.2161 | 0.2398 | 0.2161 | 0.2133 |

| Method | FLOPs |
|---|---|
| DALE | 3017.28256 |
| DRBN | 339.30451 |
| DSLR | 188.00941 |
| EnlightenGAN | 526.23416 |
| RUAS | 6.85140 |
| Zero-DCE | 166.09444 |
| SGZ | 5.35141 |
| SCI | 3155.95162 |
| Ours | 2300.29894 |

| Adaptive Feature Attention | Style Region Loss | Normalization Function | PSNR | SSIM |
|---|---|---|---|---|
| × | √ | √ | 30.2435 | 0.9417 |
| √ | × | √ | 30.1493 | 0.8751 |
| √ | √ | × | 30.8110 | 0.9485 |
| √ | √ | √ | 31.5482 | 0.9504 |
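Each ablation row can be read as a drop in PSNR and SSIM relative to the full model (last row). The snippet below computes those differences directly from the reported numbers; it is plain arithmetic on the table values and adds no new results. The row labels in the dictionary are descriptive names chosen for this sketch.

```python
# Ablation results copied from the table: (PSNR in dB, SSIM).
FULL = (31.5482, 0.9504)
ABLATIONS = {
    "without adaptive feature attention": (30.2435, 0.9417),
    "without style region loss": (30.1493, 0.8751),
    "without normalization function": (30.8110, 0.9485),
}

# Drop relative to the full model, rounded to the table's precision.
drops = {
    name: (round(FULL[0] - psnr, 4), round(FULL[1] - ssim, 4))
    for name, (psnr, ssim) in ABLATIONS.items()
}

for name, (d_psnr, d_ssim) in drops.items():
    print(f"{name}: -{d_psnr:.4f} dB PSNR, -{d_ssim:.4f} SSIM")
```

By PSNR, removing the style region loss costs the most (1.3989 dB), followed by adaptive feature attention (1.3047 dB) and the normalization function (0.7372 dB).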

| Identity-Consistency Loss | Cycle-Consistency Loss | Style Region Loss | PSNR | SSIM |
|---|---|---|---|---|
| × | √ | √ | 27.8482 | 0.53951 |
| √ | × | √ | 28.7930 | 0.7182 |
| √ | √ | × | 30.1493 | 0.8451 |
| √ | √ | √ | 31.5482 | 0.9504 |
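The identity-consistency and cycle-consistency terms ablated above come from the cycle-consistent adversarial framework of Zhu et al. [45]. This excerpt does not give CGAAN's exact formulation, loss weights, or its style region loss, so the sketch below shows only the standard CycleGAN forms: L_cyc = ‖F(G(x)) - x‖₁ + ‖G(F(y)) - y‖₁ and L_id = ‖G(y) - y‖₁ + ‖F(x) - x‖₁, computed as per-pixel mean absolute error on flattened images. Here G (low-light to normal-light) and F (normal-light to low-light) are hypothetical toy callables, not trained generators.

```python
from typing import Callable, List

Image = List[float]  # flattened image, values in [0, 1]
Mapping = Callable[[Image], Image]


def l1(a: Image, b: Image) -> float:
    """Mean absolute error between two same-length flattened images."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(G: Mapping, F: Mapping, x: Image, y: Image) -> float:
    """x -> G -> F should return x, and y -> F -> G should return y."""
    return l1(F(G(x)), x) + l1(G(F(y)), y)


def identity_loss(G: Mapping, F: Mapping, x: Image, y: Image) -> float:
    """G should leave normal-light y unchanged; F should leave low-light x unchanged."""
    return l1(G(y), y) + l1(F(x), x)


def toy_G(img: Image) -> Image:
    """Hypothetical 'brightening' generator: doubles intensities, clipped at 1."""
    return [min(1.0, 2.0 * v) for v in img]


def toy_F(img: Image) -> Image:
    """Hypothetical 'darkening' generator: halves intensities."""
    return [0.5 * v for v in img]


if __name__ == "__main__":
    x_low = [0.1, 0.2, 0.3]     # toy low-light image
    y_normal = [0.6, 0.8, 1.0]  # toy normal-light image
    print(cycle_consistency_loss(toy_G, toy_F, x_low, y_normal))
    print(identity_loss(toy_G, toy_F, x_low, y_normal))
```

With these toy mappings the cycle loss is 0 because G and F invert each other on these values, yet the identity loss is about 0.3 because each mapping still changes images already in its target domain. The two terms therefore constrain different failure modes, which is consistent with both being kept in the full model.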

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhen, T.; Peng, D.; Li, Z. Cyclic Generative Attention-Adversarial Network for Low-Light Image Enhancement. Sensors 2023, 23, 6990. https://doi.org/10.3390/s23156990
