Facial Expressions Track Depressive Symptoms in Old Age

Abstract

1. Introduction

2. Methods

2.1. Participants

2.2. Facial Expression Task

2.3. Data Acquisition

2.4. Korean Version of the Beck Depression Inventory-II

2.5. Statistical Analysis

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Variable | Mean (SD)/Frequency (Proportion) |
|---|---|
| Age | 71.98 (6.11) |
| Sex (male:female) | 24:35 |
| Education (years) | 9.82 (4.03) |
| MMSE | 26.17 (3.12) |
| K-BDI-II | 18.37 (12.71) |

| Posed AU | Spontaneous AU | ||||

|---|---|---|---|---|---|

| B(SE) | p-Value | B(SE) | p-Value | ||

| Age | −0.50 (0.28) | 0.081 | Age | −0.37 (0.27) | 0.179 |

| Sex | −3.05 (4.21) | 0.472 | Sex | −3.50 (4.12) | 0.400 |

| Education | −0.17 (0.44) | 0.709 | Education | −0.22 (0.44) | 0.619 |

| MMSE | −1.01 (0.6) | 0.100 | MMSE | −1.06 (0.59) | 0.077 |

| P-PC1 | 0.28 (0.52) | 0.585 | S-PC1 | −0.16 (0.42) | 0.706 |

| P-PC2 | −1.06 (0.75) | 0.164 | S-PC2 | −0.39 (0.73) | 0.594 |

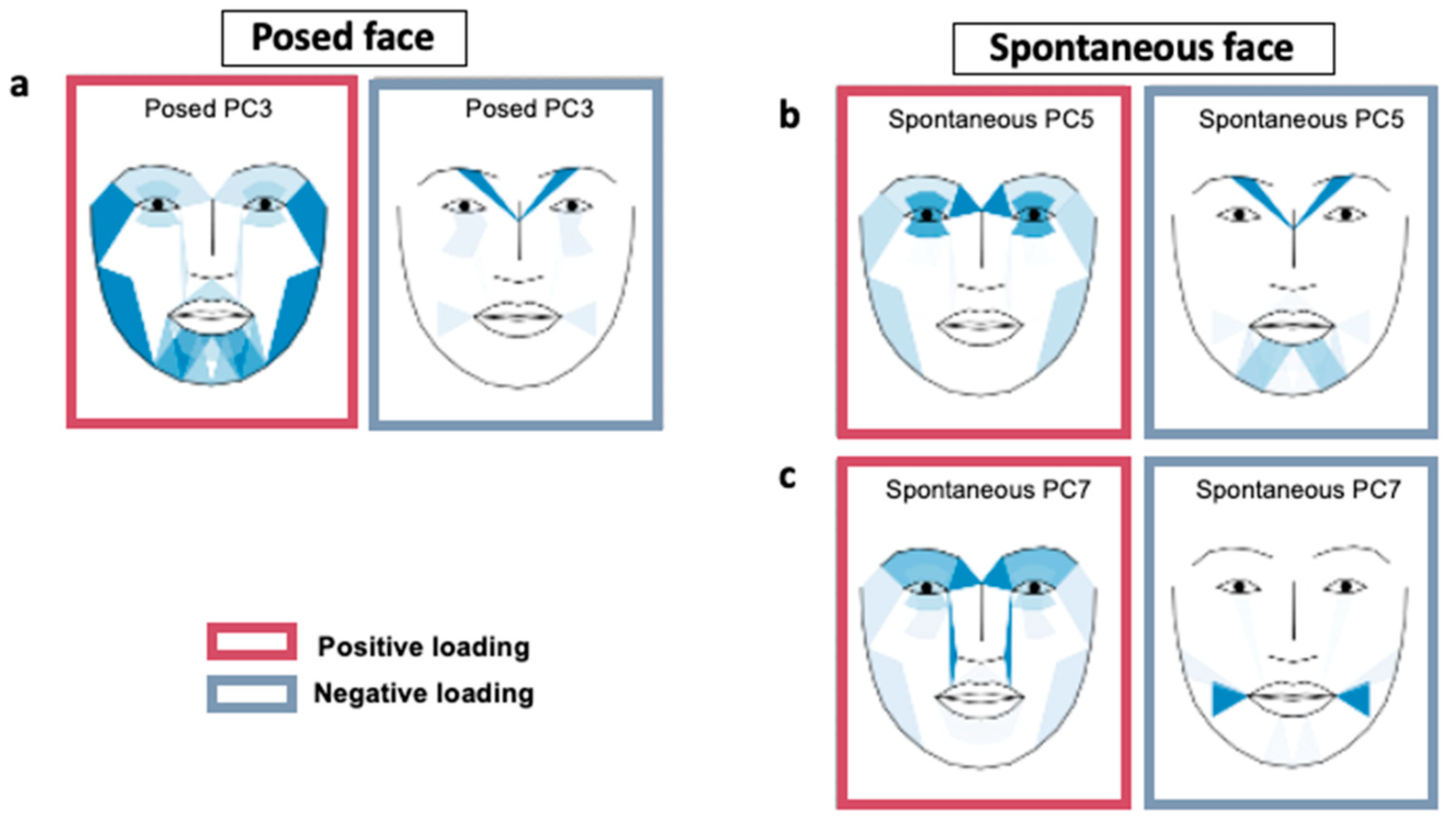

| P-PC3 | 2.09 (0.87) | 0.021 | S-PC3 | 0.57 (0.79) | 0.474 |

| P-PC4 | −0.04 (0.95) | 0.969 | S-PC4 | 0.69 (0.87) | 0.436 |

| P-PC5 | 1.64 (1.07) | 0.134 | S-PC5 | 2.73 (0.94) | 0.006 |

| P-PC6 | 2.06 (1.06) | 0.057 | S-PC6 | −0.71 (0.98) | 0.474 |

| P-PC7 | 0.80 (1.13) | 0.483 | S-PC7 | 2.34 (1.02) | 0.027 |

| P-PC8 | −0.65 (1.39) | 0.641 | S-PC8 | 0.24 (1.19) | 0.842 |

| Emotion Condition | Posed: Number of PCs | Posed: R-Squared Change | Spontaneous: Number of PCs | Spontaneous: R-Squared Change |
|---|---|---|---|---|
| All emotions | 8 | 0.207 | 8 | 0.214 |
| Neutral | 2 | 0.038 | NA | NA |
| Fear | 2 | 0.067 | 2 | 0.033 |
| Disgust | 2 | 0.067 | 2 | 0.016 |
| Anger | 3 | 0.039 | 2 | 0.014 |
| Sad | 1 | 0.038 | 1 | 0.003 |
| Surprise | 1 | 0.030 | 1 | 0.028 |
| Happy | 1 | 0.027 | 2 | 0.000 |
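The "R-Squared Change" column in the table above can be read as the gain in explained variance when a block of principal-component (PC) scores is added to a covariates-only regression model. The sketch below illustrates that calculation under this assumption. It uses synthetic data (59 simulated participants, two covariates, two PC scores), and the helper names `solve_linear_system` and `r_squared` are hypothetical; it is not the authors' analysis pipeline.

```python
import random


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Build the augmented matrix so row operations act on both sides.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def r_squared(predictors, y):
    """R^2 of an ordinary least-squares fit that includes an intercept."""
    rows = [[1.0] + list(p) for p in predictors]  # prepend intercept column
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    beta = solve_linear_system(xtx, xty)  # normal equations
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Synthetic data only (assumption): not the study's measurements.
rng = random.Random(0)
n_participants = 59
covariates = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(n_participants)]
pc_scores = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(n_participants)]
outcome = [
    0.5 * c[0] + 0.8 * p[1] + rng.gauss(0, 1)
    for c, p in zip(covariates, pc_scores)
]

r2_base = r_squared(covariates, outcome)  # covariates only
r2_full = r_squared([c + p for c, p in zip(covariates, pc_scores)], outcome)
r2_change = r2_full - r2_base  # variance explained by the PC block
print(f"base R^2={r2_base:.3f}, full R^2={r2_full:.3f}, change={r2_change:.3f}")
```

Because the full model nests the baseline model, the change cannot be negative; a value near zero, such as the spontaneous "Happy" row, would mean the added PCs explain almost no additional variance.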

| AU | Description | Location | PAU PC3 | SAU PC5 | SAU PC7 |
|---|---|---|---|---|---|
| AU01 | Raised inner brow | Upper | 0.036 | 0.156 | 0.222 |
| AU02 | Raised outer brow | Upper | 0.073 | 0.071 | 0.171 |
| AU04 | Lowered brow | Upper | −0.381 | −0.205 | −0.011 |
| AU06 | Raised cheek | Upper | −0.128 | 0.019 | 0.071 |
| AU17 | Raised chin | Upper | 0.097 | −0.041 | −0.077 |
| AU45 | Blinking | Upper | 0.610 | 0.281 | 0.317 |
| AU05 | Raised upper lid | Lower | −0.011 | −0.039 | 0.072 |
| AU07 | Tight lid | Lower | 0.081 | 0.133 | 0.117 |
| AU09 | Wrinkled nose | Lower | 0.067 | 0.032 | 0.225 |
| AU10 | Raised upper lip | Lower | −0.045 | 0.008 | −0.057 |
| AU12 | Pulled lip corner | Lower | −0.010 | 0.014 | −0.118 |
| AU14 | Dimpled | Lower | −0.143 | −0.053 | −0.371 |
| AU15 | Depressed lip corner | Lower | 0.103 | −0.080 | 0.007 |
| AU20 | Stretched lip | Lower | 0.081 | −0.040 | 0.051 |
| AU23 | Tight lip | Lower | 0.151 | −0.133 | −0.005 |
| AU25 | Parted lips | Lower | 0.077 | −0.006 | 0.088 |
| AU26 | Dropped jaw | Lower | 0.150 | 0.069 | 0.074 |
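To make the table above easier to read, the sketch below ranks action units (AUs) by the absolute size of their values in the "PAU PC3" column and forms a simple weighted-sum score. It assumes those values act as component weights applied to standardized AU intensities. The function names and the example participant are hypothetical, and the authors' actual scoring procedure may differ.

```python
# Values copied from the "PAU PC3" column of the table above.
PAU_PC3 = {
    "AU01": 0.036, "AU02": 0.073, "AU04": -0.381, "AU06": -0.128,
    "AU17": 0.097, "AU45": 0.610, "AU05": -0.011, "AU07": 0.081,
    "AU09": 0.067, "AU10": -0.045, "AU12": -0.010, "AU14": -0.143,
    "AU15": 0.103, "AU20": 0.081, "AU23": 0.151, "AU25": 0.077,
    "AU26": 0.150,
}


def top_contributors(weights, k=3):
    """Return the k AUs with the largest absolute weight."""
    return sorted(weights, key=lambda au: abs(weights[au]), reverse=True)[:k]


def component_score(weights, z_scores):
    """Weighted sum of standardized AU values; AUs not supplied count as 0."""
    return sum(w * z_scores.get(au, 0.0) for au, w in weights.items())


print(top_contributors(PAU_PC3))  # ['AU45', 'AU04', 'AU23']

# Hypothetical participant: blinks more (+1 SD), lowers the brow less (-1 SD).
example_z = {"AU45": 1.0, "AU04": -1.0}
print(round(component_score(PAU_PC3, example_z), 3))  # 0.991
```

Under this reading, a high score on this component mainly reflects more blinking (AU45) combined with less brow lowering (AU04), since those two AUs carry the largest weights of opposite sign.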

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, H.; Kwak, S.; Yoo, S.Y.; Lee, E.C.; Park, S.; Ko, H.; Bae, M.; Seo, M.; Nam, G.; Lee, J.-Y. Facial Expressions Track Depressive Symptoms in Old Age. Sensors 2023, 23, 7080. https://doi.org/10.3390/s23167080