Conformer-Based Human Activity Recognition Using Inertial Measurement Units

Abstract

1. Introduction

- This paper proposes a modified Conformer model that utilizes attention mechanisms to process sparse and irregularly sampled multivariate clinical time-series data;

- The model employs a sensor attention unit that is designed for time-series data from various sensor readings, utilizing the multi-head attention mechanism inherent in transformers;

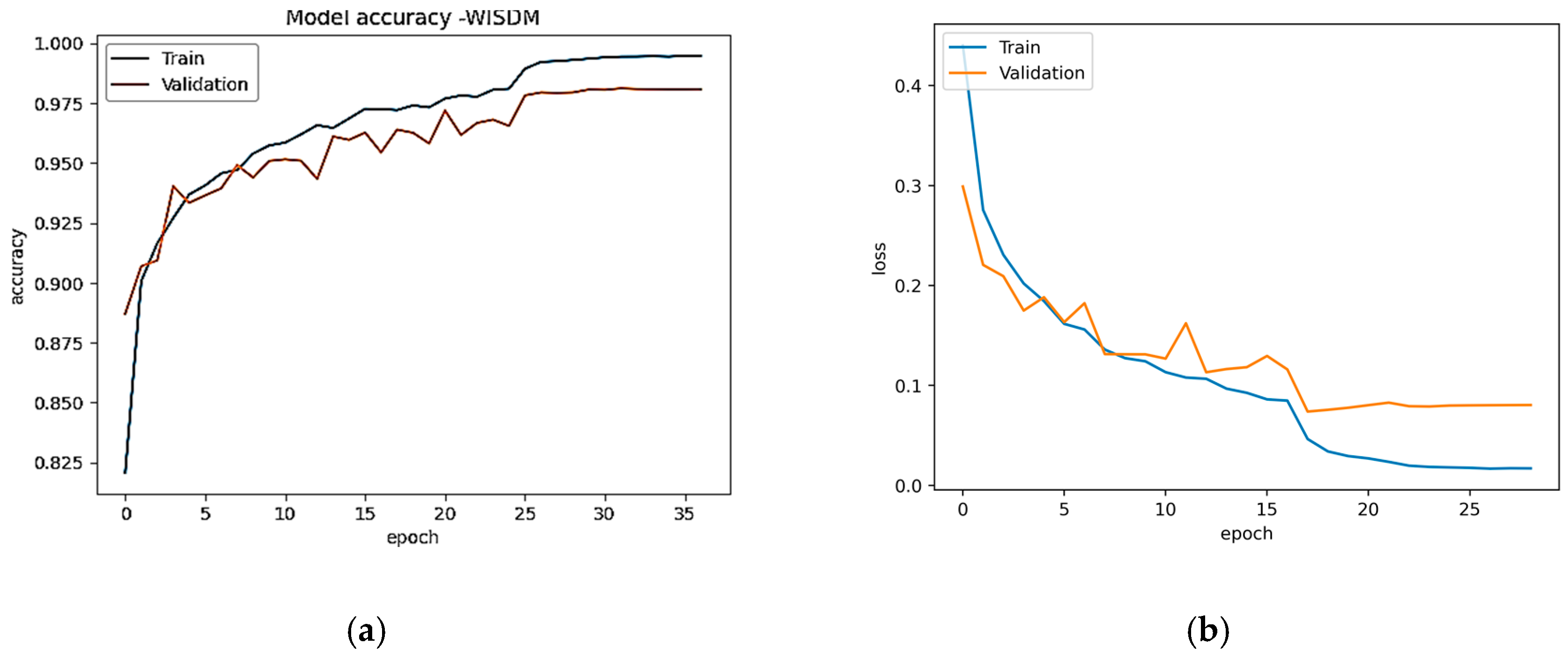

- The proposed model is evaluated on two publicly available activities-of-daily-living (ADL) datasets, USCHAD and WISDM, where it achieves state-of-the-art prediction results, with reported accuracies of 96.0% and 98.2%, respectively.
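To make the sensor attention idea in the second contribution more concrete, the following is a minimal NumPy sketch of multi-head self-attention applied across sensor channels. It is an illustration under stated assumptions, not the authors' implementation: each sensor channel of a window is treated as one token, the projection matrices are random stand-ins for learned parameters, and the names `sensor_attention`, `num_heads`, and `seed` are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def sensor_attention(window, num_heads=2, seed=0):
    """Multi-head self-attention over sensor channels (illustrative only).

    window: array of shape (time_steps, num_sensors).
    Returns the attended window, shape (time_steps, num_sensors), and the
    attention weights, shape (num_heads, num_sensors, num_sensors).
    """
    time_steps, num_sensors = window.shape
    assert time_steps % num_heads == 0, "time_steps must divide evenly into heads"
    head_dim = time_steps // num_heads

    # Assumption: random projections stand in for learned weight matrices.
    rng = np.random.default_rng(seed)
    w_q, w_k, w_v, w_o = (
        rng.normal(scale=time_steps ** -0.5, size=(time_steps, time_steps))
        for _ in range(4)
    )

    # One token per sensor channel: (num_sensors, time_steps).
    tokens = window.T

    def split_heads(x):
        # (num_sensors, time_steps) -> (num_heads, num_sensors, head_dim)
        return x.reshape(num_sensors, num_heads, head_dim).transpose(1, 0, 2)

    q = split_heads(tokens @ w_q)
    k = split_heads(tokens @ w_k)
    v = split_heads(tokens @ w_v)

    # Scaled dot-product attention between every pair of sensor channels.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)
    weights = softmax(scores, axis=-1)
    context = weights @ v  # (num_heads, num_sensors, head_dim)

    # Concatenate the heads and apply the output projection.
    merged = context.transpose(1, 0, 2).reshape(num_sensors, time_steps)
    return (merged @ w_o).T, weights


# Hypothetical window: 8 time steps from 6 inertial channels.
example_window = np.random.default_rng(1).normal(size=(8, 6))
attended, attn_weights = sensor_attention(example_window, num_heads=2)
```

Each row of `attn_weights[h]` sums to one and indicates how strongly a given sensor channel draws on every other channel in head `h`, which is the kind of cross-sensor weighting a sensor attention unit is meant to provide.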

2. Method

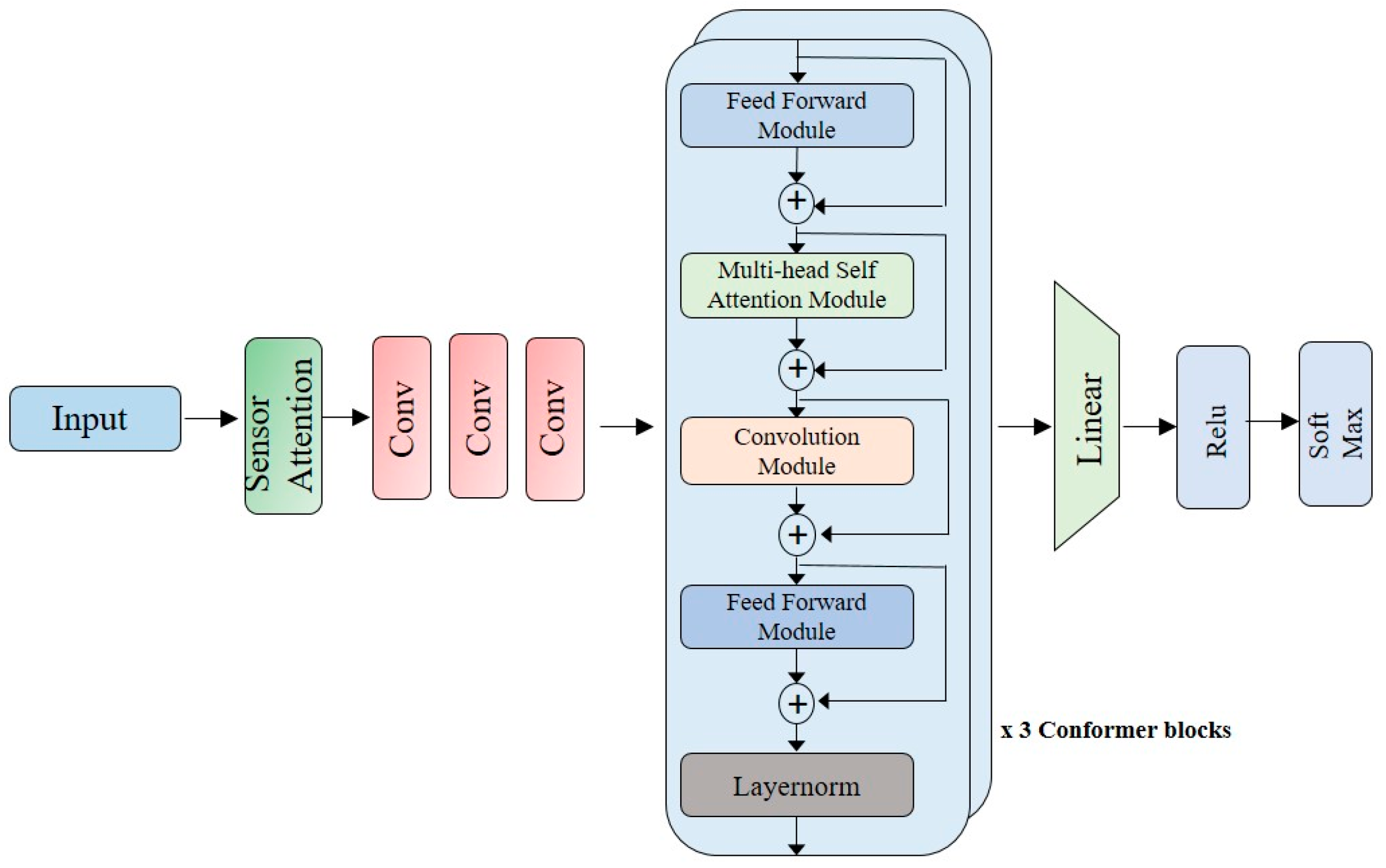

3. Proposed Model

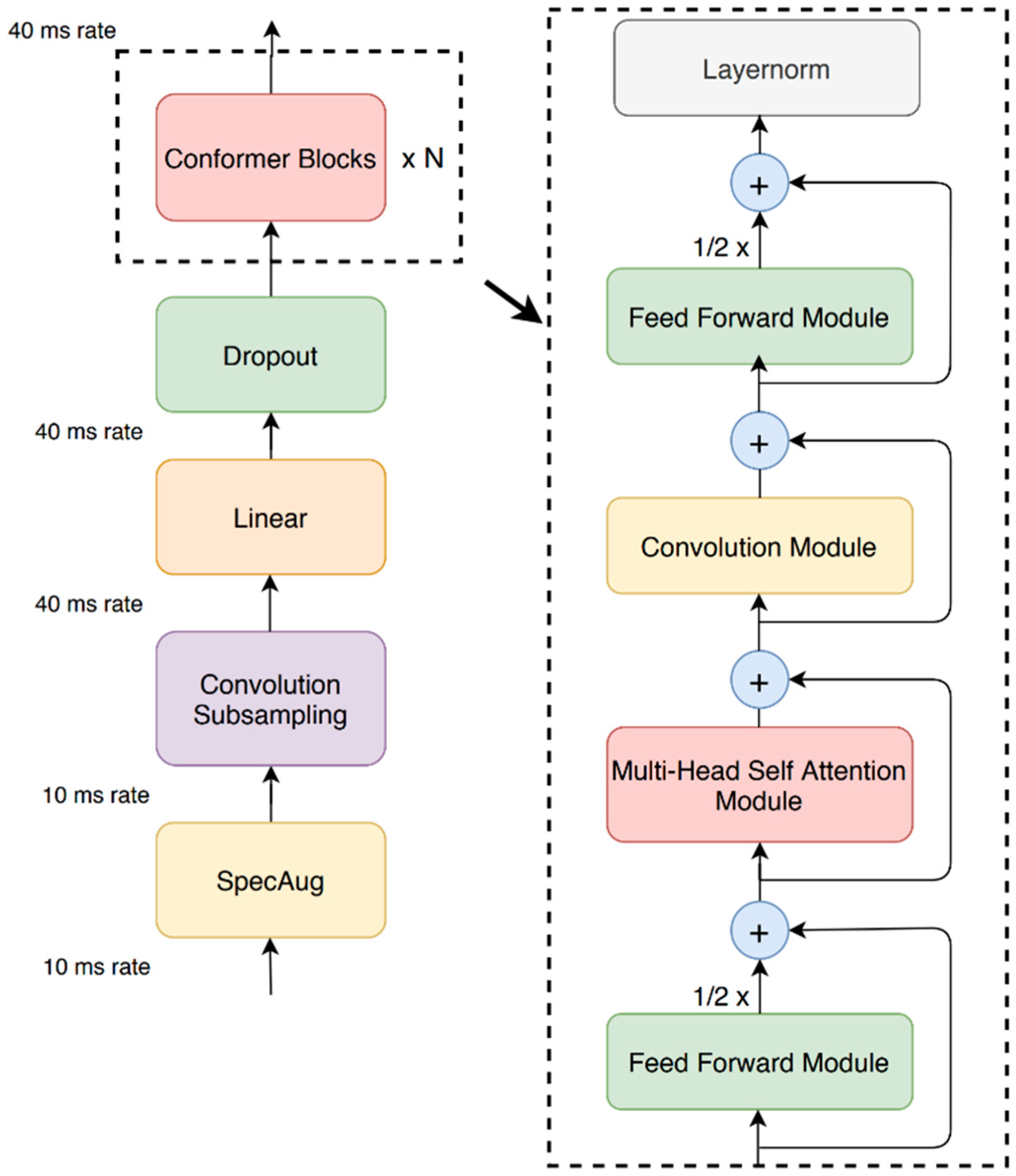

3.1. Conformer

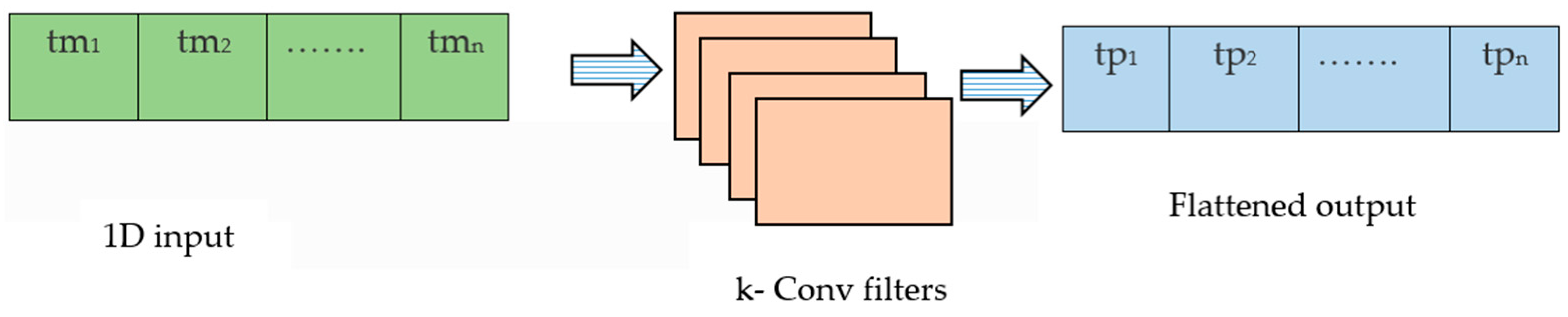

3.2. Conformer Block

3.3. Sensor Attention

3.4. HAR: Online and Offline

3.5. Experimental Setup

3.6. Datasets

3.7. Evaluation Parameters

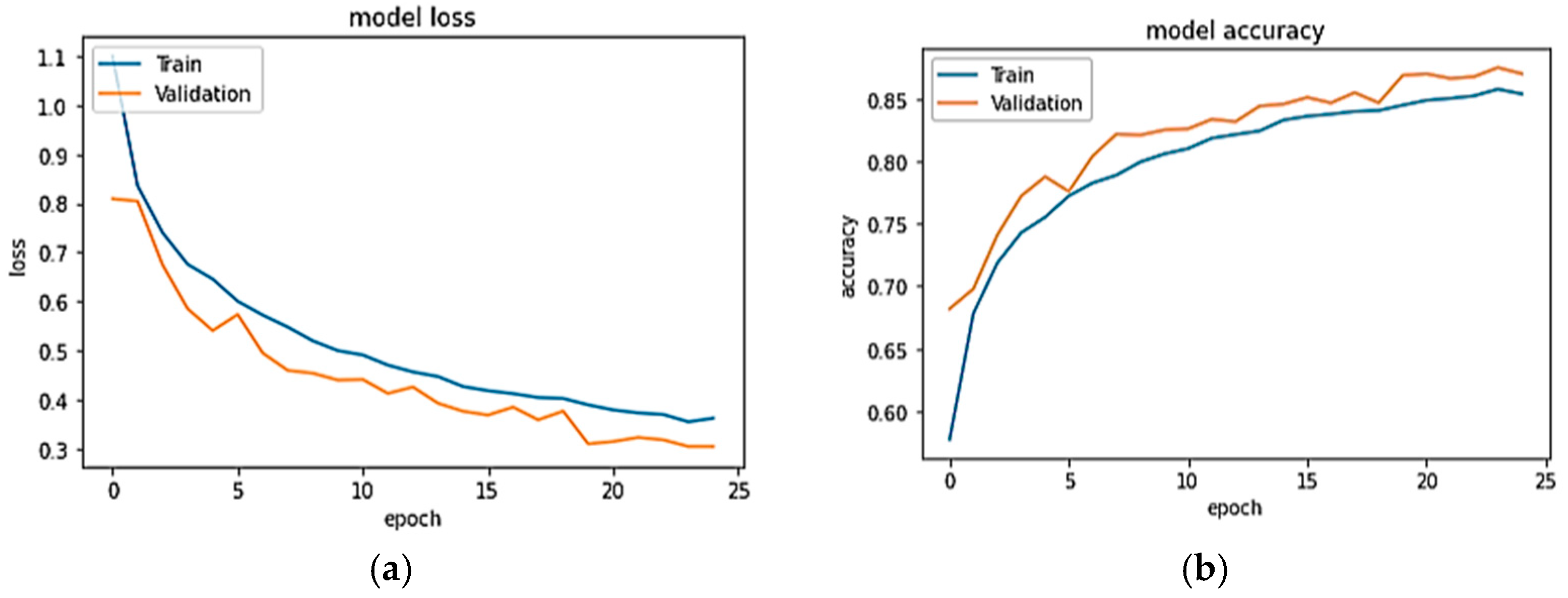

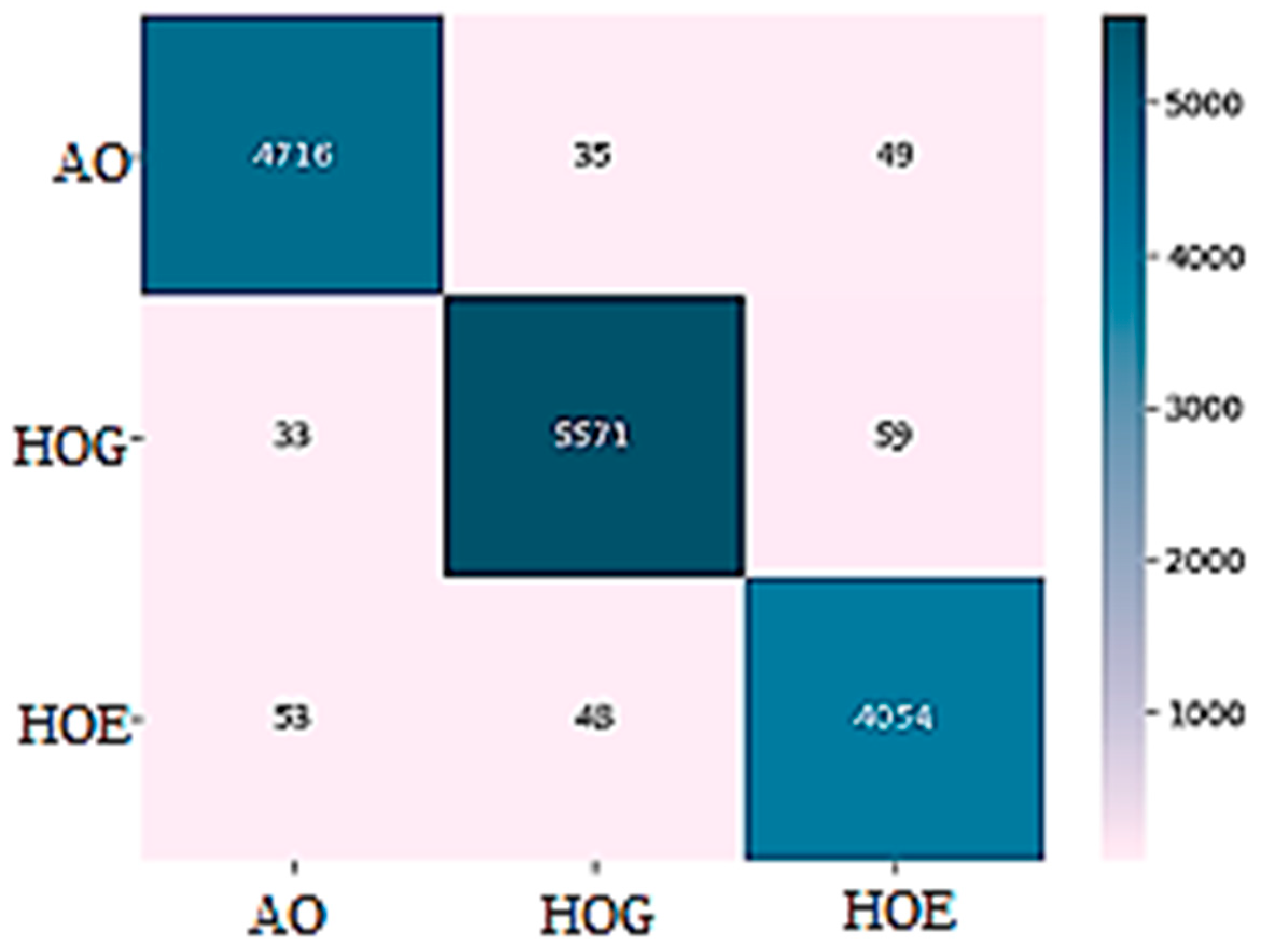

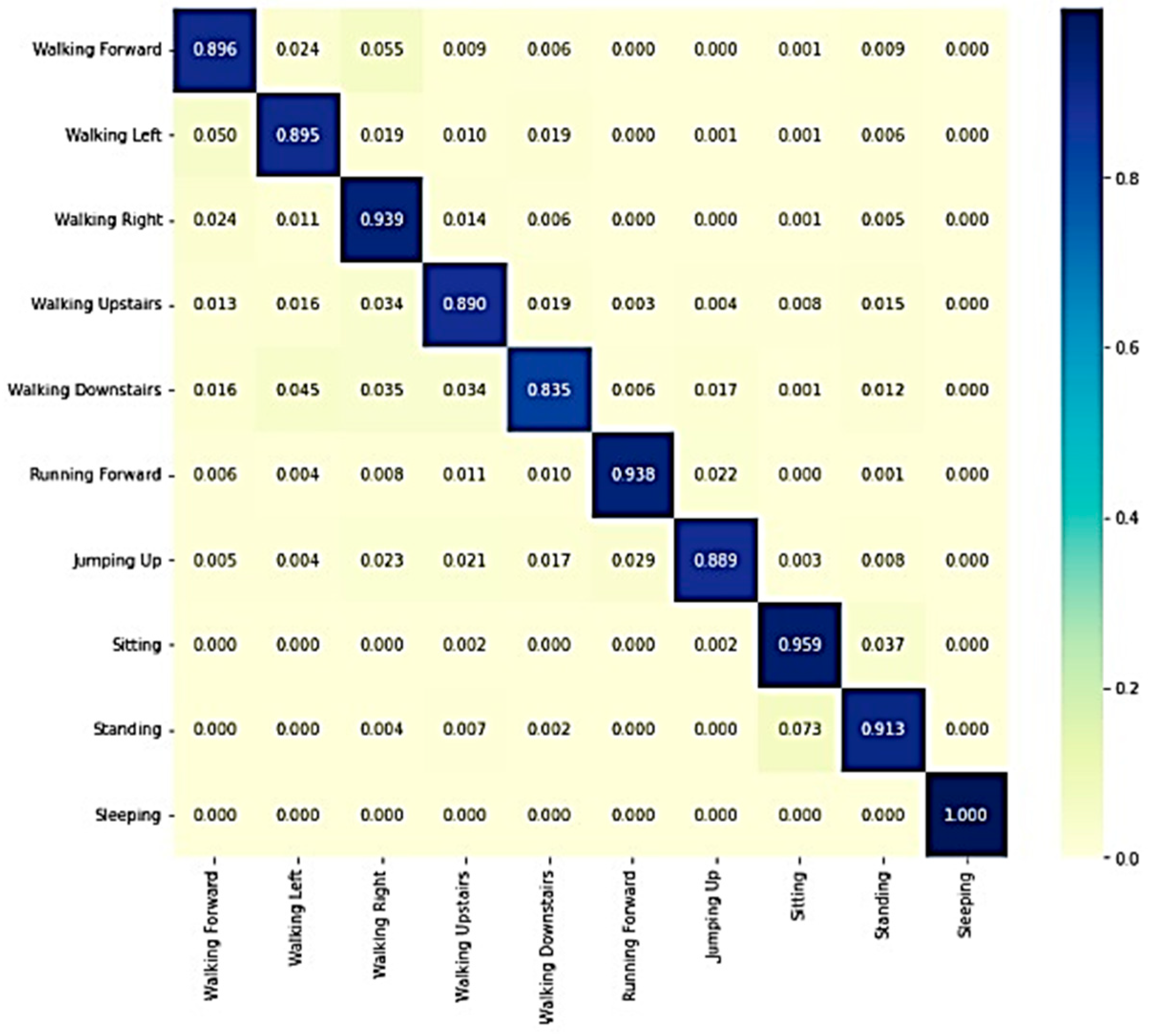

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Activity | Precision | Recall | F1-Score |
|---|---|---|---|
| Walking Forward | 0.92 | 0.94 | 0.93 |
| Walking Left | 0.90 | 0.97 | 0.93 |
| Walking Right | 0.96 | 0.92 | 0.94 |
| Walking Upstairs | 0.98 | 0.93 | 0.95 |
| Walking Downstairs | 0.96 | 0.95 | 0.96 |
| Running Forward | 0.98 | 0.97 | 0.97 |
| Jumping Up | 0.94 | 0.97 | 0.95 |
| Sitting | 0.99 | 0.99 | 0.99 |
| Standing | 0.92 | 0.96 | 0.94 |
| Sleeping | 1.00 | 1.00 | 1.00 |
| Micro avg | 0.96 | 0.96 | 0.96 |
| Macro avg | 0.95 | 0.96 | 0.96 |
| Weighted avg | 0.96 | 0.96 | 0.96 |
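The per-class precision, recall, and F1-score in the table above, together with the macro and weighted averages, follow the standard definitions based on a confusion matrix. The sketch below shows one way to compute them with NumPy. It assumes that rows hold the true labels and columns the predicted labels; the function name `classification_metrics` and the small three-class confusion matrix are hypothetical and are not the paper's data.

```python
import numpy as np


def classification_metrics(confusion):
    """Per-class and averaged metrics from a confusion matrix.

    confusion[i, j] counts samples with true class i predicted as class j.
    """
    confusion = np.asarray(confusion, dtype=float)
    true_pos = np.diag(confusion)
    predicted = confusion.sum(axis=0)  # samples assigned to each class
    support = confusion.sum(axis=1)    # true samples of each class

    # Guard against division by zero for classes never predicted or never seen.
    precision = np.divide(true_pos, predicted,
                          out=np.zeros_like(true_pos), where=predicted > 0)
    recall = np.divide(true_pos, support,
                       out=np.zeros_like(true_pos), where=support > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom,
                   out=np.zeros_like(true_pos), where=denom > 0)

    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        # Macro average: unweighted mean over classes.
        "macro_f1": f1.mean(),
        # Weighted average: mean over classes weighted by class support.
        "weighted_f1": np.average(f1, weights=support),
        # Accuracy: correctly classified samples over all samples.
        "accuracy": true_pos.sum() / confusion.sum(),
    }


# Hypothetical three-class confusion matrix, for illustration only.
toy_confusion = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 10],
]
metrics = classification_metrics(toy_confusion)
```

The macro average treats every activity equally, whereas the weighted average scales each class by its number of true samples, so the two diverge when the classes are imbalanced.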

Smart Watch Results with Conformer for Validation Set

| Class | Precision | Recall | F1-Score |
|---|---|---|---|
| Ambulation-Related | 0.9815 | 0.9803 | 0.9809 |
| Hand-Oriented Eating | 0.9763 | 0.9724 | 0.9744 |
| Hand-Oriented Eating | 0.9828 | 0.9866 | 0.9847 |
| Accuracy | 0.9806 | | |
| Macro avg | 0.9802 | 0.9798 | 0.9800 |
| Weighted avg | 0.9806 | 0.9806 | 0.9806 |

Smart Watch Results with Conformer for Test Set

| Class | Precision | Recall | F1-Score |
|---|---|---|---|
| Ambulation-Related | 0.9819 | 0.9800 | 0.9810 |
| Hand-Oriented Eating | 0.9788 | 0.9758 | 0.9733 |
| Hand-Oriented Eating | 0.9841 | 0.9879 | 0.9860 |
| Accuracy | 0.9819 | | |
| Macro avg | 0.9816 | 0.9813 | 0.9814 |
| Weighted avg | 0.9819 | 0.9819 | 0.9819 |

| Method | USCHAD accuracy (%) | WISDM accuracy (%) |
|---|---|---|
| CNN-LSTM | 90.6 | 88.7 |
| CNN-BiLSTM | 91.8 | 89.2 |
| CNN-GRU | 89.1 | 89.6 |
| CNN-BiGRU | 90.2 | 90.2 |
| Transformer model | 91.2 | 90.8 |
| Proposed method | 96.0 | 98.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Seenath, S.; Dharmaraj, M. Conformer-Based Human Activity Recognition Using Inertial Measurement Units. Sensors 2023, 23, 7357. https://doi.org/10.3390/s23177357
