Saliency-Driven Hand Gesture Recognition Incorporating Histogram of Oriented Gradients (HOG) and Deep Learning

Abstract

1. Introduction

2. Related Work

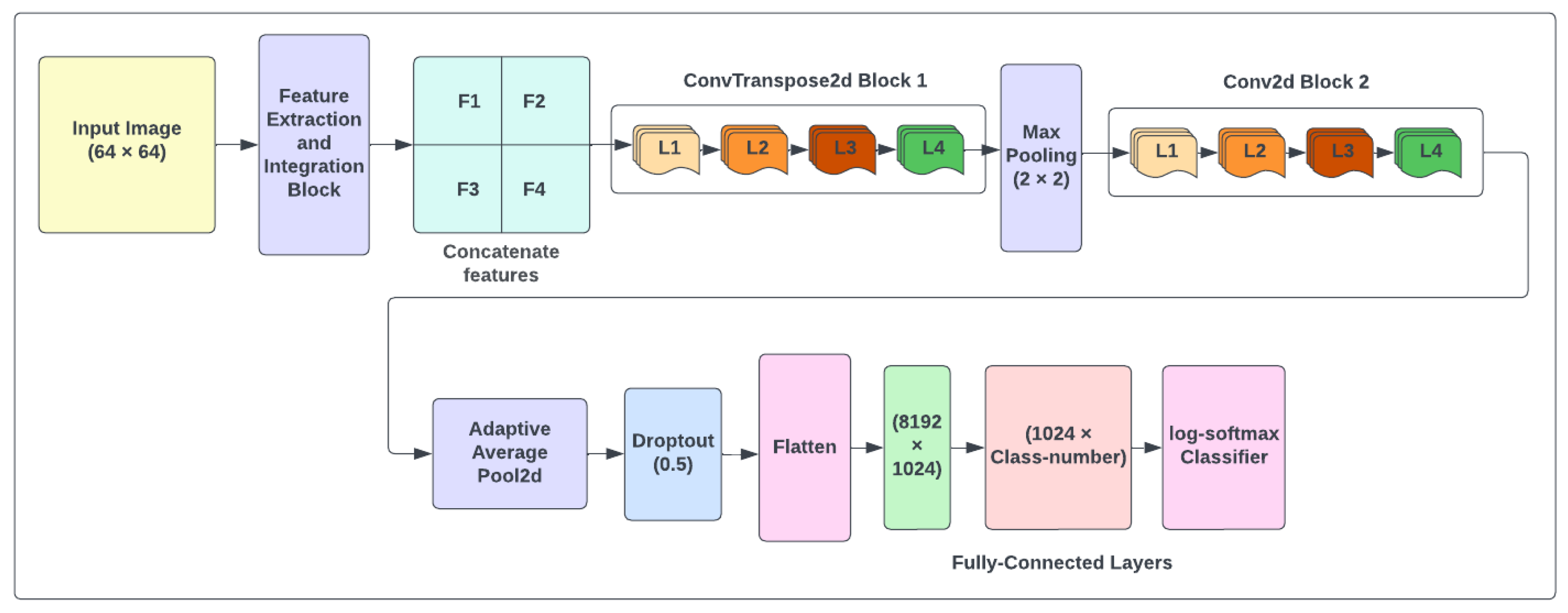

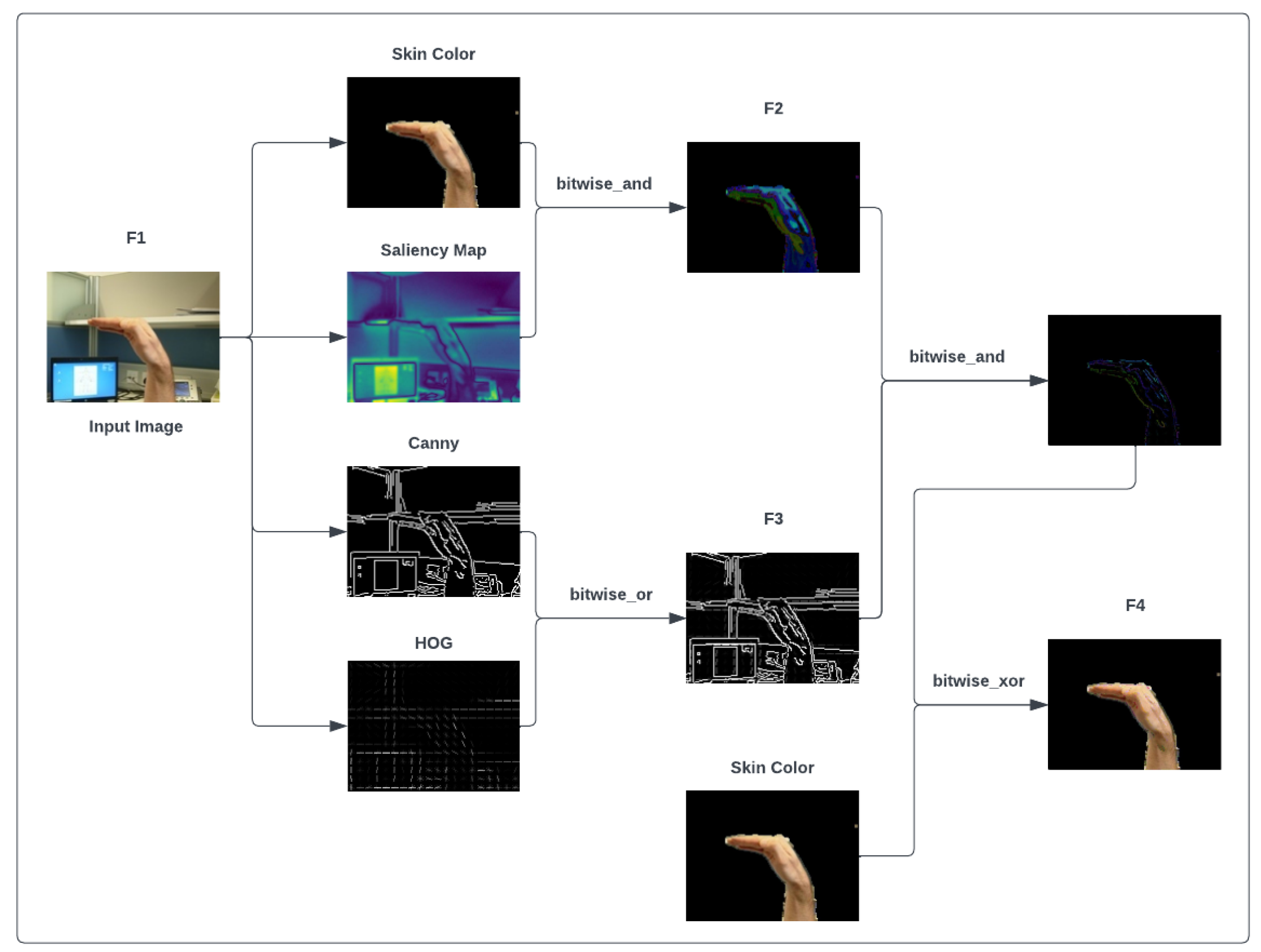

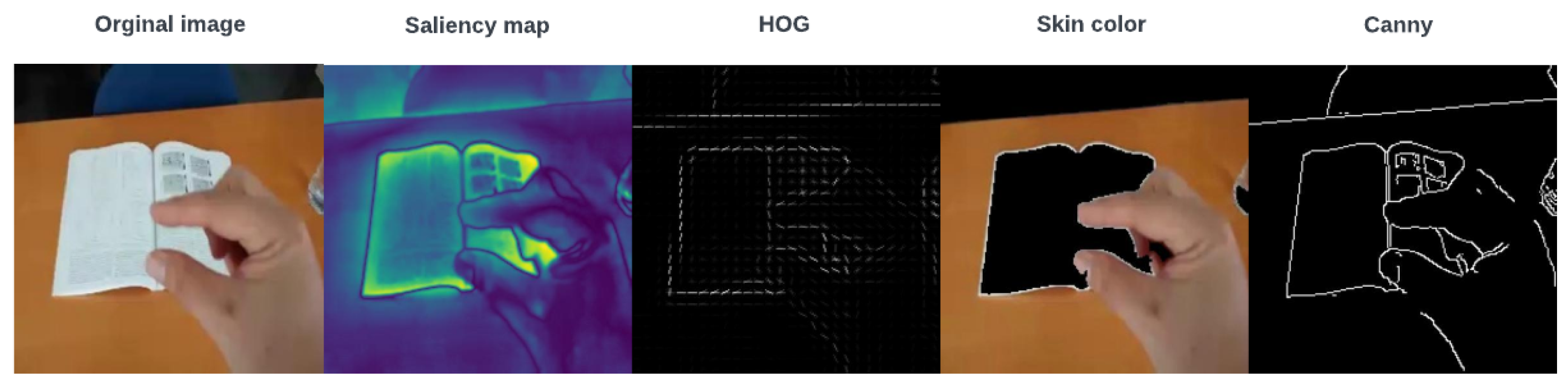

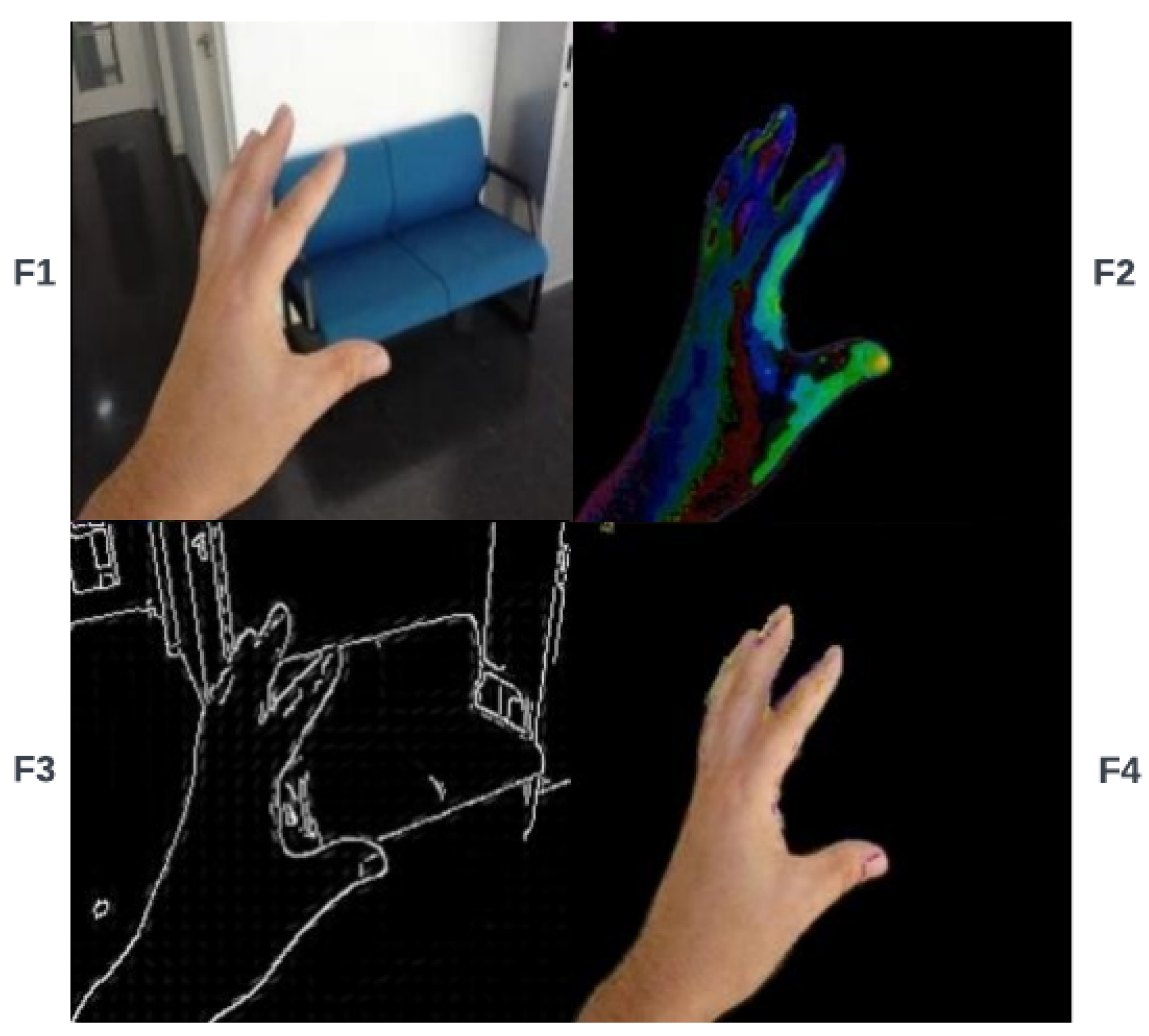

3. Proposed Method

4. Experiments

4.1. Datasets

4.2. Implementation Details

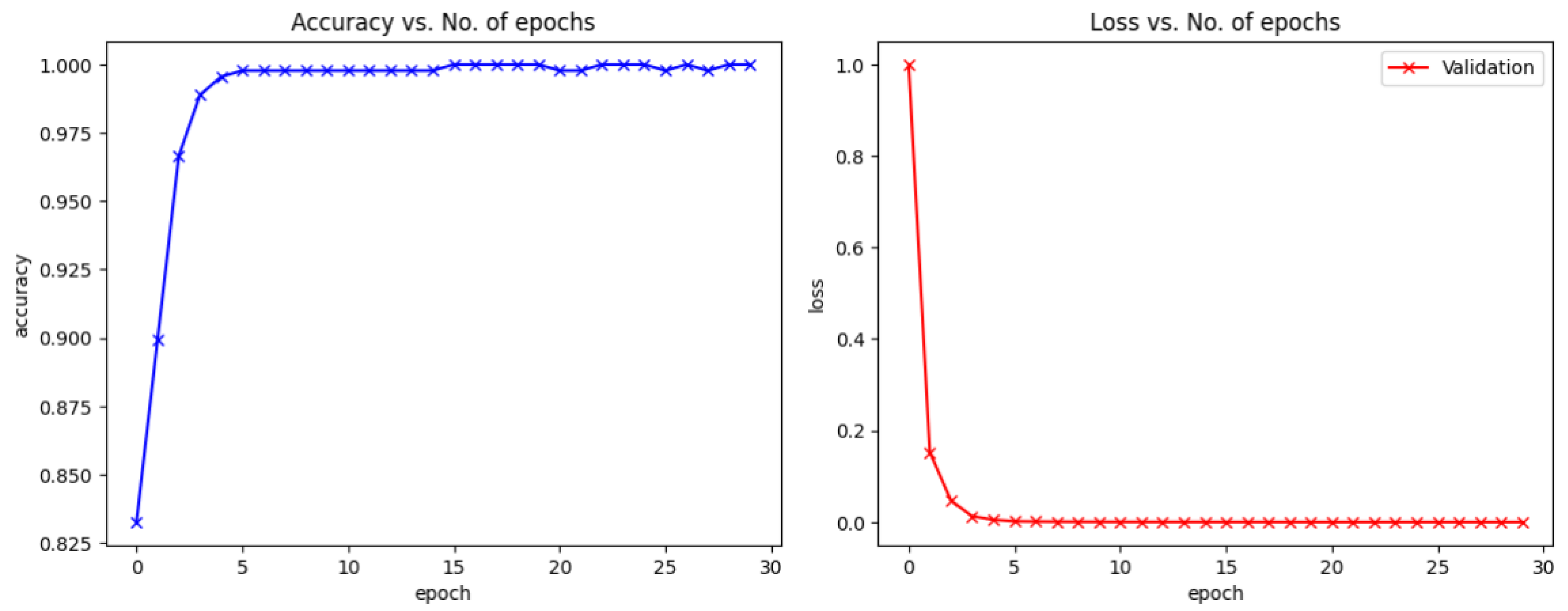

4.3. Analysis

4.4. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. OpenCV Static Saliency Detection

Appendix A.2. Histogram of Oriented Gradients (HOG)

Appendix A.3. Canny Edge Detection

Appendix A.4. Color Space for Skin Color Detection

References

1. Ajallooeian, M.; Borji, A.; Araabi, B.N.; Ahmadabadi, M.N.; Moradi, H. Fast hand gesture recognition based on saliency maps: An application to interactive robotic marionette playing. In Proceedings of the RO-MAN 2009-The 18th IEEE International Symposium on Robot and Human Interactive Communication, Toyama, Japan, 27 September–2 October 2009; IEEE: Piscataway, NJ, USA, 2009.

2. Chuang, Y.; Chen, L.; Chen, G. Saliency-guided improvement for hand posture detection and recognition. Neurocomputing 2014, 133, 404–415.

3. Zhang, Q.; Yang, M.; Kpalma, K.; Zheng, Q.; Zhang, X. Segmentation of hand posture against complex backgrounds based on saliency and skin colour detection. IAENG Int. J. Comput. Sci. 2018, 45, 435–444.

4. Zhang, Q.; Yang, M.; Zheng, Q.; Zhang, X. Segmentation of hand gesture based on dark channel prior in projector-camera system. In Proceedings of the 2017 IEEE/CIC International Conference on Communications in China (ICCC), Qingdao, China, 22–24 October 2017; IEEE: Piscataway, NJ, USA, 2017.

5. Zamani, M.; Kanan, H.R. Saliency based alphabet and numbers of American sign language recognition using linear feature extraction. In Proceedings of the 2014 4th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 29–30 October 2014; IEEE: Piscataway, NJ, USA, 2014.

6. Yin, Y.; Davis, R. Gesture spotting and recognition using salience detection and concatenated hidden markov models. In Proceedings of the 15th ACM on International Conference on Multimodal Interaction, Sydney, Australia, 9–13 December 2013.

7. Schauerte, B.; Stiefelhagen, R. “Look at this!” learning to guide visual saliency in human-robot interaction. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; IEEE: Piscataway, NJ, USA, 2014.

8. Santos, A.; Pedrini, H. Human skin segmentation improved by saliency detection. In Proceedings of the Computer Analysis of Images and Patterns: 16th International Conference, CAIP 2015, Valletta, Malta, 2–4 September 2015; Proceedings, Part II 16. Springer International Publishing: Cham, Switzerland, 2015.

9. Vishwakarma, D.K.; Singh, K. A framework for recognition of hand gesture in static postures. In Proceedings of the 2016 International Conference on Computing, Communication and Automation (ICCCA), Greater Noida, India, 29–30 April 2016; IEEE: Piscataway, NJ, USA, 2016.

10. Li, Y.; Miao, Q.; Tian, K.; Fan, Y.; Xu, X.; Li, R.; Song, J. Large-scale gesture recognition with a fusion of rgb-d data based on the c3d model. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; IEEE: Piscataway, NJ, USA, 2016.

11. Yang, W.; Kong, L.; Wang, M. Hand gesture recognition using saliency and histogram intersection kernel based sparse representation. Multimed. Tools Appl. 2016, 75, 6021–6034.

12. Qi, S.; Zhang, W.; Xu, G. Detecting consumer drones from static infrared images by fast-saliency and HOG descriptor. In Proceedings of the 4th International Conference on Communication and Information Processing, Qingdao, China, 2–4 November 2018.

13. MacDorman, K.F.; Laskar, R.H. Patient Assistance System Based on Hand Gesture Recognition. IEEE Trans. Instrum. Meas. 2023, 72, 5018013.

14. Guo, Z.; Hou, Y.; Xiao, R.; Li, C.; Li, W. Motion saliency based hierarchical attention network for action recognition. Multimed. Tools Appl. 2023, 82, 4533–4550.

15. Xu, C.; Li, Q.; Zhou, M.; Zhou, Q.; Zhou, Y.; Ma, Y. RGB-T salient object detection via CNN feature and result saliency map fusion. Appl. Intell. 2022, 52, 11343–11362.

16. Ma, C.; Wang, A.; Chen, G.; Xu, C. Hand joints-based gesture recognition for noisy dataset using nested interval unscented Kalman filter with LSTM network. Vis. Comput. 2018, 34, 1053–1063.

17. Zhai, G.; Min, X. Perceptual image quality assessment: A survey. Sci. China Inf. Sci. 2020, 63, 1–52.

18. Min, X.; Gu, K.; Zhai, G.; Yang, X.; Zhang, W.; Le Callet, P.; Chen, C.W. Screen content quality assessment: Overview, benchmark, and beyond. ACM Comput. Surv. 2021, 54, 1–36.

19. Min, X.; Gu, K.; Zhai, G.; Liu, J.; Yang, X.; Chen, C.W. Blind quality assessment based on pseudo-reference image. IEEE Trans. Multimed. 2017, 20, 2049–2062.

20. Min, X.; Zhai, G.; Gu, K.; Liu, Y.; Yang, X. Blind image quality estimation via distortion aggravation. IEEE Trans. Broadcast. 2018, 64, 508–517.

21. Min, X.; Ma, K.; Gu, K.; Zhai, G.; Wang, Z.; Lin, W. Unified blind quality assessment of compressed natural, graphic, and screen content images. IEEE Trans. Image Process. 2017, 26, 5462–5474.

22. Shaik, K.B.; Ganesan, P.; Kalist, V.; Sathish, B.S.; Jenitha, J.M.M. Comparative study of skin color detection and segmentation in HSV and YCbCr color space. Procedia Comput. Sci. 2015, 57, 41–48.

23. Saliency API, OpenCV. Available online: https://docs.opencv.org/4.x/d8/d65/group-saliency.html (accessed on 30 November 2022).

24. Sahir, S. Canny Edge Detection Step by Step in Python—Computer Vision. 2019. Available online: https://towardsdatascience.com/Canny-edge-detection-step-by-step-in-python-computer-vision-b49c3a2d8123 (accessed on 7 May 2023).

25. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 1.

26. Tyagi, M. HOG (Histogram of Oriented Gradients). 2021. Available online: https://towardsdatascience.com/hog-histogram-of-oriented-gradients-67ecd887675f (accessed on 7 May 2023).

27. The NUS Hand Posture Dataset-II. (n.d.). Available online: https://www.ece.nus.edu.sg/stfpage/elepv/NUS-HandSet/ (accessed on 29 May 2023).

28. Hand Gestures Dataset. Available online: https://www.dlsi.ua.es/~jgallego/datasets/gestures/ (accessed on 7 May 2023).

29. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32; Curran Associates, Inc.: New York, NY, USA, 2019; pp. 8024–8035. Available online: http://papers.neurips.cc/paper/9015-pytorch-an-imperative-style-high-performance-deep-learning-library.pdf (accessed on 7 May 2023).

30. NVIDIA GeForce RTX 2080 SUPER. Available online: https://www.nvidia.com/en-us/geforce/news/gfecnt/nvidia-geforce-rtx-2080-super-out-now/ (accessed on 7 May 2023).

31. Wand.Image—Image Objects. Available online: https://docs.wand-py.org/en/0.6.2/wand/image.html (accessed on 19 August 2023).

32. Søgaard, J.; Krasula, L.; Shahid, M.; Temel, D.; Brunnström, K.; Razaak, M. Applicability of existing objective metrics of perceptual quality for adaptive video streaming. In Proceedings of the Electronic Imaging, Image Quality and System Performance XIII, San Francisco, CA, USA, 14–18 February 2016.

33. Renza, D.; Martinez, E.; Arquero, A. A new approach to change detection in multispectral images by means of ERGAS index. IEEE Geosci. Remote Sens. Lett. 2012, 10, 76–80.

34. Nasr, M.A.-S.; AlRahmawy, M.F.; Tolba, A.S. Multi-scale structural similarity index for motion detection. J. King Saud-Univ.-Comput. Inf. Sci. 2017, 29, 399–409.

35. Deshpande, R.G.; Ragha, L.L.; Sharma, S.K. Video quality assessment through PSNR estimation for different compression standards. Indones. J. Electr. Eng. Comput. Sci. 2018, 11, 918–924.

36. Li, X.; Jiang, T.; Fan, H.; Liu, S. SAM-IQA: Can Segment Anything Boost Image Quality Assessment? arXiv 2023, arXiv:2307.04455.

37. Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale structural similarity for image quality assessment. In Proceedings of the Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 9–12 November 2003; IEEE: Piscataway, NJ, USA, 2003; Volume 2.

38. Egiazarian, K.; Astola, J.; Ponomarenko, N.; Lukin, V.; Battisti, F.; Carli, M. New full-reference quality metrics based on HVS. In Proceedings of the Second International Workshop on Video Processing and Quality Metrics, Scottsdale, AZ, USA, 22–24 January 2006; Volume 4.

39. Wu, J.; Lin, W.; Shi, G.; Liu, A. Reduced-reference image quality assessment with visual information fidelity. IEEE Trans. Multimed. 2013, 15, 1700–1705.

40. Tan, Y.S.; Lim, K.M.; Tee, C.; Lee, C.P.; Low, C.Y. Convolutional neural network with spatial pyramid pooling for hand gesture recognition. Neural Comput. Appl. 2021, 33, 5339–5351.

41. Bradski, G. The OpenCV library. Dr. Dobb’s J. Softw. Tools Prof. Program. 2000, 25, 120–123.

| Stage | Layer (Type:Depth-Index) | Output Size | Param |
|---|---|---|---|
| Input | - | [−1, 3, 32, 32] | - |
| Block 1 | ConvTranspose2d:1–1 | [−1, 64, 32, 32] | 1792 |
| | BatchNorm2d:1–2 | [−1, 64, 32, 32] | 128 |
| | ReLU:1–3 | [−1, 64, 32, 32] | - |
| | ConvTranspose2d:1–4 | [−1, 64, 32, 32] | 36,928 |
| | BatchNorm2d:1–5 | [−1, 64, 32, 32] | 128 |
| | ReLU:1–6 | [−1, 64, 32, 32] | - |
| | ConvTranspose2d:1–7 | [−1, 64, 32, 32] | 36,928 |
| | BatchNorm2d:1–8 | [−1, 64, 32, 32] | 128 |
| | ReLU:1–9 | [−1, 64, 32, 32] | - |
| | ConvTranspose2d:1–10 | [−1, 64, 32, 32] | 36,928 |
| | BatchNorm2d:1–11 | [−1, 64, 32, 32] | 128 |
| | ReLU:1–12 | [−1, 64, 32, 32] | - |
| | MaxPool2d(2, 2):1–13 | [−1, 64, 16, 16] | - |
| Block 2 | Conv2d:1–14 | [−1, 128, 16, 16] | 73,856 |
| | BatchNorm2d:1–15 | [−1, 128, 16, 16] | 256 |
| | ReLU:1–16 | [−1, 128, 16, 16] | - |
| | Conv2d:1–17 | [−1, 128, 16, 16] | 147,584 |
| | BatchNorm2d:1–18 | [−1, 128, 16, 16] | 256 |
| | ReLU:1–19 | [−1, 128, 16, 16] | - |
| | Conv2d:1–20 | [−1, 128, 16, 16] | 147,584 |
| | BatchNorm2d:1–21 | [−1, 128, 16, 16] | 256 |
| | ReLU:1–22 | [−1, 128, 16, 16] | - |
| | Conv2d:1–23 | [−1, 128, 16, 16] | 147,584 |
| | BatchNorm2d:1–24 | [−1, 128, 16, 16] | 256 |
| | ReLU:1–25 | [−1, 128, 16, 16] | - |
| | AdaptiveAvgPool2d:1–26 | [−1, 128, 8, 8] | - |
| Fully connected layer | flatten | [−1, 8192] | - |
| | Linear(8192, 1024):1–27 | [−1, 1024] | 8,389,632 |
| | ReLU:1–28 | [−1, 1024] | - |
| | Linear(1024, class_number):1–29 | [−1, 10] | 6150 |
| | F.log_softmax | [−1, 10] | - |
| Total params: | 9,026,502 | | |
| Trainable params: | 9,026,502 | | |
| Non-trainable params: | 0 | | |
| Total mult-adds (M): | 255.53 | | |
| Input size (MB): | 0.01 | | |
| Forward/backward pass size (MB): | 6.01 | | |
| Params size (MB): | 34.43 | | |
| Estimated Total Size (MB): | 40.45 | | |
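
The parameter counts in the architecture summary can be cross-checked by hand. The sketch below is only an illustration under stated assumptions: 3×3 kernels with a bias term for every convolutional layer (consistent with the listed 1792 parameters for the first layer), 32-bit floats for the size estimate, and hypothetical helper names that do not come from the paper. Note that the 6150 parameters listed for the final linear layer equal 1024 × 6 + 6, which corresponds to six output classes, whereas the output-size column shows 10; the sketch takes the listed parameter count at face value.

```python
def conv_params(c_in, c_out, k=3):
    """Weights plus one bias per output channel (assumes a k x k kernel).
    The count is the same for Conv2d and ConvTranspose2d."""
    return c_in * c_out * k * k + c_out


def bn_params(channels):
    """BatchNorm2d learns one scale and one shift per channel."""
    return 2 * channels


def linear_params(n_in, n_out):
    """Weight matrix plus bias vector."""
    return n_in * n_out + n_out


# Block 1: four 64-channel convolutions, each followed by BatchNorm2d.
block1 = conv_params(3, 64) + 3 * conv_params(64, 64) + 4 * bn_params(64)

# Block 2: four 128-channel convolutions, each followed by BatchNorm2d.
block2 = conv_params(64, 128) + 3 * conv_params(128, 128) + 4 * bn_params(128)

# Classifier head: 128 * 8 * 8 = 8192 flattened features.
n_classes = 6  # assumption inferred from 6150 = 1024 * 6 + 6
head = linear_params(128 * 8 * 8, 1024) + linear_params(1024, n_classes)

total = block1 + block2 + head
params_mb = total * 4 / 1024 ** 2  # assumes 4 bytes per float32 parameter

print(f"total parameters: {total:,}")
print(f"parameter size:   {params_mb:.2f} MB")
```

Under these assumptions the sum reproduces the reported total of 9,026,502 parameters and the reported parameter size of 34.43 MB.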

| Features | NUS Hand Posture Dataset II | Hand Gesture Dataset |
|---|---|---|
| Original images | 97.27 | 94.50 |
| Canny | 94.92 | 92.96 |
| Saliency | 95.31 | 90.14 |
| Skin color | 96.88 | 95.42 |
| HOG | 97.66 | 92.07 |
| Our proposed features | 99.78 | 97.21 |

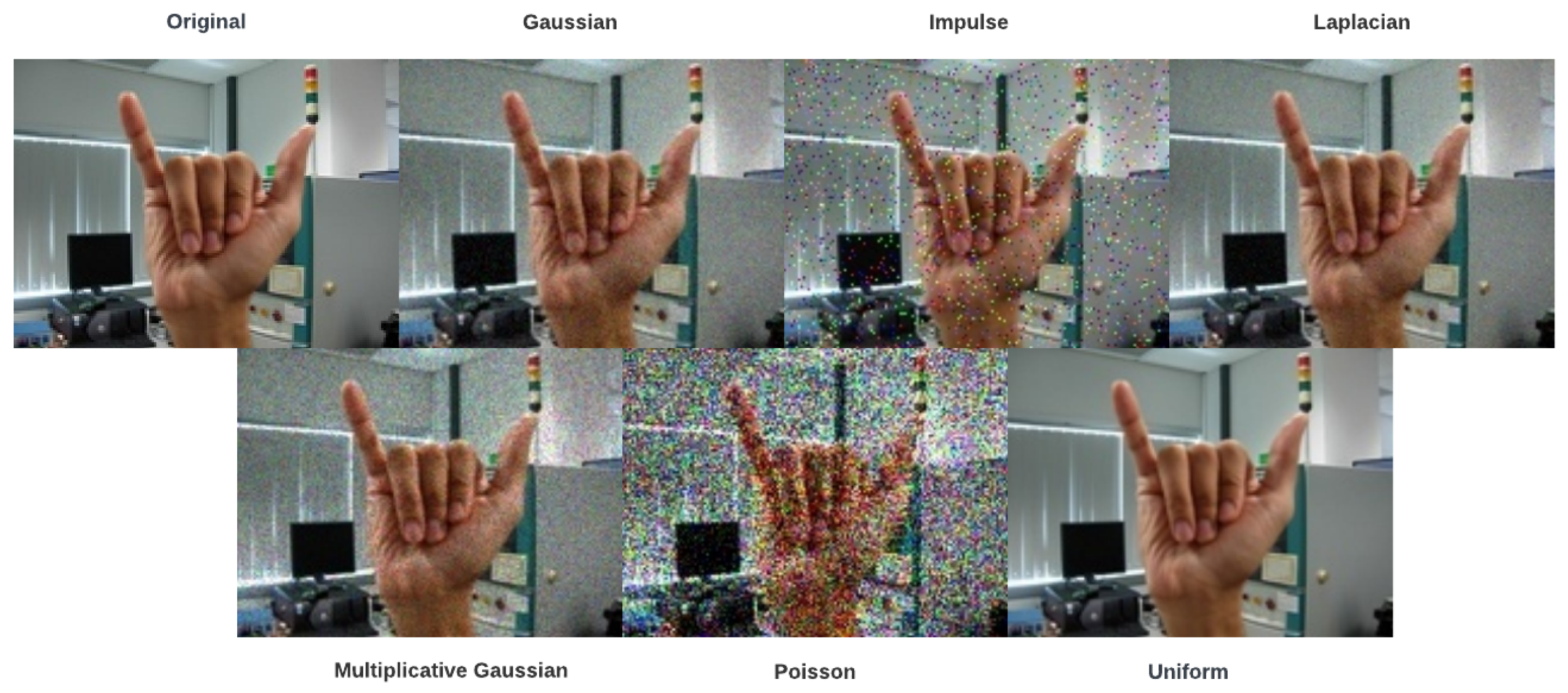

| IQA Metrics | Gaussian | Impulse | Laplacian | Multiplicative-Gaussian | Poisson | Uniform |
|---|---|---|---|---|---|---|
| MSE [32] | 62.22945 | 487.12366 | 37.50542 | 88.54241 | 1494.11719 | 10.68307 |
| ERGAS [33] | 53.44101 | 150.39209 | 41.77666 | 61.73005 | 242.83121 | 22.80766 |
| MSSSIM [34] | 0.97518 | 0.87680 | 0.98504 | 0.97033 | 0.77359 | 0.99655 |
| PSNR [35] | 30.19085 | 21.25441 | 32.38986 | 28.65929 | 16.38696 | 37.84384 |
| RMSE [32] | 7.88856 | 22.07088 | 6.12417 | 9.4097 | 38.65381 | 3.2685 |
| SAM [36] | 0.10161 | 0.26996 | 0.079261 | 0.11953 | 0.44247 | 0.042524 |
| SSIM [37] | 0.86747 | 0.56597 | 0.91653 | 0.84555 | 0.33831 | 0.98446 |
| UQI [38] | 0.97966 | 0.90523 | 0.98762 | 0.98508 | 0.83638 | 0.99668 |
| VIFP [39] | 0.45636 | 0.21459 | 0.53019 | 0.41593 | 0.11206 | 0.74939 |

| IQA Metrics | Gaussian | Impulse | Laplacian | Multiplicative-Gaussian | Poisson | Uniform |
|---|---|---|---|---|---|---|
| MSE [32] | 58.9221 | 103.29271 | 31.5976 | 171.96166 | 1241.64085 | 3.43042 |
| ERGAS [33] | 23.54171 | 31.22135 | 17.26357 | 40.09161 | 107.35299 | 5.73945 |
| MSSSIM [34] | 0.9227 | 0.89105 | 0.9567 | 0.83608 | 0.54435 | 0.99596 |
| PSNR [35] | 30.42802 | 27.99011 | 33.13426 | 25.77649 | 17.19084 | 42.77733 |
| RMSE [32] | 7.67607 | 10.1633 | 5.62117 | 13.11341 | 35.23692 | 1.85214 |
| SAM [36] | 0.053382 | 0.07099 | 0.038891 | 0.09115 | 0.24572 | 0.01163 |
| SSIM [37] | 0.61551 | 0.55536 | 0.74782 | 0.424 | 0.12197 | 0.98187 |
| UQI [38] | 0.99473 | 0.99087 | 0.99711 | 0.9927 | 0.9396 | 0.99954 |
| VIFP [39] | 0.34229 | 0.31202 | 0.41475 | 0.28056 | 0.10697 | 0.73754 |
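
Three of the rows in the two IQA tables are tied together by standard definitions: RMSE is the square root of MSE, and PSNR is 10·log10(peak² / MSE). The sketch below recomputes RMSE and PSNR from a few of the tabulated MSE values. It assumes 8-bit images with a peak value of 255, which is not stated in the tables but is consistent with the reported numbers; the function names are illustrative only.

```python
import math


def rmse_from_mse(mse):
    """Root-mean-square error is the square root of the mean squared error."""
    return math.sqrt(mse)


def psnr_from_mse(mse, peak=255.0):
    """Peak signal-to-noise ratio in dB (peak=255 assumes 8-bit images)."""
    return 10.0 * math.log10(peak ** 2 / mse)


# (noise type, MSE, reported RMSE, reported PSNR) copied from the tables above.
reported = [
    ("Gaussian, first table", 62.22945, 7.88856, 30.19085),
    ("Poisson, first table", 1494.11719, 38.65381, 16.38696),
    ("Uniform, second table", 3.43042, 1.85214, 42.77733),
]

for name, mse, rmse, psnr in reported:
    print(
        f"{name}: RMSE {rmse_from_mse(mse):.5f} (reported {rmse}), "
        f"PSNR {psnr_from_mse(mse):.5f} dB (reported {psnr})"
    )
```

The recomputed values agree with the tabulated ones to within rounding, so MSE, RMSE, and PSNR carry the same information here and rank the noise types identically; the perceptual metrics (SSIM, MSSSIM, UQI, VIFP) are the ones that add independent evidence.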

| Features | NUS Hand Posture Dataset II | Hand Gesture Dataset |
|---|---|---|
| Original images | 94.53 | 94.76 |
| Canny | 80.47 | 88.00 |
| Saliency | 92.97 | 91.07 |
| Skin color | 94.14 | 93.82 |
| HOG | 94.14 | 89.27 |
| Our proposed features | 98.83 | 96.63 |

| Models | Test Accuracy (%) |
|---|---|
| CNN-SPP model [40] | 95.95 |
| Saliency with skin color information [2] | 95.27 |
| Saliency with combined loss function [13] | 98.00 |
| Saliency with skin color information and HOG * | 99.78 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jafari, F.; Basu, A. Saliency-Driven Hand Gesture Recognition Incorporating Histogram of Oriented Gradients (HOG) and Deep Learning. Sensors 2023, 23, 7790. https://doi.org/10.3390/s23187790