Adoption of Transformer Neural Network to Improve the Diagnostic Performance of Oximetry for Obstructive Sleep Apnea

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

3.1. Dataset

3.2. Base Model
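The models compared in the architecture table are all built on a Transformer encoder (Vaswani et al. [35]). The encoder's central operation is scaled dot-product self-attention, softmax(QKᵀ/√d_k)V. The sketch below shows a single attention head in plain Python. It leaves out the learned Q/K/V projections, multiple heads, masking, and the feed-forward sublayer, and its inputs are toy values. The number of heads, model width, and layer count used in the paper are not given in this section.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the row maximum before exp."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head attention softmax(Q K^T / sqrt(d_k)) V, without masking.

    Returns (output, weights). Each row of weights sums to 1.
    """
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Toy self-attention over three time steps with d_k = 2 (Q = K = V = x).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = scaled_dot_product_attention(x, x, x)
```

Each output row is a weighted average of the value rows. This lets every time step of an oximetry sequence draw on every other step, which a fixed-size convolution window cannot do.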

3.2.1. Constant Position Embeddings

3.2.2. Sinusoidal Positional Embedding

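$$PE_{(pos,\,2i)} = \sin\left(pos / 10000^{2i/d_{model}}\right)$$

$$PE_{(pos,\,2i+1)} = \cos\left(pos / 10000^{2i/d_{model}}\right)$$

where $pos$ is the position in the sequence, $i$ indexes the embedding dimension, and $d_{model}$ is the embedding size.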
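The sinusoidal embedding of Vaswani et al. [35] places sin on each even dimension 2i and cos on the following odd dimension 2i+1, using the same angle pos/10000^(2i/d_model) for both. The values are fixed and are added element-wise to the input sequence. The minimal plain-Python sketch below evaluates that formula. The function names and the toy sizes (`seq_len=4`, `d_model=6`) are illustrative choices, not the paper's settings.

```python
import math
from typing import List


def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> List[List[float]]:
    """Fixed (non-trainable) positional encoding of Vaswani et al.

    Even dimension 2i gets sin(pos / 10000**(2i/d_model)); the following
    odd dimension 2i+1 gets the cosine of the same angle.
    """
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for even in range(0, d_model, 2):  # `even` plays the role of 2i
            angle = pos / (10000 ** (even / d_model))
            pe[pos][even] = math.sin(angle)
            if even + 1 < d_model:  # guard for an odd d_model
                pe[pos][even + 1] = math.cos(angle)
    return pe


def add_positional_encoding(x: List[List[float]]) -> List[List[float]]:
    """Element-wise sum of an input sequence (seq_len x d_model) and its encoding."""
    pe = sinusoidal_positional_encoding(len(x), len(x[0]))
    return [[a + b for a, b in zip(row, pe_row)] for row, pe_row in zip(x, pe)]


pe = sinusoidal_positional_encoding(seq_len=4, d_model=6)
# Position 0 is [sin 0, cos 0, ...] = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0].
```

Because nothing is learned, this encoding adds no trainable parameters, and it can be evaluated for any sequence length.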

3.2.3. Learned Positional Embedding

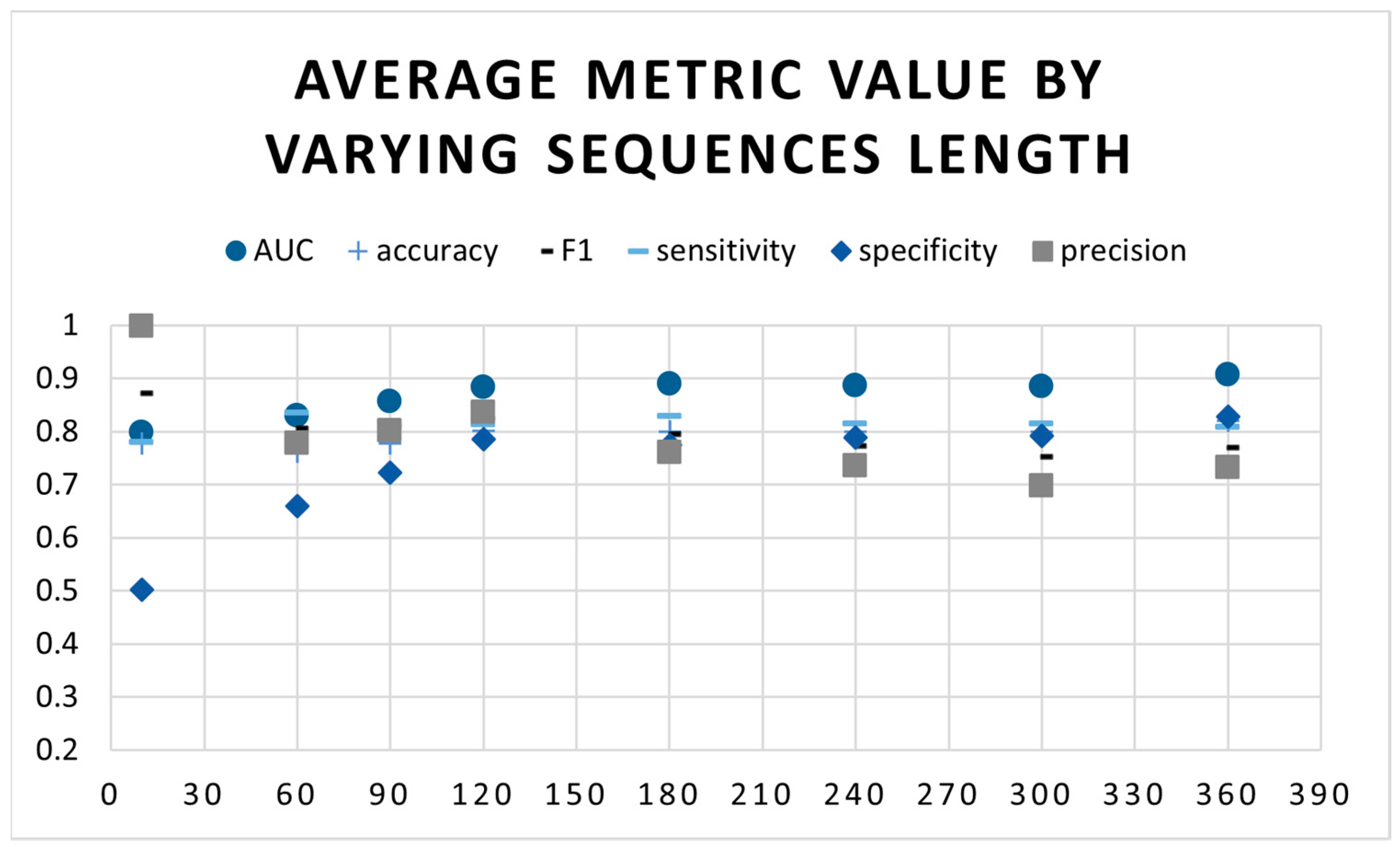
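In the architecture comparison table, the learned positional embedding gives the highest AUC (0.8890). Unlike the sinusoidal variant, it stores one trainable vector per position, and training updates these vectors together with the rest of the network. The plain-Python sketch below shows the forward pass only. The class name, the uniform initialisation, and `d_model=8` are hypothetical choices. `max_len=360` is simply the longest sequence length in the sequence-length table. In TensorFlow [37] this role would typically be filled by an embedding layer indexed by position, but the paper's exact layer is not given in this section.

```python
import random
from typing import List


class LearnedPositionalEmbedding:
    """One trainable d_model-dimensional vector per position (forward pass only).

    In a training framework the table would be a weight matrix updated by
    backpropagation with the rest of the network; here it is only initialised.
    """

    def __init__(self, max_len: int, d_model: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Small uniform initialisation: an illustrative choice, not the paper's.
        self.table = [[rng.uniform(-0.05, 0.05) for _ in range(d_model)]
                      for _ in range(max_len)]

    def num_parameters(self) -> int:
        # max_len x d_model weights, so the count grows linearly with max_len.
        return len(self.table) * len(self.table[0])

    def __call__(self, x: List[List[float]]) -> List[List[float]]:
        """Add the learned vector for position t to input row t."""
        if len(x) > len(self.table):
            raise ValueError("sequence is longer than max_len")
        return [[xi + pi for xi, pi in zip(row, self.table[t])]
                for t, row in enumerate(x)]


emb = LearnedPositionalEmbedding(max_len=360, d_model=8)
print(emb.num_parameters())  # 2880
```

The design trade-off is that the model can fit position patterns specific to the data, at the cost of a fixed maximum length and extra parameters.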

3.3. Determining the Best Sequence Length
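The sequence-length table compares inputs of 10 to 360 steps. The plain-Python sketch below cuts one recording into fixed-length model inputs. It assumes one SpO2 sample and one apnea label per second, so the sequence length equals the window duration in seconds, and it assumes non-overlapping windows. Both are assumptions, not details confirmed by this section. `make_sequences` and the constant 8-hour recording are hypothetical.

```python
from typing import List, Sequence, Tuple


def make_sequences(signal: Sequence[float], labels: Sequence[int],
                   seq_len: int) -> Tuple[List[List[float]], List[List[int]]]:
    """Cut an aligned signal/label pair into non-overlapping windows.

    Assumes one SpO2 sample and one label per second, so seq_len is also the
    window length in seconds. A trailing partial window is discarded.
    """
    if len(signal) != len(labels):
        raise ValueError("signal and labels must have the same length")
    if seq_len <= 0:
        raise ValueError("seq_len must be positive")
    n_windows = len(signal) // seq_len
    xs = [list(signal[w * seq_len:(w + 1) * seq_len]) for w in range(n_windows)]
    ys = [list(labels[w * seq_len:(w + 1) * seq_len]) for w in range(n_windows)]
    return xs, ys


# Candidate lengths from the sequence-length table, applied to a
# hypothetical 8-hour recording sampled at 1 Hz (28,800 samples).
night = [95.0] * (8 * 3600)
flags = [0] * len(night)
for length in (10, 60, 90, 120, 180, 240, 300, 360):
    xs, _ = make_sequences(night, flags, length)
    print(length, len(xs))
```

Longer windows give the attention layers more context per sample but produce fewer training sequences per night. The table also shows that they cost more training time.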

3.4. Experimental Setting

3.5. Evaluation Metrics

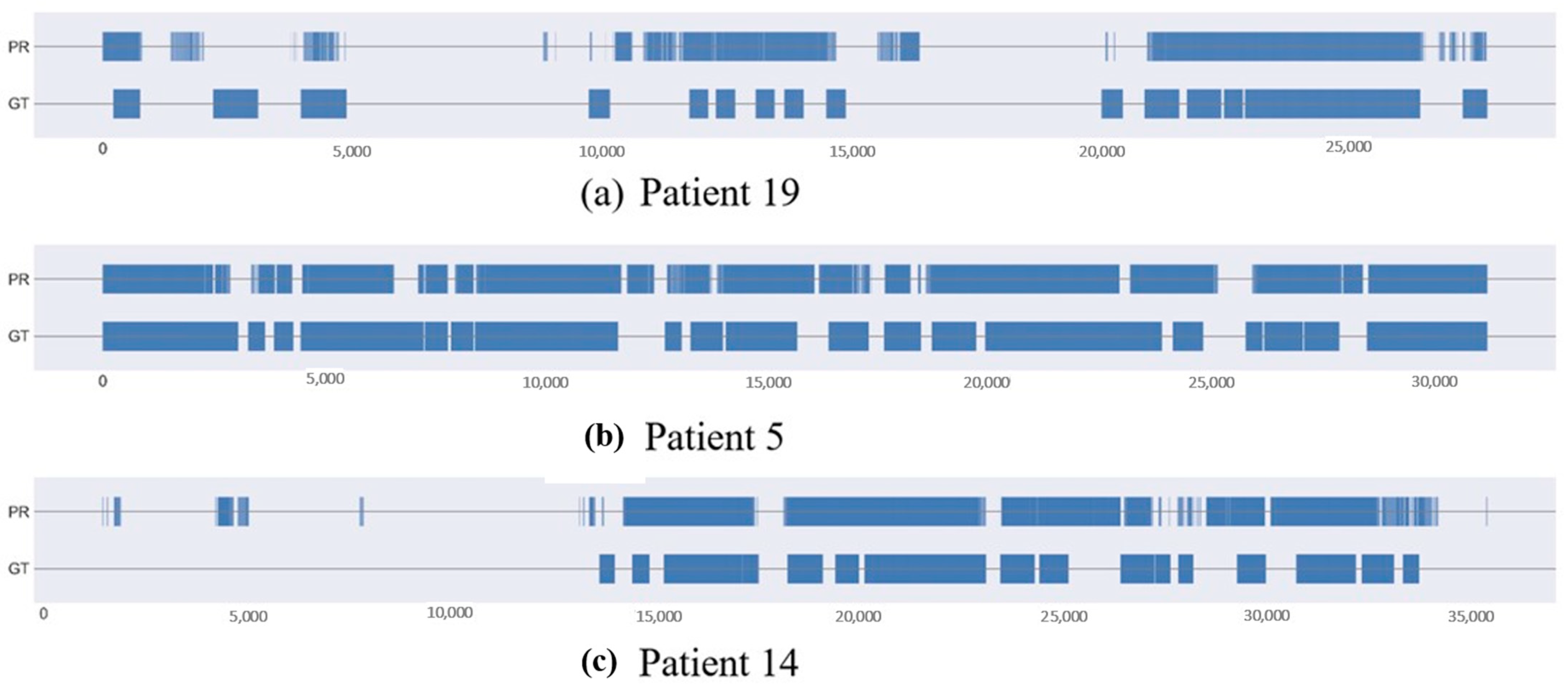
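The result tables report AUC alongside threshold-based metrics. AUC does not depend on a decision threshold: it equals the probability that a randomly chosen positive (apnea) second receives a higher score than a randomly chosen negative one, with ties counted as one half. The from-scratch sketch below uses that rank (Mann-Whitney) formulation, which gives the same value as the area under the empirical ROC curve. This section does not state which implementation the authors used. A library routine such as scikit-learn's `roc_auc_score` computes the same quantity more efficiently.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC via the Mann-Whitney formulation (ties count 1/2).

    The O(P*N) pairwise loop is for illustration, not for long recordings.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        # AUC is undefined when only one class is present.
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

The single-class check matters for per-patient evaluation, because a patient with no scored apnea seconds has no defined AUC.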

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Patient | AHI (events/h) | AHI Class | Sensitivity | Specificity | Precision | Accuracy | F1 | AUC |
|---|---|---|---|---|---|---|---|---|
| 1 | 40 | severe | 0.5324 | 0.9664 | 0.7334 | 0.9021 | 0.6169 | 0.9606 |
| 2 | 10 | mild | 0.7393 | 0.7094 | 0.688 | 0.7233 | 0.7127 | 0.8205 |
| 3 | 63 | severe | 0.45 | 0.9701 | 0.0669 | 0.9676 | 0.1165 | 0.9898 |
| 4 | 10 | mild | 0.8953 | 0.5993 | 0.533 | 0.6994 | 0.6682 | 0.7797 |
| 5 | 35 | severe | 0.4831 | 0.8822 | 0.4315 | 0.8198 | 0.4558 | 0.9186 |
| 6 | 58 | severe | 0.4247 | 0.9177 | 0.2244 | 0.8916 | 0.2937 | 0.9435 |
| 7 | 30 | severe | 0.752 | 0.8443 | 0.283 | 0.8373 | 0.4112 | 0.8632 |
| 8 | 1 | none | 0.948 | 0.2346 | 0.508 | 0.559 | 0.6616 | 0.6254 |
| 9 | 8 | mild | 0.8638 | 0.4292 | 0.2898 | 0.5215 | 0.434 | 0.5525 |
| 10 | 41 | severe | 0.0607 | 0.9214 | 0.1065 | 0.8064 | 0.0774 | 0.8979 |
| 11 | 4 | none | 0.9423 | 0.3071 | 0.4407 | 0.5401 | 0.6006 | 0.5642 |
| 12 | 4 | none | 0.8217 | 0.3056 | 0.7821 | 0.6937 | 0.8014 | 0.7736 |
| 13 | 26 | moderate | 0.9958 | 0.727 | 0.4183 | 0.7713 | 0.5891 | 0.8524 |
| 14 | 9 | mild | 0.9039 | 0.7021 | 0.7594 | 0.805 | 0.8254 | 0.902 |
| 15 | 43 | severe | 0.2435 | 0.8619 | 0.4247 | 0.6794 | 0.3095 | 0.7417 |
| 16 | 37 | severe | 0.9566 | 0.8414 | 0.116 | 0.8439 | 0.2069 | 0.9063 |
| 17 | 28 | moderate | 0.7901 | 0.7332 | 0.5004 | 0.7476 | 0.6127 | 0.8246 |
| 18 | 4 | none | 0.8142 | 0.4265 | 0.7748 | 0.701 | 0.794 | 0.7905 |
| 19 | 10 | mild | 0.8252 | 0.6774 | 0.7759 | 0.7624 | 0.7998 | 0.8737 |
| 20 | 48 | severe | 0.6457 | 0.9572 | 0.468 | 0.94 | 0.5427 | 0.9767 |
| 21 | 28 | moderate | 0.526 | 0.7215 | 0.0176 | 0.7197 | 0.0341 | 0.7934 |
| 22 | 2 | none | 0.9978 | 0.2003 | 0.7483 | 0.762 | 0.8552 | 0.8723 |
| 23 | 0 | none | 0.9756 | 0.129 | 0.9187 | 0.8993 | 0.9463 | 0.9721 |
| 24 | 21 | moderate | 0.8016 | 0.7942 | 0.2564 | 0.7948 | 0.3886 | 0.8736 |
| 25 | 44 | severe | 0.8531 | 0.9059 | 0.0407 | 0.9056 | 0.0777 | 0.9599 |
| 26 | 60 | severe | 0.1511 | 0.9921 | 0.5952 | 0.9323 | 0.241 | 0.9756 |
| 27 | 9 | mild | 0.8476 | 0.7414 | 0.5465 | 0.7699 | 0.6646 | 0.8576 |
| 28 | 13 | mild | 0.9087 | 0.628 | 0.3318 | 0.6754 | 0.4861 | 0.7051 |
| 29 | 4 | none | 0.8835 | 0.409 | 0.6465 | 0.6701 | 0.7466 | 0.764 |
| 30 | 73 | severe | 0.2198 | 0.9775 | 0.1108 | 0.9679 | 0.1473 | 0.9871 |
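The AHI Class column above agrees with the conventional adult severity cut-offs: fewer than 5 events per hour is none, 5 to under 15 is mild, 15 to under 30 is moderate, and 30 or more is severe. The per-patient comparison table uses the matching binary definition OSA = AHI ≥ 5. These thresholds are stated here as the conventional ones; they are not quoted from the paper's methods. The minimal sketch below encodes them and checks them against every Appendix A row. `ahi_class` and `APPENDIX_A` are hypothetical names.

```python
def ahi_class(ahi: float) -> str:
    """Severity label from AHI (events per hour), conventional adult cut-offs."""
    if ahi < 5:
        return "none"
    if ahi < 15:
        return "mild"
    if ahi < 30:
        return "moderate"
    return "severe"


# (AHI, AHI Class) for patients 1-30, copied from the Appendix A table.
APPENDIX_A = [
    (40, "severe"), (10, "mild"), (63, "severe"), (10, "mild"), (35, "severe"),
    (58, "severe"), (30, "severe"), (1, "none"), (8, "mild"), (41, "severe"),
    (4, "none"), (4, "none"), (26, "moderate"), (9, "mild"), (43, "severe"),
    (37, "severe"), (28, "moderate"), (4, "none"), (10, "mild"), (48, "severe"),
    (28, "moderate"), (2, "none"), (0, "none"), (21, "moderate"), (44, "severe"),
    (60, "severe"), (9, "mild"), (13, "mild"), (4, "none"), (73, "severe"),
]

# Patient numbers whose listed class disagrees with the cut-offs.
mismatches = [i + 1 for i, (ahi, cls) in enumerate(APPENDIX_A) if ahi_class(ahi) != cls]
print(mismatches)  # []
```

Patient 7 (AHI = 30) shows that the severe class includes its lower bound.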

References

1. Wickramasinghe, H. Obstructive Sleep Apnea (OSA). Available online: https://emedicine.medscape.com/article/295807-overview (accessed on 23 February 2023).

2. Benjafield, A.V.; Ayas, N.T.; Eastwood, P.R.; Heinzer, R.; Ip, M.S.; Morrell, M.J.; Nunez, C.M.; Patel, S.R.; Penzel, T.; Pépin, J.L.; et al. Estimation of the global prevalence and burden of obstructive sleep apnoea: A literature-based analysis. Lancet Respir. Med. 2019, 7, 687–698.

3. Almeneessier, A.S.; BaHammam, A.S. Sleep medicine in Saudi Arabia. J. Clin. Sleep Med. 2017, 13, 641–645.

4. Gupta, R.; Pandi-Perumal, S.R.; BaHammam, A.S. Clinical Atlas of Polysomnography; CRC Press: Boca Raton, FL, USA, 2018.

5. Almarshad, M.A.; Islam, S.; Al-Ahmadi, S.; BaHammam, A.S. Diagnostic Features and Potential Applications of PPG Signal in Healthcare: A Systematic Review. Healthcare 2022, 10, 547.

6. Berry, R.B.; Brooks, R.; Gamaldo, C.E.; Harding, S.M.; Marcus, C.; Vaughn, B.V. The AASM Manual for the Scoring of Sleep and Associated Events. Am. Acad. Sleep Med. 2013, 53, 1689–1699.

7. Collop, N.A. Scoring variability between polysomnography technologists in different sleep laboratories. Sleep Med. 2002, 3, 43–47.

8. Philips. Sleepware G3 with integrated Somnolyzer Scoring. Available online: https://www.usa.philips.com/healthcare/product/HC1082462/sleepware-g3-sleep-diagnostic-software (accessed on 13 September 2023).

9. BaHammam, A.S.; Gacuan, D.E.; George, S.; Acosta, K.L.; Pandi-Perumal, S.R.; Gupta, R. Polysomnography I: Procedure and Technology. Synop. Sleep Med. 2016, 334, 443–456.

10. Choi, J.H.; Lee, B.; Lee, J.Y.; Kim, H.J. Validating the Watch-PAT for diagnosing obstructive sleep apnea in adolescents. J. Clin. Sleep Med. 2018, 14, 1741–1747.

11. Álvarez, D.; Hornero, R.; Marcos, J.V.; del Campo, F. Feature selection from nocturnal oximetry using genetic algorithms to assist in obstructive sleep apnoea diagnosis. Med. Eng. Phys. 2012, 34, 1049–1057.

12. John, A.; Nundy, K.K.; Cardiff, B.; John, D. SomnNET: An SpO2 Based Deep Learning Network for Sleep Apnea Detection in Smartwatches. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS, Guadalajara, Mexico, 26–30 July 2021; Volume 2021, pp. 1961–1964.

13. Biswal, S.; Sun, H.; Goparaju, B.; Westover, M.B.; Sun, J.; Bianchi, M.T. Expert-level sleep scoring with deep neural networks. J. Am. Med. Inform. Assoc. 2018, 25, 1643–1650.

14. Faust, O.; Hagiwara, Y.; Hong, T.J.; Lih, O.S.; Acharya, U.R. Deep learning for healthcare applications based on physiological signals: A review. In Computer Methods and Programs in Biomedicine; Elsevier Ireland Ltd.: Shannon, Ireland, 2018; Volume 161, pp. 1–13.

15. Vicente-Samper, J.M.; Tamantini, C.; Ávila-Navarro, E.; De La Casa-Lillo, M.; Zollo, L.; Sabater-Navarro, J.M.; Cordella, F. An ML-Based Approach to Reconstruct Heart Rate from PPG in Presence of Motion Artifacts. Biosensors 2023, 13, 718.

16. Malhotra, A.; Younes, M.; Kuna, S.T.; Benca, R.; Kushida, C.A.; Walsh, J.; Hanlon, A.; Staley, B.; Pack, A.L.; Pien, G.W. Performance of an automated polysomnography scoring system versus computer-assisted manual scoring. Sleep 2013, 36, 573–582.

17. Thorey, V.; Hernandez, A.B.; Arnal, P.J.; During, E.H. AI vs Humans for the diagnosis of sleep apnea. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), IEEE, Berlin, Germany, 23–27 July 2019; pp. 1596–1600.

18. Aggarwal, C.C. Neural Networks and Deep Learning; Determination Press: San Francisco, CA, USA, 2018.

19. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Available online: http://www.deeplearningbook.org (accessed on 13 September 2023).

20. Sharma, M.; Kumar, K.; Kumar, P.; Tan, R.-S.; Acharya, U.R. Pulse oximetry SpO2 signal for automated identification of sleep apnea: A review and future trends. Physiol. Meas. 2022, 43.

21. Li, Z.; Li, Y.; Zhao, G.; Zhang, X.; Xu, W.; Han, D. A model for obstructive sleep apnea detection using a multi-layer feed-forward neural network based on electrocardiogram, pulse oxygen saturation, and body mass index. Sleep Breath 2021, 25, 2065–2072.

22. Morillo, D.S.; Gross, N. Probabilistic neural network approach for the detection of SAHS from overnight pulse oximetry. Med. Biol. Eng. Comput. 2013, 51, 305–315.

23. Almazaydeh, L.; Faezipour, M.; Elleithy, K. A Neural Network System for Detection of Obstructive Sleep Apnea Through SpO2 Signal Features. Int. J. Adv. Comput. Sci. Appl. 2012, 3, 7–11.

24. Ding, Y.; Zhang, Z.; Zhao, X.; Hong, D.; Cai, W.; Yu, C.; Yang, N.; Cai, W. Multi-feature fusion: Graph neural network and CNN combining for hyperspectral image classification. Neurocomputing 2022, 501, 246–257.

25. Vaquerizo-Villar, F.; Alvarez, D.; Kheirandish-Gozal, L.; Gutierrez-Tobal, G.C.; Barroso-Garcia, V.; Santamaria-Vazquez, E.; del Campo, F.; Gozal, D.; Hornero, R. A Convolutional Neural Network Architecture to Enhance Oximetry Ability to Diagnose Pediatric Obstructive Sleep Apnea. IEEE J. Biomed. Health Inform. 2021, 25, 2906–2916.

26. Mostafa, S.S.; Mendonça, F.; Morgado-Dias, F.; Ravelo-García, A. SpO2 based Sleep Apnea Detection using Deep Learning. In Proceedings of the INES 2017, 21st International Conference on Intelligent Engineering Systems, Larnaca, Cyprus, 20–23 October 2017; pp. 91–96.

27. Piorecky, M.; Bartoň, M.; Koudelka, V.; Buskova, J.; Koprivova, J.; Brunovsky, M.; Piorecka, V. Apnea detection in polysomnographic recordings using machine learning techniques. Diagnostics 2021, 11, 2302.

28. Bernardini, A.; Brunello, A.; Gigli, G.L.; Montanari, A.; Saccomanno, N. AIOSA: An approach to the automatic identification of obstructive sleep apnea events based on deep learning. Artif. Intell. Med. 2021, 118, 102133.

29. Pathinarupothi, R.K.; Rangan, E.S.; Gopalakrishnan, E.A.; Vinaykumar, R.; Soman, K.P. Single Sensor Techniques for Sleep Apnea Diagnosis using Deep Learning. In Proceedings of the 2017 IEEE International Conference on Healthcare Informatics, Park City, UT, USA, 23–26 August 2017; pp. 524–529.

30. Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, e215–e220.

31. Zhang, G.-Q.; Cui, L.; Mueller, R.; Tao, S.; Kim, M.; Rueschman, M.; Mariani, S.; Mobley, D.; Redline, S. The National Sleep Research Resource: Towards a sleep data commons. J. Am. Med. Inform. Assoc. 2018, 25, 1351–1358.

32. Cen, L.; Yu, Z.L.; Kluge, T.; Ser, W. Automatic System for Obstructive Sleep Apnea Events Detection Using Convolutional Neural Network. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

33. Mostafa, S.S.; Mendonca, F.; Ravelo-Garcia, A.G.; Juliá-Serdá, G.G.; Morgado-Dias, F. Multi-Objective Hyperparameter Optimization of Convolutional Neural Network for Obstructive Sleep Apnea Detection. IEEE Access 2020, 8, 129586–129599.

34. Bernardini, A.; Brunello, A.; Gigli, G.L.; Montanari, A.; Saccomanno, N. OSASUD: A dataset of stroke unit recordings for the detection of Obstructive Sleep Apnea Syndrome. Sci. Data 2022, 9, 177.

35. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5999–6009.

36. NVIDIA. GeForce RTX 3080 Family. Available online: https://www.nvidia.com/en-me/geforce/graphics-cards/30-series/rtx-3080-3080ti/ (accessed on 21 May 2023).

37. Google Brain Team. TensorFlow 2.10. Available online: https://www.tensorflow.org/ (accessed on 21 May 2023).

38. Sklearn.preprocessing. Preprocessing Data. Available online: https://scikit-learn.org/stable/modules/preprocessing.html (accessed on 21 May 2023).

39. Kotsiantis, S.B.; Kanellopoulos, D.; Pintelas, P.E. Data preprocessing for supervised leaning. Int. J. Comput. Sci. 2006, 1, 111–117.

40. Wang, Y.A.; Chen, Y.N. What do position embeddings learn? An empirical study of pre-trained language model positional encoding. In Proceedings of the EMNLP 2020, 2020 Conference on Empirical Methods in Natural Language Processing, Online, 16–20 November 2020; Volume 2, pp. 6840–6849.

41. Wang, G.; Lu, Y.; Cui, L.; Lv, T.; Florencio, D.; Zhang, C. A Simple yet Effective Learnable Positional Encoding Method for Improving Document Transformer Model. In Proceedings of the Findings of the Association for Computational Linguistics: AACL-IJCNLP 2022, Online, 20–23 November 2022; pp. 453–463. Available online: https://aclanthology.org/2022.findings-aacl.42 (accessed on 13 September 2023).

42. Wu, K.; Peng, H.; Chen, M.; Fu, J.; Chao, H. Rethinking and Improving Relative Position Encoding for Vision Transformer. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10013–10021.

43. Taylor, G.W.; Fergus, R.; Zeiler, M.D.; Krishnan, D. Deconvolutional Networks. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 109–122.

44. Tomescu, V.I.; Czibula, G.; Niticâ, S. A study on using deep autoencoders for imbalanced binary classification. Procedia Comput. Sci. 2021, 192, 119–128.

45. Dempster, A.; Petitjean, F.; Webb, G.I. ROCKET: Exceptionally fast and accurate time series classification using random convolutional kernels. Data Min. Knowl. Discov. 2020, 34, 1454–1495.

46. Dumoulin, V.; Visin, F. A guide to convolution arithmetic for deep learning. arXiv 2016, arXiv:1603.07285. Available online: http://arxiv.org/abs/1603.07285 (accessed on 13 September 2023).

47. Zhang, Y. A Better Autoencoder for Image: Convolutional Autoencoder. 2015, pp. 1–7. Available online: http://users.cecs.anu.edu.au/Tom.Gedeon/conf/ABCs2018/paper/ABCs2018_paper_58.Pdf (accessed on 13 September 2023).

48. Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

49. Haviv, A.; Ram, O.; Press, O.; Izsak, P.; Levy, O. Transformer Language Models without Positional Encodings Still Learn Positional Information. Find. Assoc. Comput. Linguist. EMNLP 2022, 2022, 1382–1390.

50. Emilio, S.N.P. A study of ant-based pheromone spaces for generation constructive hyper-heuristics. Swarm Evol. Comput. 2022, 72, 101095.

51. Zhao, H.; Zhang, C. An online-learning-based evolutionary many-objective algorithm. Inf. Sci. 2020, 509, 1–21.

52. Dulebenets, M.A. An Adaptive Polyploid Memetic Algorithm for scheduling trucks at a cross-docking terminal. Inf. Sci. 2021, 565, 390–421.

| Ref | Year | DL Model | Dataset | Window Size (Time) | No. of Subjects | Accuracy % (Best) |
|---|---|---|---|---|---|---|
| Almazaydeh et al. [23] | 2012 | NN * | UCD database [30] | - | 7 | 93.3 |
| Morillo et al. [22] | 2013 | PNN * | Private dataset | 30 s | 115 | 84 |
| Mostafa et al. [26] | 2017 | Deep Belief NN with an autoencoder | UCD database [30] | 1 min | 8 and 25 | 85.26 |
| Pathinarupothi et al. [29] | 2017 | LSTM *-RNN * | UCD database [30] | 1 min | 35 | 95.5 |
| Cen et al. [32] | 2018 | CNN * | UCD database [30] | 1 s | - | 79.61 |
| Mostafa et al. [33] | 2020 | CNN | Private dataset and UCD database [30] | 1, 3 and 5 min | - | 89.40 |
| John et al. [12] | 2021 | 1D CNN | UCD database [30] | 1 s | 25 | 89.75 |
| Vaquerizo-Villar et al. [25] | 2021 | CNN | CHAT dataset [31] and 2 private datasets | 20 min | 3196 | 83.9 |
| Piorecky et al. [27] | 2021 | CNN | Private dataset | 10 s | 175 | 84 |
| Bernardini et al. [28] | 2021 | LSTM | OSASUD [34] | 180 s | 30 | 63.3 |
| Li et al. [21] | 2021 | Artificial neural network (ANN) | Private dataset | - | 148 | 97.8 |

\* NN: neural network; PNN: probabilistic neural network; LSTM: long short-term memory; RNN: recurrent neural network; CNN: convolutional neural network.

| | Ground Truth (GT): Positive | Ground Truth (GT): Negative |
|---|---|---|
| Predicted (PR): Positive | True Positive (TP) | False Positive (FP) |
| Predicted (PR): Negative | False Negative (FN) | True Negative (TN) |
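The threshold-based metrics reported in the result tables follow from the four cells of the confusion matrix above. The minimal sketch below computes them. It assumes that apnea is the positive class and that a zero denominator yields 0.0; both are illustrative conventions, not taken from the paper. The example counts are hypothetical. They show a pattern visible in Appendix A, where patient 3 has accuracy 0.9676 but precision 0.0669: when apnea seconds are rare, true negatives dominate, so accuracy stays high even when precision is low.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ConfusionCounts:
    tp: int  # predicted apnea, ground truth apnea
    fp: int  # predicted apnea, ground truth normal
    fn: int  # predicted normal, ground truth apnea
    tn: int  # predicted normal, ground truth normal


def _ratio(num: float, den: float) -> float:
    # Convention assumed here: report 0.0 when the denominator is zero.
    return num / den if den else 0.0


def threshold_metrics(c: ConfusionCounts) -> Dict[str, float]:
    sensitivity = _ratio(c.tp, c.tp + c.fn)  # recall, true-positive rate
    specificity = _ratio(c.tn, c.tn + c.fp)  # true-negative rate
    precision = _ratio(c.tp, c.tp + c.fp)    # positive predictive value
    accuracy = _ratio(c.tp + c.tn, c.tp + c.fp + c.fn + c.tn)
    f1 = _ratio(2 * precision * sensitivity, precision + sensitivity)
    return {"sensitivity": sensitivity, "specificity": specificity,
            "precision": precision, "accuracy": accuracy, "f1": f1}


# Hypothetical recording with few apnea seconds: accuracy 0.9, precision 0.1.
m = threshold_metrics(ConfusionCounts(tp=10, fp=90, fn=10, tn=890))
```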

| Architecture | AUC | Accuracy | F1 | Sensitivity | Specificity | Precision |
|---|---|---|---|---|---|---|
| Transformer encoder only | 0.8738 | 0.7898 | 0.8092 | 0.7473 | 0.8526 | 0.8822 |
| Transformer encoder with naïve position embeddings | 0.8788 | 0.7969 | 0.8105 | 0.7665 | 0.8366 | 0.8598 |
| Transformer encoder with sinusoidal positional embedding | 0.8799 | 0.7983 | 0.8118 | 0.7678 | 0.8381 | 0.8610 |
| Transformer encoder with learned positional embedding | 0.8890 | 0.7995 | 0.7931 | 0.8285 | 0.7745 | 0.7605 |
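F1 is the harmonic mean of precision and sensitivity. As a quick consistency check on the architecture table above, recomputing F1 from the reported sensitivity and precision reproduces every F1 entry to within 1×10⁻⁴. The small remaining differences are expected because the inputs are already rounded to four decimals. The function and dictionary names below are illustrative.

```python
def f1_from_rates(precision: float, sensitivity: float) -> float:
    """F1 = harmonic mean of precision and sensitivity (recall)."""
    return 2 * precision * sensitivity / (precision + sensitivity)


# (sensitivity, precision, reported F1), copied from the architecture table.
REPORTED = {
    "encoder only": (0.7473, 0.8822, 0.8092),
    "naive position embeddings": (0.7665, 0.8598, 0.8105),
    "sinusoidal positional embedding": (0.7678, 0.8610, 0.8118),
    "learned positional embedding": (0.8285, 0.7605, 0.7931),
}

for name, (sens, prec, reported) in REPORTED.items():
    # Inputs are rounded to 4 decimals, so agreement is only expected to ~1e-4.
    print(f"{name}: reported {reported:.4f}, recomputed {f1_from_rates(prec, sens):.4f}")
```

The harmonic mean also explains why the learned-embedding row has the lowest F1 despite the highest AUC: its precision (0.7605) is the lowest in the table, and the harmonic mean is pulled toward the smaller of its two inputs.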

| Sequence Length | Duration | Trainable Parameters |
|---|---|---|
| 360 | 16 h 15 min | 139,884 |
| 300 | 6 h 33 min | 132,204 |
| 240 | 5 h 17 min | 124,524 |
| 180 | 4 h 56 min | 116,844 |
| 120 | 4 h 3 min | 109,164 |
| 90 | 2 h 45 min | 105,580 |
| 60 | 3 h 55 min | 101,484 |
| 10 | 1 h 12 min | 95,340 |

| Model | Base Architecture | Dataset | AUC | Acc * | F1 | Sens * | Spec * | Prec * |
|---|---|---|---|---|---|---|---|---|
| **Per second** | | | | | | | | |
| Mostafa et al. [33] | CNN | Private | - | 89.40 | - | 74.40 | 93.90 | - |
| Mostafa et al. [33] | CNN | UCD | - | 66.79 | - | 85.37 | 60.94 | - |
| Morillo et al. [22] | PNN | Private | 0.889 | 85.22 | - | 92.4 | 95.9 | - |
| Bernardini et al. [28] | LSTM | OSASUD | 0.704 | 0.676 | 0.399 | 0.656 | 0.680 | - |
| Proposed | Transformer | OSASUD | 0.908 | 0.821 | 0.769 | 0.808 | 0.829 | 0.733 |
| **Per patient (OSA = AHI ≥ 5)** | | | | | | | | |
| Bernardini et al. [28] | LSTM | OSASUD | - | 0.633 | 0.776 | 0.826 | 0.0 | - |
| Proposed (AVG *) | Transformer | OSASUD | 0.868 | 0.804 | 0.422 | 0.647 | 0.801 | 0.385 |
| Proposed (MAX *) | Transformer | OSASUD | 0.990 | 0.968 | 0.826 | 0.996 | 0.992 | 0.776 |
| Proposed (MIN *) | Transformer | OSASUD | 0.553 | 0.522 | 0.034 | 0.061 | 0.235 | 0.0176 |
| Proposed (σ *) | Transformer | OSASUD | 0.119 | 0.121 | 0.254 | 0.295 | 0.162 | 0.249 |

\* Acc: accuracy; Sens: sensitivity; Spec: specificity; Prec: precision; AVG: average; MAX: maximum; MIN: minimum; σ: standard deviation.
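The per-patient rows above summarize one metric across patients as AVG, MAX, MIN, and σ. The minimal sketch below computes these statistics for the AUC column of Appendix A. Two choices in it are assumptions. First, it uses an unweighted mean over patients. Second, it uses the population standard deviation, because the source does not say whether σ uses n or n − 1. The MAX and MIN values it returns (0.9898 and 0.5525) match the reported 0.990 and 0.553. However, the unweighted mean of that column is about 0.844, not the reported 0.868, so the paper's AVG may be defined differently from this sketch.

```python
import statistics
from typing import Dict, Sequence


def summarize(values: Sequence[float]) -> Dict[str, float]:
    """AVG / MAX / MIN / sigma summary of a per-patient metric."""
    return {
        "AVG": statistics.mean(values),  # unweighted mean over patients (assumed)
        "MAX": max(values),
        "MIN": min(values),
        # Population SD; whether the paper uses n or n - 1 is not stated.
        "SIGMA": statistics.pstdev(values),
    }


# Per-patient AUC for patients 1-30, copied from the Appendix A table.
AUC = [0.9606, 0.8205, 0.9898, 0.7797, 0.9186, 0.9435, 0.8632, 0.6254, 0.5525, 0.8979,
       0.5642, 0.7736, 0.8524, 0.902, 0.7417, 0.9063, 0.8246, 0.7905, 0.8737, 0.9767,
       0.7934, 0.8723, 0.9721, 0.8736, 0.9599, 0.9756, 0.8576, 0.7051, 0.764, 0.9871]

summary = summarize(AUC)
print(summary)
```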

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Almarshad, M.A.; Al-Ahmadi, S.; Islam, M.S.; BaHammam, A.S.; Soudani, A. Adoption of Transformer Neural Network to Improve the Diagnostic Performance of Oximetry for Obstructive Sleep Apnea. Sensors 2023, 23, 7924. https://doi.org/10.3390/s23187924
