The Accuracy and Absolute Reliability of a Knee Surgery Assistance System Based on ArUco-Type Sensors

Abstract

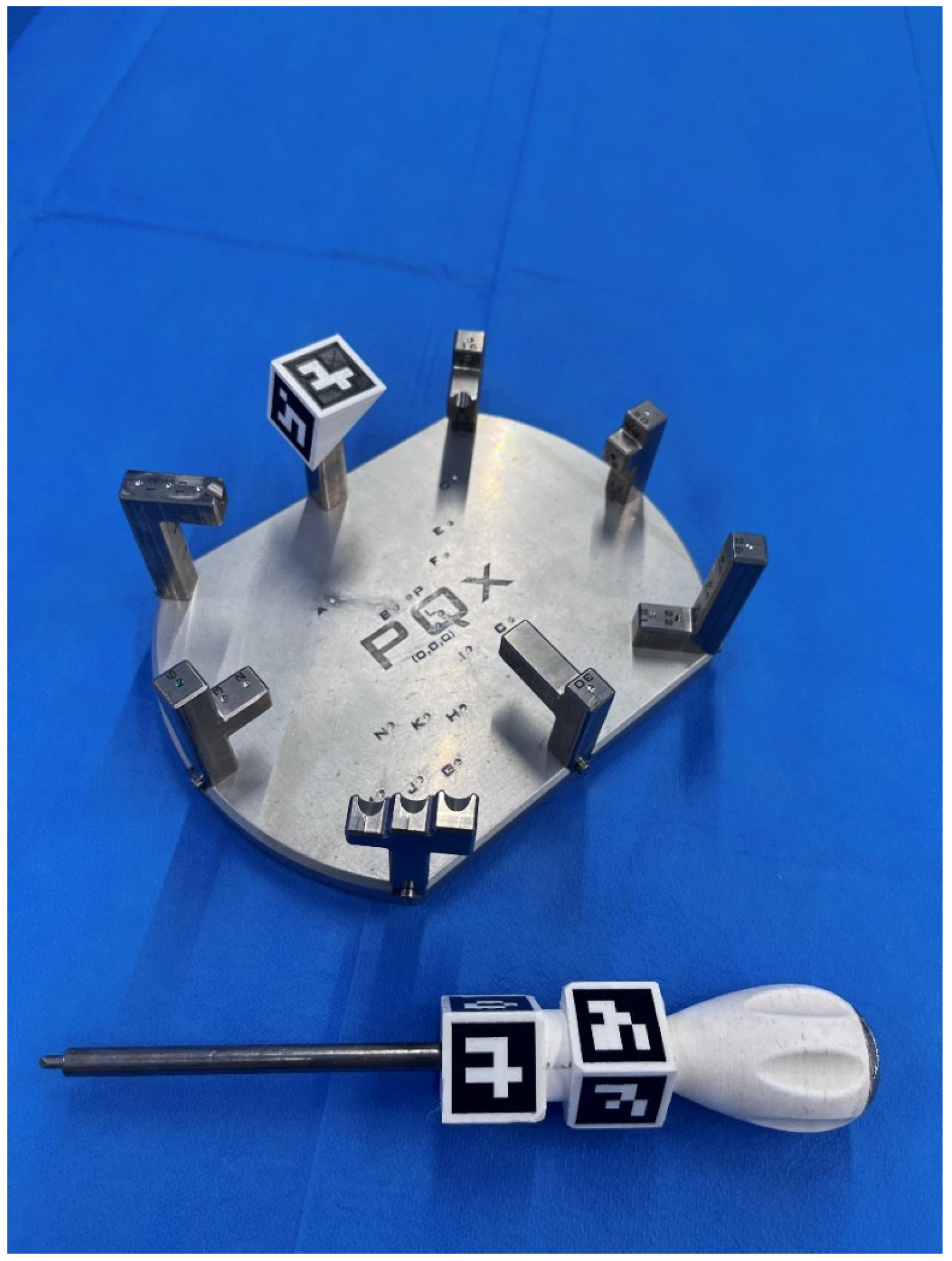

1. Introduction

2. Materials and Methods

2.1. In Vitro Experimental Model

2.2. ArUco Fiducial Markers

2.3. Software

2.4. Optical Sensing Elements

2.5. Absolute Reliability and Observers

2.6. Statistical Analysis

3. Results

3.1. Accuracy of Distance Measurement

3.2. Accuracy of Angle Measurement

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Statistic | Distance Measurement | Angle Measurement |
|---|---|---|
| Number of acquisitions | n = 1890 | n = 756 |
| Mean error | 1.203 mm | 0.778° |
| Min | 0.00 mm | 0.00° |
| Max | 6.70 mm | 4.43° |
| Standard deviation | 1.031 mm | 0.719° |
| Uncertainty | 2.062 mm | 1.438° |
| Standard Error of the Sample | 0.024 mm | 0.026° |
| Root Mean Square Error | 1.585 mm | 1.495° |
| Mean Bias Error | 0.051 mm | 0.466° |
| Mean Absolute Error | 1.203 mm | 0.815° |
| Mean Squared Error | 2.51 mm² | 2.22 °² |
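The rows of the table above can be reproduced from paired measured and reference values. The following is a minimal sketch, not the authors' analysis code. It assumes definitions that are consistent with the reported figures but are not stated in this excerpt: the mean error and standard deviation are taken over absolute errors, the uncertainty is twice the standard deviation (2 × 1.031 mm = 2.062 mm), and the standard error is the standard deviation divided by √n (1.031 / √1890 ≈ 0.024 mm). The function name `error_summary` and its arguments are hypothetical.

```python
import math


def error_summary(measured, reference):
    """Summarise measurement error for paired measured/reference values.

    Assumed definitions (not confirmed by the source): mean error and SD
    are computed on absolute errors; uncertainty = 2 * SD; SEM = SD / sqrt(n).
    """
    signed = [m - r for m, r in zip(measured, reference)]
    absolute = [abs(e) for e in signed]
    n = len(signed)

    mean_abs = sum(absolute) / n
    # Sample standard deviation (n - 1 denominator) of the absolute errors.
    sd = math.sqrt(sum((a - mean_abs) ** 2 for a in absolute) / (n - 1))
    mse = sum(e * e for e in signed) / n

    return {
        "n": n,
        "mean_error": mean_abs,
        "min": min(absolute),
        "max": max(absolute),
        "sd": sd,
        "uncertainty": 2 * sd,
        "sem": sd / math.sqrt(n),
        "rmse": math.sqrt(mse),
        "mbe": sum(signed) / n,  # signed mean: systematic over/under-reading
        "mae": mean_abs,
        "mse": mse,  # squared units (mm^2 or degrees^2)
    }


if __name__ == "__main__":
    # Illustrative values only, not data from the study.
    summary = error_summary([10.0, 12.0, 9.0, 11.0], [10.0, 10.0, 10.0, 10.0])
    for name, value in summary.items():
        print(f"{name}: {value:.3f}")
```

The mean bias error keeps the sign of each error, so it reveals systematic offset, whereas the mean absolute error and RMSE describe the magnitude of the error regardless of direction.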

| Error range | Distance Measurement | Angle Measurement |
|---|---|---|
| <1 mm or ° | 52.54% (993/1890) (89 measures < 0.1 mm) | 70.24% (531/756) (71 measures < 0.1°) |
| Between 1 and 2 mm or ° | 30.32% (573/1890) | 25.26% (191/756) |
| >2 mm or ° | 17.14% (324/1890) | 4.50% (34/756) |
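The banding in the table above amounts to counting absolute errors against two thresholds. The sketch below shows one way to do that. The function name `error_bands` is hypothetical, and the treatment of the boundaries (values of exactly 1 or 2 fall in the middle band) is an assumption, since this excerpt does not say how ties were handled.

```python
def error_bands(abs_errors, low=1.0, high=2.0):
    """Count absolute errors below `low`, within [low, high], and above `high`.

    Returns a dict mapping band label to (count, percentage of total).
    Boundary handling is an assumption: exact `low`/`high` values are
    assigned to the middle band.
    """
    n = len(abs_errors)
    below = sum(1 for e in abs_errors if e < low)
    above = sum(1 for e in abs_errors if e > high)
    middle = n - below - above

    return {
        f"<{low:g}": (below, 100.0 * below / n),
        f"{low:g} to {high:g}": (middle, 100.0 * middle / n),
        f">{high:g}": (above, 100.0 * above / n),
    }


if __name__ == "__main__":
    # Illustrative values only, not data from the study.
    bands = error_bands([0.2, 0.9, 1.0, 1.5, 2.0, 2.4, 0.05, 3.1])
    for label, (count, pct) in bands.items():
        print(f"{label}: {count} ({pct:.2f}%)")
```

The same arithmetic checks the reported proportions directly from the counts, for example 993/1890 for distance errors under 1 mm.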

| Observer | Intraclass Correlation | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|
| Observer 1 | 0.999 | 0.998 | 0.999 |
| Observer 2 | 0.995 | 0.992 | 0.997 |
| Observer 3 | 0.999 | 0.998 | 0.999 |
| Observer 4 | 0.999 | 0.999 | 1.000 |
| Observer 5 | 0.995 | 0.992 | 0.997 |
| Observer 6 | 0.999 | 0.998 | 0.999 |
| Observer 7 | 0.999 | 0.998 | 0.999 |
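For readers unfamiliar with the intraclass correlation coefficient, the sketch below computes one common form, ICC(2,1) in the Shrout and Fleiss notation (two-way random effects, absolute agreement, single measurement). This excerpt does not state which ICC form or software the authors used, nor how the per-observer values were paired, so both the choice of ICC(2,1) and the layout of the input (one row per target, one column per measurement series) are assumptions. The function name `icc_2_1` is hypothetical, and confidence intervals are not computed.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single measure.

    `ratings` is a list of rows, one per measured target, each holding k
    values (for example a reference value and an observer's reading).
    Assumption: this form may differ from the one used in the study.
    """
    n = len(ratings)
    k = len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_err = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))

    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )


if __name__ == "__main__":
    # Illustrative values only: reference length vs. one observer's reading.
    pairs = [[10.0, 10.2], [20.0, 19.9], [30.0, 30.1], [40.0, 40.3]]
    print(f"ICC(2,1) = {icc_2_1(pairs):.4f}")
```

Because ICC(2,1) measures absolute agreement, a constant offset between the two columns lowers the coefficient even when the readings are perfectly correlated.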

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

León-Muñoz, V.J.; Santonja-Medina, F.; Lajara-Marco, F.; Lisón-Almagro, A.J.; Jiménez-Olivares, J.; Marín-Martínez, C.; Amor-Jiménez, S.; Galián-Muñoz, E.; López-López, M.; Moya-Angeler, J. The Accuracy and Absolute Reliability of a Knee Surgery Assistance System Based on ArUco-Type Sensors. Sensors 2023, 23, 8091. https://doi.org/10.3390/s23198091
