A Novel Bird Sound Recognition Method Based on Multifeature Fusion and a Transformer Encoder

Abstract

1. Introduction

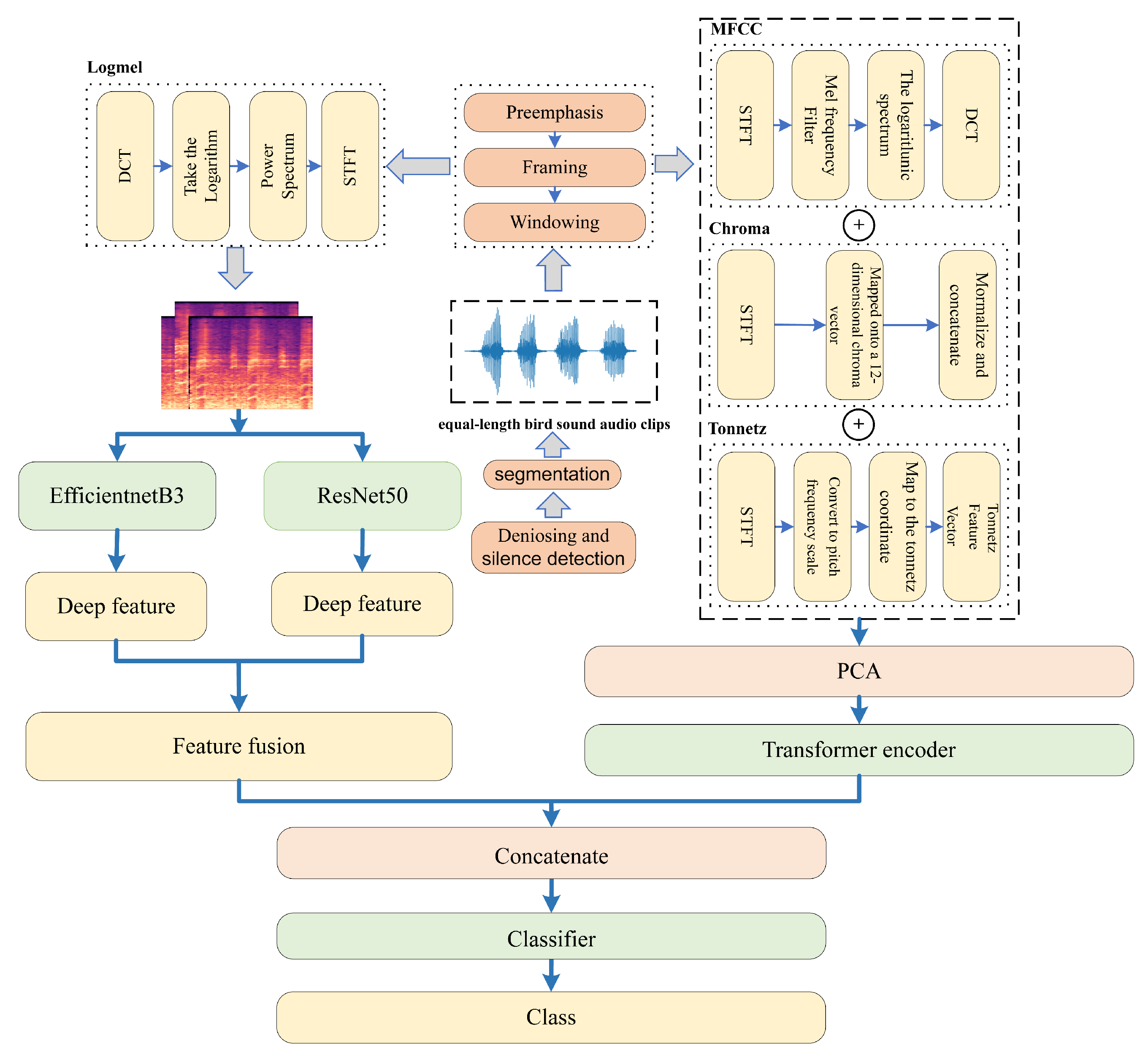

2. Materials and Methods

2.1. Dataset

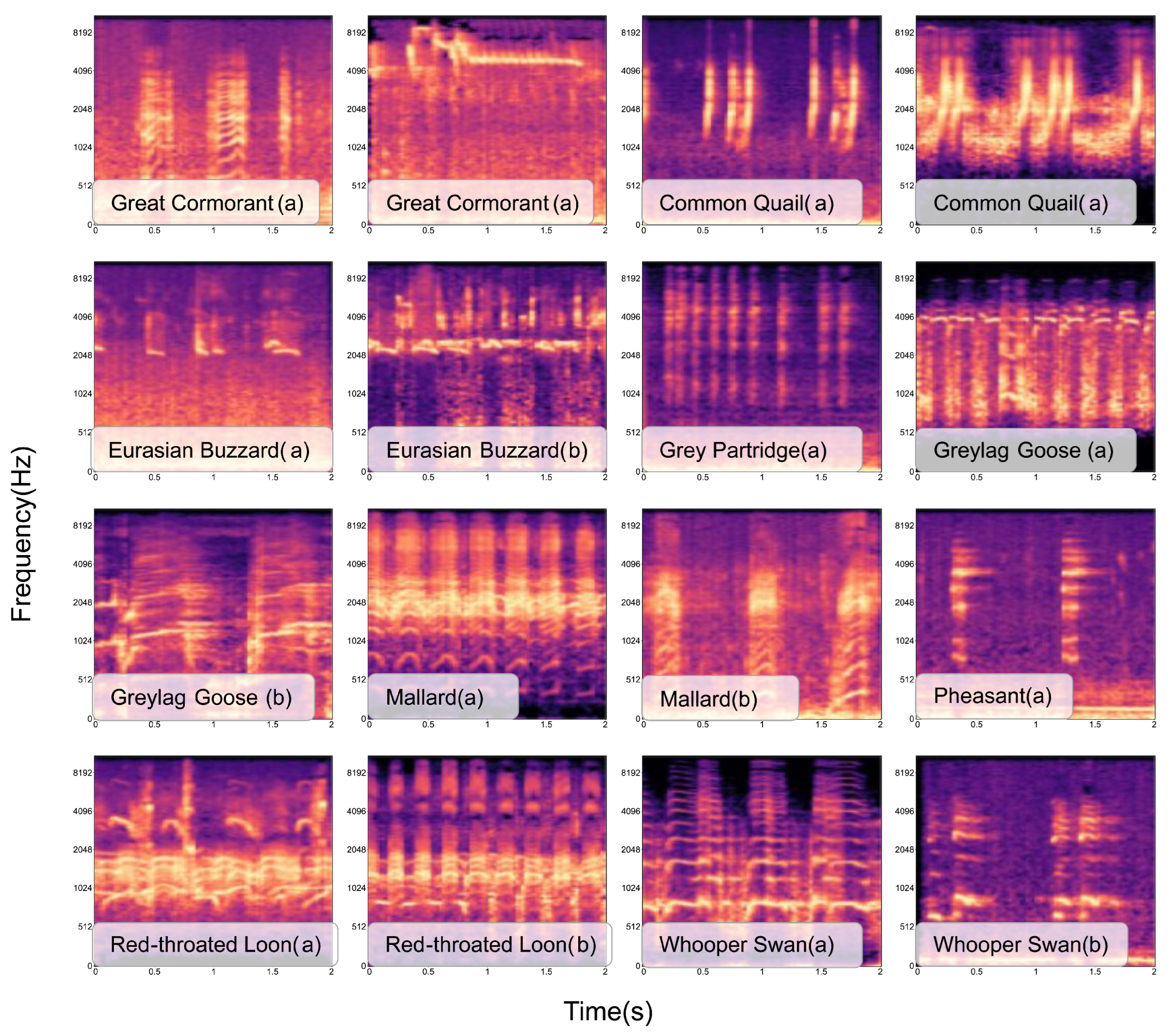

2.1.1. Birdsdata

2.1.2. Cornell Bird Challenge 2020 (CBC) Dataset

2.1.3. Partitioning of the Dataset

2.2. Data Preprocessing

2.2.1. Denoising

2.2.2. Silence Detection and Segmentation

2.3. Construction of the Input Features

2.3.1. Log-Mel Spectrograms

2.3.2. MFCC, Chroma, and Tonnetz Features

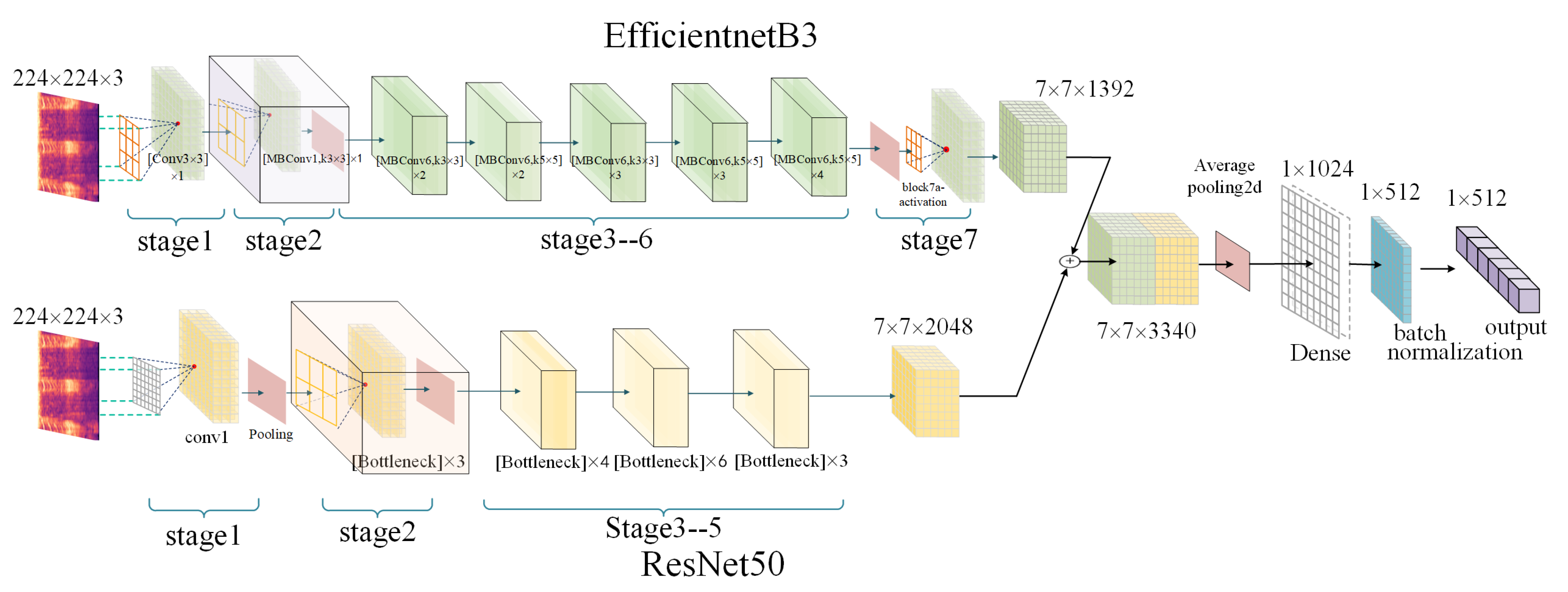

2.4. Deep Feature Extraction and Feature Fusion

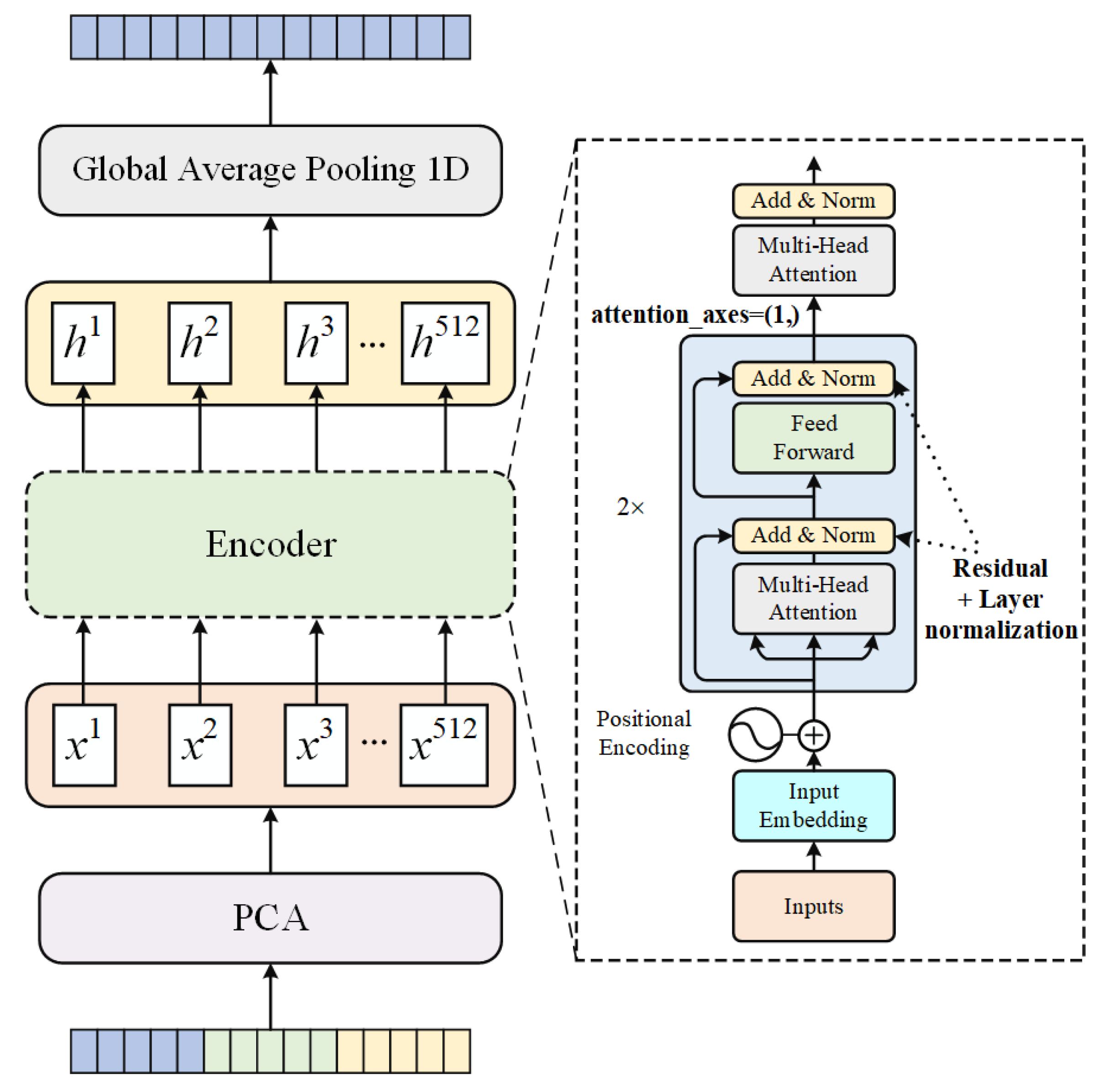

2.5. The Improved Transformer Encoder

3. Experiments and Results

3.1. Settings

3.2. Model Evaluation

3.3. Results

3.3.1. Model Performance

3.3.2. Comparisons

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Peterson, A.T.; Navarro-Sigüenza, A.G. Bird conservation and biodiversity research in Mexico: Status and priorities. J. Field Ornithol. 2016, 87, 121–132. [Google Scholar] [CrossRef]

- Gregory, R. Birds as Biodiversity Indicators for Europe. Significance 2006, 3, 106–110. [Google Scholar] [CrossRef]

- Xia, C.; Huang, R.; Wei, C.; Nie, P.; Zhang, Y. Individual identification on the basis of the songs of the Asian Stubtail (Urosphena squameiceps). Chin. Birds 2011, 2, 132–139. [Google Scholar] [CrossRef]

- Grava, T.; Mathevon, N.; Place, E.; Balluet, P. Individual acoustic monitoring of the European Eagle Owl Bubo bubo. Int. J. Avian Sci. 2008, 150, 279–287. [Google Scholar] [CrossRef]

- Morrison, C.A.; Auniņš, A.; Benkő, Z.; Brotons, L.; Chodkiewicz, T.; Chylarecki, P.; Escandell, V.; Eskildsen, D.; Gamero, A.; Herrando, S.; et al. Bird population declines and species turnover are changing the acoustic properties of spring soundscapes. Nat. Commun. 2021, 12, 6217. [Google Scholar] [CrossRef] [PubMed]

- Sainburg, T.; Thielk, M.; Gentner, T.Q. Finding, visualizing, and quantifying latent structure across diverse animal vocal repertoires. PLoS Comput. Biol. 2020, 16, e1008228. [Google Scholar] [CrossRef] [PubMed]

- Zhang, X.; Chen, A.; Zhou, G.; Zhang, Z.; Huang, X.; Qiang, X. Spectrogram-frame linear network and continuous frame sequence for bird sound classification. Ecol. Inform. 2019, 54, 101009. [Google Scholar] [CrossRef]

- Chen, Z.X.; Maher, R.C. Semi-automatic classification of bird vocalizations using spectral peak tracks. J. Acoust. Soc. Am. 2006, 120, 2974–2984. [Google Scholar] [CrossRef]

- Tan, L.N.; Alwan, A.; Kossan, G.; Cody, M.L.; Taylor, C.E. Dynamic time warping and sparse representation classification for birdsong phrase classification using limited training data. J. Acoust. Soc. Am. 2015, 137, 1069–1080. [Google Scholar] [CrossRef]

- Kalan, A.K.; Mundry, R.; Wagner, O.J.J.; Heinicke, S.; Boesch, C.; Kühl, H.S. Towards the automated detection and occupancy estimation of primates using passive acoustic monitoring. Ecol. Indic. 2015, 54, 217–226. [Google Scholar] [CrossRef]

- Lee, C.H.; Hsu, S.B.; Shih, J.L.; Chou, C.H. Continuous Birdsong Recognition Using Gaussian Mixture Modeling of Image Shape Features. IEEE Trans. Multimed. 2013, 15, 454–464. [Google Scholar] [CrossRef]

- Zhao, Z.; Zhang, S.; Xu, Z.; Bellisario, K.; Dai, N.; Omrani, H.; Pijanowski, B.C. Automated bird acoustic event detection and robust species classification. Ecol. Inform. 2017, 39, 99–108. [Google Scholar] [CrossRef]

- Leng, Y.R.; Tran, H.D. Multi-label bird classification using an ensemble classifier with simple features. In Proceedings of the Signal and Information Processing Association Annual Summit and Conference (APSIPA), 2014 Asia-Pacific, Chiang Mai, Thailand, 9–12 December 2014; pp. 1–5. [Google Scholar] [CrossRef]

- Stowell, D.; Plumbley, M.D. Automatic large-scale classification of bird sounds is strongly improved by unsupervised feature learning. PeerJ 2014, 2, e488. [Google Scholar] [CrossRef] [PubMed]

- Shaheen, F.; Verma, B.; Asafuddoula, M. Impact of Automatic Feature Extraction in Deep Learning Architecture. In Proceedings of the 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, Australia, 30 November–2 December 2016; pp. 1–8. [Google Scholar] [CrossRef]

- Zhang, H.; McLoughlin, I.; Song, Y. Robust sound event recognition using convolutional neural networks. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015; pp. 559–563. [Google Scholar]

- Boulmaiz, A.; Messadeg, D.; Doghmane, N.; Taleb-Ahmed, A. Robust acoustic bird recognition for habitat monitoring with wireless sensor networks. Int. J. Speech Technol. 2016, 19, 631–645. [Google Scholar] [CrossRef]

- Stahl, V.; Fischer, A.; Bippus, R. Quantile based noise estimation for spectral subtraction and Wiener filtering. In Proceedings of the 2000 IEEE International Conference on Acoustics, Speech, and Signal Processing, Istanbul, Turkey, 5–9 June 2000; Cat. No. 00CH37100. Volume 3, pp. 1875–1878. [Google Scholar]

- Bardeli, R.; Wolff, D.; Kurth, F.; Koch, M.; Tauchert, K.H.; Frommolt, K.H. Detecting bird sounds in a complex acoustic environment and application to bioacoustic monitoring. Pattern Recognit. Lett. 2010, 31, 1524–1534. [Google Scholar] [CrossRef]

- Xie, J.; Hu, K.; Zhu, M.; Yu, J.; Zhu, Q. Investigation of different CNN-based models for improved bird sound classification. IEEE Access 2019, 7, 175353–175361. [Google Scholar] [CrossRef]

- Koh, C.Y.; Chang, J.Y.; Tai, C.L.; Huang, D.Y.; Hsieh, H.H.; Liu, Y.W. Bird Sound Classification Using Convolutional Neural Networks. In Proceedings of the Clef (Working Notes), Lugano, Switzerland, 9–12 September 2019. [Google Scholar]

- Himawan, I.; Towsey, M. 3D convolution recurrent neural networks for bird sound detection. In Proceedings of the 3rd Workshop on Detection and Classification of Acoustic Scenes and Events, Surrey, UK, 19–20 November 2018; pp. 1–4. [Google Scholar]

- Xie, J.; Zhu, M. Handcrafted features and late fusion with deep learning for bird sound classification. Ecol. Inform. 2019, 52, 74–81. [Google Scholar] [CrossRef]

- Sankupellay, M.; Konovalov, D. Bird call recognition using deep convolutional neural network, ResNet-50. In Proceedings of the Acoustics, Adelaide, Australia, 7–9 November 2018; Volume 7, pp. 1–8. [Google Scholar]

- Puget, J.F. STFT Transformers for Bird Song Recognition. In Proceedings of the CLEF (Working Notes), Bucharest, Romania, 21–24 September 2021; pp. 1609–1616. [Google Scholar]

- Tang, Q.; Xu, L.; Zheng, B.; He, C. Transound: Hyper-head attention transformer for birds sound recognition. Ecol. Inform. 2023, 75, 102001. [Google Scholar] [CrossRef]

- Gunawan, K.W.; Hidayat, A.A.; Cenggoro, T.W.; Pardamean, B. Repurposing transfer learning strategy of computer vision for owl sound classification. Procedia Comput. Sci. 2023, 216, 424–430. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30. [Google Scholar]

- Su, Y.; Zhang, K.; Wang, J.; Madani, K. Environment sound classification using a two-stream CNN based on decision-level fusion. Sensors 2019, 19, 1733. [Google Scholar] [CrossRef]

- Xiao, H.; Liu, D.; Chen, K.; Zhu, M. AMResNet: An automatic recognition model of bird sounds in real environment. Appl. Acoust. 2022, 201, 109121. [Google Scholar] [CrossRef]

- Hidayat, A.A.; Cenggoro, T.W.; Pardamean, B. Convolutional Neural Networks for Scops Owl Sound Classification. Procedia Comput. Sci. 2021, 179, 81–87. [Google Scholar] [CrossRef]

- Neal, L.; Briggs, F.; Raich, R.; Fern, X.Z. Time-frequency segmentation of bird song in noisy acoustic environments. In Proceedings of the 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 22–27 May 2011; pp. 2012–2015. [Google Scholar] [CrossRef]

- Xie, J.; Zhao, S.; Li, X.; Ni, D.; Zhang, J. KD-CLDNN: Lightweight automatic recognition model based on bird vocalization. Appl. Acoust. 2022, 188, 108550. [Google Scholar] [CrossRef]

- Adavanne, S.; Drossos, K.; Çakir, E.; Virtanen, T. Stacked convolutional and recurrent neural networks for bird audio detection. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO), Kos Island, Greece, 28 August–2 September 2017; pp. 1729–1733. [Google Scholar] [CrossRef]

- Selin, A.; Turunen, J.; Tanttu, J.T. Wavelets in recognition of bird sounds. EURASIP J. Adv. Signal Process. 2006, 2007, 051806. [Google Scholar] [CrossRef]

- Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic routing between capsules. In Proceedings of the Advances in neural information processing systems 2017, Long Beach, CA, USA, 4–9 December 2017; pp. 3856–3866. [Google Scholar]

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar] [CrossRef]

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. Lightgbm: A highly efficient gradient boosting decision tree. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30. [Google Scholar]

- Sprengel, E.; Jaggi, M.; Kilcher, Y.; Hofmann, T. Audio based bird species identification using deep learning techniques. In Proceedings of the CEUR Workshop Proceedings, Évora, Portugal, 5–8 September 2016; pp. 547–559. [Google Scholar]

- Gupta, G.; Kshirsagar, M.; Zhong, M.; Gholami, S.; Ferres, J.L. Comparing recurrent convolutional neural networks for large scale bird species classification. Sci. Rep. 2021, 11, 17085. [Google Scholar] [CrossRef] [PubMed]

- Kiapuchinski, D.M.; Lima, C.; Kaestner, C. Spectral Noise Gate Technique Applied to Birdsong Preprocessing on Embedded Unit. In Proceedings of the IEEE International Symposium on Multimedia, Irvine, CA, USA, 10–12 December 2012. [Google Scholar]

- Oppenheim, A.V. Discrete-Time Signal Processing; Pearson Education India: 1999. Available online: https://ds.amu.edu.et/xmlui/bitstream/handle/123456789/5524/1001326.pdf?sequence=1&isAllowed=y (accessed on 17 August 2023).

- Kurzekar, P.K.; Deshmukh, R.R.; Waghmare, V.B.; Shrishrimal, P.P. A comparative study of feature extraction techniques for speech recognition system. Int. J. Innov. Res. Sci. Eng. Technol. 2014, 3, 18006–18016. [Google Scholar] [CrossRef]

- Seo, S.; Kim, C.; Kim, J.H. Convolutional Neural Networks Using Log Mel-Spectrogram Separation for Audio Event Classification with Unknown Devices. J. Web Eng. 2022, 97–522. [Google Scholar] [CrossRef]

- Leung, H.C.; Chigier, B.; Glass, J.R. A comparative study of signal representations and classification techniques for speech recognition. In Proceedings of the IEEE International Conference on Acoustics, Minneapolis, MN, USA, 27–30 April 1993; pp. 680–683. [Google Scholar]

- Ramirez, A.D.P.; de la Rosa Vargas, J.I.; Valdez, R.R.; Becerra, A. A comparative between mel frequency cepstral coefficients (MFCC) and inverse mel frequency cepstral coefficients (IMFCC) features for an automatic bird species recognition system. In Proceedings of the 2018 IEEE Latin American Conference on Computational Intelligence (LA-CCI), Guadalajara, Mexico, 7–9 November 2018; pp. 1–4. [Google Scholar]

- Ahmed, N.; Natarajan, T.; Rao, K.R. Discrete cosine transform. IEEE Trans. Comput. 1974, 100, 90–93. [Google Scholar] [CrossRef]

- Tzanetakis, G.; Cook, P. Musical genre classification of audio signals. IEEE Trans. Speech Audio Process. 2002, 10, 293–302. [Google Scholar] [CrossRef]

- Zhang, X.; Li, Y. Adaptive energy detection for bird sound detection in complex environments. Neurocomputing 2015, 155, 108–116. [Google Scholar] [CrossRef]

- McFee, B.; Raffel, C.; Liang, D.; Ellis, D.P.; McVicar, M.; Battenberg, E.; Nieto, O. librosa: Audio and music signal analysis in Python. In Proceedings of the 14th Python in Science Conference, Austin, TX, USA, 6–12 July 2015; Volume 8, pp. 18–25. [Google Scholar]

- Kwan, C.; Mei, G.; Zhao, X.; Ren, Z.; Xu, R.; Stanford, V.; Rochet, C.; Aube, J.; Ho, K. Bird classification algorithms: Theory and experimental results. In Proceedings of the 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing, Montreal, QC, Canada, 17–21 May 2004; Volume 5, p. V-289. [Google Scholar]

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 6–11 July 2015; pp. 448–456. [Google Scholar]

- Prazeres, M.; Oberman, A.M. Stochastic gradient descent with polyak’s learning rate. J. Sci. Comput. 2021, 89, 1–16. [Google Scholar] [CrossRef]

- Huang, G.; Liu, Z.; Maaten, L.V.D.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Kahl, S.; Wood, C.M.; Eibl, M.; Klinck, H. BirdNET: A deep learning solution for avian diversity monitoring. Ecol. Inform. 2021, 61, 101236. [Google Scholar] [CrossRef]

- Andono, P.N.; Shidik, G.F.; Prabowo, D.P.; Yanuarsari, D.H.; Sari, Y.; Pramunendar, R.A. Feature Selection on Gammatone Cepstral Coefficients for Bird Voice Classification Using Particle Swarm Optimization. Int. J. Intell. Eng. Syst. 2023, 16. [Google Scholar] [CrossRef]

- Butt, N.; Chauvenet, A.L.; Adams, V.M.; Beger, M.; Gallagher, R.V.; Shanahan, D.F.; Ward, M.; Watson, J.E.; Possingham, H.P. Importance of species translocations under rapid climate change. Conserv. Biol. 2021, 35, 775–783. [Google Scholar] [CrossRef] [PubMed]

- Sueur, J.; Krause, B.; Farina, A. Climate change is breaking earth’s beat. Trends Ecol. Evol. 2019, 34, 971–973. [Google Scholar] [CrossRef]

- Tittensor, D.P.; Beger, M.; Boerder, K.; Boyce, D.G.; Cavanagh, R.D.; Cosandey-Godin, A.; Crespo, G.O.; Dunn, D.C.; Ghiffary, W.; Grant, S.M.; et al. Integrating climate adaptation and biodiversity conservation in the global ocean. Sci. Adv. 2019, 5, eaay9969. [Google Scholar] [CrossRef] [PubMed]

- Kim, B.; Yang, S.; Kim, J.; Chang, S. QTI submission to DCASE 2021: Residual normalization for device-imbalanced acoustic scene classification with efficient design. arXiv 2022, arXiv:2206.13909. [Google Scholar]

- Mielke, A.; Zuberbühler, K. A method for automated individual, species and call type recognition in free-ranging animals. Anim. Behav. 2013, 86, 475–482. [Google Scholar] [CrossRef]

- Nanni, L.; Costa, Y.M.; Lucio, D.R.; Silla, C.N.; Brahnam, S. Combining visual and acoustic features for bird species classification. In Proceedings of the 2016 IEEE 28th International Conference on Tools with Artificial Intelligence (ICTAI), San Jose, CA, USA, 6–8 December 2016; pp. 396–401. [Google Scholar]

- Pérez-Granados, C.; Bota, G.; Giralt, D.; Traba, J. A cost-effective protocol for monitoring birds using autonomous recording units: A case study with a night-time singing passerine. Bird Study 2018, 65, 338–345. [Google Scholar] [CrossRef]

- Ruff, Z.J.; Lesmeister, D.B.; Duchac, L.S.; Padmaraju, B.K.; Sullivan, C.M. Automated identification of avian vocalizations with deep convolutional neural networks. Remote. Sens. Ecol. Conserv. 2020, 6, 79–92. [Google Scholar] [CrossRef]

- Liu, H.; Liu, F.; Fan, X.; Huang, D. Polarized self-attention: Towards high-quality pixel-wise regression. arXiv 2021, arXiv:2107.00782. [Google Scholar]

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M. Transformers: State-of-the-art natural language processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020; pp. 38–45.

- Xie, J.; Zhong, Y.; Zhang, J.; Liu, S.; Ding, C.; Triantafyllopoulos, A. A review of automatic recognition technology for bird vocalizations in the deep learning era. Ecol. Inform. 2022, 73, 101927. [Google Scholar] [CrossRef]

| Dataset | Training Set (Classes) | Validation Set (Classes) | Testing Set (Classes) |
|---|---|---|---|
| Birdsdata | 8587 (20) | 2862 (20) | 2862 (20) |
| CBC | 68,573 (264) | 22,857 (264) | 22,857 (264) |
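The split sizes in the table above imply the partition ratio directly. The following is a minimal sketch that recomputes each subset's share from the tabulated counts; the names `SPLITS` and `split_fractions` are illustrative and not taken from the article. For both datasets the counts work out to roughly 60% training, 20% validation, and 20% testing.

```python
# Sample counts copied from the dataset partition table.
# The dictionary layout and function name are illustrative assumptions.
SPLITS = {
    "Birdsdata": {"train": 8587, "validation": 2862, "test": 2862},
    "CBC": {"train": 68573, "validation": 22857, "test": 22857},
}


def split_fractions(counts):
    """Return each subset's share of the total number of samples."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


for dataset, counts in SPLITS.items():
    fractions = split_fractions(counts)
    summary = ", ".join(f"{name}: {share:.1%}" for name, share in fractions.items())
    print(f"{dataset} ({sum(counts.values())} samples) -> {summary}")
```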

| Designation | Parameters |
|---|---|
| CPU | 13th Gen Intel Core i7-13700KF |
| Memory | 32 GB DDR5 |
| GPU | NVIDIA GeForce RTX 3070 |
| System platform | Windows 10 |
| Software environment | Tensorflow-gpu 2.8.0, Keras 2.8.0, CUDA 11.4, Anaconda 3 |

| Experiment | Model | Features | Accuracy (Birdsdata) | Accuracy (CBC) | Recall (Birdsdata) | Recall (CBC) | F1 Score (Birdsdata) | F1 Score (CBC) | Precision (Birdsdata) | Precision (CBC) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | ResNet50+softmax [38] | log-mel | 94.5% | 61.2% | 94.1% | 60.3% | 91.0% | 60.5% | 94.4% | 62.0% |
| 2 | EfficientNetB3+softmax [37] | log-mel | 95.2% | 76.5% | 91.5% | 76.1% | 92.6% | 74.3% | 92.8% | 74.5% |
| 3 | DenseNet121+softmax [55] | log-mel | 93.5% | 70.9% | 93.1% | 70.2% | 90.7% | 68.8% | 91.7% | 71.3% |
| 4 | VGG16+softmax [56] | log-mel | 94.2% | 64.1% | 92.1% | 61.5% | 90.8% | 63.9% | 92.8% | 64.0% |
| 5 | EfficientNetB3+ResNet50+softmax | log-mel | 96.0% | 81.3% | 93.6% | 80.7% | 94.1% | 80.2% | 94.8% | 81.3% |
| 6 | EfficientNetB3+ResNet50+LightGBM | log-mel | 97.1% | 90.8% | 96.0% | 89.2% | 93.9% | 90.6% | 93.8% | 90.2% |
| 7 | EfficientNetB3+ResNet50+softmax | MFCC+Chroma+Tonnetz | 85.6% | 71.4% | 85.5% | 66.3% | 83.9% | 68.7% | 85.0% | 67.3% |
| 8 | LightGBM [39] | MFCC+Chroma+Tonnetz | 88.5% | 81.2% | 86.3% | 81.2% | 87.6% | 79.8% | 88.6% | 81.5% |
| 9 | Transformer encoder+LightGBM [28,39] | Chroma+Tonnetz | 81.6% | 78.5% | 82.3% | 76.9% | 82.9% | 76.6% | 82.9% | 77.0% |
| 10 | Transformer encoder+LightGBM | MFCC+Chroma+Tonnetz | 89.4% | 83.1% | 89.2% | 82.9% | 88.5% | 80.2% | 89.6% | 80.5% |
| 11 | Transformer encoder+LightGBM | MFCC+Chroma+Tonnetz+Spectral contrast | 88.5% | 83.5% | 89.0% | 81.7% | 87.9% | 82.2% | 89.3% | 82.1% |
| 12 | BirdNET [57] | spectrogram | 86.7% | 68.3% | 87.9% | 66.5% | 86.3% | 68.1% | 86.5% | 67.9% |
| 13 | AMResNet [30] | log-mel+Spectral contrast+Chroma+Tonnetz | 88.7% | 82.1% | 88.0% | 82.4% | 88.5% | 82.1% | 88.1% | 81.9% |
| 14 | Method proposed in this article | log-mel+MFCC+Chroma+Tonnetz | 98.0% | 93.2% | 96.1% | 92.4% | 96.9% | 93.1% | 97.8% | 93.3% |

| Classes | Accuracy (%) | Recall (%) | Precision (%) | F1 Score (%) | Samples |
|---|---|---|---|---|---|
| Gray Goose | 99.6 | 96.1 | 94.6 | 95.3 | 127 |
| Whooper Swan | 99.8 | 98.7 | 99.4 | 99.0 | 160 |
| Mallard | 99.7 | 98.0 | 97.4 | 97.7 | 153 |
| Green-Winged Teal | 99.9 | 100 | 98.4 | 99.1 | 120 |
| Grey Partridge | 99.9 | 66.7 | 100 | 80.0 | 6 |
| Common Quail | 99.9 | 99.3 | 100 | 99.6 | 148 |
| Common Pheasant | 99.6 | 96.8 | 96.2 | 96.5 | 159 |
| Red-Throated Loon | 99.8 | 98.2 | 98.8 | 98.5 | 167 |
| Gray Heron | 99.8 | 98.2 | 98.8 | 98.5 | 170 |
| Great Cormorant | 99.8 | 99.4 | 97.1 | 98.3 | 171 |
| Northern Goshawk | 99.6 | 97.3 | 95.3 | 96.3 | 147 |
| Eurasian Buzzard | 99.6 | 87.9 | 92.7 | 90.3 | 58 |
| Water Rail | 99.6 | 96.3 | 98.2 | 95.3 | 136 |
| Common Coot | 99.9 | 97.8 | 98.9 | 98.3 | 92 |
| Black-Winged Stilt | 99.9 | 98.7 | 100 | 99.3 | 157 |
| Northern Lapwing | 99.9 | 99.4 | 99.3 | 99.4 | 163 |
| Green Sandpiper | 99.9 | 98.6 | 99.3 | 98.9 | 142 |
| Common Redshank | 99.8 | 97.5 | 98.7 | 98.0 | 158 |
| Wood Sandpiper | 99.9 | 99.4 | 98.8 | 99.0 | 165 |
| Eurasian Tree Sparrow | 99.5 | 98.3 | 96.3 | 97.3 | 239 |
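The F1 score column in the per-class table can be related to the precision and recall columns through the standard harmonic-mean definition, F1 = 2PR / (P + R). The sketch below assumes that definition (the article's own evaluation code is not shown here) and uses a hypothetical helper `f1_score` applied to two rows copied from the table.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both on the same scale, e.g. percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %) pairs copied from the per-class results table.
rows = {
    "Gray Goose": (94.6, 96.1),
    "Grey Partridge": (100.0, 66.7),
}

for name, (precision, recall) in rows.items():
    print(f"{name}: F1 = {f1_score(precision, recall):.1f}%")
```

For these two rows the recomputed values round to 95.3% and 80.0%, matching the table. The Grey Partridge row also shows how a class with only 6 test samples can combine perfect precision with low recall: every prediction of that class was correct, but some of its samples were assigned to other classes.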

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, S.; Gao, Y.; Cai, J.; Yang, H.; Zhao, Q.; Pan, F. A Novel Bird Sound Recognition Method Based on Multifeature Fusion and a Transformer Encoder. Sensors 2023, 23, 8099. https://doi.org/10.3390/s23198099
