A Flower Pollination Optimization Algorithm Based on Cosine Cross-Generation Differential Evolution

Abstract

1. Introduction

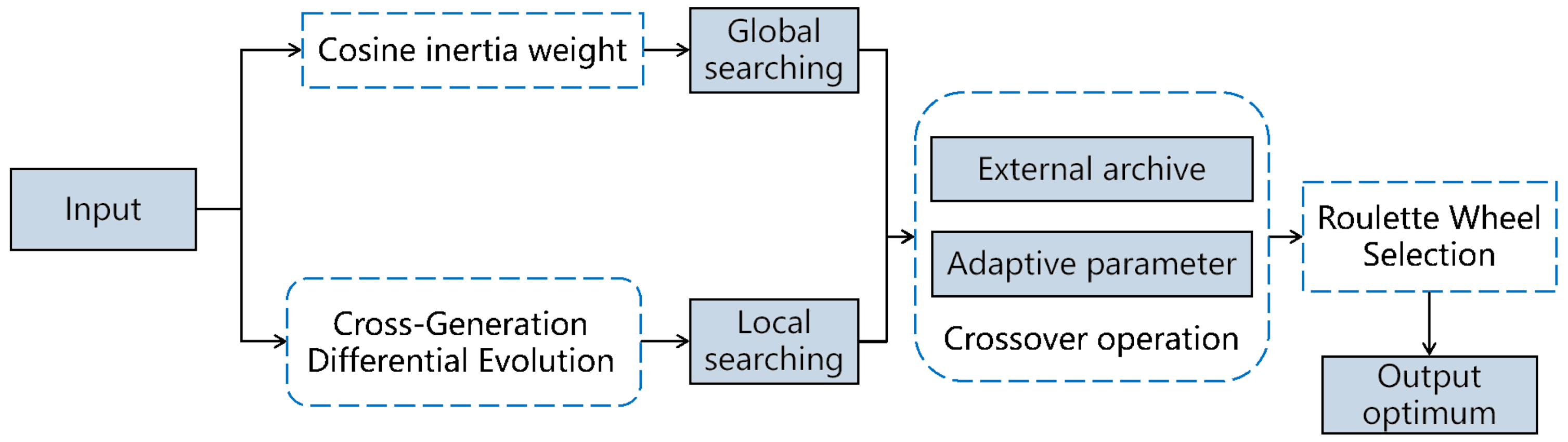

2. Preliminary Review

3. The Flower Pollination Algorithm Based on Cosine Cross-Generation Differential Evolution (FPA-CCDE)

3.1. Cosine Inertia Weight

3.2. Cross-Generation Differential Evolution

3.3. External Archiving Mechanism

3.4. Parameter Adaptive Adjustment Mechanism

3.5. Cross-Generation Roulette Wheel Selection

- For each pollen individual i, a mapping weight is derived from its fitness value. The cross-generation roulette wheel selection strategy selects appropriate parameters within the target scope. Consequently, it can eliminate inappropriate parameters and reduce the probability that the parameters converge completely. The specific actions are broken down into the following steps.

Algorithm 1. Flower Pollination Algorithm Based on Cosine Cross-Generation Differential Evolution (FPA-CCDE)
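The roulette wheel selection described in Section 3.5 can be illustrated with a minimal sketch. It shows only generic fitness-proportional selection and does not reproduce the cross-generation bookkeeping of the FPA-CCDE. The function names `mapping_weights` and `roulette_select`, and the particular mapping from fitness to weight (distance to the worst fitness, for a minimization problem), are assumptions made for illustration rather than the mapping used in the paper.

```python
import random
from typing import List, Sequence


def mapping_weights(fitness: Sequence[float]) -> List[float]:
    """Map fitness values (lower is better) to weights that sum to 1.

    Assumption: the weight is proportional to (worst - f_i + eps), so the
    best individual receives the largest weight. This is an illustrative
    choice, not the paper's formula.
    """
    worst = max(fitness)
    eps = 1e-12  # keeps every weight strictly positive
    raw = [worst - f + eps for f in fitness]
    total = sum(raw)
    return [r / total for r in raw]


def roulette_select(weights: Sequence[float], rng: random.Random) -> int:
    """Return an index drawn with probability proportional to its weight."""
    threshold = rng.random() * sum(weights)
    cumulative = 0.0
    for index, weight in enumerate(weights):
        cumulative += weight
        if threshold < cumulative:
            return index
    return len(weights) - 1  # guard against floating-point round-off
```

Calling `roulette_select(mapping_weights(fitness), random.Random(0))` repeatedly favours individuals with better fitness while still giving weaker ones a non-zero chance, which is the property the selection strategy relies on to avoid complete convergence of the parameters.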

4. Evaluation of the FPA-CCDE

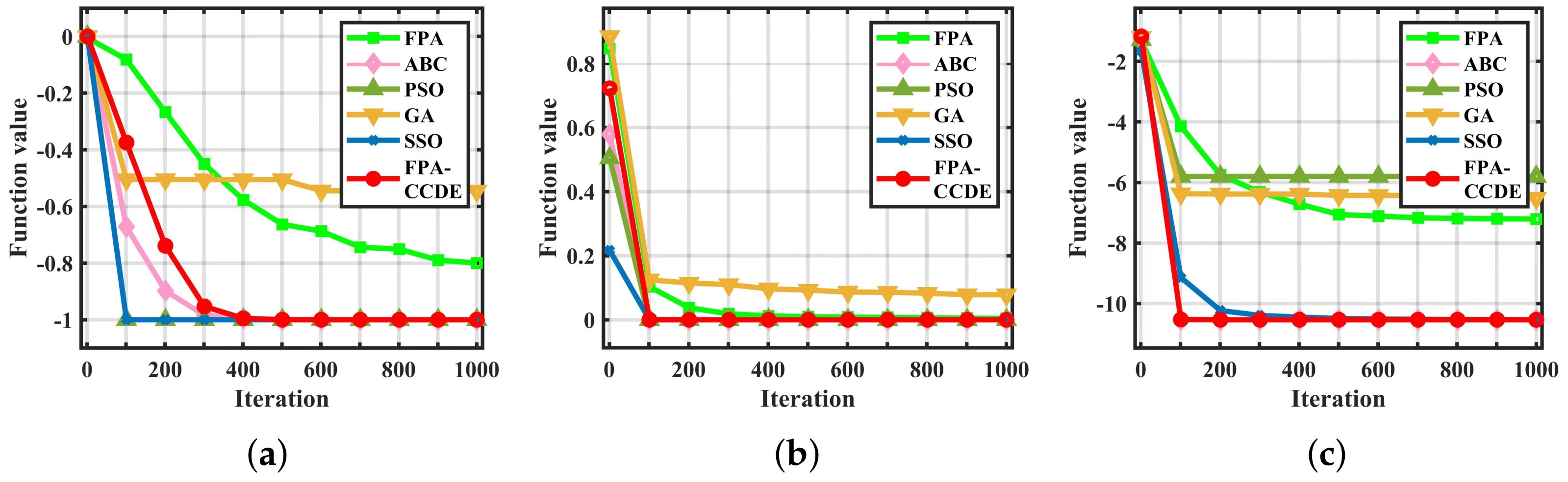

4.1. Performance Comparison on Low-Dimensional Benchmark Functions

| Functions | FPA-CCDE | FPA [14] | ABC [15] | PSO [16] | GA [17] | SSO [18] |

|---|---|---|---|---|---|---|

| F2 | 3.1021 × 10 (4.3388 × 10) | 4.1451 × 10 (7.0754 × 10) | 6.8256 × 10 (1.3239 × 10) | 0.0000 × 10 (0.0000 × 10) | 7.8197 × 10 (1.6845 × 10) | 1.5299 × 10 (1.7006 × 10) |

| F3 | 0.0000 × 10 (0.0000 × 10) | 2.2574 × 10 (2.2744 × 10) | 0.0000 × 10 (0.0000 × 10) | 0.0000 × 10 (0.0000 × 10) | 6.2051 × 10 (2.9506 × 10) | 1.9375 × 10 (1.4281 × 10) |

| F4 | 0.0000 × 10 (0.0000 × 10) | 1.7376 × 10 (1.3740 × 10) | 2.8247 × 10 (1.7454 × 10) | 0.0000 × 10 (0.0000 × 10) | 4.7987 × 10 (3.1709 × 10) | 1.2790 × 10 (5.9245 × 10) |

| F5 | −2.0626 × 10 (3.1346 × 10) | −2.0626 × 10 (1.8779 × 10) | −2.0626 × 10 (9.0649 × 10) | −2.0626 × 10 (9.0649 × 10) | −2.0625 × 10 (1.3597 × 10) | −2.0626 × 10 (1.2666 × 10) |

| F7 | −1.0000 × 10 (0.0000 × 10) | −9.5125 × 10 (2.7284 × 10) | −1.0000 × 10 (0.0000 × 10) | −1.0000 × 10 (0.0000 × 10) | −9.5125 × 10 (1.1331 × 10) | −9.9999 × 10 (4.6850 × 10) |

| F8 | −1.0000 × 10 (0.0000 × 10) | −7.9984 × 10 (3.5024 × 10) | −9.9999 × 10 (8.4234 × 10) | −1.0000 × 10 (0.0000 × 10) | −5.4542 × 10 (4.9345 × 10) | −1.0000 × 10 (2.1452 × 10) |

| F14 | 3.0000 × 10 (2.0121 × 10) | 3.0024 × 10 (3.0070 × 10) | 3.0000 × 10 (1.8198 × 10) | 3.0000 × 10 (1.2128 × 10) | 3.9060 × 10 (1.0226 × 10) | 3.00004 × 10 (3.6979 × 10) |

| F16 | −3.8627 × 10 (1.8995 × 10) | −3.8627 × 10 (7.5327 × 10) | −3.8627 × 10 (2.7194 × 10) | −3.8627 × 10 (2.6691 × 10) | −3.8627 × 10 (1.8206 × 10) | −3.8627 × 10 (4.2692 × 10) |

| F17 | −3.0679 × 10 (3.1312 × 10) | −3.0155 × 10 (2.9497 × 10) | −3.0424 × 10 (9.0649 × 10) | −3.0031 × 10 (3.0102 × 10) | −3.0113 × 10 (2.8284 × 10) | −3.0305 × 10 (2.2595 × 10) |

| F18 | −1.9208 × 10 (5.4266 × 10) | −1.9207 × 10 (1.4184 × 10) | −1.9208 × 10 (7.7768 × 10) | −1.9208 × 10 (6.1960 × 10) | −1.9207 × 10 (5.2294 × 10) | −1.9208 × 10 (8.5032 × 10) |

| F20 | −1.8013 × 10 (4.2850 × 10) | −1.8011 × 10 (2.7689 × 10) | −1.8013 × 10 (6.7987 × 10) | −1.8013 × 10 (6.7987 × 10) | −1.8011 × 10 (8.7772 × 10) | −1.8013 × 10 (2.0978 × 10) |

| F22 | 2.9197 × 10 (2.5958 × 10) | 1.2187 × 10 (6.1220 × 10) | 1.8688 × 10 (1.7140 × 10) | 1.4393 × 10 (2.6987 × 10) | 4.4791 × 10 (9.9631 × 10) | 5.7462 × 10 (1.6986 × 10) |

| F28 | 0.0000 × 10 (0.0000 × 10) | 5.0174 × 10 (5.2430 × 10) | 1.5375 × 10 (3.4115 × 10) | 0.0000 × 10 (0.0000 × 10) | 1.0890 × 10 (1.7702 × 10) | 3.8345 × 10 (1.0001 × 10) |

| F30 | −1.0536 × 10 (1.1934 × 10) | −7.2096 × 10 (3.4956 × 10) | −1.0536 × 10 (6.2565 × 10) | 5.8020 × 10 (3.3779 × 10) | −6.5184 × 10 (3.1127 × 10) | −1.0533 × 10 (1.5542 × 10) |

| F31 | −1.0316 × 10 (2.1531 × 10) | −1.0319 × 10 (3.8595 × 10) | −1.0316 × 10 (2.2662 × 10) | −1.0316 × 10 (2.2662 × 10) | −1.0302 × 10 (1.2697 × 10) | −1.0316 × 10 (1.1275 × 10) |

| F32 | −1.9410 × 10 (3.2685 × 10) | −1.9410 × 10 (1.2658 × 10) | −1.9410 × 10 (4.5324 × 10) | −1.9410 × 10 (4.9650 × 10) | −1.9410 × 10 (1.0748 × 10) | −1.9410 × 10 (8.4117 × 10) |

| F35 | 4.5477 × 10 (2.8866 × 10) | 7.0076 × 10 (1.3636 × 10) | 1.8304 × 10 (1.5938 × 10) | 4.4158 × 10 (2.2079 × 10) | 1.3759 × 10 (1.3743 × 10) | 1.40547 × 10 (1.24796 × 10) |

| F36 | −1.5198 × 10 (2.1675 × 10) | 3.5801 × 10 (1.3014 × 10) | −1.3389 × 10 (1.1533 × 10) | −9.2442 × 10 (5.3658 × 10) | 1.4270 × 10 (4.1396 × 10) | −1.4922 × 10 (5.4167 × 10) |

| +/=/− | – | 15/3/0 | 8/10/0 | 5/12/1 | 16/2/0 | 14/4/0 |

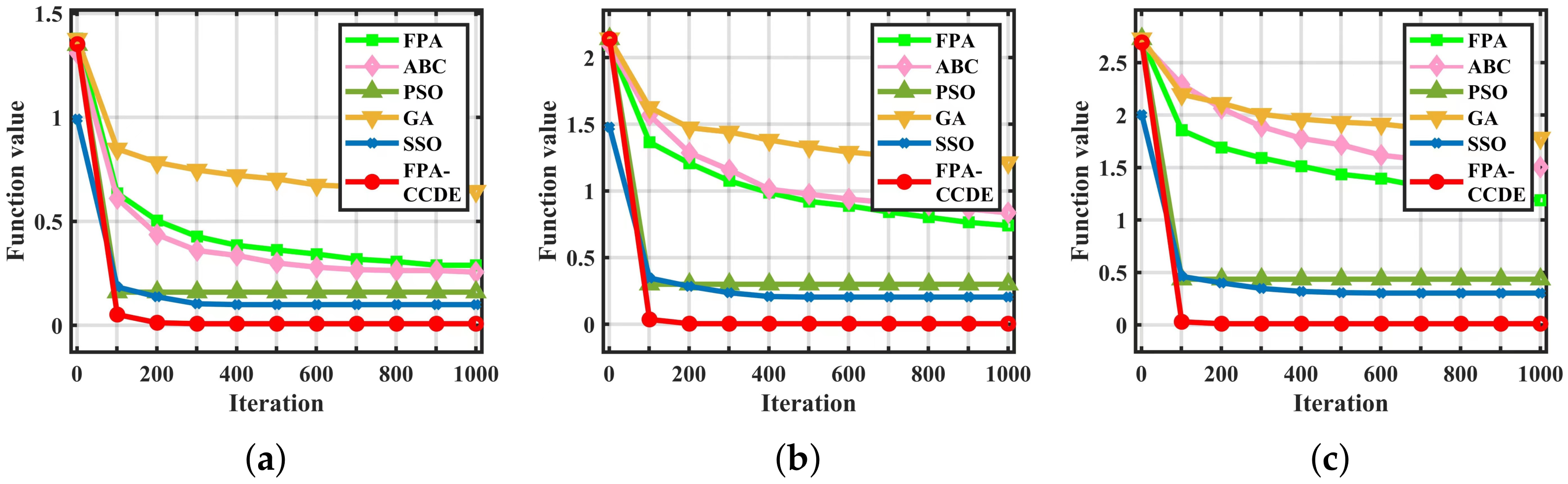

4.2. Performance Comparison on High-Dimensional Benchmark Functions

| Functions | FPA-CCDE | FPA | ABC | PSO | GA | SSO |

|---|---|---|---|---|---|---|

| F1 | 9.4147 × (1.3831 × ) | 1.5080 × (9.7566 × ) | 5.7545 × (2.5028 × ) | 4.1650 × (6.5479 × ) | 1.6527 × (5.8660 × ) | 2.9000 × (3.3065 × ) |

| F6 | 1.5782 × (1.2026 × ) | 3.2860 × (1.8733 × ) | 1.0434 × (5.7614 × ) | 6.9923 × (3.3423 × ) | 3.5530 × (1.0824 × ) | 2.5406 × (9.0102 × ) |

| F15 | 0.0000 × (0.0000 × ) | 7.8836 × (2.0933 × ) | 5.0017 × (2.5007 × ) | 8.5954 × (4.1484 × ) | 1.8969 × (2.5099 × ) | 1.8620 × (1.2621 × ) |

| F19 | 1.4998 × (2.5761 × ) | 3.5660 × (8.0118 × ) | 4.8711 × (3.3297 × ) | 1.5369 × (9.8456 × ) | 7.0606 × (9.2977 × ) | 1.4748 × (2.3450 × ) |

| F21 | 7.8109 × (1.9111 × ) | 1.1668 × (2.9367 × ) | 1.1511 × (2.0025 × ) | 6.2434 × (8.4791 × ) | 1.1301 × (4.5514 × ) | 3.5535 × (8.1652 × ) |

| F23 | 1.7355 × (3.0057 × ) | 4.9245 × (1.8600 × ) | 4.3686 × (1.3277 × ) | 4.9038 × (3.2106 × ) | 4.0988 × (1.0626 × ) | 1.6126 × (5.5078 × ) |

| F24 | −1.4344 × (3.6431 × ) | −4.9410 × (3.8186 ×) | −1.2208 × (2.2864 ×) | −3.2450 × (3.5118 ×) | −5.6765 × (1.8977 ×) | −7.9893 × (8.4372 ×) |

| F25 | 1.1137 × (3.0381 × ) | 2.3523 × (1.6087 × ) | 1.8038 × (7.4305 × ) | 3.9470 × (8.9127 × ) | 2.4371 × (1.7642 × ) | 4.7235 × (1.0067 × ) |

| F26 | 0.0000 × (0.00000 × ) | 2.3426 × (1.2743 × ) | 2.7476 × (2.0968 × ) | 1.8593 × (6.6331 × ) | 2.5259 × (9.1306 × ) | 6.7862 × (3.6053 × ) |

| F27 | 9.5293 × (3.1604 × ) | 4.7212 × (1.3112 × ) | 1.6450 × 11 (1.4457 × 11) | 3.3784 × (2.8782 × ) | 1.2034 × (2.3265 × ) | 1.1852 × (2.7957 × ) |

| F29 | 1.2451 × (3.7184 × ) | 6.9380 × (4.9156 × ) | 1.6629 × (1.1654 × ) | 9.2941 × (3.6015 × ) | 5.9258 × (4.1609 × ) | 6.7862 × (3.6053 × ) |

| F33 | 0.0000 × (0.0000 × ) | 8.9432 × (2.3352 × ) | 0.0000 × (0.0000 × ) | 6.5480 × (4.5312 × ) | 2.2026 × (2.8696 × ) | 2.4000 × (4.3589 × ) |

| F34 | 6.2388 × (1.1777 × ) | 1.3207 × (1.5938 × ) | 3.0464 × (8.7926 × ) | 2.0137 × (1.0508 × ) | 1.4142 × (1.2205 × ) | 2.9222 × (5.1325 × ) |

| F37 | 1.6338 × (2.1111 × ) | 1.2515 × (1.2515 × ) | 2.4360 × (2.6737 × ) | 9.5543 × (4.8483 × ) | 2.5296 × (4.6531 × ) | 5.0225 × (1.1329 × ) |

| +/=/− | – | 14/0/0 | 12/1/1 | 14/0/0 | 14/0/0 | 14/0/0 |

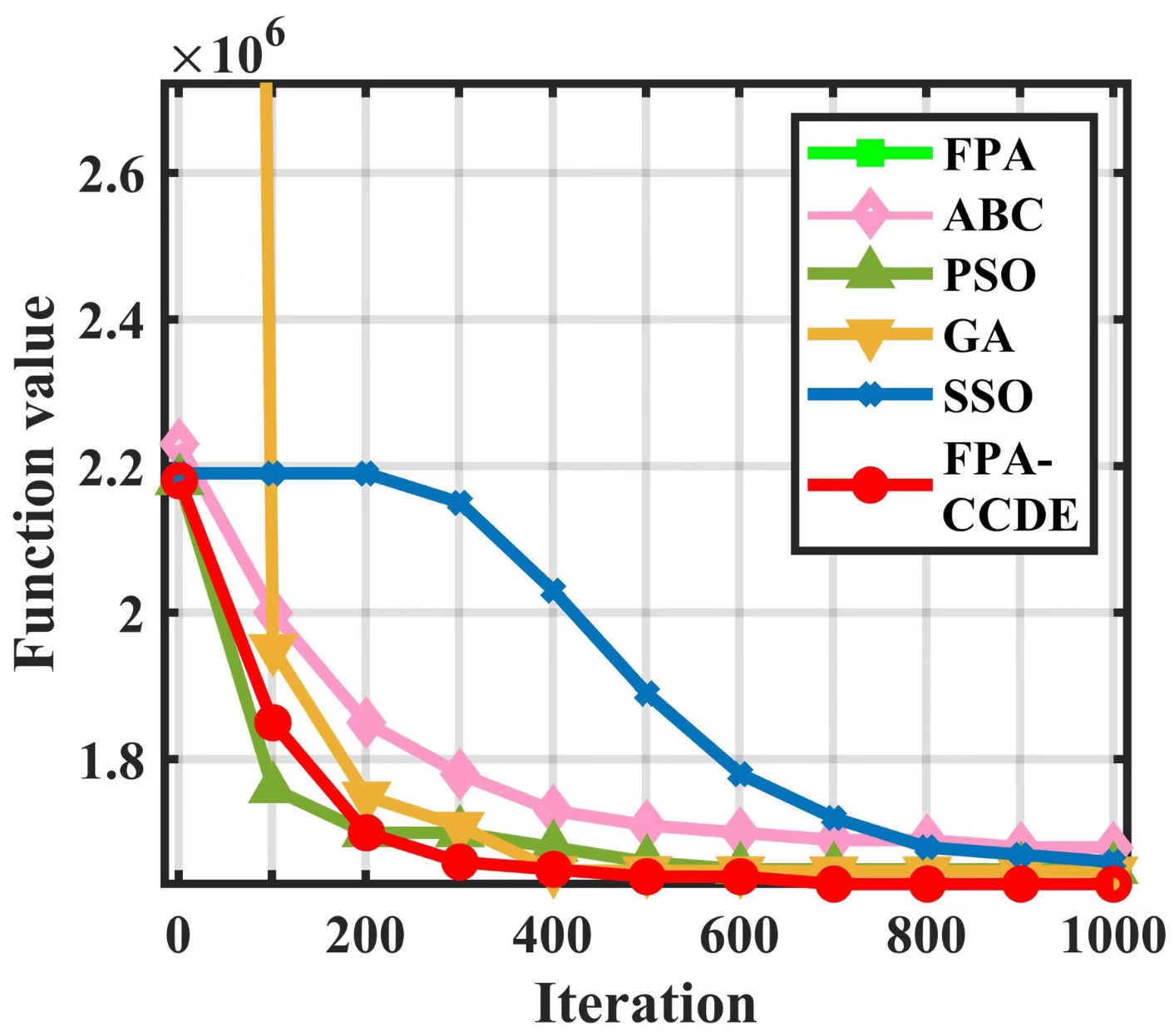

4.3. Performance Comparison on Scalable Benchmark Functions

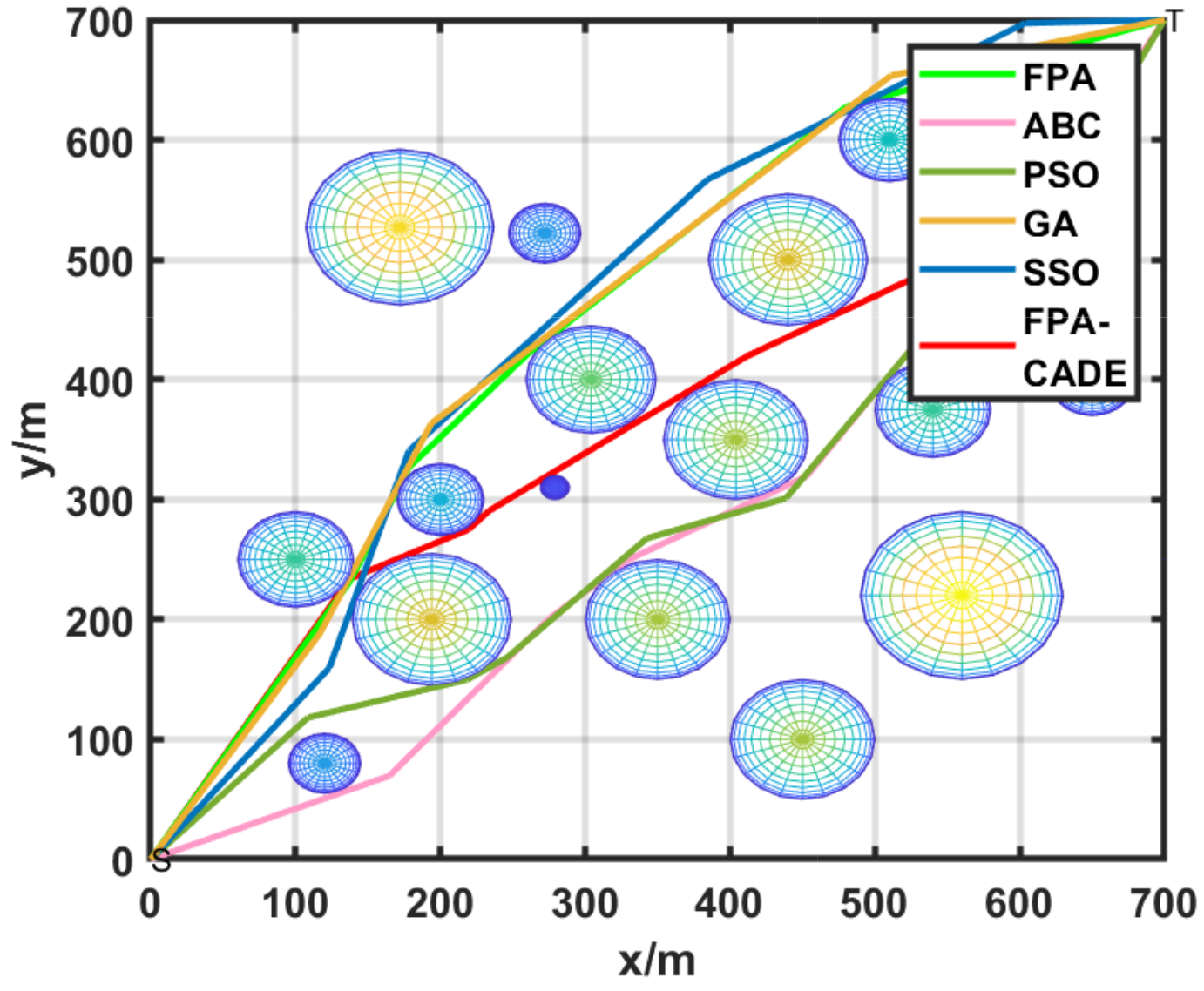

5. Application of the FPA-CCDE in Inspection Robot Path Planning

- The last two constraints concern the velocity and acceleration of the robot, namely its linear velocity, angular velocity, linear acceleration, and angular acceleration. Each of these quantities is bounded by a maximum value: the maximum linear velocity, the maximum angular velocity, the maximum linear acceleration, and the maximum angular acceleration. On the basis of the above constraints, we consider four costs: energy cost, steering cost, threat attacking cost, and time cost.
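The velocity and acceleration limits can be checked with a short sketch. It estimates the four quantities by finite differences along a 2-D waypoint path and compares their magnitudes with the maxima. The finite-difference form, the fixed time step `dt`, the use of radians, and the names `kinematics` and `is_feasible` are assumptions for illustration; the paper's own definitions are not reproduced here.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def wrap_angle(angle: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def kinematics(path: Sequence[Point], dt: float):
    """Finite-difference estimates along a path sampled every dt seconds.

    Returns lists of linear velocity, angular velocity, linear acceleration
    and angular acceleration (assumed definitions, not the paper's).
    """
    segments = [(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(path, path[1:])]
    v = [math.hypot(dx, dy) / dt for dx, dy in segments]
    heading = [math.atan2(dy, dx) for dx, dy in segments]
    w = [wrap_angle(h1 - h0) / dt for h0, h1 in zip(heading, heading[1:])]
    a = [(v1 - v0) / dt for v0, v1 in zip(v, v[1:])]
    alpha = [(w1 - w0) / dt for w0, w1 in zip(w, w[1:])]
    return v, w, a, alpha


def is_feasible(path: Sequence[Point], dt: float, v_max: float,
                w_max: float, a_max: float, alpha_max: float) -> bool:
    """True if every estimated quantity stays within its maximum magnitude."""
    v, w, a, alpha = kinematics(path, dt)
    limits = ((v, v_max), (w, w_max), (a, a_max), (alpha, alpha_max))
    return all(abs(x) <= bound for values, bound in limits for x in values)
```

A candidate path produced by the optimizer could be passed to `is_feasible` before its cost is evaluated, so that infeasible paths are rejected or penalized.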

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Liang, Y.C.; Smith, A.E. An ant colony optimization algorithm for the redundancy allocation problem (RAP). IEEE Trans. Reliab. 2004, 53, 417–423.

- Ma, Z.; Ai, B.; He, R.; Wang, G.; Zhong, Z. Impact of UAV Rotation on MIMO Channel Characterization for Air-to-Ground Communication Systems. IEEE Trans. Veh. Technol. 2020, 69, 12418–12431.

- Pandey, P.; Shukla, A.; Tiwari, R. Aerial path planning using meta-heuristics: A survey. In Proceedings of the 2017 Second International Conference on Electrical, Computer and Communication Technologies (ICECCT), Coimbatore, India, 22–24 February 2017; pp. 1–7.

- Song, B.; Qi, G.; Xu, L. A Survey of Three-Dimensional Flight Path Planning for Unmanned Aerial Vehicle. In Proceedings of the 2019 Chinese Control And Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; pp. 5010–5015.

- Song, Y.; Zhang, K.; Hong, X.; Li, X. A novel multi-objective mutation flower pollination algorithm for the optimization of industrial enterprise R&D investment allocation. Appl. Soft Comput. 2021, 109, 107530.

- Wei, S.; Zhang, S.J.; Cheng, Y.F. An Improved Multi-Objective Genetic Algorithm for Large Planar Array Thinning. IEEE Trans. Magn. 2016, 52, 1–4.

- Eberhart, R.; Kennedy, J. A new optimizer using particle swarm theory. In Proceedings of the Mhs95 Sixth International Symposium on Micro Machine and Human Science, Nagoya, Japan, 4–6 October 2002.

- Cao, H.; Hu, H.; Qu, Z.; Yang, L. Heuristic solutions of virtual network embedding: A survey. China Commun. 2018, 15, 186–219.

- Pan, L.; Feng, X.; Sang, F.; Li, L.; Leng, M. An improved back propagation neural network based on complexity decomposition technology and modified flower pollination optimization for short-term load forecasting. Neural Comput. Appl. 2019, 31, 2679–2697.

- Gan, C.; Cao, W.H.; Liu, K.Z.; Wu, M.; Zhang, S.B. A New Hybrid Bat Algorithm and its Application to the ROP Optimization in Drilling Processes. IEEE Trans. Ind. Inform. 2020, 16, 7338–7348.

- San-José-Revuelta, L.M.; Casaseca-de-la-Higuera, P. A new flower pollination algorithm for equalization in synchronous DS/CDMA multiuser communication systems. Soft Comput. 2020, 24, 13069–13083.

- Wu, T.; Feng, Z.; Wu, C.; Lei, G.; Wang, X. Multiobjective Optimization of a Tubular Coreless LPMSM Based on Adaptive Multiobjective Black Hole Algorithm. IEEE Trans. Ind. Electron. 2020, 67, 3901–3910.

- Li, J.Q.; Pan, Q.K.; Duan, P.Y. An Improved Artificial Bee Colony Algorithm for Solving Hybrid Flexible Flowshop With Dynamic Operation Skipping. IEEE Trans. Cybern. 2016, 46, 1311–1324.

- Chandran, T.R.; Reddy, A.V.; Janet, B. An effective implementation of Social Spider Optimization for text document clustering using single cluster approach. In Proceedings of the 2018 Second International Conference on Inventive Communication and Computational Technologies (ICICCT), Coimbatore, India, 20–21 April 2018; pp. 508–511.

- Karaboga, D.; Basturk, B. On the performance of artificial bee colony (ABC) algorithm. Appl. Soft Comput. 2008, 687–697.

- Klein, C.E.; Segundo, E.H.V.; Mariani, V.C.; Leandro, D.S.C. Modified Social-Spider Optimization Algorithm Applied to Electromagnetic Optimization. IEEE Trans. Magn. 2016, 52, 1–4.

- Chen, X.; Tang, C.; Jian, W.; Lei, Z. A novel hybrid wolf pack algorithm with harmony search for global numerical optimization. In Proceedings of the 2017 3rd IEEE International Conference on Computer and Communications (ICCC), Chengdu, China, 13–16 December 2017; pp. 2164–2169.

- Huang, M.; Zhan, X.; Liang, X. Improvement of Whale Algorithm and Application. In Proceedings of the 2019 IEEE 7th International Conference on Computer Science and Network Technology (ICCSNT), Dalian, China, 19–20 October 2019; pp. 6–8.

- Sudabattula, S.K.; Kowsalya, M.; Velamuri, S.; Melimi, R.K. Optimal Allocation of Renewable Distributed Generators and Capacitors in Distribution System Using Dragonfly Algorithm. In Proceedings of the 2018 International Conference on Intelligent Circuits and Systems (ICICS), Phagwara, India, 20–21 April 2018; pp. 393–396.

- Wei, G.U. An improved whale optimization algorithm with cultural mechanism for high-dimensional global optimization problems. In Proceedings of the 2020 IEEE International Conference on Information Technology, Big Data and Artificial Intelligence (ICIBA), Chongqing, China, 6–8 November 2020; pp. 1282–1286.

- Peng, J.; Ye, Y.; Chen, S.; Dong, C. A novel chaotic dragonfly algorithm based on sine-cosine mechanism for optimization design. In Proceedings of the 2019 2nd International Conference on Information Systems and Computer Aided Education (ICISCAE), Dalian, China, 28–30 September 2019; pp. 185–188.

- Yang, X.S. Nature-Inspired Metaheuristic Algorithms, 2nd ed.; Xinshe, Y., Ed.; Luniver Press: Frome, UK, 2019.

- Wang, H.; Sun, H.; Li, C. Diversity enhanced particle swarm optimization with neighborhood search. Inf. Sci. 2013, 223, 119–135.

- Tian, M.; Gao, X.; Dai, C. Differential evolution with improved individual-based parameter setting and selection strategy. Appl. Soft Comput. 2017, 56, 286–297.

- Yang, X.S. Flower Pollination Algorithm for Global Optimization. In Proceedings of the Unconventional Computation and Natural Computation: 11th International Conference (UCNC 2012), Orleans, France, 3–7 September 2012; Springer: Berlin/Heidelberg, Germany, 2012.

- Zhou, Y.; Wang, R.; Luo, Q. Elite opposition-based flower pollination algorithm. Neurocomputing 2016, 188, 294–310.

- Bian, J.H.; He, X.T.; Fan, Q.W. Structural optimization of BP neural network based on adaptive flower pollination algorithm. Comput. Eng. Appl. 2018, 54, 50–56.

- Supriya, D.; Palaniandavar, V. An improved global-best-driven flower pollination algorithm for optimal design of two-dimensional FIR filter. Soft Comput. 2019, 23, 8855–8872.

- Yang, X.; Shen, Y.J. An Improved Flower Pollination Algorithm with three Strategies and its Applications. Neural Process. Lett. 2020, 51, 675–695.

- Hui, S.; Suganthan, P.N. Ensemble and Arithmetic Recombination-Based Speciation Differential Evolution for Multimodal Optimization. IEEE Trans. Cybern. 2016, 46, 64–74.

- Qu, B.Y.; Liang, J.J.; Wang, Z.Y.; Liu, D.M. Solving CEC 2015 multi-modal competition problems using neighborhood based speciation differential evolution. In Proceedings of the 2015 IEEE Congress on Evolutionary Computation (CEC), Sendai, Japan, 25–28 May 2015; pp. 3214–3219.

- Li, Y.L.; Zhan, Z.H.; Gong, Y.J.; Chen, W.N.; Zhang, J.; Li, Y. Differential Evolution with an Evolution Path: A DEEP Evolutionary Algorithm. IEEE Trans. Cybern. 2015, 45, 1798–1810.

- Liang, J.J.; Runarsson, T.P.; Mezura-Montes, E.; Clerc, M.; Suganthan, P.N.; Coello, C.C.; Deb, K. Problem definitions and evaluation criteria for the CEC 2006 special session on constrained real-parameter optimization. J. Appl. Mech. 2006, 41, 8–31.

- Tang, K.; Yao, X.; Suganthan, P.N.; Chen, Y.P.; Chen, C.M.; Yang, Z. Benchmark Functions for the CEC’2008 Special Session and Competition on Large Scale Global Optimization; USTC: Hefei, China, 2007.

- Mallipeddi, R.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2010 Competition on Constrained Real-Parameter Optimization. 2007. Available online: https://al-roomi.org/multimedia/CEC_Database/CEC2010/RealParameterOptimization/CEC2010_RealParameterOptimization_TechnicalReport.pdf (accessed on 8 November 2022).

- Liang, J.J.; Qu, B.Y.; Suganthan, P.N.; Hernández-Díaz, A.G. Problem Definitions and Evaluation Criteria for the CEC 2013 Special Session on Real-Parameter Optimization. 2007. Available online: https://al-roomi.org/multimedia/CEC_Database/CEC2013/RealParameterOptimization/CEC2013_RealParameterOptimization_TechnicalReport.pdf (accessed on 8 November 2022).

- Lin, X.; Wang, Z.Q.; Chen, X.Y. Path Planning with Improved Artificial Potential Field Method Based on Decision Tree. In Proceedings of the 2020 27th Saint Petersburg International Conference on Integrated Navigation Systems (ICINS), Saint Petersburg, Russia, 25–27 May 2020.

- Li, B.; Jiang, W.S. Optimizing Complex Functions by Chaos Search. Cybernet. Syst. 1998, 29, 409–419.

- Agafonov, A.; Myasnikov, V. Stochastic On-time Arrival Problem with Levy Stable Distributions. In Proceedings of the 2019 4th International Conference on Intelligent Transportation Engineering (ICITE), Singapore, 5–7 September 2019; pp. 227–231.

- Tarczewski, T.; Grzesiak, L.M. An Application of Novel Nature-Inspired Optimization Algorithms to Auto-Tuning State Feedback Speed Controller for PMSM. IEEE Trans. Ind. Appl. 2018, 54, 2913–2925.

- Qiu, X.; Xu, J.X.; Tan, K.C.; Abbass, H.A. Adaptive Cross-Generation Differential Evolution Operators for Multiobjective Optimization. IEEE Trans. Evol. Comput. 2016, 20, 232–244.

- Chen, Y.; Pi, D. An innovative flower pollination algorithm for continuous optimization problem. Appl. Math. Model. 2020, 83, 237–265.

- Zhang, X.; Zhang, X.; Wang, L. Antenna Design by an Adaptive Variable Differential Artificial Bee Colony Algorithm. IEEE Trans. Magn. 2017, 54, 1–4.

| Algorithm | Parameters Setting |

|---|---|

| FPA | , |

| ABC | |

| PSO | , , |

| SSO | , , |

| GA | , |

| Algorithm | F9 (D = 10) | F9 (D = 30) | F9 (D = 50) | F9 (D = 70) | F9 (D = 100) |

|---|---|---|---|---|---|

| FPA-CCDE | 6.2388 × (1.1777 × ) | 1.19848 × (3.31242 × ) | 7.98987 × (2.76537 × ) | 1.19851 × (3.31241 × ) | 1.19853 × (3.31241 × ) |

| FPA | 2.89070 × (5.25541 × ) | 1.18909 × (1.76364 × ) | 1.91691 × (2.09563 × ) | 2.51704 × (2.33520 × ) | 3.12524 × (3.38376 × ) |

| ABC | 2.55878 × (6.50620 × ) | 1.50392 × (1.78987 × ) | 2.70389 × (1.48553 × ) | 3.64166 × (1.22192 × ) | 4.89987 × (1.35401 × ) |

| PSO | 1.59873 × (5.00000 × ) | 4.35873 × (6.37704 × ) | 6.67873 × (7.48331 × ) | 8.39873 × (8.66025 × ) | 1.06387 × (7.57188 × ) |

| GA | 6.45494 × (1.10375 × ) | 1.78149 × (1.36208 × ) | 2.60254 × (1.28445 × ) | 3.38987 × (1.60031 × ) | 4.27658 × (1.17499 × ) |

| SSO | 9.98733 × (2.54849 × ) | 3.03873 × (4.54606 × ) | 5.11873 × (3.31662 × ) | 6.35873 × (4.89898 × ) | 7.59873 × (5.00000 × ) |

| Parameter Name | Parameter Value | Parameter Name | Parameter Value |

|---|---|---|---|

| Movement time factor () | 1.5 | Maximum angular acceleration (:degree) | 1.0 |

| Threat factor (a) | 1.2 | Maximum movement distance (:metre) | 500 |

| Threat factor (b) | 1.5 | Threat attacking cost weight () | 0.5 |

| Steering coefficient (k) | 2.5 | Energy cost weight () | 0.3 |

| Maximum turning angle () | 60 | Movement time cost weight () | 0.5 |

| Maximum linear velocity () | 1.0 | Steering cost weight () | 0.3 |

| Maximum angular velocity () | 0.8 | Threat target number (N) | 18 |

| Maximum linear acceleration () | 1.0 | Segment number (D) | 15 |
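One plausible way to combine the four costs with the weights listed in the table is a weighted sum. The sketch below assumes that form; the aggregation rule, the class name `CostWeights`, and the function name `total_cost` are illustrative assumptions, and only the default weight values are taken from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostWeights:
    """Cost weights as listed in the parameter table."""
    threat: float = 0.5
    energy: float = 0.3
    time: float = 0.5
    steering: float = 0.3


def total_cost(energy: float, steering: float, threat: float, time: float,
               weights: CostWeights = CostWeights()) -> float:
    """Weighted sum of the four path costs (assumed aggregation rule)."""
    return (weights.energy * energy
            + weights.steering * steering
            + weights.threat * threat
            + weights.time * time)
```

Under this assumption, an optimizer such as the FPA-CCDE would minimize `total_cost` over candidate paths, with the individual cost terms computed from each path beforehand.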

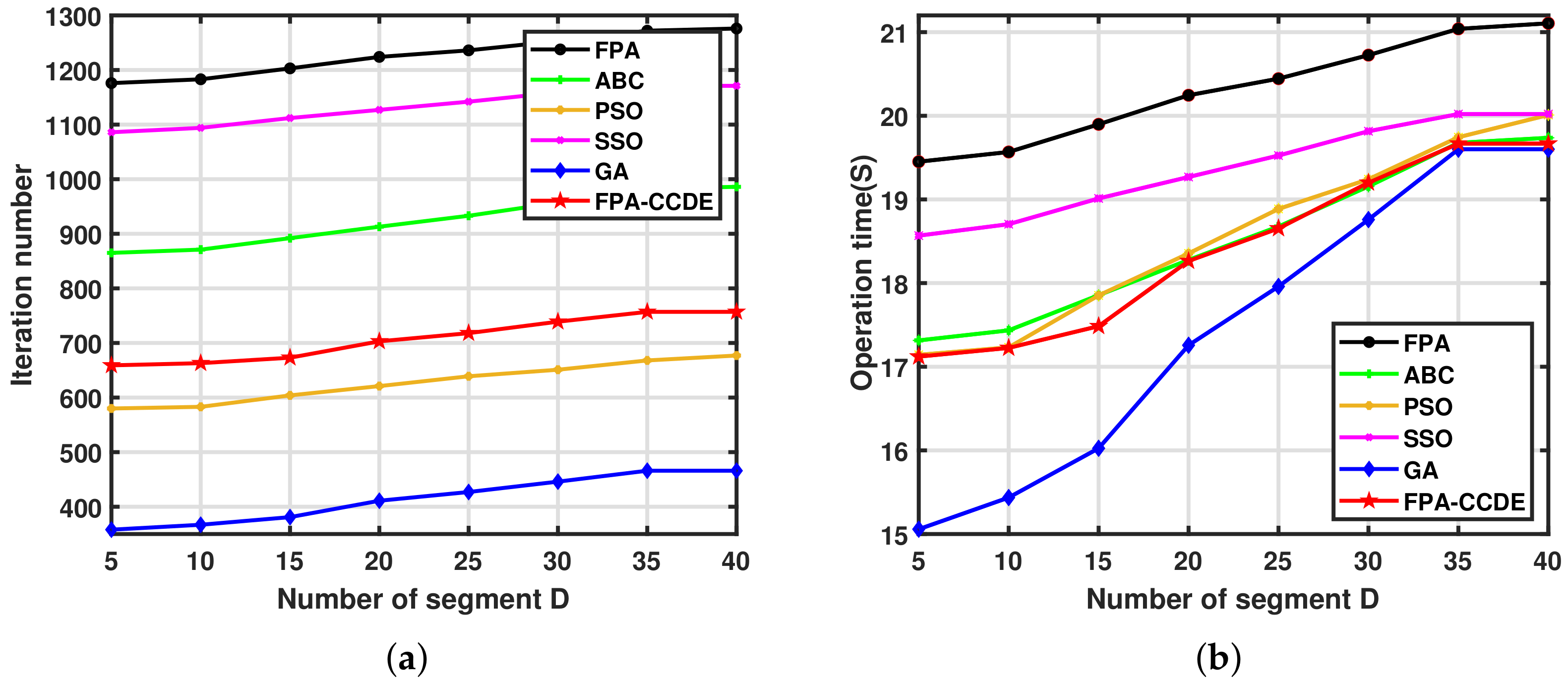

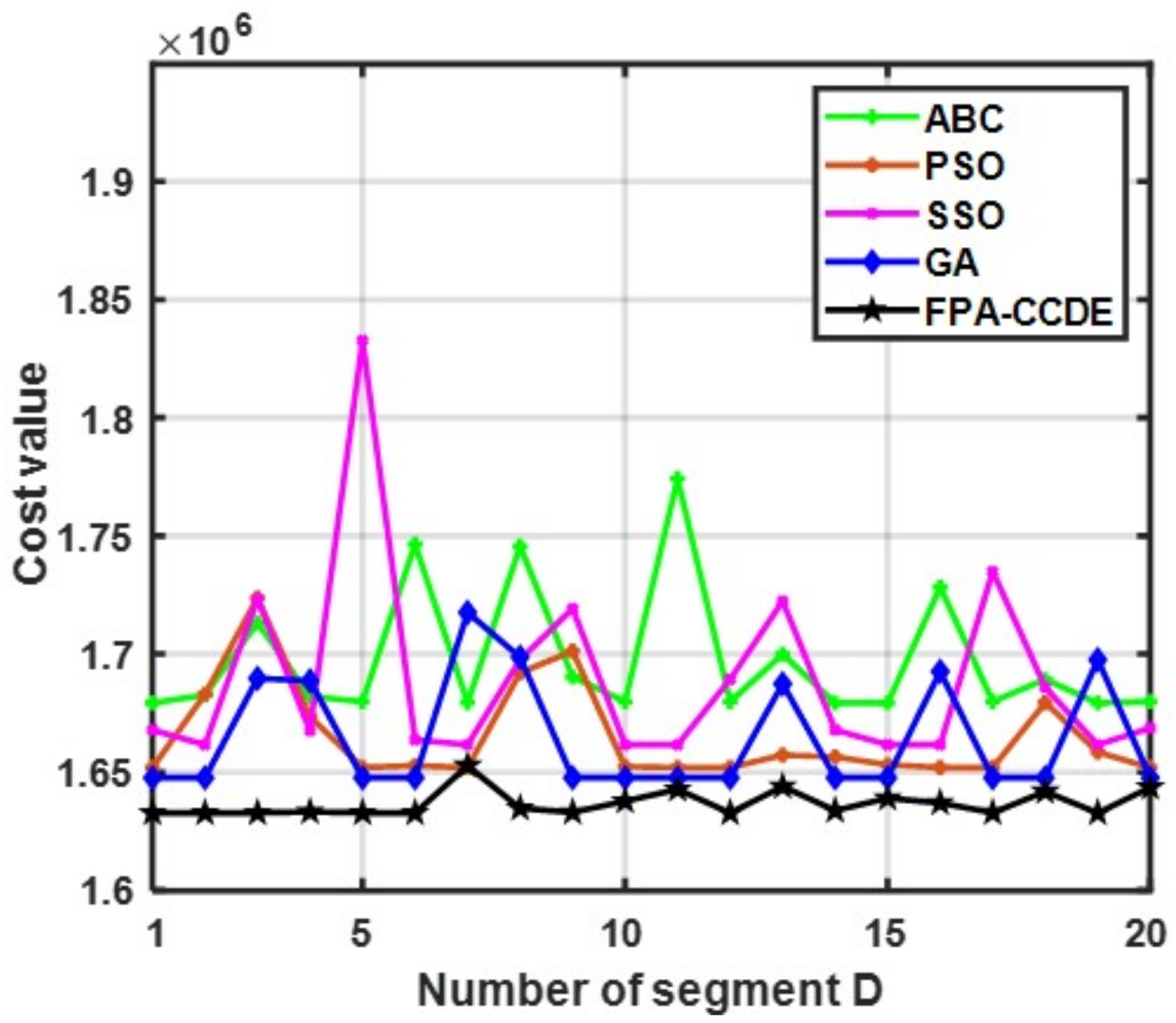

| Algorithm | Convergence Iteration | Time | Running Time | Function Value | Average Error |

|---|---|---|---|---|---|

| FPA | 1224 | 20.24543 | 25.32147 | 4.76025 × | 1.69787 × |

| ABC | 913 | 18.27653 | 20.33333 | 1.67925 × | 3.07283 × |

| PSO | 621 | 18.35461 | 20.00214 | 1.65191 × | 8.06825 × |

| SSO | 1127 | 19.26845 | 21.52612 | 1.66159 × | 1.54797 × |

| GA | 411 | 17.25684 | 20.45647 | 1.64760 × | 2.92722 × |

| FPA-CCDE | 703 | 18.26453 | 20.35951 | 1.63272 × | 2.97392 × |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jia, Y.; Wang, S.; Liang, L.; Wei, Y.; Wu, Y. A Flower Pollination Optimization Algorithm Based on Cosine Cross-Generation Differential Evolution. Sensors 2023, 23, 606. https://doi.org/10.3390/s23020606
