Abstract

Personal identification based on radar gait measurement is an important application of biometric technology because it enables remote and continuous identification of people, irrespective of the lighting conditions and subjects’ outfits. This study explores an effective time-velocity distribution and its relevant parameters for Doppler-radar-based personal gait identification using deep learning. Most conventional studies on radar-based gait identification used a short-time Fourier transform (STFT), which is a general method to obtain time-velocity distribution for motion recognition using Doppler radar. However, the length of the window function that controls the time and velocity resolutions of the time-velocity image was empirically selected, and several other methods for calculating high-resolution time-velocity distributions were not considered. In this study, we compared four types of representative time-velocity distributions calculated from the Doppler-radar-received signals: STFT, wavelet transform, Wigner–Ville distribution, and smoothed pseudo-Wigner–Ville distribution. In addition, the identification accuracies of various parameter settings were also investigated. We observed that the optimally tuned STFT outperformed other high-resolution distributions, and a short length of the window function in the STFT process led to a reasonable accuracy; the best identification accuracy was 99% for the identification of twenty-five test subjects. These results indicate that STFT is the optimal time-velocity distribution for gait-based personal identification using the Doppler radar, although the time and velocity resolutions of the other methods were better than those of the STFT.

1. Introduction

Biometric technology has recently been used extensively in surveillance and monitoring systems, the most common application being camera-based facial recognition for authentication [1,2]. In addition, smart speakers have recently surged in popularity and are also used for personal identification [3]. However, such techniques raise serious privacy concerns. To overcome these privacy issues, personal identification techniques using various types of biometric information, such as fingerprints, irises, veins, brain waves, and heartbeat characteristics, have been investigated [4,5]. However, the acquisition of such biometric information often requires physical contact with the user and/or a complex setup. Therefore, personal identification based on unconstrained daily activities is an important research topic. In particular, gait-based personal identification has recently been studied, as walking is a representative daily activity that can be easily measured and applied for continuous identification [6,7].

Cameras are the primary sensors used for personal identification based on gait information [8,9]; however, they raise privacy issues similar to those of facial recognition, and their accuracy depends on the lighting conditions and the subject’s outfit. Methods using depth sensors have also been studied [10,11]; however, their accuracy also depends on the lighting conditions, as in the case of cameras. Moreover, measurement over wide areas is relatively difficult. Another approach is the use of accelerometers, including those in wearable devices and smartphones [12,13]. However, in this case, the user is required to carry or wear the sensors.

Doppler radar is a promising prospect for solving the aforementioned problems with other sensors [14,15]. Doppler radar can remotely measure the time variation in the velocities during human movements based on frequency transitions caused by the Doppler effect. During Doppler radar motion measurement, the participants are not required to wear sensor devices, and there are no restrictions on the participants’ outfits. Gait-based personal identification using the micro-Doppler radar and applying deep learning to time-velocity distribution, which was calculated as the short-time Fourier transform (STFT) of the radar-received signals, has yielded high accuracy [16,17,18,19,20,21,22,23]. It has been reported that the identification of two persons has an accuracy of 99% [16], and identification of 20 persons has an accuracy of 97% [17]. Furthermore, several person identification methods have been recently developed which are suitable for various types of realistic scenarios such as a multi-person scenario [18], use of a relatively small amount of training data [19,20], and scenarios which assume people walking in arbitrary directions [21].

However, most conventional studies on Doppler-radar-based personal identification do not consider the relationships between gait identification accuracy and time-velocity distributions and their resolutions. For example, there are multiple methods for calculating the time-velocity distributions other than STFT, such as wavelet transform (WT) [24], Wigner–Ville distribution (WVD) [25], and smoothed pseudo-WVD (SPWVD) [26]. These time-frequency analysis techniques have been recently used for various signal classification problems based on deep learning techniques, such as electrocardiogram techniques [27,28,29]. Thus, even for the Doppler radar techniques, several researchers have efficiently used such deep-learning- and time-frequency (time-velocity)-based methods for human motion classification problems [30,31,32]. However, methods other than STFT have rarely been applied to gait-based personal identification using Doppler radars. Although Dong et al. [33] presented the effects of time-velocity distribution on gait identification, no significant differences between the STFT and WVD-based methods were observed because the numbers of participants and test trials were limited, and their various parameter settings, which control the time-velocity resolution, were not considered. Thus, an efficient time-velocity distribution and parameter setting have not been established for Doppler-radar-based personal gait identification.

In this study, we explore efficient time-velocity distributions for personal gait identification using Doppler radar and deep learning by comparing the identification accuracy of various time-velocity distributions involving STFT, WT, WVD, SPWVD, and their various parameter settings. The contributions of this study are as follows.

- For gait-based person identification using deep learning and Doppler radar, tuning of micro-Doppler signatures is considered, and the appropriate settings are revealed.

- A comparison of the person identification accuracies of various time-velocity distributions shows that the conventionally used STFT spectrograms achieve the best accuracy.

- Twenty-five test subjects were successfully identified with an accuracy of approximately 99%.

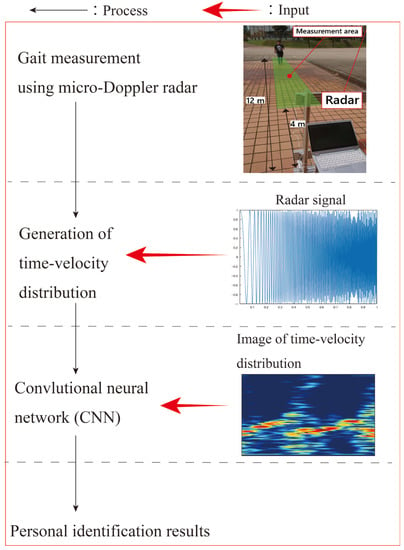

2. Radar Gait Measurement and Person Identification Procedure

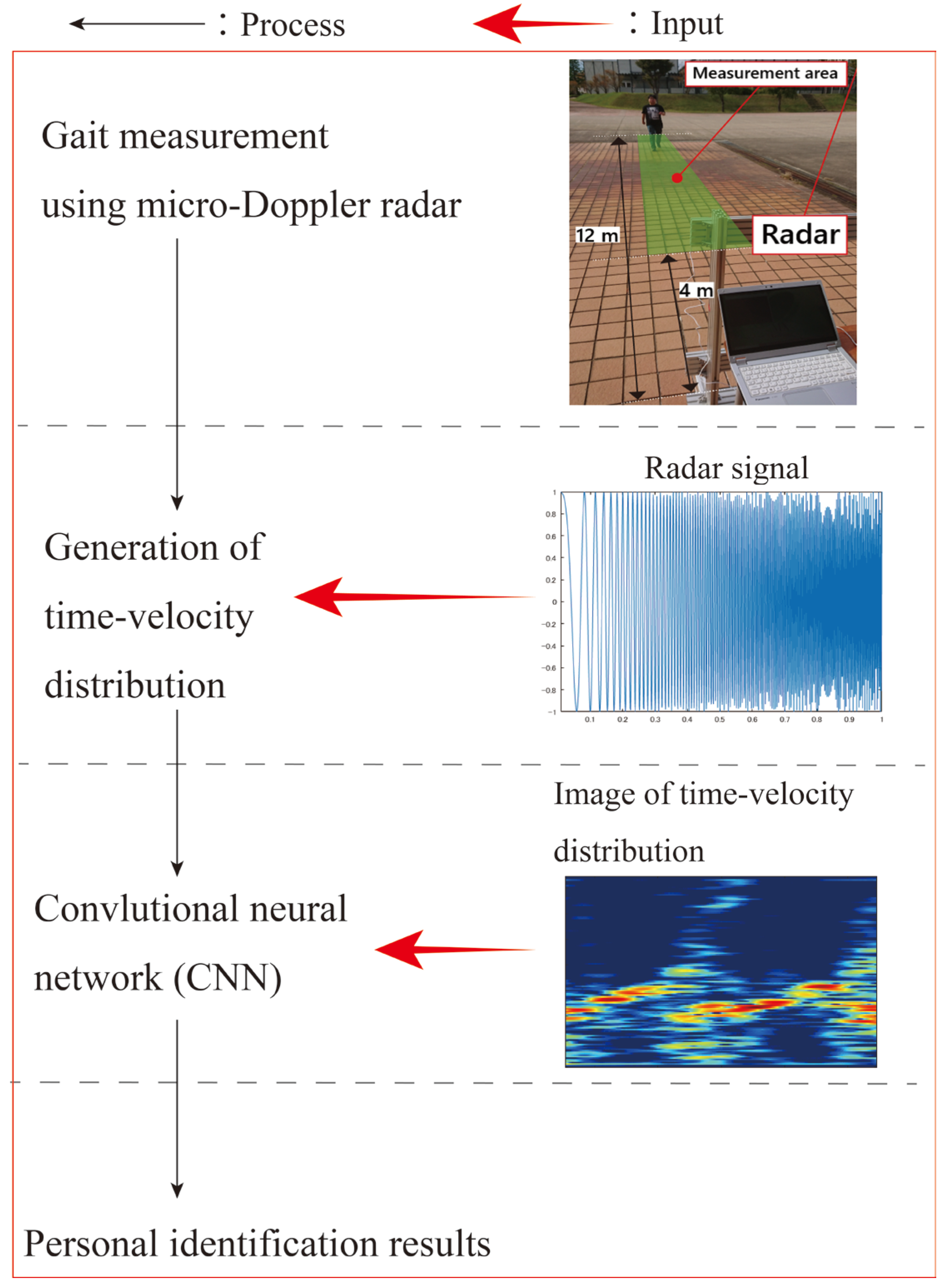

Figure 1 outlines the procedure of the Doppler radar experiments and person identification. Gait measurements were performed to generate the dataset. We used a monostatic continuous-wave 24 GHz micro-Doppler radar (ILT Office Inc., Toyama, Japan, BSS-110) installed at a height of 1.0 m. The −3 dB beamwidths of the antenna in the V- and H-planes were ±14° and ±35°, respectively. The received demodulated radar signals were obtained at a sampling frequency of 600 Hz. The study participants comprised 25 healthy adults (mean age: 22.5 years; 22 men and 3 women) who wore their own shoes (none of the participants wore shoes that made walking difficult). The participants were instructed to walk toward the radar along a straight walkway at a self-selected comfortable pace.

Figure 1.

Outline of the procedure used in this study.

We collected the received signals corresponding to participants walking in the range of 4–12 m because they performed steady-state walking in this range, and the radar could measure the entire body. For each participant, data for 150 gait cycles were collected over several days. The received signal of each gait cycle was used as one data point for personal identification; thus, a total of 150 × 25 = 3750 data points were collected. We extracted the data corresponding to each gait cycle from the collected data of steady-state walking using the method presented in [34]. Consequently, the length of each data point was approximately 1 s, which is a typical duration of the human gait cycle, although the exact length differed between data points.

We then generated images of the time-velocity distribution calculated using the received signals. The focus of this study is to clarify the type and parameters of the time-velocity distribution that achieves accurate identification. The details involving the generation method for various types of time-velocity distributions and their parameters are explained in Section 3. The identification accuracies for the various types and settings of the generated time-velocity images are compared in Section 4.

We investigated the identification accuracy of the 25 participants using the generated time-velocity distributions and deep learning. A convolutional neural network (CNN) was used as the deep learning method, as in previous studies on Doppler-radar-based personal identification. The time-velocity distributions were converted to RGB PNG images of size 224 × 224 and used as the input data for the CNN. Because we focused on exploring the effective time-velocity distribution, the CNN structure used in this study was fixed to ResNet-18 [35], which is an effective network for various conventional radar-based motion and person identification systems [23,36]. The basic structure of ResNet-18 was used with the same empirically tuned parameter settings as in our previous study [14]. Global average pooling was followed by a fully connected layer, and the network was trained using stochastic gradient descent with momentum. The loss function was the cross-entropy function. For a fair comparison, the hyperparameters were empirically optimized for all experiments. For example, for the STFT spectrogram, the mini-batch size was 64, and the learning rate was initially 0.01 and was multiplied by 0.7 every 10 epochs.
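The learning-rate schedule described above for the STFT case can be sketched as a simple step decay. This is a minimal illustration, not the authors' training code: the function name `step_decay_lr`, the 0-based epoch counting, and the choice to apply the first decay at epoch 10 are assumptions.

```python
def step_decay_lr(epoch: int, base_lr: float = 0.01,
                  factor: float = 0.7, step: int = 10) -> float:
    """Learning rate at a 0-based epoch when the rate is multiplied
    by `factor` once every `step` epochs (assumed convention)."""
    if epoch < 0:
        raise ValueError("epoch must be non-negative")
    return base_lr * factor ** (epoch // step)


if __name__ == "__main__":
    # Print the rate at a few epochs to show the staircase shape.
    for epoch in (0, 9, 10, 20, 30):
        print(f"epoch {epoch:2d}: lr = {step_decay_lr(epoch):.5f}")
```

Under these assumptions, the rate stays at 0.01 for the first ten epochs and drops to 0.007 for the next ten.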

3. Generation of Various Time-Velocity Distribution Images

This section describes the methods for generating the time-velocity distributions: STFT, WT, WVD, and SPWVD. The procedures to calculate various time-velocity distributions and their examples and features are presented herein.

3.1. STFT Spectrogram

This subsection presents the most commonly used method, the STFT. The STFT of the radar-received signal s(t) (t: time) is expressed as [25]:

where w(t) is a window function. This study used a general Hamming window function with a length of WL (we confirmed that the gait identification accuracies presented in this paper change only slightly for other representative window functions, such as the Hann and Blackman window functions). The radial velocity vd can be calculated using the Doppler angular frequency ωd as:

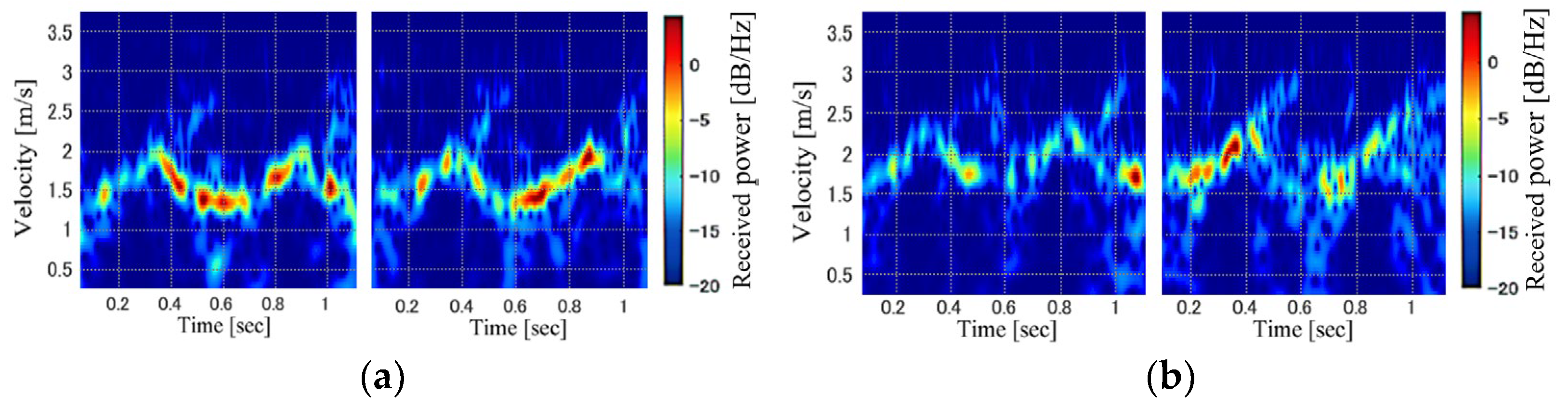

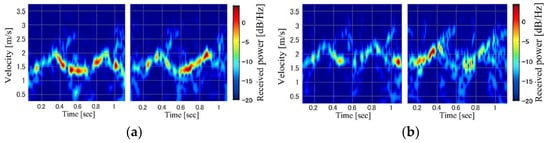

where f0 is the transmitting frequency (24 GHz), and c is the speed of light. For micro-Doppler-radar-based personal identification, the magnitude squared of the STFT, which is referred to as a spectrogram, is often used. Using Equation (2), the STFT spectrogram of the received signal |S(t, vd)|² is calculated, and its images are input to a machine-learning algorithm, such as a CNN. Figure 2 shows examples of the spectrogram images with a window length WL of 128 samples for the steady-state gaits of three participants. Similar images were confirmed for the same participant, whereas different tendencies were confirmed across participants.
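For reference, the STFT and the velocity conversion described in this subsection can be written in the following standard textbook forms. The exact notation and normalization of the original Equations (1) and (2) are assumptions here. Only the symbols s(t), w(t), ωd, vd, f0, and c are taken from the text, and τ is an integration variable introduced for illustration.

```latex
S(t, \omega_d) = \int_{-\infty}^{\infty} s(\tau)\, w(\tau - t)\, e^{-j \omega_d \tau}\, d\tau

v_d = \frac{c\, \omega_d}{4 \pi f_0}
```

The factor 4π arises because ωd = 2π fd and the two-way propagation of a monostatic radar gives fd = 2 f0 vd / c. With f0 = 24 GHz, a radial velocity of 1 m/s therefore corresponds to a Doppler frequency of approximately 160 Hz.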

Figure 2.

Examples of STFT spectrogram images. Each panel corresponds to a different participant (subfigures (a–c) show the spectrogram images of participants (a), (b), and (c), respectively).

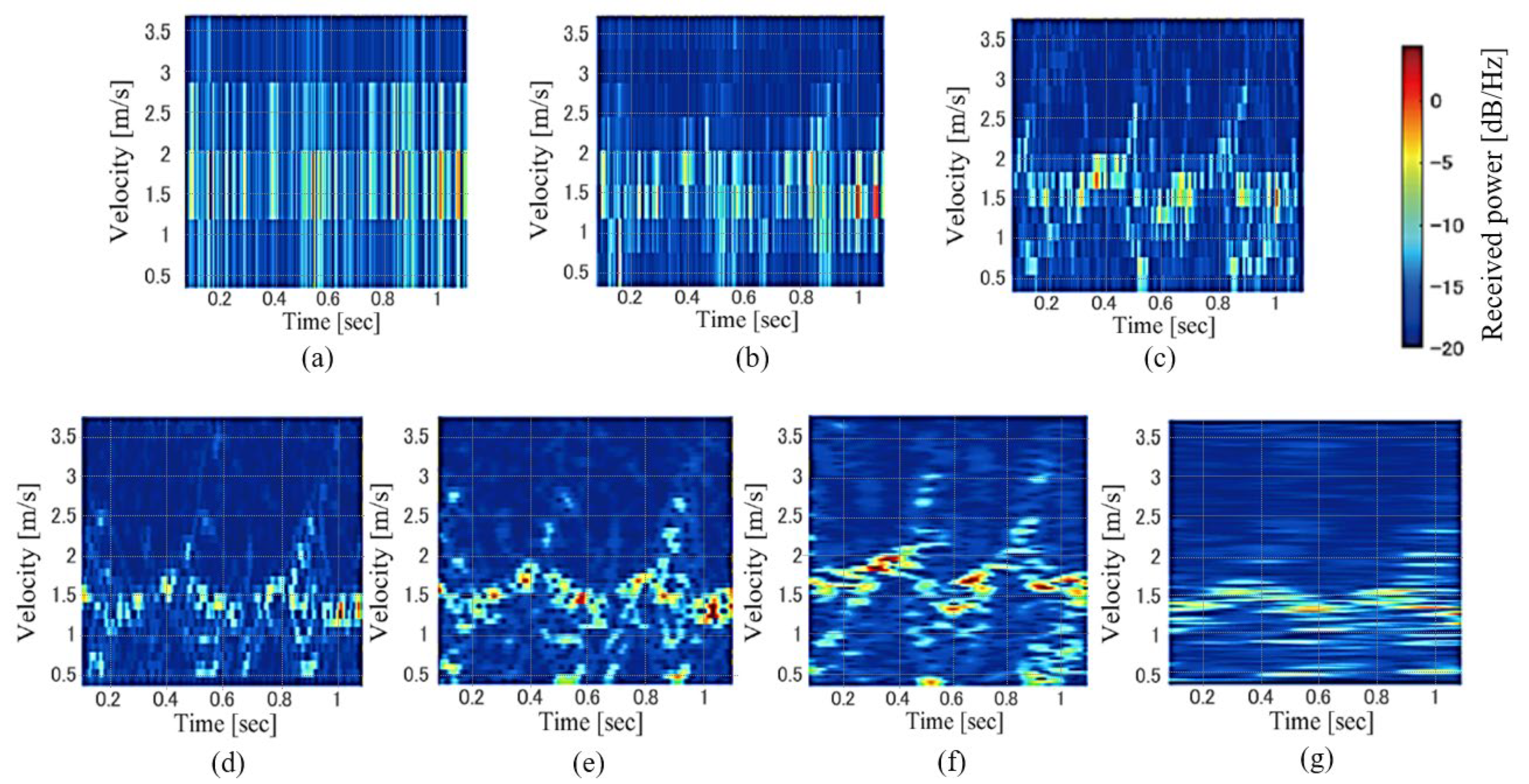

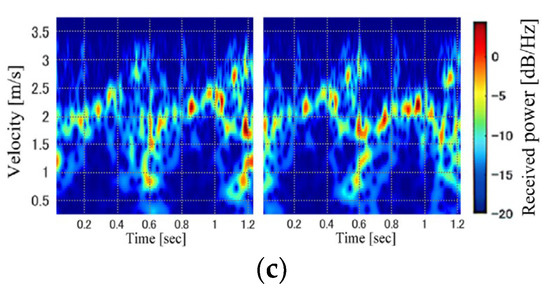

Although most studies on micro-Doppler-radar-based personal identification have used STFT spectrogram images, the effects of their resolution, which is determined by the window length WL, have not been investigated. Figure 3 illustrates examples of the spectrograms of gait for various WL calculated using the same data with an overlap length of WL−1. This figure clearly shows that the velocity (frequency) resolution of the spectrogram improved when a large WL was set. However, a larger WL results in a lower time resolution. Thus, the expressed information on gait in the spectrogram depends on WL, and we investigated the relationship between WL and personal identification accuracy.
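The trade-off controlled by WL can be sketched with a small NumPy implementation. This is a minimal illustration under stated assumptions, not the authors' code: the function names `stft_spectrogram` and `resolutions` are hypothetical, the received signal is assumed to be complex-valued, and the test signal is a synthetic single scatterer rather than measured gait data. The Hamming window, the overlap of WL − 1 samples, the 600 Hz sampling frequency, and the 24 GHz transmitting frequency follow the text.

```python
import numpy as np

C = 299_792_458.0   # speed of light [m/s]
F0 = 24e9           # transmitting frequency from the text [Hz]
FS = 600.0          # sampling frequency from the text [Hz]


def stft_spectrogram(s, wl, fs=FS):
    """Return (times [s], velocities [m/s], |STFT|^2) for a complex signal.

    A Hamming window of wl samples slides one sample at a time,
    so adjacent windows overlap by wl - 1 samples.
    """
    s = np.asarray(s, dtype=complex)
    if len(s) < wl:
        raise ValueError("signal shorter than window")
    window = np.hamming(wl)
    # One row per window position; each row holds wl consecutive samples.
    frames = np.lib.stride_tricks.sliding_window_view(s, wl)
    spectrum = np.fft.fftshift(np.fft.fft(frames * window, axis=1), axes=1)
    freqs = np.fft.fftshift(np.fft.fftfreq(wl, d=1.0 / fs))  # Doppler frequency [Hz]
    velocities = C * freqs / (2.0 * F0)                      # v_d = c f_d / (2 f0)
    times = (np.arange(frames.shape[0]) + (wl - 1) / 2.0) / fs  # window centers
    return times, velocities, np.abs(spectrum) ** 2


def resolutions(wl, fs=FS):
    """Window duration [s] and velocity bin spacing [m/s] for window length wl."""
    return wl / fs, C * (fs / wl) / (2.0 * F0)


if __name__ == "__main__":
    # Synthetic stand-in for a received signal: one scatterer moving at 1.0 m/s.
    t = np.arange(int(FS)) / FS
    fd = 2.0 * F0 * 1.0 / C
    s = np.exp(2j * np.pi * fd * t)
    for wl in (4, 32, 256):
        times, v, p = stft_spectrogram(s, wl)
        dur, dv = resolutions(wl)
        peak_v = v[np.argmax(p.mean(axis=0))]
        print(f"WL={wl:3d}: window {dur * 1e3:6.1f} ms, "
              f"velocity bin {dv:.3f} m/s, peak at {peak_v:.2f} m/s")
```

For WL = 32, `resolutions` gives a window duration of 53.3 ms and a velocity bin spacing of about 0.117 m/s. Doubling WL halves the bin spacing and doubles the window duration, which is the trade-off visible in Figure 3.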

Figure 3.

Examples of STFT spectrogram images for various window lengths. WL = (a) 4, (b) 8, (c) 16, (d) 32, (e) 64, (f) 128, and (g) 256 samples.

3.2. WT Scalogram

WT is another popular time-frequency analysis method owing to its resolution flexibility. The WT of s(t) is expressed as [32]:

where * indicates a complex conjugate, a is the scale factor (corresponding to the velocity), u is the shift factor, and ψ(t) is the wavelet function. We used a Morse wavelet [37] as the wavelet function because it can easily control both the time and frequency resolutions; it is expressed as follows:

where b and γ are the parameters that control the time and frequency resolutions, respectively. For personal identification, we used images having the magnitude squared of the WT, called a scalogram.
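For reference, the continuous WT described above is commonly written in the following form. The 1/√a normalization is an assumption (some references use 1/a instead). The symbols a, u, ψ, and * are those defined in the text.

```latex
W(a, u) = \frac{1}{\sqrt{a}} \int_{-\infty}^{\infty} s(t)\, \psi^{*}\!\left(\frac{t - u}{a}\right) dt
```

In this notation, the scalogram is |W(a, u)|².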

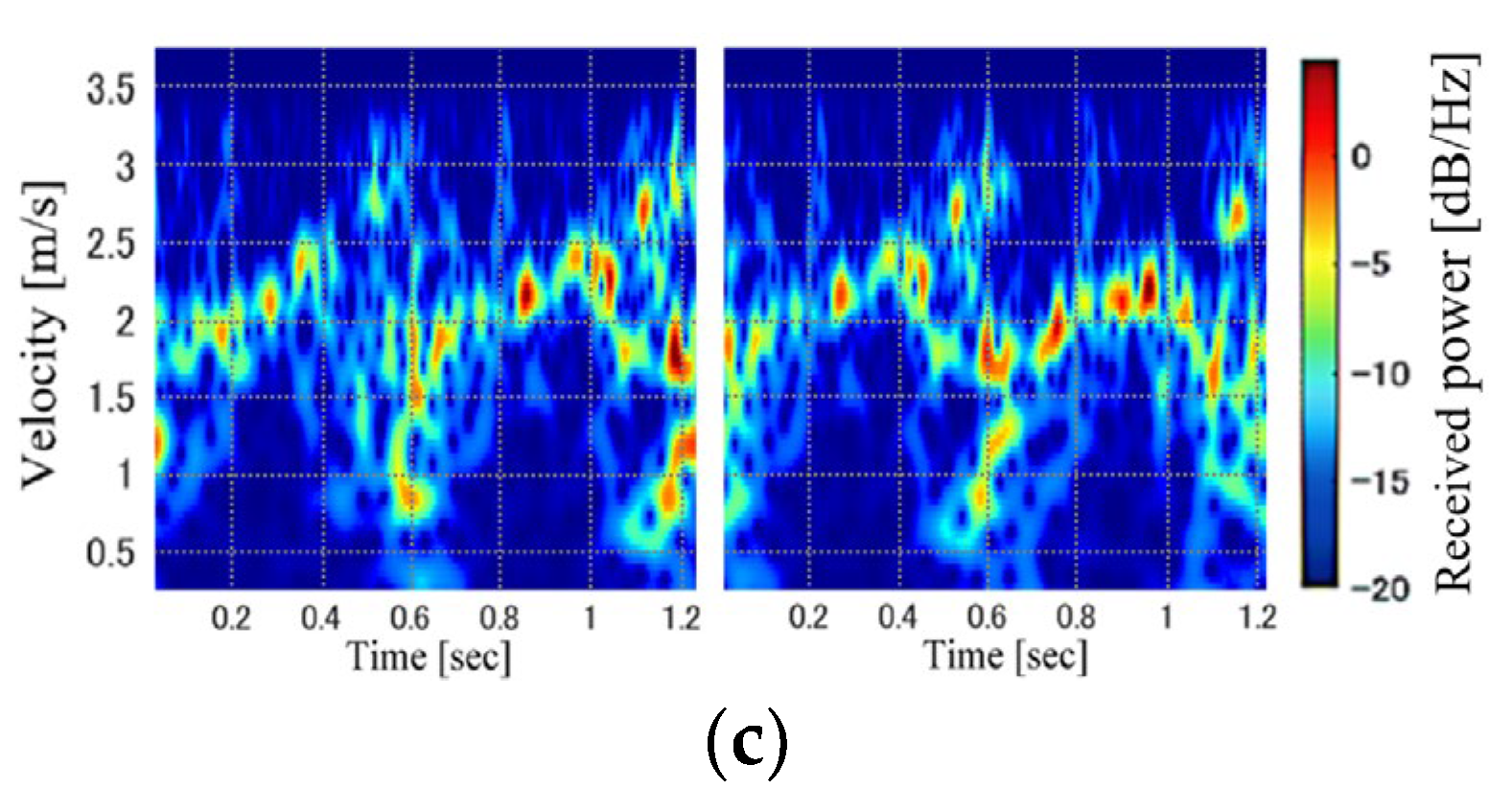

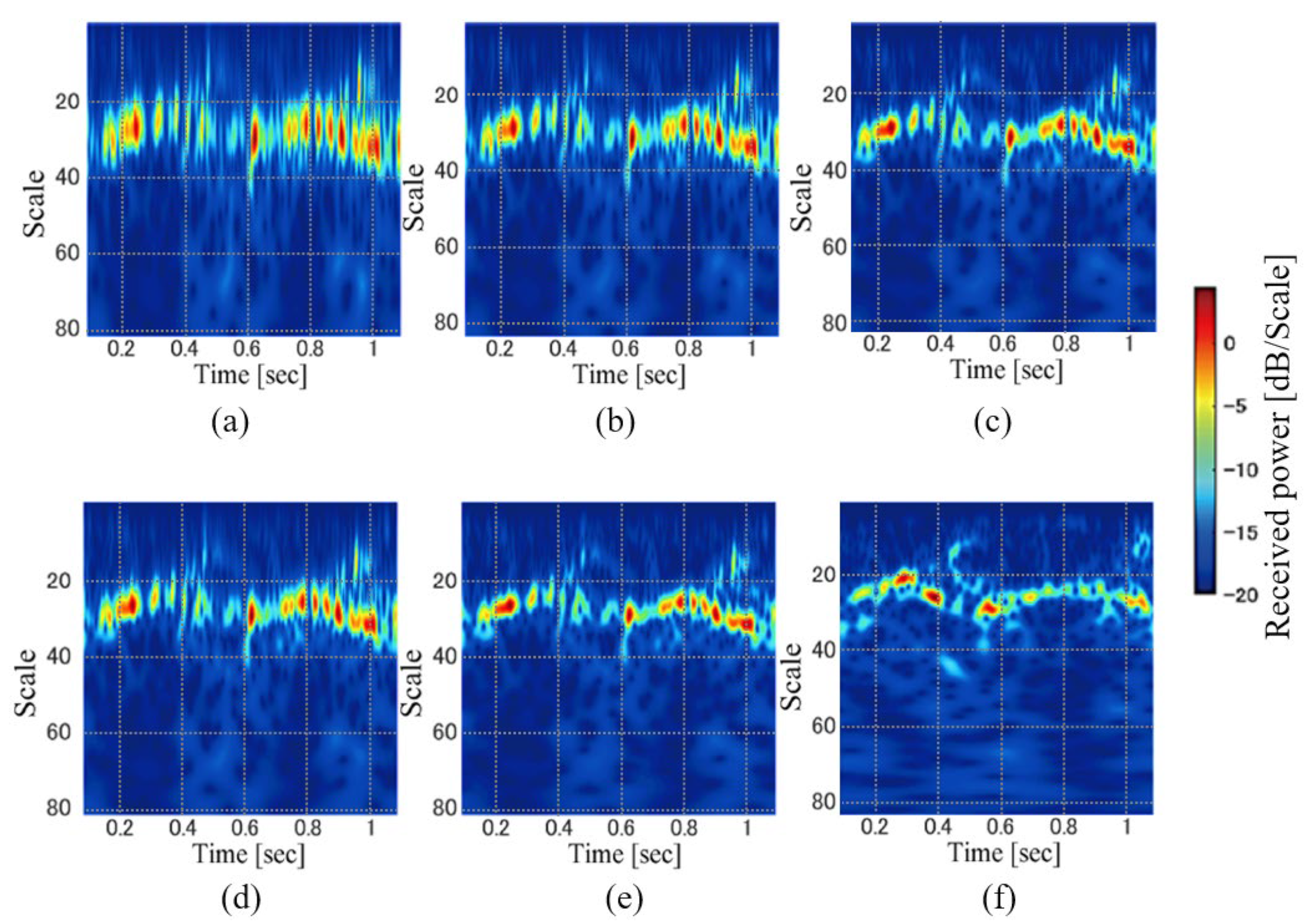

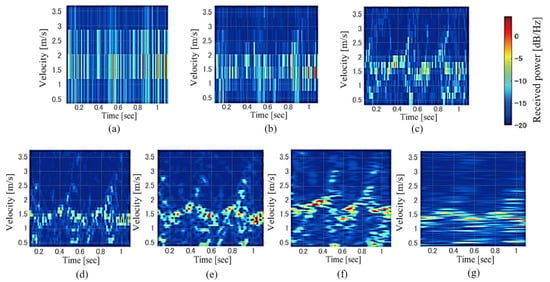

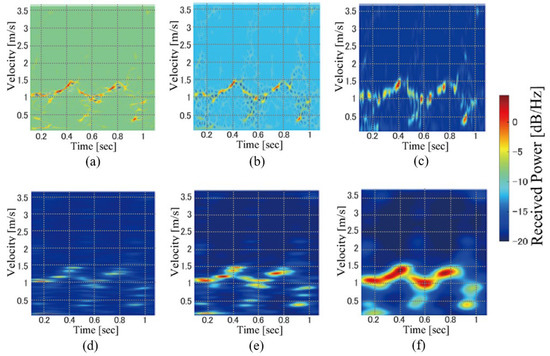

Figure 4 shows examples of the scalograms for various settings of b and γ. In contrast to the STFT, the time and scale (velocity) resolutions are not fixed in the scalogram and are controlled by these parameters. We investigated the personal identification accuracy for various parameter settings and compared the results of the WT scalogram with those of the STFT spectrogram.

Figure 4.

Examples of WT scalogram images. (b, γ) = (a) (8, 3), (b) (16, 3), (c) (32, 3), (d) (8, 8), (e) (16, 8), and (f) (32, 8).

3.3. WVD

WVD is a high-resolution time-frequency analysis method that does not have the trade-off between the time and frequency resolutions that exists in the STFT and WT. The WVD of s(t) is expressed as [25]:

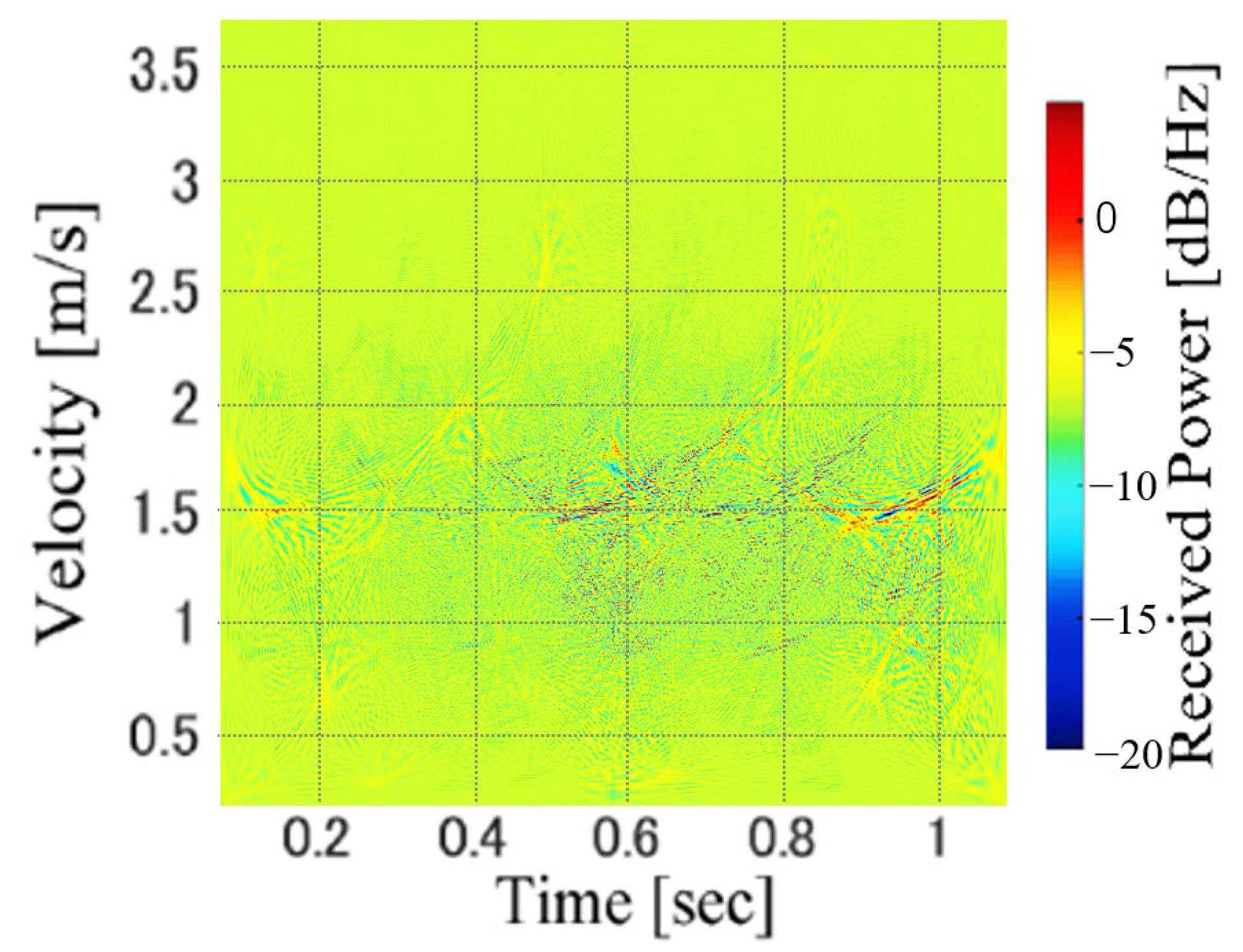

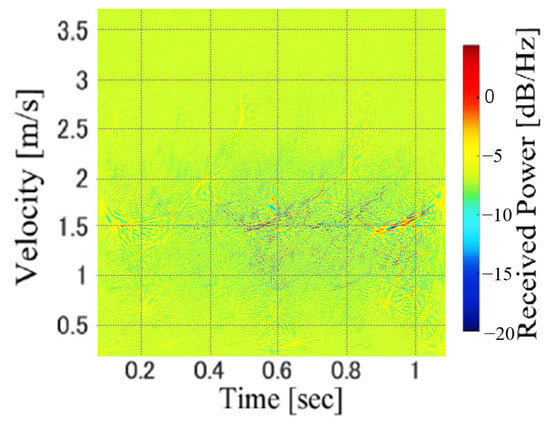

The WVD can determine the frequency spectrum for each time bin, and the time and frequency resolutions are equivalent to the physical limitations determined by the sampling frequency. However, owing to the interference of the multifrequency components, the WVD includes many cross-terms. Figure 5 shows an example of the WVD of the Doppler-radar-received signal of the gait. Compared with the STFT spectrograms shown in Figure 3, the velocity components of the walking motion are not clearly confirmed because of the many cross-terms. Thus, WVD has not been widely used for Doppler radar-based motion recognition. However, we can hypothesize that these cross-terms can be considered as the features of individuals in the personal identification problem. Thus, we investigated the accuracy of personal gait identification using WVD images.
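The origin of the cross-terms can be illustrated with a small discrete WVD. This is a minimal sketch, not the authors' implementation: it assumes the standard definition W(t, ω) = ∫ s(t + τ/2) s*(t − τ/2) e^(−jωτ) dτ, the function name `wigner_ville` is hypothetical, and the input is a synthetic two-tone signal rather than gait data.

```python
import numpy as np


def wigner_ville(s):
    """Discrete WVD of a complex signal.

    Returns a real array of shape (N, N) with N = len(s). Row n is the
    spectrum at sample n, and column k corresponds to a normalized
    frequency of k / (2 * N) cycles per sample, because the lag product
    s[n + m] * conj(s[n - m]) doubles the frequency axis.
    """
    s = np.asarray(s, dtype=complex)
    n_samples = len(s)
    wvd = np.zeros((n_samples, n_samples))
    for n in range(n_samples):
        # Largest lag m for which both s[n + m] and s[n - m] exist.
        max_lag = min(n, n_samples - 1 - n)
        lags = np.arange(-max_lag, max_lag + 1)
        kernel = np.zeros(n_samples, dtype=complex)
        # Instantaneous autocorrelation, stored with negative lags wrapped.
        kernel[lags % n_samples] = s[n + lags] * np.conj(s[n - lags])
        # The kernel is conjugate-symmetric in m, so its FFT is real.
        wvd[n] = np.fft.fft(kernel).real
    return wvd


if __name__ == "__main__":
    n = np.arange(128)
    # Two tones at 0.125 and 0.25 cycles/sample -> auto-terms at columns 32 and 64.
    s = np.exp(2j * np.pi * 0.125 * n) + np.exp(2j * np.pi * 0.25 * n)
    w = wigner_ville(s)
    print("auto-term  (n=64, k=32):", round(w[64, 32], 1))
    print("cross-term (n=64, k=48):", round(w[64, 48], 1))
    print("cross-term (n=68, k=48):", round(w[68, 48], 1))
```

In this example, a cross-term appears midway between the two tones, at column 48. It is larger in magnitude than either auto-term and alternates in sign along the time axis, which is why multi-component signals such as gait echoes produce the cluttered appearance seen in Figure 5.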

Figure 5.

An example of WVD image.

3.4. SPWVD

The SPWVD is a smoothed WVD for removing the cross-terms in the WVD; it is expressed as follows [25,26]:

where Φ(t, ωd) is the smoothing function. In this study, we used the two-dimensional Gaussian function for Φ(t, ωd), which is expressed as:

where α and β are the parameters that control the resolution in terms of time and velocity, respectively.

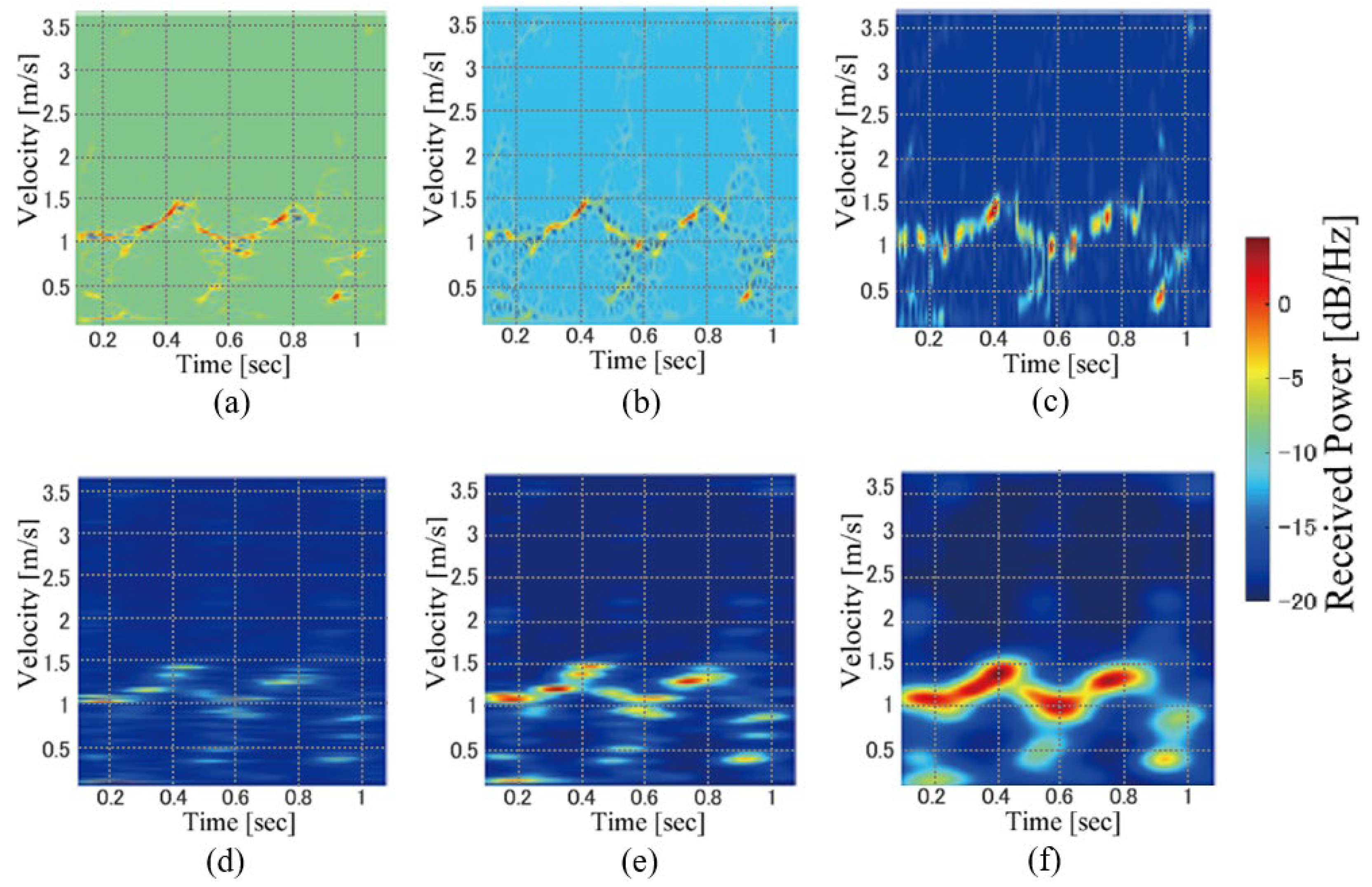

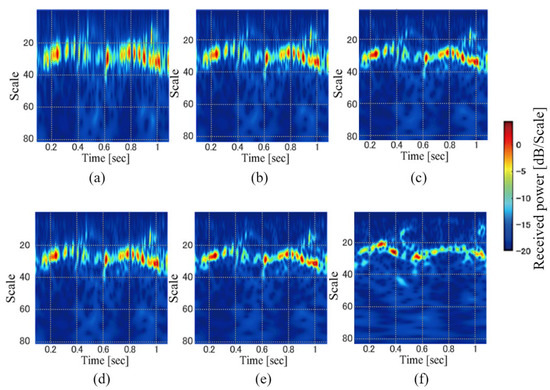

Figure 6 shows examples of the SPWVDs for various values of α and β. When α and β are set to smaller values, a high-resolution time-velocity distribution is obtained. However, several cross-terms remain when these values are extremely small. In contrast, larger values of these parameters sufficiently remove the cross-terms, although the resolutions worsen. As shown in Figure 6, SPWVDs with larger parameters tend to produce results that are similar to the spectrograms shown in Figure 3. SPWVDs with smaller parameters provide higher resolution than the STFT and WT, with smaller cross-terms than the WVD.
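The effect of the smoothing can be sketched with a separable Gaussian filter applied to a time-velocity array. This is an illustration under stated assumptions, not the authors' implementation: the exact way α and β enter the Gaussian is not recoverable from the text, so the hypothetical parameters `sigma_t` and `sigma_v` (standard deviations in bins) stand in for them, and the input is a synthetic array with one steady ridge and one oscillating ridge rather than a real WVD.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel, truncated at about 3 sigma (in bins)."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def smooth_distribution(tfd, sigma_t, sigma_v):
    """Convolve a (time x velocity) array with a separable 2-D Gaussian."""
    tfd = np.asarray(tfd, dtype=float)
    kt, kv = gaussian_kernel(sigma_t), gaussian_kernel(sigma_v)
    out = np.apply_along_axis(np.convolve, 0, tfd, kt, mode="same")  # along time
    out = np.apply_along_axis(np.convolve, 1, out, kv, mode="same")  # along velocity
    return out


if __name__ == "__main__":
    n = np.arange(128)
    tfd = np.zeros((128, 64))
    tfd[:, 16] = 1.0                               # auto-term: steady ridge
    tfd[:, 40] = 2.0 * np.cos(2 * np.pi * n / 8)   # cross-term: oscillates in time
    for sigma_t in (0.5, 4.0):
        sm = smooth_distribution(tfd, sigma_t=sigma_t, sigma_v=1.0)
        auto = sm[64, 16]
        cross = np.abs(sm[32:96, 40]).max()
        print(f"sigma_t={sigma_t}: auto={auto:.3f}, cross={cross:.3f}")
```

Because the oscillating ridge averages out over the width of the kernel while the steady ridge does not, a wider kernel removes the cross-term at the cost of blurring the distribution. This mirrors the behavior described for larger α and β.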

Figure 6.

Examples of SPWVD images. (α, β) = (a) (4, 4), (b) (4, 16), (c) (4, 64), (d) (64, 4), (e) (64, 16), and (f) (64, 64).

As presented in this section, various time-velocity distributions with different parameter settings generate the input images with different resolutions. In the next section, we evaluate the personal gait identification accuracy for the aforementioned time-velocity distribution types and resolution of the input time-velocity images.

4. Evaluation and Discussion

4.1. Evaluation Method

We evaluated and compared the accuracy of the gait identification of the participants using images of various time-velocity distributions with various parameter settings, as described in the previous section. We also investigated the identification accuracy according to the number of participants N; cases with N = 5, 15, and 25 participants were considered. When we investigated the identification of five or fifteen participants, the participants were randomly selected from the twenty-five participants. For accuracy evaluation, we performed a hold-out validation. In each case, the CNN was trained using 70% of the data (105 data points per participant), and the remaining 30% of the data were used as test data. Thus, the sizes of training data for N = 5, 15, and 25 were 525, 1575, and 2625 images; those of test data for N = 5, 15, and 25 were 225, 675, and 1125 images, respectively. The number of training and test data was the same for all time-velocity distributions. Subsequently, ten trials of hold-out validations were conducted by varying the training/test data split. The training and test data were randomly selected from the generated dataset. Notably, the length of each data point is one gait cycle. The mean and standard deviation of the classification accuracy across all trials were calculated.
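The hold-out procedure described above can be sketched as a per-participant split. This is a minimal illustration, not the authors' code: the function name `holdout_split`, the use of Python's `random` module, and the seed handling are assumptions. The 70/30 ratio, the 150 gait cycles per participant, and the values of N follow the text.

```python
import random


def holdout_split(labels, train_ratio=0.7, seed=0):
    """Split sample indices into train and test lists, class by class.

    Each participant contributes the same fraction of samples to the
    training set, so 150 samples yield 105 for training and 45 for testing.
    """
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train, test = [], []
    for indices in by_class.values():
        shuffled = indices[:]
        rng.shuffle(shuffled)
        n_train = round(train_ratio * len(shuffled))
        train.extend(shuffled[:n_train])
        test.extend(shuffled[n_train:])
    return train, test


if __name__ == "__main__":
    cycles_per_participant = 150
    for n_participants in (5, 15, 25):
        labels = [p for p in range(n_participants)
                  for _ in range(cycles_per_participant)]
        train, test = holdout_split(labels, seed=1)
        print(n_participants, len(train), len(test))
```

Repeating the split with ten different seeds would correspond to the ten hold-out trials, after which the mean and standard deviation of the test accuracy can be computed.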

4.2. Results for STFT

Table 1 summarizes the results for the personal gait identification using the STFT spectrogram images for various window lengths WL and numbers of participants N. For all N values, a WL of 32 samples (53.3 ms) achieved the best accuracy of approximately 99%. An accuracy of 99.1% was achieved for the identification of 25 participants. The results revealed that the identification accuracy worsens when either the time or frequency resolution is relatively low.

Table 1.

Results for STFT spectrogram.

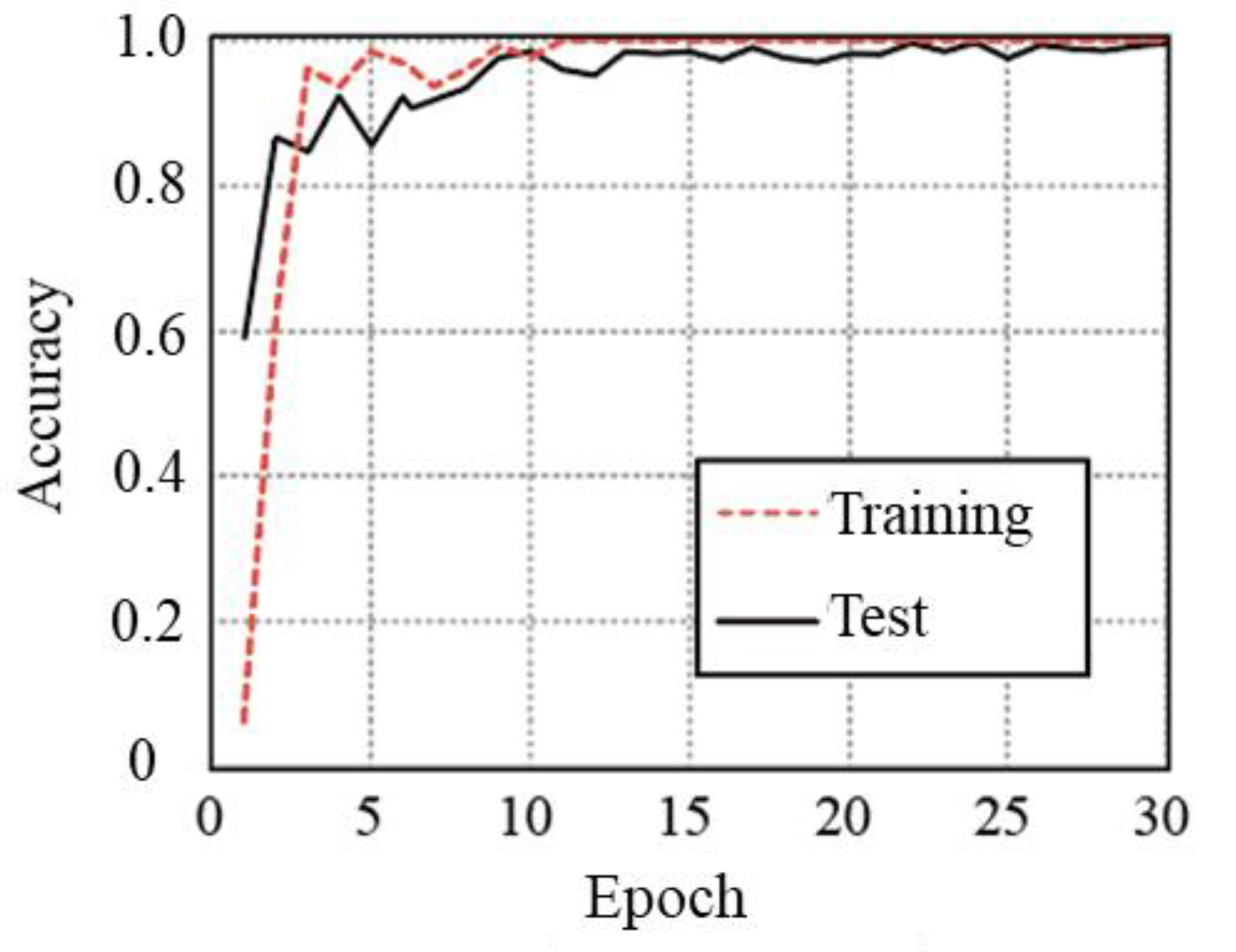

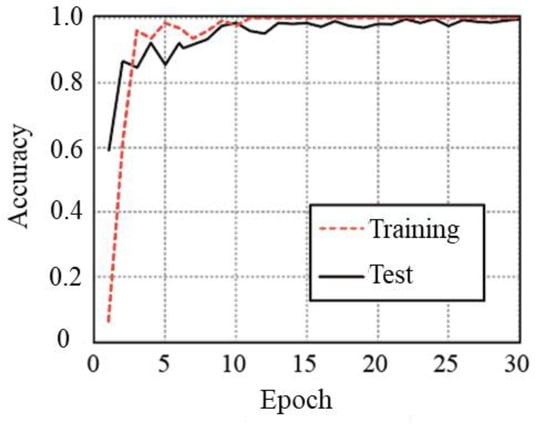

Figure 7 shows an example of the convergence curves obtained for N = 25 and WL = 32 samples. As shown in these curves, the accuracy converged within a small number of epochs without overfitting. We confirmed similar tendencies in the convergence curves for all cases. Thus, data augmentation was not required for accurate personal gait identification with our experimental data.

Figure 7.

Example of convergence curves for STFT with N = 25 and WL = 32 samples.

4.3. Results for WT

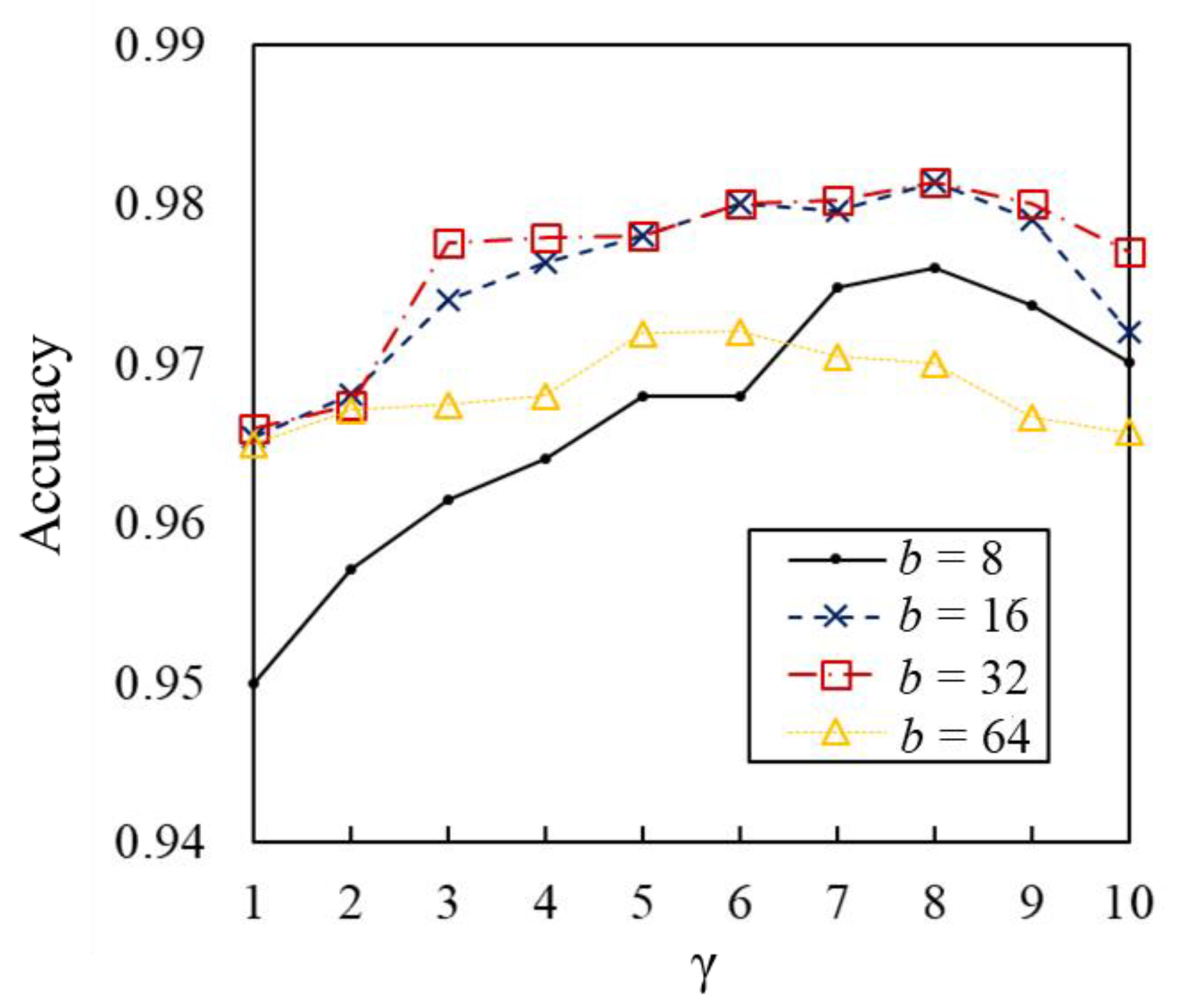

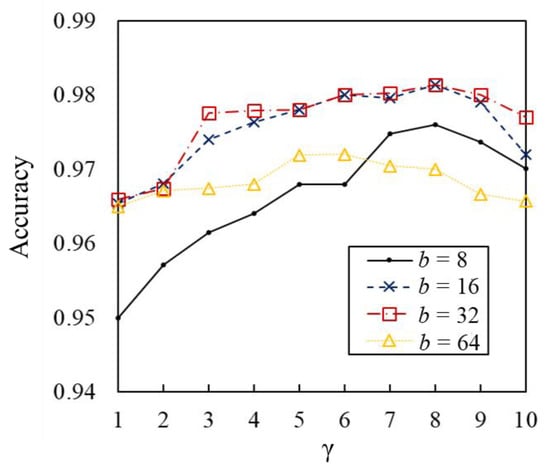

We then investigated the accuracy of the WT scalograms, which offer flexibility in the time and velocity resolution settings. Table 2 lists the results of the WT scalograms for various b and γ values. For all N values, the setting (b, γ) = (8, 32) achieved the highest accuracy of approximately 98%. Relatively larger values of b and γ indicate that the resolutions in time and scale are the same. These results indicate that the identification accuracy is low when the time or scale resolution is high. Figure 8 shows the results for WT with various parameter settings for N = 25. As shown in this figure, larger values of b were ineffective compared with the results for b = 16 and 32. Furthermore, larger γ values slightly deteriorate the identification accuracy for various b values. These results indicate that there are appropriate resolutions of the WT scalograms for the gait-based person identification, similar to the STFT spectrograms.

Table 2.

Results for WT Scalogram.

Figure 8.

Identification accuracies using WT for various parameters (N = 25).

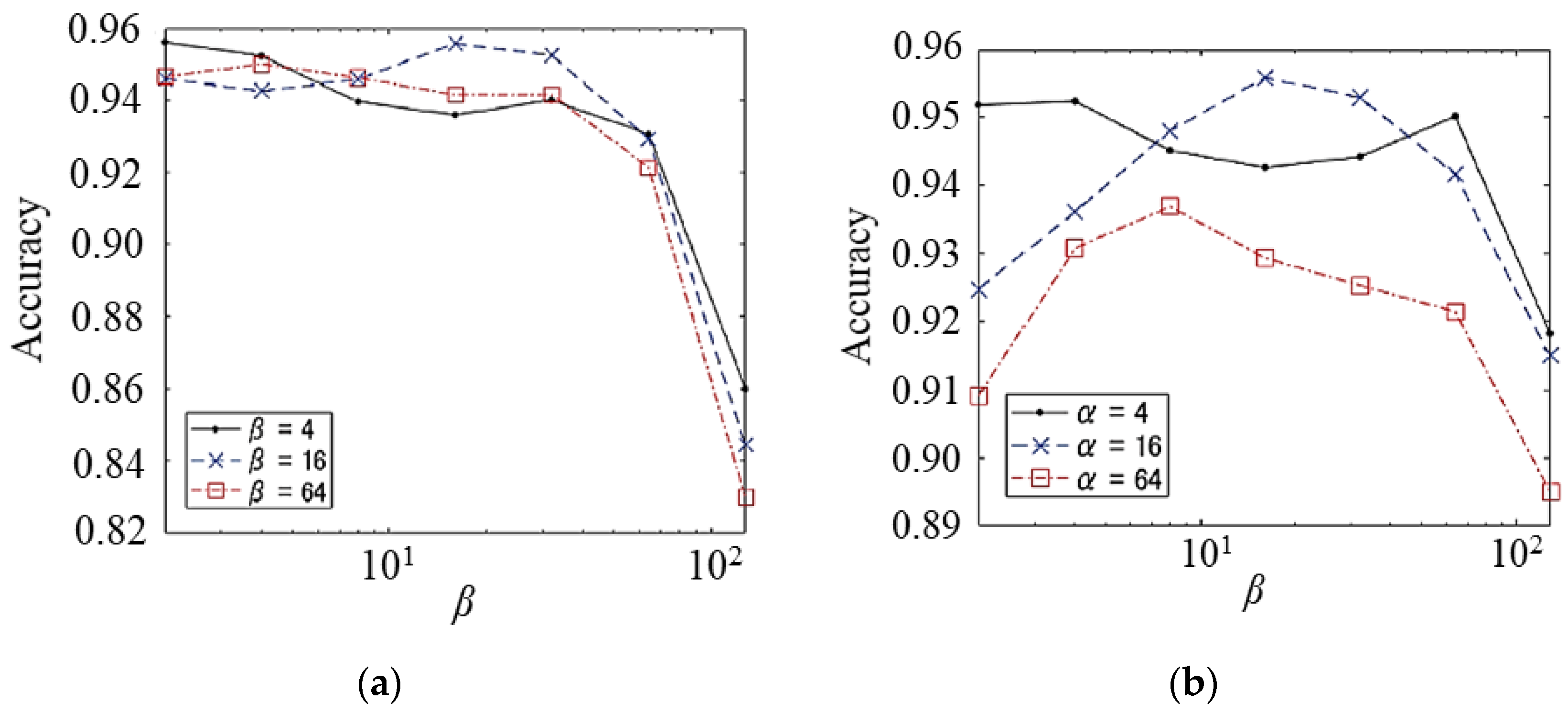

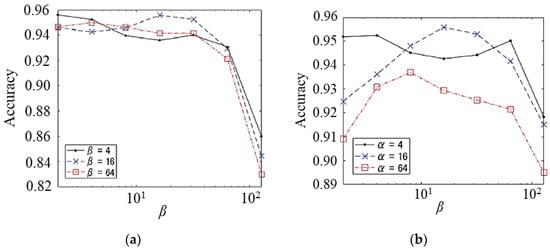

4.4. Results for WVD and SPWVD

Subsequently, we examined the effectiveness of the higher-resolution time-velocity distributions of WVD and SPWVD. Table 3 shows the representative results for the WVD and SPWVD. For all N values, the SPWVD with appropriate parameter settings achieved better accuracy than the WVD. These results indicate that the suppression of cross-terms was effective for gait identification, despite the reduction in the resolution of the time-frequency distribution caused by the smoothing process in the SPWVD. Figure 9 shows the results for the SPWVD with various parameter settings for N = 25. We observed that there exists an optimal setting for both α and β. However, the optimal accuracy did not exceed 96%, which is worse than the results for STFT and WT.

Table 3.

Results for WVD and SPWVD.

Figure 9.

Identification accuracies using SPWVD for various parameters (N = 25). Relationships between accuracy and (a) α, (b) β.

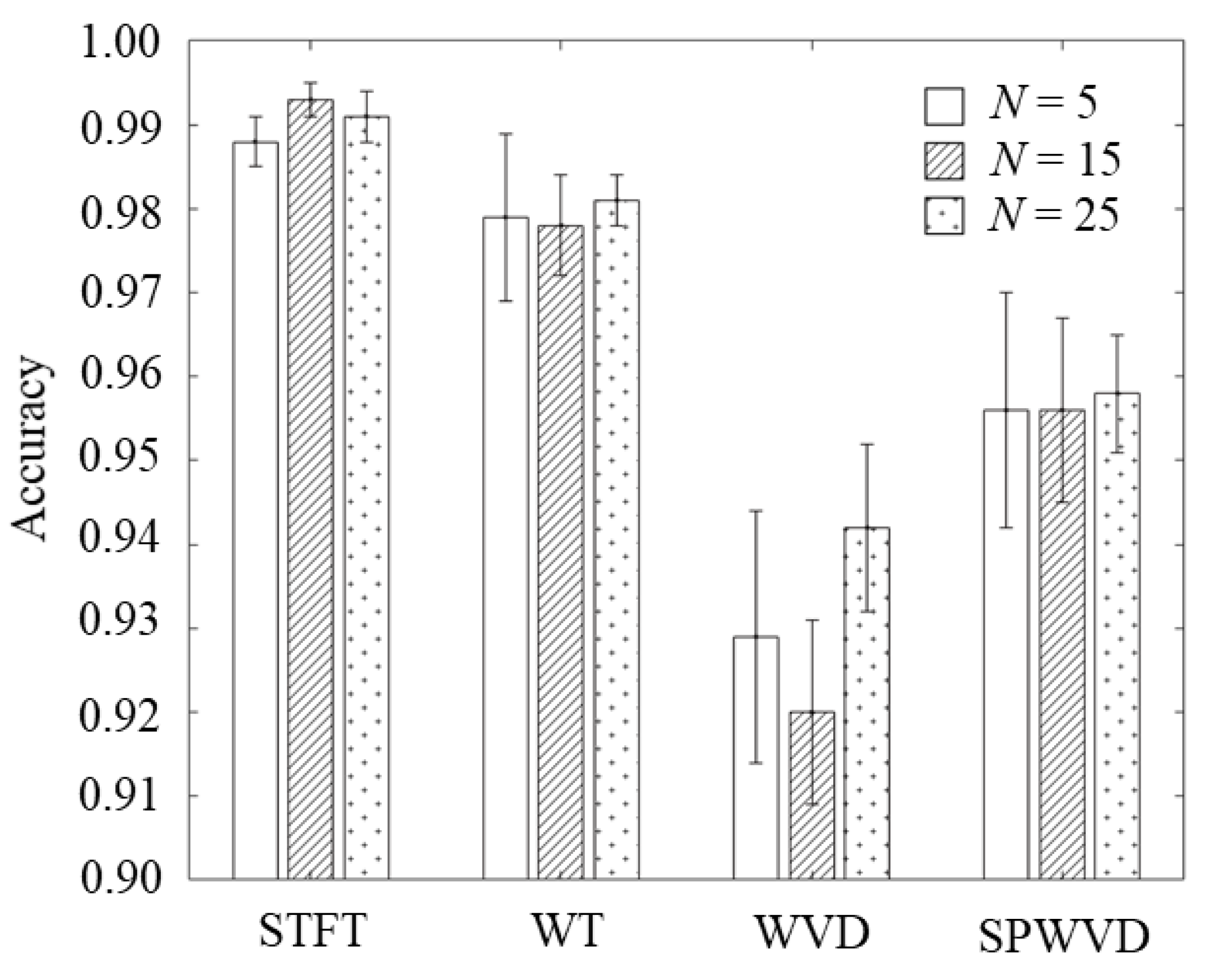

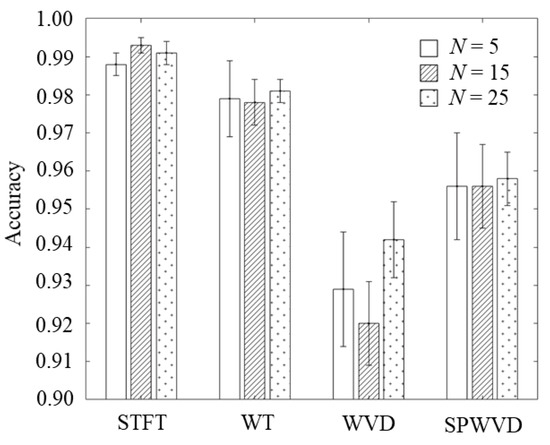

4.5. Overall Comparison and Discussion

The above results include various novel findings for the radar-based personal gait identification method with respect to the efficient time-velocity distribution images and their resolutions. Thus, we compared the results obtained using the various time-velocity distributions to clarify the findings and contributions of this study. Note that this study dealt with personal identification based only on natural human walking, and the signal properties are similar across all participants and data. Therefore, a discussion based on the time-velocity resolution (e.g., the window length of the STFT) is reasonable.

First, we compared the results described in the previous subsections. Figure 10 shows the mean and standard deviation of the identification accuracies for the optimal settings of all methods. Although all methods achieved accurate identification of over 92%, the STFT method achieved significantly better results for all N. These results indicate that the various time-velocity distributions, which can flexibly control the resolutions and achieve high resolutions, were effective to a certain extent. However, the conventionally used STFT was revealed to be a more effective generation method for time-velocity distribution images for personal gait identification.

Figure 10.

Comparison of identification accuracies using various types of time-velocity distributions.

We now discuss the reasons for the differences in accuracy between the time-velocity distributions. A comparison of WVD and SPWVD indicated that the high-resolution WVD was less accurate than the SPWVD. These results imply that the rich information included in the cross-terms of the WVD was ineffective for personal identification. As indicated in the WVD of Figure 5, the power of the background noise caused by the cross-terms is larger than that in the SPWVDs shown in Figure 6. Such higher background noise might affect the feature extraction. For the SPWVDs in Figure 6, although the power of the background noise was larger for smaller α and β, the components corresponding to the body and legs in the gait were more clearly confirmed than in the case of the WVD. Thus, the background noise caused by the cross-terms of the WVD adversely affected gait feature extraction, and the SPWVD achieved better identification accuracy by effectively suppressing the cross-terms.

The ineffectiveness of the cross-terms can be appraised from the comparison between SPWVD and STFT, and we can confirm from the results that the SPWVD was ineffective even when the resolution of the time-velocity distribution was similar to that of the STFT method. These results imply that the cross-terms in the WVD and SPWVD images were not effectively used for feature extraction of the individuals. Furthermore, the cross-term suppression effects in SPWVD images are unstable and may lead to deterioration of identification accuracy.

The comparison between STFT and WT also indicates a slight but significant advantage of the STFT method, despite their similar resolutions (both STFT and WT have a trade-off between time and velocity resolutions) and despite the greater flexibility of the WT in setting the resolutions. These results imply that the STFT spectrogram includes sufficient and clear information on individual gait features, and that the flexible tuning offered by the WT does not yield additional gait features. One reason why the STFT can capture sufficient gait features is that this study considered identification based on steady-state gait; transient states of acceleration and deceleration in walking were not assumed. Although the WT scalogram is suitable for acquiring transient features based on scaling, this merit might not carry over to the extraction of features in steady-state walking. It is conjectured that extremely detailed information, which is ineffective for gait identification based on steady-state walking, was extracted via the WT scalogram owing to its flexibility in time and velocity resolutions. In fact, the identification accuracy of the STFT spectrogram with a WL of 32 samples was better than that of the WT scalogram with (b, γ) = (32, 3) despite their similar resolutions. Thus, the STFT spectrogram is an appropriate method for gait-based person identification using a Doppler radar.

We discuss the factors that influence gait-based personal identification using the Doppler radar. As shown in Figure 2, it can be seen that participants differ in terms of received power, trunk velocity, and leg kinetic velocity. While these are all factors that identify personal gaits, the most important factor would be the leg velocity data. This is because the WVD cross-terms mask the details of leg kinetic velocity, and the cross-terms lead to a degradation of identification accuracy according to the comparisons between WVD and SPWVD.

Furthermore, the trunk movement data are also important for identifying personal gait, although they would not be expected to lead to differences in the results between the various time-velocity distributions. Previous research on biomechanics has shown that trunk velocity and acceleration during walking contain essential information about individual gait, such as gait changes caused by the differences in physical and cognitive functions [38,39]. Therefore, trunk motion is essential for individual gait identification. Almost all of our generated time-velocity distribution images clearly include trunk motion, which can be considered a factor that enables accurate personal identification to a certain extent for all time-velocity distributions and resolution settings. However, because the trunk movement is clearly captured in all cases, this factor does not lead to large differences in identification accuracy between the various time-velocity distribution images.

Received power also reflects information about the location and shape of the trunk, legs, and arms, and thus includes personal information. However, as shown in Figure 2, although the overall trend of the received power distribution is the same to some extent, its details are not highly reproducible for the same person. Therefore, received power does not appear to contribute to identification as much as trunk and leg movements.

Based on the above discussions, it can be considered that leg movement is an important factor in radar-based gait identification. In addition, trunk motion appears to be a factor contributing to the high accuracy of our results, although it does not appear to be significantly affected by the differences between the various types of time-velocity distribution images that we are primarily examining in this study.

4.6. Comparison with Other Studies

Our results were compared to those of conventional studies on Doppler-radar-based gait identification to further clarify the merits and novel findings in this study. Table 4 summarizes the main characteristics of the studies on gait-based personal identification by applying deep learning to Doppler radar data. The results of this study outperform various methods in terms of accuracy and shortness of the input data. However, because the experimental settings, including radar specifications and positions, are different for all studies, the accuracies listed in Table 4 should be treated as references. Nonetheless, our study suggests the effectiveness of resolution tuning for the time-velocity distribution in personal gait identification, as most conventional methods rely on empirically tuned STFT spectrograms with fixed time and velocity resolutions. That is, this study investigated the best window length, and the most important finding of this study was that a relatively shorter window length achieved better identification accuracy. The window length was significantly shorter than that in all other conventional studies with respect to its ratio to the length of the input data.

Table 4.

Comparison of Different Studies on Doppler-Radar-Based Personal Gait Identification.

The results in [33] indicated similar accuracies for the STFT and SPWVD methods with respect to the effectiveness of various time-velocity distributions, because their resolution tunings were not considered. However, we noted that the identification accuracy of the optimally tuned SPWVD method was lower than that of the STFT method. Similar results were obtained using the WT and WVD methods. Thus, we demonstrated that an optimally tuned STFT is the most suitable method. These results implied that although WVD and SPWVD can identify personal gait with moderate accuracy, the cross-terms included in these distribution images may deteriorate the identification accuracy compared with the STFT.

Based on the novel results obtained from our investigations, we achieved effective identification from shorter input data and suitable accuracy for a relatively large number of people, as indicated in Table 4. These findings are also important for practical applications.

5. Conclusions

To find the effective time-velocity distribution images for Doppler-radar-based personal gait identification using a CNN, four types of representative time-velocity analysis methods and their parameter settings were explored: STFT, WT, WVD, and SPWVD. Experimental investigations to identify 25 test subjects determined the appropriate parameters that controlled the time and velocity resolutions for all methods. As a result, the highest accuracy of 99.1% was achieved with the optimally tuned STFT spectrogram images. The results revealed that the performance of the STFT method, which is generally used for radar-based gait identification, was superior to that of the high-resolution time-velocity distributions obtained with WT, WVD, and SPWVD. A significant finding of this study was that high-resolution time-velocity distributions do not necessarily lead to highly accurate individual identification because of the cross-terms in the WVD. We also revealed that a shorter window function for the STFT is effective for gait identification.

The primary limitation of this study is the small sample size: 25 participants, 22 of whom were male. Our dataset may therefore be insufficient for practical application in many authentication scenarios, and future research should involve a larger number of participants and more data, particularly from female participants. Another significant limitation is that the study only considered participants walking towards the radar. Future research should demonstrate that the resolution tuning of the time-velocity distribution is also effective for gait identification using data from participants walking in arbitrary directions. Furthermore, combinations of time-velocity distributions generated via different methods were not considered. Achieving more accurate identification by combining various types of time-velocity distributions is a promising direction for the advancement of biometric technology.

Author Contributions

Conceptualization, K.S. (Kenshi Saho); methodology, K.S. (Keitaro Shioiri) and K.S. (Kenshi Saho); software, K.S. (Keitaro Shioiri); validation, K.S. (Kenshi Saho); formal analysis, K.S. (Keitaro Shioiri); investigation, K.S. (Keitaro Shioiri); resources, K.S. (Keitaro Shioiri); data curation, K.S. (Keitaro Shioiri); writing—original draft preparation, K.S. (Keitaro Shioiri) and K.S. (Kenshi Saho); writing—review and editing, K.S. (Kenshi Saho); supervision, K.S. (Kenshi Saho); project administration, K.S. (Kenshi Saho); funding acquisition, K.S. (Kenshi Saho). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Telecommunications Advancement Foundation (R2).

Institutional Review Board Statement

The experimental procedure was approved by the local ethics committee of Toyama Prefectural University (R3-7).

Informed Consent Statement

Informed consent was obtained from all participants.

Data Availability Statement

The data are available upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Masi, I.; Wu, Y.; Hassner, T.; Natarajan, P. Deep face recognition: A survey. In Proceedings of the 31st SIBGRAPI Conference on Graphics, Patterns and Images, Parana, Brazil, 29 October–1 November 2018; pp. 471–478.

- Zheng, J.; Ranjan, R.; Chen, C.H.; Chen, J.C.; Castillo, C.D.; Chellappa, R. An automatic system for unconstrained video-based face recognition. IEEE Trans. Biom. Behav. Identity Sci. 2020, 2, 194–209.

- Sudharsan, B.; Corcoran, P.; Ali, M.I. Smart speaker design and implementation with biometric authentication and advanced voice interaction capability. In Proceedings of the 27th AIAI Irish Conference on Artificial Intelligence and Cognitive Science (AICS), Galway, Ireland, 5–6 December 2019; pp. 305–316.

- Menotti, D.; Chiachia, G.; Pinto, A.; Schwartz, W.R.; Pedrini, H.; Falcao, A.X.; Rocha, A. Deep representations for iris, face, and fingerprint spoofing detection. IEEE Trans. Inf. Forensics Secur. 2015, 10, 864–879.

- Alyasseri, Z.A.A.; Alomari, O.A.; Papa, J.P.; Al-Betar, M.A.; Abdulkareem, K.H.; Mohammed, M.A.; Kadry, S.; Thinnukool, O.; Khuwuthyakorn, P. EEG channel selection based user identification via improved flower pollination algorithm. Sensors 2022, 22, 2092.

- Singh, J.P.; Jain, S.; Arora, S.; Singh, U.P. A survey of behavioral biometric gait recognition: Current success and future perspectives. Arch. Comp. Methods Eng. 2021, 28, 107–148.

- Patel, V.M.; Chellappa, R.; Chandra, D.; Barbello, B. Continuous User Authentication on Mobile Devices: Recent Progress and Remaining Challenges. IEEE Signal Process. Mag. 2016, 33, 49–61.

- Khan, M.H.; Farid, M.S.; Grzegorzek, M. Vision-based approaches towards person identification using gait. Comput. Sci. Rev. 2021, 42, 100432.

- Singh, J.P.; Jain, S.; Arora, S.; Singh, U.P. Vision-based gait recognition: A survey. IEEE Access 2018, 6, 70497–70527.

- Bari, A.S.M.H.; Gavrilova, M.L. Artificial neural network based gait recognition using Kinect sensor. IEEE Access 2019, 7, 162708–162722.

- Gianaria, E.; Grangetto, M. Robust gait identification using Kinect dynamic skeleton data. Multimed. Tool. Appl. 2019, 78, 13925–13948.

- Malik, M.N.; Azam, M.A.; Ehatisham-Ul-Haq, M.; Ejaz, W.; Khalid, A. ADLAuth: Passive authentication based on activity of daily living using heterogeneous sensing in smart cities. Sensors 2019, 19, 2466.

- Sprager, S.; Juric, M.B. An efficient HOS-based gait authentication of accelerometer data. IEEE Trans. Inf. Forensics Sec. 2015, 10, 1486–1498.

- Saho, K.; Shioiri, K.; Inuzuka, K. Accurate person identification based on combined sit-to-stand and stand-to-sit movements measured using Doppler radars. IEEE Sens. J. 2020, 21, 4563–4570.

- Islam, S.M.M.; Borić-Lubecke, O.; Zheng, Y.; Lubecke, V.M. Radar-based non-contact continuous identity authentication. Remote Sens. 2020, 12, 2279.

- Chen, Z.; Li, G.; Fioranelli, F.; Griffiths, H. Personnel recognition and gait classification based on multistatic Micro-Doppler signatures using deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2018, 15, 669–673.

- Ni, Z.; Huang, B. Human identification based on natural gait micro-Doppler signatures using deep transfer learning. IET Radar Sonar Navig. 2020, 14, 1640–1646.

- Ni, Z.; Huang, B. Gait-based person identification and intruder detection using mm-wave sensing in multi-person scenario. IEEE Sens. J. 2022, 22, 9713–9723.

- Lang, Y.; Wang, Q.; Yang, Y.; Hou, C.; He, Y.; Xu, J. Person identification with limited training data using radar micro-Doppler signatures. Microw. Opt. Technol. Lett. 2020, 62, 1060–1068.

- Ni, Z.; Huang, B. Robust person gait identification based on limited radar measurements using set-based discriminative subspaces learning. IEEE Trans. Instrum. Meas. 2021, 71, 2501614.

- Vandersmissen, B.; Knudde, N.; Jalalvand, A.; Couckuyt, I.; Bourdoux, A.; Neve, W.D.; Dhaene, T. Indoor person identification using a low-power FMCW radar. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3941–3952.

- Cao, P.; Xia, W.; Ye, M.; Zhang, J.; Zhou, J. Radar-ID: Human identification based on radar micro-Doppler signatures using deep convolutional neural networks. IET Radar Sonar Navig. 2018, 12, 729–734.

- Yang, Y.; Hou, C.; Lang, Y.; Yue, G.; He, Y.; Xiang, W. Person identification using Micro-Doppler signatures of human motions and UWB radar. IEEE Microw. Wirel. Compon. Lett. 2019, 29, 366–368.

- Yu, Z.; Zhang, D.; Wang, Z.; Han, Q.; Guo, B.; Wang, Q. SoDar: Multitarget gesture recognition based on SIMO Doppler radar. IEEE Trans. Hum. Mach. Syst. 2022, 52, 276–289.

- Zhang, J. Analysis of human gait radar signal using reassigned WVD. Phys. Procedia 2012, 24, 1607–1614.

- Manfredi, G.; Sáenz, I.D.H.; Menelle, M.; Saillant, S.; Ovarlez, J.P.; Thirion-Lefevre, L. Time-frequency characterisation of bistatic Doppler signature of a wooded area walk at L-band. IET Radar Sonar Navig. 2021, 15, 1573–1582.

- Khare, S.K.; Bajaj, V. Time–frequency representation and convolutional neural network-based emotion recognition. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 2901–2909.

- Lopac, N.; Hržić, F.; Vuksanović, I.P.; Lerga, J. Detection of Non-Stationary GW Signals in High Noise from Cohen’s Class of Time–Frequency Representations Using Deep Learning. IEEE Access 2021, 10, 2408–2428.

- Ullah, A.; Anwar, S.M.; Bilal, M.; Mehmood, R.M. Classification of arrhythmia by using deep learning with 2-D ECG spectral image representation. Remote Sens. 2020, 12, 1685.

- Tang, L.; Jia, Y.; Qian, Y.; Yi, S.; Yuan, P. Human activity recognition based on mixed CNN With radar multi-spectrogram. IEEE Sens. J. 2021, 21, 25950–25962.

- Arab, H.; Ghaffari, I.; Chioukh, L.; Tatu, S.O.; Dufour, S. A convolutional neural network for human motion recognition and classification using a millimeter-wave Doppler radar. IEEE Sens. J. 2022, 22, 4494–4502.

- Su, B.Y.; Ho, K.C.; Rantz, M.J.; Skubic, M. Doppler radar fall activity detection using the wavelet transform. IEEE Trans. Biomed. Eng. 2015, 62, 865–875.

- Dong, S.; Xia, W.; Li, Y.; Zhang, Q.; Tu, D. Radar-based human identification using deep neural network for long-term stability. IET Radar Sonar Navig. 2020, 14, 1521–1527.

- Saho, K.; Shioiri, K.; Kudo, S.; Fujimoto, M. Estimation of Gait Parameters from Trunk Movement Measured by Doppler Radar. IEEE J. Electromagn. RF Microw. Med. Biol. 2022, 6, 461–469.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Gurbuz, S.Z.; Amin, M.G. Radar-based human-motion recognition with deep learning: Promising applications for indoor monitoring. IEEE Signal Process. Mag. 2019, 36, 16–28.

- Lilly, J.M.; Olhede, S.C. Higher-Order Properties of Analytic Wavelets. IEEE Trans. Signal Process. 2009, 57, 146–160.

- Fujimoto, M.; Chou, L.-S. Sagittal plane momentum control during walking in elderly fallers. Gait Posture 2016, 45, 121–126.

- Pradhan, A.; Kuruganti, U.; Chester, V. Biomechanical parameters and clinical assessment scores for identifying elderly fallers based on balance and dynamic tasks. IEEE Access 2020, 8, 193532–193543.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).