Driving Activity Recognition Using UWB Radar and Deep Neural Networks

Abstract

1. Introduction

- We introduce a novel method for recognizing normal, autonomous, and distracted driving activities using an ultra-wideband (UWB) radar and deep neural networks.

- We evaluate the generalization ability of radar-based driving activity recognition to persons not seen in the training data.

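- We show that applying interquartile range (IQR) normalization markedly improves the generalization of the neural networks to previously unseen persons: in leave-one-out cross-validation, accuracy rises from 0.674 to 0.713 for ResNet-18 and from 0.439 to 0.672 for the LSTM.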

- We introduce RaDA, the first UWB radar dataset for driving activity classification, and make it publicly available to facilitate the comparison and benchmarking of methods in the field.
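IQR normalization is not defined in this section. A common form is robust scaling: subtract the median and divide by the interquartile range (the 75th minus the 25th percentile). The sketch below assumes this form and computes the statistics per sample; the function name `iqr_normalize` and the per-sample choice are our assumptions, not necessarily the authors' exact procedure.

```python
import numpy as np


def iqr_normalize(x: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Robust-scale one sample: subtract its median, divide by its IQR.

    Assumption: statistics are taken over all values of a single sample
    (e.g. one radar input window); the authors' exact variant may differ.
    `eps` guards against division by zero for near-constant inputs.
    """
    q1, median, q3 = np.percentile(x, [25, 50, 75])
    iqr = q3 - q1  # interquartile range: spread of the middle 50% of values
    return (x - median) / (iqr + eps)


# Example: a hypothetical sample and a rescaled, shifted copy of it
sample = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
print(iqr_normalize(sample))           # approximately [-1, -0.5, 0, 0.5, 1]
print(iqr_normalize(10 * sample + 7))  # same values: scale and offset removed
```

Because the output is unchanged by any positive rescaling and constant offset of the input, one plausible reading of the reported gains is that this kind of normalization suppresses person-specific differences in overall signal amplitude. For per-feature statistics fitted on training data instead, scikit-learn's `RobustScaler` applies the same median/IQR scaling.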

2. Related Work

2.1. Radar and Driver Recognition

2.2. Radar and Deep Learning

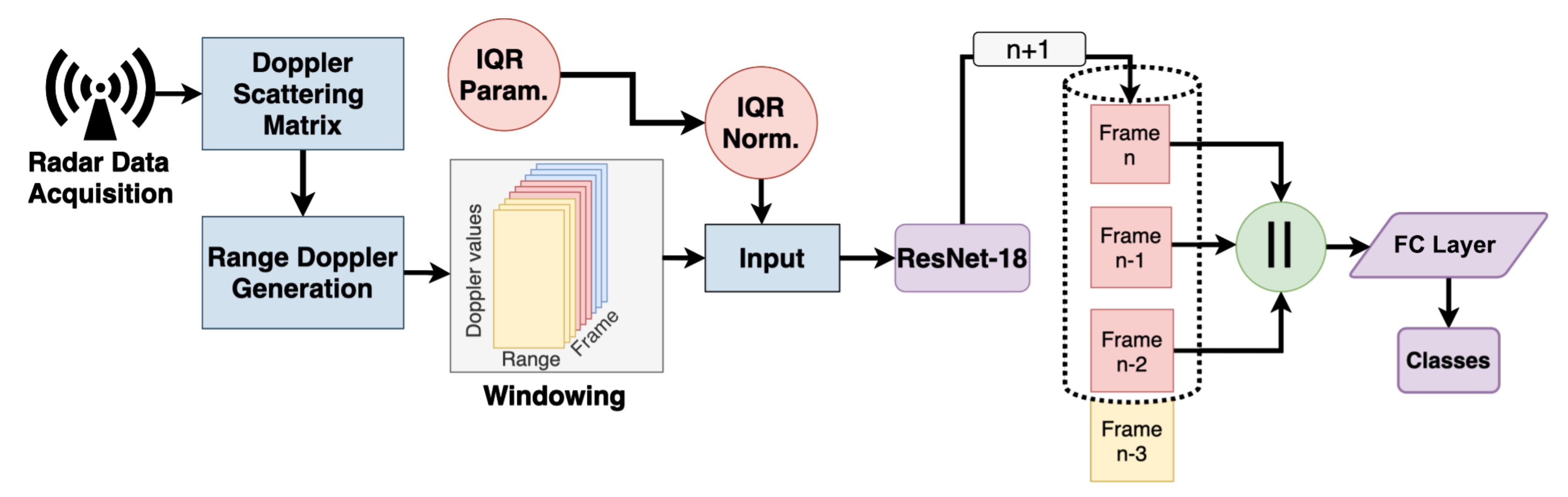

3. Proposed Approach

4. Experimental Setup and Dataset

4.1. Radar

4.2. Driving Simulation Environment

4.3. RaDA Dataset

Action Performance Protocol

- Autopilot. While the simulator drove in autopilot mode, participants were instructed to sit with their hands on their knees and observe the virtual environment.

- Driving. Participants were asked to drive freely through the virtual city, following general traffic rules, and to make at least one turn.

- Sleeping. Participants were asked to take a comfortable position in the driver's seat with their head in ventral flexion (tilted forward), close their eyes, and relax.

- Smartphone utilization. The instructions were the same as for autopilot, except that participants additionally checked e-mails or social media on their smartphone, holding it with both hands.

- Driving and smartphone utilization. Participants drove while steering with the left hand and checking e-mails, social media, etc. on their smartphone with the right hand.

- Talking to passenger. A second person was invited to sit in the front passenger seat. Drivers were instructed to converse actively with the passenger, turning their head toward them and, if they wished, gesturing with the right hand, while continuing to drive normally.

5. Experiments and Results

5.1. Baseline Classification

5.2. ResNet-18

5.3. LSTM

6. Results

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Joiner, I.A. Chapter 4—Driverless Vehicles: Pick Me Up at the…? In Emerging Library Technologies; Joiner, I.A., Ed.; Chandos Information Professional Series; Chandos Publishing: Irving, TX, USA, 2018; pp. 69–94.

2. Brishtel, I.; Schmidt, T.; Vozniak, I.; Rambach, J.R.; Mirbach, B.; Stricker, D. To Drive or to Be Driven? The Impact of Autopilot, Navigation System, and Printed Maps on Driver’s Cognitive Workload and Spatial Knowledge. ISPRS Int. J. Geo-Inf. 2021, 10, 668.

3. Gold, C.; Dambock, D.; Lorenz, L.; Bengler, K. “Take over!” How long does it take to get the driver back into the loop? Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2013, 57, 1938–1942.

4. Yang, S.; Le Kernec, J.; Fioranelli, F.; Romain, O. Human Activities Classification in a Complex Space Using Raw Radar Data. In Proceedings of the 2019 International Radar Conference (RADAR), Toulon, France, 23–27 September 2019; pp. 1–4.

5. Liang, N.; Yang, J.; Yu, D.; Prakah-Asante, K.; Curry, R.; Blommer, M.; Swaminathan, R.; Pitts, B. Using eye-tracking to investigate the effects of pre-takeover visual engagement on situation awareness during automated driving. Accid. Anal. Prev. 2021, 157, 106143.

6. Katrolia, J.; Mirbach, B.; El-Sherif, A.; Feld, H.; Rambach, J.; Stricker, D. Ticam: A time-of-flight in-car cabin monitoring dataset. In Proceedings of the British Machine Vision Conference (BMVC), London, UK, 22–25 November 2021.

7. Martin, M.; Roitberg, A.; Haurilet, M.; Horne, M.; Reiß, S.; Voit, M.; Stiefelhagen, R. Drive&act: A multi-modal dataset for fine-grained driver behavior recognition in autonomous vehicles. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2801–2810.

8. Brishtel, I.; Krauß, S.; Schmidt, T.; Rambach, J.R.; Vozniak, I.; Stricker, D. Classification of Manual Versus Autonomous Driving based on Machine Learning of Eye Movement Patterns. In Proceedings of the 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Prague, Czech Republic, 9–12 October 2022; pp. 700–705.

9. Victor, T.W.; Tivesten, E.; Gustavsson, P.; Johansson, J.; Sangberg, F.; Aust, M.L. Automation Expectation Mismatch: Incorrect Prediction Despite Eyes on Threat and Hands on Wheel. Hum. Factors 2018, 60, 1095–1116.

10. Louw, T.; Merat, N. Are you in the loop? Using gaze dispersion to understand driver visual attention during vehicle automation. Transp. Res. Part C Emerg. Technol. 2017, 76, 35–50.

11. Zeeb, K.; Buchner, A.; Schrauf, M. Is take-over time all that matters? The impact of visual-cognitive load on driver take-over quality after conditionally automated driving. Accid. Anal. Prev. 2016, 92, 230–239.

12. Cabrall, C.; Eriksson, A.; Dreger, F.; Happee, R.; de Winter, J. How to keep drivers engaged while supervising driving automation? A literature survey and categorisation of six solution areas. Theor. Issues Ergon. Sci. 2019, 22, 332–365.

13. Templeton, B. New Tesla Autopilot Statistics Show It’s Almost as Safe Driving with It as without. 2020. Available online: https://www.forbes.com/sites/bradtempleton/2020/10/28/new-tesla-autopilot-statistics-show-its-almost-as-safe-driving-with-it-as-without/ (accessed on 14 November 2022).

14. Volvo Cars. Volvo Cars to Deploy In-Car Cameras and Intervention against Intoxication, Distraction. 2019. Available online: https://www.media.volvocars.com/global/en-gb/media/pressreleases/250015/volvo-cars-to-deploy-in-car-cameras-and-intervention-against-intoxication-distraction (accessed on 14 November 2022).

15. Li, X.; He, Y.; Jing, X. A Survey of Deep Learning-Based Human Activity Recognition in Radar. Remote Sens. 2019, 11, 1068.

16. Bryan, J.; Kwon, J.; Lee, N.; Kim, Y. Application of ultra-wide band radar for classification of human activities. IET Radar Sonar Navig. 2012, 6, 172–179.

17. Bouchard, K.; Maitre, J.; Bertuglia, C.; Gaboury, S. Activity Recognition in Smart Homes using UWB Radars. Procedia Comput. Sci. 2020, 170, 10–17.

18. Zhu, S.; Xu, J.; Guo, H.; Liu, Q.; Wu, S.; Wang, H. Indoor Human Activity Recognition Based on Ambient Radar with Signal Processing and Machine Learning. In Proceedings of the 2018 IEEE International Conference on Communications (ICC), Kansas City, MO, USA, 20–24 May 2018; pp. 1–6.

19. Taylor, W.; Dashtipour, K.; Shah, S.A.; Hussain, A.; Abbasi, Q.H.; Imran, M.A. Radar Sensing for Activity Classification in Elderly People Exploiting Micro-Doppler Signatures Using Machine Learning. Sensors 2021, 21, 3881.

20. Shao, Y.; Guo, S.; Sun, L.; Chen, W. Human Motion Classification Based on Range Information with Deep Convolutional Neural Network. In Proceedings of the 2017 4th International Conference on Information Science and Control Engineering (ICISCE), Changsha, China, 21–23 July 2017; pp. 1519–1523.

21. Yang, Y.; Hou, C.; Lang, Y.; Guan, D.; Huang, D.; Xu, J. Open-set human activity recognition based on micro-Doppler signatures. Pattern Recognit. 2019, 85, 60–69.

22. Noori, F.M.; Uddin, M.Z.; Torresen, J. Ultra-Wideband Radar-Based Activity Recognition Using Deep Learning. IEEE Access 2021, 9, 138132–138143.

23. Mercuri, M.; Liu, Y.H.; Lorato, I.; Torfs, T.; Wieringa, F.; Bourdoux, A.; Van Hoof, C. A Direct Phase-Tracking Doppler Radar Using Wavelet Independent Component Analysis for Non-Contact Respiratory and Heart Rate Monitoring. IEEE Trans. Biomed. Circuits Syst. 2018, 12, 632–643.

24. Yarovoy, A.; Ligthart, L.; Matuzas, J.; Levitas, B. UWB radar for human being detection. IEEE Aerosp. Electron. Syst. Mag. 2006, 21, 10–14.

25. Saeed, U.; Shah, S.; Alotaibi, A.; Althobaiti, T.; Ramzan, N.; Abbasi, Q.; Shah, S. Portable UWB RADAR Sensing System for Transforming Subtle Chest Movement Into Actionable Micro-Doppler Signatures to Extract Respiratory Rate Exploiting ResNet Algorithm. IEEE Sensors 2021, 21, 23518–23526.

26. Erol, B.; Amin, M.; Boashash, B.; Ahmad, F.; Zhang, Y. Wideband radar based fall motion detection for a generic elderly. In Proceedings of the 2016 50th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 6–9 November 2016; pp. 1768–1772.

27. Jokanovic, B.; Amin, M.; Ahmad, F. Radar fall motion detection using deep learning. In Proceedings of the 2016 IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 2–6 May 2016; pp. 1–6.

28. Ramaiah, K. In-Cabin Radar Can Sense Children in Second- and Third-Row Vehicles. Available online: https://www.electronicproducts.com/in-cabin-radar-can-sense-children-in-second-and-third-row-vehicles/ (accessed on 14 November 2022).

29. InnoSenT. Incabin Radar Monitoring. Available online: https://www.innosent.de/en/automotive/incabin-radar-monitoring/ (accessed on 14 November 2022).

30. Leem, S.; Khan, F.; Cho, S.H. Vital Sign Monitoring and Mobile Phone Usage Detection Using IR-UWB Radar for Intended Use in Car Crash Prevention. Sensors 2017, 17, 1240.

31. Hyundai Mobis. The New Radar-based Occupant Alert System To Keep Your Children Safe. Available online: https://www.hyundaimotorgroup.com/story/CONT0000000000002988 (accessed on 14 November 2022).

32. Ding, C.; Chae, R.; Wang, J.; Zhang, L.; Hong, H.; Zhu, X.; Li, C. Inattentive Driving Behavior Detection Based on Portable FMCW Radar. IEEE Trans. Microw. Theory Tech. 2019, 67, 4031–4041.

33. Sakamoto, T. Personal Identification Using Ultrawideband Radar Measurement of Walking and Sitting Motions and a Convolutional Neural Network. arXiv 2020, arXiv:2008.02182.

34. Li, J.; Zeng, Z.; Sun, J.; Liu, F. Through-Wall Detection of Human Being’s Movement by UWB Radar. IEEE Geosci. Remote. Sens. Lett. 2012, 9, 1079–1083.

35. Congzhang, D.; Jia, Y.; Cui, G.; Chen, C.; Zhong, X.; Guo, Y. Continuous Human Activity Recognition through Parallelism LSTM with Multi-Frequency Spectrograms. Remote Sens. 2021, 13, 4264.

36. Ahmed, S.; Wang, D.; Park, J.; Cho, S.H. UWB-gestures, a public dataset of dynamic hand gestures acquired using impulse radar sensors. Sci. Data 2021, 8, 102.

37. Fioranelli, F.; Shah, S.A.; Li, H.; Shrestha, A.; Yang, S.; Le Kernec, J. Radar sensing for healthcare. Electron. Lett. 2019, 55, 1022–1024.

38. Bocus, M.J.; Li, W.; Vishwakarma, S.; Kou, R.; Tang, C.; Woodbridge, K.; Craddock, I.; McConville, R.; Santos-Rodriguez, R.; Chetty, K.; et al. OPERAnet, a multimodal activity recognition dataset acquired from radio frequency and vision-based sensors. Sci. Data 2022, 9, 474.

39. Vandersmissen, B.; Knudde, N.; Jalalvand, A.; Couckuyt, I.; Dhaene, T.; De Neve, W. Indoor human activity recognition using high-dimensional sensors and deep neural networks. Neural Comput. Appl. 2020, 32, 12295–12309.

40. Fontana, R. Recent system applications of short-pulse ultra-wideband (UWB) technology. IEEE Trans. Microw. Theory Tech. 2004, 52, 2087–2104.

41. Zhang, C.; Kuhn, M.; Merkl, B.; Fathy, A.; Mahfouz, M. Accurate UWB indoor localization system utilizing time difference of arrival approach. In Proceedings of the 2006 IEEE Radio and Wireless Symposium, San Diego, CA, USA, 17–19 January 2006; pp. 515–518.

42. Du, H.; He, Y.; Jin, T. Transfer Learning for Human Activities Classification Using Micro-Doppler Spectrograms. In Proceedings of the 2018 IEEE International Conference on Computational Electromagnetics (ICCEM), Chengdu, China, 26–28 March 2018.

43. Li, X.; He, Y.; Yang, Y.; Hong, Y.; Jing, X. LSTM based Human Activity Classification on Radar Range Profile. In Proceedings of the 2019 IEEE International Conference on Computational Electromagnetics (ICCEM), Shanghai, China, 20–22 March 2019; pp. 1–2.

44. Carnegie Mellon University. CMU Graphics Lab Motion Capture Database. Available online: http://mocap.cs.cmu.edu/ (accessed on 14 November 2022).

45. Shrestha, A.; Li, H.; Le Kernec, J.; Fioranelli, F. Continuous human activity classification from FMCW radar with Bi-LSTM networks. IEEE Sensors J. 2020, 20, 13607–13619.

46. Liang, Y.; Lee, J.; Yekhshatyan, L. How Dangerous Is Looking Away from the Road? Algorithms Predict Crash Risk from Glance Patterns in Naturalistic Driving. Hum. Factors 2012, 54, 1104–1116.

47. Thullier, F.; Maitre, J.; Gaboury, S.; Bouchard, K. A Systematic Evaluation of the XeThru X4 Ultra-Wideband Radar Behavior. Procedia Comput. Sci. 2022, 198, 148–155.

48. Regan, M.A.; Hallett, C. Chapter 20—Driver Distraction: Definition, Mechanisms, Effects, and Mitigation. In Handbook of Traffic Psychology; Porter, B.E., Ed.; Academic Press: San Diego, CA, USA, 2011; pp. 275–286.

49. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. Adv. Neural Inf. Process. Syst. 2019, 32, 8026–8037.

50. Smith, L.N.; Topin, N. Super-convergence: Very fast training of neural networks using large learning rates. In Proceedings of the Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications; Pham, T., Ed.; International Society for Optics and Photonics: Washington, DC, USA, 2019; Volume 11006, pp. 369–386.

| Parameter | Value |
|---|---|
| Frequency Band (GHz) | 7.25–10.20 |
| Frames Per Second | 50 |
| Doppler Samples | 1024 |
| Doppler Frequency Range (Hz) | −8.5 to 8.5 |
| Range Bins | 24 |
| Measurement Range (m) | 0.4–1.2 |
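The radar parameters imply a few derived quantities. Assuming the 1024 Doppler samples are an FFT over consecutive frames recorded at 50 frames per second (our reading of the table, not stated in this section), the sketch below computes the observation window, the Doppler resolution, the Nyquist limit, and how many Doppler bins survive a crop to ±8.5 Hz. `range_doppler_map` is a hypothetical helper for illustration, not the authors' preprocessing code.

```python
import numpy as np

# Values from the radar parameter table
FRAME_RATE_HZ = 50      # frames per second (slow-time sampling rate)
N_DOPPLER = 1024        # Doppler samples; assumed to be the slow-time FFT length
N_RANGE_BINS = 24       # range bins between 0.4 m and 1.2 m
F_MAX_HZ = 8.5          # Doppler frequency range is -8.5 Hz to 8.5 Hz

# Derived quantities (under the FFT-length assumption above)
window_s = N_DOPPLER / FRAME_RATE_HZ        # time span of one Doppler window, s
doppler_res_hz = FRAME_RATE_HZ / N_DOPPLER  # spacing between Doppler bins, Hz
nyquist_hz = FRAME_RATE_HZ / 2              # largest unambiguous Doppler frequency, Hz

# Zero-centred Doppler axis and the bins inside the +/- F_MAX_HZ crop
freqs = np.fft.fftshift(np.fft.fftfreq(N_DOPPLER, d=1 / FRAME_RATE_HZ))
kept = np.abs(freqs) <= F_MAX_HZ
n_kept = int(kept.sum())


def range_doppler_map(slow_time: np.ndarray) -> np.ndarray:
    """Hypothetical helper: magnitude range-Doppler map cropped to +/- F_MAX_HZ.

    slow_time: complex array of shape (N_DOPPLER, N_RANGE_BINS), one row per frame.
    """
    # FFT along slow time (frames), then move 0 Hz to the centre of the axis
    spectrum = np.fft.fftshift(np.fft.fft(slow_time, axis=0), axes=0)
    return np.abs(spectrum[kept, :])


print(window_s, doppler_res_hz, nyquist_hz, n_kept)  # 20.48 0.048828125 25.0 349
```

Under these assumptions, one window spans 20.48 s, Doppler bins are about 0.049 Hz apart, and the ±8.5 Hz crop keeps 349 of the 1024 bins for each of the 24 range bins, well inside the ±25 Hz unambiguous band.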

| No. | Action | No. of Samples |
|---|---|---|
| 1 | Driving | 1747 |
| 2 | Autopilot | 1844 |
| 3 | Sleeping | 1708 |
| 4 | Driving & smartphone utilization | 1692 |
| 5 | Smartphone utilization | 1715 |
| 6 | Talking to passenger | 1700 |
| | Total | 10,406 |
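The class counts are nearly balanced, which matters when reading the accuracies in the results table. The short computation below uses only the numbers from the dataset table to derive each class share, the uniform-chance accuracy for six classes, and the accuracy of always predicting the largest class.

```python
# Sample counts per action, copied from the dataset table
samples_per_class = {
    "Driving": 1747,
    "Autopilot": 1844,
    "Sleeping": 1708,
    "Driving & smartphone utilization": 1692,
    "Smartphone utilization": 1715,
    "Talking to passenger": 1700,
}

total = sum(samples_per_class.values())
shares = {name: n / total for name, n in samples_per_class.items()}

# Accuracy of guessing uniformly at random among the six classes
uniform_chance = 1 / len(samples_per_class)
# Accuracy of always predicting the most frequent class
majority_baseline = max(shares.values())

for name, share in shares.items():
    print(f"{name:<34} {share:.1%}")
print(f"total={total}, uniform chance={uniform_chance:.3f}, majority={majority_baseline:.3f}")
```

Every class accounts for between 16.3% and 17.7% of the samples, so chance-level accuracy is about 0.17. The baseline of Ding et al. (0.263 and 0.252) lies above this level, and the best deep models with IQR normalization reach 0.672 to 0.713 under leave-one-out validation.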

| Participant | Height (cm) | Weight (kg) |
|---|---|---|
| 1 | 188 | 85 |
| 2 | 169 | 50 |
| 3 | 178 | 64 |
| 4 | 180 | 93 |
| 5 | 178 | 90 |
| 6 | 167 | 74 |
| 7 | 172 | 55 |
| 8 | 179 | 77 |
| 9 | 170 | 63 |
| 10 | 164 | 59 |

| Architecture | Validation Type | IQR Norm. | Accuracy | F1-Score |
|---|---|---|---|---|
| Ding et al. [32] | 10-fold cross-validation | ✔ | 0.263 | 0.261 |
| Ding et al. [32] | Cross-validation (Leave-one-out) | ✔ | 0.252 | 0.249 |
| ResNet-18 | Random splitting (80% / 20%) | – | 1.0 | 1.0 |
| ResNet-18 | Random splitting (80% / 20%) | ✔ | 1.0 | 1.0 |
| ResNet-18 | Cross-validation (Leave-one-out) | – | 0.674 | 0.640 |
| ResNet-18 | Cross-validation (Leave-one-out) | ✔ | 0.713 | 0.690 |
| LSTM | Cross-validation (Leave-one-out) | – | 0.439 | 0.351 |
| LSTM | Cross-validation (Leave-one-out) | ✔ | 0.672 | 0.590 |
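The gap between random 80%/20% splitting (accuracy 1.0) and leave-one-out cross-validation (0.674 to 0.713 for ResNet-18) is consistent with person-specific information leaking across a random split, since windows from the same participant can land in both training and test sets. The sketch below contrasts the two protocols on index arrays and adds accuracy and macro-averaged F1. We assume that "leave-one-out" means holding out one of the ten participants per fold and that the F1-score is macro-averaged; both readings, and all helper names, are assumptions. Library equivalents exist, e.g. scikit-learn's `LeaveOneGroupOut` and `f1_score(..., average="macro")`.

```python
import numpy as np


def random_split(n_samples, test_fraction=0.2, seed=0):
    """Random split of sample indices; a participant may appear on both sides."""
    perm = np.random.default_rng(seed).permutation(n_samples)
    n_test = int(round(test_fraction * n_samples))
    return perm[n_test:], perm[:n_test]  # (train indices, test indices)


def leave_one_participant_out(participant_ids):
    """Yield (held-out participant, train indices, test indices), one fold per person."""
    participant_ids = np.asarray(participant_ids)
    for pid in np.unique(participant_ids):
        test_mask = participant_ids == pid
        yield pid, np.flatnonzero(~test_mask), np.flatnonzero(test_mask)


def accuracy_and_macro_f1(y_true, y_pred):
    """Accuracy and the unweighted mean of per-class F1 = 2TP / (2TP + FP + FN)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    acc = float(np.mean(y_true == y_pred))
    f1s = []
    for c in np.unique(np.concatenate([y_true, y_pred])):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        denom = 2 * tp + fp + fn
        f1s.append(2 * tp / denom if denom else 0.0)
    return acc, float(np.mean(f1s))


# Example with hypothetical IDs: 10 participants, 5 windows each
ids = np.repeat(np.arange(1, 11), 5)
print(sum(1 for _ in leave_one_participant_out(ids)))  # 10 folds
```

In each leave-one-participant-out fold, the held-out person never appears in the training indices; the random split offers no such guarantee, which is why it cannot measure generalization to unseen persons.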

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Brishtel, I.; Krauss, S.; Chamseddine, M.; Rambach, J.R.; Stricker, D. Driving Activity Recognition Using UWB Radar and Deep Neural Networks. Sensors 2023, 23, 818. https://doi.org/10.3390/s23020818
