Cascaded Segmentation U-Net for Quality Evaluation of Scraping Workpiece

Abstract

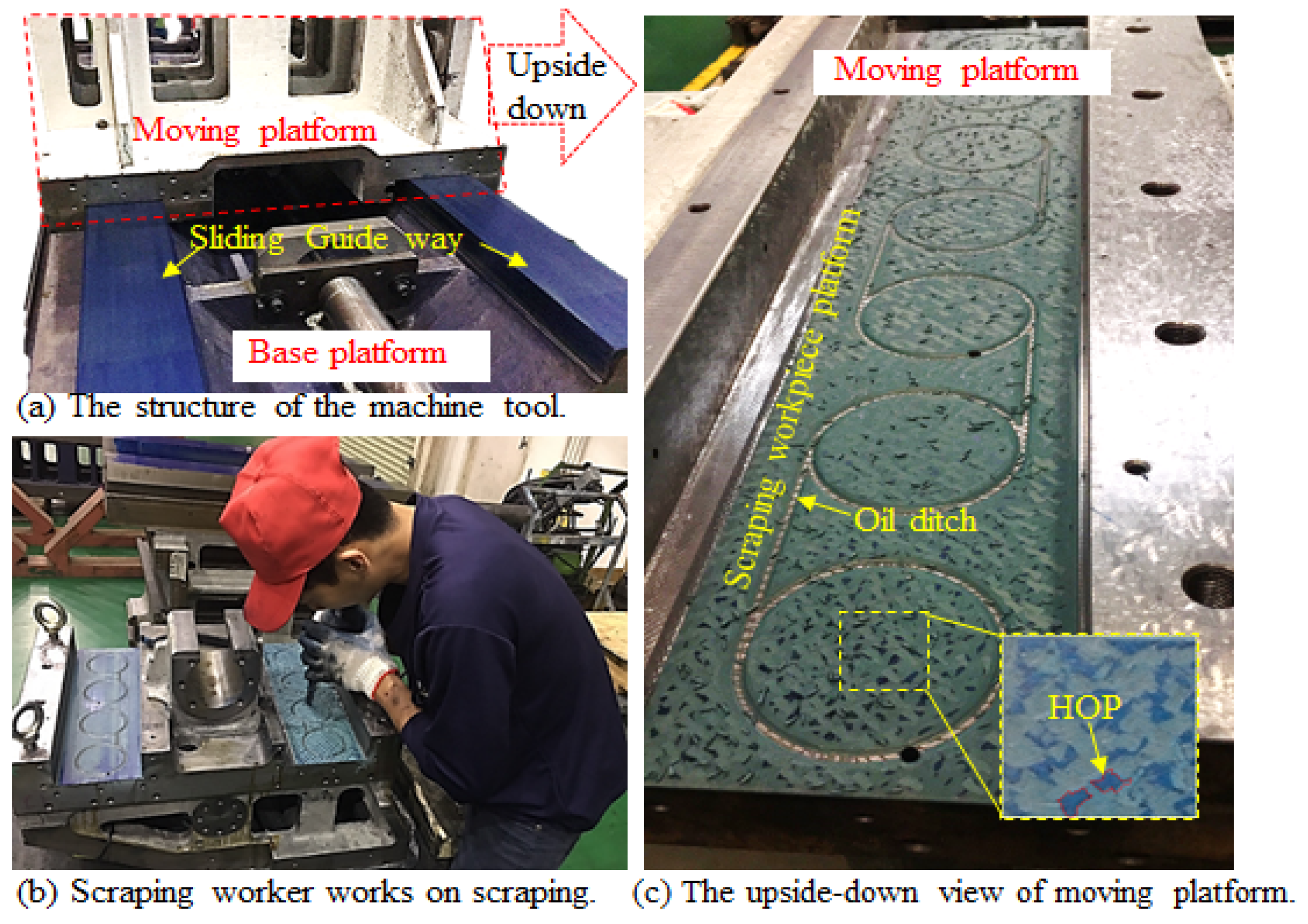

1. Introduction

2. Related Works

3. Scraping Workpiece Quality-Evaluating Edge-Cloud System

3.1. Front-End Edge (Client) on the Portable Device

3.2. Back-End Scraping Workpiece Quality-Evaluating Cloud Computing on Server

4. The Height of Points Segmentation Using Cascaded U-Net

4.1. Encoder Consists of RCU and RDU for Feature Extraction

4.2. Decoder Consists of RCU without BN and RUU for Feature Reconstruction

4.3. Cascaded Multi-Stage Head Contains Cross-Dimension Compression for Multi-Stage Classification

5. POP and PPI Calculation

5.1. Training Process of Cascaded U-Net with Loss Functions

5.2. Inference Process for POP and PPI Calculation

5.2.1. Scraping Workpiece ROI Extraction Based on the HSV Color Domain

5.2.2. Noise Removal Using Connected-Component Labeling
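
As an illustration of the idea named in this heading, noise removal by connected-component labeling is commonly done by grouping foreground pixels of a binary mask into connected regions and clearing the regions that are too small. The sketch below is a minimal version under stated assumptions: 4-connectivity, a hypothetical `min_area` threshold, and a plain breadth-first flood fill. None of these choices are taken from the paper.

```python
from collections import deque


def remove_small_components(mask, min_area):
    """Return a copy of a binary mask with small foreground components cleared.

    mask is a list of rows of 0/1 values. The 4-connectivity and the
    min_area threshold are illustrative assumptions, not the paper's settings.
    """
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    cleaned = [row[:] for row in mask]
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first flood fill collects one connected component.
            component = []
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Components below the area threshold are treated as noise.
            if len(component) < min_area:
                for y, x in component:
                    cleaned[y][x] = 0
    return cleaned


noisy = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
]
cleaned = remove_small_components(noisy, min_area=3)
```

In this toy mask the 2 × 2 block survives and the two isolated pixels are cleared.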

5.2.3. Height of Points Grouping Using K-Dimensional

6. Experimental Result

6.1. Data Collection and Augmentation

6.2. POP and PPI Evaluation on Scraping Workpiece Quality-Evaluating Edge-Cloud System

6.2.1. Error Rate of POP and PPI

6.2.2. Repeatability of POP and PPI

6.3. Height of Points Segmentation Evaluation Based on Cascaded U-Net

6.3.1. Residual Down-Sampling and Up-Sampling Unit

6.3.2. Cascaded Multi-Stage Head Contains Cross-Dimension Compression

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | Training (Patch) | Validation (Patch) | Test (Patch) | Total (Patch) |
|---|---|---|---|---|
| Ds_1 | 320 | 80 | 100 | 500 |
| Ds_2 | 227 | 57 | 71 | 355 |
| Ds_3 | 9 | 3 | 3 | 15 |
| Ds_4 | 76 | 20 | 24 | 120 |
| Ds_5 | 19 | 5 | 6 | 30 |
| Ds_6 | 160 | 40 | 50 | 250 |
| Ds_7 | 16 | 4 | 5 | 25 |
| Ds_8 | 80 | 20 | 25 | 125 |
| Total | 1136 (training + validation) | | 284 | 1420 |
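
The Total row reports training and validation patches as a single figure. As a quick consistency check, the following sketch re-adds the per-dataset counts copied from the table; the variable names are illustrative only.

```python
# Patch counts per dataset, copied from the table:
# (training, validation, test, total).
splits = {
    "Ds_1": (320, 80, 100, 500),
    "Ds_2": (227, 57, 71, 355),
    "Ds_3": (9, 3, 3, 15),
    "Ds_4": (76, 20, 24, 120),
    "Ds_5": (19, 5, 6, 30),
    "Ds_6": (160, 40, 50, 250),
    "Ds_7": (16, 4, 5, 25),
    "Ds_8": (80, 20, 25, 125),
}

# Column sums corresponding to the Total row.
train_val_total = sum(train + val for train, val, _, _ in splits.values())
test_total = sum(test for _, _, test, _ in splits.values())
grand_total = sum(total for _, _, _, total in splits.values())

# Datasets whose three splits do not add up to the stated total (expected: none).
row_mismatches = [
    name
    for name, (train, val, test, total) in splits.items()
    if train + val + test != total
]

# Share of each dataset held out for testing.
test_fractions = {name: test / total for name, (_, _, test, total) in splits.items()}

print(train_val_total, test_total, grand_total, row_mismatches)
```

With these counts every row adds up, and each dataset holds out one fifth of its patches for testing.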

| Testing Dataset | Num. (Patch) | POP Error: μPOP (%/mm²) | POP Error: σPOP (%) | PPI Error: μPPI (Point) | PPI Error: σPPI (Point) |
|---|---|---|---|---|---|
| Ds_1 | 100 | 3.6/23.1 | 0.8 | 0.8 | 0.5 |
| Ds_2 | 71 | 4.1/26.3 | 1.4 | 0.8 | 0.6 |
| Ds_3 | 3 | 3.9/25.0 | 0.6 | 1.3 | 0.3 |
| Ds_4 | 24 | 3.9/25.0 | 0.6 | 0.7 | 0.3 |
| Ds_5 | 6 | 2.2/14.1 | 1.7 | 1.4 | 0.2 |
| Ds_6 | 50 | 4.5/28.9 | 0.9 | 0.7 | 0.6 |
| Ds_7 | 5 | 2.8/17.9 | 10.5 | 6.3 | 7.1 |
| Ds_8 | 25 | 15.2/97.6 | 1.4 | 1.3 | 0.6 |
| Total | 284 | 3.7/23.9 | 1.2 | 0.9 | 0.6 |

| Location/ * (POP, PPI) | Oil Ditch | X Axis 15° (POP, PPI) | X Axis 45° (POP, PPI) | Y Axis 15° (POP, PPI) | Y Axis 45° (POP, PPI) | Avg. (POP, PPI) | Std. (POP, PPI) |
|---|---|---|---|---|---|---|---|
| A (40%, 11 ps) | Without | 41%, 11 ps | 41%, 12 ps | 42%, 13 ps | 41%, 12 ps | 41.3%, 12.0 ps | 0.5, 0.8 |
| A (40%, 11 ps) | With | 33%, 10 ps | 34%, 9 ps | 32%, 10 ps | 34%, 10 ps | 33.3%, 9.8 ps | 0.9, 0.5 |
| B (23%, 12 ps) | Without | 36%, 15 ps | 36%, 13 ps | 37%, 13 ps | 37%, 15 ps | 36.5%, 14.3 ps | 0.6, 0.9 |
| B (23%, 12 ps) | With | 32%, 20 ps | 32%, 21 ps | 31%, 19 ps | 32%, 20 ps | 31.8%, 20.0 ps | 0.5, 0.8 |
| C (33%, 15 ps) | Without | 38%, 16 ps | 37%, 15 ps | 39%, 15 ps | 37%, 14 ps | 37.8%, 15.0 ps | 0.9, 0.8 |
| C (33%, 15 ps) | With | 36%, 14 ps | 36%, 13 ps | 36%, 15 ps | 36%, 15 ps | 36.0%, 14.3 ps | 0.0, 0.9 |
| D (18%, 17 ps) | Without | 28%, 24 ps | 29%, 23 ps | 28%, 23 ps | 29%, 24 ps | 28.5%, 23.5 ps | 0.6, 0.6 |
| D (18%, 17 ps) | With | 22%, 16 ps | 23%, 17 ps | 22%, 16 ps | 23%, 16 ps | 22.5%, 16.3 ps | 0.6, 0.5 |
| E (18%, 20 ps) | Without | 30%, 24 ps | 29%, 24 ps | 30%, 23 ps | 30%, 23 ps | 29.8%, 23.5 ps | 0.5, 0.6 |
| E (18%, 20 ps) | With | 24%, 26 ps | 24%, 26 ps | 24%, 26 ps | 23%, 27 ps | 23.8%, 26.3 ps | 0.5, 0.5 |
| Avg. | | | | | | | 0.6, 0.7 |
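
To make the Avg. and Std. columns concrete, the sketch below recomputes them for one row (location A, without oil ditch) from the four readings listed in the table. It assumes that Std. is the sample standard deviation (n − 1 in the denominator); the table does not state the convention, so this is an inference from this row and may not hold for every row.

```python
from statistics import mean, stdev

# Four repeated readings at location A without the oil ditch, copied from
# the table in the order: X axis 15°, X axis 45°, Y axis 15°, Y axis 45°.
pop_readings = [41, 41, 42, 41]  # POP in %
ppi_readings = [11, 12, 13, 12]  # PPI in points

# statistics.stdev is the sample standard deviation (divides by n - 1).
pop_avg, pop_std = mean(pop_readings), stdev(pop_readings)
ppi_avg, ppi_std = mean(ppi_readings), stdev(ppi_readings)

print(f"POP: avg {pop_avg:.2f} %, std {pop_std:.2f}")
print(f"PPI: avg {ppi_avg:.2f} points, std {ppi_std:.2f}")
```

For this row the computed values agree with the tabulated 41.3%, 12.0 ps and 0.5, 0.8 after rounding to one decimal place.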

| Dataset | Num. (Patch) | IoU (%), Cascaded U-Net | IoU (%), U-Net | Recall (%), Cascaded U-Net | Recall (%), U-Net | Precision (%), Cascaded U-Net | Precision (%), U-Net | ε (%), Cascaded U-Net | ε (%), U-Net | εfp (%), Cascaded U-Net | εfp (%), U-Net | εfn (%), Cascaded U-Net | εfn (%), U-Net |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Ds_1 | 100 | 90.0 | 86.4 | 96.9 | 92.5 | 92.7 | 93.0 | 3.5 | 4.5 | 1.1 | 2.3 | 2.4 | 2.2 |
| Ds_2 | 71 | 89.0 | 86.1 | 94.7 | 90.6 | 93.7 | 94.5 | 4.0 | 4.6 | 2.1 | 2.8 | 1.9 | 1.8 |
| Ds_3 | 3 | 87.1 | 78.1 | 92.2 | 88.1 | 94.1 | 87.3 | 4.2 | 7.2 | 2.4 | 3.5 | 1.8 | 3.7 |
| Ds_4 | 24 | 95.5 | 90.0 | 98.9 | 91.8 | 96.6 | 97.9 | 2.2 | 3.2 | 0.6 | 2.5 | 1.6 | 0.7 |
| Ds_5 | 6 | 88.2 | 30.7 | 92.2 | 37.6 | 95.4 | 62.6 | 4.1 | 38.1 | 2.4 | 27.7 | 1.7 | 10.4 |
| Ds_6 | 50 | 94.0 | 89.3 | 98.0 | 93.4 | 95.9 | 95.3 | 2.6 | 3.8 | 1.0 | 2.2 | 1.6 | 1.6 |
| Ds_7 | 5 | 66.3 | 57.7 | 80.8 | 75.6 | 78.7 | 70.9 | 14.5 | 15.8 | 7.7 | 8.0 | 6.8 | 7.8 |
| Ds_8 | 25 | 88.7 | 85.5 | 93.9 | 90.4 | 94.2 | 94.0 | 3.9 | 4.7 | 2.1 | 2.8 | 1.8 | 1.9 |
| Total/Avg. | 284 | 90.2 | 85.1 | 96.0 | 90.9 | 93.8 | 93.5 | 3.6 | 5.2 | 1.5 | 3.1 | 2.1 | 2.1 |
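
For readers unfamiliar with the IoU, recall, and precision columns, the sketch below computes them from a predicted and a ground-truth binary mask using the standard pixel-count definitions. The function name, the list-of-rows mask format, and the toy masks are illustrative assumptions. The ε, εfp, and εfn columns are not defined in this text, so the sketch does not attempt them.

```python
def segmentation_metrics(pred, truth):
    """Return (IoU, recall, precision) for two binary masks.

    Both masks are lists of rows of 0/1 values with identical shape.
    """
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1  # predicted foreground, truly foreground
            elif p and not t:
                fp += 1  # predicted foreground, truly background
            elif t and not p:
                fn += 1  # missed foreground pixel
    # Returning 1.0 for empty denominators is a convention chosen for this sketch.
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 1.0
    recall = tp / (tp + fn) if (tp + fn) else 1.0
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    return iou, recall, precision


truth = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
]
pred = [
    [1, 1, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
iou, recall, precision = segmentation_metrics(pred, truth)
print(f"IoU={iou:.2f} recall={recall:.2f} precision={precision:.2f}")
```

In the toy example there are three true positives, one false positive, and one false negative, which gives IoU 0.60, recall 0.75, and precision 0.75.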

| Method | IoU (%) | Recall (%) | Precision (%) | ε (%) | εfp (%) | εfn (%) |
|---|---|---|---|---|---|---|
| DeepLab V3+ | 83.3 | 88.5 | 93.4 | 5.6 | 3.6 | 2.0 |
| U-Net | 85.1 | 90.9 | 93.5 | 5.2 | 3.1 | 2.1 |
| U-Net++ | 88.2 | 92.8 | 94.7 | 4.2 | 2.4 | 1.8 |
| Cascaded U-Net | 90.2 | 96.0 | 93.8 | 3.6 | 1.5 | 2.1 |

| Unit | Method | IoU (%) | Recall (%) | Precision (%) |
|---|---|---|---|---|
| RDU | 1 × 1 Conv, Stride: 2 | 90.2 | 96.0 | 93.8 |
| RDU | 2 × 2 Conv, Stride: 2 | 87.1 | 95.8 | 90.6 |
| RDU | 3 × 3 Conv, Stride: 2 | 88.6 | 95.6 | 92.4 |
| RUU | Up-sampled feature maps as the identity map | 90.2 | 96.0 | 93.8 |
| RUU | Pre-layer (encoder) feature maps as the identity map | 89.6 | 94.7 | 94.4 |

| Method | IoU (%) | Recall (%) | Precision (%) |
|---|---|---|---|
| Encoder and decoder with BN | 89.4 | 94.0 | 94.9 |
| Encoder and decoder without BN | 87.0 | 92.3 | 93.9 |
| Encoder without BN, decoder with BN | 88.9 | 95.4 | 92.9 |
| Encoder with BN, decoder without BN | 90.2 | 96.0 | 93.8 |

| Stage i | S4 | S3 | S2 | S1 | BL | IoU (%) | Recall (%) | Precision (%) |
|---|---|---|---|---|---|---|---|---|
| wi/wb | 0.8 | 1.0 | 1.2 | 1.4 | 2.2 | | | |
| | v | - | - | - | - | 88.5 | 95.1 | 92.8 |
| | v | v | - | - | - | 88.8 | 95.4 | 92.8 |
| | v | v | v | - | - | 88.6 | 94.7 | 93.2 |
| | v | v | v | v | - | 89.2 | 94.3 | 94.3 |
| | v | v * | v * | v * | | 89.8 | 95.6 | 93.7 |
| | v | v * | v * | v * | v | 90.2 | 96.0 | 93.8 |
| wi/wb | 1.4 | 1.2 | 1.0 | 0.8 | 2.2 | | | |
| | v | v | v | v | - | 87.5 | 92.0 | 94.7 |

| Method | IoU (%) | Recall (%) | Precision (%) |
|---|---|---|---|
| Cascaded U-Net with CDC | 90.2 | 96.0 | 93.8 |
| Cascaded U-Net without CDC | 88.3 | 93.8 | 93.8 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yin, H.-C.; Lien, J.-J.J. Cascaded Segmentation U-Net for Quality Evaluation of Scraping Workpiece. Sensors 2023, 23, 998. https://doi.org/10.3390/s23020998
