A Stable, Efficient, and High-Precision Non-Coplanar Calibration Method: Applied for Multi-Camera-Based Stereo Vision Measurements

Abstract

1. Introduction

- This paper establishes a novel improved affine coordinate correction (IACC) mathematical model for non-coplanar calibration and, based on this model, a calibration method for both monocular and binocular camera systems. Simulations and real experiments verify that the proposed methods achieve better accuracy, stability, and efficiency than the comparison methods.

- To further improve the accuracy and stability of existing calibration methods, this paper proposes a simple circle feature point extraction algorithm that combines local OTSU thresholding with gradient-based radial section scanning for edges. Simulations and real experiments demonstrate that the algorithm achieves better extraction accuracy, and greater stability under changes in illumination and viewing angle, than the traditional algorithm in OpenCV.

- Complete real 3D reconstruction experiments, covering both discrete feature points and full-field regions of interest (ROIs) on object surfaces, were carried out through every stage: stereo system calibration, feature extraction, stereo matching of features, and final stereo reconstruction of features. The experiments demonstrate that the proposed calibration methods are feasible in real measurement scenes and that stereo measurements using this paper’s calibration parameters are more accurate than those using the comparison methods.
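The final step of this pipeline, stereo reconstruction of a matched feature pair, is commonly performed by linear (DLT) triangulation from the two cameras’ projection matrices. As a generic illustration only (not the reconstruction code used in the paper), the NumPy sketch below triangulates one point. It assumes undistorted pixel coordinates and projection matrices of the form P = K[R | t]; the rig values and the function names `triangulate_dlt` and `project` are hypothetical.

```python
import numpy as np

def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one matched point from two 3x4 projection matrices.

    x1, x2 are undistorted pixel coordinates (u, v) in the first and second image.
    Returns the 3D point in the frame in which P1 and P2 are expressed.
    """
    (u1, v1), (u2, v2) = x1, x2
    # Each view contributes two linear equations A @ X = 0 in the homogeneous point X.
    A = np.array([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # Least-squares solution: right singular vector of the smallest singular value.
    X = np.linalg.svd(A)[2][-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point with a 3x4 projection matrix to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical rig: identical intrinsics, second camera center 100 mm along +x.
K = np.array([[2250.0, 0.0, 640.0],
              [0.0, 2250.0, 512.0],
              [0.0, 0.0, 1.0]])
P_left = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P_right = K @ np.hstack([np.eye(3), np.array([[-100.0], [0.0], [0.0]])])

X_true = np.array([20.0, -15.0, 500.0])   # mm, expressed in the left-camera frame
X_est = triangulate_dlt(P_left, P_right, project(P_left, X_true), project(P_right, X_true))
print(X_est)
```

With noise-free projections the triangulated point equals `X_true` up to floating-point error; with real, noisy matches the DLT result is usually refined further, for example by minimizing reprojection error.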

2. Related Works and Problems Formulation

2.1. Mathematical Model and Some Developments of Tsai’s Non-Coplanar Calibration Method

2.2. Mathematical Model and Recent Development of Coplanar Calibration Method

2.3. Deficiencies of Tsai’s Calibration Model and Recent Research of Non-Coplanar Calibration Model Based on Affine Coordinate Correction

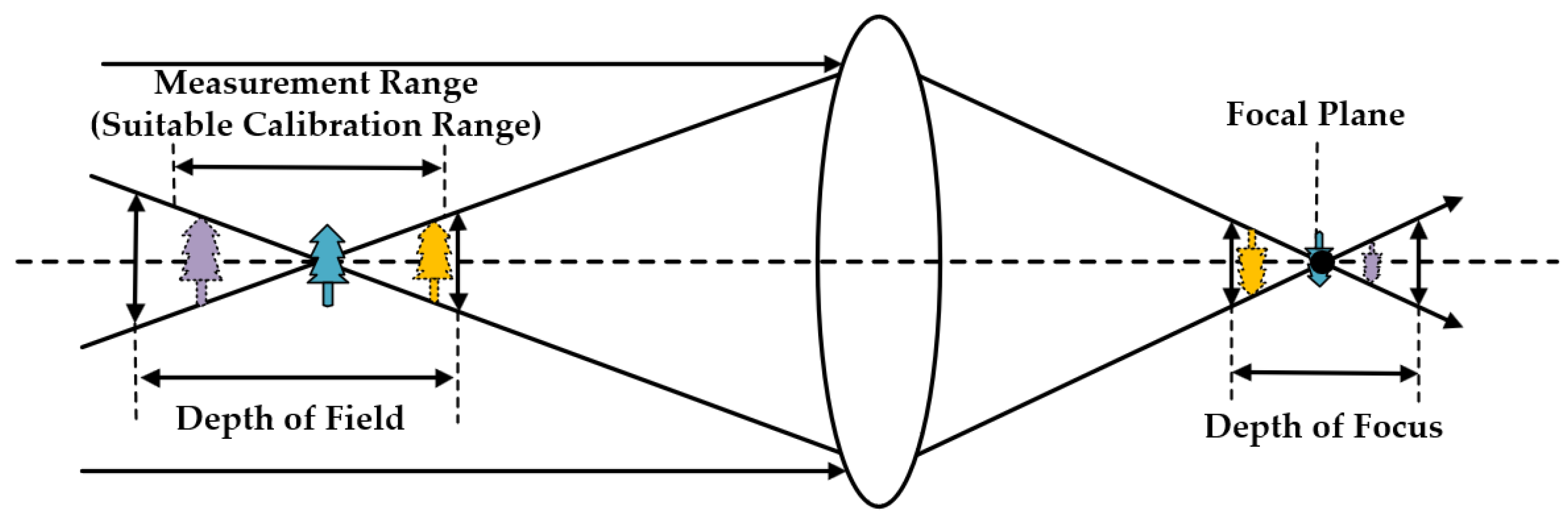

2.4. Local Spatial Optimality of Calibration Parameters

2.5. Implementation and Restrictions of Coplanar Calibration Methods and Non-Coplanar Calibration Methods

2.6. Strategies for Enhancing Calibration Performance by Improving Feature Point Extraction Algorithms

3. Novel Calibration Mathematical Model

3.1. Present Novel Improved Affine Coordinate Correction Mathematical Model for Non-Coplanar Calibration

3.2. Coordinate Space Transformation from Target Affine Space to Orthogonal World Coordinate Space

3.3. Processing of Lens’ Distortion

4. Key Procedures of IACC Calibration Method

4.1. Initial Value Linear Solving and Parameters Separation Method

4.2. Parameters’ Nonlinear Optimization

4.3. Binocular Camera System Calibration Method

4.4. Novel Simple Circle Feature Points Extraction Algorithm with High Accuracy and Stability Based on Local-ROI-OTSU and Radial Section Scanning Method
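As a rough illustration of the two ingredients named in this heading, the sketch below computes Otsu’s threshold only inside a local ROI around one circle to obtain a coarse center and radius. It then scans radial sections from that center, locates each edge as the gradient-magnitude-weighted centroid around the strongest response, and fits a circle to the resulting sub-pixel edge points. It is not the Appendix B algorithm, whose details are not given in this section. The bilinear sampling, the centroid edge locator, the window size, and the algebraic (Kåsa) circle fit are assumptions chosen for brevity, and all function names are hypothetical.

```python
import numpy as np

def otsu_threshold(gray):
    """Otsu's threshold of an 8-bit patch: maximizes the between-class variance."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    p = hist / hist.sum()
    omega = np.cumsum(p)                     # probability of the class "<= t"
    mu = np.cumsum(p * np.arange(256))       # first moment of the class "<= t"
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = (mu[-1] * omega - mu) ** 2 / (omega * (1.0 - omega))
    return int(np.nanargmax(sigma_b2))

def bilinear(img, x, y):
    """Bilinear interpolation of img at float coordinates (x = column, y = row)."""
    x0 = np.clip(np.floor(x).astype(int), 0, img.shape[1] - 2)
    y0 = np.clip(np.floor(y).astype(int), 0, img.shape[0] - 2)
    dx, dy = x - x0, y - y0
    f = img.astype(float)
    return (f[y0, x0] * (1 - dx) * (1 - dy) + f[y0, x0 + 1] * dx * (1 - dy)
            + f[y0 + 1, x0] * (1 - dx) * dy + f[y0 + 1, x0 + 1] * dx * dy)

def fit_circle(x, y):
    """Algebraic (Kasa) least-squares circle fit of x^2 + y^2 + D*x + E*y + F = 0."""
    A = np.column_stack([x, y, np.ones_like(x)])
    D, E, F = np.linalg.lstsq(A, -(x ** 2 + y ** 2), rcond=None)[0]
    cx, cy = -D / 2.0, -E / 2.0
    return cx, cy, np.sqrt(cx ** 2 + cy ** 2 - F)

def circle_from_roi(roi, n_rays=72, step=0.25, half_window=6.0):
    """Sub-pixel center and radius of one bright circle inside an 8-bit ROI."""
    # 1) Local Otsu segmentation inside the ROI -> coarse center and radius.
    mask = roi > otsu_threshold(roi)
    rows, cols = np.nonzero(mask)
    cx0, cy0 = cols.mean(), rows.mean()
    r0 = np.sqrt(mask.sum() / np.pi)
    # 2) Radial section scanning; scan a little beyond r0 so the window is not truncated.
    radii = np.arange(0.0, 1.5 * r0 + half_window, step)
    hw = int(round(half_window / step))
    xs, ys = [], []
    for theta in np.linspace(0.0, 2.0 * np.pi, n_rays, endpoint=False):
        c, s = np.cos(theta), np.sin(theta)
        g = np.abs(np.gradient(bilinear(roi, cx0 + radii * c, cy0 + radii * s)))
        k = int(np.argmax(g))
        lo, hi = max(k - hw, 0), min(k + hw + 1, len(g))
        # Edge = gradient-magnitude-weighted centroid around the strongest response.
        r_edge = np.sum(radii[lo:hi] * g[lo:hi]) / np.sum(g[lo:hi])
        xs.append(cx0 + r_edge * c)
        ys.append(cy0 + r_edge * s)
    # 3) Least-squares circle through the sub-pixel edge points.
    return fit_circle(np.array(xs), np.array(ys))

# Synthetic 8-bit ROI: bright disk (center 50.4, 49.7; radius 20.3) with a smooth edge.
yy, xx = np.mgrid[0:101, 0:101]
dist = np.hypot(xx - 50.4, yy - 49.7)
roi = np.round(40 + 160 / (1 + np.exp(dist - 20.3))).astype(np.uint8)
print(circle_from_roi(roi))
```

On this synthetic ROI the recovered center and radius are close to (50.4, 49.7) and 20.3 px. A gradient-weighted centroid is used instead of the single maximum because interpolation can flatten the gradient peak over several samples, which would otherwise bias the edge toward one side.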

5. Experimental Results and Discussion

5.1. Performance Simulations of the Proposed IACC Calibration Method with Respect to the Noise Level, the Number of Calibration Images, and the Rotation Angle of the Target Plane

- The proposed IACC calibration method copes with different levels of image noise. In the simulation experiments, it shows better accuracy and stability than Zhang’s method. However, a higher noise level increases the uncertainty of the calibrated parameters, so it is necessary to reduce the noise in the feature points’ coordinates to keep the parameters well determined.

- The more images used in calibration, the lower the uncertainty of the parameters. In practice, however, taking more images requires more displacement data for the 2D target, which may introduce new uncertainty. Weighing both effects against our simulation results, we suggest using around 10 images.

- The proposed calibration method can handle a 2D target plane at different angles to the image plane. Compared with the simulation data in [8], the improved method shows better accuracy and stability than the ACC method with respect to the rotation angle. However, a larger angle makes it harder to extract feature points precisely and increases the uncertainty of the calibration parameters. Images taken at large angles should therefore be avoided; experience and data suggest keeping the angle below 45°.
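One reason large tilt angles hurt can be quantified with a simple foreshortening argument. Under a weak-perspective (distant camera) approximation, which is an assumption here rather than the paper’s simulation model, a circle on a target plane tilted by θ relative to the image plane images as an ellipse with minor-to-major axis ratio cos θ. An image-space localization error along the tilt direction then corresponds to a target-plane error larger by 1/cos θ. The snippet below tabulates both factors; the function names are hypothetical.

```python
import numpy as np

def axis_ratio(tilt_deg):
    """Minor/major axis ratio of the image of a circle on a plane tilted by tilt_deg.

    Weak-perspective (distant camera) approximation: foreshortening by cos(tilt).
    """
    return np.cos(np.radians(tilt_deg))

def error_gain(tilt_deg):
    """Growth factor of an image-space localization error when mapped back onto the
    target plane along the tilt direction, under the same approximation."""
    return 1.0 / axis_ratio(tilt_deg)

for tilt in (0, 30, 45, 60, 75):
    print(f"{tilt:2d} deg: axis ratio {axis_ratio(tilt):.3f}, error gain {error_gain(tilt):.2f}")
```

At 45° the axis ratio is about 0.71 and the error gain about 1.41; at 60° they are 0.5 and 2; at 75° they are about 0.26 and 3.86. This illustrates why steep views degrade feature extraction.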

5.2. Stability Simulations of the Proposed Novel Circle Feature Points Extraction Algorithm

5.3. Real Monocular Camera Calibration Experiments

5.4. Performance of the Proposed Algorithm in Appendix B in Improving Calibration Accuracy

5.5. Real Binocular Camera System Calibration and 3D Reconstruction Experiments for Discrete Feature Points

5.6. Full-Field Stereo Measurement Experiments by Stereo-DIC Technologies with the Proposed Calibration Method

- For best results, the calibration position should cover the spatial range of the intended measurements.

- The proposed IACC binocular calibration method achieves the best calibration accuracy of the three methods compared. With this paper’s calibration parameters and feature extraction algorithm, the binocular system measures the clearances between circular discrete feature points with an RMSE of 2.6 μm or less.

- The proposed IACC calibration method can be further combined with classical stereo-DIC techniques, such as the Newton–Raphson (NR) and inverse compositional Gauss–Newton (ICGN) methods, to achieve full-field measurement of surface ROIs.

- Static measurement and 3D reconstruction experiments have shown that the proposed IACC method is feasible for stereo-DIC system calibration. Loading experiments are still needed to quantify how much the IACC calibration method improves measurement accuracy; this quantitative analysis and dynamic loading experiments are left for further research.
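The stereo-DIC step mentioned above matches image subsets between views or load states with methods such as ICGN. The sketch below shows the core ICGN iteration in its simplest form: a translation-only (zero-order) shape function, a plain SSD criterion, and bilinear interpolation, applied to a synthetic speckle image. These simplifications are assumptions for brevity; practical stereo-DIC codes typically use first-order shape functions, a ZNSSD criterion, and higher-order (e.g., bicubic) interpolation. The function names are hypothetical.

```python
import numpy as np

def bilinear(img, x, y):
    """Bilinear interpolation of img at float coordinates (x = column, y = row)."""
    x0 = np.clip(np.floor(x).astype(int), 0, img.shape[1] - 2)
    y0 = np.clip(np.floor(y).astype(int), 0, img.shape[0] - 2)
    dx, dy = x - x0, y - y0
    return (img[y0, x0] * (1 - dx) * (1 - dy) + img[y0, x0 + 1] * dx * (1 - dy)
            + img[y0 + 1, x0] * (1 - dx) * dy + img[y0 + 1, x0 + 1] * dx * dy)

def icgn_translation(ref, cur, cx, cy, half=15, p0=(0.0, 0.0), max_iter=50, tol=1e-6):
    """Translation-only ICGN: find p = (u, v) such that cur(x + p) matches ref(x)
    over a (2*half+1)^2 subset centered at (cx, cy), using an SSD criterion."""
    ys, xs = np.mgrid[cy - half:cy + half + 1, cx - half:cx + half + 1]
    f = ref[ys, xs]
    grad_y, grad_x = np.gradient(ref)
    J = np.column_stack([grad_x[ys, xs].ravel(), grad_y[ys, xs].ravel()])
    H_inv = np.linalg.inv(J.T @ J)   # reference-subset Hessian, computed only once
    p = np.array(p0, dtype=float)
    for _ in range(max_iter):
        g = bilinear(cur, xs + p[0], ys + p[1])
        dp = H_inv @ (J.T @ (g - f).ravel())
        p -= dp                      # inverse-compositional update; for a translation p <- p - dp
        if np.hypot(dp[0], dp[1]) < tol:
            break
    return p

# Synthetic speckle images: Gaussian spots, displaced by a known (u, v) in the current image.
rng = np.random.default_rng(3)
spots = rng.uniform(-5.0, 105.0, size=(400, 2))
yy, xx = np.mgrid[0:100, 0:100]

def speckle(shift):
    """Sum of Gaussian spots (sigma 2.5 px), all displaced by shift = (x, y)."""
    img = np.zeros((100, 100))
    for sx, sy in spots + shift:
        img += np.exp(-((xx - sx) ** 2 + (yy - sy) ** 2) / (2 * 2.5 ** 2))
    return img

ref = speckle(np.zeros(2))
cur = speckle(np.array([1.37, -0.62]))
p_est = icgn_translation(ref, cur, cx=50, cy=50, p0=(1.0, -1.0))  # integer-pixel initial guess
print(p_est)
```

Because the gradient and Hessian are taken from the undeformed reference subset, they are computed once rather than at every iteration. This is the main efficiency advantage of ICGN over forward-additive Newton–Raphson schemes.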

5.7. Analysis of the Calibration Efficiency of Both Monocular and Binocular Camera Systems

- Zhang’s method is the easiest to implement, but its calibration accuracy is not the best. It is therefore the best choice when there is no demand for very high-precision calibration and measurement.

- The proposed IACC method has the best calibration accuracy for both monocular and binocular calibration, with moderate implementation complexity. It is the preferred choice for high-precision calibration and measurement, especially when the camera system structure and the measurement position are fixed in advance. In special scenarios where the 2D target cannot change its posture across multiple viewing positions, the IACC method would be the best solution in terms of both accuracy and efficiency.

- The ACC method supplies accurate calibration parameters for monocular camera systems with good efficiency. However, it suits binocular camera systems in terms of neither accuracy nor efficiency.

- Tsai’s method has distinct defects in its mathematical model and is inefficient when a planar target is used in non-coplanar mode. Using a real 3D (stereo) target reduces the complexity of Tsai’s method, but the method is still unsuitable for high-precision calibration and measurement.

- The proposed algorithm in Appendix B works well with all four methods, offering high precision and good stability.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

Appendix C

References

- Guan, J.; Deboeverie, F.; Slembrouck, M.; van Haerenborgh, D.; van Cauwelaert, D.; Veelaert, P.; Philips, W. Extrinsic Calibration of Camera Networks Using a Sphere. Sensors 2015, 15, 18985–19005.

- Poulin-Girard, A.S.; Thibault, S.; Laurendeau, D. Influence of camera calibration conditions on the accuracy of 3D reconstruction. Opt. Express 2016, 24, 2678–2686.

- Chen, X.; Song, X.; Wu, J.; Xiao, Y.; Wang, Y.; Wang, Y. Camera calibration with global LBP-coded phase-shifting wedge grating arrays. Opt. Lasers Eng. 2021, 136, 106314.

- Abdel-Aziz, Y.I.; Karara, H.M. Direct Linear Transformation from Comparator Coordinates into Object Space Coordinates in Close-Range Photogrammetry. Photogramm. Eng. Remote Sens. 2015, 81, 103–107.

- Tsai, R. A versatile camera calibration technique for high-accuracy 3D machine vision metrology using off-the-shelf TV cameras and lenses. IEEE J. Robot. Autom. 1987, 3, 323–344.

- Shi, Z.C.; Shang, Y.; Zhang, X.F.; Wang, G. DLT-Lines Based Camera Calibration with Lens Radial and Tangential Distortion. Exp. Mech. 2021, 61, 1237–1247.

- Zhang, J.; Duan, F.; Ye, S. An Easy Accurate Calibration Technique for Camera. Chin. J. Sci. 1999, 20, 193–196.

- Zheng, H.; Duan, F.-j.; Fu, X.; Liu, C.; Li, T.; Yan, M. A Non-Coplanar High-Precision Calibration Method for Cameras Based on Affine Coordinate Correction Model. Meas. Sci. Technol. 2023, 34, 095018.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Zhu, Z.; Wang, X.; Liu, Q.; Zhang, F. Camera calibration method based on optimal polarization angle. Opt. Lasers Eng. 2019, 112, 128–135.

- Sels, S.; Ribbens, B.; Vanlanduit, S.; Penne, R. Camera Calibration Using Gray Code. Sensors 2019, 19, 246.

- Chen, B.; Pan, B. Camera calibration using synthetic random speckle pattern and digital image correlation. Opt. Lasers Eng. 2020, 126, 105919.

- Hartley, R.I. Estimation of Relative Camera Positions for Uncalibrated Cameras. In ECCV’92: Proceedings of the Second European Conference on Computer Vision, Santa Margherita Ligure, Italy, 19–22 May 1992; Sandini, G., Ed.; Springer: Berlin/Heidelberg, Germany, 1992; pp. 579–587.

- Maybank, S.J.; Faugeras, O.D. A theory of self-calibration of a moving camera. Int. J. Comput. Vis. 1992, 8, 123–151.

- Li, D.; Jia, T.; Wang, Y.; Chen, D.; Wu, C.; Wang, H.; Wu, Y.; Zhang, L. Structured Light Self-Calibration Algorithm Based on Random Speckle. In Proceedings of the 2019 IEEE 9th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Suzhou, China, 29 July–2 August 2019; pp. 1299–1304.

- Li, G.; Huang, X.; Li, S. A novel circular points-based self-calibration method for a camera’s intrinsic parameters using RANSAC. Meas. Sci. Technol. 2019, 30, 055005.

- Li, X.; Zhang, W.; Song, G. Calibration Method for Line-Structured Light Three-Dimensional Measurement Based on a Simple Target. Photonics 2022, 9, 218.

- Wei, Z.; Cao, L.; Zhang, G. A novel 1D target-based calibration method with unknown orientation for structured light vision sensor. Opt. Laser Technol. 2010, 42, 570–574.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An Efficient Alternative to SIFT or SURF. In Proceedings of the 2011 IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Pan, B. Digital image correlation for surface deformation measurement: Historical developments, recent advances and future goals. Meas. Sci. Technol. 2018, 29, 082001.

- Xu, J. Analyzing and Improving the Tsai Camera Calibration Method in Machine Vision. Comput. Eng. Sci. 2010, 32, 45–48+58.

- Tang, S.; Dong, Z.; Feng, W.; Li, Q.; Nie, L. Fast and Accuracy Camera Calibration Based on Tsai Two-Step Method. In Proceedings of the 2021 7th International Conference on Mechatronics and Robotics Engineering (ICMRE), Budapest, Hungary, 3–5 February 2021; pp. 190–194.

- Yin, Z.; Ren, X.; Du, Y.; Yuan, F.; He, X.; Yang, F. Binocular camera calibration based on timing correction. Appl. Opt. 2022, 61, 1475–1481.

- Cheng, Q.; Huang, P. Camera Calibration Based on Phase Estimation. IEEE Trans. Instrum. Meas. 2023, 72, 1–9.

- Chen, H.; Zhuang, J.; Liu, B.; Wang, L.; Zhang, L. Camera calibration method based on circular array calibration board. Syst. Sci. Control Eng. 2023, 11, 2233562.

- Wang, X.; Zhao, Y.; Yang, F. Camera calibration method based on Pascal’s theorem. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419846406.

- Dong, Q.C.; Wang, L.; Feng, J.Q. Confidence-based camera calibration with modified census transform. Multimed. Tools Appl. 2020, 79, 23093–23109.

- Zhang, J.; Yu, H.; Deng, H.; Chai, Z.; Ma, M.; Zhong, X. A Robust and Rapid Camera Calibration Method by One Captured Image. IEEE Trans. Instrum. Meas. 2019, 68, 4112–4121.

- Datta, A.; Kim, J.-S.; Kanade, T. Accurate Camera Calibration using Iterative Refinement of Control Points. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision Workshops, ICCV Workshops, Kyoto, Japan, 27 September–4 October 2009; IEEE: Kyoto, Japan, 2009; pp. 1201–1208.

- Hannemose, M.; Wilm, J.; Frisvad, J.R.; Bodermann, B.; Frenner, K.; Silver, R.M. Superaccurate Camera Calibration Via Inverse Rendering. In Modeling Aspects in Optical Metrology VII; SPIE: Munich, Germany, 2019.

- Sutton, M.A.; Orteu, J.-J.; Schreier, H. Image Correlation for Shape, Motion and Deformation Measurements. Basic Concepts, Theory and Applications; Springer Science & Business Media, LLC: New York, NY, USA, 2009.

- Ghorbani, R.; Matta, F.; Sutton, M.A. Full-Field Deformation Measurement and Crack Mapping on Confined Masonry Walls Using Digital Image Correlation. Exp. Mech. 2014, 55, 227–243.

- Genovese, K.; Chi, Y.; Pan, B. Stereo-camera calibration for large-scale DIC measurements with active phase targets and planar mirrors. Opt. Express 2019, 27, 9040–9053.

- Sutton, M.A.; Hild, F. Recent Advances and Perspectives in Digital Image Correlation. Exp. Mech. 2015, 55, 1–8.

- Pan, B.; Li, K.; Tong, W. Fast, Robust and Accurate Digital Image Correlation Calculation Without Redundant Computations. Exp. Mech. 2013, 53, 1277–1289.

- Turner, D. Digital Image Correlation Engine (DICe) Reference Manual, Sandia Report, SAND2015-10606 O. 2015. Available online: https://github.com/dicengine/dice (accessed on 11 August 2023).

- Liu, X.; Tian, J.; Kuang, H.; Ma, X. A Stereo Calibration Method of Multi-Camera Based on Circular Calibration Board. Electronics 2022, 11, 627.

- Wang, Y.; Liu, L.; Cai, B.; Wang, K.; Chen, X.; Wang, Y.; Tao, B. Stereo calibration with absolute phase target. Opt. Express 2019, 27, 22254–22267.

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Chang, Q.; Li, X.; Li, Y.; Miyazaki, J. Multi-directional Sobel operator kernel on GPUs. J. Parallel Distrib. Comput. 2023, 177, 160–170.

- Duan, F. Study on Fundamental Theories and Applied Technique of Computer Vision Inspection; Tianjin University: Tianjin, China, 1994.

| Method | Calibration Components and Procedure |
|---|---|
| Tsai’s method | Mathematical model; distortion coefficients; matrix of intrinsic parameters (known optic center); linear initial values solving (RAC constraints), 1st step and 2nd step; nonlinear optimization; nonlinear optimization (binocular camera system) |
| Zhang’s method | Mathematical model; matrix of intrinsic parameters; distortion coefficients; linear initial values solving; nonlinear optimization; nonlinear optimization (binocular camera system) |
| ACC method | Mathematical model; distortion coefficients; matrix of intrinsic parameters (known optic center); linear initial value solving; nonlinear optimization; final parameters separation |
| ACC method (extrinsic parameters calibration only) | Mathematical model; calibrated distortion coefficients; calibrated intrinsic parameters (known optic centers); linear initial value solving; penalty constraints construction; nonlinear optimization; final parameters separation |
| Present improved-ACC (IACC) method | Mathematical model; distortion coefficients; matrix of intrinsic parameters (optic center initialized as image center); linear initial value solving; nonlinear optimization; nonlinear optimization (binocular camera system) |

| Pattern Type | Number of Columns | Number of Rows | Clearance of Feature Points |
|---|---|---|---|
| Chessboard | 11 | 8 | 10 mm ± 1 μm ¹ |
| Symmetric Circles | 9 | 7 | 15 mm ± 1 μm ¹ |

| Data Sets | Number of Feature Point-Pairs | Applied Methods | Type |
|---|---|---|---|
| Single_L_Chessboard_Laser_V20 | 1760 | Tsai’s Method, ACC and IACC Method | Non-Coplanar |
| Single_R_Chessboard_Laser_V20 | 1760 | Tsai’s Method, ACC and IACC Method | Non-Coplanar |
| Single_L_Chessboard_ZZY | 1760 | Zhang’s Method | Coplanar |
| Single_R_Chessboard_ZZY | 1760 | Zhang’s Method | Coplanar |

| Pattern Type (Extraction Algorithm) | Chessboard (findChessboardCorners) | | | |
|---|---|---|---|---|
| Camera | Single_L Camera | | | |
| Methods | Tsai’s | ACC Method | Zhang’s | IACC Method |
| (fx, fy) | (2251.457, 2251.750) | (2252.358, 2252.167) | (2254.887, 2255.056) | (2254.071, 2254.118) |
| (U0, V0) | (640, 512) | (650, 556) | (647.79, 493.60) | (639.54, 487.16) |
| (k1, k2) | (1.23 × 10⁻⁸, −9.02 × 10⁻¹⁵) | (1.16 × 10⁻⁸, −7.38 × 10⁻¹⁵) | (−6.47 × 10⁻², 2.70 × 10⁻¹) | (−7.29 × 10⁻², 4.34 × 10⁻¹) |
| (p1, p2) | - | (−2.62 × 10⁻⁷, 2.63 × 10⁻⁹) | (1.17 × 10⁻³, 5.35 × 10⁻⁴) | (1.40 × 10⁻³, 7.26 × 10⁻⁴) |
| Reprojection Error * (Unit: Pix) | 0.641 | 0.084 | 0.177 | 0.074 |
| Reprojection Error * (Unit: mm) | 0.059 | 0.008 | 0.019 | 0.007 |
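The intrinsic parameters reported in these tables, (fx, fy), (U0, V0), (k1, k2), and (p1, p2), enter a pinhole model with radial and tangential (Brown–Conrady) distortion. The reprojection error is the RMS pixel distance between observed and reprojected feature points. The sketch below assumes that distortion is applied to normalized image coordinates, as in OpenCV’s model. The magnitudes of the Zhang’s and IACC coefficients are consistent with that convention, whereas the Tsai’s and ACC coefficients (on the order of 10⁻⁸ and 10⁻¹⁵) appear to be defined on pixel-scale radii and would not plug in directly. The board pose, the noise level, and the function names are hypothetical.

```python
import numpy as np

def project_points(X_world, R, t, fx, fy, u0, v0, k1, k2, p1, p2):
    """Project Nx3 world points to pixels: pinhole model + radial/tangential distortion."""
    Xc = X_world @ R.T + t                 # world -> camera frame
    x = Xc[:, 0] / Xc[:, 2]                # normalized image coordinates
    y = Xc[:, 1] / Xc[:, 2]
    r2 = x ** 2 + y ** 2
    radial = 1 + k1 * r2 + k2 * r2 ** 2
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x ** 2)
    yd = y * radial + p1 * (r2 + 2 * y ** 2) + 2 * p2 * x * y
    return np.column_stack([fx * xd + u0, fy * yd + v0])

def rms_reprojection_error(observed_px, projected_px):
    """Root-mean-square Euclidean distance (pixels) between observed and reprojected points."""
    return np.sqrt(np.mean(np.sum((observed_px - projected_px) ** 2, axis=1)))

# Hypothetical 11 x 8 chessboard (10 mm pitch) placed 500 mm in front of the camera.
gx, gy = np.meshgrid(np.arange(11) * 10.0, np.arange(8) * 10.0)
board = np.column_stack([gx.ravel() - 50.0, gy.ravel() - 35.0, np.zeros(gx.size)])
R, t = np.eye(3), np.array([0.0, 0.0, 500.0])
# IACC parameters of the Single_L camera (chessboard table).
params = dict(fx=2254.071, fy=2254.118, u0=639.54, v0=487.16,
              k1=-7.29e-2, k2=4.34e-1, p1=1.40e-3, p2=7.26e-4)
ideal = project_points(board, R, t, **params)
rng = np.random.default_rng(0)
observed = ideal + rng.normal(scale=0.05, size=ideal.shape)   # simulated 0.05 px noise per axis
print(rms_reprojection_error(observed, ideal))
```

With independent 0.05 px noise on each axis, the RMS distance is expected to be close to 0.05·√2 ≈ 0.07 px, which gives a sense of scale for the sub-0.1 px reprojection errors in the tables.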

| Pattern Type (Extraction Algorithm) | Chessboard (findChessboardCorners) | | | |
|---|---|---|---|---|
| Camera | Single_R Camera | | | |
| Methods | Tsai’s | ACC Method | Zhang’s | IACC Method |
| (fx, fy) | (2252.373, 2252.480) | (2246.971, 2246.647) | (2247.280, 2247.239) | (2246.966, 2246.797) |
| (U0, V0) | (640, 512) | (689, 514) | (674.23, 505.23) | (675.70, 511.10) |
| (k1, k2) | (1.34 × 10⁻⁸, −3.51 × 10⁻¹⁵) | (1.55 × 10⁻⁸, −8.62 × 10⁻¹⁵) | (−7.39 × 10⁻², 1.95 × 10⁻¹) | (−6.94 × 10⁻², 2.85 × 10⁻²) |
| (p1, p2) | - | (−5.95 × 10⁻⁷, 3.66 × 10⁻⁸) | (1.52 × 10⁻⁴, 1.48 × 10⁻³) | (−1.52 × 10⁻⁵, 1.45 × 10⁻³) |
| Reprojection Error * (Unit: Pix) | 0.539 | 0.070 | 0.098 | 0.066 |
| Reprojection Error * (Unit: mm) | 0.049 | 0.006 | 0.010 | 0.006 |

| Pattern Type (Extraction Algorithm) | Symmetric Circle Pattern (findCirclesGrid) | | | |
|---|---|---|---|---|
| Camera | Single_L Camera | | | |
| Methods | Tsai’s | Zhang’s | ACC Method | IACC Method |
| (fx, fy) | (2258.761, 2258.832) | (2258.761, 2258.832) | (2253.180, 2253.028) | (2254.551, 2254.486) |
| (U0, V0) | (640, 512) | (646.36, 493.89) | (646, 541) | (639.47, 478.33) |
| (k1, k2) | (1.17 × 10⁻⁸, −8.91 × 10⁻¹⁵) | (−5.75 × 10⁻², 2.39 × 10⁻¹) | (1.10 × 10⁻⁸, −7.60 × 10⁻¹⁵) | (−6.61 × 10⁻², 3.38 × 10⁻¹) |
| (p1, p2) | - | (1.32 × 10⁻³, 4.50 × 10⁻⁴) | (−2.53 × 10⁻⁷, −7.23 × 10⁻⁹) | (1.42 × 10⁻³, 6.62 × 10⁻⁴) |
| Reprojection Error * (Unit: Pix) | 0.629 | 0.089 | 0.070 | 0.024 |
| Reprojection Error * (Unit: mm) | 0.064 | 0.011 | 0.007 | 0.002 |

| Pattern Type (Extraction Algorithm) | Symmetric Circle Pattern (findCirclesGrid) | | | |
|---|---|---|---|---|
| Camera | Single_R Camera | | | |
| Methods | Tsai’s | Zhang’s | ACC Method | IACC Method |
| (fx, fy) | (2232.061, 2231.872) | (2245.017, 2245.000) | (2244.275, 2244.229) | (2244.373, 2244.342) |
| (U0, V0) | (640, 512) | (670.80, 503.99) | (659, 494) | (664.59, 491.88) |
| (k1, k2) | (8.60 × 10⁻⁹, 3.95 × 10⁻¹⁵) | (−5.62 × 10⁻², 2.03 × 10⁻¹) | (1.53 × 10⁻⁸, −1.06 × 10⁻¹⁴) | (−8.26 × 10⁻², 3.93 × 10⁻¹) |
| (p1, p2) | - | (1.41 × 10⁻⁴, 1.42 × 10⁻³) | (−7.54 × 10⁻⁷, −5.65 × 10⁻⁹) | (5.22 × 10⁻⁵, 1.58 × 10⁻³) |
| Reprojection Error * (Unit: Pix) | 1.034 | 0.044 | 0.042 | 0.041 |
| Reprojection Error * (Unit: mm) | 0.109 | 0.005 | 0.004 | 0.004 |

| Methods | Zhang’s | | Present IACC Method | |
|---|---|---|---|---|
| Feature Extraction Algorithm | findCirclesGrid | New Method in Appendix B | findCirclesGrid | New Method in Appendix B |
| (fx, fy) | (2258.761, 2258.832) | (2257.609, 2257.477) | (2254.551, 2254.486) | (2254.686, 2254.609) |
| (U0, V0) | (646.36, 493.89) | (647.60, 493.95) | (639.472, 478.326) | (639.152, 480.773) |
| (k1, k2) | (−5.75 × 10⁻², 2.39 × 10⁻¹) | (−5.67 × 10⁻², 2.37 × 10⁻¹) | (−6.61 × 10⁻², 3.38 × 10⁻¹) | (−6.14 × 10⁻², 2.72 × 10⁻¹) |
| (p1, p2) | (1.32 × 10⁻³, 4.50 × 10⁻⁴) | (1.26 × 10⁻³, 6.62 × 10⁻⁴) | (1.42 × 10⁻³, 6.62 × 10⁻⁴) | (1.45 × 10⁻³, 6.57 × 10⁻⁴) |
| Reprojection Error * (Unit: Pix) | 0.089 | 0.035 | 0.024 | 0.019 |
| Reprojection Error * (Unit: mm) | 0.011 | 0.004 | 0.002 | 0.002 |

| Methods | Zhang’s | | Present IACC Method | |
|---|---|---|---|---|
| Feature Extraction Algorithm | findCirclesGrid | New Method in Appendix B | findCirclesGrid | New Method in Appendix B |
| (fx, fy) | (2245.017, 2245.000) | (2258.622, 2258.425) | (2244.373, 2244.342) | (2243.868, 2243.764) |
| (U0, V0) | (670.80, 503.99) | (671.442, 504.214) | (664.590, 491.874) | (663.304, 501.017) |
| (k1, k2) | (−5.62 × 10⁻², 2.03 × 10⁻¹) | (−5.78 × 10⁻², 2.34 × 10⁻¹) | (−8.26 × 10⁻², 3.93 × 10⁻¹) | (−7.11 × 10⁻², 1.99 × 10⁻¹) |
| (p1, p2) | (1.41 × 10⁻⁴, 1.42 × 10⁻³) | (1.59 × 10⁻⁴, 1.54 × 10⁻⁴) | (5.22 × 10⁻⁵, 1.58 × 10⁻³) | (5.82 × 10⁻⁵, 1.59 × 10⁻³) |
| Reprojection Error * (Unit: Pix) | 0.044 | 0.033 | 0.041 | 0.028 |
| Reprojection Error * (Unit: mm) | 0.005 | 0.004 | 0.004 | 0.003 |

| Pattern Type | Symmetric Circle Pattern (Present New Algorithm in Appendix B) | | | | |
|---|---|---|---|---|---|
| Number of Image Pairs | 5 | | | | |
| Methods | ACC Method | Zhang’s Method | | | |
| Type | Non-coplanar | Coplanar | | | |
| Ka | Kb | | | | |
| Da | Db | | | | |
| β | ηx | −3.133 × 10⁻³ | - | | |
| Ra_b_Mat3×3 | | | | | |
| Ta_b | | | | | |
| Reprojection Error * | 0.594 Pixels | 0.055 Pixels | | | |
| Methods | Present IACC Method | | | | |
| Type | Non-coplanar | | | | |
| Ka | Kb | | | | |
| Da | Db | | | | |
| β | | | | | |
| Ra_b_Mat3×3 | | | | | |
| Ta_b | | | | | |
| Reprojection Error * | 0.032 Pixels | | | | |
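In the binocular results, Ra_b_Mat3×3 and Ta_b describe the rigid transformation between the two cameras. If each camera’s extrinsic parameters for the same target pose are known, X_a = R_a X_w + T_a and X_b = R_b X_w + T_b, the relative pose follows in closed form as R_ab = R_b R_aᵀ and T_ab = T_b − R_ab T_a. The sketch assumes that Ra_b maps camera-a coordinates into camera-b coordinates (the paper’s convention may be the reverse), and the rig geometry and function names are hypothetical. In a full binocular calibration such a closed-form estimate would typically serve only as an initial value for the joint nonlinear optimization.

```python
import numpy as np

def rot_y(deg):
    """Rotation matrix about the camera y axis (angle in degrees)."""
    a = np.radians(deg)
    return np.array([[np.cos(a), 0.0, np.sin(a)],
                     [0.0, 1.0, 0.0],
                     [-np.sin(a), 0.0, np.cos(a)]])

def relative_pose(R_a, T_a, R_b, T_b):
    """Pose of camera b relative to camera a from their extrinsics for one target pose.

    With X_a = R_a X_w + T_a and X_b = R_b X_w + T_b, it follows that
    X_b = R_ab X_a + T_ab, where R_ab = R_b R_a^T and T_ab = T_b - R_ab T_a.
    """
    R_ab = R_b @ R_a.T
    return R_ab, T_b - R_ab @ T_a

# Hypothetical converging rig viewing the same target pose (units: mm).
R_a, T_a = rot_y(10.0), np.array([-50.0, 0.0, 600.0])
R_b, T_b = rot_y(-10.0), np.array([50.0, 0.0, 600.0])
R_ab, T_ab = relative_pose(R_a, T_a, R_b, T_b)

X_w = np.array([12.0, -8.0, 30.0])       # a target point in world coordinates
X_a, X_b = R_a @ X_w + T_a, R_b @ X_w + T_b
print(R_ab @ X_a + T_ab - X_b)           # ~0: camera-a coordinates mapped to camera-b coordinates
```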

| Clearance Type | Horizontal Point-Pair Clearance Error Data | | | Vertical Point-Pair Clearance Error Data | | |
|---|---|---|---|---|---|---|
| Number of Measured Clearances | 280 | | | 270 | | |
| Real Clearance Value | 15 mm ± 1 μm | | | 15 mm ± 1 μm | | |
| Calibration Methods | Zhang’s | ACC | IACC | Zhang’s | ACC | IACC |
| Reprojection Error of Calibration | 0.055 Pixels (Non-Measured Position) | 0.594 Pixels (Measured Position) | 0.032 Pixels (Measured Position) | 0.055 Pixels (Non-Measured Position) | 0.594 Pixels (Measured Position) | 0.032 Pixels (Measured Position) |
| Measured Average Value (Unit: mm) | 14.9510 | 14.9952 | 15.0003 | 14.9488 | 15.0139 | 15.0001 |
| Abs of Average Error (Unit: mm) | 0.0490 | 0.0048 | 0.0003 | 0.0512 | 0.0139 | 0.0001 |
| Root Mean Square Error * (Unit: mm) | 0.0520 | 0.0211 | 0.0026 | 0.0521 | 0.0300 | 0.0018 |
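The clearance statistics in these tables can be computed from reconstructed circle centers in a few lines. Here each measured clearance is taken as the Euclidean distance between two neighboring reconstructed centers. The table rows then correspond to the mean, the absolute error of the mean relative to the nominal 15 mm, and (an assumption, since the starred definition is not given in this section) the RMS deviation from 15 mm. A 9 × 7 grid yields 56 horizontal and 54 vertical neighbor pairs per view, so the 280 and 270 clearances would correspond to five measured views; this is an inference, not a statement from the tables. The grid, the noise level, and the function name below are synthetic or hypothetical.

```python
import numpy as np

def clearance_stats(points_a, points_b, nominal_mm=15.0):
    """Mean, |mean - nominal|, and RMSE vs nominal of the distances between paired 3D points."""
    d = np.linalg.norm(points_a - points_b, axis=1)
    mean = d.mean()
    return mean, abs(mean - nominal_mm), np.sqrt(np.mean((d - nominal_mm) ** 2))

# Synthetic 9 x 7 circle grid (15 mm pitch) reconstructed with 2 um isotropic noise.
rng = np.random.default_rng(1)
gx, gy = np.meshgrid(np.arange(9) * 15.0, np.arange(7) * 15.0)   # shape (7, 9)
grid = np.stack([gx, gy, np.full(gx.shape, 400.0)], axis=-1)      # shape (7, 9, 3)
grid += rng.normal(scale=0.002, size=grid.shape)
horizontal = clearance_stats(grid[:, :-1].reshape(-1, 3), grid[:, 1:].reshape(-1, 3))  # 56 pairs
vertical = clearance_stats(grid[:-1, :].reshape(-1, 3), grid[1:, :].reshape(-1, 3))    # 54 pairs
print(horizontal, vertical)
```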

| Clearance Type | Horizontal Point-Pair Clearance Error Data | | | Vertical Point-Pair Clearance Error Data | | |
|---|---|---|---|---|---|---|
| Number of Measured Clearances | 280 | | | 270 | | |
| Real Clearance Value | 15 mm ± 1 μm | | | 15 mm ± 1 μm | | |
| Calibration Methods | Zhang’s | ACC | IACC | Zhang’s | ACC | IACC |
| Reprojection Error of Calibration | 0.055 Pixels (Measured Position) | 0.594 Pixels (Non-Measured Position) | 0.032 Pixels (Non-Measured Position) | 0.055 Pixels (Measured Position) | 0.594 Pixels (Non-Measured Position) | 0.032 Pixels (Non-Measured Position) |
| Measured Average Value (Unit: mm) | 15.0076 | 15.0508 | 15.0569 | 15.0077 | 15.0694 | 15.0521 |
| Abs of Average Error (Unit: mm) | 0.0076 | 0.0508 | 0.0569 | 0.0077 | 0.0694 | 0.0521 |
| Root Mean Square Error * (Unit: mm) | 0.0217 | 0.0575 | 0.0591 | 0.0173 | 0.0770 | 0.0544 |

| Calibration Parameters from IACC Method | Fitting Results | | r | Number of Points | RMSE |
|---|---|---|---|---|---|
| Cylinder #1 | (77.168, −1.588, 246.656) | (−0.003, 1.000, −0.001) | 76.369 | 150,000 | 0.0065 |
| Cylinder #2 | (65.792, 0.095, 290.116) | (0.009, 1.000, −0.007) | 103.510 | 100,000 | 0.0099 |
| Cylinder #3 | (52.377, −14.842, 302.689) | (0.077, 2.692, −0.076) | 124.870 | 150,000 | 0.0063 |
| Calibration Parameters from Zhang’s Method | Fitting Results | | r | Number of Points | RMSE |
| Cylinder #1 | (75.656, 1.517, 246.340) | (−0.003, 1.000, −0.001) | 76.704 | 150,000 | 0.0066 |
| Cylinder #2 | (64.074, 0.198, 289.784) | (0.010, 1.000, −0.007) | 103.980 | 100,000 | 0.0100 |
| Cylinder #3 | (50.785, −8.227, 302.300) | (0.029, 1.000, −0.028) | 125.469 | 150,000 | 0.0062 |
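Each cylinder row reports two fitted vectors (presumably a point on the axis and the axis direction), the radius r, the number of reconstructed points, and an RMSE. A natural residual for such a fit, assumed here, is each point’s distance from the axis minus r. The sketch below evaluates that RMSE for a given axis on a synthetic partial cylinder; it does not perform the fit itself or use the paper’s data, and the function name is hypothetical.

```python
import numpy as np

def cylinder_rmse(points, axis_point, axis_dir, radius):
    """RMSE of radial residuals: distance of each point from the axis line minus the radius."""
    u = axis_dir / np.linalg.norm(axis_dir)    # normalize in case the direction is not unit length
    v = points - axis_point
    radial = v - np.outer(v @ u, u)            # component perpendicular to the axis
    residuals = np.linalg.norm(radial, axis=1) - radius
    return np.sqrt(np.mean(residuals ** 2))

# Synthetic cylinder (r = 76 mm, axis along y) with 0.006 mm radial noise.
rng = np.random.default_rng(2)
theta = rng.uniform(-0.5, 0.5, 5000)           # partial arc, as seen by a stereo rig
height = rng.uniform(-40.0, 40.0, 5000)
r_noisy = 76.0 + rng.normal(scale=0.006, size=5000)
pts = np.column_stack([r_noisy * np.sin(theta), height, r_noisy * np.cos(theta)])
print(cylinder_rmse(pts, np.zeros(3), np.array([0.0, 1.0, 0.0]), 76.0))
```

Normalizing the direction inside the function means that a non-unit direction vector, such as the one reported for IACC Cylinder #3, gives the same residuals as its unit-length counterpart.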

| Methods | Tsai | | Zhang | | ACC | | IACC | |
|---|---|---|---|---|---|---|---|---|
| Calibration Mode | Monocular | Binocular | Monocular | Binocular | Monocular | Binocular | Monocular | Binocular |
| Complexity of Algorithm | 5.04 × 10⁵ | 1.008 × 10⁶ | 1.7388 × 10⁷ | 3.477 × 10⁷ | 3.780 × 10⁶ | 1.5120 × 10⁷ | 4.284 × 10⁶ | 8.568 × 10⁶ |
| Complexity of Implementation | 3 × 10⁸ | 3 × 10⁸ | 1 × 10⁸ | 1 × 10⁸ | 1.5 × 10⁸ | 3 × 10⁸ | 1.5 × 10⁸ | 1.5 × 10⁸ |
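The algorithm-complexity row can also be read as a scaling factor from monocular to binocular calibration. The short script below reuses only the tabulated numbers; the dictionary and function names are hypothetical, and the units of the complexity figures are as defined in the paper. Going from monocular to binocular calibration roughly doubles the figure for Tsai’s, Zhang’s, and the IACC method but quadruples it for the ACC method, which is consistent with the observation that ACC is inefficient for binocular systems.

```python
# Algorithm-complexity figures copied from the efficiency table: (monocular, binocular).
COMPLEXITY = {
    "Tsai": (5.04e5, 1.008e6),
    "Zhang": (1.7388e7, 3.477e7),
    "ACC": (3.780e6, 1.5120e7),
    "IACC": (4.284e6, 8.568e6),
}

def binocular_scaling(table):
    """Ratio of binocular to monocular algorithm complexity for each method."""
    return {method: bino / mono for method, (mono, bino) in table.items()}

for method, ratio in binocular_scaling(COMPLEXITY).items():
    print(f"{method:>5}: binocular / monocular = {ratio:.2f}")
```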

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, H.; Duan, F.; Li, T.; Li, J.; Niu, G.; Cheng, Z.; Li, X. A Stable, Efficient, and High-Precision Non-Coplanar Calibration Method: Applied for Multi-Camera-Based Stereo Vision Measurements. Sensors 2023, 23, 8466. https://doi.org/10.3390/s23208466
