Swin Transformer-Based Edge Guidance Network for RGB-D Salient Object Detection

Abstract

1. Introduction

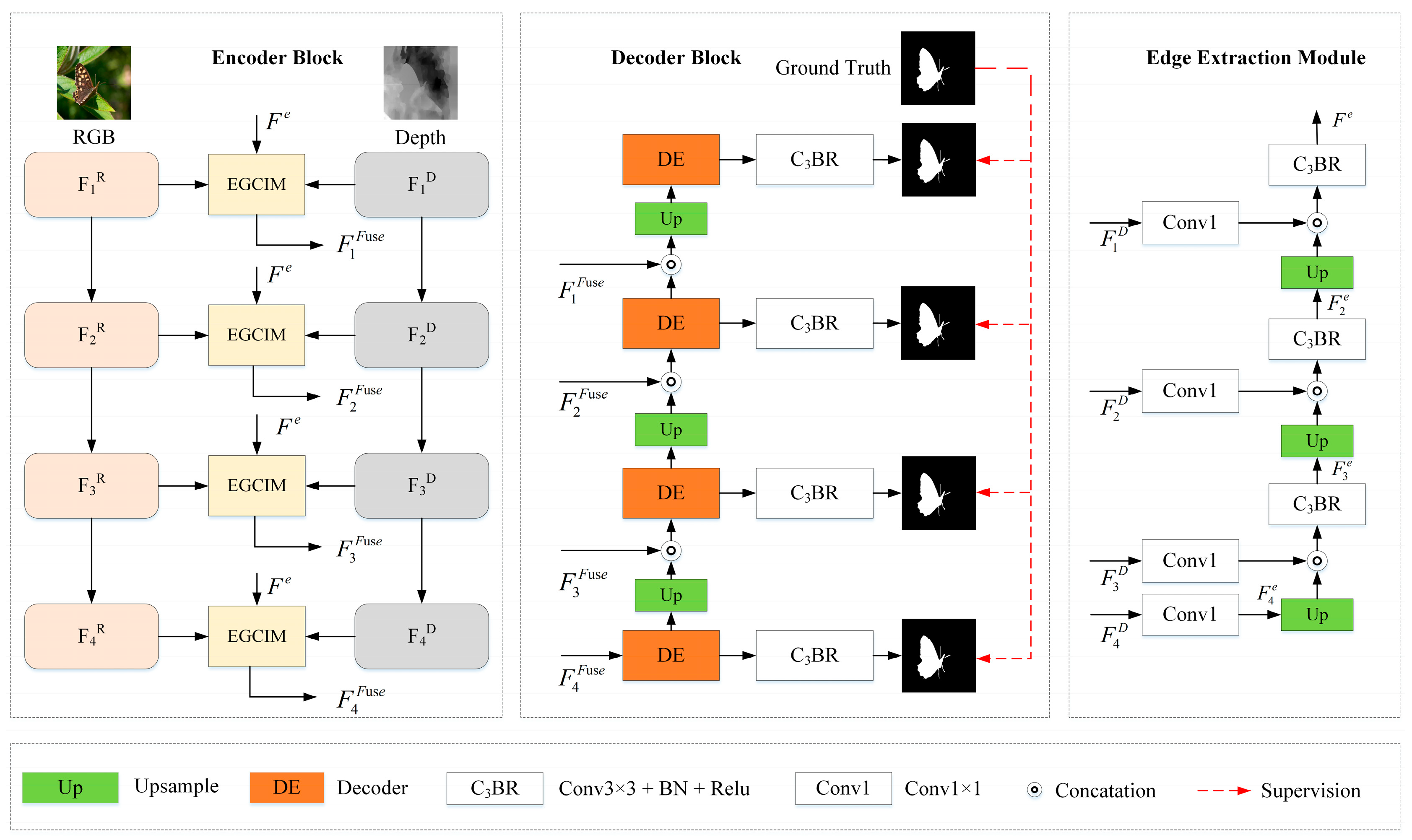

- A novel edge extraction module (EEM) is proposed to generate edge features from the depth features.

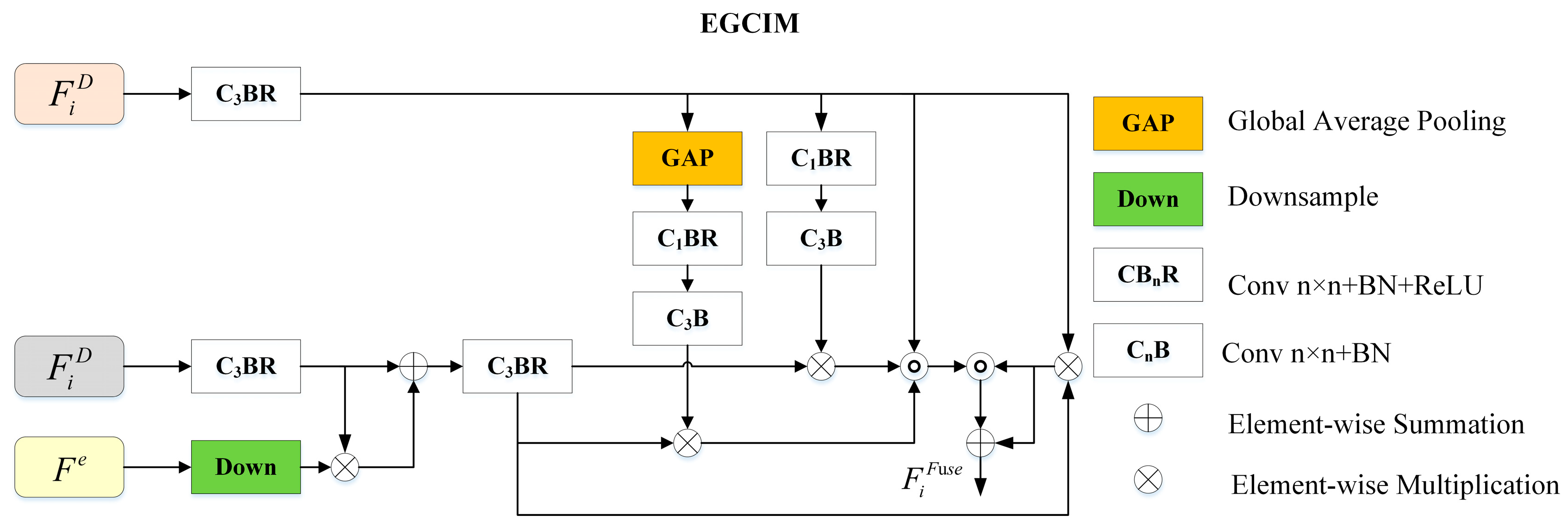

- A newly designed edge-guided cross-modal interaction module (EGCIM) integrates cross-modal features: a depth enhancement module (DEM) enhances the depth features, and a cross-modal interaction module (CIM) encourages cross-modal interaction from both global and local aspects.

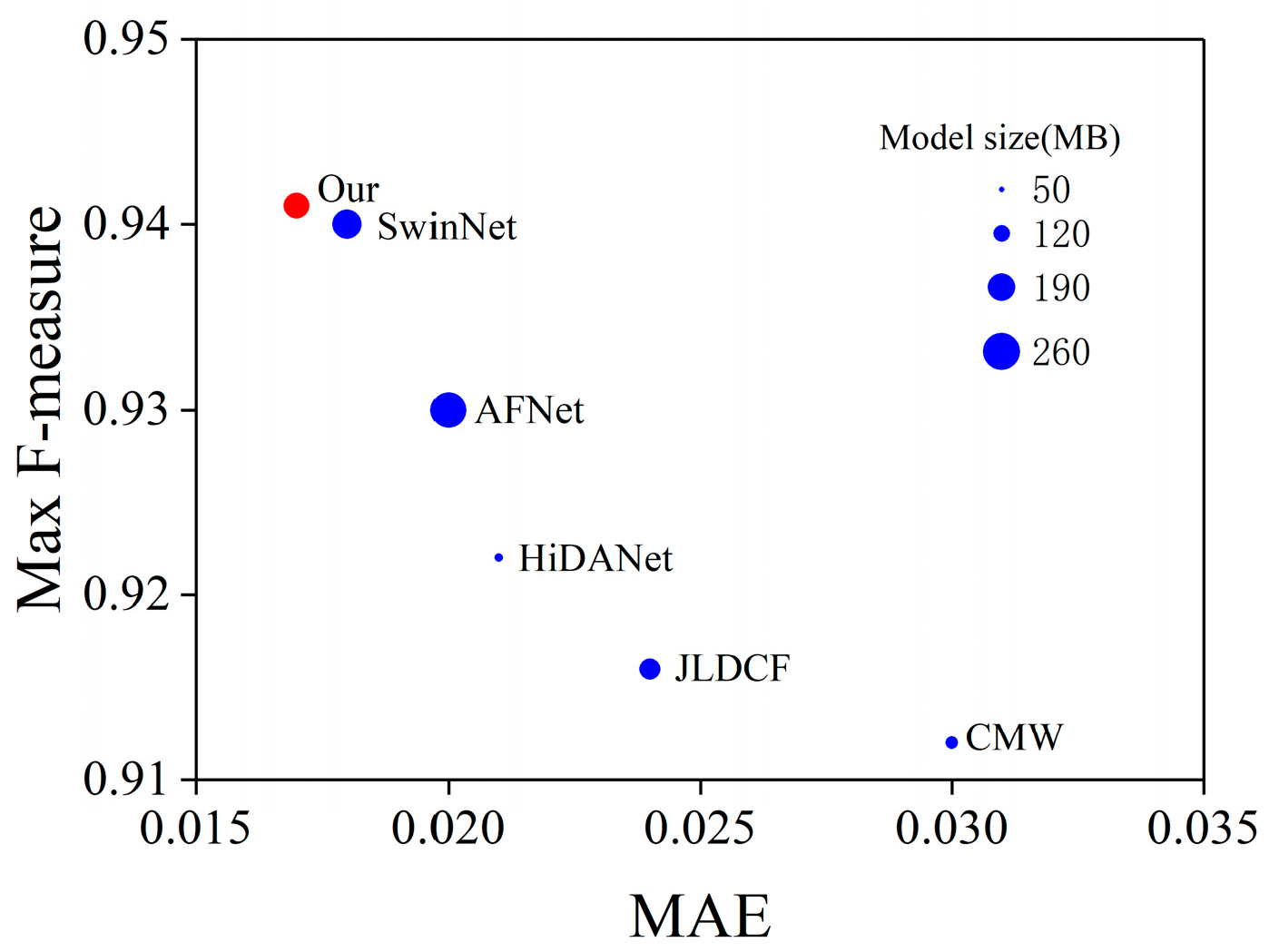

- A novel Swin Transformer-based edge guidance network (SwinEGNet) for RGB-D SOD is proposed. SwinEGNet was evaluated with four evaluation metrics and compared with 14 state-of-the-art (SOTA) RGB-D SOD methods on six public datasets. Our model achieved better performance than SwinNet with fewer parameters and FLOPs, as shown in Figure 1. A comprehensive ablation experiment was also conducted to verify the effectiveness of the proposed modules. The experimental results demonstrate the strong performance of the proposed method.

2. Related Work

3. Methodologies

3.1. The Overall Architecture

3.2. Feature Encoder

3.3. Edge Extraction Module

3.4. Edge-Guided Cross-modal Interaction Module

3.5. Cascaded Decoder

3.6. Loss Function

4. Experiments

4.1. Datasets and Evaluation Metrics

4.2. Implementation Details

4.3. Comparison with SOTAs

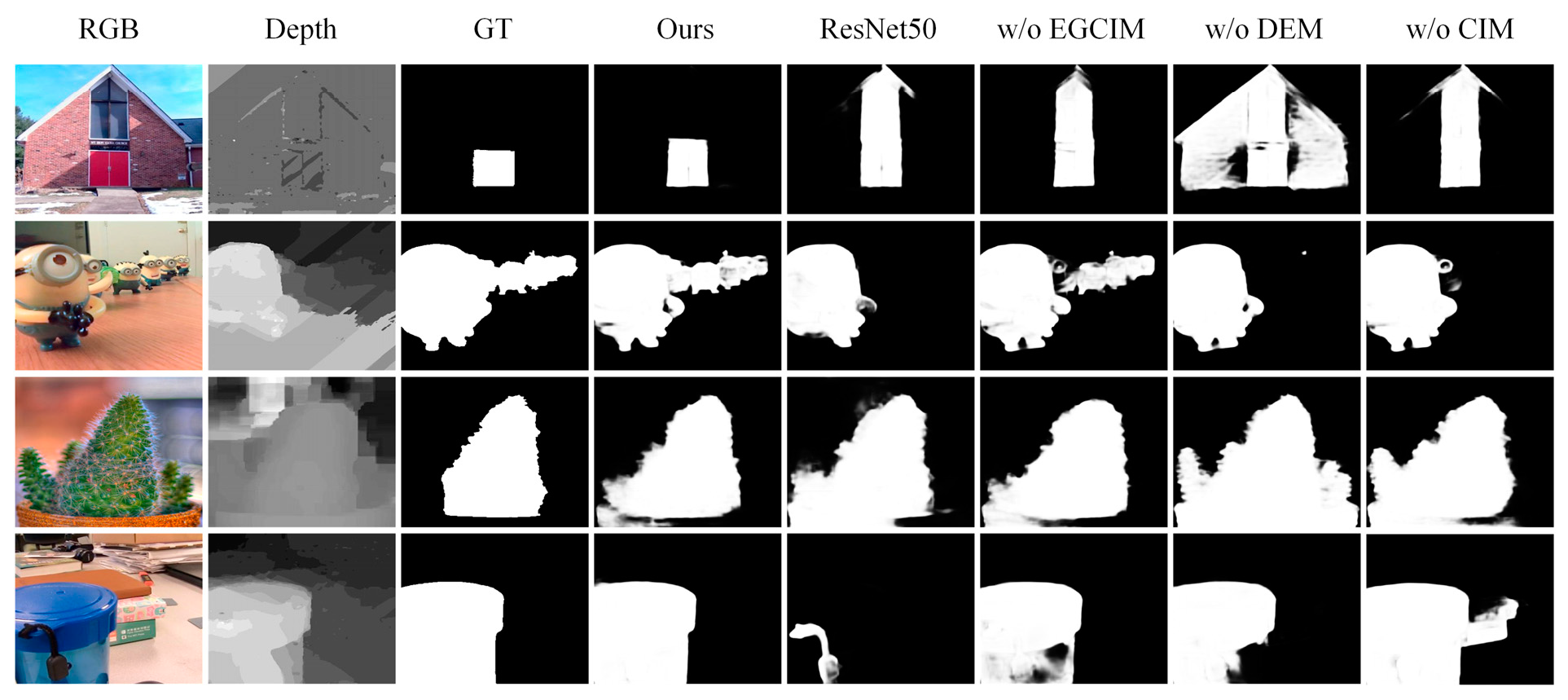

4.4. Ablation Study

4.5. Complexity Analysis

4.6. Failure Cases

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dataset | Metric | CMW | JLDCF | HINet | HAINet | DSA2F | CFIDNet | C2DFNet | SPSNet | AFNet | HiDANet | MTFormer | VST | TANet | SwinNet | Ours |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LFSD | Sm↑ | 0.876 | 0.854 | 0.852 | 0.854 | 0.882 | 0.869 | 0.863 | - | 0.89 | - | 0.872 | 0.89 | 0.875 | 0.886 | 0.893 |
| LFSD | Fm↑ | 0.899 | 0.862 | 0.872 | 0.877 | 0.903 | 0.883 | 0.89 | - | 0.9 | - | 0.879 | 0.903 | 0.892 | 0.903 | 0.909 |
| LFSD | Em↑ | 0.901 | 0.893 | 0.88 | 0.882 | 0.920 | 0.897 | 0.899 | - | 0.917 | - | 0.911 | 0.918 | - | 0.914 | 0.925 |
| LFSD | M↓ | 0.067 | 0.078 | 0.076 | 0.08 | 0.054 | 0.07 | 0.065 | - | 0.056 | - | 0.062 | 0.054 | 0.059 | 0.059 | 0.052 |
| NLPR | Sm↑ | 0.917 | 0.925 | 0.922 | 0.924 | 0.918 | 0.922 | 0.928 | 0.923 | 0.936 | 0.93 | 0.932 | 0.931 | 0.935 | 0.941 | 0.941 |
| NLPR | Fm↑ | 0.912 | 0.916 | 0.915 | 0.922 | 0.917 | 0.914 | 0.926 | 0.918 | 0.93 | 0.929 | 0.925 | 0.927 | 0.943 | 0.94 | 0.941 |
| NLPR | Em↑ | 0.94 | 0.962 | 0.949 | 0.956 | 0.95 | 0.95 | 0.957 | 0.956 | 0.961 | 0.961 | 0.965 | 0.954 | - | 0.968 | 0.969 |
| NLPR | M↓ | 0.03 | 0.022 | 0.026 | 0.024 | 0.024 | 0.026 | 0.021 | 0.024 | 0.02 | 0.021 | 0.021 | 0.024 | 0.018 | 0.018 | 0.017 |
| NJU2K | Sm↑ | 0.903 | 0.903 | 0.915 | 0.912 | 0.904 | 0.914 | 0.908 | 0.918 | 0.926 | 0.926 | 0.922 | 0.922 | 0.927 | 0.935 | 0.931 |
| NJU2K | Fm↑ | 0.913 | 0.903 | 0.925 | 0.925 | 0.916 | 0.923 | 0.918 | 0.927 | 0.933 | 0.939 | 0.923 | 0.926 | 0.941 | 0.943 | 0.938 |
| NJU2K | Em↑ | 0.925 | 0.944 | 0.936 | 0.94 | 0.935 | 0.938 | 0.937 | 0.949 | 0.95 | 0.954 | 0.954 | 0.942 | - | 0.957 | 0.958 |
| NJU2K | M↓ | 0.046 | 0.043 | 0.038 | 0.038 | 0.039 | 0.038 | 0.039 | 0.033 | 0.032 | 0.029 | 0.032 | 0.036 | 0.027 | 0.027 | 0.026 |
| STEREO | Sm↑ | 0.913 | 0.903 | 0.892 | 0.915 | 0.898 | 0.91 | 0.911 | 0.914 | 0.918 | 0.911 | 0.908 | 0.913 | 0.923 | 0.919 | 0.919 |
| STEREO | Fm↑ | 0.909 | 0.903 | 0.897 | 0.914 | 0.91 | 0.906 | 0.91 | 0.908 | 0.923 | 0.921 | 0.908 | 0.915 | 0.934 | 0.926 | 0.926 |
| STEREO | Em↑ | 0.93 | 0.944 | 0.92 | 0.938 | 0.939 | 0.935 | 0.938 | 0.941 | 0.949 | 0.946 | 0.947 | 0.939 | - | 0.947 | 0.951 |
| STEREO | M↓ | 0.042 | 0.043 | 0.048 | 0.039 | 0.039 | 0.042 | 0.037 | 0.035 | 0.034 | 0.035 | 0.038 | 0.038 | 0.027 | 0.033 | 0.031 |
| DES | Sm↑ | 0.937 | 0.929 | 0.927 | 0.939 | 0.917 | 0.92 | 0.924 | 0.94 | 0.925 | 0.946 | - | 0.946 | - | 0.945 | 0.947 |
| DES | Fm↑ | 0.943 | 0.919 | 0.937 | 0.949 | 0.929 | 0.937 | 0.937 | 0.944 | 0.938 | 0.952 | - | 0.949 | - | 0.952 | 0.956 |
| DES | Em↑ | 0.961 | 0.968 | 0.953 | 0.971 | 0.955 | 0.938 | 0.953 | 0.974 | 0.946 | 0.98 | - | 0.971 | - | 0.973 | 0.98 |
| DES | M↓ | 0.021 | 0.022 | 0.022 | 0.017 | 0.023 | 0.022 | 0.018 | 0.015 | 0.022 | 0.013 | - | 0.017 | - | 0.016 | 0.014 |
| SIP | Sm↑ | 0.867 | 0.879 | 0.856 | 0.879 | 0.861 | 0.881 | 0.871 | 0.892 | 0.896 | 0.892 | 0.894 | 0.903 | 0.893 | 0.911 | 0.9 |
| SIP | Fm↑ | 0.889 | 0.885 | 0.88 | 0.906 | 0.891 | 0.9 | 0.895 | 0.91 | 0.919 | 0.919 | 0.902 | 0.924 | 0.922 | 0.936 | 0.93 |
| SIP | Em↑ | 0.9 | 0.923 | 0.888 | 0.916 | 0.909 | 0.918 | 0.913 | 0.931 | 0.931 | 0.927 | 0.932 | 0.935 | - | 0.944 | 0.935 |
| SIP | M↓ | 0.063 | 0.051 | 0.066 | 0.053 | 0.057 | 0.051 | 0.052 | 0.044 | 0.043 | 0.043 | 0.043 | 0.041 | 0.041 | 0.035 | 0.04 |
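
To make the M↓ and Fm↑ rows easier to read, the following minimal sketch shows how two of these scores are commonly computed in the salient object detection literature: the mean absolute error (MAE) between a predicted saliency map and its ground truth, and an F-measure with β² = 0.3. The function names, the fixed binarization threshold of 0.5, and the toy 2×2 maps are assumptions made for illustration; this section does not state the exact thresholding protocol behind the reported numbers, and the structure measure (Sm) and enhanced-alignment measure (Em) are more involved and are omitted here.

```python
def mean_absolute_error(pred, gt):
    """Average per-pixel absolute difference between two maps with values in [0, 1]."""
    total = 0.0
    count = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            total += abs(p - g)
            count += 1
    return total / count


def f_measure(pred, gt, threshold=0.5, beta_sq=0.3):
    """F-measure of a saliency map binarized at a fixed threshold (an assumption).

    beta_sq = 0.3 weights precision more heavily than recall.
    """
    tp = fp = fn = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            predicted = p >= threshold
            actual = g >= 0.5
            if predicted and actual:
                tp += 1
            elif predicted:
                fp += 1
            elif actual:
                fn += 1
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return (1 + beta_sq) * precision * recall / (beta_sq * precision + recall)


# Toy 2x2 example (hypothetical data, not taken from any dataset).
toy_pred = [[0.9, 0.2], [0.6, 0.1]]
toy_gt = [[1.0, 0.0], [0.0, 0.0]]
toy_mae = mean_absolute_error(toy_pred, toy_gt)
toy_f = f_measure(toy_pred, toy_gt)
print(f"MAE = {toy_mae:.3f}, F-measure = {toy_f:.3f}")
```

Lower MAE is better, while higher F-measure is better, which matches the arrows in the table.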

| Models | LFSD M↓ | LFSD Sm↑ | LFSD Fm↑ | LFSD Em↑ | STEREO M↓ | STEREO Sm↑ | STEREO Fm↑ | STEREO Em↑ |
|---|---|---|---|---|---|---|---|---|
| Ours | 0.052 | 0.893 | 0.909 | 0.925 | 0.031 | 0.919 | 0.926 | 0.951 |
| ResNet50 | 0.084 | 0.835 | 0.864 | 0.868 | 0.044 | 0.893 | 0.9 | 0.927 |
| w/o EGCIM | 0.067 | 0.87 | 0.887 | 0.902 | 0.035 | 0.913 | 0.922 | 0.946 |
| w/o DEM | 0.064 | 0.875 | 0.893 | 0.906 | 0.032 | 0.917 | 0.925 | 0.949 |
| w/o CIM | 0.066 | 0.869 | 0.887 | 0.901 | 0.033 | 0.914 | 0.923 | 0.947 |
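
As a reading aid for the ablation results, the short sketch below computes how far each ablated variant falls from the full model on LFSD. The numbers are copied from the LFSD columns of the table above; the dictionary layout and the helper name `metric_deltas` are illustrative assumptions, not part of the authors' code.

```python
# LFSD columns of the ablation table (M is an error measure, so lower is better).
FULL_MODEL = {"M": 0.052, "Sm": 0.893, "Fm": 0.909, "Em": 0.925}
VARIANTS = {
    "ResNet50": {"M": 0.084, "Sm": 0.835, "Fm": 0.864, "Em": 0.868},
    "w/o EGCIM": {"M": 0.067, "Sm": 0.870, "Fm": 0.887, "Em": 0.902},
    "w/o DEM": {"M": 0.064, "Sm": 0.875, "Fm": 0.893, "Em": 0.906},
    "w/o CIM": {"M": 0.066, "Sm": 0.869, "Fm": 0.887, "Em": 0.901},
}


def metric_deltas(variant, reference):
    """Return variant minus reference for every metric, rounded to 3 decimals."""
    return {name: round(variant[name] - reference[name], 3) for name in reference}


deltas = {name: metric_deltas(scores, FULL_MODEL) for name, scores in VARIANTS.items()}

for name, delta in deltas.items():
    print(name, delta)
```

Every variant shows a positive change in M and a negative change in Sm, Fm, and Em relative to the full model, with the ResNet50 backbone swap producing the largest gap on LFSD.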

| Backbone | Model | Parameters ↓ | FLOPs ↓ | LFSD Fm ↑ | NLPR Fm ↑ |
|---|---|---|---|---|---|
| CNN | CMW | 85.7 M | 208 G | 0.899 | 0.912 |
| CNN | HiDANet | 59.8 M | 73.6 G | 0.877 | 0.922 |
| CNN | JLDCF | 143.5 M | 211.1 G | 0.862 | 0.916 |
| CNN | AFNet | 242 M | 128 G | 0.902 | 0.93 |
| Transformer | SwinNet | 198.7 M | 124.3 G | 0.903 | 0.94 |
| Transformer | Ours | 175.6 M | 96 G | 0.909 | 0.941 |
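
The saving relative to SwinNet can be made concrete with a little arithmetic on the two Transformer rows of the table above. The sketch below is only a worked calculation; the helper name `relative_reduction` and the dictionary keys are illustrative assumptions.

```python
def relative_reduction(baseline, ours):
    """Percentage by which `ours` is smaller than `baseline`."""
    return 100.0 * (baseline - ours) / baseline


# Values taken from the complexity table (parameters in millions, FLOPs in giga).
swinnet = {"params_m": 198.7, "flops_g": 124.3}
swinegnet = {"params_m": 175.6, "flops_g": 96.0}

param_saving = relative_reduction(swinnet["params_m"], swinegnet["params_m"])
flops_saving = relative_reduction(swinnet["flops_g"], swinegnet["flops_g"])

print(f"Parameters: {param_saving:.1f}% fewer than SwinNet")
print(f"FLOPs: {flops_saving:.1f}% fewer than SwinNet")
```

With the tabulated values this works out to roughly 11.6% fewer parameters and 22.8% fewer FLOPs than SwinNet.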


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, S.; Jiang, F.; Xu, B. Swin Transformer-Based Edge Guidance Network for RGB-D Salient Object Detection. Sensors 2023, 23, 8802. https://doi.org/10.3390/s23218802
